After studying TensorFlow for a while, I have found it very useful. Often the most important part is thinking through the structure of the neural network, in particular how the matrix dimensions line up when they are multiplied, so that hidden layers can be added freely. Below we implement a neural network in TensorFlow to consolidate that understanding.

Implementing a Neural Network without a Hidden Layer

The following is an example of handwritten digit recognition.

Read in the data

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets('MNIST_data/', one_hot=True)

View the data:

The data is divided into training, validation, and test sets.

    print(mnist.train.images.shape)
    print(mnist.train.labels.shape)
    print(mnist.test.images.shape)
    print(mnist.test.labels.shape)
    print(mnist.validation.images.shape)
    print(mnist.validation.labels.shape)

Output (the training images are 55000 * 784: each picture is flattened into one long 784-dimensional vector):

    (55000, 784)
    (55000, 10)
    (10000, 784)
    (10000, 10)
    (5000, 784)
    (5000, 10)

Note that the training input x is n * 784, W is 784 * 10, and the output y is n * 10. That is, each row vector has 10 columns, which hold the probabilities of the digits 0, 1, ..., 9.
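The label arrays also have 10 columns because the data was loaded with one_hot=True, so each label is a vector with a single 1 at the position of the digit. The following is a minimal sketch of that encoding in plain Python; the helper names one_hot and decode are hypothetical and are not part of TensorFlow or the MNIST loader.

```python
def one_hot(digit, num_classes=10):
    """Encode a digit 0..9 as a vector with a single 1.0 at index `digit`."""
    vec = [0.0] * num_classes
    vec[digit] = 1.0
    return vec


def decode(vec):
    """Recover the digit as the index of the largest entry."""
    return vec.index(max(vec))


label = one_hot(3)
print(label)          # a 10-element row, matching one row of mnist.train.labels
print(decode(label))  # the digit the row stands for
```

The same decode step works on a row of predicted probabilities, which is why the prediction y and the labels share the n * 10 shape.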

x = tf.placeholder(tf.float32, [None, 784]) declares a placeholder: no data is read when it is defined, and the actual values are fed in only at run time. None means that the number of rows (samples) is not fixed.

y = tf.nn.softmax(tf.matmul(x, W) + b) computes the prediction: softmax turns the scores into a predicted probability for each label.

As you can see, the output is simply a probability distribution over the labels.
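To make the softmax step concrete, here is a small sketch in plain Python (not TensorFlow). The scores are made-up values for illustration, and subtracting the maximum is a common numerical-stability trick that does not change the result.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for the digits 0..9 (one row of x*W + b).
scores = [2.0, 1.0, 0.1] + [0.0] * 7
probs = softmax(scores)
predicted_digit = probs.index(max(probs))

print(probs)
print(predicted_digit)
```

The largest score always gets the largest probability, so the predicted digit is the index of the maximum.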

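We therefore also need a loss function so that the error can be propagated back; the loss used here is the cross-entropy loss.

In the formula, y is the true value and y' is the predicted value. (In the code, the true labels are fed into y_ and the prediction is y.)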

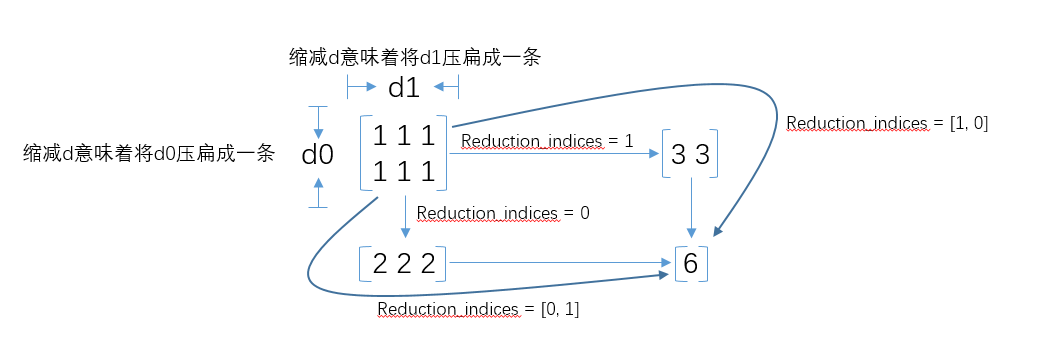

Image source: Zhihu

Note the use of reduce_sum:

    tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y), reduction_indices=[1]))

This statement first sums over the 10 classes of each sample (reduction_indices=[1]) to get that sample's loss, and then averages the per-sample losses over the batch with reduce_mean(). Keep in mind that for each sample, y and y' are 1 * 10 vectors.
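The two reductions can be sketched in plain Python as follows. This is an illustration of the arithmetic only, using 3 classes instead of 10 and made-up probabilities; the function name cross_entropy is chosen here for the example.

```python
import math


def cross_entropy(y_true, y_pred):
    """Return (mean loss over the batch, list of per-sample losses)."""
    # Inner sum: over the classes of one sample (the role of reduce_sum on axis 1).
    per_sample = [
        -sum(t * math.log(p) for t, p in zip(true_row, pred_row))
        for true_row, pred_row in zip(y_true, y_pred)
    ]
    # Outer mean: over the samples in the batch (the role of reduce_mean).
    return sum(per_sample) / len(per_sample), per_sample


# Two hypothetical samples with one-hot labels and predicted probabilities.
y_true = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
y_pred = [[0.5, 0.25, 0.25], [0.25, 0.5, 0.25]]

mean_loss, per_sample = cross_entropy(y_true, y_pred)
print(per_sample)
print(mean_loss)
```

Because the labels are one-hot, only the probability assigned to the true class contributes to each sample's loss.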

The next step is to define the training method, using gradient descent to minimize cross-entropy.

tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)
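As a rough illustration of what one gradient descent step does, the sketch below updates a bias-only model by hand in plain Python. It is a simplification under stated assumptions: 3 classes, a single made-up sample, no weight matrix, and the standard result that the gradient of softmax plus cross-entropy with respect to the scores is (prediction - label). It is not how TensorFlow computes gradients internally.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def loss(b, y_true):
    """Cross-entropy of softmax(b) against a one-hot label."""
    probs = softmax(b)
    return -sum(t * math.log(p) for t, p in zip(y_true, probs))


learning_rate = 0.5        # same value as in the text
y_true = [0.0, 1.0, 0.0]   # hypothetical one-hot label
b = [0.0, 0.0, 0.0]        # parameters start at zero, like tf.zeros

losses = [loss(b, y_true)]
for step in range(5):
    probs = softmax(b)
    grad = [p - t for p, t in zip(probs, y_true)]            # d(loss)/d(b)
    b = [bi - learning_rate * g for bi, g in zip(b, grad)]   # move against the gradient
    losses.append(loss(b, y_true))

print(losses)
```

Each step moves the parameters a little in the direction that lowers the loss, which is what minimize(cross_entropy) arranges for W and b.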

    sess = tf.InteractiveSession()

    # real data: placeholders for the input images and the true labels
    x = tf.placeholder(tf.float32, [None, 784])
    y_ = tf.placeholder(tf.float32, [None, 10])

    # model parameters
    W = tf.Variable(tf.zeros([784, 10]))
    b = tf.Variable(tf.zeros([10]))

    # prediction
    y = tf.nn.softmax(tf.matmul(x, W) + b)

    # loss
    cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y), reduction_indices=[1]))

    # training method
    train_step = tf.train.GradientDescentOptimizer(0.5).minimize(cross_entropy)

Training

    # Important: initialize all global variables first
    tf.global_variables_initializer().run()

    # Iterate 1000 times; each step trains on a batch of 100 samples (SGD)
    for i in range(1000):
        batch_x, batch_y = mnist.train.next_batch(100)
        train_step.run({x: batch_x, y_: batch_y})

train_step.run({x: batch_x, y_: batch_y}) passes a feed_dict that supplies the values of x and y_ at run time, where x is the batch of training samples and y_ holds the corresponding true labels.
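The idea of drawing 100 samples per step can be sketched in plain Python as below. This is only an assumption-based illustration of mini-batching (shuffle, walk through the data, reshuffle when an epoch ends); it is not the actual implementation of mnist.train.next_batch, and the function name iterate_minibatches is hypothetical.

```python
import random


def iterate_minibatches(num_samples, batch_size, steps, seed=0):
    """Yield `steps` lists of sample indices, reshuffling after each full pass."""
    rng = random.Random(seed)
    order = list(range(num_samples))
    rng.shuffle(order)
    pos = 0
    for _ in range(steps):
        if pos + batch_size > num_samples:  # epoch finished: start a new pass
            rng.shuffle(order)
            pos = 0
        yield order[pos:pos + batch_size]
        pos += batch_size


# Same sizes as in the text: 55000 training samples, batches of 100, 1000 steps.
batches = list(iterate_minibatches(55000, 100, 1000))
print(len(batches), len(batches[0]))
```

With these sizes one pass over the training set takes 550 steps, so 1000 steps see each sample roughly twice.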

Evaluation

    # test: compare the predicted class with the true class (argmax along axis 1)
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
    # cast the booleans to floats and average them with reduce_mean
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    print(accuracy.eval({x: mnist.test.images, y_: mnist.test.labels}))

The final result is: 0.9174
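The accuracy computation above (argmax per row, compare, cast to float, average) can be reproduced in plain Python. The predictions and labels below are made-up values with 3 classes, used only to show the steps.

```python
def argmax(row):
    """Index of the largest entry in a row (first one on ties)."""
    return max(range(len(row)), key=lambda i: row[i])


def accuracy(y_pred, y_true):
    # Compare the predicted class with the true class for every sample.
    correct = [argmax(p) == argmax(t) for p, t in zip(y_pred, y_true)]
    # Cast True/False to 1.0/0.0 and take the mean.
    return sum(1.0 if c else 0.0 for c in correct) / len(correct)


y_pred = [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1], [0.3, 0.3, 0.4], [0.2, 0.5, 0.3]]
y_true = [[0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 1, 0]]

acc = accuracy(y_pred, y_true)
print(acc)  # 3 of the 4 samples are classified correctly
```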

Implementing a Multilayer Neural Network

To make adding layers convenient, we write an add_layer function, where in_size and out_size are the numbers of neurons on the input and output side, inputs is the output of the previous layer, and outputs is the output of this layer.

The complete code is as follows:

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)
    sess = tf.InteractiveSession()

    # Define a function that adds a hidden layer
    def add_layer(inputs, in_size, out_size, keep_prob=1.0, activation_function=None):
        Weights = tf.Variable(tf.truncated_normal([in_size, out_size], stddev=0.1))
        biases = tf.Variable(tf.zeros([out_size]))
        Wx_plus_b = tf.matmul(inputs, Weights) + biases
        if activation_function is None:
            outputs = Wx_plus_b
        else:
            outputs = activation_function(Wx_plus_b)
        outputs = tf.nn.dropout(outputs, keep_prob)  # dropout (random deactivation)
        return outputs

    # placeholders
    x = tf.placeholder(tf.float32, [None, 784])
    y_ = tf.placeholder(tf.float32, [None, 10])
    keep_prob = tf.placeholder(tf.float32)  # probability of keeping a unit

    # hidden layer: 784 -> 300
    h1 = add_layer(x, 784, 300, keep_prob, tf.nn.relu)

    # output layer
    w = tf.Variable(tf.zeros([300, 10]))  # 300 * 10
    b = tf.Variable(tf.zeros([10]))
    y = tf.nn.softmax(tf.matmul(h1, w) + b)

    # define the loss and the optimizer
    cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y), reduction_indices=[1]))
    train_step = tf.train.AdagradOptimizer(0.35).minimize(cross_entropy)

    # accuracy: compare argmax along axis 1, then average with reduce_mean
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    tf.global_variables_initializer().run()

    for i in range(3000):
        batch_x, batch_y = mnist.train.next_batch(100)
        train_step.run({x: batch_x, y_: batch_y, keep_prob: 0.75})
        if i % 1000 == 0:
            train_accuracy = accuracy.eval({x: batch_x, y_: batch_y, keep_prob: 1.0})
            print("step %d,train_accuracy %g" % (i, train_accuracy))

    # test
    print(accuracy.eval({x: mnist.test.images, y_: mnist.test.labels, keep_prob: 1.0}))

Running the program above gives a test accuracy of 0.9784, with only one hidden layer added.
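The program feeds keep_prob 0.75 while training and 1.0 while evaluating. The sketch below shows in plain Python what dropout with a keep probability does to a layer's outputs: each value is kept with probability keep_prob and scaled by 1 / keep_prob, otherwise it is set to 0. It is an illustration only, with a hypothetical function name and a seeded random generator, not TensorFlow's implementation.

```python
import random


def dropout(values, keep_prob, rng):
    """Keep each value with probability keep_prob (scaled up), else output 0."""
    if keep_prob >= 1.0:
        return list(values)  # evaluation mode: nothing is dropped
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)
activations = [1.0] * 10000  # hypothetical layer outputs

train_out = dropout(activations, 0.75, rng)  # as in training
eval_out = dropout(activations, 1.0, rng)    # as in evaluation

print(sum(train_out) / len(train_out))  # close to 1.0 on average
print(eval_out == activations)
```

The scaling keeps the expected value of each output unchanged, which is why the same network can be evaluated with keep_prob set to 1.0.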

The hidden layer above has 300 neurons. Adding a second hidden layer with 400 neurons is just as simple:

    h1 = add_layer(x, 784, 300, keep_prob, tf.nn.relu)
    h2 = add_layer(h1, 300, 400, keep_prob, tf.nn.relu)

    # output layer
    w = tf.Variable(tf.zeros([400, 10]))  # 400 * 10
    b = tf.Variable(tf.zeros([10]))
    y = tf.nn.softmax(tf.matmul(h2, w) + b)

As you can see, hidden layers and the number of nodes in each can be chosen freely. The important thing is that the dimensions of adjacent layers match.
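One way to catch a dimension mistake before building the graph is to check the chain of layer sizes by hand. The helper below is a hypothetical plain-Python sketch (check_layer_chain is not a TensorFlow function); it verifies that each layer's in_size equals the previous layer's out_size.

```python
def check_layer_chain(input_size, layers):
    """layers is a list of (in_size, out_size) pairs; return the final output size."""
    size = input_size
    for index, (in_size, out_size) in enumerate(layers):
        if in_size != size:
            raise ValueError(
                "layer %d expects %d inputs but receives %d" % (index, in_size, size)
            )
        size = out_size
    return size


# The two-hidden-layer network from the text: 784 -> 300 -> 400 -> 10.
layers = [(784, 300), (300, 400), (400, 10)]
print(check_layer_chain(784, layers))

# Forgetting to update the output layer after adding h2 is detected.
try:
    check_layer_chain(784, [(784, 300), (300, 400), (300, 10)])
except ValueError as error:
    print(error)
```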
