1.1 Personal notes

① Using Python 3.7

② The editor is PyCharm. I don't find it easy to use: many libraries fail out of the box, and print output can't be saved (just a lazy person's grumbling). The fix for a missing library is to install it from the command line; for SciPy, for example, run pip install scipy in cmd.

In PyCharm, imshow() alone does not display the image. The fix is to call show() afterwards: import the pylab library and call pylab.show() (matplotlib.pyplot.show() does the same).
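Before running the code below, it can help to confirm that the libraries it imports are installed in the interpreter PyCharm is configured to use. A minimal sketch using only the standard library; missing_packages is a hypothetical helper name, not something from the book:

```python
import importlib.util


def missing_packages(names):
    """Return the top-level package names that cannot be found on this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Packages imported by this chapter's code; for these three the pip name
    # matches the import name, so the suggested command can be used as printed.
    for name in missing_packages(["numpy", "scipy", "matplotlib"]):
        print(f"{name} is missing - install it with: pip install {name}")
```

Run it with the same interpreter PyCharm uses; any package it reports can then be installed with pip as described above.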

1.2 Handwritten digit recognition with a neural network

```python
import numpy
import matplotlib.pyplot
import scipy.special


class neuralNetwork:
    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes
        # Input->hidden weights: normal distribution with mean 0 and standard deviation
        # hnodes^-0.5; the matrix shape is (hidden nodes, input nodes)
        self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        # Hidden->output weights: mean 0, standard deviation onodes^-0.5;
        # the matrix shape is (output nodes, hidden nodes)
        self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))
        # Learning rate
        self.lr = learningrate
        # Activation function: the sigmoid 1 / (1 + e^-x)
        self.activation_function = lambda x: scipy.special.expit(x)

    def train(self, inputs_list, targets_list):
        # Turn the input and target lists into column vectors
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T
        # Hidden layer input = input->hidden weights times inputs
        hidden_inputs = numpy.dot(self.wih, inputs)
        # Hidden layer output = activation function applied to the hidden layer input
        hidden_outputs = self.activation_function(hidden_inputs)
        # Output layer input = hidden->output weights times hidden layer output
        final_inputs = numpy.dot(self.who, hidden_outputs)
        # Output layer output = activation function applied to the output layer input
        final_outputs = self.activation_function(final_inputs)
        # Output layer error = target - final output
        output_errors = targets - final_outputs
        # Hidden layer error = transposed hidden->output weights times output errors
        hidden_errors = numpy.dot(self.who.T, output_errors)
        # New weight = old weight + learning rate
        #   * (layer error * layer output * (1 - layer output)) . (previous layer output)^T
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)),
                                        numpy.transpose(hidden_outputs))
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)),
                                        numpy.transpose(inputs))

    # Query: forward propagation only, returns the output layer values
    def query(self, inputs_list):
        inputs = numpy.array(inputs_list, ndmin=2).T
        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        return final_outputs


# Number of input, hidden and output nodes, and the learning rate
input_nodes = 784
hidden_nodes = 100
output_nodes = 10
learning_rate = 0.2

n = neuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

# Train on the training set (mnist_train.csv, assumed to sit next to mnist_test.csv)
with open(r"D:/Artificial intelligence handwriting recognition tool/mnist_train.csv", 'r') as training_data_file:
    training_data_list = training_data_file.readlines()

for record in training_data_list:
    all_values = record.split(',')
    # Scale pixel values 0..255 into 0.01..1.00 so that no input is exactly 0
    # (a zero input would make the update of its weights zero)
    inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
    # Targets: an array of length output_nodes (10) filled with 0.01, because the
    # sigmoid can approach 0 and 1 but never reach them
    targets = numpy.zeros(output_nodes) + 0.01
    # The first value of each record is the label; its target is set to 0.99
    targets[int(all_values[0])] = 0.99
    n.train(inputs, targets)

with open(r"D:/Artificial intelligence handwriting recognition tool/mnist_test.csv", 'r') as test_data_file:
    test_data_list = test_data_file.readlines()

# Test the first record of the test set
all_values = test_data_list[0].split(',')
print(all_values[0])
image_array = numpy.asarray(all_values[1:], dtype=float).reshape((28, 28))
matplotlib.pyplot.imshow(image_array, cmap='Greys', interpolation='none')
matplotlib.pyplot.show()
# Scale the query input the same way as the training inputs
print(n.query(numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99 + 0.01))
# print("all values")
# print(all_values)
```
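The comments in train() describe the two weight updates in words. Written out, with X for the input column, O_h and O_o for the hidden and final outputs, T for the targets, α for the learning rate, ⊙ for element-wise multiplication, W_ih for self.wih and W_ho for self.who, the code computes:

$$
\begin{aligned}
O_h &= \sigma(W_{ih} X), \qquad O_o = \sigma(W_{ho} O_h) \\
E_o &= T - O_o, \qquad E_h = W_{ho}^{\mathsf T} E_o \\
W_{ho} &\leftarrow W_{ho} + \alpha \,\big(E_o \odot O_o \odot (1 - O_o)\big)\, O_h^{\mathsf T} \\
W_{ih} &\leftarrow W_{ih} + \alpha \,\big(E_h \odot O_h \odot (1 - O_h)\big)\, X^{\mathsf T}
\end{aligned}
$$

Here σ(x) = 1 / (1 + e^{-x}) is the sigmoid (scipy.special.expit). The O(1 − O) factors come from its derivative, σ'(x) = σ(x)(1 − σ(x)).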

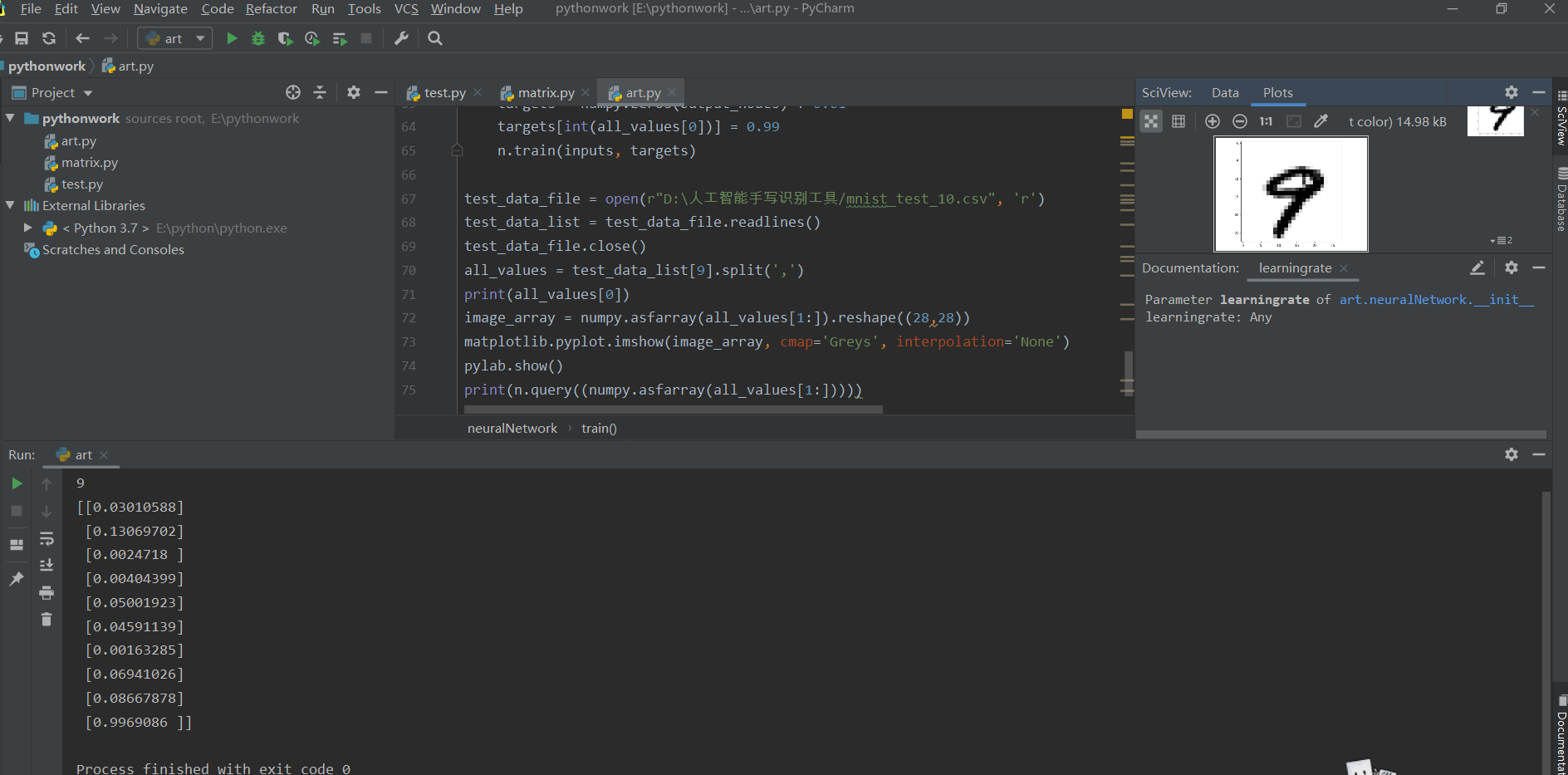

Output

1.2.1 Adding a scorecard

```python
# Set up the scorecard: 1 for a correct answer, 0 for a wrong one
scorecard = []
for record in test_data_list:
    all_values = record.split(',')
    correct_label = int(all_values[0])
    print(correct_label, "correct label")
    inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
    outputs = n.query(inputs)
    # The index of the largest output is the network's answer
    label = numpy.argmax(outputs)
    print(label, "network's answer")
    if label == correct_label:
        scorecard.append(1)
    else:
        scorecard.append(0)
print(scorecard)
print(outputs)

# Accuracy = correct answers / total records
scorecard_array = numpy.asarray(scorecard)
print("result =", scorecard_array.sum() / scorecard_array.size)
```
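The scorecard loop queries one record at a time. Since query() is just two matrix products followed by the sigmoid, all test records can be pushed through at once by stacking them as columns. A minimal sketch, assuming the weights are taken from a trained network as n.wih and n.who; batch_query and accuracy are hypothetical helper names, and the sigmoid is written with NumPy (it computes the same function as scipy.special.expit):

```python
import numpy


def sigmoid(x):
    # Same function as scipy.special.expit, written with NumPy only.
    return 1.0 / (1.0 + numpy.exp(-x))


def batch_query(wih, who, inputs):
    """Forward pass for many records at once.

    inputs has shape (n_records, n_inputs); each row is one scaled image.
    Returns an array of shape (n_outputs, n_records), one column per record,
    matching what query() returns for each record individually.
    """
    hidden_outputs = sigmoid(wih @ inputs.T)
    return sigmoid(who @ hidden_outputs)


def accuracy(wih, who, inputs, labels):
    """Fraction of records whose largest output index equals the label."""
    predictions = numpy.argmax(batch_query(wih, who, inputs), axis=0)
    return float(numpy.mean(predictions == labels))
```

Assuming the CSV has no header row, records = numpy.loadtxt(path, delimiter=","), labels = records[:, 0].astype(int) and inputs = records[:, 1:] / 255.0 * 0.99 + 0.01 should make accuracy(n.wih, n.who, inputs, labels) agree with the scorecard result.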

Output

1.2.2 Optimization

Train for 5 epochs (five full passes over the training data), increase the hidden layer to 200 nodes, and lower the learning rate to 0.1.

```python
# Number of input, hidden and output nodes, and the learning rate
input_nodes = 784
hidden_nodes = 200
output_nodes = 10
learning_rate = 0.1

n = neuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

# Train on the training set (mnist_train.csv, assumed to sit next to mnist_test.csv)
with open(r"D:/Artificial intelligence handwriting recognition tool/mnist_train.csv", 'r') as training_data_file:
    training_data_list = training_data_file.readlines()

# Train for 5 epochs: each epoch is one full pass over the training data
epochs = 5
for e in range(epochs):
    for record in training_data_list:
        all_values = record.split(',')
        # Scale pixel values 0..255 into 0.01..1.00
        inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
        # Targets: 0.01 everywhere (the sigmoid never outputs exactly 0) ...
        targets = numpy.zeros(output_nodes) + 0.01
        # ... and 0.99 at the index given by the label in the first column
        targets[int(all_values[0])] = 0.99
        n.train(inputs, targets)
```
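Both training loops repeat the same per-record preprocessing. A small helper keeps it in one place; prepare_record is a hypothetical name, not from the book, and it applies exactly the scaling used above (it also strips the trailing newline left by readlines()):

```python
import numpy


def prepare_record(line, output_nodes=10):
    """Turn one MNIST CSV line ("label,p1,...,p784") into (inputs, targets).

    Pixels 0..255 are rescaled to 0.01..1.00 so that no input is exactly 0
    (a zero input would make the update of its weights zero).
    Targets are 0.01 everywhere except 0.99 at the label index, because the
    sigmoid can only approach 0 and 1, never reach them.
    """
    all_values = line.strip().split(',')
    inputs = numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99 + 0.01
    targets = numpy.zeros(output_nodes) + 0.01
    targets[int(all_values[0])] = 0.99
    return inputs, targets
```

Inside the epoch loop, the body then shrinks to inputs, targets = prepare_record(record) followed by n.train(inputs, targets).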

Output

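Accuracy increased to 98.4%. This figure was measured while training and testing on the same file (mnist_test.csv), so it overstates how well the network recognizes digits it has never seen.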

1.2.3 Complete code

```python
import numpy
import matplotlib.pyplot
import scipy.special


class neuralNetwork:
    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes
        # Input->hidden weights: normal distribution with mean 0 and standard deviation
        # hnodes^-0.5; the matrix shape is (hidden nodes, input nodes)
        self.wih = numpy.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        # Hidden->output weights: mean 0, standard deviation onodes^-0.5;
        # the matrix shape is (output nodes, hidden nodes)
        self.who = numpy.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))
        # Learning rate
        self.lr = learningrate
        # Activation function: the sigmoid 1 / (1 + e^-x)
        self.activation_function = lambda x: scipy.special.expit(x)

    def train(self, inputs_list, targets_list):
        # Turn the input and target lists into column vectors
        inputs = numpy.array(inputs_list, ndmin=2).T
        targets = numpy.array(targets_list, ndmin=2).T
        # Hidden layer input and output
        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        # Output layer input and output
        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        # Output layer error = target - final output
        output_errors = targets - final_outputs
        # Hidden layer error = transposed hidden->output weights times output errors
        hidden_errors = numpy.dot(self.who.T, output_errors)
        # New weight = old weight + learning rate
        #   * (layer error * layer output * (1 - layer output)) . (previous layer output)^T
        self.who += self.lr * numpy.dot((output_errors * final_outputs * (1.0 - final_outputs)),
                                        numpy.transpose(hidden_outputs))
        self.wih += self.lr * numpy.dot((hidden_errors * hidden_outputs * (1.0 - hidden_outputs)),
                                        numpy.transpose(inputs))

    # Query: forward propagation only, returns the output layer values
    def query(self, inputs_list):
        inputs = numpy.array(inputs_list, ndmin=2).T
        hidden_inputs = numpy.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        final_inputs = numpy.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        return final_outputs


# Number of input, hidden and output nodes, and the learning rate
input_nodes = 784
hidden_nodes = 200
output_nodes = 10
learning_rate = 0.1

n = neuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

# Train on the training set (mnist_train.csv, assumed to sit next to mnist_test.csv)
with open(r"D:/Artificial intelligence handwriting recognition tool/mnist_train.csv", 'r') as training_data_file:
    training_data_list = training_data_file.readlines()

# Train for 5 epochs: each epoch is one full pass over the training data
epochs = 5
for e in range(epochs):
    for record in training_data_list:
        all_values = record.split(',')
        # Scale pixel values 0..255 into 0.01..1.00 so that no input is exactly 0
        inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
        # Targets: 0.01 everywhere, because the sigmoid never reaches 0 or 1 ...
        targets = numpy.zeros(output_nodes) + 0.01
        # ... and 0.99 at the index given by the label in the first column
        targets[int(all_values[0])] = 0.99
        n.train(inputs, targets)

with open(r"D:/Artificial intelligence handwriting recognition tool/mnist_test.csv", 'r') as test_data_file:
    test_data_list = test_data_file.readlines()

# Display and query the tenth test record (index 9)
all_values = test_data_list[9].split(',')
print(all_values[0])
image_array = numpy.asarray(all_values[1:], dtype=float).reshape((28, 28))
matplotlib.pyplot.imshow(image_array, cmap='Greys', interpolation='none')
matplotlib.pyplot.show()
# Scale the query input the same way as the training inputs
print(n.query(numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99 + 0.01))
# print("all values")
# print(all_values)

# Set up the scorecard: 1 for a correct answer, 0 for a wrong one
scorecard = []
for record in test_data_list:
    all_values = record.split(',')
    correct_label = int(all_values[0])
    # print(correct_label, "correct label")
    inputs = (numpy.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
    outputs = n.query(inputs)
    # The index of the largest output is the network's answer
    label = numpy.argmax(outputs)
    # print(label, "network's answer")
    if label == correct_label:
        scorecard.append(1)
    else:
        scorecard.append(0)
print(scorecard)

# Accuracy = correct answers / total records
scorecard_array = numpy.asarray(scorecard)
print("result =", scorecard_array.sum() / scorecard_array.size)
```
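One way to convince yourself that the output-layer update in train() is correct is a finite-difference check: the step it adds to who, divided by the learning rate, should equal the negative gradient of E = ½ Σ (t − o)² with respect to who. A minimal sketch on a tiny random network; loss, who_step and numeric_gradient are hypothetical helper names, and the sigmoid is written with NumPy instead of scipy.special.expit. The check covers only who: the hidden-layer line propagates the raw errors who.T · E rather than who.T · (E · O · (1 − O)), so it is a simplification of exact backpropagation and would not match this gradient exactly.

```python
import numpy


def sigmoid(x):
    return 1.0 / (1.0 + numpy.exp(-x))


def loss(wih, who, x, t):
    """Half the squared error of the network's output for one column input x."""
    o = sigmoid(who @ sigmoid(wih @ x))
    return 0.5 * float(numpy.sum((t - o) ** 2))


def who_step(wih, who, x, t):
    """The change train() applies to who, divided by the learning rate."""
    h = sigmoid(wih @ x)
    o = sigmoid(who @ h)
    return ((t - o) * o * (1.0 - o)) @ h.T


def numeric_gradient(f, w, eps=1e-6):
    """Central-difference estimate of df/dw.

    f takes no arguments and must read w; each entry of w is nudged in
    place and then restored, so w is unchanged afterwards.
    """
    grad = numpy.zeros_like(w)
    for idx in numpy.ndindex(w.shape):
        old = w[idx]
        w[idx] = old + eps
        up = f()
        w[idx] = old - eps
        down = f()
        w[idx] = old
        grad[idx] = (up - down) / (2 * eps)
    return grad


if __name__ == "__main__":
    rng = numpy.random.default_rng(0)
    wih = rng.normal(0.0, 0.5, (4, 3))
    who = rng.normal(0.0, 0.5, (2, 4))
    x = rng.random((3, 1))
    t = numpy.array([[0.99], [0.01]])
    g = numeric_gradient(lambda: loss(wih, who, x, t), who)
    # The update should point straight down the gradient of the loss.
    print(numpy.allclose(who_step(wih, who, x, t), -g, atol=1e-6))
```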

(Source: Tariq Rashid, *Python Neural Network Programming*, the Chinese edition of *Make Your Own Neural Network*, translated by Lin Ci, Posts & Telecom Press.)

These notes are a self-study summary of the book above and reflect my own understanding. If you spot any mistakes, please let me know.