## Crawling Half-Dimension Cosplay Images: Preface

Today, while I was browsing the web, a stray link took me to the Half-Dimension site, https://bcy.net/. At first glance nothing on it looked interesting, but professional instinct immediately brought cosplay to mind. A site like this is bound to host cosplay galleries, so I got my crawler ready.

Opening the link confirmed my hunch. The next step is to find the entry point: you need the URL that serves the picture links before you can do anything else.

The page keeps loading more content as you scroll down, and after a while it stops. That is the moment to look for the entry point.

That is where I found the entry point. In practice I found several other entry points as well, which I won't go through one by one. Clicking "view more" leads to a page that still loads more content as you scroll, a layout known as a waterfall flow.

## Crawling Half-Dimension Cosplay Images: First Step of the Python Crawler

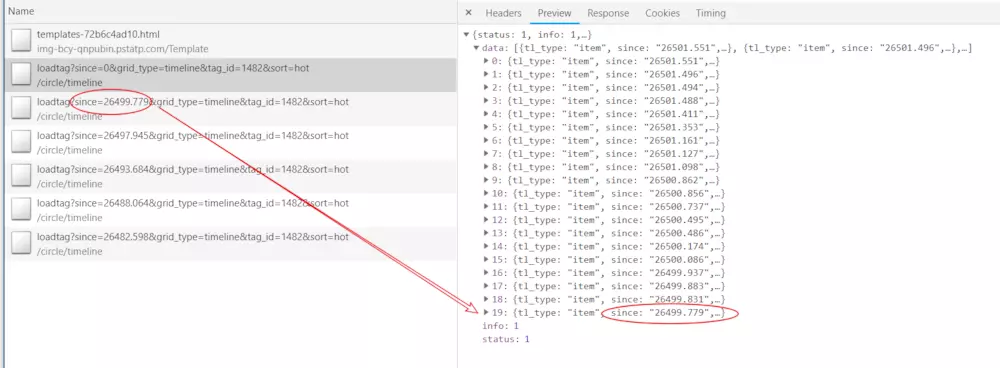

Open the browser's developer tools and switch to the Network tab. You will see many XHR requests, which is a good sign: it means the site is easy to crawl.

Extract the links to be crawled and look for the pattern:

    https://bcy.net/circle/timeline/loadtag?since=0&grid_type=timeline&tag_id=1482&sort=hot
    https://bcy.net/circle/timeline/loadtag?since=26499.779&grid_type=timeline&tag_id=1482&sort=hot
    https://bcy.net/circle/timeline/loadtag?since=26497.945&grid_type=timeline&tag_id=1482&sort=hot

Only one parameter, since, changes between requests, and its values follow no obvious pattern. No matter: look at the response data and the mystery resolves itself.
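One way to confirm which parameter changes is to parse the query strings and compare them. The following is a minimal sketch using only the standard library and the three URLs captured above; the helper name `changing_params` is made up for illustration.

```python
from urllib.parse import urlparse, parse_qs

urls = [
    "https://bcy.net/circle/timeline/loadtag?since=0&grid_type=timeline&tag_id=1482&sort=hot",
    "https://bcy.net/circle/timeline/loadtag?since=26499.779&grid_type=timeline&tag_id=1482&sort=hot",
    "https://bcy.net/circle/timeline/loadtag?since=26497.945&grid_type=timeline&tag_id=1482&sort=hot",
]


def changing_params(urls):
    """Return the names of query parameters whose values differ between URLs."""
    queries = [parse_qs(urlparse(u).query) for u in urls]
    names = set().union(*queries)  # every parameter name seen in any URL
    return sorted(
        name for name in names
        if len({tuple(q.get(name, [])) for q in queries}) > 1
    )


print(changing_params(urls))  # ['since']
```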

The site's mechanism is simple: the last item in each batch carries a since value, and that value is used to request the next batch. The code can follow the same rule. Don't bother with multithreading here: each request depends on the previous response, so the requests cannot run in parallel.
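The cursor rule described above can be sketched without touching the network. In this illustration `fetch_page` stands in for the real HTTP call, and `FAKE_PAGES` and `fake_fetch` are invented test data, not real responses from the site.

```python
def crawl(fetch_page, since=0):
    """Follow the since cursor until a batch comes back empty."""
    items = []
    while True:
        data = fetch_page(since)
        if not data:
            break  # an empty batch means there is nothing left to fetch
        items.extend(data)
        since = data[-1]["since"]  # cursor for the next request
    return items


# Hypothetical stand-in for the endpoint: batches keyed by the cursor value
FAKE_PAGES = {
    0: [{"since": 30.5}, {"since": 30.1}],
    30.1: [{"since": 29.7}],
    29.7: [],
}


def fake_fetch(since):
    return FAKE_PAGES.get(since, [])


result = crawl(fake_fetch)
print(len(result))  # 3
```

Because each cursor only becomes known after the previous batch arrives, the loop is inherently sequential, which is why threads would not help.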

This time I store the results in MongoDB, because the data can't all be fetched in one run and I may need to pick up where I left off next time.
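If the crawl is meant to resume later, one option is to save the last since value as a small checkpoint document. This is only a sketch of that idea, not something the original code does: the document id `bcy_checkpoint` and both helper names are hypothetical. The helpers rely only on the `update_one` and `find_one` methods of a PyMongo collection.

```python
CHECKPOINT_ID = "bcy_checkpoint"  # hypothetical id for the checkpoint document


def save_checkpoint(collection, since):
    """Store the latest cursor, creating the document if it does not exist."""
    collection.update_one(
        {"_id": CHECKPOINT_ID},
        {"$set": {"since": since}},
        upsert=True,
    )


def load_checkpoint(collection, default=0):
    """Return the stored cursor, or default when no checkpoint exists yet."""
    doc = collection.find_one({"_id": CHECKPOINT_ID})
    return doc["since"] if doc else default
```

Keeping the checkpoint in its own collection, separate from the crawled items, avoids mixing it with the data. A crawl would then start from `load_checkpoint(...)` instead of 0 and call `save_checkpoint(...)` after each successful batch.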

    from pymongo import MongoClient

    if __name__ == '__main__':
        # Basic MongoDB settings
        DATABASE_IP = '127.0.0.1'
        DATABASE_PORT = 27017
        DATABASE_NAME = 'sun'
        start_url = "https://bcy.net/circle/timeline/loadtag?since={}&grid_type=timeline&tag_id=399&sort=recent"

        # Credentials are passed to MongoClient; Database.authenticate() was removed in PyMongo 4
        client = MongoClient(DATABASE_IP, DATABASE_PORT,
                             username="dba", password="dba",
                             authSource=DATABASE_NAME)
        db = client[DATABASE_NAME]
        collection = db.bcy  # Collection that will receive the crawled data

        get_data(start_url, collection)


Fetching the data is the easy part, thanks to the experience from earlier crawlers.

## Crawling Half-Dimension Cosplay Images: The Data-Fetching Function

    import time
    import requests

    # Placeholder request headers (an assumption; the original does not show them)
    headers = {"User-Agent": "Mozilla/5.0"}

    def get_data(start_url, collection):
        since = 0
        while True:
            try:
                with requests.Session() as s:
                    response = s.get(start_url.format(since), headers=headers, timeout=3)
                    res_data = response.json()
                if res_data["status"] != 1 or not res_data.get("data"):
                    break  # No more data: leave the loop instead of retrying forever
                data = res_data["data"]  # Get the data array
                time.sleep(0.5)
                # The since value of the last item is the cursor for the next batch
                since = data[-1]["since"]
                ret = json_handle(data)  # Implemented below
                try:
                    print(ret)
                    collection.insert_many(ret)  # Bulk insert into the database
                    print("The above data was inserted successfully!")
                except Exception as e:
                    print("Insertion failed:", e)
                    print(ret)
            except Exception as e:
                print("Exception, please note:", e)
        print("Crawl complete")
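A loop that swallows every exception and immediately tries again can spin indefinitely when the network is down. One possible refinement, sketched here under the assumption that the fetch step is wrapped in a callable, is to bound the number of retries. The name `fetch_with_retry` and its parameters are hypothetical.

```python
import time


def fetch_with_retry(fetch, since, max_retries=3, delay=0.5):
    """Call fetch(since), retrying on any exception up to max_retries attempts."""
    for attempt in range(1, max_retries + 1):
        try:
            return fetch(since)
        except Exception as exc:
            print(f"attempt {attempt} failed: {exc}")
            if attempt == max_retries:
                raise  # give up and let the caller decide what to do
            time.sleep(delay * attempt)  # wait a little longer each time
```

With this in place, the crawl loop can stop cleanly after repeated failures rather than printing the same error forever.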

The page-parsing code:

    # Process the JSON data
    def json_handle(data):
        # Extract the key fields
        list_infos = []
        for item in data:
            item = item["item_detail"]
            try:
                avatar = item["avatar"]  # User avatar
                item_id = item["item_id"]  # ID of the picture detail page
                like_count = item["like_count"]  # Number of likes
                pic_num = item["pic_num"] if "pic_num" in item else 0  # Total number of pictures
                reply_count = item["reply_count"]
                share_count = item["share_count"]
                uid = item["uid"]
                plain = item["plain"]
                uname = item["uname"]
                list_infos.append({"avatar": avatar,
                                   "item_id": item_id,
                                   "like_count": like_count,
                                   "pic_num": pic_num,
                                   "reply_count": reply_count,
                                   "share_count": share_count,
                                   "uid": uid,
                                   "plain": plain,
                                   "uname": uname})
            except Exception as e:
                print(e)
                continue
        return list_infos
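To see what the extraction does to a single entry, here is a compact, self-contained variant applied to a made-up sample. The field names come from the code above; every value in `sample` is invented, and `extract` is a hypothetical helper rather than part of the original crawler.

```python
FIELDS = ["avatar", "item_id", "like_count", "pic_num",
          "reply_count", "share_count", "uid", "plain", "uname"]


def extract(item_detail):
    """Keep only the stored fields; pic_num falls back to 0 when absent."""
    record = {name: item_detail[name] for name in FIELDS if name != "pic_num"}
    record["pic_num"] = item_detail.get("pic_num", 0)
    return record


# Invented sample in the shape the parser expects (note: no pic_num key)
sample = {
    "item_detail": {
        "avatar": "https://example.com/avatar.jpg",
        "item_id": "123",
        "like_count": 10,
        "reply_count": 2,
        "share_count": 1,
        "uid": 42,
        "plain": "sample text",
        "uname": "someone",
        "extra_field": "ignored",
    }
}

record = extract(sample["item_detail"])
print(record["pic_num"])  # 0
```

Fields outside the list, such as `extra_field` here, are dropped, so only the nine chosen keys reach the database.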


That completes the crawler, and the code runs.