Introduction to FFmpeg: Common API usage and C language development

In a previous project I transcoded video from C# by invoking the ffmpeg command-line tool. Commands are easy to use, but for more complex audio/video development on top of FFmpeg, the meaning and logic of the code are hard to follow without some understanding of the underlying audio and video concepts. Out of interest, I recently started learning the FFmpeg API.

Understanding Related Concepts

1. Basic concepts of multimedia files

- A multimedia file is a container

- A container holds several streams (Stream/Track)

- Each stream is encoded with its own encoder

- Data read from a stream is called a packet

- A packet contains one or more frames
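To make the hierarchy concrete, here is a toy model in plain C. The types `ToyContainer`, `ToyStream`, and `ToyPacket` are hypothetical and only loosely mirror FFmpeg's `AVFormatContext`, `AVStream`, and `AVPacket`, which carry many more fields. The function counts the frames belonging to one stream by filtering packets on their stream index, the same filtering the demuxing loops later in this article perform.

```c
#include <stddef.h>

/* Hypothetical toy types, NOT FFmpeg structures. */
typedef struct {
    int stream_index; /* which stream (track) this packet belongs to */
    int nb_frames;    /* a packet holds one or more frames */
} ToyPacket;

typedef struct {
    const char *codec_name; /* each stream has its own codec */
} ToyStream;

typedef struct {
    ToyStream streams[2];     /* e.g. stream 0 = video, stream 1 = audio */
    int nb_streams;
    const ToyPacket *packets; /* packets in file order, streams interleaved */
    size_t nb_packets;
} ToyContainer;

/* Sum the frames carried by the packets of one stream. */
int frames_in_stream(const ToyContainer *c, int stream_index)
{
    int total = 0;
    for (size_t i = 0; i < c->nb_packets; i++)
        if (c->packets[i].stream_index == stream_index)
            total += c->packets[i].nb_frames;
    return total;
}
```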

2. Audio quantization and encoding

- Quantization and encoding convert an analog signal into a digital one (continuous -> discrete); computers can only process discrete data.

- Analog signal -> sampling -> quantization -> encoding -> digital signal

- Sample size (the basic quantization parameter): the number of bits used to store one sample, commonly 16 bits.

- Sampling rate: the sampling frequency, i.e. the number of samples taken per second. Common rates are 8 kHz, 16 kHz, 32 kHz, 44.1 kHz, and 48 kHz. The higher the sampling rate, the more faithful and natural the reproduced sound, and, of course, the larger the amount of data.

- Number of channels: to reproduce the real sound field during playback, sound is captured from several positions at the same time during recording, one channel per position. The number of channels equals the number of sound sources during recording, or the number of corresponding speakers during playback. Common configurations are mono, stereo (two channels), and multichannel.

- Bit rate: also called code rate, the number of bits transmitted per second, measured in bps (bits per second). The higher the bit rate, the more data is transmitted per second and the better the sound quality.
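The quantization step can be sketched as follows. The input amplitudes are assumed to be already sampled and normalized to [-1.0, 1.0]; each one is scaled to the 16-bit sample size and rounded to an integer, which yields the signed 16-bit PCM samples a WAV file stores. `quantize_s16` is a hypothetical helper for illustration, not an FFmpeg function.

```c
#include <stddef.h>
#include <stdint.h>

/* Quantize normalized amplitudes to signed 16-bit samples. */
void quantize_s16(const double *in, int16_t *out, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        double x = in[i];
        if (x > 1.0) x = 1.0;   /* clip out-of-range input */
        if (x < -1.0) x = -1.0;
        double scaled = x * 32767.0;
        /* round to nearest, halfway cases away from zero */
        out[i] = (int16_t)(scaled >= 0 ? scaled + 0.5 : scaled - 0.5);
    }
}
```

With 16 bits per sample there are 65536 possible levels; a smaller sample size would give coarser steps and more quantization noise.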

Bit rate formula: bit rate = sampling rate * sample size * number of channels. For example, a PCM-encoded WAV file with a sampling rate of 44.1 kHz, a sample size of 16 bits, and two channels has a bit rate of 44.1 kHz * 16 bit * 2 = 1411.2 kbit/s. One minute of such audio takes (1411.2 * 1000 * 60) / 8 / 1024 / 1024 ≈ 10.09 MB.
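The same arithmetic as a small C sketch (the helper names are hypothetical). It reproduces the 1411.2 kbit/s and roughly 10.09 MB figures above; because the size is divided by 1024 twice, the unit is strictly MiB.

```c
/* Bit rate of uncompressed PCM: sampling rate * sample size * channels. */
long pcm_bit_rate(long sample_rate_hz, int bits_per_sample, int channels)
{
    return sample_rate_hz * bits_per_sample * channels;
}

/* Size in MiB of `seconds` of PCM audio at the given bit rate. */
double pcm_size_mib(long bit_rate_bps, double seconds)
{
    double bytes = bit_rate_bps * seconds / 8.0; /* bits -> bytes */
    return bytes / 1024.0 / 1024.0;              /* bytes -> MiB */
}
```

For example, `pcm_size_mib(pcm_bit_rate(44100, 16, 2), 60)` gives about 10.09.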

3. Time Base

- time_base is the unit in which time is measured. For example, time_base = {1,40} divides one second into 40 units of 1/40 second each. In FFmpeg, av_q2d(time_base) converts the time base into a floating-point number, here 1/40 = 0.025 seconds. If a video frame has a pts of 800, i.e. 800 units, it corresponds to pts * av_q2d(time_base) = 800 * (1/40) = 20 s, so the frame is presented at the 20th second. Different formats use different time bases.
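A minimal sketch of this conversion, assuming a stand-in `Rational` type with the same `num`/`den` layout as FFmpeg's `AVRational`; `q2d` does what `av_q2d()` does, namely divide the numerator by the denominator as a `double`. Both names are hypothetical.

```c
#include <stdint.h>

/* Stand-in for FFmpeg's AVRational (hypothetical name). */
typedef struct { int num; int den; } Rational;

/* Same idea as av_q2d(): convert a rational to a double. */
double q2d(Rational r)
{
    return r.num / (double)r.den;
}

/* Seconds represented by a timestamp counted in time_base units. */
double ts_to_seconds(int64_t ts, Rational time_base)
{
    return ts * q2d(time_base);
}
```

For example, `ts_to_seconds(800, (Rational){1, 40})` evaluates to 20 seconds, matching the calculation above.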

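- PTS (presentation timestamp) is the time at which a frame is displayed; DTS (decoding timestamp) is the time at which it is decoded. The two differ when frames are decoded in a different order than they are displayed, for example with B-frames.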

PTS for audio: take AAC as an example. A raw AAC frame contains 1024 samples plus related data covering a fixed period of time, i.e. 1024 samples per frame. At a sampling rate of 44.1 kHz (44100 samples per second), one second of AAC audio contains 44100/1024 ≈ 43.07 frames, and each frame lasts 1024/44100 s ≈ 23.2 ms, from which the pts of every frame can be calculated.

- Conversion formulas, where st is the AVStream pointer:
  - Position of a frame (seconds) = pts * av_q2d(st->time_base)
  - Length of a stream (seconds) = st->duration * av_q2d(st->time_base)

- Time base conversion, where "internal timestamp" is a timestamp such as a PTS/DTS expressed in FFmpeg's internal AV_TIME_BASE units (microseconds):
  - internal timestamp = AV_TIME_BASE * time (seconds)
  - time (seconds) = internal timestamp * av_q2d(AV_TIME_BASE_Q)
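The AAC example and the conversion formulas above, sketched in C. The sketch assumes the audio stream's time base is 1/sample_rate (common for audio, but read `st->time_base` in real code), so the pts of frame n is simply n * 1024. `MICROSECONDS` mirrors the value of `AV_TIME_BASE` (1000000); all function names are hypothetical.

```c
#include <stdint.h>

#define AAC_FRAME_SAMPLES 1024 /* samples per raw AAC frame */
#define MICROSECONDS 1000000   /* same value as FFmpeg's AV_TIME_BASE */

/* pts of the n-th AAC frame, assuming time_base = 1/sample_rate */
int64_t aac_frame_pts(int64_t n)
{
    return n * AAC_FRAME_SAMPLES;
}

/* pts * av_q2d(time_base), with time_base = 1/sample_rate */
double pts_to_seconds(int64_t pts, int sample_rate)
{
    return pts / (double)sample_rate;
}

/* internal timestamp = AV_TIME_BASE * time (seconds); rounds to the
 * nearest microsecond, assuming seconds >= 0 */
int64_t seconds_to_internal(double seconds)
{
    return (int64_t)(seconds * MICROSECONDS + 0.5);
}

/* time (seconds) = internal timestamp * av_q2d(AV_TIME_BASE_Q) */
double internal_to_seconds(int64_t ts)
{
    return ts / (double)MICROSECONDS;
}
```

At 44.1 kHz, `pts_to_seconds(aac_frame_pts(1), 44100)` is about 0.0232 s, the per-frame duration computed above.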

Environment Configuration

Related Downloads

Download the dev and shared packages from the official website. Make sure the downloads match your target platform.

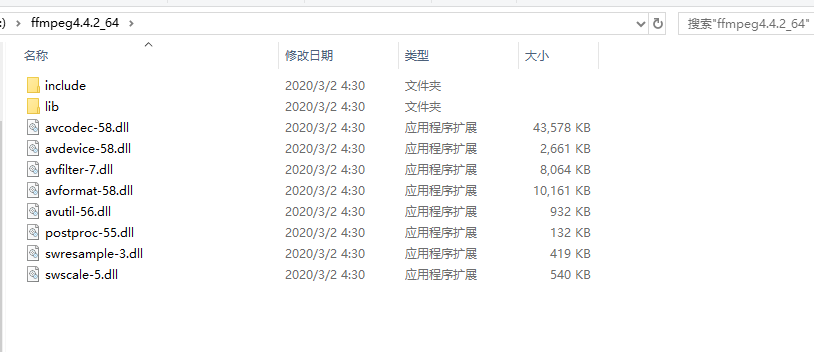

Extract the include and lib folders from the dev package into the directories shown below. Copy the DLL files from the shared package into the project's Debug directory; otherwise an error occurs when the program runs.

Environment Configuration

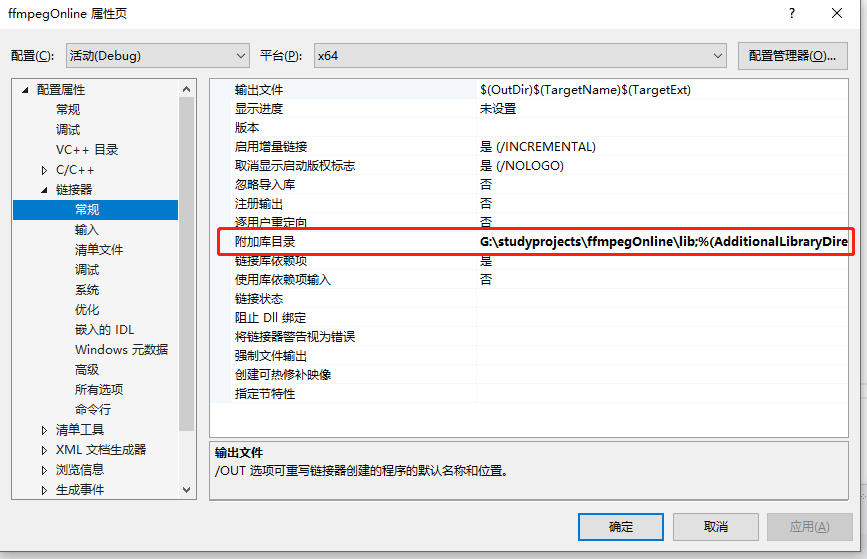

Create a C/C++ project in Visual Studio, then right-click the project and open its properties.

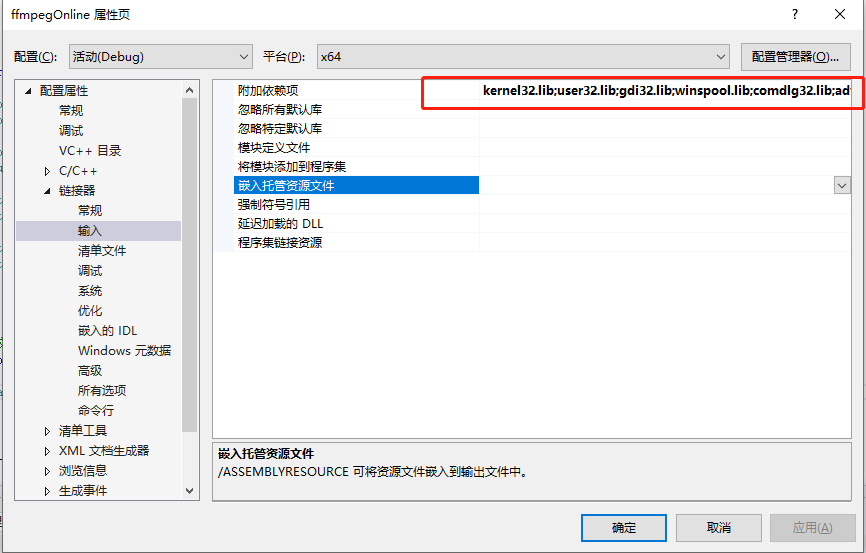

Add the following .lib files to the linker's additional dependencies:

avcodec.lib; avformat.lib; avutil.lib; avdevice.lib; avfilter.lib; postproc.lib; swresample.lib; swscale.lib

- libavcodec provides implementations of a wide range of encoders and decoders
- libavformat implements streaming protocols, container formats, and basic I/O access
- libavutil includes hashers, decompressors, and miscellaneous utility functions
- libavfilter provides various audio and video filters
- libavdevice provides access to capture and playback devices
- libswresample implements audio mixing and resampling
- libswscale implements color conversion and scaling

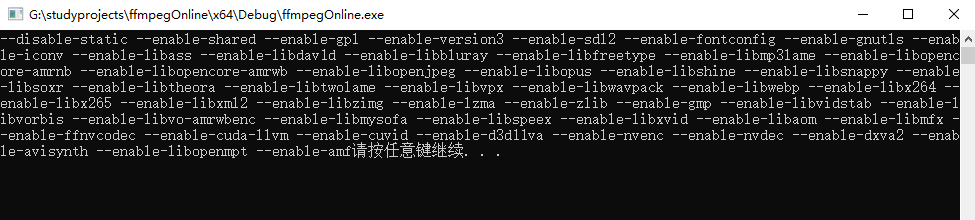

Testing the environment

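I used Visual Studio 2017 as the IDE. The following program prints FFmpeg's build configuration; if it compiles, links, and runs, the environment is set up correctly.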

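```cpp
#include <cstdio>
#include <cstdlib>

extern "C" {
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
}

int main(int argc, char* argv[])
{
    // Print the configure options FFmpeg was built with
    // (passed as an argument, never as the format string)
    printf("%s\n", avcodec_configuration());
    system("pause"); // keep the console window open (Windows only)
    return 0;
}
```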

Development Case

The goal is to mix two videos: take the audio track from one video and the video track from another, a feature similar to the lip-sync app Xiaokaxiu.

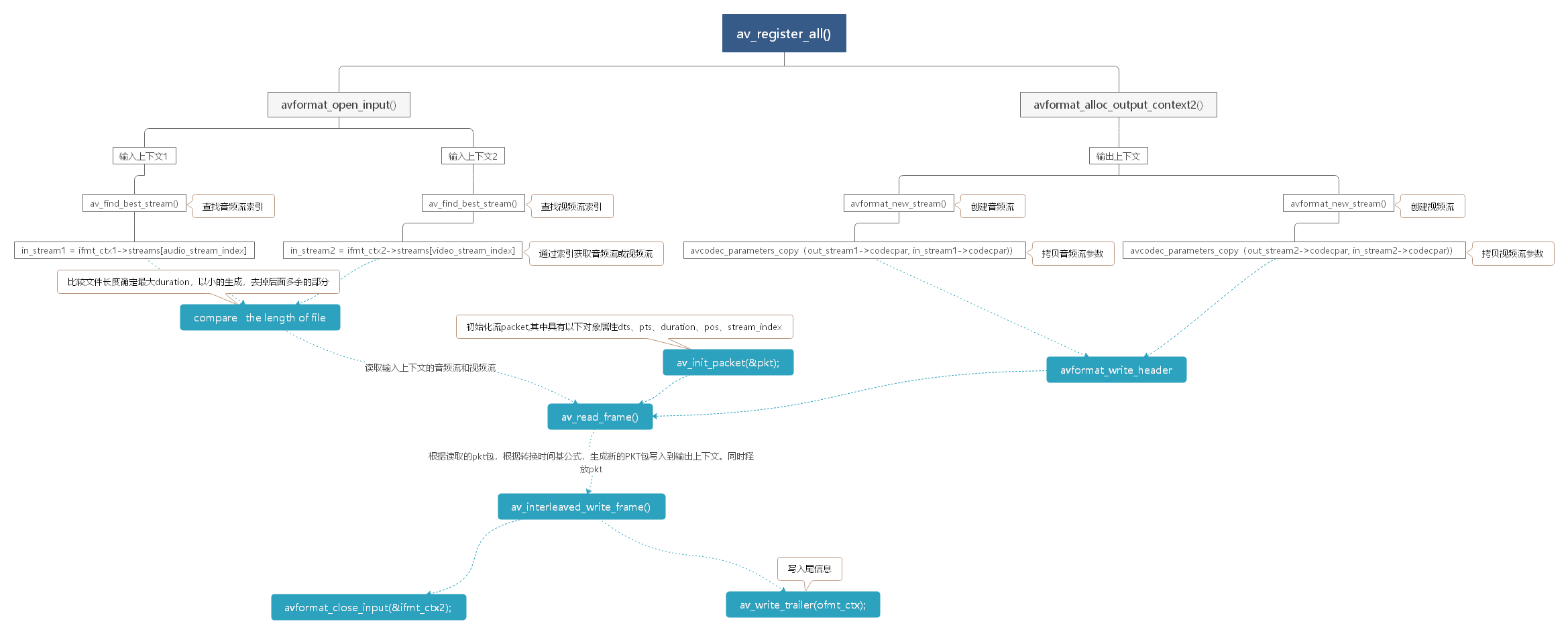

Processing logic and APIs used

- Register the components (av_register_all(); only needed before FFmpeg 4.0, so the code below omits it)

- Create the input and output contexts

- Get the input audio stream and the input video stream

- Create the output audio stream and the output video stream

- Copy the input stream parameters to the output streams

- Compare the stream durations to determine the length of the output file

- Write the header

- Prepare a packet, read the audio and video data separately, and write them to the output file

- Write the trailer and release all resources
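The "determine the output length" step and the per-packet check used by the copy loops can be isolated as below. This is a sketch with a hypothetical stand-in `Rational` type; it assumes, as FFmpeg does, that stream durations and packet timestamps are `int64_t` values counted in units of the stream's `time_base`.

```c
#include <stdint.h>

/* Stand-in for FFmpeg's AVRational (hypothetical name). */
typedef struct { int num; int den; } Rational;

static double to_seconds(int64_t ts, Rational tb)
{
    return ts * (tb.num / (double)tb.den);
}

/* Output length = the shorter of the two stream durations, so the audio
 * from one file and the video from the other end at the same time. */
double output_duration(int64_t dur1, Rational tb1, int64_t dur2, Rational tb2)
{
    double d1 = to_seconds(dur1, tb1);
    double d2 = to_seconds(dur2, tb2);
    return d1 < d2 ? d1 : d2;
}

/* Keep a packet only if it starts no later than the output length. */
int keep_packet(int64_t pts, Rational tb, double max_duration)
{
    return to_seconds(pts, tb) <= max_duration;
}
```

For instance, a 10-second audio stream paired with a 20-second video stream gives a 10-second output, and video packets whose pts lies beyond 10 s are skipped.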

Related Code

```cpp
extern "C" {
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libavformat/avio.h"
#include <libavutil/log.h>
}

#define ERROR_STR_SIZE 1024

int main(int argc, char const *argv[])
{
    int ret = -1;
    int err_code;
    char errors[ERROR_STR_SIZE];

    // Input 1 provides the audio track, input 2 the video track
    const char *src1 = "C:\\Users\\haizhengzheng\\Desktop\\meta.mp4";
    const char *src2 = "C:\\Users\\haizhengzheng\\Desktop\\mercury.mp4";
    const char *dst = "C:\\Users\\haizhengzheng\\Desktop\\amv.mp4";

    AVFormatContext *ifmt_ctx1 = NULL;
    AVFormatContext *ifmt_ctx2 = NULL;
    AVFormatContext *ofmt_ctx = NULL;
    const AVOutputFormat *ofmt = NULL;
    AVStream *in_stream1 = NULL, *in_stream2 = NULL;
    AVStream *out_stream1 = NULL, *out_stream2 = NULL;
    AVPacket *pkt = NULL;
    int audio_stream_index = -1;
    int video_stream_index = -1;
    // Output length in seconds (the shorter stream), so audio and video end together
    double max_duration = 0;
    int stream1 = 0, stream2 = 1; // output stream indexes: 0 = audio, 1 = video

    av_log_set_level(AV_LOG_DEBUG);

    // Open the two input files and read their stream information
    if ((err_code = avformat_open_input(&ifmt_ctx1, src1, NULL, NULL)) < 0 ||
        (err_code = avformat_find_stream_info(ifmt_ctx1, NULL)) < 0) {
        av_strerror(err_code, errors, ERROR_STR_SIZE);
        av_log(NULL, AV_LOG_ERROR, "Could not open src file, %s, %d(%s)\n", src1, err_code, errors);
        goto END;
    }
    if ((err_code = avformat_open_input(&ifmt_ctx2, src2, NULL, NULL)) < 0 ||
        (err_code = avformat_find_stream_info(ifmt_ctx2, NULL)) < 0) {
        av_strerror(err_code, errors, ERROR_STR_SIZE);
        av_log(NULL, AV_LOG_ERROR, "Could not open the second src file, %s, %d(%s)\n", src2, err_code, errors);
        goto END;
    }

    // Create the output context; the muxer is chosen from the file extension
    if ((err_code = avformat_alloc_output_context2(&ofmt_ctx, NULL, NULL, dst)) < 0) {
        av_strerror(err_code, errors, ERROR_STR_SIZE);
        av_log(NULL, AV_LOG_ERROR, "Failed to create an context of outfile, %d(%s)\n", err_code, errors);
        goto END;
    }
    ofmt = ofmt_ctx->oformat; // format information of the output file

    // Best audio stream in the first file, best video stream in the second file
    audio_stream_index = av_find_best_stream(ifmt_ctx1, AVMEDIA_TYPE_AUDIO, -1, -1, NULL, 0);
    video_stream_index = av_find_best_stream(ifmt_ctx2, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);
    if (audio_stream_index < 0 || video_stream_index < 0) {
        av_log(NULL, AV_LOG_ERROR, "Could not find the audio or video stream\n");
        goto END;
    }

    // Audio output stream: copy the codec parameters (no re-encoding)
    in_stream1 = ifmt_ctx1->streams[audio_stream_index];
    out_stream1 = avformat_new_stream(ofmt_ctx, NULL);
    if (!out_stream1) {
        av_log(NULL, AV_LOG_ERROR, "Failed to alloc out stream!\n");
        goto END;
    }
    if ((err_code = avcodec_parameters_copy(out_stream1->codecpar, in_stream1->codecpar)) < 0) {
        av_strerror(err_code, errors, ERROR_STR_SIZE);
        av_log(NULL, AV_LOG_ERROR, "Failed to copy codec parameter, %d(%s)\n", err_code, errors);
        goto END;
    }
    out_stream1->codecpar->codec_tag = 0; // let the muxer pick a tag valid for the output container

    // Video output stream: same procedure
    in_stream2 = ifmt_ctx2->streams[video_stream_index];
    out_stream2 = avformat_new_stream(ofmt_ctx, NULL);
    if (!out_stream2) {
        av_log(NULL, AV_LOG_ERROR, "Failed to alloc out stream!\n");
        goto END;
    }
    if ((err_code = avcodec_parameters_copy(out_stream2->codecpar, in_stream2->codecpar)) < 0) {
        av_strerror(err_code, errors, ERROR_STR_SIZE);
        av_log(NULL, AV_LOG_ERROR, "Failed to copy codec parameter, %d(%s)\n", err_code, errors);
        goto END;
    }
    out_stream2->codecpar->codec_tag = 0;

    // Print the output stream information
    av_dump_format(ofmt_ctx, 0, dst, 1);

    // Output length = the shorter stream; time (s) = st->duration * av_q2d(st->time_base),
    // where av_q2d() converts the AVRational time base to a double
    if (in_stream1->duration * av_q2d(in_stream1->time_base) > in_stream2->duration * av_q2d(in_stream2->time_base)) {
        max_duration = in_stream2->duration * av_q2d(in_stream2->time_base);
    } else {
        max_duration = in_stream1->duration * av_q2d(in_stream1->time_base);
    }

    // Open the output file (unless the format writes no file)
    if (!(ofmt->flags & AVFMT_NOFILE)) {
        if ((err_code = avio_open(&ofmt_ctx->pb, dst, AVIO_FLAG_WRITE)) < 0) {
            av_strerror(err_code, errors, ERROR_STR_SIZE);
            av_log(NULL, AV_LOG_ERROR, "Could not open output file, %s, %d(%s)\n", dst, err_code, errors);
            goto END;
        }
    }

    // Write the header; the muxer may change the output streams' time_base here
    if ((err_code = avformat_write_header(ofmt_ctx, NULL)) < 0) {
        av_strerror(err_code, errors, ERROR_STR_SIZE);
        av_log(NULL, AV_LOG_ERROR, "Failed to write header, %d(%s)\n", err_code, errors);
        goto END;
    }

    pkt = av_packet_alloc(); // replaces the deprecated av_init_packet()
    if (!pkt)
        goto END;

    // Read audio packets from the first file and write them to the output
    while (av_read_frame(ifmt_ctx1, pkt) >= 0) {
        // Keep only packets of the selected audio stream that start within max_duration
        if (pkt->stream_index == audio_stream_index &&
            pkt->pts * av_q2d(in_stream1->time_base) <= max_duration) {
            // Convert pts/dts/duration from the input to the output time base
            pkt->pts = av_rescale_q_rnd(pkt->pts, in_stream1->time_base, out_stream1->time_base,
                                        (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
            pkt->dts = av_rescale_q_rnd(pkt->dts, in_stream1->time_base, out_stream1->time_base,
                                        (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
            pkt->duration = av_rescale_q(pkt->duration, in_stream1->time_base, out_stream1->time_base);
            pkt->pos = -1;
            pkt->stream_index = stream1;
            av_interleaved_write_frame(ofmt_ctx, pkt); // takes over the packet's data
        }
        av_packet_unref(pkt); // release every packet, including skipped ones
    }

    // Read video packets from the second file and write them to the output
    while (av_read_frame(ifmt_ctx2, pkt) >= 0) {
        if (pkt->stream_index == video_stream_index &&
            pkt->pts * av_q2d(in_stream2->time_base) <= max_duration) {
            pkt->pts = av_rescale_q_rnd(pkt->pts, in_stream2->time_base, out_stream2->time_base,
                                        (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
            pkt->dts = av_rescale_q_rnd(pkt->dts, in_stream2->time_base, out_stream2->time_base,
                                        (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));
            pkt->duration = av_rescale_q(pkt->duration, in_stream2->time_base, out_stream2->time_base);
            pkt->pos = -1;
            pkt->stream_index = stream2;
            av_interleaved_write_frame(ofmt_ctx, pkt);
        }
        av_packet_unref(pkt);
    }

    // Write the trailer
    av_write_trailer(ofmt_ctx);
    ret = 0;

END:
    // Release resources
    av_packet_free(&pkt);
    if (ifmt_ctx1) {
        avformat_close_input(&ifmt_ctx1);
    }
    if (ifmt_ctx2) {
        avformat_close_input(&ifmt_ctx2);
    }
    if (ofmt_ctx) {
        if (!(ofmt_ctx->oformat->flags & AVFMT_NOFILE)) {
            avio_closep(&ofmt_ctx->pb);
        }
        avformat_free_context(ofmt_ctx);
    }
    return ret;
}
```
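The copy loops convert each packet's pts, dts, and duration from the input stream's time base to the output stream's, because the muxer may choose a different time base for the output. The sketch below shows, in simplified form, what `av_rescale_q()` computes: a * bq / cq, rounded to the nearest integer with halfway cases away from zero (the `AV_ROUND_NEAR_INF` behavior). `Rational` and `rescale_q` are hypothetical stand-ins; the real function also guards against 64-bit overflow, and with `AV_ROUND_PASS_MINMAX` it passes `AV_NOPTS_VALUE` through unchanged, which this sketch does not.

```c
#include <stdint.h>

/* Stand-in for FFmpeg's AVRational (hypothetical name). */
typedef struct { int num; int den; } Rational;

/* a * bq / cq = a * bq.num * cq.den / (bq.den * cq.num),
 * rounded to nearest, halfway cases away from zero.
 * Simplification: no overflow protection, no AV_NOPTS_VALUE handling. */
int64_t rescale_q(int64_t a, Rational bq, Rational cq)
{
    int64_t b = (int64_t)bq.num * cq.den;
    int64_t c = (int64_t)bq.den * cq.num; /* assumed > 0 */
    int64_t p = a * b;
    if (p >= 0)
        return (p + c / 2) / c;
    return -((-p + c / 2) / c); /* mirror the rounding for negative values */
}
```

For example, one AAC frame (1024 ticks at 1/44100) becomes `rescale_q(1024, (Rational){1, 44100}, (Rational){1, 90000})`, about 2089.8 ticks, rounded to 2090.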