catalogue

preface

Like Map Key Value It is a very classic structure in software development, which is often used to store data in memory.

This article mainly wants to discuss a concurrent container such as ConcurrentHashMap. Before we officially start, I think it is necessary to talk about HashMap. Without it, there will be no subsequent ConcurrentHashMap.

HashMap

As we all know, the bottom layer of HashMap is based on Array + linked list But the specific implementation is slightly different in jdk1.7 and 1.8.

Base 1.7

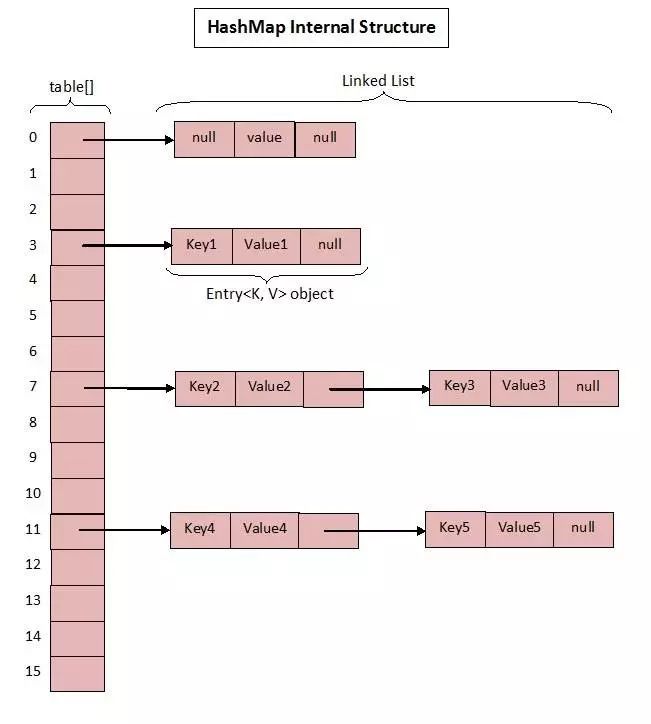

Data structure diagram in 1.7:

Let's take a look at the implementation in 1.7.

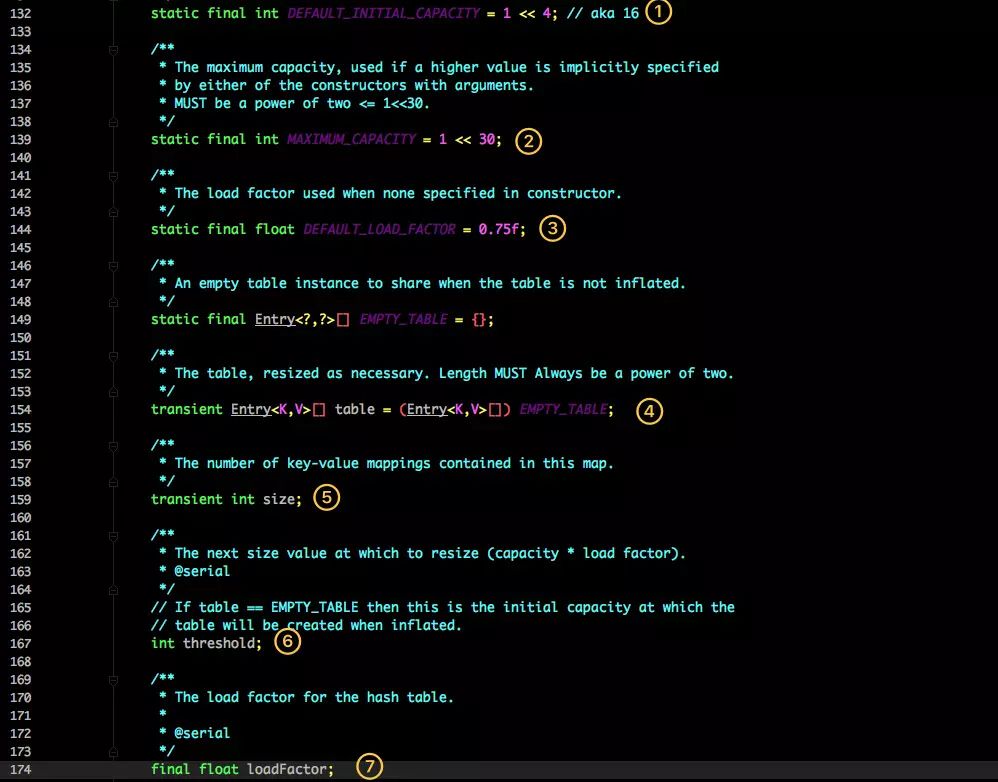

These are several core member variables in HashMap; See what they mean?

-

Initialize the bucket size. Because the bottom layer is an array, this is the default size of the array.

-

Bucket maximum.

-

Default load factor (0.75)

-

table An array that really holds data.

-

Map Size of storage quantity.

-

Bucket size, which can be specified explicitly during initialization.

-

Load factor, which can be specified explicitly during initialization.

Focus on the following load factors:

Because the capacity of a given HashMap is fixed, such as default initialization:

public HashMap() {

this(DEFAULT_INITIAL_CAPACITY, DEFAULT_LOAD_FACTOR);

}

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " + initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " + loadFactor);

this.loadFactor = loadFactor;

threshold = initialCapacity;

init();

}The given default capacity is 16 and the load factor is 0.75. During the use of Map, data is continuously stored in it. When the quantity reaches 16 * 0.75 = 12 It is necessary to expand the capacity of the current 16, and the expansion process involves rehash, data replication and other operations, so it is very performance consuming.

Therefore, it is generally recommended to estimate the size of HashMap in advance to minimize the performance loss caused by capacity expansion.

According to the code, you can see that what actually stores data is

transient Entry<K,V>[] table = (Entry<K,V>[]) EMPTY_TABLE;

This array, how is it defined?

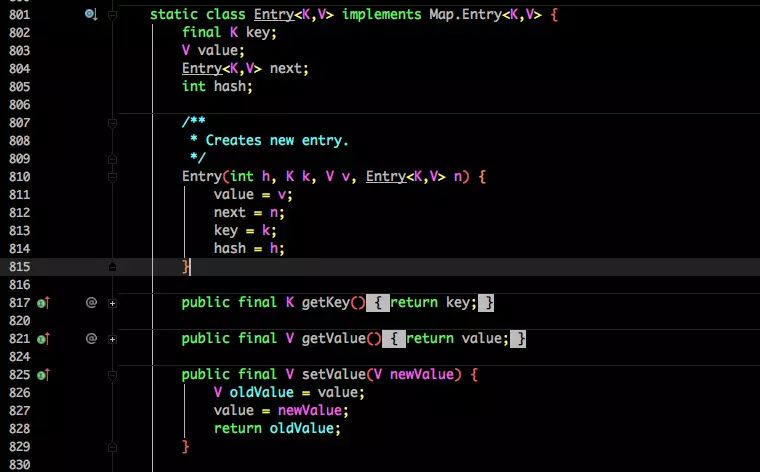

Entry is an internal class in HashMap. It is easy to see from its member variables:

-

Key is the key when writing.

-

Value is naturally value.

-

At the beginning, I mentioned that HashMap is composed of arrays and linked lists, so the next is used to implement the linked list structure.

-

hash stores the hashcode of the current key.

After knowing the basic structure, let's take a look at the important write and get functions:

put method

1 public V put(K key, V value) {

2 if (table == EMPTY_TABLE) {

3 inflateTable(threshold);

4 }

5 if (key == null)

6 return putForNullKey(value);

7 int hash = hash(key);

8 int i = indexFor(hash, table.length);

9 for (Entry<K,V> e = table[i]; e != null; e = e.next) {

10 Object k;

11 if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

12 V oldValue = e.value;

13 e.value = value;

14 e.recordAccess(this);

15 return oldValue;

16 }

17 }

18

19 modCount++;

20 addEntry(hash, key, value, i);

21 return null;

22 }

-

Determine whether the current array needs to be initialized.

-

If the key is empty, put a null value into it.

-

Calculate the hashcode according to the key.

-

Locate the bucket according to the calculated hashcode.

-

If the bucket is a linked list, you need to traverse to determine whether the hashcode and key in it are equal to the incoming key. If they are equal, overwrite them and return the original value.

-

If the bucket is empty, there is no data stored in the current position; Add an Entry object to write to the current location.

1 void addEntry(int hash, K key, V value, int bucketIndex) {

2 if ((size >= threshold) && (null != table[bucketIndex])) {

3 resize(2 * table.length);

4 hash = (null != key) ? hash(key) : 0;

5 bucketIndex = indexFor(hash, table.length);

6 }

7

8 createEntry(hash, key, value, bucketIndex);

9 }

10

11 void createEntry(int hash, K key, V value, int bucketIndex) {

12 Entry<K,V> e = table[bucketIndex];

13 table[bucketIndex] = new Entry<>(hash, key, value, e);

14 size++;

15 }When calling addEntry to write Entry, you need to judge whether capacity expansion is required.

Double expand if necessary, and re hash and locate the current key.

And in createEntry The bucket in the current location will be transferred into the new bucket. If the current bucket has a value, a linked list will be formed at the location.

get method

Let's look at the get function:

1 public V get(Object key) {

2 if (key == null)

3 return getForNullKey();

4 Entry<K,V> entry = getEntry(key);

5

6 return null == entry ? null : entry.getValue();

7 }

8

9 final Entry<K,V> getEntry(Object key) {

10 if (size == 0) {

11 return null;

12 }

13

14 int hash = (key == null) ? 0 : hash(key);

15 for (Entry<K,V> e = table[indexFor(hash, table.length)];

16 e != null;

17 e = e.next) {

18 Object k;

19 if (e.hash == hash &&

20 ((k = e.key) == key || (key != null && key.equals(k))))

21 return e;

22 }

23 return null;

24 }-

First, calculate the hashcode according to the key, and then locate it in the specific bucket.

-

Judge whether the location is a linked list.

-

It's not a linked list Key, hashcode of key Returns a value based on equality.

-

For a linked list, you need to traverse until the key and hashcode are equal.

-

If you don't get anything, you can directly return null.

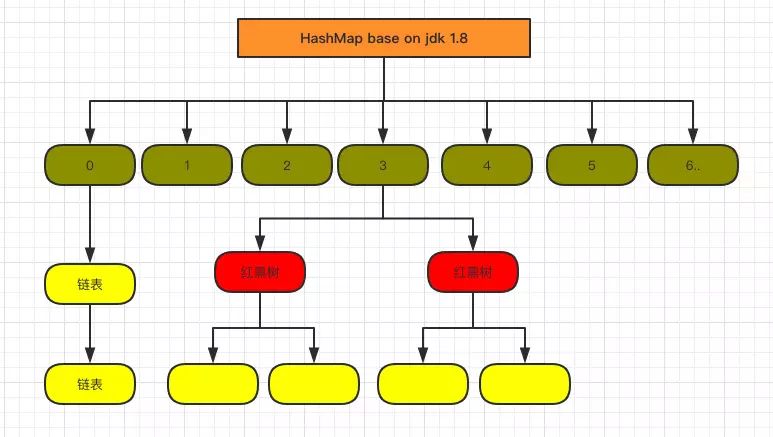

Base 1.8

I don't know the implementation of 1.7. Do you see the points that need to be optimized?

In fact, one obvious thing is:

When the Hash conflict is serious, the linked list formed on the bucket will become longer and longer, so the efficiency of query will be lower and lower; The time complexity is O(N).

Therefore, 1.8 focuses on optimizing the query efficiency.

1.8 HashMap structure diagram:

Let's take a look at several core member variables:

1 static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16 2 3 /** 4 * The maximum capacity, used if a higher value is implicitly specified 5 * by either of the constructors with arguments. 6 * MUST be a power of two <= 1<<30. 7 */ 8 static final int MAXIMUM_CAPACITY = 1 << 30; 9 10 /** 11 * The load factor used when none specified in constructor. 12 */ 13 static final float DEFAULT_LOAD_FACTOR = 0.75f; 14 15 static final int TREEIFY_THRESHOLD = 8; 16 17 transient Node<K,V>[] table; 18 19 /** 20 * Holds cached entrySet(). Note that AbstractMap fields are used 21 * for keySet() and values(). 22 */ 23 transient Set<Map.Entry<K,V>> entrySet; 24 25 /** 26 * The number of key-value mappings contained in this map. 27 */ 28 transient int size;

It is almost the same as 1.7, but there are several important differences:

-

TREEIFY_THRESHOLD The threshold used to determine whether the linked list needs to be converted into a red black tree.

-

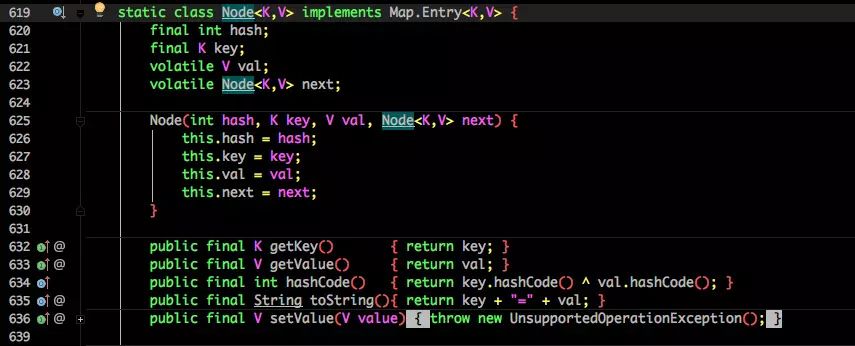

Change HashEntry to Node.

In fact, the core composition of Node is the same as that of HashEntry in 1.7 key value hashcode next And other data.

Let's look at the core method.

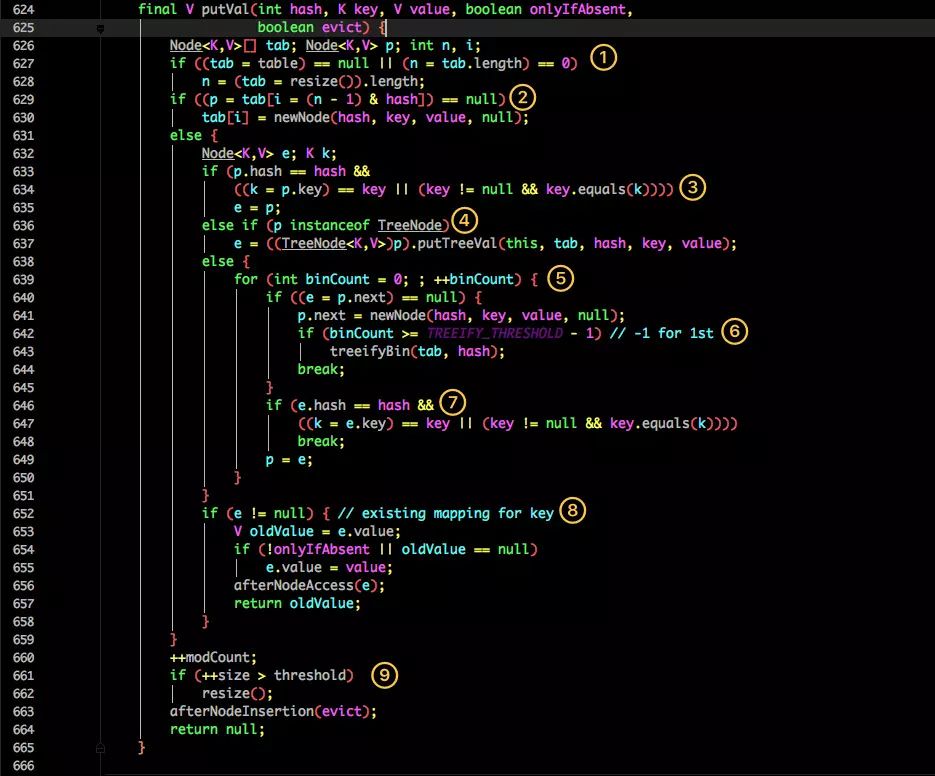

put method

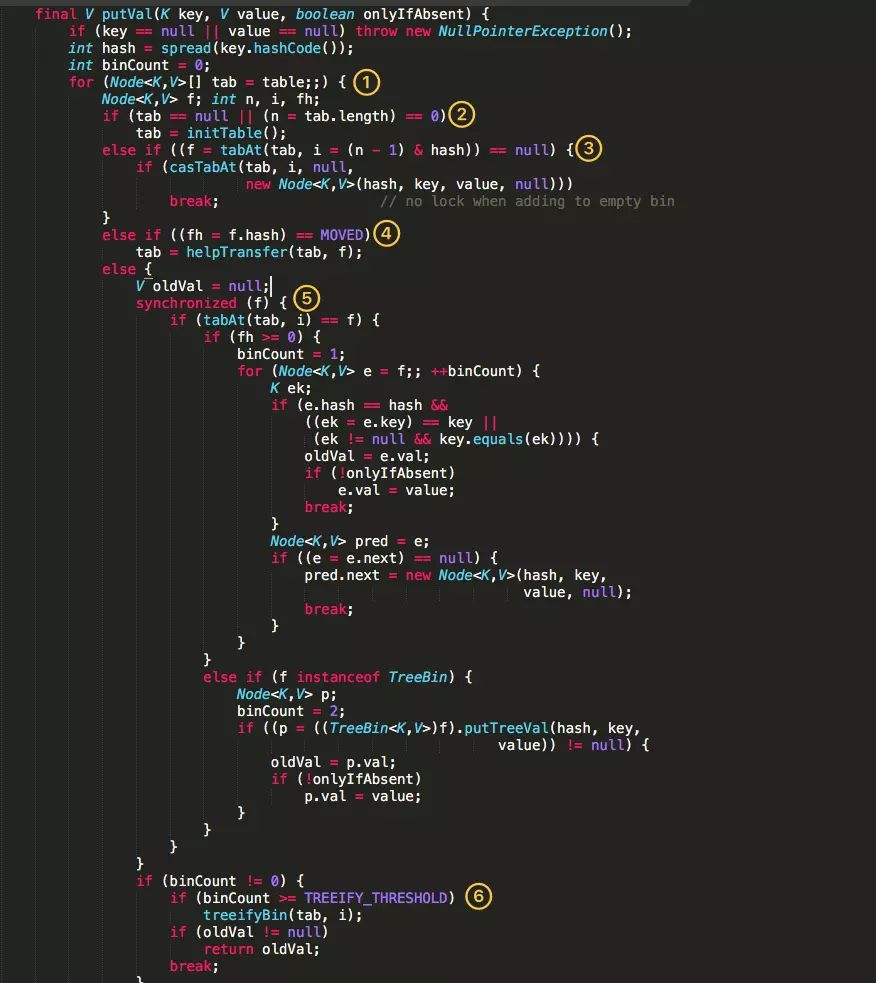

It seems to be more complex than 1.7. We disassemble it step by step:

-

Judge whether the current bucket is empty. If it is empty, it needs to be initialized (whether to initialize will be determined in resize).

-

Locate the hashcode of the current key into a specific bucket and judge whether it is empty. If it is empty, it indicates that there is no Hash conflict. Just create a new bucket at the current location.

-

If the current bucket has a value (Hash conflict), compare the values in the current bucket Key, hashcode of key Whether it is equal to the written key is assigned to e. In step 8, the assignment and return will be carried out uniformly.

-

If the current bucket is a red black tree, data should be written in the way of a red black tree.

-

If it is a linked list, you need to encapsulate the current key and value into a new node and write it to the back of the current bucket (form a linked list).

-

Then judge whether the size of the current linked list is greater than the preset threshold. If it is greater than, it will be converted to red black tree.

-

If the same key is found during the traversal, exit the traversal directly.

-

If e != null It is equivalent to the existence of the same key, so you need to overwrite the value.

-

Finally, judge whether expansion is required.

get method

1 public V get(Object key) {

2 Node<K,V> e;

3 return (e = getNode(hash(key), key)) == null ? null : e.value;

4 }

5

6 final Node<K,V> getNode(int hash, Object key) {

7 Node<K,V>[] tab; Node<K,V> first, e; int n; K k; 8 if ((tab = table) != null && (n = tab.length) > 0 && 9 (first = tab[(n - 1) & hash]) != null) {

10 if (first.hash == hash && // always check first node

11 ((k = first.key) == key || (key != null && key.equals(k))))

12 return first;

13 if ((e = first.next) != null) {

14 if (first instanceof TreeNode)

15 return ((TreeNode<K,V>)first).getTreeNode(hash, key);

16 do {

17 if (e.hash == hash &&

18 ((k = e.key) == key || (key != null && key.equals(k))))

19 return e;

20 } while ((e = e.next) != null);

21 }

22 }

23 return null;

24 }The get method looks much simpler.

-

First, after the key hash, obtain the located bucket.

-

If the bucket is empty, null is returned directly.

-

Otherwise, judge whether the key of the first position of the bucket (possibly linked list or red black tree) is the key of the query, and if so, directly return value.

-

If the first one does not match, judge whether its next one is a red black tree or a linked list.

-

The red black tree returns the value according to the search method of the tree.

-

Otherwise, it will traverse the matching return value in the way of linked list.

From these two core methods (get/put), we can see that the large linked list is optimized in 1.8. After it is modified to red black tree, the query efficiency is directly improved to O(logn).

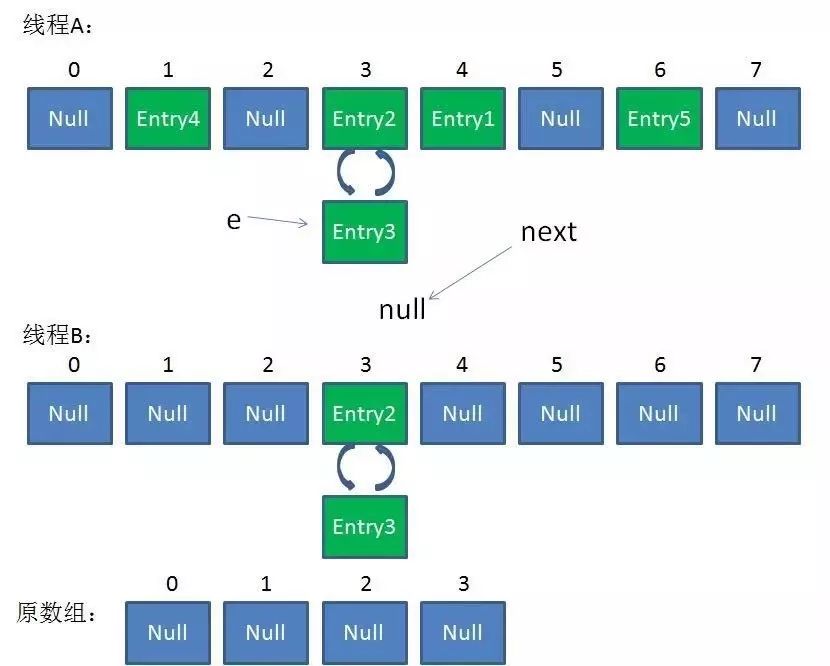

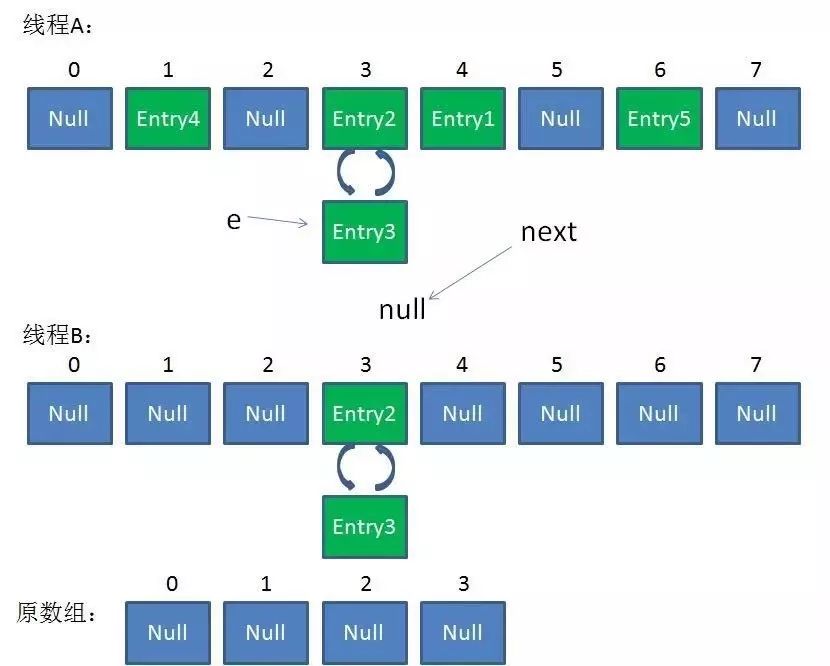

However, the original problems of HashMap also exist. For example, it is prone to dead loops when used in concurrent scenarios.

1final HashMap<String, String> map = new HashMap<String, String>();

2for (int i = 0; i < 1000; i++) {

3 new Thread(new Runnable() {

4 @Override

5 public void run() {

6 map.put(UUID.randomUUID().toString(), "");

7 }

8 }).start();

9}But why? Simple analysis.

After reading the above, I still remember that it will be called when HashMap is expanded resize() The method is that the concurrent operation here is easy to form a ring linked list on a bucket; In this way, when a nonexistent key is obtained, the calculated index is exactly the subscript of the ring linked list, and an endless loop will appear.

As shown below:

Traversal mode

It is also worth noting that the traversal methods of HashMap usually include the following:

1 Iterator<Map.Entry<String, Integer>> entryIterator = map.entrySet().iterator(); 2 while (entryIterator.hasNext()) { 3 Map.Entry<String, Integer> next = entryIterator.next();

4 System.out.println("key=" + next.getKey() + " value=" + next.getValue());

5 }

6

7 Iterator<String> iterator = map.keySet().iterator();

8 while (iterator.hasNext()){

9 String key = iterator.next();

10 System.out.println("key=" + key + " value=" + map.get(key));

11

12 }It is strongly recommended to use the first EntrySet for traversal.

The first method can get the key value at the same time. The second method needs to get the value through the key once, which is inefficient.

To sum up, HashMap: whether it is 1.7 or 1.8, it can be seen that JDK does not do any synchronization operation on it, so concurrency problems will occur, and even dead loops will lead to system unavailability.

Therefore, JDK has launched a special concurrent HashMap, which is located in java.util.concurrent Package, which is specially used to solve concurrency problems.

Friends who insist on seeing here have laid a solid foundation for ConcurrentHashMap. Let's officially start the analysis.

ConcurrentHashMap

Concurrent HashMap is also divided into versions 1.7 and 1.8, which are slightly different in implementation.

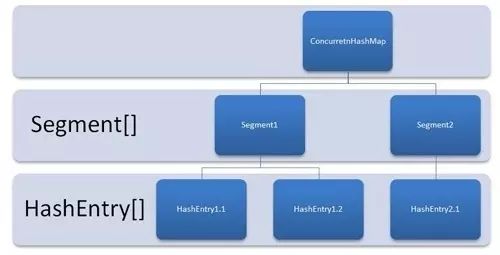

Base 1.7

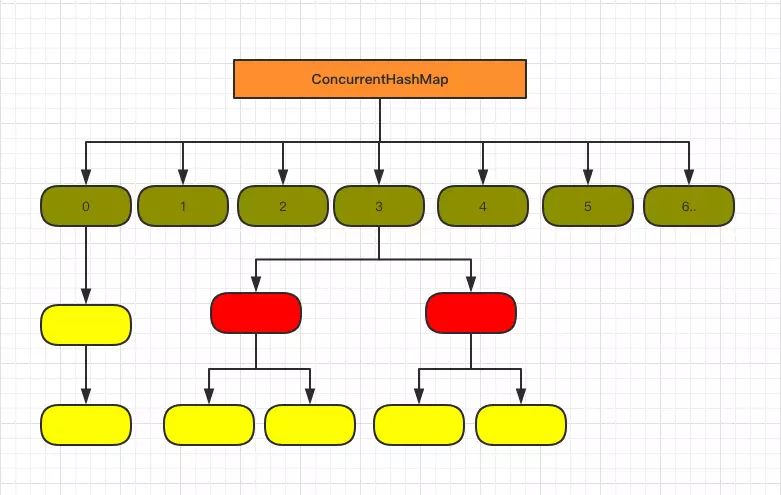

Let's take a look at the implementation of 1.7. The following is its structure diagram:

As shown in the figure, it is composed of Segment array and HashEntry. Like HashMap, it is still array plus linked list.

Its core member variables:

1 /** 2 * Segment Array. When storing data, you first need to locate the specific Segment Yes 3 */ 4 final Segment<K,V>[] segments; 5 6 transient Set<K> keySet; 7 transient Set<Map.Entry<K,V>> entrySet;

Segment is an internal class of ConcurrentHashMap. Its main components are as follows:

1 static final class Segment<K,V> extends ReentrantLock implements Serializable {

2

3 private static final long serialVersionUID = 2249069246763182397L;

4

5 // and HashMap Medium HashEntry The function is the same as the bucket 6 that really stores data transient volatile HashEntry<K,V>[] table;

7

8 transient int count;

9

10 transient int modCount;

11

12 transient int threshold;

13

14 final float loadFactor;

15

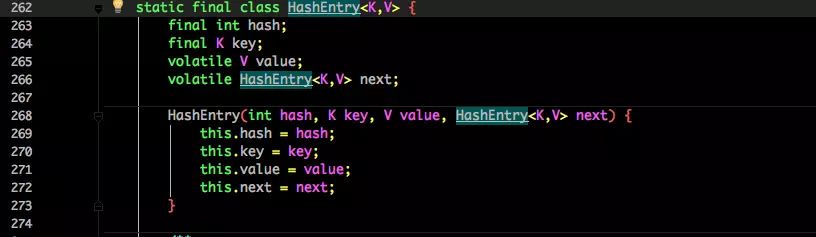

16 }See the composition of HashEntry:

It is very similar to HashMap. The only difference is that the core data such as value and linked list are modified by volatile to ensure the visibility during acquisition.

In principle, ConcurrentHashMap adopts Segment lock technology, in which Segment inherits ReentrantLock. Unlike HashTable, both put and get operations need to be synchronized. Theoretically, ConcurrentHashMap supports thread concurrency of currencylevel (number of Segment arrays). Every time a thread accesses a Segment using a lock, it will not affect other segments.

Let's also take a look at the core put get method.

put method

1 public V put(K key, V value) {

2 Segment<K,V> s;

3 if (value == null)

4 throw new NullPointerException();

5 int hash = hash(key); 6 int j = (hash >>> segmentShift) & segmentMask;

7 if ((s = (Segment<K,V>)UNSAFE.getObject // nonvolatile; recheck

8 (segments, (j << SSHIFT) + SBASE)) == null) // in ensureSegment 9 s = ensureSegment(j);

10 return s.put(key, hash, value, false);

11 }First, locate the Segment through the key, and then make a specific put in the corresponding Segment.

1 final V put(K key, int hash, V value, boolean onlyIfAbsent) {

2 HashEntry<K,V> node = tryLock() ? null :

3 scanAndLockForPut(key, hash, value);

4 V oldValue;

5 try {

6 HashEntry<K,V>[] tab = table;

7 int index = (tab.length - 1) & hash;

8 HashEntry<K,V> first = entryAt(tab, index);

9 for (HashEntry<K,V> e = first;;) {

10 if (e != null) {

11 K k;

12 if ((k = e.key) == key ||

13 (e.hash == hash && key.equals(k))) {

14 oldValue = e.value;

15 if (!onlyIfAbsent) {

16 e.value = value;

17 ++modCount;

18 }

19 break;

20 }

21 e = e.next;

22 }

23 else {

24 if (node != null)

25 node.setNext(first);

26 else

27 node = new HashEntry<K,V>(hash, key, value, first);

28 int c = count + 1;

29 if (c > threshold && tab.length < MAXIMUM_CAPACITY)

30 rehash(node);

31 else

32 setEntryAt(tab, index, node);

33 ++modCount;

34 count = c;

35 oldValue = null;

36 break;

37 }

38 }

39 } finally {

40 unlock();

41 }

42 return oldValue;

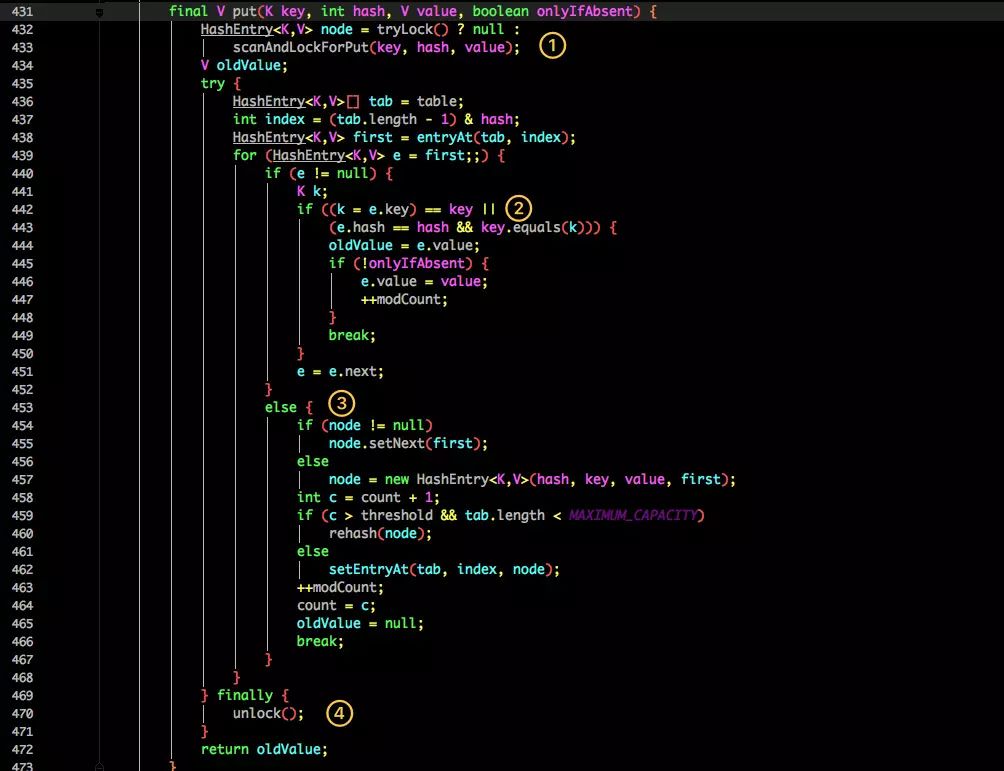

43 }Although the value in HashEntry is modified with volatile keyword, it does not guarantee the concurrency atomicity, so it still needs to be locked during put operation.

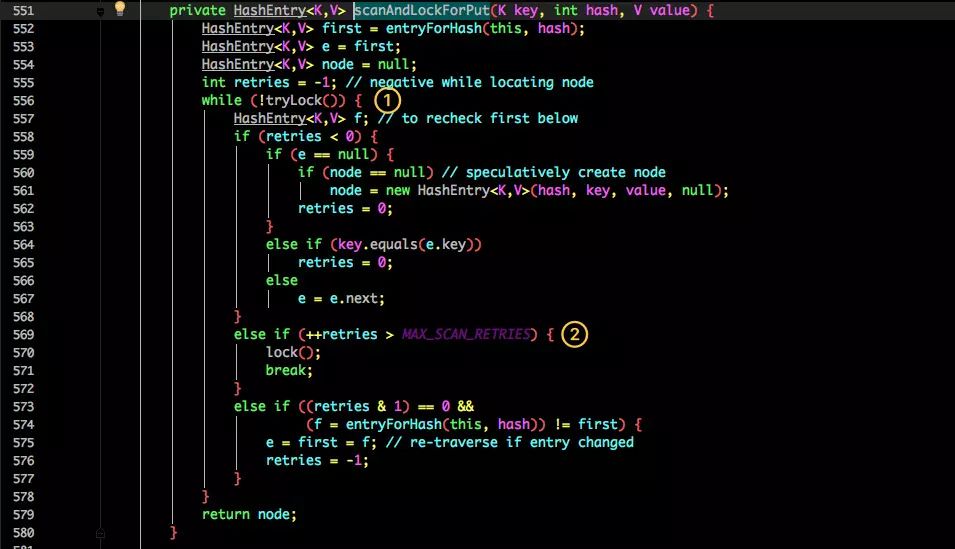

First, in the first step, you will try to obtain the lock. If the acquisition fails, there must be competition from other threads, then use the scanAndLockForPut() Spin acquisition lock.

-

Try to acquire the lock.

-

If the number of retries reaches MAX_SCAN_RETRIES It is changed to block lock acquisition to ensure success.

Then combine the diagram to see the flow of put.

-

Locate the table in the current Segment to the HashEntry through the hashcode of the key.

-

Traverse the HashEntry. If it is not empty, judge whether the passed key is equal to the currently traversed key. If it is equal, overwrite the old value.

-

If it is not empty, you need to create a HashEntry and add it to the Segment. At the same time, you will first judge whether you need to expand the capacity.

-

Finally, the lock of the current Segment obtained in 1 will be released.

get method

1 public V get(Object key) {

2 Segment<K,V> s; // manually integrate access methods to reduce overhead

3 HashEntry<K,V>[] tab;

4 int h = hash(key);

5 long u = (((h >>> segmentShift) & segmentMask) << SSHIFT) + SBASE;

6 if ((s = (Segment<K,V>)UNSAFE.getObjectVolatile(segments, u)) != null &&

7 (tab = s.table) != null) {

8 for (HashEntry<K,V> e = (HashEntry<K,V>) UNSAFE.getObjectVolatile

9 (tab, ((long)(((tab.length - 1) & h)) << TSHIFT) + TBASE);

10 e != null; e = e.next) {

11 K k;

12 if ((k = e.key) == key || (e.hash == h && key.equals(k)))

13 return e.value;

14 }

15 }

16 return null;

17 }The get logic is relatively simple:

You only need to locate the Key to the specific Segment through the Hash, and then locate the Key to the specific element through the Hash.

Because the value attribute in HashEntry is decorated with volatile keyword to ensure memory visibility, it is the latest value every time it is obtained.

The get method of ConcurrentHashMap is very efficient because the whole process does not need to be locked.

Base 1.8

1.7 has solved the concurrency problem and can support the concurrency of N segments for so many times, but there is still the problem of HashMap in version 1.7.

That is, the efficiency of query traversal of linked list is too low.

Therefore, some data structure adjustments have been made in 1.8.

First, let's look at the composition of the bottom layer:

Does it look similar to the 1.8 HashMap structure?

The original Segment lock is abandoned and the CAS + synchronized To ensure concurrency security.

The HashEntry storing data in 1.7 is also changed to Node, but the functions are the same.

Among them val next Are decorated with volatile to ensure visibility.

put method

Let's focus on the put function:

-

Calculate the hashcode according to the key.

-

Determine whether initialization is required.

-

f That is, the Node located by the current key. If it is empty, it means that data can be written in the current position. If CAS attempts to write, it will be successful.

-

If the current location is hashcode == MOVED == -1, capacity expansion is required.

-

If they are not satisfied, the synchronized lock is used to write data.

-

If the quantity is greater than TREEIFY_THRESHOLD To convert to red black tree.

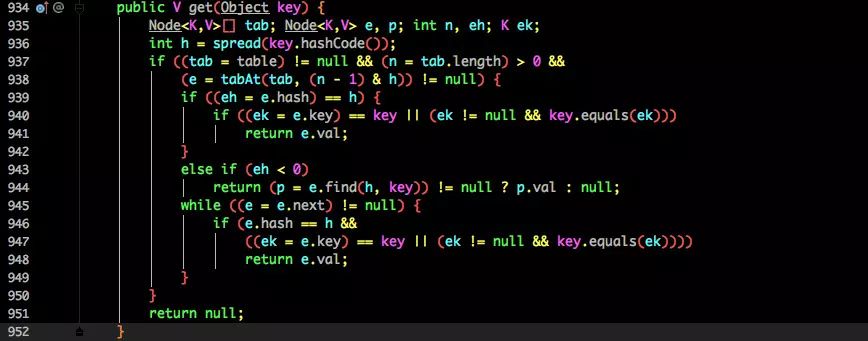

get method

-

According to the calculated hashcode addressing, if it is on the bucket, the value is returned directly.

-

If it is a red black tree, get the value as a tree.

-

If it is not satisfied, traverse and obtain the value in the way of linked list.

1.8 major changes have been made to the data structure of 1.7. After the red black tree is adopted, the query efficiency (O(logn)) can be guaranteed, and the ReentrantLock is even cancelled and changed to synchronized. This shows that the synchronized optimization is in place in the new version of JDK.

summary

After reading the different implementation methods of the whole HashMap and concurrent HashMap in 1.7 and 1.8, I believe everyone's understanding of them should be more in place.

In fact, this is also the key content of the interview. The usual routine is:

-

Talk about your understanding of HashMap and the get put process.

-

1.8 what optimization has been done?

-

Is it thread safe?

-

What problems can insecurity cause?

-

How to solve it? Is there a thread safe concurrency container?

-

How is ConcurrentHashMap implemented? What is the difference between the implementation of 1.7 and 1.8? Why?

I believe you can get back to the interviewer after reading this series of questions carefully.

In addition to being asked in the interview, there are actually quite a lot of applications, as mentioned earlier Cache in Guava The implementation of is to use the idea of ConcurrentHashMap.

At the same time, you can also learn the optimization ideas and concurrency solutions of JDK authors.