Introduction

- A few days ago, a friend and I discussed a problem with his company's system: a traditional monolithic application deployed as a cluster. He expected that concurrent traffic to some services might spike suddenly in the near future, and even with the cluster in place, load tests still showed very high request latency. He asked what he could do about it. After thinking it over, I concluded that changing the architecture was clearly not an option at this point. So I suggested interface-level rate limiting. Requests beyond what the system can handle are rejected up front, so the requests that are admitted do not suffer large delays even when concurrency spikes, and the user experience improves as well.

- What is interface rate limiting, and how is it implemented? How do you rate-limit a single application, and how do you rate-limit a distributed one? This article answers each of these questions.

Common rate limiting algorithms

- There are many rate limiting algorithms, but the four below are the most commonly used.

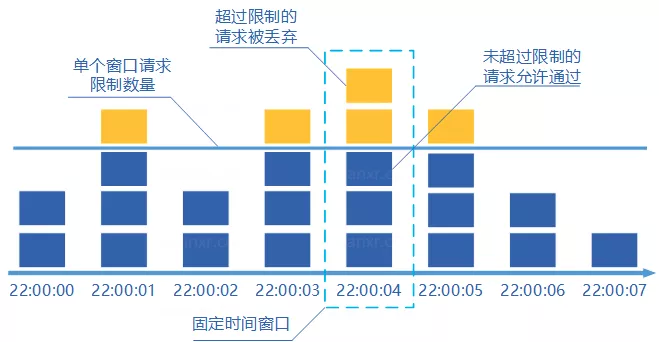

Fixed window counter

- The fixed window counter algorithm works as follows:

- Divide time into fixed windows.

- Each request in a window increments that window's counter by one.

- Once the counter exceeds the limit, all further requests in that window are rejected. When the next window starts, the counter is reset.

- The fixed window counter is the simplest algorithm, but at window boundaries it can let through up to twice the limit. Suppose the limit is 5 requests per second. If 5 requests pass in the last half second of the first window and 5 more pass in the first half second of the second window, then 10 requests have passed within a single one-second span.

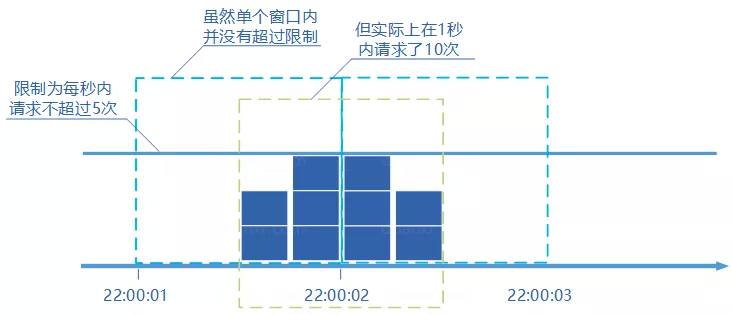

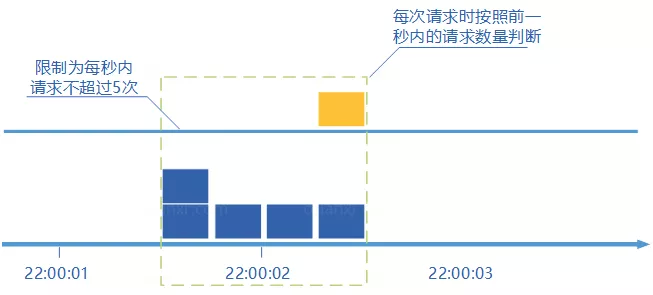
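The fixed window counter described above can be sketched in a few lines. The sketch below is a minimal single-JVM illustration and is not code from this article. The class and method names are illustrative. The current time is passed in as a parameter so that the boundary case can be replayed deterministically.

```java
/** Minimal fixed window counter for a single JVM (illustrative sketch). */
public class FixedWindowCounter {
    private final int limit;          // max requests allowed per window
    private final long windowMillis;  // window length in milliseconds
    private long windowStart = -1;    // start time of the current window
    private int count;                // requests admitted in the current window

    public FixedWindowCounter(int limit, long windowMillis) {
        this.limit = limit;
        this.windowMillis = windowMillis;
    }

    /** Returns true if the request is admitted; time is passed in to keep the demo deterministic. */
    public synchronized boolean tryAcquire(long nowMillis) {
        long currentWindow = nowMillis - (nowMillis % windowMillis);
        if (currentWindow != windowStart) {
            // A new window has started: reset the counter
            windowStart = currentWindow;
            count = 0;
        }
        if (count < limit) {
            count++;
            return true;
        }
        return false; // limit reached: reject until the next window
    }

    public static void main(String[] args) {
        FixedWindowCounter limiter = new FixedWindowCounter(5, 1000);
        int passed = 0;
        // 5 requests at the end of window [0, 1000) and 5 at the start of window [1000, 2000)
        for (int i = 0; i < 5; i++) if (limiter.tryAcquire(500 + i * 100)) passed++;
        for (int i = 0; i < 5; i++) if (limiter.tryAcquire(1000 + i * 100)) passed++;
        System.out.println(passed); // prints 10: all admitted within 900 ms
    }
}
```

The `main` method replays the scenario from the text. All 10 requests are admitted even though they arrive within 900 ms, because the counter resets at the 1000 ms boundary.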

Sliding window counter

- The sliding window counter algorithm works as follows:

- Time is divided into small intervals.

- Each request increments the counter of the interval it arrives in, and the time window spans several consecutive intervals.

- Whenever a new interval begins, the window slides forward: the oldest interval is dropped and the newest one is added.

- If the total number of requests in the current window exceeds the limit, further requests are rejected until the window slides on.

- The sliding window counter subdivides the window and slides it forward over time. This avoids the double burst allowed by the fixed window counter. However, the finer the intervals, the more memory the algorithm needs, because it keeps one counter per interval.

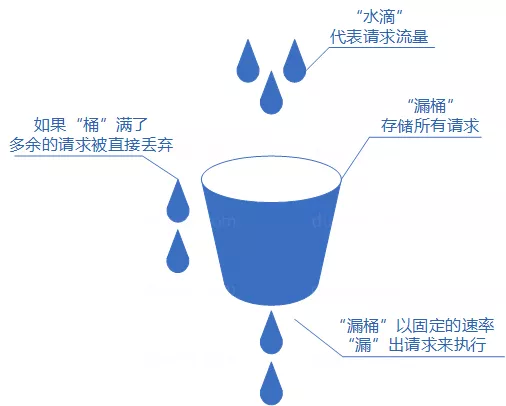
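The sliding window steps above can be sketched for a single JVM as follows. This is an illustrative sketch that assumes a ring buffer with one counter per interval; the demo uses 10 intervals of 100 ms to form a 1-second window. The class name is illustrative, and the current time is passed in explicitly.

```java
import java.util.Arrays;

/** Minimal sliding window counter for a single JVM (illustrative sketch). */
public class SlidingWindowCounter {
    private final int limit;           // max requests allowed in any window
    private final long intervalMillis; // length of one interval (sub-window)
    private final int[] counts;        // one counter per interval, used as a ring buffer
    private final long[] intervalIds;  // which interval each slot currently holds

    public SlidingWindowCounter(int limit, long windowMillis, int intervals) {
        this.limit = limit;
        this.intervalMillis = windowMillis / intervals;
        this.counts = new int[intervals];
        this.intervalIds = new long[intervals];
        Arrays.fill(intervalIds, Long.MIN_VALUE); // no interval recorded yet
    }

    /** Returns true if the request is admitted; nowMillis must be non-negative. */
    public synchronized boolean tryAcquire(long nowMillis) {
        long currentId = nowMillis / intervalMillis;
        int n = counts.length;
        // The window covers intervals currentId - n + 1 .. currentId; older slots are ignored
        int total = 0;
        for (int i = 0; i < n; i++) {
            if (intervalIds[i] > currentId - n) {
                total += counts[i];
            }
        }
        if (total >= limit) {
            return false;
        }
        int slot = (int) (currentId % n);
        if (intervalIds[slot] != currentId) {
            // The slot still holds an expired interval: discard it and start counting the new one
            intervalIds[slot] = currentId;
            counts[slot] = 0;
        }
        counts[slot]++;
        return true;
    }

    public static void main(String[] args) {
        // 5 requests per 1-second window, split into 10 intervals of 100 ms
        SlidingWindowCounter limiter = new SlidingWindowCounter(5, 1000, 10);
        int passed = 0;
        for (int i = 0; i < 5; i++) if (limiter.tryAcquire(500 + i * 100)) passed++;
        for (int i = 0; i < 5; i++) if (limiter.tryAcquire(1000 + i * 100)) passed++;
        System.out.println(passed); // prints 5: the requests after the boundary are rejected
    }
}
```

Replaying the fixed-window boundary scenario here admits only 5 requests. The 5 requests made just before the boundary are still inside the sliding window when the next 5 arrive.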

Leaky bucket algorithm

- The leaky bucket algorithm works as follows:

- Each request is put into the "leaky bucket" as a "water drop".

- The bucket leaks at a fixed rate, and each drop that leaks out is a request to execute. When the bucket is empty, leaking stops.

- If the bucket is full, any extra drops are discarded.

- The leaky bucket is usually implemented with a queue. Incoming requests are stored in the queue, the service takes them out and executes them at a fixed rate, and requests that arrive while the queue is full are rejected.

- The drawback of the leaky bucket is also obvious. When a large burst of requests arrives in a short time, every request has to wait in the queue before it is handled, even if the server is completely idle at that moment.

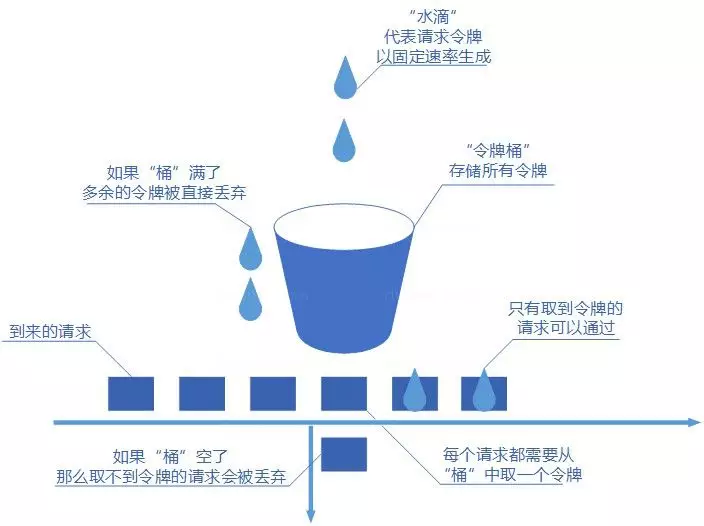
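The queue-based leaky bucket described above can be sketched as follows. This is a minimal illustration that assumes a bounded in-memory queue and a single scheduler thread taking out at most one request per tick. The class name and the 200 ms tick are illustrative.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Leaky bucket: a bounded FIFO queue drained at a fixed rate (illustrative sketch). */
public class LeakyBucket {
    private final int capacity;                        // how many "drops" the bucket can hold
    private final Queue<Runnable> queue = new ArrayDeque<>();

    public LeakyBucket(int capacity) {
        this.capacity = capacity;
    }

    /** Puts a request into the bucket; returns false if the bucket is full and the request is discarded. */
    public synchronized boolean offer(Runnable request) {
        if (queue.size() >= capacity) {
            return false;
        }
        return queue.offer(request);
    }

    /** Takes at most one request out; returns null when the bucket is empty (leaking stops). */
    public synchronized Runnable leakOne() {
        return queue.poll();
    }

    public static void main(String[] args) throws InterruptedException {
        LeakyBucket bucket = new LeakyBucket(3);
        for (int i = 1; i <= 5; i++) {
            int id = i;
            boolean accepted = bucket.offer(() -> System.out.println("executing request " + id));
            System.out.println("request " + id + (accepted ? " queued" : " discarded"));
        }
        // Drain at a fixed rate, one request every 200 ms, however idle the server is
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleAtFixedRate(() -> {
            Runnable request = bucket.leakOne();
            if (request != null) {
                request.run();
            }
        }, 0, 200, TimeUnit.MILLISECONDS);
        Thread.sleep(1000);
        scheduler.shutdown();
    }
}
```

The fixed drain rate is what causes the waiting described above. Even when the server could handle all queued requests at once, they still leave the bucket one tick at a time.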

Token bucket

- The token bucket algorithm works as follows:

- Tokens are generated at a fixed rate.

- Generated tokens are stored in the token bucket. Once the bucket is full, extra tokens are discarded. When a request arrives, it tries to take a token from the bucket, and only a request that obtains a token is executed.

- If the bucket is empty, the request is rejected.

- The token bucket spreads requests evenly over time, yet it still accepts bursts as large as the bucket capacity, that is, bursts the server can absorb. This is why it is one of the most widely used rate limiting algorithms.
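The token bucket can be sketched for a single JVM as follows. The names are illustrative. Instead of a background thread that adds tokens, the sketch refills lazily: on each call it credits the tokens generated since the previous call and caps them at the capacity. This has the same effect as generating tokens at a fixed rate and discarding the overflow.

```java
/** Minimal token bucket for a single JVM with lazy refill (illustrative sketch). */
public class TokenBucket {
    private final double capacity;        // maximum number of tokens the bucket holds
    private final double tokensPerMillis; // fixed generation rate
    private double tokens;                // tokens currently in the bucket
    private long lastRefillMillis;        // time up to which tokens have been credited

    public TokenBucket(int capacity, double tokensPerSecond, long nowMillis) {
        this.capacity = capacity;
        this.tokensPerMillis = tokensPerSecond / 1000.0;
        this.tokens = capacity; // start with a full bucket
        this.lastRefillMillis = nowMillis;
    }

    public synchronized boolean tryAcquire(long nowMillis) {
        if (nowMillis > lastRefillMillis) {
            // Credit the tokens generated since the last call; anything above capacity is discarded
            tokens = Math.min(capacity, tokens + (nowMillis - lastRefillMillis) * tokensPerMillis);
            lastRefillMillis = nowMillis;
        }
        if (tokens >= 1) {
            tokens -= 1; // the request takes one token
            return true;
        }
        return false; // bucket empty: reject
    }

    public static void main(String[] args) {
        TokenBucket bucket = new TokenBucket(5, 5.0, 0); // capacity 5, 5 tokens per second
        int burst = 0;
        for (int i = 0; i < 8; i++) if (bucket.tryAcquire(0)) burst++;
        System.out.println("burst admitted: " + burst);                 // 5: a full bucket absorbs the burst
        System.out.println("after 250 ms: " + bucket.tryAcquire(250));  // true: 1.25 tokens refilled
    }
}
```

The demo shows both properties mentioned above. A burst up to the capacity passes immediately. After that, requests are admitted only as fast as tokens are generated.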

Single-application implementation

- In a traditional single application, we only need to deal with multiple threads inside one process. Google's open-source Guava library provides the RateLimiter class, which is based on the token bucket algorithm and can be used to rate-limit a single application.

- Single-application rate limiting is not the focus of this article. Readers can consult the API documentation on Guava's official site, so it is not covered in detail here.
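The following is a minimal sketch of Guava's RateLimiter in a single application. It requires the com.google.guava:guava dependency. `RateLimiter.create(permitsPerSecond)` builds the limiter, and `tryAcquire()` returns false immediately when no permit is available. The class name and the `handleRequest` method are illustrative.

```java
import com.google.common.util.concurrent.RateLimiter;

public class GuavaRateLimiterDemo {
    // 10 permits per second, shared by every thread in this JVM
    private static final RateLimiter LIMITER = RateLimiter.create(10.0);

    /** Illustrative request handler: returns false when the request is rejected. */
    static boolean handleRequest() {
        // Non-blocking: reject at once if no permit is available right now
        if (!LIMITER.tryAcquire()) {
            return false;
        }
        // ... business logic would run here ...
        return true;
    }

    public static void main(String[] args) {
        int accepted = 0;
        for (int i = 0; i < 20; i++) {
            if (handleRequest()) {
                accepted++;
            }
        }
        System.out.println("accepted " + accepted + " of 20");
    }
}
```

If callers should wait instead of being rejected, `LIMITER.acquire()` blocks until a permit is available.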

Distributed rate limiting

- There are many ready-made tools for distributed rate limiting and circuit breaking, such as Hystrix and Sentinel. However, some companies do not allow third-party libraries, so they have to build their own.

- Redis executes commands on a single thread (with I/O multiplexing), so each command, and each Lua script, runs without interleaving with other clients. That makes it well suited to this task.

Implementing it with Redis

- We use the token bucket algorithm. As introduced above, a token bucket is defined by two parameters: the bucket capacity and the token generation rate.

- Here the rate is expressed as tokens generated per second, plus a bucket capacity. How do we implement that? A few questions need to be answered first.

- What data needs to be stored in Redis?

- The number of tokens currently in the bucket, plus a timestamp (last_time) from which the number of new tokens is calculated.

- Which data structure should hold it?

- The limit is applied per interface. Each interface has different business logic and can handle a different level of concurrency, so the limit has to be configured for each interface separately. We therefore use a Redis hash. The key is the interface's unique ID (for example, the request URI), and the hash stores two fields: the current token count and the timestamp.

- How do we calculate how many tokens to add?

- Compare the timestamp saved in Redis with the current time. If more than the refill interval has passed (1 second in Chen's implementation), new tokens are generated. The bucket then holds the current tokens plus the generated tokens, capped at the bucket capacity.

- How do we guarantee atomicity in Redis?

- Run the whole read-compute-write sequence as a Lua script. Redis executes a script atomically, so concurrent requests cannot interleave between the read and the write.

With these questions answered, the implementation is straightforward.
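The refill rule can be checked without Redis. The sketch below is a hypothetical pure-Java helper; `RefillMath` and `refill` are illustrative names. It assumes that the bucket credits `ratePerSecond` tokens for each full second elapsed and caps the total at the capacity. It also assumes that the stored timestamp advances only by the whole seconds credited, so a leftover partial second still counts toward the next refill.

```java
/** Hypothetical helper showing the per-second refill arithmetic; no Redis involved. */
public final class RefillMath {

    /** New token count and new refill timestamp after a refill. */
    public static final class Refill {
        public final long tokens;
        public final long lastTimeMillis;

        Refill(long tokens, long lastTimeMillis) {
            this.tokens = tokens;
            this.lastTimeMillis = lastTimeMillis;
        }
    }

    /**
     * Credits ratePerSecond tokens for every full second since lastTimeMillis,
     * caps the result at capacity, and advances the timestamp by whole seconds only.
     */
    public static Refill refill(long tokens, long lastTimeMillis, long nowMillis,
                                long capacity, long ratePerSecond) {
        long pastSeconds = Math.max(0, (nowMillis - lastTimeMillis) / 1000);
        long newTokens = Math.min(capacity, tokens + pastSeconds * ratePerSecond);
        return new Refill(newTokens, lastTimeMillis + pastSeconds * 1000);
    }

    public static void main(String[] args) {
        // Capacity 100, 10 tokens per second, 3 tokens left, 2.5 s since the last refill
        Refill r = refill(3, 0, 2500, 100, 10);
        System.out.println(r.tokens + " tokens, last_time = " + r.lastTimeMillis); // 23 tokens, last_time = 2000
    }
}
```

Here is a worked example. With 3 tokens left, a rate of 10 per second and 2.5 s elapsed, 20 tokens are added. The stored timestamp moves forward by 2 s rather than 2.5 s, so the remaining half second is not lost.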

Writing the code

1. The Lua script is as follows:

```lua
-- KEYS[1]: rate limit key (the interface's unique ID)
-- ARGV[1]: bucket capacity, ARGV[2]: tokens generated per second, ARGV[3]: current time in ms
local ratelimit_info = redis.pcall('HMGET', KEYS[1], 'last_time', 'current_token')
local last_time = tonumber(ratelimit_info[1])
local current_token = tonumber(ratelimit_info[2])
local max_token = tonumber(ARGV[1])
local token_rate = tonumber(ARGV[2])
local current_time = tonumber(ARGV[3])

if current_token == nil then
  -- First request for this key: start with a full bucket
  current_token = max_token
  last_time = current_time
else
  -- Add token_rate tokens for every full second since the last refill
  local past_seconds = math.floor((current_time - last_time) / 1000)
  if past_seconds > 0 then
    current_token = current_token + past_seconds * token_rate
    -- Advance by whole seconds only, so a partial second is not lost
    last_time = last_time + past_seconds * 1000
  end
  -- Prevent overflow
  if current_token > max_token then
    current_token = max_token
  end
end

local result = 0
if current_token > 0 then
  -- Take one token. last_time is deliberately not updated here; otherwise steady
  -- traffic faster than one request per second would keep postponing the refill
  result = 1
  current_token = current_token - 1
end

redis.call('HMSET', KEYS[1], 'last_time', last_time, 'current_token', current_token)
return result
```

- The script is called with four arguments: the unique ID of the interface (as KEYS[1]), the bucket capacity, the number of tokens generated per second, and the timestamp of the current request in milliseconds.

2. Spring Boot implementation

- Spring Data Redis is used to execute the Lua script.

- Redis serialization configuration:

```java
import com.fasterxml.jackson.annotation.JsonInclude;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;

// Configuration class name is illustrative; the bean method is the part that matters
@Configuration
public class RedisConfig {

    /**
     * Re-register the template: keys as strings, values as JSON
     */
    @Bean(value = "redisTemplate")
    @Primary
    public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory) {
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        template.setConnectionFactory(redisConnectionFactory);

        ObjectMapper objectMapper = new ObjectMapper();
        objectMapper.setSerializationInclusion(JsonInclude.Include.NON_NULL);
        // Deprecated since Jackson 2.10 in favor of activateDefaultTyping
        objectMapper.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL);

        // Set the serialization method: keys as strings, values as JSON
        StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();
        Jackson2JsonRedisSerializer<Object> jsonRedisSerializer = new Jackson2JsonRedisSerializer<>(Object.class);
        jsonRedisSerializer.setObjectMapper(objectMapper);

        template.setEnableDefaultSerializer(false);
        template.setKeySerializer(stringRedisSerializer);
        template.setHashKeySerializer(stringRedisSerializer);
        template.setValueSerializer(jsonRedisSerializer);
        template.setHashValueSerializer(jsonRedisSerializer);
        return template;
    }
}
```

- Rate limiting utility class:

```java
import java.util.Collections;

import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.data.redis.core.script.DefaultRedisScript;

/**
 * @Description Rate limiting utility
 * @Author CJB
 * @Date 2020/3/19 17:21
 */
public class RedisLimiterUtils {

    // ApplicationContextUtils is the project's own holder of the Spring context (not shown);
    // this class must not be loaded before the application context has started
    private static StringRedisTemplate stringRedisTemplate =
            ApplicationContextUtils.applicationContext.getBean(StringRedisTemplate.class);

    /**
     * Lua script for rate limiting (the same script as shown above)
     */
    private static final String TEXT =
            "local ratelimit_info = redis.pcall('HMGET', KEYS[1], 'last_time', 'current_token')\n" +
            "local last_time = tonumber(ratelimit_info[1])\n" +
            "local current_token = tonumber(ratelimit_info[2])\n" +
            "local max_token = tonumber(ARGV[1])\n" +
            "local token_rate = tonumber(ARGV[2])\n" +
            "local current_time = tonumber(ARGV[3])\n" +
            "if current_token == nil then\n" +
            "  current_token = max_token\n" +
            "  last_time = current_time\n" +
            "else\n" +
            "  local past_seconds = math.floor((current_time - last_time) / 1000)\n" +
            "  if past_seconds > 0 then\n" +
            "    current_token = current_token + past_seconds * token_rate\n" +
            "    last_time = last_time + past_seconds * 1000\n" +
            "  end\n" +
            "  if current_token > max_token then\n" +
            "    current_token = max_token\n" +
            "  end\n" +
            "end\n" +
            "local result = 0\n" +
            "if current_token > 0 then\n" +
            "  result = 1\n" +
            "  current_token = current_token - 1\n" +
            "end\n" +
            "redis.call('HMSET', KEYS[1], 'last_time', last_time, 'current_token', current_token)\n" +
            "return result";

    // Build the script object once instead of on every call
    private static final DefaultRedisScript<Long> SCRIPT = new DefaultRedisScript<>();

    static {
        SCRIPT.setScriptText(TEXT);
        SCRIPT.setResultType(Long.class);
    }

    /**
     * Get a token
     * @param key  request id
     * @param max  maximum concurrent capacity (bucket capacity)
     * @param rate how many tokens are generated per second
     * @return true if a token was obtained, false otherwise
     */
    public static boolean tryAcquire(String key, int max, int rate) {
        Long result = stringRedisTemplate.execute(SCRIPT, Collections.singletonList(key),
                Integer.toString(max), Integer.toString(rate), Long.toString(System.currentTimeMillis()));
        return Long.valueOf(1).equals(result);
    }
}
```

- The limiter is applied with an interceptor plus an annotation. The annotation is as follows:

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Inherited;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

/**
 * @Description Rate limiting annotation, placed on a class or method. An annotation on the method
 *              overrides the one on the class, the same as @Transactional
 * @Author CJB
 * @Date 2020/3/20 13:36
 */
@Inherited
@Target({ElementType.TYPE, ElementType.METHOD})
@Retention(RetentionPolicy.RUNTIME)
public @interface RateLimit {

    /**
     * Capacity of the token bucket, default 100
     */
    int capacity() default 100;

    /**
     * Number of tokens generated per second, default 10
     */
    int rate() default 10;
}
```

- The interceptor is as follows:

```java
import java.lang.reflect.Method;
import java.util.Objects;

// On Spring Boot 3, these are jakarta.servlet.http.* instead of javax.servlet.http.*
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import org.springframework.core.annotation.AnnotationUtils;
import org.springframework.stereotype.Component;
import org.springframework.web.method.HandlerMethod;
import org.springframework.web.servlet.HandlerInterceptor;

/**
 * @Description Rate limiting interceptor
 * @Author CJB
 * @Date 2020/3/19 14:34
 */
@Component
public class RateLimiterIntercept implements HandlerInterceptor {

    @Override
    public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) throws Exception {
        // Only controller methods are rate limited; other handlers (e.g. static resources) pass through
        if (handler instanceof HandlerMethod) {
            HandlerMethod handlerMethod = (HandlerMethod) handler;
            Method method = handlerMethod.getMethod();
            // Look for the annotation on the method first
            RateLimit rateLimit = AnnotationUtils.findAnnotation(method, RateLimit.class);
            // Not on the method: fall back to the class (getBeanType() returns the user class, not a CGLIB proxy)
            if (Objects.isNull(rateLimit)) {
                rateLimit = AnnotationUtils.findAnnotation(handlerMethod.getBeanType(), RateLimit.class);
            }
            // No annotation: let the request through
            if (Objects.isNull(rateLimit)) {
                return true;
            }
            // No token available: reject the request.
            // TimeOutException is a project-specific exception (not shown)
            if (!RedisLimiterUtils.tryAcquire(request.getRequestURI(), rateLimit.capacity(), rateLimit.rate())) {
                throw new TimeOutException();
            }
        }
        return true;
    }
}
```

The Spring Boot configuration that registers the interceptor is not included here. With the code above, the distributed rate limiter is complete.
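If you need the registration step, the following is a minimal sketch that assumes Spring MVC's `WebMvcConfigurer`. The class names `RateLimitWebConfig` and `OrderController`, the paths, and the annotation values are hypothetical examples.

```java
import org.springframework.context.annotation.Configuration;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.servlet.config.annotation.InterceptorRegistry;
import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

// Hypothetical configuration class: registers RateLimiterIntercept for every request path
@Configuration
public class RateLimitWebConfig implements WebMvcConfigurer {

    private final RateLimiterIntercept rateLimiterIntercept;

    // The interceptor is a @Component, so Spring injects it through this constructor
    public RateLimitWebConfig(RateLimiterIntercept rateLimiterIntercept) {
        this.rateLimiterIntercept = rateLimiterIntercept;
    }

    @Override
    public void addInterceptors(InterceptorRegistry registry) {
        registry.addInterceptor(rateLimiterIntercept).addPathPatterns("/**");
    }
}

// Hypothetical controller showing class-level and method-level @RateLimit
@RestController
@RateLimit(capacity = 50, rate = 5) // applies to every method without its own annotation
class OrderController {

    @GetMapping("/orders")
    public String list() {
        return "orders";
    }

    @RateLimit(capacity = 10, rate = 1) // overrides the class-level settings for this method
    @PostMapping("/orders/create")
    public String create() {
        return "created";
    }
}
```

Because the interceptor uses the request URI as the Redis key, two handlers mapped to the same URI (for example, GET and POST on one path) would share a single bucket. That is why the sketch gives them different paths.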
