Ingress

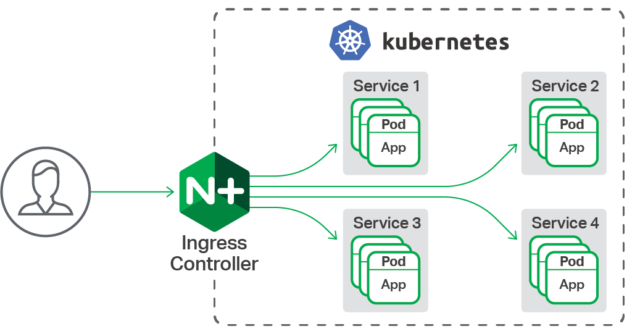

An API object that manages external access to the services in a cluster, typically over HTTP.

Ingress provides load balancing, SSL termination, and name-based virtual hosts.

What is Ingress

Ingress, introduced in Kubernetes v1.1, exposes HTTP and HTTPS routes from outside the cluster to services inside the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

        internet
            |
       [ Ingress ]
       --|-----|--
       [ Services ]

An Ingress can give services externally reachable URLs, load-balance traffic, terminate SSL, and offer name-based virtual hosting. An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer: it watches for changes to Ingress and Service objects, configures the load balancer according to the rules, and serves the traffic.

An Ingress does not expose arbitrary ports or protocols. Exposing services other than HTTP and HTTPS to the internet typically uses a Service of Service.Type=NodePort or Service.Type=LoadBalancer.

The Ingress Resource

A minimal Ingress resource looks like this:

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: test-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
      - http:
          paths:
          - path: /testpath
            backend:
              serviceName: test
              servicePort: 80

Like all other Kubernetes resources, an Ingress needs apiVersion, kind, and metadata fields.

For general information about working with configuration files, see deploying applications, configuring containers, and managing resources.

Ingress frequently uses annotations to configure options that depend on the Ingress controller; the rewrite-target annotation above is one example.

Different Ingress controllers support different annotations. Read the documentation for the Ingress controller you selected to see which annotations are supported.

The Ingress spec has all the information needed to configure a load balancer or proxy server. Most importantly, it contains a list of rules matched against all incoming requests. Ingress resources only support rules for directing HTTP traffic.

Ingress Rule

Each HTTP rule contains the following information:

- An optional host. In this example, no host is specified, so the rule applies to all inbound HTTP traffic through the specified IP address. If a host is provided (for example, foo.bar.com), the rule applies only to that host.

- A list of paths (for example, /testpath), each of which has an associated backend defined by a serviceName and servicePort. Both the host and the path must match the content of an incoming request before the load balancer directs traffic to the referenced service.

- A backend, which is a combination of service name and port as described in the Service documentation. HTTP (and HTTPS) requests to the Ingress that match the host and path of the rule are sent to the listed backend.

A default backend is often configured in an Ingress controller to service any requests that do not match a path in the spec.

Default Backend

An Ingress with no rules sends all traffic to a single default backend. The default backend is typically a configuration option of the Ingress controller and is not specified in the Ingress resource.
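To make the matching described above concrete, here is a minimal Python sketch of how a request's host and path could be resolved to a backend, with the default backend as the fallback. The rule table, the `pick_backend` helper, and the longest-prefix tie-break are assumptions made for illustration only; real Ingress controllers implement their own, more elaborate matching.

```python
from typing import Optional

# Hypothetical in-memory form of the rules found in an Ingress spec.
RULES = [
    {"host": "foo.bar.com", "paths": [("/foo", "service1:4200"), ("/bar", "service2:8080")]},
    {"host": None, "paths": [("/testpath", "test:80")]},  # no host: applies to any Host header
]
DEFAULT_BACKEND = "default-http-backend:80"


def pick_backend(host: Optional[str], path: str) -> str:
    """Return the backend for a request, or the default backend if nothing matches."""
    for rule in RULES:
        # A rule with a host only applies to requests for that host.
        if rule["host"] is not None and rule["host"] != host:
            continue
        # Assumption of this sketch: the longest matching path prefix wins.
        matches = [(p, b) for p, b in rule["paths"] if path.startswith(p)]
        if matches:
            return max(matches, key=lambda m: len(m[0]))[1]
    return DEFAULT_BACKEND
```

A request for foo.bar.com/foo resolves to service1:4200, while a request whose host and path match no rule falls through to the default backend.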

Types of Ingress

1. Single Service Ingress

Existing Kubernetes concepts already allow you to expose a single service (see alternatives). You can also do this with an Ingress by specifying a default backend with no rules.

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: test-ingress
    spec:
      backend:
        serviceName: testsvc
        servicePort: 80

If you create it with kubectl apply -f, you can view the state of the Ingress you just added:

    kubectl get ingress test-ingress

    NAME           HOSTS   ADDRESS           PORTS   AGE
    test-ingress   *       107.178.254.228   80      59s

Here, 107.178.254.228 is the IP address allocated by the Ingress controller to satisfy this Ingress.

2. Ingress Routing to Multiple Services

A multi-service (fanout) configuration routes traffic from a single IP address to more than one service, based on the HTTP URI being requested. An Ingress lets you keep the number of load balancers to a minimum. For example, a setup like this:

    foo.bar.com -> 178.91.123.132 -> / foo    service1:4200
                                     / bar    service2:8080

You can define it with the following Ingress:

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: simple-fanout-example
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
      - host: foo.bar.com
        http:
          paths:
          - path: /foo
            backend:
              serviceName: service1
              servicePort: 4200
          - path: /bar
            backend:
              serviceName: service2
              servicePort: 8080

After creating the Ingress with kubectl create -f:

    kubectl describe ingress simple-fanout-example

    Name:             simple-fanout-example
    Namespace:        default
    Address:          178.91.123.132
    Default backend:  default-http-backend:80 (10.8.2.3:8080)
    Rules:
      Host         Path  Backends
      ----         ----  --------
      foo.bar.com
                   /foo   service1:4200 (10.8.0.90:4200)
                   /bar   service2:8080 (10.8.0.91:8080)
    Annotations:
      nginx.ingress.kubernetes.io/rewrite-target:  /
    Events:
      Type     Reason  Age   From                     Message
      ----     ------  ----  ----                     -------
      Normal   ADD     22s   loadbalancer-controller  default/test

The Ingress controller provisions an implementation-specific load balancer that satisfies the Ingress, as long as the services (service1, service2) exist. Once it has done so, you can see the address of the load balancer in the Address field.

3. Name-based virtual hosts

Name-based virtual hosts route HTTP traffic to different backend services by host name while sharing the same IP address.

    foo.bar.com --|                 |-> foo.bar.com s1:80
                  | 178.91.123.132  |
    bar.foo.com --|                 |-> bar.foo.com s2:80

The following Ingress routes requests based on the Host header:

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: name-virtual-host-ingress
    spec:
      rules:
      - host: foo.bar.com
        http:
          paths:
          - backend:
              serviceName: service1
              servicePort: 80
      - host: bar.foo.com
        http:
          paths:
          - backend:
              serviceName: service2
              servicePort: 80

If you create an Ingress resource without any hosts defined in its rules, any web traffic to the IP address of your Ingress controller can be matched without a name-based virtual host being required. For example, the following Ingress resource routes traffic requested for first.bar.com to service1 and second.foo.com to service2, and routes any traffic to the IP address without a hostname defined in the request (that is, without a matching Host header) to service3:

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: name-virtual-host-ingress
    spec:
      rules:
      - host: first.bar.com
        http:
          paths:
          - backend:
              serviceName: service1
              servicePort: 80
      - host: second.foo.com
        http:
          paths:
          - backend:
              serviceName: service2
              servicePort: 80
      - http:
          paths:
          - backend:
              serviceName: service3
              servicePort: 80

4. TLS

You can secure an Ingress by specifying a Secret that contains a TLS private key and certificate. Currently, Ingress supports only a single TLS port, 443, and assumes TLS termination. If the TLS configuration section of an Ingress specifies different hosts, they are multiplexed on the same port according to the hostname specified through the SNI TLS extension (provided the Ingress controller supports SNI). The TLS Secret must contain keys named tls.crt and tls.key, which hold the certificate and private key to use for TLS. For example:

    apiVersion: v1
    kind: Secret
    metadata:
      name: testsecret-tls
      namespace: default
    data:
      tls.crt: base64 encoded cert
      tls.key: base64 encoded key
    type: kubernetes.io/tls
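The data values of a Secret must be base64-encoded. As a rough illustration, the Python sketch below builds the same manifest from PEM bytes and prints it as JSON, which kubectl accepts as well as YAML. The `tls_secret` helper and the placeholder PEM contents are assumptions made for this example, not part of any Kubernetes client library.

```python
import base64
import json


def tls_secret(name: str, namespace: str, cert_pem: bytes, key_pem: bytes) -> dict:
    """Build a kubernetes.io/tls Secret manifest with base64-encoded data."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        "data": {
            "tls.crt": base64.b64encode(cert_pem).decode("ascii"),
            "tls.key": base64.b64encode(key_pem).decode("ascii"),
        },
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice read the contents of tls.crt and tls.key.
    manifest = tls_secret("testsecret-tls", "default", b"<PEM certificate>", b"<PEM key>")
    print(json.dumps(manifest, indent=2))
```

In practice, kubectl create secret tls performs the same encoding for you.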

Referencing this Secret in an Ingress tells the Ingress controller to secure the channel from the client to the load balancer using TLS. Make sure the TLS Secret you create comes from a certificate whose CN is sslexample.foo.com.

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: tls-example-ingress
    spec:
      tls:
      - hosts:
        - sslexample.foo.com
        secretName: testsecret-tls
      rules:
      - host: sslexample.foo.com
        http:
          paths:
          - path: /
            backend:
              serviceName: service1
              servicePort: 80

TLS features vary between Ingress controllers. Refer to the documentation for nginx, GCE, or any other Ingress controller to understand how TLS works in your environment.

5. Load Balancing

An Ingress controller is bootstrapped with some load-balancing policy settings that it applies to all Ingresses, such as the load-balancing algorithm and the backend weight scheme. More advanced load-balancing concepts, such as persistent sessions and dynamic weights, are not exposed through the Ingress. You can still get these features through the load balancer itself.

It is also worth noting that even though health checks are not exposed directly through the Ingress, parallel concepts exist in Kubernetes, such as readiness probes, that let you achieve the same end result. Review the controller-specific documentation (nginx, GCE) to see how each handles health checks.

6. Update Ingress

To update an existing Ingress to add a new host, edit the resource:

    kubectl describe ingress test

    Name:             test
    Namespace:        default
    Address:          178.91.123.132
    Default backend:  default-http-backend:80 (10.8.2.3:8080)
    Rules:
      Host         Path  Backends
      ----         ----  --------
      foo.bar.com
                   /foo   s1:80 (10.8.0.90:80)
    Annotations:
      nginx.ingress.kubernetes.io/rewrite-target:  /
    Events:
      Type     Reason  Age   From                     Message
      ----     ------  ----  ----                     -------
      Normal   ADD     35s   loadbalancer-controller  default/test

    kubectl edit ingress test

This opens an editor with the existing YAML. Modify it to include the new host:

    spec:
      rules:
      - host: foo.bar.com
        http:
          paths:
          - backend:
              serviceName: s1
              servicePort: 80
            path: /foo
      - host: bar.baz.com
        http:
          paths:
          - backend:
              serviceName: s2
              servicePort: 80
            path: /foo
    ..

Saving the YAML updates the resource in the API server, which tells the Ingress controller to reconfigure the load balancer.

    kubectl describe ingress test

    Name:             test
    Namespace:        default
    Address:          178.91.123.132
    Default backend:  default-http-backend:80 (10.8.2.3:8080)
    Rules:
      Host         Path  Backends
      ----         ----  --------
      foo.bar.com
                   /foo   s1:80 (10.8.0.90:80)
      bar.baz.com
                   /foo   s2:80 (10.8.0.91:80)
    Annotations:
      nginx.ingress.kubernetes.io/rewrite-target:  /
    Events:
      Type     Reason  Age   From                     Message
      ----     ------  ----  ----                     -------
      Normal   ADD     45s   loadbalancer-controller  default/test

You can achieve the same result by modifying the Ingress YAML file and running kubectl replace -f on it.

Ingress Controllers

For an Ingress to work, an Ingress controller must be running in the cluster. Unlike other types of controllers, which run as part of kube-controller-manager and are started automatically when the cluster is created, an Ingress controller is not started automatically; you choose the one that best fits your cluster.

The Kubernetes project currently supports and maintains the GCE and nginx controllers.

An Ingress controller is deployed as a Kubernetes Pod, runs as a daemon, and watches the API server's /ingresses endpoint for updates to Ingress resources so that it can satisfy them. For example, you can use the NGINX Ingress Controller.
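The watch-and-reconfigure behaviour described above can be pictured with a small Python simulation. It is only a sketch under stated assumptions: the `reconcile` function and the shape of the events are hypothetical, no real API server is contacted, and the only detail borrowed from Kubernetes is the set of watch event types (ADDED, MODIFIED, DELETED).

```python
from typing import Dict, Iterable, Tuple

# (event type, Ingress name, Ingress spec) as a controller might receive them.
Event = Tuple[str, str, dict]


def reconcile(events: Iterable[Event]) -> Tuple[Dict[str, dict], int]:
    """Apply watch events in order and count how often the proxy would be reconfigured."""
    state: Dict[str, dict] = {}
    reloads = 0
    for kind, name, spec in events:
        before = dict(state)
        if kind in ("ADDED", "MODIFIED"):
            state[name] = spec
        elif kind == "DELETED":
            state.pop(name, None)
        if state != before:
            # A real controller would regenerate its configuration and reload here.
            reloads += 1
    return state, reloads
```

An event that leaves the desired state unchanged does not trigger a reload in this sketch; whether a given controller behaves the same way depends on its implementation.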

Other Ingress controllers include:

- Ambassador API Gateway is an Envoy based ingress controller with community or commercial support from Datawire.

- AppsCode Inc. offers support and maintenance for the most widely used HAProxy based ingress controller Voyager.

- Contour is an Envoy based ingress controller provided and supported by Heptio.

- Citrix provides an Ingress Controller for its hardware (MPX), virtualized (VPX) and free containerized (CPX) ADC for baremetal and cloud deployments.

- F5 Networks provides support and maintenance for the F5 BIG-IP Controller for Kubernetes.

- Gloo is an open-source ingress controller based on Envoy which offers API Gateway functionality with enterprise support from solo.io.

- HAProxy based ingress controller jcmoraisjr/haproxy-ingress which is mentioned on the blog post HAProxy Ingress Controller for Kubernetes. HAProxy Technologies offers support and maintenance for HAProxy Enterprise and the ingress controller jcmoraisjr/haproxy-ingress.

- Istio based ingress controller Control Ingress Traffic.

- Kong offers community or commercial support and maintenance for the Kong Ingress Controller for Kubernetes.

- NGINX, Inc. offers support and maintenance for the NGINX Ingress Controller for Kubernetes.

- Traefik is a fully featured ingress controller (Let's Encrypt, secrets, http2, websocket), and it also comes with commercial support by Containous.

Using multiple Ingress controllers

You can deploy any number of Ingress controllers in a cluster. When you do, annotate each Ingress you create with the appropriate ingress.class so that the correct Ingress controller picks it up.

If you do not specify ingress.class, your cloud provider may use a default Ingress controller.
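As an illustration, the Python sketch below adds the class annotation to an Ingress manifest held as a dictionary. The `with_ingress_class` helper is hypothetical; the annotation key kubernetes.io/ingress.class and the value nginx are the ones conventionally used with ingress-nginx, so check your own controller's documentation for the value it expects.

```python
import copy

INGRESS_CLASS_ANNOTATION = "kubernetes.io/ingress.class"


def with_ingress_class(manifest: dict, ingress_class: str) -> dict:
    """Return a copy of an Ingress manifest annotated for one specific controller."""
    result = copy.deepcopy(manifest)
    annotations = result.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[INGRESS_CLASS_ANNOTATION] = ingress_class
    return result


base = {
    "apiVersion": "networking.k8s.io/v1beta1",
    "kind": "Ingress",
    "metadata": {"name": "test-ingress"},
    "spec": {"backend": {"serviceName": "testsvc", "servicePort": 80}},
}
for_nginx = with_ingress_class(base, "nginx")
```

The original manifest is left untouched, so the same base can be annotated for several controllers.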

Ideally, all Ingress controllers should fulfil this specification, but the various Ingress controllers operate slightly differently.

Check the documentation of your Ingress controller for the considerations involved in choosing it.

The following sections use kubernetes/ingress-nginx as an example to look at an Ingress controller in detail.

ingress-nginx

Kubernetes is an open source container orchestration and scheduling system originally created by Google and donated to the Cloud Native Computing Foundation. Kubernetes automatically schedules containers to run across a cluster of servers, relieving developers and operators of the complex work of container orchestration. Kubernetes is currently the most popular container orchestration and scheduling system.

The NGINX Ingress Controller provides enterprise-grade delivery services for Kubernetes applications and serves users of both open source NGINX and NGINX Plus. It provides features such as load balancing, SSL/TLS termination, URI rewriting, and SSL/TLS encryption. NGINX Plus additionally provides session persistence for stateful applications, JWT authentication for APIs, and so on.

ingress-nginx installation

1. Prerequisites and Common Deployment Commands

By default, the controller watches Ingress objects in all namespaces. To change this behavior, use the --watch-namespace flag to limit the scope to a specific namespace.

If multiple Ingresses define different paths for the same host, the Ingress controller merges the definitions.
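A rough Python sketch of what merging definitions for the same host means is shown below. The `merge_by_host` function and the reduced Ingress representation are hypothetical, and the choice to keep the first definition when two Ingresses declare the same path is an assumption of this sketch rather than a statement about the controller's conflict handling.

```python
from typing import Dict, List, Tuple

# Each hypothetical Ingress is reduced to (host, [(path, backend), ...]).
IngressRule = Tuple[str, List[Tuple[str, str]]]


def merge_by_host(ingresses: List[IngressRule]) -> Dict[str, Dict[str, str]]:
    """Combine the paths of all Ingresses that target the same host."""
    merged: Dict[str, Dict[str, str]] = {}
    for host, paths in ingresses:
        server = merged.setdefault(host, {})
        for path, backend in paths:
            # Assumption of this sketch: the first definition of a path wins.
            server.setdefault(path, backend)
    return merged
```

Two Ingresses for foo.bar.com, one defining /foo and one defining /bar, therefore end up as a single virtual server with both paths.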

If you are using GKE, you need to make your current user a cluster administrator with the following command:

    kubectl create clusterrolebinding cluster-admin-binding \
      --clusterrole cluster-admin \
      --user $(gcloud config get-value account)

Download the YAML file:

    wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

There are two ways to deploy: create a Service that exposes ingress-nginx through a NodePort, or use the host network by adding hostNetwork: true.

Both approaches are described below:

1. Create ingress-nginx-service (the approach in the official documentation)

1.1. First create the ingress-nginx resources from the mandatory.yaml file

    [root@k8s ~]# kubectl apply -f mandatory.yaml
    namespace/ingress-nginx created
    deployment.extensions/default-http-backend created
    service/default-http-backend created
    configmap/nginx-configuration created
    configmap/tcp-services created
    configmap/udp-services created
    serviceaccount/nginx-ingress-serviceaccount created
    clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created
    role.rbac.authorization.k8s.io/nginx-ingress-role created
    rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created
    clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created
    deployment.extensions/nginx-ingress-controller created

Verify Installation

To check whether the Ingress controller pods have started properly, run the following command:

    [root@k8s ~]# kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx --watch
    NAMESPACE       NAME                                       READY   STATUS    RESTARTS   AGE
    ingress-nginx   nginx-ingress-controller-65795b86d-28lfr   1/1     Running   0          2m55s

1.2. Create the ingress-nginx-service.yaml file and apply it

ingress-nginx-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: ingress-nginx
      namespace: ingress-nginx
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      type: NodePort
      ports:
      - name: http
        port: 80
        targetPort: 80
        nodePort: 30080
      - name: https
        port: 443
        targetPort: 443
        protocol: TCP
        nodePort: 30443
      selector:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx

    [root@k8s ~]# kubectl apply -f ingress-nginx-service.yaml
    service/ingress-nginx created

1.3. Testing

With the Ingress controller deployed, write the Ingress rules; the controller injects them into the configuration of the ingress-nginx pod.

    [root@k8s ~]# cat test-nginx-service.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test-service-ingress
      namespace: default
    spec:
      rules:
      - host: ancs.nginx.com
        http:
          paths:
          - path: /
            backend:
              serviceName: nginx
              servicePort: 80

    [root@k8s ~]# kubectl apply -f test-nginx-service.yaml
    ingress.extensions/test-service-ingress created

View the Ingress:

    [root@k8s ~]# kubectl get ingress
    NAME                   HOSTS            ADDRESS   PORTS   AGE
    test-service-ingress   ancs.nginx.com             80      33s

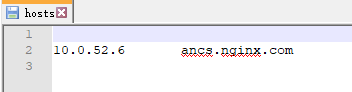

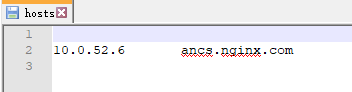

Configure name resolution for the domain on the client machine that will access it:

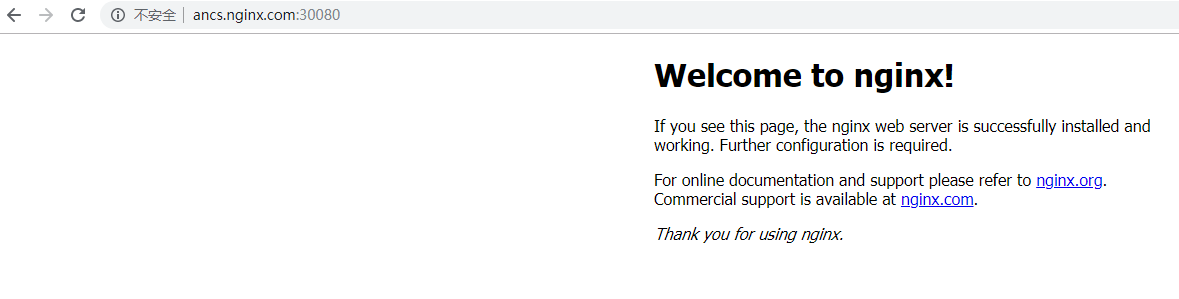

Now the backend pod can be reached through the Ingress at ancs.nginx.com:30080.
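If you would rather not change name resolution on the client, the same check can be made by sending the request to a node address and setting the Host header explicitly. The Python sketch below only builds such a request; the `build_request` helper is hypothetical and the node IP 10.0.52.14 is an assumption (substitute the address of one of your nodes), while port 30080 and the host name come from the Service and Ingress created earlier.

```python
import urllib.request


def build_request(node_ip: str, node_port: int, host: str, path: str = "/") -> urllib.request.Request:
    """Build an HTTP request to a node that carries the virtual host in the Host header."""
    url = f"http://{node_ip}:{node_port}{path}"
    return urllib.request.Request(url, headers={"Host": host})


# Assumed node address; replace with the IP of one of your cluster nodes.
req = build_request("10.0.52.14", 30080, "ancs.nginx.com")

# To actually send it (requires network access to the node):
# with urllib.request.urlopen(req, timeout=5) as resp:
#     print(resp.status)
```

Because the Ingress rule matches on the Host header, the controller routes this request exactly as it would a request made to the host name itself.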

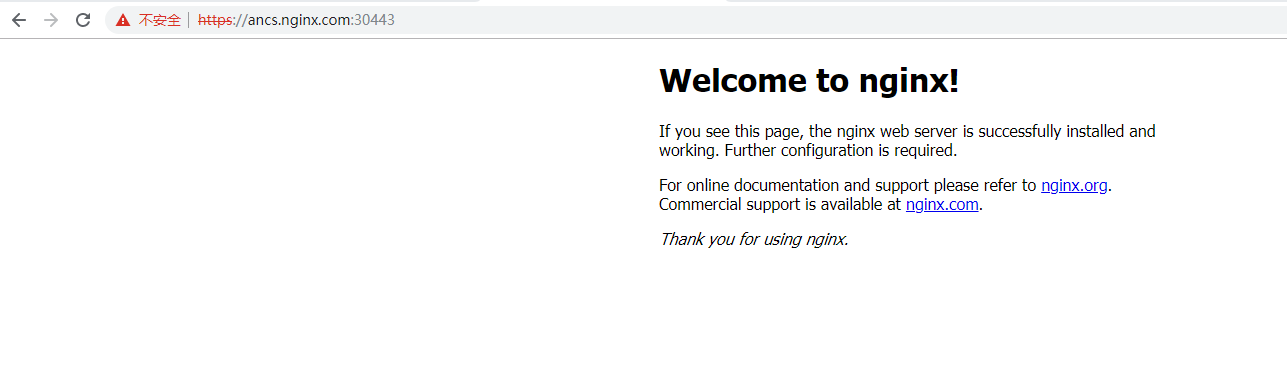

The access above uses HTTP. To use HTTPS, first create a certificate, as follows:

Self-signed certificate

    [root@k8s https]# openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/C=CN/ST=Beijing/L=Beijing/O=ancs/CN=ancs.nginx.com"
    Generating a 2048 bit RSA private key
    ......................+++
    ...........................+++
    writing new private key to 'tls.key'
    -----
    [root@k8s https]# ls
    tls.crt  tls.key

You can also issue a self-signed certificate with cfssl; the choice is yours.

Once the certificate has been generated, store it in a Secret resource:

    [root@k8s https]# kubectl create secret tls ancs-secret --key tls.key --cert tls.crt
    secret/ancs-secret created

Modify the test-nginx-service.yaml file, adding a tls section whose host matches the host in the rule:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test-service-ingress
      namespace: default
    spec:
      tls:
      - hosts:
        - ancs.nginx.com
        secretName: ancs-secret
      rules:
      - host: ancs.nginx.com
        http:
          paths:
          - path: /
            backend:
              serviceName: nginx
              servicePort: 80

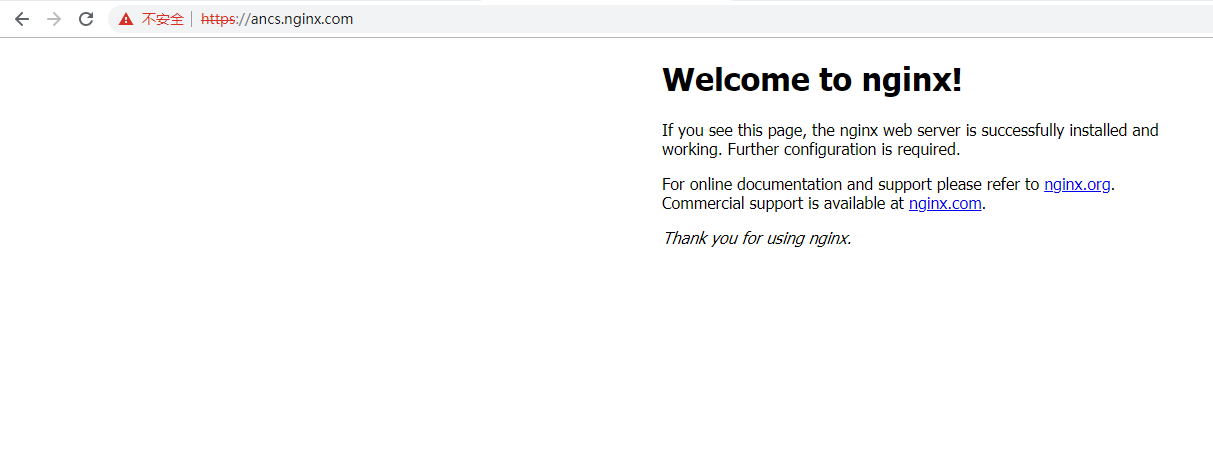

Now we can access it through https

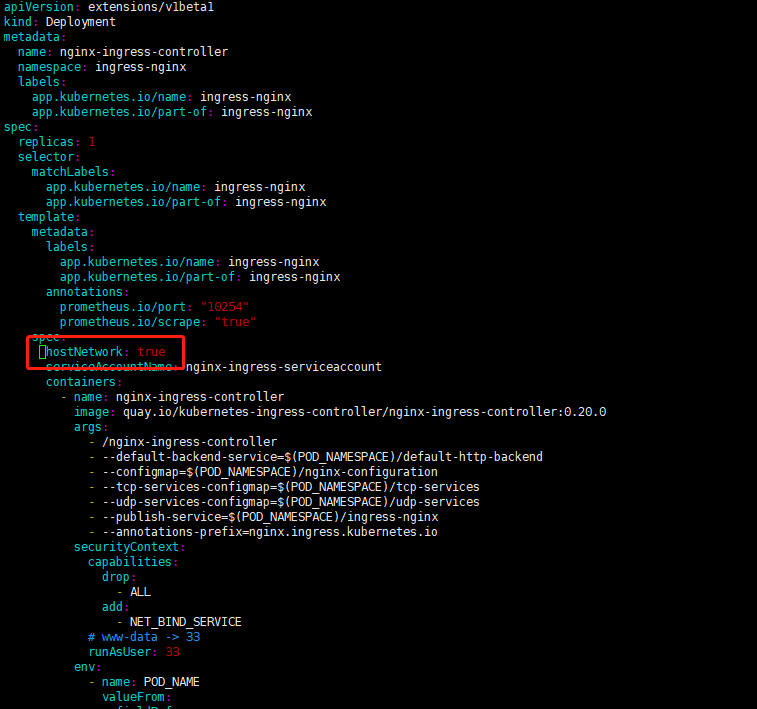

2. Use the host network by adding hostNetwork: true

2.1. Modify the mandatory.yaml configuration file: add the setting hostNetwork: true to the pod spec of the nginx-ingress-controller Deployment so that it uses the host network

Note:

Before creating the ingress-nginx-controller container, you need to modify the kube-proxy configuration.

Add the line --masquerade-all=true to the /opt/kubernetes/cfg/kube-proxy configuration file and restart kube-proxy.

    [root@k8s cfg]# cat kube-proxy
    KUBE_PROXY_OPTS="--logtostderr=true
    --v=4
    --hostname-override=10.0.52.14
    --cluster-cidr=10.0.0.0/24
    --proxy-mode=ipvs
    --masquerade-all=true
    --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

For the meaning of the --masquerade-all option, see the official kube-proxy documentation, which describes it as:

    --masquerade-all
    If using the pure iptables proxy, SNAT all traffic sent via Service cluster IPs (this not commonly needed)

In other words, the flag makes kube-proxy maintain masquerade rules for traffic that comes from outside the cluster, like this:

    iptables -t nat -I POSTROUTING ! -s "${CLUSTER_CIDR}" -j MASQUERADE

2.2. Start the ingress-controller container

    [root@k8s ~]# kubectl apply -f mandatory.yaml
    namespace/ingress-nginx unchanged
    deployment.extensions/default-http-backend unchanged
    service/default-http-backend unchanged
    configmap/nginx-configuration unchanged
    configmap/tcp-services unchanged
    configmap/udp-services unchanged
    serviceaccount/nginx-ingress-serviceaccount unchanged
    clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole unchanged
    role.rbac.authorization.k8s.io/nginx-ingress-role unchanged
    rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding unchanged
    clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding unchanged
    deployment.extensions/nginx-ingress-controller configured

    [root@k8s ~]# kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx --watch
    NAMESPACE       NAME                                        READY   STATUS              RESTARTS   AGE
    ingress-nginx   nginx-ingress-controller-6b8cc9b76d-98szp   0/1     ContainerCreating   0          31s
    ingress-nginx   nginx-ingress-controller-6b8cc9b76d-98szp   0/1     Running             0          43s
    ingress-nginx   nginx-ingress-controller-6b8cc9b76d-98szp   1/1     Running             0          47s

2.3. Ingress deployment

2.3.1. The HTTP deployment is the same as described above and is not repeated here.

2.3.2. HTTPS deployment (using cfssl)

Issue the certificates using cfssl:

- Generate a CA certificate

    cat << EOF | tee ca-config.json
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "www": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF

    cat << EOF | tee ca-csr.json
    {
      "CN": "www.ancs.com",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing"
        }
      ]
    }
    EOF

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

- Generate the server certificate

    cat << EOF | tee ancs-csr.json
    {
      "CN": "www.ancs.com",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing"
        }
      ]
    }
    EOF

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www ancs-csr.json | cfssljson -bare www.ancs.com

- Create the Secret from the certificate and key

    [root@k8s cfssl]# kubectl create secret tls ancs-secret --cert=www.ancs.com.pem --key=www.ancs.com-key.pem
    secret/ancs-secret created

- Deploy the Ingress

    [root@k8s ~]# cat test-https-nginx-service.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test-service-ingress
      namespace: default
    spec:
      tls:
      - hosts:
        - ancs.nginx.com
        secretName: ancs-secret
      rules:
      - host: ancs.nginx.com
        http:
          paths:
          - path: /
            backend:
              serviceName: nginx
              servicePort: 80

    [root@k8s ~]# kubectl apply -f test-https-nginx-service.yaml
    ingress.extensions/test-service-ingress created

- Configure hosts

- Browser Access

At this point, our ingress deployment is complete.