Introduction to ReentrantLock

ReentrantLock implements the Lock interface and is a reentrant exclusive lock.

ReentrantLock is more flexible and powerful than the built-in synchronized lock. For example, it can be configured as a fair lock.
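One example of this extra flexibility is tryLock() with a timeout, which has no synchronized equivalent. The sketch below is only an illustration: the class name TryLockDemo and its helper methods are hypothetical, and only the ReentrantLock calls are real JDK API.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class; only the ReentrantLock calls are real JDK API.
class TryLockDemo {

    // Reports whether a second thread acquired a lock that the caller still holds.
    static boolean secondThreadAcquired() {
        ReentrantLock lock = new ReentrantLock();
        // Pessimistic default, overwritten with the result of tryLock().
        AtomicBoolean acquired = new AtomicBoolean(true);

        lock.lock(); // the calling thread owns the lock from here on
        try {
            Thread other = new Thread(() -> {
                try {
                    // Give up after 50 ms instead of blocking indefinitely.
                    boolean ok = lock.tryLock(50, TimeUnit.MILLISECONDS);
                    acquired.set(ok);
                    if (ok) {
                        lock.unlock();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            other.start();
            joinQuietly(other); // wait for the other thread while still holding the lock
        } finally {
            lock.unlock();
        }
        return acquired.get();
    }

    private static void joinQuietly(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println("second thread acquired: " + secondThreadAcquired());
    }
}
```

With synchronized, the second thread would simply block until the monitor became free; here it can give up and do something else.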

First, let's look at what a fair lock mechanism is.

Fair Lock Mechanism for ReentrantLock

ReentrantLock comes in two variants, fair and unfair, and the constructor selects which one is used:

    // Default: unfair lock
    public ReentrantLock() {
        sync = new NonfairSync();
    }

    // Fair lock if fair is true, unfair lock otherwise
    public ReentrantLock(boolean fair) {
        sync = fair ? new FairSync() : new NonfairSync();
    }

Fair Lock

When multiple threads compete for the lock, a fair lock tends to grant access to the thread that has waited the longest.

In other words, the waiting threads line up in a queue and the thread that entered the queue first gets the lock first, so every waiting thread is served in FIFO order.

Unfair Lock

Unfair locks allow barging: a thread first tries to acquire the lock directly, without regard for the threads already waiting in the queue and without following the first-come, first-served principle. It joins the queue only if that attempt fails.
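The choice between the two variants can be checked at runtime with isFair(). This is a small sketch; the class name FairnessDemo and its helper methods are hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class showing which variant each constructor selects.
class FairnessDemo {

    // The no-argument constructor creates an unfair lock.
    static boolean defaultIsFair() {
        return new ReentrantLock().isFair();
    }

    // Passing true selects the fair variant.
    static boolean explicitFairIsFair() {
        return new ReentrantLock(true).isFair();
    }

    public static void main(String[] args) {
        System.out.println(defaultIsFair());      // prints false
        System.out.println(explicitFairIsFair()); // prints true
    }
}
```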

Now let's move on to the main topic and analyze how ReentrantLock is implemented under the hood.

The underlying implementation of ReentrantLock

ReentrantLock is built on AbstractQueuedSynchronizer (abstract queued synchronizer), or AQS, which is the core of java.util.concurrent. Common concurrency classes such as CountDownLatch, Semaphore, and ReentrantLock each contain an inner class that extends the abstract AQS class. CyclicBarrier relies on AQS indirectly, through a ReentrantLock.

The Synchronization State (state)

AQS maintains a synchronization state field internally to control the lock:

    private volatile int state;

The state starts at 0 and is incremented by 1 each time a thread acquires the lock. When the thread that already holds the lock acquires it again, the state is incremented by 1 once more. The state therefore records how many times the owning thread has locked without a matching unlock.

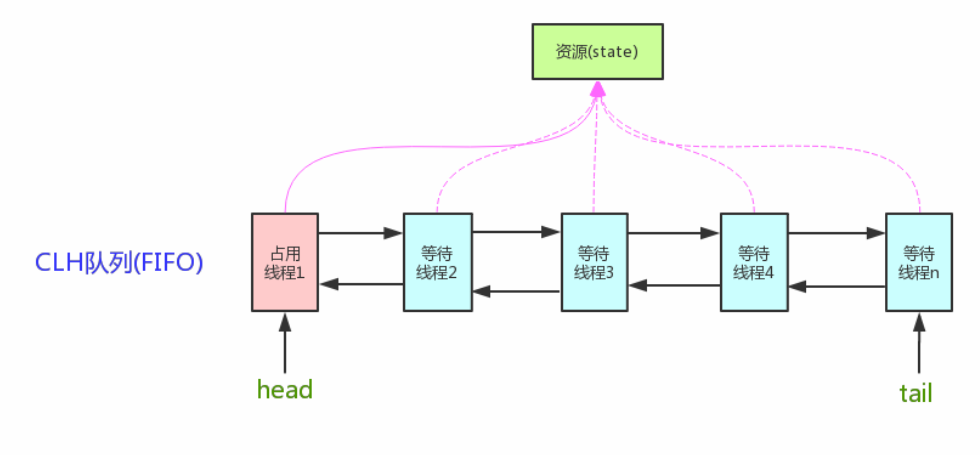
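For ReentrantLock, this counter can be observed through getHoldCount(), which reports the number of holds of the calling thread. The sketch below is an illustration only; the class name HoldCountDemo and its method are hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class: records the hold count after each lock/unlock call.
class HoldCountDemo {

    static int[] holdCounts() {
        ReentrantLock lock = new ReentrantLock();
        int[] seen = new int[4];

        lock.lock();                    // first acquisition
        seen[0] = lock.getHoldCount();
        lock.lock();                    // reentrant acquisition by the same thread
        seen[1] = lock.getHoldCount();
        lock.unlock();                  // one hold released, lock still owned
        seen[2] = lock.getHoldCount();
        lock.unlock();                  // last hold released, lock is free
        seen[3] = lock.getHoldCount();

        return seen;
    }

    public static void main(String[] args) {
        // prints [1, 2, 1, 0]
        System.out.println(java.util.Arrays.toString(holdCounts()));
    }
}
```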

CLH Queue

In addition to the state field, AQS uses a FIFO queue (a variant of the CLH queue) to hold the threads waiting for the lock. When a thread fails to acquire the lock, it is wrapped in a Node and appended to this queue.
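The queue is internal to AQS, but ReentrantLock exposes a few monitoring methods that make it visible. The following sketch holds the lock, starts one competing thread, and reads the queue length once that thread is enqueued. The class name QueueLengthDemo is hypothetical, and getQueueLength() is documented as an estimate, so treat the value as indicative.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class: observes a thread waiting in the lock's queue.
class QueueLengthDemo {

    static int queuedWhileHeld() {
        ReentrantLock lock = new ReentrantLock();
        Thread waiter = new Thread(() -> {
            lock.lock();   // blocks until the holder unlocks
            lock.unlock();
        });

        int observed;
        lock.lock();
        try {
            waiter.start();
            // Spin until the waiter has failed to acquire and has been enqueued.
            while (!lock.hasQueuedThread(waiter)) {
                Thread.yield();
            }
            observed = lock.getQueueLength();
        } finally {
            lock.unlock(); // wakes the waiter
        }

        try {
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return observed;
    }

    public static void main(String[] args) {
        System.out.println("queued threads: " + queuedWhileHeld());
    }
}
```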

The Node class is implemented as follows:

    static final class Node {
        // Marker indicating that a node is waiting in shared mode
        static final Node SHARED = new Node();
        // Marker indicating that a node is waiting in exclusive mode
        static final Node EXCLUSIVE = null;

        // Possible waitStatus values
        static final int CANCELLED = 1;   // the thread has cancelled its wait
        static final int SIGNAL = -1;     // the successor's thread needs to be woken up
        static final int CONDITION = -2;  // the thread is waiting on a condition
        static final int PROPAGATE = -3;  // the next shared acquire should propagate
        volatile int waitStatus;

        // Predecessor node
        volatile Node prev;
        // Successor node
        volatile Node next;
        // Thread that enqueued this node
        volatile Thread thread;
        // Next node waiting on a condition, or the SHARED marker
        Node nextWaiter;

        // Whether the node is waiting in shared mode
        final boolean isShared() {
            return nextWaiter == SHARED;
        }

        // Returns the predecessor, or throws NullPointerException if there is none
        final Node predecessor() throws NullPointerException {
            Node p = prev;
            if (p == null)
                throw new NullPointerException();
            else
                return p;
        }

        Node() {}

        Node(Thread thread, Node mode) {
            this.nextWaiter = mode;
            this.thread = thread;
        }

        Node(Thread thread, int waitStatus) {
            this.waitStatus = waitStatus;
            this.thread = thread;
        }
    }

The code shows that each Node holds two pointers: next points to its immediate successor and prev points to its immediate predecessor.

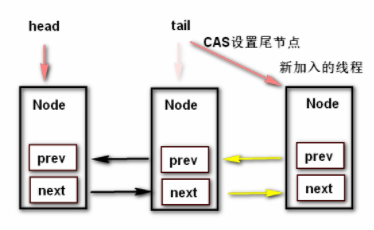

How Nodes Change in the Queue

The nodes in the AQS synchronization queue change when threads compete for the lock and when the lock is released. When a thread fails to acquire the lock, two things happen, as shown in the following figure:

- The thread is wrapped in a Node and appended to the end of the queue, which sets the prev of the new node and the next of the old tail;

- tail is repointed through CAS to the new tail, that is, the Node that was just inserted;

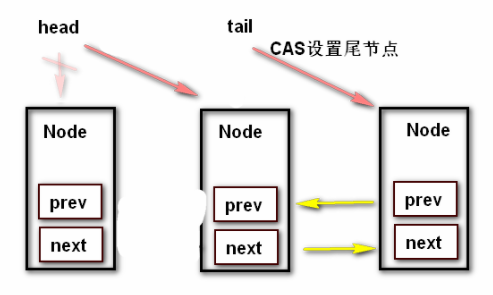

The head node represents the node that acquired the lock successfully. When the head node releases the lock, it wakes up its successor. If the successor then acquires the lock, it sets itself as the head node. The queue changes as follows:

- head is repointed to the node that has just acquired the lock;

- The new head node sets its prev pointer to null;

Unlike repointing tail, setting the head node does not require CAS. The head is set by the thread that has acquired the lock, and an exclusive lock can be held by only one thread at a time, so no CAS guarantee is needed. It is enough to make the successor of the original head the new head and to clear the next reference of the original head.
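The two kinds of change can be sketched with a toy queue. This is a simplified illustration, not the AQS source: the class ToySyncQueue, its Node type, and all method names are hypothetical, and the dummy head is created eagerly in the constructor to keep the code short.

```java
import java.util.concurrent.atomic.AtomicReference;

// Toy model of the synchronization queue (hypothetical, not the AQS source).
class ToySyncQueue {

    static final class Node {
        final String name;
        volatile Node prev;
        volatile Node next;

        Node(String name) {
            this.name = name;
        }
    }

    private volatile Node head;
    private final AtomicReference<Node> tail = new AtomicReference<>();

    ToySyncQueue() {
        Node dummy = new Node("head"); // placeholder head node
        head = dummy;
        tail.set(dummy);
    }

    // Many threads may enqueue concurrently, so tail is moved with CAS.
    void enqueue(Node node) {
        for (;;) {
            Node oldTail = tail.get();
            node.prev = oldTail;
            if (tail.compareAndSet(oldTail, node)) {
                oldTail.next = node; // link forward only after the CAS succeeds
                return;
            }
            // CAS failed: another thread appended first, so retry with the new tail.
        }
    }

    // Called only by the single thread that just acquired the lock,
    // so plain writes are sufficient here.
    void advanceHead() {
        Node oldHead = head;
        Node newHead = oldHead.next;
        if (newHead == null) {
            return; // nothing is waiting
        }
        head = newHead;
        newHead.prev = null;
        oldHead.next = null; // unlink the old head
    }

    // Lists the node names from head to tail.
    String describe() {
        StringBuilder sb = new StringBuilder();
        for (Node n = head; n != null; n = n.next) {
            if (sb.length() > 0) {
                sb.append(" -> ");
            }
            sb.append(n.name);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        ToySyncQueue q = new ToySyncQueue();
        q.enqueue(new Node("A"));
        q.enqueue(new Node("B"));
        System.out.println(q.describe()); // prints head -> A -> B
        q.advanceHead();
        System.out.println(q.describe()); // prints A -> B
    }
}
```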

In addition to the predecessor and successor pointers, the Node class also defines the SHARED and EXCLUSIVE markers. What do they do? To answer that, we need to introduce the two resource sharing modes of AQS.

AQS Resource Sharing Mode

AQS distinguishes exclusive mode from shared mode through two constants in Node, EXCLUSIVE and SHARED.

Exclusive Mode

Exclusive mode is the most commonly used mode: only one thread can hold the resource at a time. The locking and unlocking of ReentrantLock are based on it.
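From the caller's side, exclusive mode is what makes the usual lock/try/finally idiom safe. The sketch below protects a plain int counter with a ReentrantLock; the class name LockedCounter and the runThreads() helper are hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class: a counter guarded by an exclusive lock.
class LockedCounter {
    private final ReentrantLock lock = new ReentrantLock();
    private int count;

    void increment() {
        lock.lock();
        try {
            count++; // only one thread at a time can execute this line
        } finally {
            lock.unlock(); // always release, even if the body throws
        }
    }

    int get() {
        lock.lock();
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    // Starts the given number of threads, each incrementing perThread times.
    static int runThreads(int threads, int perThread) {
        LockedCounter counter = new LockedCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runThreads(4, 1000)); // prints 4000
    }
}
```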

The core locking method in exclusive mode is acquire():

    public final void acquire(int arg) {
        if (!tryAcquire(arg) &&
            acquireQueued(addWaiter(Node.EXCLUSIVE), arg))
            selfInterrupt();
    }

acquire() first calls tryAcquire() to try to take the lock. If state is 0, tryAcquire() uses CAS to change it to 1. If the lock is already held by the current thread, the acquisition is reentrant and state is simply incremented by 1.
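To make the tryAcquire() logic concrete, here is a minimal sketch of a reentrant exclusive synchronizer built on AbstractQueuedSynchronizer. The class name SimpleReentrantSync and the holds() helper are hypothetical, and the sketch leaves out the overflow check and the fairness handling, so it should not be read as ReentrantLock's actual source.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical, simplified reentrant exclusive synchronizer.
class SimpleReentrantSync extends AbstractQueuedSynchronizer {

    @Override
    protected boolean tryAcquire(int acquires) {
        Thread current = Thread.currentThread();
        int c = getState();
        if (c == 0) {
            // The lock is free: compete for it with CAS.
            if (compareAndSetState(0, acquires)) {
                setExclusiveOwnerThread(current);
                return true;
            }
        } else if (current == getExclusiveOwnerThread()) {
            // Reentry by the owner: no CAS needed, only the owner gets here.
            setState(c + acquires);
            return true;
        }
        // Held by another thread: acquire() will enqueue the caller.
        return false;
    }

    @Override
    protected boolean tryRelease(int releases) {
        if (Thread.currentThread() != getExclusiveOwnerThread()) {
            throw new IllegalMonitorStateException();
        }
        int c = getState() - releases;
        boolean free = (c == 0);
        if (free) {
            setExclusiveOwnerThread(null);
        }
        setState(c);
        return free;
    }

    // Exposes the current state for the demo.
    int holds() {
        return getState();
    }

    public static void main(String[] args) {
        SimpleReentrantSync sync = new SimpleReentrantSync();
        sync.acquire(1);
        sync.acquire(1);                     // reentrant acquisition
        System.out.println(sync.holds());    // prints 2
        System.out.println(sync.release(1)); // prints false: one hold remains
        System.out.println(sync.release(1)); // prints true: fully released
    }
}
```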

If the lock is held by another thread, tryAcquire() returns false, and addWaiter() is then called to add an exclusive node for the current thread to the wait queue. addWaiter() is implemented as follows:

    private Node addWaiter(Node mode) {
        // Create a node for the current thread in the given mode (exclusive here).
        // This is the JDK 9+ form; JDK 8 writes new Node(Thread.currentThread(), mode).
        Node node = new Node(mode);
        for (;;) {
            Node oldTail = tail;
            if (oldTail != null) {
                // The queue exists: point the new node's prev at the current tail
                node.setPrevRelaxed(oldTail);
                // CAS tail from the old tail to the new node
                if (compareAndSetTail(oldTail, node)) {
                    // Point the old tail's next at the new node
                    oldTail.next = node;
                    return node;
                }
            } else {
                // The queue is not initialized yet: create the head node, then retry
                initializeSyncQueue();
            }
        }
    }

After the node has been enqueued, the thread tries to acquire the lock from within the queue and is suspended if it cannot:

    /**
     * A queued thread attempts to acquire the lock.
     */
    final boolean acquireQueued(final Node node, int arg) {
        boolean failed = true; // Stays true unless the lock is acquired
        try {
            boolean interrupted = false; // Whether the thread was interrupted while waiting
            for (;;) {
                final Node p = node.predecessor(); // Get the predecessor node
                // Only the node right behind head is eligible to try to acquire the lock
                if (p == head && tryAcquire(arg)) {
                    setHead(node); // Acquired: make the current node the new head
                    p.next = null; // Unlink the old head so it can be garbage collected
                    failed = false;
                    return interrupted; // Report whether an interrupt occurred while waiting
                }
                // Acquisition failed: check whether the thread should park, and park if so
                if (shouldParkAfterFailedAcquire(p, node) &&
                    parkAndCheckInterrupt())
                    // The thread was interrupted while it was parked
                    interrupted = true;
            }
        } finally {
            if (failed)
                cancelAcquire(node);
        }
    }

Next, look at what shouldParkAfterFailedAcquire() and parkAndCheckInterrupt() do:

    /**
     * Determines whether the current thread should be suspended after failing to acquire the lock.
     */
    private static boolean shouldParkAfterFailedAcquire(Node pred, Node node) {
        // Status of the predecessor node
        int ws = pred.waitStatus;
        if (ws == Node.SIGNAL)
            // The predecessor is already set to wake this node, so it is safe to park
            return true;
        if (ws > 0) {
            // The predecessor is CANCELLED: walk backward past cancelled nodes
            // and link this node to the first predecessor that is not cancelled
            do {
                node.prev = pred = pred.prev;
            } while (pred.waitStatus > 0);
            pred.next = node;
        } else {
            // Ask the predecessor to signal this node; the caller retries before parking
            compareAndSetWaitStatus(pred, ws, Node.SIGNAL);
        }
        return false;
    }

    /**
     * Suspends the current thread, then returns whether it was interrupted
     * and clears the interrupt flag.
     */
    private final boolean parkAndCheckInterrupt() {
        LockSupport.park(this);
        return Thread.interrupted();
    }

From the code above, you can see that a queued thread is suspended only when its predecessor is in the SIGNAL state. SIGNAL means that when the predecessor releases the lock, it must wake up the node behind it.
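The suspend and wake-up steps rest on LockSupport.park() and LockSupport.unpark(). The sketch below shows the pair in isolation, outside of AQS. The class name ParkDemo and the two flags are hypothetical; the loop around park() is there because park() is allowed to return without a matching unpark().

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

// Hypothetical demo class: one thread parks, another wakes it up.
class ParkDemo {

    static boolean parkThenUnpark() {
        AtomicBoolean permitted = new AtomicBoolean(false);
        AtomicBoolean resumed = new AtomicBoolean(false);

        Thread waiter = new Thread(() -> {
            // Re-check the condition after every wake-up, as acquireQueued() does
            // by looping back to tryAcquire().
            while (!permitted.get()) {
                LockSupport.park();
            }
            resumed.set(true);
        });
        waiter.start();

        permitted.set(true);        // publish the condition first
        LockSupport.unpark(waiter); // then wake the waiter (or pre-grant its permit)

        try {
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return resumed.get();
    }

    public static void main(String[] args) {
        System.out.println("waiter resumed: " + parkThenUnpark());
    }
}
```

Because unpark() grants a permit even if the target has not parked yet, the order in which the two threads run does not matter here.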

Once locking is understood, unlocking is simpler. The core method is release():

    public final boolean release(int arg) {
        if (tryRelease(arg)) {
            Node h = head;
            if (h != null && h.waitStatus != 0)
                unparkSuccessor(h);
            return true;
        }
        return false;
    }

Code flow: release() first calls tryRelease(). If the lock is fully released, it checks whether the head node has a non-zero waitStatus (typically SIGNAL), wakes up the thread of the head's successor if so, and returns true. If tryRelease() returns false, release() also returns false. For a reentrant lock, this means the owning thread still holds the lock and must unlock again.
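The same behavior is visible through the public ReentrantLock API. In the sketch below, the lock stays held after the first unlock() of a reentrant pair, and calling unlock() without owning the lock throws IllegalMonitorStateException. The class name ReleaseDemo and its methods are hypothetical.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class: unlock() behavior of a reentrant lock.
class ReleaseDemo {

    // True if the lock is still held after one of two unlocks and free after both.
    static boolean lockedAfterPartialUnlock() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        lock.lock();
        lock.unlock();                       // state goes from 2 to 1
        boolean stillHeld = lock.isLocked();
        lock.unlock();                       // state goes from 1 to 0
        return stillHeld && !lock.isLocked();
    }

    // True if unlocking without owning the lock is rejected.
    static boolean nonOwnerUnlockThrows() {
        ReentrantLock lock = new ReentrantLock();
        try {
            lock.unlock(); // the current thread never locked it
            return false;
        } catch (IllegalMonitorStateException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(lockedAfterPartialUnlock()); // prints true
        System.out.println(nonOwnerUnlockThrows());     // prints true
    }
}
```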

The tryRelease() method is implemented as follows, and the details are described in the comments:

    /**
     * Releases the lock held by the current thread.
     * @param releases the number of holds to release
     * @return true if the lock is now completely free
     */
    protected final boolean tryRelease(int releases) {
        // Compute the state value after the release
        int c = getState() - releases;
        // Throw an exception if the current thread does not hold the lock
        if (Thread.currentThread() != getExclusiveOwnerThread())
            throw new IllegalMonitorStateException();
        boolean free = false;
        if (c == 0) {
            // The hold count has dropped to 0, so the lock is fully released
            free = true;
            // Clear the owner thread
            setExclusiveOwnerThread(null);
        }
        // Update the state value
        setState(c);
        return free;
    }

Shared Mode

The biggest difference between shared mode and exclusive mode is that an acquisition in shared mode propagates to the threads waiting behind.

Shared mode acquires the lock through acquireShared(), which does one more step than exclusive mode: after a thread obtains the resource, it also wakes up its successors if resources remain.

Shared mode releases the lock through releaseShared(), which releases the specified amount of resources and, if the release succeeds and waiting threads may be woken, wakes up threads in the wait queue so that they can obtain the resources.
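A small latch shows the propagation in practice: one releaseShared() call lets every waiting thread through. This is a sketch under stated assumptions, not JDK source: the class name OneShotLatch, its methods, and the releasedWaiters() helper are hypothetical, and state 1 is taken to mean "open".

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical one-shot latch built on the shared mode of AQS.
class OneShotLatch {

    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected int tryAcquireShared(int ignored) {
            // A positive result means success, and later waiters may succeed too.
            return getState() == 1 ? 1 : -1;
        }

        @Override
        protected boolean tryReleaseShared(int ignored) {
            setState(1); // open the latch
            return true; // allow waiting threads to be woken
        }
    }

    private final Sync sync = new Sync();

    void await() throws InterruptedException {
        sync.acquireSharedInterruptibly(1);
    }

    void open() {
        sync.releaseShared(1);
    }

    // Parks n threads on the latch, opens it once, and counts how many got through.
    static int releasedWaiters(int n) {
        OneShotLatch latch = new OneShotLatch();
        AtomicInteger passed = new AtomicInteger();
        Thread[] waiters = new Thread[n];
        for (int i = 0; i < n; i++) {
            waiters[i] = new Thread(() -> {
                try {
                    latch.await();
                    passed.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            waiters[i].start();
        }
        // Wait until every waiter is enqueued, so the single open() must wake them all.
        while (latch.sync.getQueueLength() < n) {
            Thread.yield();
        }
        latch.open();
        for (Thread w : waiters) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return passed.get();
    }

    public static void main(String[] args) {
        System.out.println(releasedWaiters(3)); // prints 3
    }
}
```

In exclusive mode the same release would wake only one successor, and each woken thread would have to release again before the next one could proceed.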
