The previous posts [muduo learning notes: implementation of net part TCP network programming library TcpConnection] and [muduo learning notes: implementation of net part, TCP network programming library Acceptor] covered TcpConnection and Acceptor, the basic components of TcpServer. The post [muduo learning notes: design pattern evolution of multithreaded TCP server in net] introduced the design options for a TCP server and the structure of the muduo network library. This post covers the code structure and workflow of TcpServer.

1. Multithreaded TcpServer server architecture

On the server side, the listening socket is wrapped in an Acceptor, which listens for client connections. Each established connection is a TcpConnection stored in a connection map for management. All work on an established TcpConnection is driven by callbacks. IO events on the Acceptor are monitored by the main reactor in the main thread, and IO events on established TcpConnections are monitored by the sub reactors. The sub reactors are implemented with EventLoopThreadPool. When the number of threads in the pool is 0 (the default), the server degenerates into a single-threaded model.

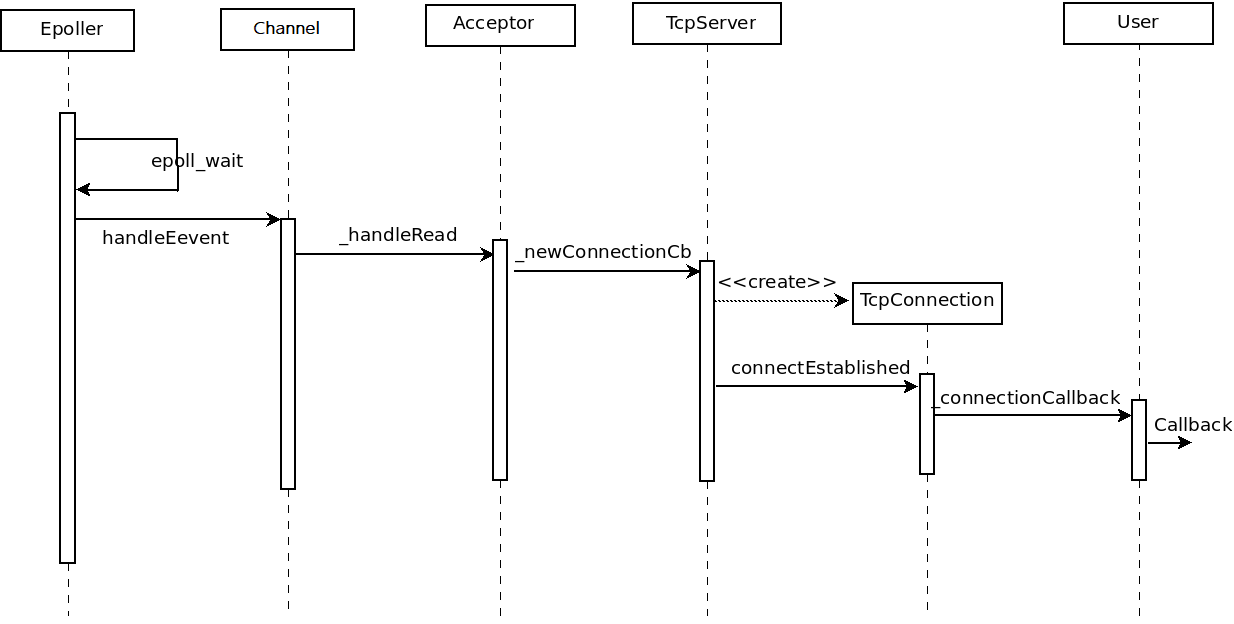

1.1. Accept the connection process

TcpServer uses the Acceptor to handle new connections. A Channel watches the Acceptor's listening socket, and the IO event is picked up by the Poller of the EventLoop. The event then travels back to TcpServer through layers of callbacks. TcpServer creates a TcpConnection and notifies the user code through the connection callback.
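The layered callbacks described above can be sketched without muduo. This is only a minimal model, not muduo's code: `MiniChannel`, `MiniAcceptor`, and `MiniServer` are hypothetical stand-ins for Channel, Acceptor, and TcpServer, the descriptor numbers are fake, and the readable event is fired by hand instead of by a Poller.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical stand-in for Channel: forwards a readable event to its owner.
struct MiniChannel {
  std::function<void()> readCallback;
  void handleEvent() { if (readCallback) readCallback(); }
};

// Hypothetical stand-in for Acceptor: turns a readable event on the
// listening socket into a "new connection" callback.
struct MiniAcceptor {
  MiniChannel channel;
  std::function<void(int)> newConnectionCallback;
  int nextFd = 100;  // fake descriptor source; real code would call accept()

  MiniAcceptor() { channel.readCallback = [this] { handleRead(); }; }
  MiniAcceptor(const MiniAcceptor&) = delete;  // the lambda captures `this`

  void handleRead() {
    int connfd = nextFd++;
    if (newConnectionCallback) newConnectionCallback(connfd);
  }
};

// Hypothetical stand-in for TcpServer: records the connection, then
// notifies the user through the connection callback.
struct MiniServer {
  MiniAcceptor acceptor;
  std::vector<int> connections;
  std::function<void(int)> connectionCallback;  // set by user code

  MiniServer() {
    acceptor.newConnectionCallback = [this](int fd) { newConnection(fd); };
  }
  MiniServer(const MiniServer&) = delete;  // the lambda captures `this`

  void newConnection(int fd) {
    assert(fd >= 0);
    connections.push_back(fd);
    if (connectionCallback) connectionCallback(fd);
  }
};
```

Calling `server.acceptor.channel.handleEvent()` plays the role of the Poller reporting a readable listening socket; the user callback then runs once per simulated connection.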

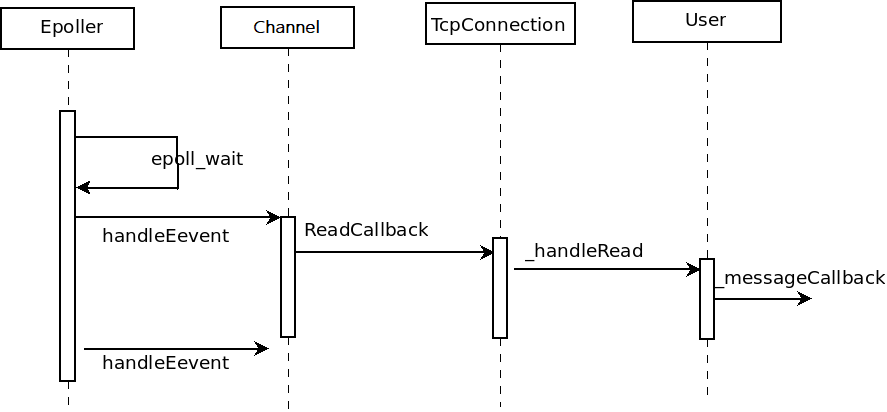

1.2. Receiving data callback process

After TcpServer establishes a connection, a TcpConnection is created, and its channel registers the IO events of the connected socket with the Poller. When the event loop detects a readable event on the connected socket, it notifies the TcpConnection through a callback. The TcpConnection reads the data and hands it to the user through the message callback.

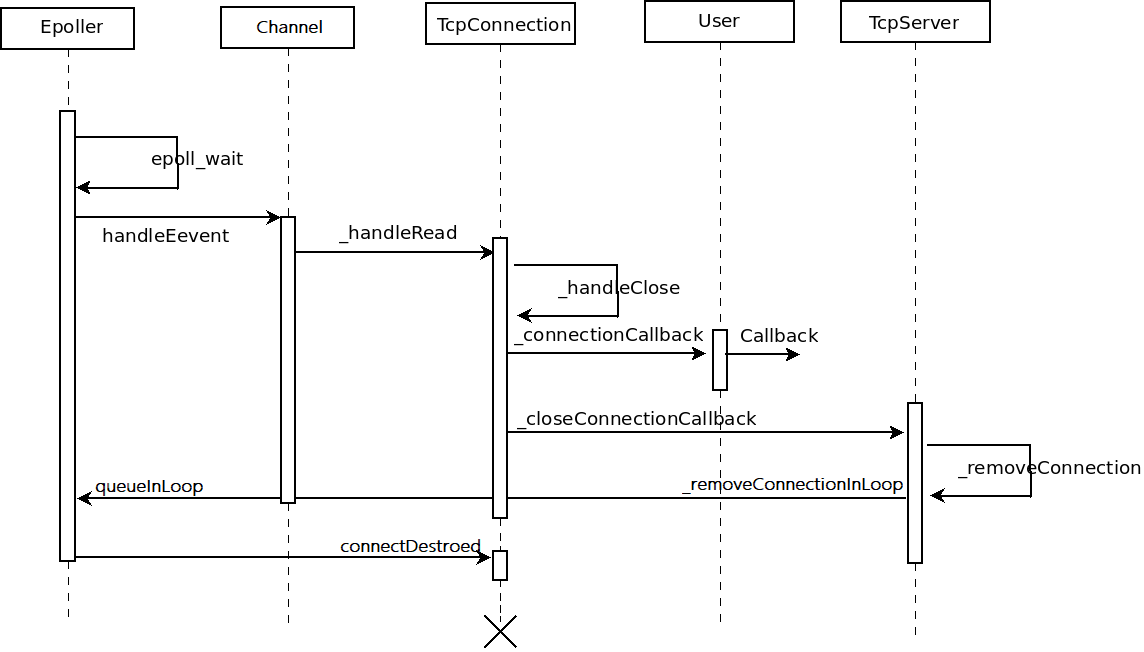

1.3. Disconnection process

First, consider the case where the client disconnects. After TcpServer establishes a connection, a TcpConnection is created, and its channel registers the IO events of the connected socket with the Poller. When the event loop detects the close event, it notifies the TcpConnection through a callback. TcpConnection first tells the user that the connection is down, then asks TcpServer to remove the connection through the close callback, which TcpServer runs in its own loop thread. TcpServer erases the TcpConnection from the connection map and then queues TcpConnection::connectDestroyed() in the connection's IO thread, which removes the channel from the Poller.

If the server wants to close the connection itself, it can call TcpConnection's forceClose(), which closes the connection by calling handleClose(). To close gracefully, the user can call TcpConnection's shutdown(), which closes only the write half of the socket.
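The effect of closing only the write half can be tried outside muduo. The sketch below is an assumption-laden stand-in, not TcpConnection code: it uses a UNIX-domain `socketpair()` in place of a TCP connection, and `demoHalfClose` and `HalfCloseResult` are hypothetical names. It shows that after `::shutdown(fd, SHUT_WR)` the peer reads end-of-file, while data sent by the peer can still be received.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

// Outcome of the experiment, so a caller can inspect each step.
struct HalfCloseResult {
  bool peerSawEof;       // peer's read() returned 0 after our SHUT_WR
  std::string received;  // data we could still read after SHUT_WR
};

HalfCloseResult demoHalfClose() {
  int fds[2];
  int rc = ::socketpair(AF_UNIX, SOCK_STREAM, 0, fds);
  assert(rc == 0);
  (void)rc;
  int local = fds[0];
  int peer = fds[1];

  // Close only the write half of `local`.
  ::shutdown(local, SHUT_WR);

  HalfCloseResult result{false, ""};
  char buf[16];

  // The peer sees end-of-file: read() returns 0.
  ssize_t n = ::read(peer, buf, sizeof buf);
  result.peerSawEof = (n == 0);

  // The peer can still send, and `local` can still receive.
  ssize_t w = ::write(peer, "late", 4);
  (void)w;
  n = ::read(local, buf, sizeof buf);
  if (n > 0) result.received.assign(buf, static_cast<std::size_t>(n));

  ::close(local);
  ::close(peer);
  return result;
}
```

This is why a half-closed connection can still deliver a complete message from the other side before the socket is fully closed.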

2. TcpServer definition

///

/// TCP server, supports single-threaded and thread-pool models.

///

/// This is an interface class, so don't expose too much details.

class TcpServer : noncopyable

{

public:

typedef std::function<void(EventLoop*)> ThreadInitCallback;

enum Option{ kNoReusePort, kReusePort,};

// Pass in the loop that owns this TcpServer, the listening address, and the server name

//TcpServer(EventLoop* loop, const InetAddress& listenAddr);

TcpServer(EventLoop* loop,

const InetAddress& listenAddr,

const string& nameArg,

Option option = kNoReusePort);

~TcpServer(); // force out-line dtor, for std::unique_ptr members.

const string& ipPort() const { return ipPort_; }

const string& name() const { return name_; }

EventLoop* getLoop() const { return loop_; }

// Set the number of threads in the thread pool (the number of sub reactors). Must be called before start().

// 0: single thread (accept and IO are in one thread)

// 1: acceptor in one thread, all IO in another thread

// N: acceptor in one thread, IO distributed over N threads by round robin

void setThreadNum(int numThreads);

// Callback function after thread pool initialization

void setThreadInitCallback(const ThreadInitCallback& cb)

{ threadInitCallback_ = cb; }

/// valid after calling start()

std::shared_ptr<EventLoopThreadPool> threadPool()

{ return threadPool_; }

// Start the thread pool and schedule Acceptor::listen() in the loop (only the first call has an effect)

void start();

// Callbacks for connection established/closed, message arrival, and write complete (all data has been written into the kernel's TCP send buffer)

// Not thread safe, but all invoked in IO thread.

void setConnectionCallback(const ConnectionCallback& cb)

{ connectionCallback_ = cb; }

void setMessageCallback(const MessageCallback& cb)

{ messageCallback_ = cb; }

void setWriteCompleteCallback(const WriteCompleteCallback& cb)

{ writeCompleteCallback_ = cb; }

private:

/// Not thread safe, but in loop

// Passed to the Acceptor, which invokes it from handleRead() when a new connection arrives

void newConnection(int sockfd, const InetAddress& peerAddr);

/// Thread safe.

void removeConnection(const TcpConnectionPtr& conn); //Remove the connection.

/// Not thread safe, but in loop

void removeConnectionInLoop(const TcpConnectionPtr& conn); //Remove connection from loop

//string is the name of TcpConnection, which is used to find a connection.

typedef std::map<string, TcpConnectionPtr> ConnectionMap;

EventLoop* loop_; // the acceptor loop

const string ipPort_;

const string name_;

// Held by TcpServer only

std::unique_ptr<Acceptor> acceptor_; // avoid revealing Acceptor

std::shared_ptr<EventLoopThreadPool> threadPool_;

// User's callback function

ConnectionCallback connectionCallback_; // Callback of connection status (connected, disconnected)

MessageCallback messageCallback_; // Callback for new message arrival

WriteCompleteCallback writeCompleteCallback_; // Callback after sending data (all sent to the kernel)

ThreadInitCallback threadInitCallback_; // Callback for thread pool creation success

AtomicInt32 started_;

// always in loop thread

int nextConnId_; // The next connection ID, which is used to generate the name of TcpConnection

ConnectionMap connections_; // Map of current connections, keyed by connection name

};

3. TcpServer implementation

The logic of TcpServer is simple: it manages the Acceptor that accepts connections, uses EventLoopThreadPool to manage the IO event loops of established connections, and sets the callback functions of each TcpConnection.

3.1. Construction and destruction

TcpServer::TcpServer(EventLoop* loop,

const InetAddress& listenAddr,

const string& nameArg,

Option option)

: loop_(CHECK_NOTNULL(loop)),

ipPort_(listenAddr.toIpPort()), // Address monitored by the server

name_(nameArg),

acceptor_(new Acceptor(loop, listenAddr, option == kReusePort)), // Acceptor handling new connections

threadPool_(new EventLoopThreadPool(loop, name_)), // Thread pool

connectionCallback_(defaultConnectionCallback), // Connection and disconnection callbacks provided to users

messageCallback_(defaultMessageCallback), // Callback for the arrival of a new message provided to the user

nextConnId_(1) // The sequence number of the next connection

{

// Set the callback function of the Acceptor to handle new connections

acceptor_->setNewConnectionCallback(std::bind(&TcpServer::newConnection, this, _1, _2));

}

TcpServer::~TcpServer()

{

loop_->assertInLoopThread();

LOG_TRACE << "TcpServer::~TcpServer [" << name_ << "] destructing";

for (auto& item : connections_)

{

TcpConnectionPtr conn(item.second);

item.second.reset(); // Drops the map's reference; conn above still keeps the object alive

// Call TcpConnection::connectDestroyed

conn->getLoop()->runInLoop(std::bind(&TcpConnection::connectDestroyed, conn));

}

}

3.2. Start the server

If setThreadNum() is used, it must be called before start(). If the number of threads is not set, the server runs in single-threaded mode by default. start() schedules Acceptor::listen() to listen for new connection events. When a new connection arrives, the callback TcpServer::newConnection() is triggered.

void TcpServer::setThreadNum(int numThreads)

{

assert(0 <= numThreads);

threadPool_->setThreadNum(numThreads);

}

void TcpServer::start()

{

if (started_.getAndSet(1) == 0)

{

threadPool_->start(threadInitCallback_);

assert(!acceptor_->listenning());

loop_->runInLoop(std::bind(&Acceptor::listen, get_pointer(acceptor_)));

}

}
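The "start only once" guard in start() relies on an atomic get-and-set. As a minimal sketch of the same idea in standard C++, assuming `std::atomic<int>::exchange()` in place of muduo's `AtomicInt32::getAndSet()` (the `StartOnce` class is hypothetical):

```cpp
#include <atomic>
#include <cassert>

// Hypothetical stand-in for the part of TcpServer that guards start().
class StartOnce {
 public:
  // Returns true only for the call that actually performed the start.
  bool start() {
    // exchange() stores 1 and returns the previous value, so only the
    // first caller observes 0.
    if (started_.exchange(1) == 0) {
      ++startCount_;  // real code would start the pool and begin listening
      assert(startCount_ == 1);
      return true;
    }
    return false;
  }

  int startCount() const { return startCount_; }

 private:
  std::atomic<int> started_{0};
  int startCount_ = 0;
};
```

Calling `start()` a second time is harmless: the exchange returns 1 and the body is skipped.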

3.3. Callback of new connection

When the Acceptor detects a new connection, it calls back TcpServer::newConnection(). TcpServer picks an ioLoop from the thread pool by round robin; that loop handles all subsequent IO events of the new TcpConnection. Finally, TcpConnection::connectEstablished() is run in the ioLoop.

void TcpServer::newConnection(int sockfd, const InetAddress& peerAddr)

{

loop_->assertInLoopThread();

EventLoop* ioLoop = threadPool_->getNextLoop(); // Get a loop from the thread pool using the round robin strategy

char buf[64];

snprintf(buf, sizeof buf, "-%s#%d", ipPort_.c_str(), nextConnId_);

++nextConnId_;

string connName = name_ + buf; // The name of the current connection

LOG_INFO << "TcpServer::newConnection [" << name_

<< "] - new connection [" << connName

<< "] from " << peerAddr.toIpPort();

InetAddress localAddr(sockets::getLocalAddr(sockfd));

// FIXME poll with zero timeout to double confirm the new connection

// FIXME use make_shared if necessary

// Create a TcpConnection object to represent the new connection

TcpConnectionPtr conn(new TcpConnection(ioLoop, // The loop to which the current connection belongs

connName, // Connection name

sockfd, // Connected socket

localAddr, // Local address

peerAddr)); // Peer address

// The current connection is added to the connection map

connections_[connName] = conn;

// Set event callback on TcpConnection

conn->setConnectionCallback(connectionCallback_);

conn->setMessageCallback(messageCallback_);

conn->setWriteCompleteCallback(writeCompleteCallback_);

conn->setCloseCallback(std::bind(&TcpServer::removeConnection, this, _1)); // FIXME: unsafe

// Run TcpConnection::connectEstablished() in the ioLoop to mark the connection as connected

// and register the IO events of the connected socket with the Poller

ioLoop->runInLoop(std::bind(&TcpConnection::connectEstablished, conn));

}
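The naming and bookkeeping done in newConnection() can be isolated in a few lines. This is a sketch under assumptions: `FakeConnection` and `ConnectionRegistry` are hypothetical types that keep only the name and the map, with no sockets or event loops involved.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <map>
#include <memory>
#include <string>
#include <utility>

struct FakeConnection { std::string name; };  // hypothetical placeholder type
using FakeConnectionPtr = std::shared_ptr<FakeConnection>;

// Hypothetical helper mirroring how the server names and stores connections.
class ConnectionRegistry {
 public:
  ConnectionRegistry(std::string serverName, std::string ipPort)
      : name_(std::move(serverName)), ipPort_(std::move(ipPort)) {}

  // Builds "<server>-<ip:port>#<id>" and stores the connection under it.
  FakeConnectionPtr add() {
    char buf[64];
    std::snprintf(buf, sizeof buf, "-%s#%d", ipPort_.c_str(), nextConnId_);
    ++nextConnId_;
    auto conn = std::make_shared<FakeConnection>();
    conn->name = name_ + buf;
    connections_[conn->name] = conn;
    return conn;
  }

  // Erases by name; exactly one entry must exist.
  void remove(const FakeConnectionPtr& conn) {
    std::size_t n = connections_.erase(conn->name);
    assert(n == 1);
    (void)n;
  }

  std::size_t size() const { return connections_.size(); }

 private:
  std::string name_;
  std::string ipPort_;
  int nextConnId_ = 1;  // next connection ID, starts at 1
  std::map<std::string, FakeConnectionPtr> connections_;
};
```

Because the ID only ever increases, a name is never reused while the server is running, so the name works as a map key even after earlier connections have been removed.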

3.4. Callback of disconnection

The connection is removed from the connection map by its name. TcpConnection::connectDestroyed() is then queued in the loop that owns the connection to finish the cleanup.

void TcpServer::removeConnection(const TcpConnectionPtr& conn)

{

// FIXME: unsafe

loop_->runInLoop(std::bind(&TcpServer::removeConnectionInLoop, this, conn));

}

void TcpServer::removeConnectionInLoop(const TcpConnectionPtr& conn)

{

loop_->assertInLoopThread();

LOG_INFO << "TcpServer::removeConnectionInLoop [" << name_

<< "] - connection " << conn->name();

size_t n = connections_.erase(conn->name()); // Remove conn by name

(void)n;

assert(n == 1);

// Execute TcpConnection::connectDestroyed in the loop where conn is located

EventLoop* ioLoop = conn->getLoop();

ioLoop->queueInLoop(std::bind(&TcpConnection::connectDestroyed, conn));

}
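Erasing the connection from the map does not destroy it right away, because the bound functor holds its own copy of the shared pointer. The following sketch illustrates that lifetime rule with a plain task queue; `Conn`, `Trace`, and `removeThenDestroy` are hypothetical names, and the `std::queue` only stands in for an event loop's pending functor list.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <memory>
#include <queue>
#include <string>

// What the experiment observed at each step.
struct Trace {
  bool destroyedAfterErase = false;
  bool taskRan = false;
  bool destroyedAfterTask = false;
};

// Hypothetical connection that reports its own destruction.
class Conn {
 public:
  Conn(bool* destroyed, bool* ran) : destroyed_(destroyed), ran_(ran) {}
  ~Conn() { *destroyed_ = true; }
  void connectDestroyed() { *ran_ = true; }

 private:
  bool* destroyed_;
  bool* ran_;
};

Trace removeThenDestroy() {
  bool destroyed = false;
  bool ran = false;
  std::queue<std::function<void()>> pending;  // stand-in for queued functors
  std::map<std::string, std::shared_ptr<Conn>> connections;
  connections["conn#1"] = std::make_shared<Conn>(&destroyed, &ran);

  {
    std::shared_ptr<Conn> conn = connections["conn#1"];
    std::size_t n = connections.erase("conn#1");  // map drops its reference
    assert(n == 1);
    (void)n;
    // std::bind copies conn, so the queued task keeps the object alive.
    pending.push(std::bind(&Conn::connectDestroyed, conn));
  }  // the local reference is gone as well

  Trace trace;
  trace.destroyedAfterErase = destroyed;
  pending.front()();  // run the queued task
  pending.pop();      // destroying the functor releases the last reference
  trace.taskRan = ran;
  trace.destroyedAfterTask = destroyed;
  return trace;
}
```

The object survives until the queued task has run and been discarded, which is the property the cleanup step depends on.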

3.5. Some instructions

In the functions above, every TcpConnection member function runs in the connection's own ioLoop. This keeps TcpServer lock-free and makes user code easier to write: the code of TcpServer and TcpConnection only has to deal with a single thread (neither has a Mutex member). With EventLoop::runInLoop() and EventLoopThreadPool, implementing a multithreaded TcpServer is simple.

muduo uses the simplest round robin algorithm to select an EventLoop from the pool. A TcpConnection is not allowed to switch to another EventLoop during its lifetime. This works for both long and short connections and is unlikely to cause load imbalance. In the current design each TcpServer has its own EventLoopThreadPool, and multiple TcpServers do not share one. If necessary, an EventLoopThreadPool can also be shared among multiple TcpServers. For example, a service may have several equivalent TCP ports, with each TcpServer responsible for one port and the connections from all these ports sharing one EventLoopThreadPool.
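Round robin selection with a fallback to the base loop can be sketched as follows. This is an illustration under assumptions, not the EventLoopThreadPool source: `FakeLoop` and `RoundRobinPool` are hypothetical, and the loops are plain structs rather than running event loops.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

struct FakeLoop { int id; };  // hypothetical placeholder for an event loop

// Hypothetical pool: hands out worker loops in turn, or the base loop
// when there are no workers (the single-threaded case).
class RoundRobinPool {
 public:
  RoundRobinPool(FakeLoop* baseLoop, std::vector<FakeLoop*> loops)
      : baseLoop_(baseLoop), loops_(std::move(loops)) {
    assert(baseLoop_ != nullptr);
  }

  FakeLoop* getNextLoop() {
    FakeLoop* loop = baseLoop_;  // used when the pool has zero threads
    if (!loops_.empty()) {
      loop = loops_[next_];
      next_ = (next_ + 1) % loops_.size();  // wrap around
    }
    return loop;
  }

 private:
  FakeLoop* baseLoop_;
  std::vector<FakeLoop*> loops_;
  std::size_t next_ = 0;
};
```

With two worker loops, the first and third connections land on the same loop and the second on the other, which is the pattern to look for when printing the loop address per connection.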

4. Testing

4.1. EchoServer test

Take EchoServer as an example. The server echoes what it receives, and actively shuts down the connection after replying to "exit".

#include <muduo/net/TcpServer.h>

#include <muduo/base/Logging.h>

#include <muduo/base/Thread.h>

#include <muduo/net/EventLoop.h>

#include <muduo/net/InetAddress.h>

#include <utility>

#include <stdio.h>

#include <unistd.h>

using namespace muduo;

using namespace muduo::net;

int numThreads = 0;

class EchoServer

{

public:

EchoServer(EventLoop* loop, const InetAddress& listenAddr)

: loop_(loop),

server_(loop, listenAddr, "EchoServer")

{

server_.setConnectionCallback(std::bind(&EchoServer::onConnection, this, _1));

server_.setMessageCallback(std::bind(&EchoServer::onMessage, this, _1, _2, _3));

server_.setThreadNum(numThreads);

}

void start()

{

server_.start();

}

// void stop();

private:

void onConnection(const TcpConnectionPtr& conn)

{

LOG_INFO << conn->peerAddress().toIpPort() << " -> "

<< conn->localAddress().toIpPort() << " is "

<< (conn->connected() ? "UP" : "DOWN")

<< " - EventLoop " << conn->getLoop(); // Print the EventLoop to which the current connection belongs

LOG_INFO << conn->getTcpInfoString();

conn->send("hello\n");

}

void onMessage(const TcpConnectionPtr& conn, Buffer* buf, Timestamp time)

{

string msg(buf->retrieveAllAsString());

LOG_INFO << conn->name() << " recv " << msg.size() << " bytes at " << time.toString();

if (msg == "exit\n"){

conn->send("bye\n");

conn->shutdown(); // Turn off sending, but still accept data from the client

}

if (msg == "quit\n"){

loop_->quit(); // Server shutdown exit

}

conn->send(msg); // Loopback received data

}

EventLoop* loop_;

TcpServer server_;

};

int main(int argc, char* argv[])

{

LOG_INFO << "pid = " << getpid() << ", tid = " << CurrentThread::tid();

LOG_INFO << "sizeof TcpConnection = " << sizeof(TcpConnection);

if (argc > 1){

numThreads = atoi(argv[1]); // Number of threads

}

bool ipv6 = argc > 2; // Enable IPv6

EventLoop loop;

InetAddress listenAddr(2000, false, ipv6); // Service address

EchoServer server(&loop, listenAddr);

server.start(); // Start the server

loop.loop();

}

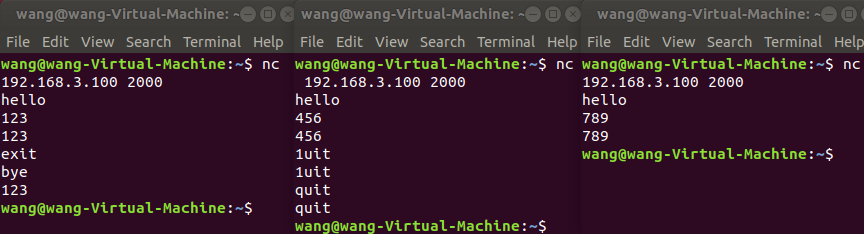

For the test, set the number of threads in the pool to 2. Start three clients and have them send "123", "456" and "789" respectively. After that, the first client sends "exit" and then "123". Finally, the second client sends "quit".

The results are as follows. After the three connections are opened, the first and third connections are assigned the same EventLoop address, 0x7F857506F9C0, and the second connection is assigned 0x7F857485F9C0, which shows that the round robin algorithm hands out the loops in turn. Messages are echoed normally.

After the client sends "exit", it receives "bye", and the server then calls conn->shutdown(), which is actually ::shutdown(sockfd, SHUT_WR). This closes the sending direction on the server side. Messages sent afterwards are still received by the server, but the client gets no echo because the server's write half is closed. When "quit" is sent, the server exits.

20210805 06:42:25.656446Z 13043 INFO pid = 13043, tid = 13043 - EchoServer_unittest.cc:75

20210805 06:42:25.656830Z 13043 INFO sizeof TcpConnection = 400 - EchoServer_unittest.cc:76

20210805 06:42:29.294193Z 13043 INFO TcpServer::newConnection [EchoServer] - new connection [EchoServer-0.0.0.0:2000#1] from 192.168.3.100:2982 - TcpServer.cc:80

20210805 06:42:29.294250Z 13043 INFO 192.168.3.100:2982 -> 192.168.3.100:2000 is UP - EventLoop 0x7F857506F9C0 - EchoServer_unittest.cc:42

20210805 06:42:29.294261Z 13043 INFO - EchoServer_unittest.cc:46

20210805 06:42:31.662242Z 13043 INFO TcpServer::newConnection [EchoServer] - new connection [EchoServer-0.0.0.0:2000#2] from 192.168.3.100:2984 - TcpServer.cc:80

20210805 06:42:31.662302Z 13043 INFO 192.168.3.100:2984 -> 192.168.3.100:2000 is UP - EventLoop 0x7F857485F9C0 - EchoServer_unittest.cc:42

20210805 06:42:31.662313Z 13043 INFO - EchoServer_unittest.cc:46

20210805 06:42:37.501991Z 13043 INFO TcpServer::newConnection [EchoServer] - new connection [EchoServer-0.0.0.0:2000#3] from 192.168.3.100:2986 - TcpServer.cc:80

20210805 06:42:37.502071Z 13043 INFO 192.168.3.100:2986 -> 192.168.3.100:2000 is UP - EventLoop 0x7F857506F9C0 - EchoServer_unittest.cc:42

20210805 06:42:37.502085Z 13043 INFO - EchoServer_unittest.cc:46

20210805 06:42:46.500798Z 13043 INFO EchoServer-0.0.0.0:2000#1 recv 4 bytes at 1628145766.500734 - EchoServer_unittest.cc:55

20210805 06:42:49.004797Z 13043 INFO EchoServer-0.0.0.0:2000#2 recv 4 bytes at 1628145769.004770 - EchoServer_unittest.cc:55

20210805 06:42:51.556946Z 13043 INFO EchoServer-0.0.0.0:2000#3 recv 4 bytes at 1628145771.556920 - EchoServer_unittest.cc:55

20210805 06:43:03.372943Z 13043 INFO EchoServer-0.0.0.0:2000#1 recv 5 bytes at 1628145783.372917 - EchoServer_unittest.cc:55

20210805 06:43:12.972814Z 13043 INFO EchoServer-0.0.0.0:2000#1 recv 4 bytes at 1628145792.972789 - EchoServer_unittest.cc:55

20210805 06:43:32.124884Z 13043 INFO EchoServer-0.0.0.0:2000#2 recv 5 bytes at 1628145812.124855 - EchoServer_unittest.cc:55

20210805 06:43:37.445024Z 13043 INFO EchoServer-0.0.0.0:2000#2 recv 5 bytes at 1628145817.444998 - EchoServer_unittest.cc:55

echoserver_unittest: net/Channel.cc:61: void muduo::net::Channel::remove(): Assertion `isNoneEvent()' failed.

Aborted (core dumped)

The program reports an error when it exits. Regardless of the number of clients, and in both single-threaded and multithreaded mode, the error always occurs when a client sends "exit" and "quit" is sent afterwards. If the client actively disconnects after sending "exit", everything is normal.

4.2. Problems using shutdown()

When the client closes the connection, the server executes TcpConnection::handleClose(), which internally calls Channel::disableAll(), and the connection is then removed from TcpServer and its channel from the Poller. When the server actively closes only its write end, however, the channel state is not touched at all. In EchoServer, after receiving "exit", the server shuts down its sending direction. Later, the TcpServer destructor calls TcpConnection::connectDestroyed(), which calls channel_->remove(). The channel still has events enabled, so the internal assertion assert(isNoneEvent()) fails and the program crashes at runtime.
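The failure can be reproduced with a small state model. This is a simplified sketch based on the behaviour described above and on my reading of muduo, not a copy of its source: `ModelChannel` and `ModelConnection` are hypothetical, the single `events` integer stands in for the channel's event mask, and the rule that connectDestroyed() disables the channel only when the state is still connected is an assumption of the model. Instead of asserting, connectDestroyed() returns whether the isNoneEvent() check would pass.

```cpp
#include <cassert>

enum class State { kConnected, kDisconnecting, kDisconnected };

// Hypothetical channel: a nonzero mask means events are still enabled.
struct ModelChannel {
  int events = 1;  // starts with reading enabled
  bool isNoneEvent() const { return events == 0; }
  void disableAll() { events = 0; }
};

// Hypothetical connection with only the state transitions that matter here.
struct ModelConnection {
  State state = State::kConnected;
  ModelChannel channel;

  // Half-close: the state changes, but the channel is left untouched.
  void shutdown() {
    if (state == State::kConnected) state = State::kDisconnecting;
  }

  // Full close path: the channel stops listening for events.
  void handleClose() {
    state = State::kDisconnected;
    channel.disableAll();
    assert(channel.isNoneEvent());
  }

  void forceClose() {
    if (state != State::kDisconnected) handleClose();
  }

  // Returns true if removing the channel would pass the isNoneEvent() check.
  bool connectDestroyed() {
    if (state == State::kConnected) {  // assumption: only this state cleans up
      state = State::kDisconnected;
      channel.disableAll();
    }
    return channel.isNoneEvent();
  }
};
```

In this model, a connection destroyed after shutdown() fails the check, while a connection destroyed directly or after forceClose() passes it.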

A simple solution is to call conn->forceClose() in onMessage() instead of conn->shutdown(). forceClose() goes through handleClose(), which updates the event-listening state of the channel. The difference is that the client sees the disconnection and can no longer send data.

When designing the application layer, the client should normally be the side that disconnects once a connection has been established. Whichever side calls shutdown(), server or client, can still receive data from the peer, which ensures that messages are received completely. See [muduo learning notes: implementation of net part TCP network programming library Buffer].