1. Data preparation

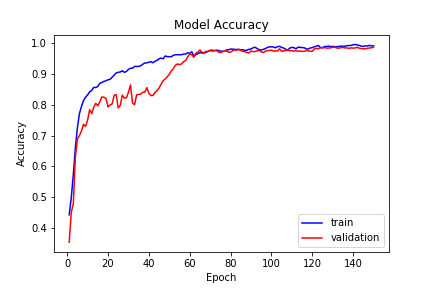

The data used in this experiment are photographs of five kinds of flowers. Sample images are shown below:

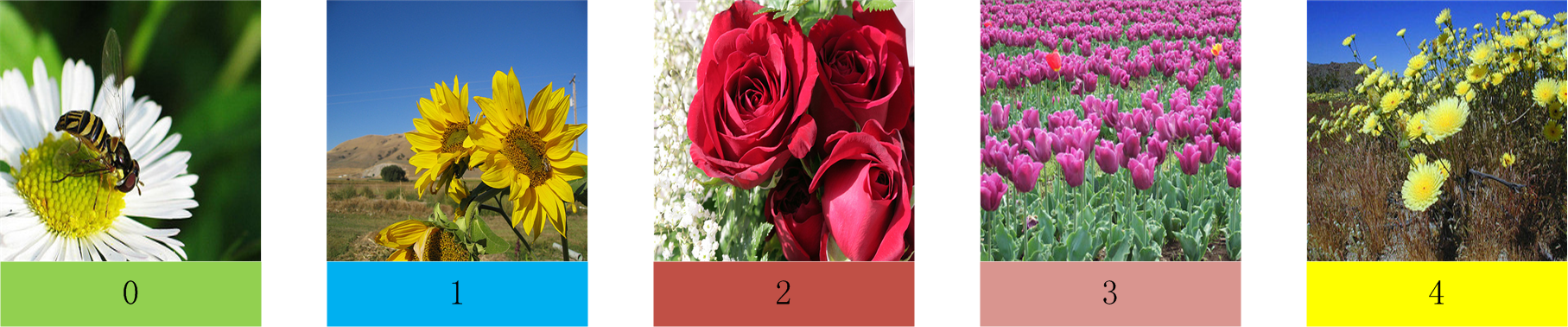

The five kinds of flowers are labeled with the integers 0-4. Training a good network model requires a lot of data; the number of samples used in this experiment is shown in the table below:

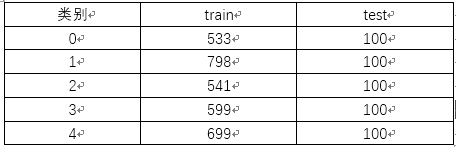
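To reproduce a per-class count like the one in the table, a minimal sketch follows. It assumes the layout that Keras `flow_from_directory` expects, with one subdirectory per class under the data directory; the function name `count_images_per_class` and the list of image suffixes are illustrative choices, not part of the original experiment.

```python
from pathlib import Path

# Assumed set of image file extensions; adjust to match the dataset.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def count_images_per_class(data_dir):
    """Map each class subdirectory name to the number of image files in it."""
    counts = {}
    for class_dir in sorted(Path(data_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


# Hypothetical usage: count_images_per_class("path/to/traindata")
```

`flow_from_directory` assigns class indices in alphanumeric order of the subdirectory names, so the sorted keys of the returned dictionary line up with the label indices.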

2. VGG16 network structure

The defining feature of VGG16 is that it stacks 3×3 convolution kernels to achieve the receptive field of a 5×5 or 7×7 kernel. The network structure is as follows:
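As a rough sketch of that structure, the snippet below lists the standard VGG16 convolutional configuration (the same layer sequence used in the code in section 3) and checks two things: the receptive field of stacked stride-1 3×3 convolutions, and the feature-map size after each layer for a 224×224 RGB input. The names `VGG16_CFG`, `stacked_receptive_field`, and `feature_map_shapes` are illustrative, and the shape calculation assumes 'same' padding and 2×2 pooling with stride 2.

```python
# Integers are the output channels of a 3x3 convolution;
# "M" marks a 2x2 max-pooling layer with stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of num_layers stacked stride-1 convolutions."""
    field = 1
    for _ in range(num_layers):
        field += kernel - 1  # each layer widens the field by kernel - 1
    return field


def feature_map_shapes(size=224, channels=3, cfg=VGG16_CFG):
    """Return (height, width, channels) after each layer in cfg."""
    shapes = []
    for layer in cfg:
        if layer == "M":
            size //= 2        # pooling halves height and width
        else:
            channels = layer  # 'same' padding keeps height and width
        shapes.append((size, size, channels))
    return shapes


print(stacked_receptive_field(2))  # 5: two 3x3 layers cover a 5x5 window
print(stacked_receptive_field(3))  # 7: three 3x3 layers cover a 7x7 window
print(feature_map_shapes()[-1])    # (7, 7, 512)
```

The final 7×7×512 feature map flattens to 25088 values, which is the input to the first fully connected layer.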

3. Code implementation

This experiment uses the Keras framework. The complete code is as follows:

```python
from keras.preprocessing.image import ImageDataGenerator
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Dropout, Flatten, Dense
from keras import backend as K

# Dimensions of our images.
img_width, img_height = 224, 224

train_data_dir = '/home/p18301116/vgg/traindata/'
validation_data_dir = '/home/p18301116/vgg/vaildationdata/'
nb_train_samples = 2520
nb_validation_samples = 174
epochs = 100
batch_size = 20

# Put the channel axis where the active backend expects it.
if K.image_data_format() == 'channels_first':
    input_shape = (3, img_width, img_height)
else:
    input_shape = (img_width, img_height, 3)

model = Sequential()

# Block 1: two 3x3 convolutions with 64 filters, then 2x2 max pooling.
model.add(Conv2D(64,(3,3),strides=(1,1),input_shape=input_shape,padding='same',activation='relu',kernel_initializer='uniform'))
model.add(Conv2D(64,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(MaxPooling2D(pool_size=(2,2)))

# Block 2: two 3x3 convolutions with 128 filters.
model.add(Conv2D(128,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(Conv2D(128,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(MaxPooling2D(pool_size=(2,2)))

# Block 3: three 3x3 convolutions with 256 filters.
model.add(Conv2D(256,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(Conv2D(256,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(Conv2D(256,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(MaxPooling2D(pool_size=(2,2)))

# Block 4: three 3x3 convolutions with 512 filters.
model.add(Conv2D(512,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(Conv2D(512,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(Conv2D(512,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(MaxPooling2D(pool_size=(2,2)))

# Block 5: three 3x3 convolutions with 512 filters.
model.add(Conv2D(512,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(Conv2D(512,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(Conv2D(512,(3,3),strides=(1,1),padding='same',activation='relu',kernel_initializer='uniform'))
model.add(MaxPooling2D(pool_size=(2,2)))

# Classifier: two fully connected layers with dropout, then softmax.
model.add(Flatten())
model.add(Dense(4096,activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(4096,activation='relu'))
model.add(Dropout(0.5))
# One output unit per flower class (labels 0-4). The original listing had 8,
# which does not match the five-column one-hot labels from the generators.
model.add(Dense(5,activation='softmax'))

model.compile(loss='categorical_crossentropy',optimizer='sgd',metrics=['accuracy'])
model.summary()

# Augmentation configuration used for training.
train_datagen = ImageDataGenerator(
    rescale=1. / 255,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True)

# Augmentation configuration used for testing: only rescaling.
test_datagen = ImageDataGenerator(rescale=1. / 255)

train_generator = train_datagen.flow_from_directory(
    train_data_dir,
    target_size=(img_width, img_height),
    batch_size=batch_size,
    class_mode='categorical')  # multi-class, one-hot labels

validation_generator = test_datagen.flow_from_directory(
    validation_data_dir,
    target_size=(img_width, img_height),
    batch_size=batch_size,
    class_mode='categorical')  # multi-class, one-hot labels

# fit_generator matches the older Keras API used here; recent Keras versions
# deprecate it and accept generators in model.fit() instead.
model.fit_generator(
    train_generator,
    steps_per_epoch=nb_train_samples // batch_size,
    epochs=epochs,
    validation_data=validation_generator,
    validation_steps=nb_validation_samples // batch_size)
```
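The step counts passed to training follow directly from the sample counts and batch size above. The short calculation below works them out; the variable names `train_steps`, `val_steps`, `val_covered`, and `val_steps_full` are illustrative, and the ceiling-division variant is only a suggestion, not what the original code does.

```python
import math

nb_train_samples = 2520
nb_validation_samples = 174
batch_size = 20

# Floor division, as in the training call above.
train_steps = nb_train_samples // batch_size      # 126 batches per epoch
val_steps = nb_validation_samples // batch_size   # 8 batches per validation pass

# 8 batches of 20 cover at most 160 of the 174 validation images.
val_covered = val_steps * batch_size

# Ceiling division would add one smaller final batch so that all images count.
val_steps_full = math.ceil(nb_validation_samples / batch_size)  # 9

print(train_steps, val_steps, val_covered, val_steps_full)  # 126 8 160 9
```

The training set divides evenly into batches, while each validation pass with floor division leaves out up to 14 images.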

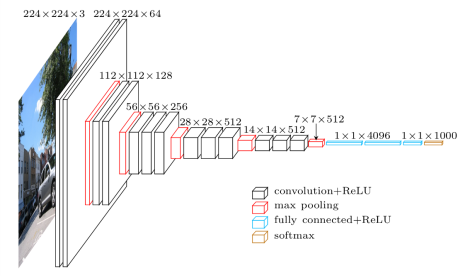

4. Experimental results