Development background

In 2015, when we looked for a low-latency live player to pair with the testing of our RTMP push module, we found that none of the players on the market were easy to use. VLC and Vitamio, for example, are both based on FFmpeg: they support many formats and are excellent for on-demand playback, but their live latency, especially for RTMP, was several seconds, and their support for audio-only and video-only playback, fast start-up, abnormal network state handling, and simple integration was very poor. Moreover, because they are feature-rich and have many bugs, all but the senior developers in the industry have to spend a lot of effort just compiling the overall environment.

Our live player was developed in parallel on Windows, Android and iOS from the start. Given the shortcomings of the open source players above, we chose a fully self-developed framework so that the overall design is cross-platform. On the premise of smooth playback, we try to achieve millisecond-level latency, unify the interface design across the three platforms, and minimize the complexity of multi-platform integration.

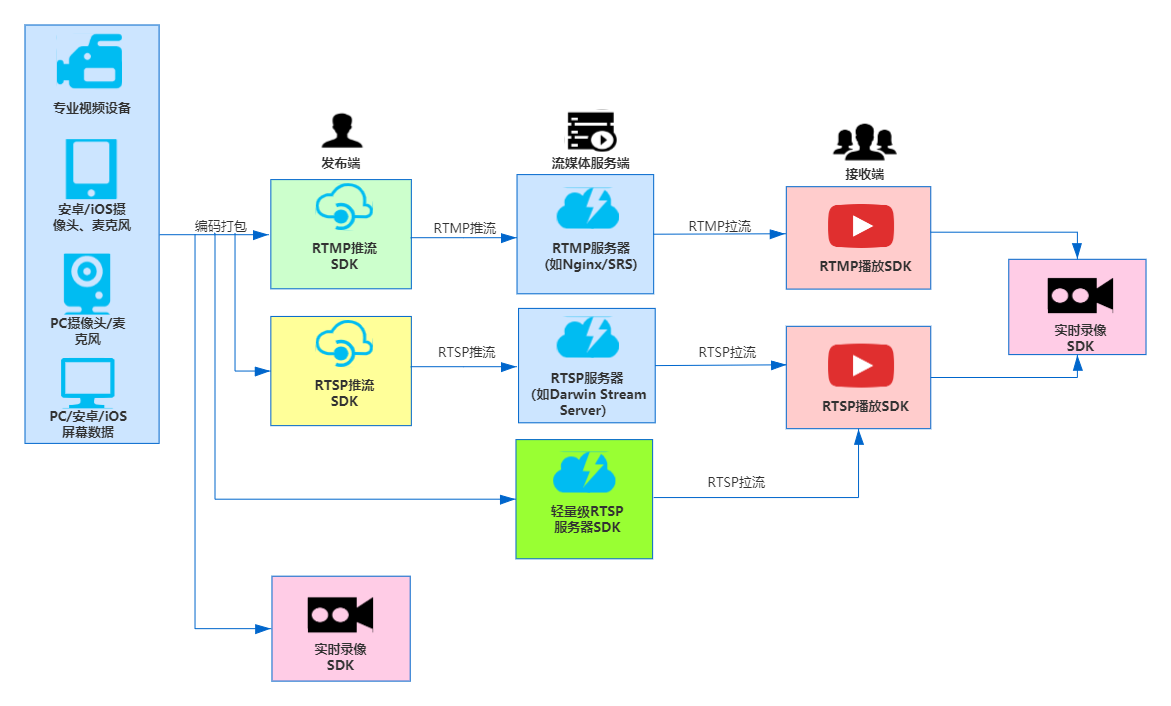

Overall scheme structure

An RTMP or RTSP live player has a clear goal: pull stream data from an RTMP server (a self-built server or a CDN) or an RTSP source (a server, NVR, IPC, encoder, etc.), then parse the data, decode it, synchronize audio and video, and render.

Specifically, it corresponds to the "receiver" part in the figure below:

Initial module design objectives

- Its own framework that is easy to extend, with adaptive algorithms that lower latency and raise decoding and rendering efficiency;

- Handling of abnormal network states, such as reconnection after disconnection and network jitter control;

- Event status callbacks, so that developers can follow the overall state of the player instead of treating it as an uncontrollable black box;

- Multi-instance playback;

- H.264 video and AAC/PCMA/PCMU audio;

- Configurable buffer time;

- Real-time mute.

Features after iteration

- [Playback protocols] RTSP and RTMP, with millisecond-level latency;

- [Multi-instance playback] multiple player instances are supported;

- [Event callbacks] network state, buffer state and other callbacks;

- [Audio and video encryption] the Windows platform can play audio and video data encrypted by the RTMP push end (AES / SM4, the Chinese national cipher standard);

- [Video formats] H.264, and H.265 through the RTMP extension;

- [Audio formats] AAC/PCMA/PCMU/Speex;

- [H.264/H.265 soft decoding] software decoding of H.264/H.265;

- [H.264 hard decoding] Windows/Android/iOS support H.264 hardware decoding;

- [H.265 hard decoding] Windows/Android/iOS support H.265 hardware decoding;

- [H.264/H.265 hard decoding] Android supports hardware decoding in both Surface mode and normal mode;

- [Buffer time setting] the buffer time is configurable;

- [Fast start-up] supports a fast first-frame start-up mode;

- [Low latency mode] supports an ultra-low latency mode (200-400 ms over the public network) for live scenarios such as online claw machines;

- [Complex network handling] automatically adapts to various network conditions, including reconnection after disconnection;

- [Fast URL switching] supports switching to another URL quickly during playback, for faster content switching;

- [Multiple audio and video render mechanisms] on Android, video: SurfaceView/OpenGL ES, audio: AudioTrack/OpenSL ES;

- [Real-time mute] mute / unmute during playback;

- [Real-time snapshot] capture the current picture during playback;

- [Key frames only] the Windows platform supports switching key-frame-only playback on and off in real time;

- [Rendering angle] four video rendering angles: 0°, 90°, 180° and 270°;

- [Rendering mirror] horizontal and vertical flip modes;

- [Real-time download speed] real-time callback of the current download speed, with a configurable callback interval;

- [ARGB overlay] the Windows platform supports overlaying an ARGB image on the displayed video (see the C++ demo);

- [Video data callback before decoding] H.264/H.265 data callback;

- [Video data callback after decoding] YUV/RGB data callback after decoding;

- [Scaled video data callback after decoding] the Windows platform supports an interface that specifies the size of the callback image (the original video image is scaled and then called back to the upper layer);

- [Audio data callback before decoding] AAC/PCMA/PCMU/Speex data callback;

- [Audio and video adaptation] adapts when audio or video information changes during playback;

- [Extended recording] records RTSP/RTMP H.264 and extended H.265 streams, supports recording PCMA/PCMU/Speex as AAC, and supports audio-only or video-only recording.
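The four rendering angles in the list above come down to a coordinate mapping between source and destination pixels. The sketch below is a plain CPU illustration of that mapping, assuming a tightly packed 32-bit image (one `uint32_t` per pixel, no row padding); the function name `rotate_rgb32` is hypothetical, and the SDK may well perform rotation on the GPU or in a different way.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Rotate a tightly packed 32-bit image clockwise by 0, 90, 180 or 270 degrees.
 * dst must have room for width * height pixels.
 * out_w / out_h receive the rotated size (swapped for 90 and 270 degrees).
 * Returns 0 on success, -1 for an unsupported angle.
 * Hypothetical helper for illustration; not part of the SDK.
 */
int rotate_rgb32(const uint32_t *src, int width, int height, int degrees,
                 uint32_t *dst, int *out_w, int *out_h)
{
    assert(src != NULL && dst != NULL && out_w != NULL && out_h != NULL);
    assert(width > 0 && height > 0);

    if (degrees != 0 && degrees != 90 && degrees != 180 && degrees != 270) {
        return -1;
    }

    int swap = (degrees == 90 || degrees == 270);
    *out_w = swap ? height : width;
    *out_h = swap ? width : height;

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            int dx, dy;
            switch (degrees) {
            case 90:  /* top-left source pixel moves to the top-right corner */
                dx = height - 1 - y; dy = x; break;
            case 180: /* both axes are mirrored */
                dx = width - 1 - x; dy = height - 1 - y; break;
            case 270: /* top-left source pixel moves to the bottom-left corner */
                dx = y; dy = width - 1 - x; break;
            default:  /* 0 degrees: plain copy */
                dx = x; dy = y; break;
            }
            dst[(size_t)dy * (size_t)(*out_w) + (size_t)dx] =
                src[(size_t)y * (size_t)width + (size_t)x];
        }
    }
    return 0;
}
```

Every angle other than 0 touches each pixel with a non-sequential write pattern, which is consistent with the header's note that non-zero rotation costs more CPU.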

Considerations in the development and design of RTMP and RTSP live broadcast

1. Low latency: most RTSP playback targets live scenarios, so high latency seriously hurts the experience, and low latency is a key measure of a good RTSP player. At present, the RTSP live playback latency of the Daniel live SDK is lower than that of the open source players, and latency does not accumulate during long runs;

2. Audio and video synchronization: in pursuit of low latency, some players skip audio/video synchronization and play audio and video as soon as they arrive, which leaves A/V out of sync and exposes problems such as timestamp jumps. The player provided by the Daniel live SDK has solid timestamp synchronization and a correction mechanism for abnormal timestamps;

3. Multiple instances: the player provided by the Daniel live SDK supports playing multiple audio/video streams at the same time, for example in 4, 8 or 9 windows. Most open source players do not support multiple instances well;

4. Buffer time setting: in scenarios with network jitter, the player needs a configurable buffer time, generally in milliseconds. Open source players do not support this well;

5. TCP/UDP mode setting and automatic switching: many servers support only TCP or only UDP, so a good RTSP player needs a TCP/UDP mode setting. If the link does not support the selected mode, the Daniel live SDK can switch automatically, whereas open source players cannot switch between TCP and UDP automatically;

6. Real-time mute: when RTSP streams play in multiple windows, for example, playing every audio track at once gives a very bad experience, so real-time mute is necessary. Open source players do not have it;

7. Video view rotation: because of installation constraints, many cameras produce an inverted image, so a good RTSP player should support real-time rotation (0°, 90°, 180°, 270°), horizontal flip and vertical flip of the video view. Open source players do not have this function;

8. Decoded audio/video data output: many developers who have contacted the Daniel live SDK team hope to obtain YUV or RGB data during playback to run algorithms such as face matching. Open source players do not have this function;

9. Real-time snapshot: capturing interesting or important pictures in real time is very useful. Players generally lack snapshot capability, and open source players do not have this function;

10. Network jitter handling (such as reconnection after disconnection): a stable network handling mechanism is required. Open source players handle network exceptions poorly;

11. Long-term running stability: unlike the open source players on the market, the Windows RTSP live playback SDK provided by the Daniel live SDK is suited to running continuously for days, whereas open source players are less stable over long runs;

12. Log recording: the overall process is recorded to log files so that there is evidence to rely on when a problem occurs. Open source players record few logs;

13. Real-time download speed feedback: the Daniel live SDK provides a real-time download speed callback for audio and video streams, with a configurable callback interval, so that the network status can be monitored. Open source players do not have this ability;

14. Abnormal state handling and event status callbacks: during playback, in cases such as network disconnection or jitter, the player provided by the Daniel live SDK calls back the relevant state in real time so that the upper-layer module can detect and handle it. Open source players do not support this well;

15. Real-time switching between key-frame and full-frame playback: especially with multi-window playback, decoding and drawing every frame of many streams raises system resource usage. Being able to switch at any time between key-frame-only and full-frame playback greatly reduces the load on the system.
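Item 2 above mentions a correction mechanism for abnormal timestamps without describing it. One common approach, shown here purely as an illustrative sketch and not as the SDK's actual algorithm, is to rebuild a continuous timeline from timestamp deltas and to replace any backward step or oversized forward gap with a nominal step. The type `ts_corrector`, its fields and both thresholds are assumptions made for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical timestamp corrector; names and thresholds are illustrative. */
typedef struct {
    int64_t last_in_ms;  /* last raw timestamp received */
    int64_t last_out_ms; /* last corrected timestamp emitted */
    int64_t max_gap_ms;  /* forward gaps above this count as a jump */
    int64_t nominal_ms;  /* step substituted for a jump, e.g. one frame interval */
    int started;         /* 0 until the first timestamp has been seen */
} ts_corrector;

void ts_corrector_init(ts_corrector *c, int64_t max_gap_ms, int64_t nominal_ms)
{
    assert(c != NULL && max_gap_ms > 0 && nominal_ms > 0);
    c->last_in_ms = 0;
    c->last_out_ms = 0;
    c->max_gap_ms = max_gap_ms;
    c->nominal_ms = nominal_ms;
    c->started = 0;
}

/* Feed one raw timestamp, get back a monotonically increasing one. */
int64_t ts_corrector_push(ts_corrector *c, int64_t in_ms)
{
    if (!c->started) {
        /* The corrected timeline starts at zero. */
        c->started = 1;
        c->last_in_ms = in_ms;
        c->last_out_ms = 0;
        return 0;
    }

    int64_t delta = in_ms - c->last_in_ms;
    if (delta < 0 || delta > c->max_gap_ms) {
        /* Backward step or large jump: substitute the nominal step. */
        delta = c->nominal_ms;
    }

    c->last_in_ms = in_ms;
    c->last_out_ms += delta;
    return c->last_out_ms;
}
```

Running one corrector per track keeps each timeline continuous; a real player would still need a separate step that aligns the audio and video timelines with each other.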

Interface design

Developers who lack sufficient audio and video background often end up overturning their initial interface design repeatedly. We take the Windows platform as an example to share our design ideas. The demo project source code can be downloaded from GitHub. For reference:

smart_player_sdk.h

#ifdef __cplusplus

extern "C"{

#endif

typedef struct _SmartPlayerSDKAPI

{

/*

flag: pass 0 for now; reserved for future extension. pReserve: reserved for future extension

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *Init)(NT_UINT32 flag, NT_PVOID pReserve);

/*

This must be the last interface called

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *UnInit)();

/*

flag: pass 0 for now; reserved for future extension. pReserve: reserved for future extension

hwnd: the window used for drawing; may be NULL

pHandle: receives the player handle

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *Open)(NT_PHANDLE pHandle, NT_HWND hwnd, NT_UINT32 flag, NT_PVOID pReserve);

/*

The handle becomes invalid after this interface is called

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *Close)(NT_HANDLE handle);

/*

Set event callback. If you want to listen to events, it is recommended to call this interface after calling Open successfully

*/

NT_UINT32(NT_API *SetEventCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, NT_SP_SDKEventCallBack call_back);

/*

Set video size callback interface

*/

NT_UINT32(NT_API *SetVideoSizeCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, SP_SDKVideoSizeCallBack call_back);

/*

Set the video frame callback, which delivers the decoded video data

frame_format: only NT_SP_E_VIDEO_FRAME_FORMAT_RGB32 and the I420 frame format value are supported

*/

NT_UINT32(NT_API *SetVideoFrameCallBack)(NT_HANDLE handle,

NT_INT32 frame_format,

NT_PVOID call_back_data, SP_SDKVideoFrameCallBack call_back);

/*

Set the video frame callback, which delivers the video data scaled to the specified width and height

*handle: Play handle

*scale_width: Scaled width (must be even; a multiple of 16 is recommended)

*scale_height: Scaled height (must be even)

*scale_filter_mode: Scaling quality. 0 makes the SDK use its default value. The current range is [1, 3]; larger values give better scaling quality but cost more performance

*frame_format: only NT_SP_E_VIDEO_FRAME_FORMAT_RGB32 and the I420 frame format value are supported

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetVideoFrameCallBackV2)(NT_HANDLE handle,

NT_INT32 scale_width, NT_INT32 scale_height,

NT_INT32 scale_filter_mode, NT_INT32 frame_format,

NT_PVOID call_back_data, SP_SDKVideoFrameCallBack call_back);

/*

*Set video frame timestamp callback when drawing video frame

*Note that if the current playback stream is pure audio, there will be no callback, which is only valid if there is video

*/

NT_UINT32(NT_API *SetRenderVideoFrameTimestampCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, SP_SDKRenderVideoFrameTimestampCallBack call_back);

/*

Set the audio PCM frame callback, which delivers PCM data; each frame is currently 10 ms long

*/

NT_UINT32(NT_API *SetAudioPCMFrameCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, NT_SP_SDKAudioPCMFrameCallBack call_back);

/*

Set user data callback

*/

NT_UINT32(NT_API *SetUserDataCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, NT_SP_SDKUserDataCallBack call_back);

/*

Set video SEI data callback

*/

NT_UINT32(NT_API *SetSEIDataCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, NT_SP_SDKSEIDataCallBack call_back);

/*

Start playing, pass in the URL

Note: this interface is not recommended at present. Please use StartPlay. It is reserved for the convenience of old customers

*/

NT_UINT32(NT_API *Start)(NT_HANDLE handle, NT_PCSTR url,

NT_PVOID call_back_data, SP_SDKStartPlayCallBack call_back);

/*

stop playing

Note: this interface is not recommended at present. Please use StopPlay. It is reserved for the convenience of old customers

*/

NT_UINT32(NT_API *Stop)(NT_HANDLE handle);

/*

*Provide a new set of interfaces++

*/

/*

*Set URL

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetURL)(NT_HANDLE handle, NT_PCSTR url);

/*

*

* Set the decryption key. Currently only used to decrypt encrypted RTMP streams

* key: decryption key

* size: key length

* Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetKey)(NT_HANDLE handle, const NT_BYTE* key, NT_UINT32 size);

/*

*

* Set the decryption initialization vector (IV). Currently only used to decrypt encrypted RTMP streams

* iv: decryption IV

* size: IV length

* Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetDecryptionIV)(NT_HANDLE handle, const NT_BYTE* iv, NT_UINT32 size);

/*

handle: Play handle

hwnd: the window handle that will be used for drawing

is_support: receives 1 if supported, 0 if not

Returns NT_ERC_OK if the call itself succeeds

*/

NT_UINT32(NT_API *IsSupportD3DRender)(NT_HANDLE handle, NT_HWND hwnd, NT_INT32* is_support);

/*

Set the drawing window handle. If a window was passed to Open, this interface does not need to be called

If NULL was passed to Open, use this interface to give the player a drawing window handle

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetRenderWindow)(NT_HANDLE handle, NT_HWND hwnd);

/*

* Set whether the SDK plays sound itself. This is different from the mute interface

* It is mainly for users who set the external PCM callback and play the audio themselves, so that the SDK does not output sound

* is_output_auido_device: 1 allows output to the audio device (default), 0 disallows it. Any other value makes the interface return failure

* Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetIsOutputAudioDevice)(NT_HANDLE handle, NT_INT32 is_output_auido_device);

/*

*Start playing. Note that StartPlay and Start cannot be mixed: use one or the other

* Start and Stop are old interfaces and are not recommended. Please use the new StartPlay and StopPlay interfaces

*/

NT_UINT32(NT_API *StartPlay)(NT_HANDLE handle);

/*

*stop playing

*/

NT_UINT32(NT_API *StopPlay)(NT_HANDLE handle);

/*

* Set whether to record video or not. By default, if the video source has video, it will be recorded. If there is no video, it will not be recorded. However, in some scenes, you may not want to record video, just want to record audio, so add a switch

* is_record_video: 1 Indicates video recording, 0 indicates no video recording, default is 1

*/

NT_UINT32(NT_API *SetRecorderVideo)(NT_HANDLE handle, NT_INT32 is_record_video);

/*

* Set whether to record audio or not. By default, if the video source has audio, it will record. If there is no audio, it will not be recorded. However, in some scenes, you may not want to record audio, just want to record video, so add a switch

* is_record_audio: 1 Indicates recording audio, 0 indicates not recording audio, default is 1

*/

NT_UINT32(NT_API *SetRecorderAudio)(NT_HANDLE handle, NT_INT32 is_record_audio);

/*

Set the local recording directory. The path must use English characters only, otherwise the call fails

*/

NT_UINT32(NT_API *SetRecorderDirectory)(NT_HANDLE handle, NT_PCSTR dir);

/*

Set the maximum size of a single recording file. When a file exceeds this value, recording continues in a new file

size: in KB (1024 bytes). The current range is [5 MB, 800 MB]; out-of-range values are clamped to the range

*/

NT_UINT32(NT_API *SetRecorderFileMaxSize)(NT_HANDLE handle, NT_UINT32 size);

/*

Set recording file name generation rules

*/

NT_UINT32(NT_API *SetRecorderFileNameRuler)(NT_HANDLE handle, NT_SP_RecorderFileNameRuler* ruler);

/*

Set the recording callback interface

*/

NT_UINT32(NT_API *SetRecorderCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, SP_SDKRecorderCallBack call_back);

/*

Switch for transcoding audio to AAC when recording. AAC is more widely supported, so the SDK can transcode other audio codecs (such as Speex, PCMU, PCMA) to AAC

is_transcode: 1 transcodes non-AAC audio to AAC and leaves AAC untouched; 0 does no transcoding. The default is 0

Note: transcoding increases performance consumption

*/

NT_UINT32(NT_API *SetRecorderAudioTranscodeAAC)(NT_HANDLE handle, NT_INT32 is_transcode);

/*

Start recording

*/

NT_UINT32(NT_API *StartRecorder)(NT_HANDLE handle);

/*

Stop recording

*/

NT_UINT32(NT_API *StopRecorder)(NT_HANDLE handle);

/*

* Set the callback for video data when pulling the stream

*/

NT_UINT32(NT_API *SetPullStreamVideoDataCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, SP_SDKPullStreamVideoDataCallBack call_back);

/*

* Set the callback for audio data when pulling the stream

*/

NT_UINT32(NT_API *SetPullStreamAudioDataCallBack)(NT_HANDLE handle,

NT_PVOID call_back_data, SP_SDKPullStreamAudioDataCallBack call_back);

/*

Switch for transcoding audio to AAC when pulling the stream. AAC is more widely supported, so the SDK can transcode other audio codecs (such as Speex, PCMU, PCMA) to AAC

is_transcode: 1 transcodes non-AAC audio to AAC and leaves AAC untouched; 0 does no transcoding. The default is 0

Note: transcoding increases performance consumption

*/

NT_UINT32(NT_API *SetPullStreamAudioTranscodeAAC)(NT_HANDLE handle, NT_INT32 is_transcode);

/*

Start pulling the stream

*/

NT_UINT32(NT_API *StartPullStream)(NT_HANDLE handle);

/*

Stop pulling the stream

*/

NT_UINT32(NT_API *StopPullStream)(NT_HANDLE handle);

/*

*Provide a new set of interfaces--

*/

/*

Must be called whenever the size of the drawing window changes

*/

NT_UINT32(NT_API* OnWindowSize)(NT_HANDLE handle, NT_INT32 cx, NT_INT32 cy);

/*

General-purpose interface for setting parameters; it can solve most problems

*/

NT_UINT32(NT_API *SetParam)(NT_HANDLE handle, NT_UINT32 id, NT_PVOID pData);

/*

General-purpose interface for getting parameters; it can solve most problems

*/

NT_UINT32(NT_API *GetParam)(NT_HANDLE handle, NT_UINT32 id, NT_PVOID pData);

/*

Set buffer, minimum 0ms

*/

NT_UINT32(NT_API *SetBuffer)(NT_HANDLE handle, NT_INT32 buffer);

/*

Mute interface, 1 for mute, 0 for unmute

*/

NT_UINT32(NT_API *SetMute)(NT_HANDLE handle, NT_INT32 is_mute);

/*

Set RTSP TCP mode, 1 is TCP, 0 is UDP, only RTSP is valid

*/

NT_UINT32(NT_API* SetRTSPTcpMode)(NT_HANDLE handle, NT_INT32 isUsingTCP);

/*

Set RTSP timeout, timeout in seconds, must be greater than 0

*/

NT_UINT32 (NT_API* SetRtspTimeout)(NT_HANDLE handle, NT_INT32 timeout);

/*

Some RTSP sources support RTP over UDP, others RTP over TCP

For convenience, automatic switching can be turned on in some scenarios. If playback over UDP fails, the SDK automatically tries TCP; if playback over TCP fails, it automatically tries UDP

is_auto_switch_tcp_udp: 1 lets the SDK try switching between TCP and UDP; 0 disables switching

*/

NT_UINT32 (NT_API* SetRtspAutoSwitchTcpUdp)(NT_HANDLE handle, NT_INT32 is_auto_switch_tcp_udp);

/*

Set fast start-up: 1 enables it, 0 disables it

*/

NT_UINT32(NT_API* SetFastStartup)(NT_HANDLE handle, NT_INT32 isFastStartup);

/*

Set the low latency playback mode; the default is normal playback mode

mode: 1 is low latency mode, 0 is normal mode; other values are invalid

Returns NT_ERC_OK if the call succeeds

*/

NT_UINT32(NT_API* SetLowLatencyMode)(NT_HANDLE handle, NT_INT32 mode);

/*

Check whether H.264 hardware decoding is supported

Returns NT_ERC_OK if supported

*/

NT_UINT32(NT_API *IsSupportH264HardwareDecoder)();

/*

Check whether H.265 hardware decoding is supported

Returns NT_ERC_OK if supported

*/

NT_UINT32(NT_API *IsSupportH265HardwareDecoder)();

/*

*Set H.264 hardware decoding

*is_hardware_decoder: 1 enables hardware decoding, 0 disables it

*reserve: reserved parameter; pass 0 for now

*Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetH264HardwareDecoder)(NT_HANDLE handle, NT_INT32 is_hardware_decoder, NT_INT32 reserve);

/*

*Set H.265 hardware decoding

*is_hardware_decoder: 1 enables hardware decoding, 0 disables it

*reserve: reserved parameter; pass 0 for now

*Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetH265HardwareDecoder)(NT_HANDLE handle, NT_INT32 is_hardware_decoder, NT_INT32 reserve);

/*

*Set whether to decode video key frames only

*is_only_dec_key_frame: 1 decodes key frames only, 0 decodes all frames; the default is 0

*Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetOnlyDecodeVideoKeyFrame)(NT_HANDLE handle, NT_INT32 is_only_dec_key_frame);

/*

*Flip vertically (upside down)

*is_flip: 1 flips, 0 does not flip

*/

NT_UINT32(NT_API *SetFlipVertical)(NT_HANDLE handle, NT_INT32 is_flip);

/*

*Flip horizontally

*is_flip: 1 flips, 0 does not flip

*/

NT_UINT32(NT_API *SetFlipHorizontal)(NT_HANDLE handle, NT_INT32 is_flip);

/*

Set rotation, clockwise

degress: only 0, 90, 180 and 270 degrees are valid; other values are invalid

Note: every angle other than 0 consumes more CPU

Returns NT_ERC_OK if the call succeeds

*/

NT_UINT32(NT_API* SetRotation)(NT_HANDLE handle, NT_INT32 degress);

/*

* When drawing with D3D, draw a logo on the drawing window. Logo drawing is driven by video frames, and an ARGB image must be passed in

* argb_data: ARGB image data; if NULL, the previously set logo is cleared

* argb_stride: Stride of each row of the ARGB image (generally image_width*4)

* image_width: ARGB image width

* image_height: ARGB image height

* left: x coordinate of the left edge of the drawing position

* top: y coordinate of the top edge of the drawing position

* render_width: Width to draw

* render_height: Height to draw

*/

NT_UINT32(NT_API* SetRenderARGBLogo)(NT_HANDLE handle,

const NT_BYTE* argb_data, NT_INT32 argb_stride,

NT_INT32 image_width, NT_INT32 image_height,

NT_INT32 left, NT_INT32 top,

NT_INT32 render_width, NT_INT32 render_height

);

/*

Enable download speed reporting. Download speed is not reported by default

is_report: Report switch. 1: report, 0: do not report. Other values are invalid

report_interval: Reporting interval in seconds; the minimum is 1. If reporting is enabled and the value is less than 1, the call fails

Note: if reporting is enabled, also call SetEventCallBack and handle the event in the callback function

The reported event is NT_SP_E_EVENT_ID_DOWNLOAD_SPEED

This interface must be called before StartXXX

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetReportDownloadSpeed)(NT_HANDLE handle,

NT_INT32 is_report, NT_INT32 report_interval);

/*

Query the download speed actively

speed: receives the download speed in bytes per second (Byte/s)

Note: this interface must be called after StartXXX, otherwise it fails

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *GetDownloadSpeed)(NT_HANDLE handle, NT_INT32* speed);

/*

Get the video duration

For live streams there is no duration, and the result of the call is undefined

For on-demand streams, NT_ERC_OK is returned on success, and NT_ERC_SP_NEED_RETRY is returned if the SDK is still parsing

*/

NT_UINT32(NT_API *GetDuration)(NT_HANDLE handle, NT_INT64* duration);

/*

Get the current playback timestamp in milliseconds (ms)

Note: this is the timestamp of the video source. It is supported for on-demand streams only, not for live streams

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *GetPlaybackPos)(NT_HANDLE handle, NT_INT64* pos);

/*

Get the current pull stream timestamp in milliseconds (ms)

Note: this is the timestamp of the video source. It is supported for on-demand streams only, not for live streams

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *GetPullStreamPos)(NT_HANDLE handle, NT_INT64* pos);

/*

Set the playback position in milliseconds (ms)

Note: not supported for live streams; this interface is for on-demand playback

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SetPos)(NT_HANDLE handle, NT_INT64 pos);

/*

Pause playback

isPause: 1 pauses, 0 resumes playback; other values are errors

Note: live streams have no concept of pausing, so this interface is not supported for live streams; it is for on-demand playback

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *Pause)(NT_HANDLE handle, NT_INT32 isPause);

/*

Switch URL

url: the URL to switch to

switch_pos: Playback position to set after switching to the new URL; pass 0 by default. It is only valid for on-demand URLs that support setting a playback position and is ignored for live URLs

reserve: Reserved parameter

Note: 1. If the new URL is the same as the one being played, the SDK does nothing

Precondition: any one of StartPlay, StartRecorder or StartPullStream has been called successfully

Returns NT_ERC_OK on success

*/

NT_UINT32(NT_API *SwitchURL)(NT_HANDLE handle, NT_PCSTR url, NT_INT64 switch_pos, NT_INT32 reserve);

/*

Capture a snapshot

file_name_utf8: File name, UTF-8 encoded

call_back_data: User-defined data passed to the callback

call_back: Callback function that notifies the user whether the snapshot succeeded or failed

Returns NT_ERC_OK on success

The call can succeed only during playback; in other cases it returns an error

Generating a PNG file takes hundreds of milliseconds. To keep CPU usage down, the SDK limits the number of pending snapshot requests. When the limit is exceeded,

calling this interface returns NT_ERC_SP_TOO_MANY_CAPTURE_IMAGE_REQUESTS. In that case, wait a while for the SDK to process some requests, then try again

*/

NT_UINT32(NT_API* CaptureImage)(NT_HANDLE handle, NT_PCSTR file_name_utf8,

NT_PVOID call_back_data, SP_SDKCaptureImageCallBack call_back);

/*

* Draw RGB32 data with GDI

* 32-bit RGB format: R, G and B take 8 bits each and the remaining byte is reserved. The byte order in memory is bb gg rr xx, matching Windows bitmaps. On little-endian systems, read as a DWORD, the highest byte is xx, followed by rr, gg, bb

* To be compatible with Windows bitmaps, image_stride must be image_width*4

* handle: Player handle

* hdc: Device context (DC) to draw on

* x_dst: x coordinate of the upper-left corner of the drawing area

* y_dst: y coordinate of the upper-left corner of the drawing area

* dst_width: Width to draw

* dst_height: Height to draw

* x_src: x position in the source image

* y_src: y position in the source image

* rgb32_data: RGB32 data; see the format description above

* rgb32_data_size: Data size

* image_width: Actual image width

* image_height: Actual image height

* image_stride: Image stride

*/

NT_UINT32(NT_API *GDIDrawRGB32)(NT_HANDLE handle, NT_HDC hdc,

NT_INT32 x_dst, NT_INT32 y_dst,

NT_INT32 dst_width, NT_INT32 dst_height,

NT_INT32 x_src, NT_INT32 y_src,

NT_INT32 src_width, NT_INT32 src_height,

const NT_BYTE* rgb32_data, NT_UINT32 rgb32_data_size,

NT_INT32 image_width, NT_INT32 image_height,

NT_INT32 image_stride);

/*

* Draw ARGB data with GDI

* The byte order in memory is bb gg rr alpha, matching Windows bitmaps. On little-endian systems, read as a DWORD, the highest byte is alpha, followed by rr, gg, bb

* To be compatible with Windows bitmaps, image_stride must be image_width*4

* hdc: Device context (DC) to draw on

* x_dst: x coordinate of the upper-left corner of the drawing area

* y_dst: y coordinate of the upper-left corner of the drawing area

* dst_width: Width to draw

* dst_height: Height to draw

* x_src: x position in the source image

* y_src: y position in the source image

* argb_data: ARGB image data; see the format description above

* image_stride: Stride of each image row

* image_width: Actual image width

* image_height: Actual image height

*/

NT_UINT32(NT_API *GDIDrawARGB)(NT_HDC hdc,

NT_INT32 x_dst, NT_INT32 y_dst,

NT_INT32 dst_width, NT_INT32 dst_height,

NT_INT32 x_src, NT_INT32 y_src,

NT_INT32 src_width, NT_INT32 src_height,

const NT_BYTE* argb_data, NT_INT32 image_stride,

NT_INT32 image_width, NT_INT32 image_height);

} SmartPlayerSDKAPI;

NT_UINT32 NT_API GetSmartPlayerSDKAPI(SmartPlayerSDKAPI* pAPI);

/*

reserve1: Please pass 0

reserve2: Please pass NULL

Returns NT_ERC_OK on success

*/

NT_UINT32 NT_API NT_SP_SetSDKClientKey(NT_PCSTR cid, NT_PCSTR key, NT_INT32 reserve1, NT_PVOID reserve2);

#ifdef __cplusplus

}

#endif

smart_player_define.h

#ifndef SMART_PLAYER_DEFINE_H_

#define SMART_PLAYER_DEFINE_H_

#ifdef WIN32

#include <windows.h>

#endif

#ifdef SMART_HAS_COMMON_DIC

#include "../../topcommon/nt_type_define.h"

#include "../../topcommon/nt_base_code_define.h"

#else

#include "nt_type_define.h"

#include "nt_base_code_define.h"

#endif

#ifdef __cplusplus

extern "C"{

#endif

#ifndef NT_HWND_

#define NT_HWND_

#ifdef WIN32

typedef HWND NT_HWND;

#else

typedef void* NT_HWND;

#endif

#endif

#ifndef NT_HDC_

#define NT_HDC_

#ifdef _WIN32

typedef HDC NT_HDC;

#else

typedef void* NT_HDC;

#endif

#endif

/*Error code*/

typedef enum _SP_E_ERROR_CODE

{

NT_ERC_SP_HWND_IS_NULL = (NT_ERC_SMART_PLAYER_SDK | 0x1), // Window handle is empty

NT_ERC_SP_HWND_INVALID = (NT_ERC_SMART_PLAYER_SDK | 0x2), // Invalid window handle

NT_ERC_SP_TOO_MANY_CAPTURE_IMAGE_REQUESTS = (NT_ERC_SMART_PLAYER_SDK | 0x3), // Too many screenshot requests

NT_ERC_SP_WINDOW_REGION_INVALID = (NT_ERC_SMART_PLAYER_SDK | 0x4), // Invalid window area, window width or height may be less than 1

NT_ERC_SP_DIR_NOT_EXIST = (NT_ERC_SMART_PLAYER_SDK | 0x5), // directory does not exist

NT_ERC_SP_NEED_RETRY = (NT_ERC_SMART_PLAYER_SDK | 0x6), // Need retry

} SP_E_ERROR_CODE;

/*Parameter IDs. Keep this form for now; the SmartPlayer SDK has been assigned its own ID range*/

typedef enum _SP_E_PARAM_ID

{

SP_PARAM_ID_BASE = NT_PARAM_ID_SMART_PLAYER_SDK,

} SP_E_PARAM_ID;

/*Event ID*/

typedef enum _NT_SP_E_EVENT_ID

{

NT_SP_E_EVENT_ID_BASE = NT_EVENT_ID_SMART_PLAYER_SDK,

NT_SP_E_EVENT_ID_CONNECTING = NT_SP_E_EVENT_ID_BASE | 0x2, /*Connecting*/

NT_SP_E_EVENT_ID_CONNECTION_FAILED = NT_SP_E_EVENT_ID_BASE | 0x3, /*connection failed*/

NT_SP_E_EVENT_ID_CONNECTED = NT_SP_E_EVENT_ID_BASE | 0x4, /*Connected*/

NT_SP_E_EVENT_ID_DISCONNECTED = NT_SP_E_EVENT_ID_BASE | 0x5, /*Disconnect*/

NT_SP_E_EVENT_ID_NO_MEDIADATA_RECEIVED = NT_SP_E_EVENT_ID_BASE | 0x8, /*RTMP data not received*/

NT_SP_E_EVENT_ID_RTSP_STATUS_CODE = NT_SP_E_EVENT_ID_BASE | 0xB, /*rtsp status code Report. At present, only 401 is reported. Param1 indicates status code*/

NT_SP_E_EVENT_ID_NEED_KEY = NT_SP_E_EVENT_ID_BASE | 0xC, /*You need to enter the decryption key to play*/

NT_SP_E_EVENT_ID_KEY_ERROR = NT_SP_E_EVENT_ID_BASE | 0xD, /*Incorrect decryption key*/

/* Next, please start from 0x81*/

NT_SP_E_EVENT_ID_START_BUFFERING = NT_SP_E_EVENT_ID_BASE | 0x81, /*Start buffer*/

NT_SP_E_EVENT_ID_BUFFERING = NT_SP_E_EVENT_ID_BASE | 0x82, /*In buffer, param1 represents percentage progress*/

NT_SP_E_EVENT_ID_STOP_BUFFERING = NT_SP_E_EVENT_ID_BASE | 0x83, /*Stop buffer*/

NT_SP_E_EVENT_ID_DOWNLOAD_SPEED = NT_SP_E_EVENT_ID_BASE | 0x91, /*Download speed, param1 means download speed, unit is (Byte/s)*/

NT_SP_E_EVENT_ID_PLAYBACK_REACH_EOS = NT_SP_E_EVENT_ID_BASE | 0xa1, /*When the broadcast is over, the live stream does not have this event, so the on-demand stream does*/

NT_SP_E_EVENT_ID_RECORDER_REACH_EOS = NT_SP_E_EVENT_ID_BASE | 0xa2, /*The video ends. The live stream doesn't have this event. The on-demand stream does*/

NT_SP_E_EVENT_ID_PULLSTREAM_REACH_EOS = NT_SP_E_EVENT_ID_BASE | 0xa3, /*The pull stream is over. The live stream does not have this event, so the on-demand stream does*/

NT_SP_E_EVENT_ID_DURATION = NT_SP_E_EVENT_ID_BASE | 0xa8, /*Video duration: if it is live, it will not be reported. If it is on-demand, it will be reported if the video duration can be obtained from the video source. param1 represents the video duration, in milliseconds (ms)*/

} NT_SP_E_EVENT_ID;

//Define video frame image format

typedef enum _NT_SP_E_VIDEO_FRAME_FORMAT

{

NT_SP_E_VIDEO_FRAME_FORMAT_RGB32 = 1, // 32-bit RGB: R, G and B take 8 bits each and the remaining byte is reserved. Byte order in memory is bb gg rr xx, matching Windows bitmaps. Read as a little-endian DWORD, the most significant byte is xx, followed by rr, gg, bb

NT_SP_E_VIDEO_FRAME_FORMAT_ARGB = 2, // 32-bit ARGB; byte order in memory is bb gg rr aa, matching Windows bitmaps

NT_SP_E_VIDEO_FRAME_FROMAT_I420 = 3, // YUV420: the three components are stored in three separate planes

} NT_SP_E_VIDEO_FRAME_FORMAT;
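
The RGB32 byte order described above (bb gg rr xx in memory, 0xXXRRGGBB when read as a little-endian DWORD) can be checked with a small sketch. The `demo_` helper names are hypothetical and are not part of the SDK; the code only illustrates the layout stated in the comment.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Packs R, G, B into the DWORD value 0x00RRGGBB (reserved byte set to 0). */
static uint32_t demo_rgb32_pack(uint8_t r, uint8_t g, uint8_t b)
{
    return ((uint32_t)r << 16) | ((uint32_t)g << 8) | (uint32_t)b;
}

/* Writes one pixel in memory order bb gg rr xx. */
static void demo_rgb32_store(uint8_t *px, uint8_t r, uint8_t g, uint8_t b)
{
    assert(px != NULL);
    px[0] = b;
    px[1] = g;
    px[2] = r;
    px[3] = 0; /* reserved byte */
}

/* Reads four bytes as a little-endian DWORD, independent of host byte order. */
static uint32_t demo_load_le32(const uint8_t *px)
{
    assert(px != NULL);
    return (uint32_t)px[0] | ((uint32_t)px[1] << 8) |
           ((uint32_t)px[2] << 16) | ((uint32_t)px[3] << 24);
}
```

Storing a pixel byte by byte and reading it back through `demo_load_le32` yields the same value as `demo_rgb32_pack`, which is the relationship the RGB32 comment describes.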

// Define the video frame structure

typedef struct _NT_SP_VideoFrame

{

NT_INT32 format_; // Image format, see NT_SP_E_VIDEO_FRAME_FORMAT

NT_INT32 width_; // Image width

NT_INT32 height_; // Image height

NT_UINT64 timestamp_; // Timestamp in ms; generally 0 (unused)

// Image data: ARGB and RGB32 use only plane0_; I420 uses the first three planes

NT_UINT8* plane0_;

NT_UINT8* plane1_;

NT_UINT8* plane2_;

NT_UINT8* plane3_;

// For ARGB and RGB32, to stay compatible with Windows bitmaps, the stride (bytes per row) must be width_ * 4

// For I420, stride0_ is the Y stride, stride1_ is the U stride, and stride2_ is the V stride

NT_INT32 stride0_;

NT_INT32 stride1_;

NT_INT32 stride2_;

NT_INT32 stride3_;

} NT_SP_VideoFrame;
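
Because each plane carries its own stride, an I420 frame should be copied row by row rather than with a single `memcpy`. The sketch below packs a strided frame into a contiguous buffer. `DemoI420Frame` is a hypothetical stand-in that mirrors only the fields used, and the chroma size of ((width + 1) / 2) by ((height + 1) / 2) is the usual I420 convention, assumed here rather than taken from the SDK.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for the NT_SP_VideoFrame fields used below. */
typedef struct {
    int32_t width, height;
    const uint8_t *plane0, *plane1, *plane2; /* Y, U, V */
    int32_t stride0, stride1, stride2;       /* bytes per row of each plane */
} DemoI420Frame;

/* Bytes needed for a tightly packed I420 image. */
static size_t demo_i420_packed_size(int32_t width, int32_t height)
{
    size_t cw = ((size_t)width + 1) / 2, ch = ((size_t)height + 1) / 2;
    return (size_t)width * (size_t)height + 2 * cw * ch;
}

/* Copies `rows` rows of `row_bytes` bytes, skipping the stride padding. */
static void demo_copy_plane(uint8_t *dst, const uint8_t *src, int32_t stride,
                            size_t row_bytes, size_t rows)
{
    for (size_t r = 0; r < rows; ++r)
        memcpy(dst + r * row_bytes, src + r * (size_t)stride, row_bytes);
}

/* Packs the frame into dst as Y, then U, then V.
 * Returns the bytes written, or 0 if dst is too small. */
static size_t demo_pack_i420(const DemoI420Frame *f, uint8_t *dst, size_t dst_size)
{
    assert(f != NULL && dst != NULL && f->width > 0 && f->height > 0);
    size_t need = demo_i420_packed_size(f->width, f->height);
    if (dst_size < need)
        return 0;

    size_t w = (size_t)f->width, h = (size_t)f->height;
    size_t cw = (w + 1) / 2, ch = (h + 1) / 2;
    demo_copy_plane(dst, f->plane0, f->stride0, w, h);
    demo_copy_plane(dst + w * h, f->plane1, f->stride1, cw, ch);
    demo_copy_plane(dst + w * h + cw * ch, f->plane2, f->stride2, cw, ch);
    return need;
}
```

A video frame callback would typically fill such a structure from `plane0_` to `plane2_` and `stride0_` to `stride2_`, and copy the data before returning, on the assumption that the frame memory is not guaranteed to outlive the callback.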

// If file_name_prefix_, append_date_ and append_time_ are all 0 (NULL), recording cannot be started

typedef struct _NT_SP_RecorderFileNameRuler

{

NT_UINT32 type_; // Currently 0 by default; reserved for future extension

NT_PCSTR file_name_prefix_; // Recording file name prefix, for example: daniulive

NT_INT32 append_date_; // If 1, the date is appended to the file name, for example: daniulive-2017-01-17

NT_INT32 append_time_; // If 1, the time is appended to the file name, for example: daniulive-2017-01-17-17-10-36

} NT_SP_RecorderFileNameRuler;
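
To make the naming rule concrete, here is a minimal sketch that builds a base file name from a prefix, an optional date and an optional time, following the examples in the comments above. It is not the SDK's implementation: `DemoFileNameRule` and `demo_build_record_name` are hypothetical names, the file extension is left out, and the behavior when only the time flag is set is an assumption.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <time.h>

/* Hypothetical stand-in for the three naming fields of the ruler struct. */
typedef struct {
    const char *file_name_prefix;
    int append_date;
    int append_time;
} DemoFileNameRule;

/* Writes the base name (no extension) into out.
 * Returns the length written, or -1 if out is too small. */
static int demo_build_record_name(const DemoFileNameRule *rule, const struct tm *t,
                                  char *out, size_t out_size)
{
    assert(rule != NULL && t != NULL && out != NULL && out_size > 0);

    int n = snprintf(out, out_size, "%s",
                     rule->file_name_prefix ? rule->file_name_prefix : "");
    if (n < 0 || (size_t)n >= out_size)
        return -1;

    if (rule->append_date) {
        int m = snprintf(out + n, out_size - (size_t)n, "-%04d-%02d-%02d",
                         t->tm_year + 1900, t->tm_mon + 1, t->tm_mday);
        if (m < 0 || (size_t)m >= out_size - (size_t)n)
            return -1;
        n += m;
    }
    if (rule->append_time) {
        int m = snprintf(out + n, out_size - (size_t)n, "-%02d-%02d-%02d",
                         t->tm_hour, t->tm_min, t->tm_sec);
        if (m < 0 || (size_t)m >= out_size - (size_t)n)
            return -1;
        n += m;
    }
    return n;
}
```

With the prefix `daniulive`, both flags set to 1 and a timestamp of 2017-01-17 17:10:36, the function produces `daniulive-2017-01-17-17-10-36`, matching the example in the header comment.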

/*

* Information that accompanies video data delivered while pulling the stream

*/

typedef struct _NT_SP_PullStreamVideoDataInfo

{

NT_INT32 is_key_frame_; /* 1: key frame, 0: non-key frame */

NT_UINT64 timestamp_; /* Decode timestamp, in milliseconds */

NT_INT32 width_; /* Generally 0 */

NT_INT32 height_; /* Generally 0 */

NT_BYTE* parameter_info_; /* Generally NULL */

NT_UINT32 parameter_info_size_; /* Generally 0 */

NT_UINT64 presentation_timestamp_; /* Presentation timestamp; should be greater than or equal to timestamp_. In milliseconds */

} NT_SP_PullStreamVideoDataInfo;

/*

* Information that accompanies audio data delivered while pulling the stream

*/

typedef struct _NT_SP_PullStreamAuidoDataInfo

{

NT_INT32 is_key_frame_; /* 1: key frame, 0: non-key frame */

NT_UINT64 timestamp_; /* In milliseconds */

NT_INT32 sample_rate_; /* Generally 0 */

NT_INT32 channel_; /* Generally 0 */

NT_BYTE* parameter_info_; /* Set for AAC; generally ignored for other codecs */

NT_UINT32 parameter_info_size_; /* Set for AAC; generally ignored for other codecs */

NT_UINT64 reserve_; /* Reserved */

} NT_SP_PullStreamAuidoDataInfo;

/*

Called when the player obtains the video size

*/

typedef NT_VOID(NT_CALLBACK *SP_SDKVideoSizeCallBack)(NT_HANDLE handle, NT_PVOID user_data,

NT_INT32 width, NT_INT32 height);

/*

Callback passed in when calling Start

*/

typedef NT_VOID(NT_CALLBACK *SP_SDKStartPlayCallBack)(NT_HANDLE handle, NT_PVOID user_data, NT_UINT32 result);

/*

Video image callback

status: Currently unused; defaults to 0. May be used in the future

*/

typedef NT_VOID(NT_CALLBACK* SP_SDKVideoFrameCallBack)(NT_HANDLE handle, NT_PVOID user_data, NT_UINT32 status,

const NT_SP_VideoFrame* frame);

/*

Audio PCM data callback; the frame length is currently 10 ms

status: Currently unused; defaults to 0. May be used in the future

data: PCM data

size: Data size

sample_rate: Sampling rate

channel: Number of channels

per_channel_sample_number: Number of samples per channel

*/

typedef NT_VOID(NT_CALLBACK* NT_SP_SDKAudioPCMFrameCallBack)(NT_HANDLE handle, NT_PVOID user_data, NT_UINT32 status,

NT_BYTE* data, NT_UINT32 size,

NT_INT32 sample_rate, NT_INT32 channel, NT_INT32 per_channel_sample_number);
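
The 10 ms frame length ties the callback parameters together: a frame carries sample_rate / 100 samples per channel, and the buffer size follows from the channel count and sample width. The sketch below shows that arithmetic. The `demo_` helpers are hypothetical, and the sample width is left as a caller-supplied assumption because the header does not state the PCM sample format.

```c
#include <assert.h>
#include <stdint.h>

/* Samples per channel in one 10 ms frame at the given sampling rate. */
static int32_t demo_samples_per_10ms(int32_t sample_rate)
{
    assert(sample_rate > 0);
    return sample_rate / 100;
}

/* Expected buffer size in bytes for one PCM frame.
 * bytes_per_sample is an assumption supplied by the caller (2 for 16-bit PCM). */
static uint32_t demo_pcm_frame_bytes(int32_t channel, int32_t per_channel_sample_number,
                                     int32_t bytes_per_sample)
{
    assert(channel > 0 && per_channel_sample_number > 0 && bytes_per_sample > 0);
    return (uint32_t)channel * (uint32_t)per_channel_sample_number *
           (uint32_t)bytes_per_sample;
}

/* Duration of a frame in milliseconds, derived from its sample count. */
static int32_t demo_frame_duration_ms(int32_t sample_rate, int32_t per_channel_sample_number)
{
    assert(sample_rate > 0 && per_channel_sample_number >= 0);
    return (int32_t)((int64_t)per_channel_sample_number * 1000 / sample_rate);
}
```

For example, a 48000 Hz stereo stream with 16-bit samples would give 480 samples per channel and 1920 bytes per callback. Comparing that figure with the `size` argument is a cheap sanity check on the assumed sample width.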

/*

Screenshot callback

result: NT_ERC_OK if the screenshot succeeded; otherwise an error code

*/

typedef NT_VOID(NT_CALLBACK* SP_SDKCaptureImageCallBack)(NT_HANDLE handle, NT_PVOID user_data, NT_UINT32 result,

NT_PCSTR file_name);

/*

Callback that reports the timestamp of each video frame as it is rendered. It is used in some special scenarios; users without such needs can ignore it

timestamp: In milliseconds

reserve1: Reserved

reserve2: Reserved

*/

typedef NT_VOID(NT_CALLBACK* SP_SDKRenderVideoFrameTimestampCallBack)(NT_HANDLE handle, NT_PVOID user_data, NT_UINT64 timestamp,

NT_UINT64 reserve1, NT_PVOID reserve2);

/*

Recording callback

status: 1: a new recording file has been started. 2: a recording file has been completed

file_name: Actual recording file name

*/

typedef NT_VOID(NT_CALLBACK* SP_SDKRecorderCallBack)(NT_HANDLE handle, NT_PVOID user_data, NT_UINT32 status,

NT_PCSTR file_name);

/*

* Video data callback while pulling the stream

video_codec_id: See NT_MEDIA_CODEC_ID

data: Video data

size: Video data size

info: Information about the video data

reserve: Reserved

*/

typedef NT_VOID(NT_CALLBACK* SP_SDKPullStreamVideoDataCallBack)(NT_HANDLE handle, NT_PVOID user_data,

NT_UINT32 video_codec_id, NT_BYTE* data, NT_UINT32 size,

NT_SP_PullStreamVideoDataInfo* info,

NT_PVOID reserve);

/*

* Audio data callback while pulling the stream

auido_codec_id: See NT_MEDIA_CODEC_ID

data: Audio data

size: Audio data size

info: Information about the audio data

reserve: Reserved

*/

typedef NT_VOID(NT_CALLBACK* SP_SDKPullStreamAudioDataCallBack)(NT_HANDLE handle, NT_PVOID user_data,

NT_UINT32 auido_codec_id, NT_BYTE* data, NT_UINT32 size,

NT_SP_PullStreamAuidoDataInfo* info,

NT_PVOID reserve);

/*

* Player event callback

event_id: Event ID, see NT_SP_E_EVENT_ID

param1 to param6: The meaning of each value depends on the specific event ID. If an event ID does not document param1 to param6, the event carries no parameters

*/

typedef NT_VOID(NT_CALLBACK* NT_SP_SDKEventCallBack)(NT_HANDLE handle, NT_PVOID user_data,

NT_UINT32 event_id,

NT_INT64 param1,

NT_INT64 param2,

NT_UINT64 param3,

NT_PCSTR param4,

NT_PCSTR param5,

NT_PVOID param6

);
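
Inside an event callback, the event ID and `param1` can be turned into log text with a simple switch. The sketch below covers a few of the events documented for NT_SP_E_EVENT_ID. The base value `DEMO_EVENT_ID_BASE` is a hypothetical placeholder, since the real `NT_EVENT_ID_SMART_PLAYER_SDK` is defined in `nt_base_code_define.h` and is not shown here. The `DEMO_` names are likewise stand-ins for illustration only.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical placeholder; the real base comes from nt_base_code_define.h. */
#define DEMO_EVENT_ID_BASE 0x01000000u

enum {
    DEMO_EVENT_CONNECTING        = DEMO_EVENT_ID_BASE | 0x2,
    DEMO_EVENT_CONNECTION_FAILED = DEMO_EVENT_ID_BASE | 0x3,
    DEMO_EVENT_CONNECTED         = DEMO_EVENT_ID_BASE | 0x4,
    DEMO_EVENT_DISCONNECTED      = DEMO_EVENT_ID_BASE | 0x5,
    DEMO_EVENT_BUFFERING         = DEMO_EVENT_ID_BASE | 0x82,
    DEMO_EVENT_DOWNLOAD_SPEED    = DEMO_EVENT_ID_BASE | 0x91
};

/* Formats a short description of the event into out and returns out. */
static const char *demo_describe_event(uint32_t event_id, int64_t param1,
                                       char *out, size_t out_size)
{
    assert(out != NULL && out_size > 0);
    switch (event_id) {
    case DEMO_EVENT_CONNECTING:
        snprintf(out, out_size, "connecting");
        break;
    case DEMO_EVENT_CONNECTION_FAILED:
        snprintf(out, out_size, "connection failed");
        break;
    case DEMO_EVENT_CONNECTED:
        snprintf(out, out_size, "connected");
        break;
    case DEMO_EVENT_DISCONNECTED:
        snprintf(out, out_size, "disconnected");
        break;
    case DEMO_EVENT_BUFFERING: /* param1: progress percentage */
        snprintf(out, out_size, "buffering %lld%%", (long long)param1);
        break;
    case DEMO_EVENT_DOWNLOAD_SPEED: /* param1: bytes per second */
        snprintf(out, out_size, "download speed %lld KB/s", (long long)(param1 / 1024));
        break;
    default:
        snprintf(out, out_size, "unhandled event 0x%x", (unsigned)event_id);
        break;
    }
    return out;
}
```

A real callback would receive these values from the SDK's own thread, so a reasonable design is to format or queue the event there and update the UI from the application's main thread.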

/*

*

* User data callback; the data is currently sent by the push (publishing) side

* data_type: Data type. 1: binary bytes. 2: UTF-8 string

* data: The actual data. If data_type is 1, the data type is const NT_BYTE*; if data_type is 2, the data type is const NT_CHAR*

* size: Data size

* timestamp: Video timestamp

* reserve1: Reserved

* reserve2: Reserved

* reserve3: Reserved

*/

typedef NT_VOID(NT_CALLBACK* NT_SP_SDKUserDataCallBack)(NT_HANDLE handle, NT_PVOID user_data,

NT_INT32 data_type,

NT_PVOID data,

NT_UINT32 size,

NT_UINT64 timestamp,

NT_UINT64 reserve1,

NT_INT64 reserve2,

NT_PVOID reserve3

);
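
Since `data` must be interpreted according to `data_type`, a user data handler usually branches on it first. The following sketch renders either kind of payload as printable text. The `demo_` names are hypothetical, and treating `size` as the byte count of the string without relying on a terminating NUL is an assumption made for safety.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

enum { DEMO_USER_DATA_BINARY = 1, DEMO_USER_DATA_UTF8 = 2 };

/* Renders a user-data payload as text in out, truncating to fit.
 * Returns 0 on success, -1 for an unknown data_type. */
static int demo_format_user_data(int32_t data_type, const void *data, uint32_t size,
                                 char *out, size_t out_size)
{
    assert(out != NULL && out_size > 0);
    assert(data != NULL || size == 0);
    out[0] = '\0';

    if (data_type == DEMO_USER_DATA_UTF8) {
        /* Assumption: copy at most `size` bytes and terminate ourselves. */
        size_t n = size < out_size - 1 ? size : out_size - 1;
        if (n > 0)
            memcpy(out, data, n);
        out[n] = '\0';
        return 0;
    }
    if (data_type == DEMO_USER_DATA_BINARY) {
        const uint8_t *bytes = (const uint8_t *)data;
        size_t pos = 0;
        /* Two hex digits per byte; stop when the next pair would not fit. */
        for (uint32_t i = 0; i < size && pos + 3 <= out_size; ++i, pos += 2)
            snprintf(out + pos, 3, "%02x", (unsigned)bytes[i]);
        return 0;
    }
    return -1;
}
```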

/*

*

* Video SEI data callback

* data: SEI data

* size: SEI data size

* timestamp: Video timestamp

* reserve1: Reserved

* reserve2: Reserved

* reserve3: Reserved

* Note: some videos have been found to carry several SEI NAL units. To make handling easier, all parsed SEI NAL units are delivered, separated by 00 00 01 so that they are easy to parse

* The delivered SEI data currently begins with the prefix 00 00 01

*/

typedef NT_VOID(NT_CALLBACK* NT_SP_SDKSEIDataCallBack)(NT_HANDLE handle, NT_PVOID user_data,

NT_BYTE* data,

NT_UINT32 size,

NT_UINT64 timestamp,

NT_UINT64 reserve1,

NT_INT64 reserve2,

NT_PVOID reserve3

);
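
Given that the SEI buffer contains one or more NAL units, each introduced by 00 00 01, a handler can walk the buffer by scanning for that start code. The sketch below does this with hypothetical `demo_` helpers. It assumes 3-byte start codes only, as the note above describes, and it does not remove emulation-prevention bytes or parse the SEI payload itself.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Returns the offset of the next 00 00 01 at or after `from`, or `size` if none. */
static size_t demo_find_start_code(const uint8_t *data, size_t size, size_t from)
{
    assert(data != NULL || size == 0);
    for (size_t i = from; i + 3 <= size; ++i)
        if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1)
            return i;
    return size;
}

typedef void (*DemoSeiVisitor)(const uint8_t *nal, size_t nal_size, void *ctx);

/* Calls visit (if not NULL) once per non-empty NAL unit, start code stripped.
 * Returns the number of NAL units found. */
static size_t demo_for_each_sei(const uint8_t *data, size_t size,
                                DemoSeiVisitor visit, void *ctx)
{
    size_t count = 0;
    size_t pos = demo_find_start_code(data, size, 0);
    while (pos < size) {
        size_t begin = pos + 3; /* skip the start code */
        size_t end = demo_find_start_code(data, size, begin);
        if (end > begin) {
            if (visit != NULL)
                visit(data + begin, end - begin, ctx);
            ++count;
        }
        pos = end;
    }
    return count;
}

/* Example visitor: adds up the sizes of all NAL units into *(size_t *)ctx. */
static void demo_sum_sizes(const uint8_t *nal, size_t nal_size, void *ctx)
{
    (void)nal;
    *(size_t *)ctx += nal_size;
}
```

Passing a visitor keeps the splitting logic separate from whatever the application does with each SEI unit, such as decoding a custom user-data payload.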

#ifdef __cplusplus

}

#endif

#endif

Summary

In general, whether you build on an open source player or develop one entirely in-house, designing a good RTMP or RTSP player means giving more thought to flexibility and stability. A handful of simple interfaces is rarely enough to meet common product requirements.

A closing word of encouragement: we climb not to enjoy the scenery, but to look for higher mountains!