Pod communication and service discovery

In a world without Kubernetes (k8s), a system administrator configures a client application by entering the exact IP address or host name of the server in the client's configuration file. In k8s this is unnecessary. Applications run in pods, and pods are created, destroyed and rescheduled to keep the application highly available, so their IP addresses cannot be relied on. This section summarizes how pods and services work together.

1 Pod features

- Pods are ephemeral: they may be started or stopped at any time.

- A client cannot know a pod's IP address in advance, because the address is assigned only after the pod has been scheduled to a node.

- With horizontal scaling, multiple pods provide the same service. Each pod has its own IP address, but the client only needs the service and should not need to know the addresses of individual pods.

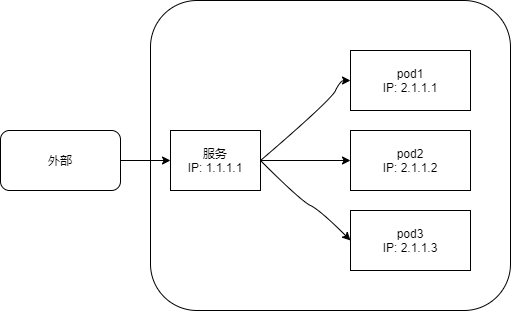

2 Services

To meet these needs, k8s provides the Service: a single, constant access point for a group of pods that provide the same function. A service's IP address and port do not change while the service exists. Clients connect to the service instead of connecting to pods directly, and the service routes each connection to one of the pods, so removing a pod or creating a new one does not affect clients.

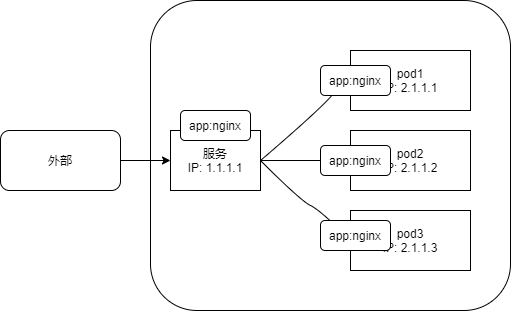

3 Matching pods to a service

This raises a basic question: how does a service know which pods with a specific function belong to it? The answer is a label selector. The service lists labels in its selector, and every pod that carries those labels becomes one of the service's backends.
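A minimal Python sketch of how a selector picks pods, assuming equality-based matching as used by the `selector` field of the Service manifests in this section: a pod belongs to the service when its labels contain every key/value pair of the selector (extra labels on the pod do not matter). The pod `nginx-7d9f8` is hypothetical, and treating an empty selector as "matches nothing" is a modeling choice for services without a selector.

```python
from typing import Dict, List


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based matching: every key/value pair in the selector must be present in the labels."""
    if not selector:
        # Modeling choice: a Service without a selector gets no automatically
        # managed endpoints, so an empty selector matches nothing here.
        return False
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Dict[str, str], pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the sorted names of the pods whose labels satisfy the selector."""
    return sorted(name for name, labels in pods.items() if selector_matches(selector, labels))


# Hypothetical cluster state: the two kubia pods plus an unrelated pod.
pods = {
    "kubia-66tvd": {"app": "kubia"},
    "kubia-v8dvv": {"app": "kubia"},
    "nginx-7d9f8": {"app": "nginx"},
}
print(select_pods({"app": "kubia"}, pods))  # ['kubia-66tvd', 'kubia-v8dvv']
```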

4 Creating a service

- Before creating the service, create the pods it will connect to, using a ReplicationController (rc):

```shell
cat > kubia-rc.yaml << EOF
apiVersion: v1
kind: ReplicationController
metadata:
  name: kubia
spec:
  replicas: 2
  selector:
    app: kubia
  template:
    metadata:
      labels:
        app: kubia
    spec:
      containers:
      - name: kubia
        image: luksa/kubia
        ports:
        - containerPort: 8080
EOF

# create
kubectl create -f kubia-rc.yaml

# see
[root@nginx test]# kubectl get po
NAME          READY   STATUS    RESTARTS   AGE
kubia-66tvd   1/1     Running   0          2m31s
kubia-v8dvv   1/1     Running   0          2m31s
```

- Create the service:

```shell
cat > kubia-svc.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: kubia
spec:
  ports:
  - port: 80          # port the service listens on
    targetPort: 8080  # container port the traffic is forwarded to
  selector:
    app: kubia        # pods with this label belong to the service
EOF

# create
kubectl create -f kubia-svc.yaml

# see
[root@nginx test]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.43.0.1      <none>        443/TCP   16d
kubia        ClusterIP   10.43.165.39   <none>        80/TCP    46s
```

5 Why can't the service IP be pinged?

curl to the service works, but ping to the same IP fails:

```shell
[root@k8s-node01 ~]# curl 10.43.165.39
You've hit kubia-66tvd
[root@k8s-node01 ~]# ping 10.43.165.39
PING 10.43.165.39 (10.43.165.39) 56(84) bytes of data.
^C
--- 10.43.165.39 ping statistics ---
2 packets transmitted, 0 received, 100% packet loss, time 999ms
```

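A failed ping can look like a network problem, but here it is expected. The cluster IP is a virtual IP that is meaningful only in combination with the service port. With kube-proxy in its default iptables mode, the cluster IP is not assigned to any network interface; it exists only in packet-forwarding rules that match the service's IP, protocol and port, so nothing answers ICMP echo requests.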

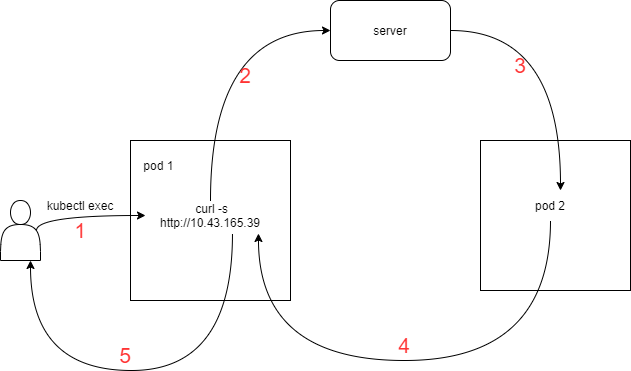

6 Testing and analysis

The service was assigned the IP 10.43.165.39. This is a cluster IP, which is reachable only from inside the cluster, that is, from the cluster nodes and from other pods. Let's verify that.

- SSH to one of the cluster nodes and request the service IP:

```shell
[root@k8s-node01 ~]# curl 10.43.165.39
You've hit kubia-66tvd
# The service's cluster IP is reachable from a cluster node
```

- Run the command inside a pod with `kubectl exec`. If it succeeds, the pod can reach the service:

```shell
# Repeat the request several times; each time it may be answered by a different pod
[root@nginx test]# kubectl exec kubia-vs2kl -- curl -s http://10.43.165.39
You've hit kubia-vs2kl
[root@nginx test]# kubectl exec kubia-vs2kl -- curl -s http://10.43.165.39
You've hit kubia-l9w94
[root@nginx test]# kubectl exec kubia-vs2kl -- curl -s http://10.43.165.39
You've hit kubia-vs2kl
```

The double dash (`--`) marks the end of the options for kubectl itself; everything after it is the command executed inside the pod.

The figure above shows the sequence of events:

- kubectl exec runs curl inside a pod, and curl sends an HTTP request.

- The request goes to the service's IP.

- The service proxies the connection and forwards it to one of its pods.

- That pod processes the request and returns a response containing its own pod name.

- The response is printed on your local terminal.

If any of these steps fails, the command fails, so this is also a handy way to test the cluster network.
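The third step explains why the repeated `kubectl exec ... curl` calls were answered by different pods. A small Python model of that step, assuming kube-proxy's iptables mode, where each new connection goes to one of the service's endpoints chosen with equal probability. It is a simulation for intuition, not kube-proxy's code; the pod names come from the output above.

```python
import random
from collections import Counter
from typing import List


def pick_backend(endpoints: List[str], rng: random.Random) -> str:
    """Choose the backend for one new connection, uniformly at random."""
    if not endpoints:
        # In iptables mode, kube-proxy rejects connections to a service with no endpoints.
        raise ConnectionRefusedError("service has no endpoints")
    return rng.choice(endpoints)


def simulate_requests(endpoints: List[str], n: int, seed: int = 0) -> Counter:
    """Simulate n separate curl requests to the cluster IP; count which pod answered each."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    return Counter(pick_backend(endpoints, rng) for _ in range(n))


counts = simulate_requests(["kubia-66tvd", "kubia-l9w94", "kubia-vs2kl"], n=300)
print(counts)  # each pod answers roughly a third of the 300 requests
```

If a client should keep talking to the same pod, setting `sessionAffinity: ClientIP` on the service replaces this per-connection choice with a per-client-IP one; the `Session Affinity: None` line in `kubectl describe svc` shows the default.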

7 Exposing multiple ports in one service

A service can expose one port or several, for example forwarding service port 80 to the pod's HTTP port 8080 and service port 443 to its HTTPS port 8443. When a service has multiple ports, each port must have a name; an unnamed port is shown as `<unset>` by `kubectl describe`. If the container ports in the pod spec are named as well, the service's `targetPort` can refer to them by name instead of by number. Then changing a pod's port number does not require changing the service, because the match is made by name.

```shell
cat > kubia-svc.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: kubia
spec:
  ports:
  - name: http        # a name is required for each port of a multi-port service
    port: 80
    targetPort: 8080
  - name: https
    port: 443
    targetPort: 8443
  selector:
    app: kubia
EOF
```
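To illustrate the named-port idea: if the pod template names its container ports (for example `- name: http` with `containerPort: 8080`), the Service can use `targetPort: http`, and each pod's own number is looked up by that name. A Python sketch of the lookup; the pod spec is hypothetical, and raising an error for a missing name is a modeling choice (in Kubernetes, a pod without the named port is simply left out of that port's endpoints).

```python
from typing import Dict, List, Union


def resolve_target_port(target_port: Union[int, str], container_ports: List[Dict]) -> int:
    """Resolve a Service targetPort against one pod's container ports.

    A number is used as-is; a string is looked up among the container ports by
    name, so each pod can map the same name to its own port number.
    """
    if isinstance(target_port, int):
        return target_port
    for port in container_ports:
        if port.get("name") == target_port:
            return port["containerPort"]
    raise LookupError(f"pod has no container port named {target_port!r}")


# Hypothetical pod spec with named container ports.
pod_ports = [
    {"name": "http", "containerPort": 8080},
    {"name": "https", "containerPort": 8443},
]
print(resolve_target_port("http", pod_ports))   # 8080
print(resolve_target_port("https", pod_ports))  # 8443
print(resolve_target_port(8080, pod_ports))     # 8080
```

If a later image version listens on a different port, only the pod template's `containerPort` changes; a service using `targetPort: http` keeps working unchanged.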

8 Discovering services

Once the service exists, the pods behind it can be reached through a single, stable IP address, no matter how those pods change. But how does a client pod learn the service's IP and port? It does not need to look them up manually.

Kubernetes gives clients a way to discover a service's IP and port through environment variables. When a pod starts, Kubernetes initializes a set of environment variables pointing to each service that exists at that moment; services created after the pod started are not reflected in its environment. For example:

```shell
[root@nginx test]# kubectl exec kubia-vs2kl -- env
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
HOSTNAME=kubia-vs2kl
KUBERNETES_PORT=tcp://10.43.0.1:443
KUBIA_PORT_80_TCP_PORT=80
KUBIA_PORT=tcp://10.43.165.39:80
KUBIA_PORT_80_TCP=tcp://10.43.165.39:80
KUBIA_PORT_80_TCP_PROTO=tcp
KUBIA_PORT_80_TCP_ADDR=10.43.165.39
KUBERNETES_SERVICE_PORT=443
KUBERNETES_PORT_443_TCP_ADDR=10.43.0.1
KUBIA_SERVICE_HOST=10.43.165.39
KUBIA_SERVICE_PORT=80
NPM_CONFIG_LOGLEVEL=info
NODE_VERSION=7.9.0
YARN_VERSION=0.22.0
HOME=/root
```
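The variable names follow a fixed pattern derived from the service name: upper-cased, with dashes turned into underscores. A Python sketch that rebuilds them, modeled on the output above and on the `{SVCNAME}_SERVICE_HOST` / `{SVCNAME}_SERVICE_PORT` convention. The Docker-link-style `*_PORT_*` names and the `_SERVICE_PORT_<PORTNAME>` entries for named ports are included as an assumption to verify against your own pods, and IPv6 cluster IPs (which would need brackets in URLs) are ignored.

```python
from typing import Dict, List, Optional, Tuple

# (port name or None, port number, protocol), first port first.
PortSpec = Tuple[Optional[str], int, str]


def env_name(value: str) -> str:
    """Service and port names become upper-case, with '-' replaced by '_'."""
    return value.upper().replace("-", "_")


def service_env_vars(name: str, cluster_ip: str, ports: List[PortSpec]) -> Dict[str, str]:
    """Build the service-related environment variables a newly started pod would receive."""
    prefix = env_name(name)
    env = {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(ports[0][1]),  # first port only
    }
    for port_name, port, _ in ports:
        if port_name:
            # Named ports also get <SERVICE>_SERVICE_PORT_<PORTNAME>.
            env[f"{prefix}_SERVICE_PORT_{env_name(port_name)}"] = str(port)
    for index, (_, port, protocol) in enumerate(ports):
        url = f"{protocol.lower()}://{cluster_ip}:{port}"
        if index == 0:
            env[f"{prefix}_PORT"] = url  # Docker-link style, first port only
        port_prefix = f"{prefix}_PORT_{port}_{protocol.upper()}"
        env[port_prefix] = url
        env[f"{port_prefix}_PROTO"] = protocol.lower()
        env[f"{port_prefix}_PORT"] = str(port)
        env[f"{port_prefix}_ADDR"] = cluster_ip
    return env


# The single-port kubia service from section 4.
for key, value in sorted(service_env_vars("kubia", "10.43.165.39", [(None, 80, "TCP")]).items()):
    print(f"{key}={value}")
```

For the kubia service this prints the same seven `KUBIA_*` variables that appear in the `env` output above.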

9 DNS

Environment variables are one way to obtain a service's IP and port; DNS is another.

```shell
[root@nginx test]# kubectl get po -n kube-system
NAME                                  READY   STATUS    RESTARTS   AGE
canal-l58m7                           2/2     Running   2          16d
canal-s92dx                           2/2     Running   3          16d
coredns-7c5566588d-rhdtx              1/1     Running   1          16d
coredns-7c5566588d-tp55c              1/1     Running   1          16d
coredns-autoscaler-65bfc8d47d-4mdt5   1/1     Running   1          16d
metrics-server-6b55c64f86-zlz4l       1/1     Running   2          16d
```

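The kube-system namespace contains coredns pods, which run the cluster's DNS server. The other pods in the cluster are configured to use it as their DNS server, so DNS queries from processes running in a pod are answered by Kubernetes' own DNS server, which knows about all the services running in the cluster. Each service gets a DNS name of the form `<service-name>.<namespace>.svc.cluster.local` (with the default cluster domain `cluster.local`), and a pod in the same namespace can simply use the service name, for example `curl http://kubia`. Whether a pod uses this DNS server is controlled by the `dnsPolicy` field in the pod spec.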
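Short names such as `kubia` resolve because pods using the default `dnsPolicy: ClusterFirst` get a search list in their /etc/resolv.conf. A Python sketch of how a service's fully qualified name is built and which names a glibc-style resolver tries for a given name. The search list (`<namespace>.svc.cluster.local svc.cluster.local cluster.local`), `ndots:5` and the `cluster.local` domain are assumptions about a typical pod; check your pod's /etc/resolv.conf.

```python
from typing import List


def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Service: <service>.<namespace>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def lookup_candidates(name: str, namespace: str = "default",
                      cluster_domain: str = "cluster.local", ndots: int = 5) -> List[str]:
    """Names a glibc-style resolver tries, in order, for `name` inside a pod."""
    if name.endswith("."):
        return [name]  # absolute name: the search list is not used
    search = [f"{namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}" for domain in search]
    if name.count(".") < ndots:
        return expanded + [name]  # few dots: try the search list first
    return [name] + expanded      # enough dots: try the name as given first


print(service_fqdn("kubia"))       # kubia.default.svc.cluster.local
print(lookup_candidates("kubia"))  # first entry is kubia.default.svc.cluster.local
```

The second search entry is what makes `<service>.<namespace>` work across namespaces: `kubia.default` expands to `kubia.default.svc.cluster.local` on the second try.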

10 Endpoints

Next we want k8s to expose services outside the cluster. Before that, we need to introduce Endpoints, a resource that sits between a service and its pods: it holds the list of pod IP addresses and ports to which the service forwards traffic.

```shell
# View endpoints
[root@nginx test]# kubectl get endpoints kubia
NAME    ENDPOINTS                                                   AGE
kubia   10.42.0.10:8443,10.42.1.10:8443,10.42.1.9:8443 + 3 more...   79m

# View pod IPs (kubectl get po -o wide)
NAME          READY   STATUS    RESTARTS   AGE    IP           NODE            NOMINATED NODE   READINESS GATES
kubia-66tvd   1/1     Running   0          161m   10.42.0.10   81.68.101.212   <none>           <none>
kubia-l9w94   1/1     Running   0          128m   10.42.1.9    81.68.229.215   <none>           <none>
kubia-vs2kl   1/1     Running   0          125m   10.42.1.10   81.68.229.215   <none>           <none>

# kubectl describe svc also shows that the endpoints are the IPs and ports of the pods the service forwards to
[root@nginx test]# kubectl describe svc kubia
Name:              kubia
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          app=kubia
Type:              ClusterIP
IP Families:       <none>
IP:                10.43.7.83
IPs:               <none>
Port:              http  80/TCP
TargetPort:        8080/TCP
Endpoints:         10.42.0.10:8080,10.42.1.10:8080,10.42.1.9:8080
Port:              https  443/TCP
TargetPort:        8443/TCP
Endpoints:         10.42.0.10:8443,10.42.1.10:8443,10.42.1.9:8443
Session Affinity:  None
Events:            <none>
```

This shows that the service uses its label selector to find matching pods, records their IPs and ports in the Endpoints resource, and forwards each connection to one of the ip:port pairs listed there. So when connections to a service fail, check whether its endpoints exist and are correct.
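A Python sketch of what the Endpoints object holds for one service port, assuming these rules: pods are matched by the label selector, only ready pods are listed, and each entry is the pod IP plus the target port. The IPs come from the pod listing above; the readiness flags are hypothetical. An empty result corresponds to an empty `Endpoints:` line in `kubectl describe svc`, which usually means either no pod labels match the selector or none of the matching pods is ready.

```python
from typing import Dict, List


def build_endpoints(selector: Dict[str, str], target_port: int, pods: List[Dict]) -> List[str]:
    """Return "ip:port" entries for ready pods whose labels match the selector."""
    addresses = []
    for pod in pods:
        labels_match = all(pod["labels"].get(k) == v for k, v in selector.items())
        if labels_match and pod["ready"]:  # pods that are not ready receive no traffic
            addresses.append(f"{pod['ip']}:{target_port}")
    return addresses


# Pod IPs from the listing above; readiness is assumed.
pods = [
    {"name": "kubia-66tvd", "ip": "10.42.0.10", "labels": {"app": "kubia"}, "ready": True},
    {"name": "kubia-l9w94", "ip": "10.42.1.9", "labels": {"app": "kubia"}, "ready": True},
    {"name": "kubia-vs2kl", "ip": "10.42.1.10", "labels": {"app": "kubia"}, "ready": True},
]
print(",".join(build_endpoints({"app": "kubia"}, 8080, pods)))
```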

Knowing this, we can also configure a service's endpoints by hand instead of having them filled in through a label selector: create the service without a selector, then create an Endpoints resource with the same name as the service.

Endpoints is a resource in its own right, not part of the service.

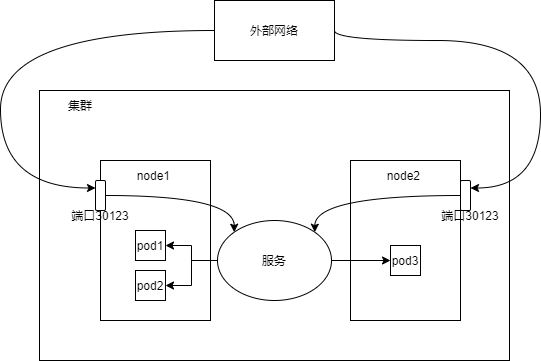
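The manual approach needs two objects with the same name: a Service without a selector (so Kubernetes does not manage its endpoints) and an Endpoints object listing the backend addresses. A Python sketch that generates both as one JSON `List` manifest; the name `external-service` and the documentation-range IPs are hypothetical placeholders.

```python
import json
from typing import Dict, List


def selectorless_service_with_endpoints(name: str, port: int, ips: List[str]) -> Dict:
    """Build a Service without a selector plus a same-named Endpoints object."""
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"ports": [{"port": port}]},  # deliberately no "selector" key
    }
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        "metadata": {"name": name},  # must match the Service name
        "subsets": [{
            "addresses": [{"ip": ip} for ip in ips],
            "ports": [{"port": port}],  # port on the backend addresses
        }],
    }
    return {"apiVersion": "v1", "kind": "List", "items": [service, endpoints]}


# Hypothetical name and IPs; substitute your own.
manifest = selectorless_service_with_endpoints("external-service", 80, ["192.0.2.11", "192.0.2.22"])
print(json.dumps(manifest, indent=2))
```

Saved as, say, `gen_endpoints.py` (a hypothetical file name), its output could be applied with `python3 gen_endpoints.py | kubectl apply -f -`.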

11 exposing services to the outside

So far, we have discussed the use of pod within the cluster. Sometimes we need to expose services to networks outside the cluster, such as front-end web servers. There are several ways to access services externally.

- A nodeport service opens a port on each node of the cluster, so it is called nodeport. The traffic on this port is redirected to the service, which can be accessed using the cluster ip:port.

- loadbalance service is an extension of nodeport, which enables the service to be accessed through a dedicated load balancer.

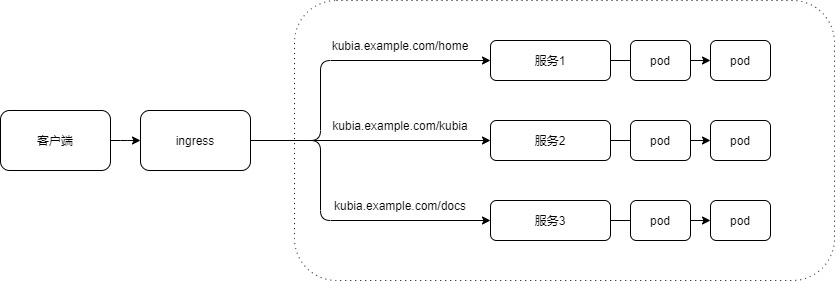

- ingress resource, a completely different mechanism, exposes multiple services through one ip address -- it runs in the http layer, so it can provide more resources than the services in the fourth layer.

The first way: exposing the service with a NodePort service

```shell
cat > kubia-svc-nodeport.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: kubia-nodeport
spec:
  type: NodePort
  ports:
  - port: 80          # port of the service's cluster IP
    targetPort: 8080  # port the pods listen on
    nodePort: 30123   # port opened on every cluster node
  selector:
    app: kubia
EOF

# create
kubectl create -f kubia-svc-nodeport.yaml

# see
[root@nginx test]# kubectl get svc kubia-nodeport -o wide
NAME             TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE   SELECTOR
kubia-nodeport   NodePort   10.43.254.206   <none>        80:30123/TCP   38m   app=kubia

# Test from a local shell outside the cluster
[C:\~]$ curl http://81.68.229.215:30123
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    23    0    23    0     0     23      0 --:--:-- --:--:-- --:--:--  1533
You've hit kubia-l9w94

# The external network can reach the service inside the cluster; the URL also opens in a browser.
```
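A Python sketch listing the addresses through which this NodePort service should be reachable. It assumes the default nodePort range 30000-32767 (a cluster can change it with the kube-apiserver flag `--service-node-port-range`); the node IPs and cluster IP come from the outputs above. The test used only 81.68.229.215, but because every node opens the port, the other node's IP should work as well.

```python
from typing import Dict, List

# Default nodePort range; clusters can change it with --service-node-port-range.
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def nodeport_addresses(node_ips: List[str], cluster_ip: str, port: int,
                       node_port: int) -> Dict[str, List[str]]:
    """List the URLs through which a NodePort service can be reached."""
    if node_port not in DEFAULT_NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default range 30000-32767")
    return {
        # From outside: any node's IP plus the node port (the same port on every node).
        "external": [f"http://{ip}:{node_port}" for ip in node_ips],
        # From inside the cluster: the cluster IP plus the service port.
        "internal": [f"http://{cluster_ip}:{port}"],
    }


urls = nodeport_addresses(["81.68.101.212", "81.68.229.215"], "10.43.254.206", 80, 30123)
print(urls["external"])  # ['http://81.68.101.212:30123', 'http://81.68.229.215:30123']
print(urls["internal"])  # ['http://10.43.254.206:80']
```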

The second way: exposing the service with a LoadBalancer service

LoadBalancer services work only on infrastructure that can provision a load balancer, typically a cloud provider. Without one, the service behaves like a NodePort service and its external IP stays `<pending>`.

```shell
cat > kubia-svc-loadbalancer.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: kubia-loadbalancer
spec:
  type: LoadBalancer
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: kubia
EOF

# create
kubectl create -f kubia-svc-loadbalancer.yaml

# see
[root@nginx ~]# kubectl get svc
NAME                 TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
kubernetes           ClusterIP      10.43.0.1       <none>        443/TCP          17d
kubia                ClusterIP      10.43.7.83      <none>        80/TCP,443/TCP   18h
kubia-loadbalancer   LoadBalancer   10.43.20.104    <pending>     80:31342/TCP     14m
kubia-nodeport       NodePort       10.43.254.206   <none>        80:30123/TCP     16h
nginx-service        NodePort       10.43.66.77     <none>        80:30080/TCP     16h

# This test cluster does not support load balancers, so the external IP stays <pending>
# and the LoadBalancer service behaves like a NodePort service (node port 31342)

# From a local shell, node IP:node port works normally
[C:\~]$ curl http://81.68.229.215:31342
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    23    0    23    0     0     23      0 --:--:-- --:--:-- --:--:--   741
You've hit kubia-vs2kl

# Repeat the test on a Tencent Cloud elastic cluster, which supports load balancers
# Create the same Deployment and LoadBalancer service as above (steps omitted)

# see
[root@k8s-node02 ~]# kubectl get svc
NAME                 TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE
kubernetes           ClusterIP      192.168.0.1     <none>           443/TCP        3d23h
kubia-loadbalancer   LoadBalancer   192.168.0.201   175.27.192.232   80:30493/TCP   67s

# Test from the local host
[C:\~]$ curl http://175.27.192.232
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    33    0    33    0     0     33      0 --:--:-- --:--:-- --:--:--   702
You've hit kubia-778d98d9c-2zpl5

# It works: the service is reachable directly at the load balancer's IP
```
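To check programmatically whether a LoadBalancer service is still pending, one can read `status.loadBalancer.ingress` from `kubectl get svc <name> -o json`: the field is absent or empty while no load balancer exists, and holds an `ip` (or, on some clouds, a `hostname`) once one has been provisioned. A Python sketch; the JSON strings are trimmed, hypothetical examples mirroring the two clusters above.

```python
import json
from typing import Optional


def external_address(service_json: str) -> Optional[str]:
    """Return the load balancer IP or hostname of a Service, or None while pending."""
    svc = json.loads(service_json)
    ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        address = entry.get("ip") or entry.get("hostname")
        if address:
            return address
    return None  # kubectl get svc shows <pending> in this state


# Trimmed, hypothetical `kubectl get svc -o json` output.
pending = '{"spec": {"type": "LoadBalancer"}, "status": {"loadBalancer": {}}}'
ready = ('{"spec": {"type": "LoadBalancer"}, '
         '"status": {"loadBalancer": {"ingress": [{"ip": "175.27.192.232"}]}}}')
print(external_address(pending))  # None
print(external_address(ready))    # 175.27.192.232
```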

The third way: Ingress

Why is Ingress needed? Each LoadBalancer service requires its own public IP address, whereas an Ingress needs only one public IP to provide access to many services. When a client sends an HTTP request to the Ingress, the Ingress uses the request's host name and path to decide which service to forward it to, so a single Ingress can expose multiple services. An Ingress resource takes effect only if an Ingress controller is running in the cluster. The following Ingress exposes the kubia-nodeport service under the host name kubia.example.com:

```shell
cat > kubia-ingress.yaml << EOF
# networking.k8s.io/v1 is the Ingress API on Kubernetes 1.19 and later
# (extensions/v1beta1, with backend.serviceName/servicePort, was removed in 1.22)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubia
spec:
  rules:
  - host: kubia.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: kubia-nodeport   # the Service requests are forwarded to
            port:
              number: 80
EOF

# create
kubectl create -f kubia-ingress.yaml

# see
[root@k8s-node02 ~]# kubectl get ing
NAME    CLASS    HOSTS               ADDRESS        PORTS   AGE
kubia   <none>   kubia.example.com   119.45.3.217   80      34m

# To access it by host name, add a local DNS entry
# On Windows the hosts file is C:\Windows\System32\drivers\etc\hosts; add the line:
# 119.45.3.217 kubia.example.com

# Access
[C:\~]$ curl http://1.13.12.141
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100    33    0    33    0     0     33      0 --:--:-- --:--:-- --:--:--   532
You've hit kubia-778d98d9c-2zpl5

[C:\~]$ curl http://kubia.example.com
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
You've hit kubia-778d98d9c-2zpl5
```

The word "ingress" means the act of entering, or the means or place of entry.

With Ingress, a request travels client -> Ingress -> Service -> workload. So when configuring an Ingress, make sure the backend service works correctly on its own, and that the service name in the Ingress backend is the name of the service the requests should be forwarded to.
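The host- and path-based routing described above can be sketched in Python under simplifying assumptions: hosts must match exactly (no wildcard or host-less rules), paths follow `pathType: Prefix` semantics and are compared element by element, and the longest matching prefix wins. The second rule and its `kubia-api` service are hypothetical, added only to show one Ingress fronting two services.

```python
from typing import List, Optional, Tuple

# (host, path prefix, backend service name, service port)
Rule = Tuple[str, str, str, int]


def prefix_matches(prefix: str, path: str) -> bool:
    """Element-wise prefix match: '/api' matches '/api' and '/api/v1', not '/apix'."""
    if prefix == "/":
        return True
    prefix_parts = prefix.rstrip("/").split("/")
    return path.split("/")[:len(prefix_parts)] == prefix_parts


def route(rules: List[Rule], host: str, path: str) -> Optional[Tuple[str, int]]:
    """Return (service, port) for a request, or None if no rule matches."""
    candidates = [r for r in rules if r[0] == host and prefix_matches(r[1], path)]
    if not candidates:
        return None  # controllers typically fall back to a default backend here
    _, _, service, port = max(candidates, key=lambda r: len(r[1]))  # longest prefix wins
    return service, port


rules: List[Rule] = [
    ("kubia.example.com", "/", "kubia-nodeport", 80),  # the rule from kubia-ingress.yaml
    ("kubia.example.com", "/api", "kubia-api", 8080),  # hypothetical second service
]
print(route(rules, "kubia.example.com", "/"))        # ('kubia-nodeport', 80)
print(route(rules, "kubia.example.com", "/api/v1"))  # ('kubia-api', 8080)
print(route(rules, "other.example.com", "/"))        # None
```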

Reference: Kubernetes in Action, chapter "Services: enabling clients to discover and talk to pods"
