HashMap is a topic that comes up in almost every interview. But can you really walk into an interview relaxed just because you have studied HashMap? If you interview at a well-known Internet company, I believe you will never be asked only something as simple as the data structure behind HashMap. Here are several HashMap questions that interviewers have frequently asked me recently:

1. Why is the capacity of HashMap always a power of 2?

new HashMap(14);

HashMap is implemented as an array plus linked lists (and, since JDK 1.8, red-black trees). After the line of code above is executed, what size will the underlying array be?

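Tracing the source code, you will find that the constructor calls the following function to compute the array size (in JDK 1.8 the array itself is allocated lazily on the first put, using this size):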

static final int tableSizeFor(int cap) {

int n = cap - 1;

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

}

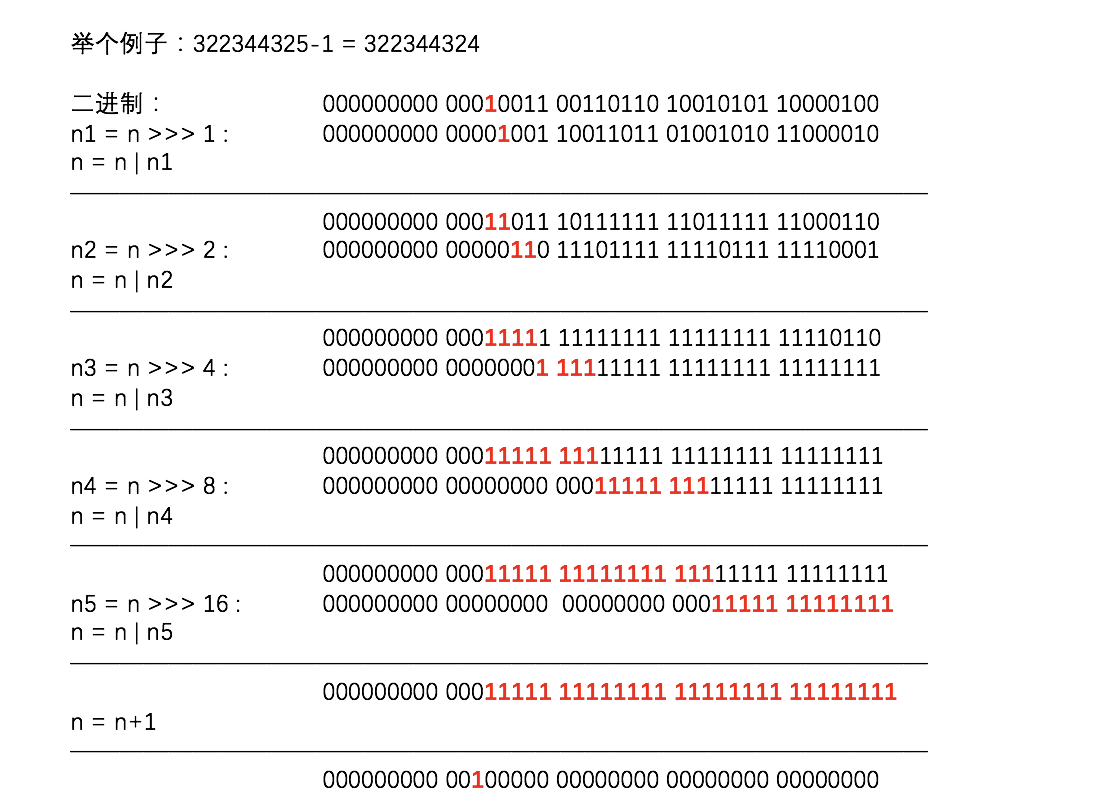

Illustration:

- The first right shift and OR operation guarantees that, reading from left to right, the bit right after the first 1 bit is also 1, giving two consecutive 1s.

- The second right shift and OR operation adds two more 1s, giving four consecutive 1s.

- And so on, doubling each time. Because a Java int is 4 bytes (32 bits), the last shift by 16 is enough: after shifts of 1, 2, 4, 8 and 16 bits, every bit below the highest 1 bit is set, so n + 1 is a power of 2.

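Passing in 14, the function returns 16, which is the smallest power of 2 greater than or equal to 14. Subtracting 1 at the beginning ensures that a capacity that is already a power of 2, such as 16, is returned unchanged instead of being doubled to 32.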
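To watch the bits fill up, here is a small self-contained sketch. It copies the tableSizeFor logic quoted above into a hypothetical TableSizeDemo class, with MAXIMUM_CAPACITY set to 1 << 30 (the value HashMap uses). It also adds a trace method that prints n in binary after each shift-and-OR step.

```java
// Hypothetical demo class: a copy of JDK 1.8's tableSizeFor plus a bit trace.
class TableSizeDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;   // same value as in HashMap

    static int tableSizeFor(int cap) {
        int n = cap - 1;     // keeps an exact power of 2 from being doubled
        n |= n >>> 1;        // the leading 1 and the bit after it are set
        n |= n >>> 2;        // 4 bits starting at the leading 1 are set
        n |= n >>> 4;        // 8 bits
        n |= n >>> 8;        // 16 bits
        n |= n >>> 16;       // every bit below the leading 1 is set
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Prints n in binary after each shift-and-OR step.
    static void trace(int cap) {
        int n = cap - 1;
        System.out.println("cap - 1       : " + Integer.toBinaryString(n));
        for (int shift = 1; shift <= 16; shift <<= 1) {
            n |= n >>> shift;
            System.out.printf("after >>> %-2d  : %s%n", shift, Integer.toBinaryString(n));
        }
        System.out.println("tableSizeFor(" + cap + ") = " + tableSizeFor(cap));
    }

    public static void main(String[] args) {
        trace(100);   // 99 = 1100011 becomes 1111111, so the result is 128
        for (int cap : new int[] {1, 14, 16, 17}) {
            System.out.println(cap + " -> " + tableSizeFor(cap));   // 1, 16, 16, 32
        }
    }
}
```

For any cap from 2 up to MAXIMUM_CAPACITY, the result equals Integer.highestOneBit(cap - 1) << 1. The shift-and-OR sequence simply computes this value with a fixed number of steps.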

This shows that the array size always ends up being a power of 2. So let's talk about why it has to be a power of 2.

static int indexFor(int h, int length) {

return h & (length-1);

}

In JDK 1.7, the fragment above runs whenever an element is put. It maps the hashCode to an array index by taking the remainder with respect to the array length. Why not use the % operator directly, since this is a remainder operation? Because a bitwise & is much cheaper than %, which requires an integer division.

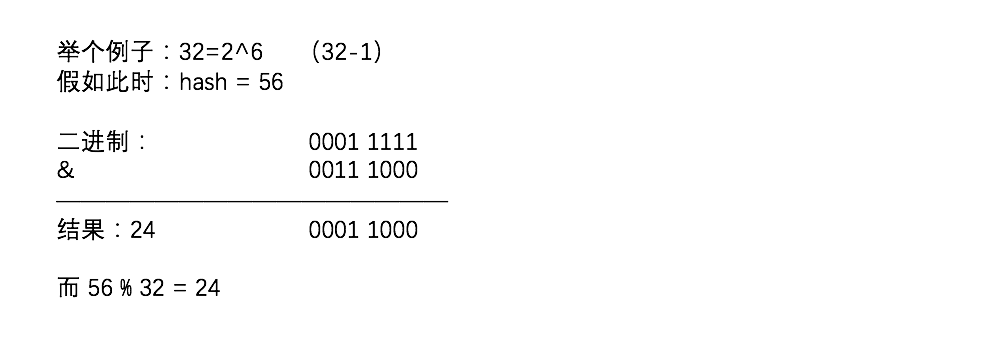

Since the index is computed with &, why must the length be 2^n?

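- When the length is 2^n, length - 1 equals 2^n - 1, whose lowest n bits are all 1s. Therefore h & (length - 1) always lies between 0 and length - 1, so the result stays within the table and every bucket can be reached. With a length that is not a power of 2, some buckets could never be used. For example, with a length of 15, length - 1 is 14 (binary 1110), so the lowest bit of the index is always 0 and all odd-numbered buckets stay empty.

- For a non-negative h, h & (length - 1) gives exactly the same result as h % length, so the cheap & can replace the modulo operation.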
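Both points can be checked by comparing the two expressions directly. The sketch below uses a hypothetical IndexForDemo class that reuses the indexFor expression from JDK 1.7. It checks whether & and % agree for non-negative hashes, and lists which buckets are reachable when the length is 15 instead of 16.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical demo class; indexFor is the JDK 1.7 expression quoted above.
class IndexForDemo {
    static int indexFor(int h, int length) {
        return h & (length - 1);
    }

    // True if h & (length - 1) == h % length for every h in [0, limit).
    static boolean agreesWithModulo(int length, int limit) {
        for (int h = 0; h < limit; h++) {
            if (indexFor(h, length) != h % length) return false;
        }
        return true;
    }

    // Every bucket index that indexFor produces for the hashes 0..limit-1.
    static Set<Integer> reachableBuckets(int length, int limit) {
        Set<Integer> used = new TreeSet<>();
        for (int h = 0; h < limit; h++) used.add(indexFor(h, length));
        return used;
    }

    public static void main(String[] args) {
        System.out.println("length 16, & == %: " + agreesWithModulo(16, 100_000)); // true
        System.out.println("length 15, & == %: " + agreesWithModulo(15, 100_000)); // false
        // 15 - 1 = 14 = 0b1110: the lowest bit is masked off, so odd buckets are never used.
        System.out.println("length 15 buckets: " + reachableBuckets(15, 100_000));
        // prints [0, 2, 4, 6, 8, 10, 12, 14]
    }
}
```

For a negative hash, h % length would itself be negative, whereas h & (length - 1) is still a valid index. For example, -17 & 15 is 15, while -17 % 16 is -1.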

2. Why is the default load factor of HashMap 0.75?

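The load factor is a very important parameter. Once the number of entries exceeds capacity × load factor (the threshold), the HashMap resizes. If the load factor is too large, for example 1, space utilization increases. However, more keys then collide in the same bucket, so lookups become slower.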

If the load factor is too small, the HashMap resizes frequently and wastes space. Every resize is expensive, because all existing entries have to be moved into the new array.
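The effect of the load factor on resizing can be seen with a rough model. The sketch below is a simplified, hypothetical ResizeModel class, not HashMap code. It assumes the JDK 1.8 defaults: an initial capacity of 16, doubling on every resize, and a threshold of capacity × load factor. It then counts how many resizes are needed to insert n entries.

```java
// Simplified model (not the real HashMap code) of table growth during n insertions.
class ResizeModel {
    // Returns {number of resizes, final capacity}.
    static int[] simulate(int n, float loadFactor) {
        int capacity = 16;                              // assumed default initial capacity
        int threshold = (int) (capacity * loadFactor);
        int resizes = 0;
        for (int size = 1; size <= n; size++) {
            // Like JDK 1.8 putVal: resize once the size exceeds the threshold.
            if (size > threshold) {
                capacity <<= 1;                         // the table doubles
                threshold = (int) (capacity * loadFactor);
                resizes++;
            }
        }
        return new int[] {resizes, capacity};
    }

    public static void main(String[] args) {
        for (float lf : new float[] {0.25f, 0.5f, 0.75f, 1.0f}) {
            int[] r = simulate(1000, lf);
            System.out.printf("loadFactor=%.2f resizes=%d capacity=%d%n", lf, r[0], r[1]);
        }
    }
}
```

Under this model, 1,000 insertions with a load factor of 0.25 need 8 resizes and end with 4,096 buckets. With 0.75 they need 7 resizes and end with 2,048 buckets, and with 1.0 they need 6 resizes and end with 1,024. A smaller load factor means more resizing work and more empty buckets.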

That answer is almost as good as saying nothing, so on this question I can only offer a rough idea of my own and hope it draws out better answers.

The explanation lies in this comment from the JDK 1.8 HashMap source code:

Because TreeNodes are about twice the size of regular nodes, we use them only when bins contain enough nodes to warrant use (see TREEIFY_THRESHOLD). And when they become too small (due to removal or resizing) they are converted back to plain bins. In usages with well-distributed user hashCodes, tree bins are rarely used. Ideally, under random hashCodes, the frequency of nodes in bins follows a Poisson distribution (http://en.wikipedia.org/wiki/Poisson_distribution) with a parameter of about 0.5 on average for the default resizing threshold of 0.75, although with a large variance because of resizing granularity. Ignoring variance, the expected occurrences of list size k are (exp(-0.5) * pow(0.5, k) / factorial(k)). The first values are:

- 0: 0.60653066
- 1: 0.30326533
- 2: 0.07581633
- 3: 0.01263606
- 4: 0.00157952
- 5: 0.00015795
- 6: 0.00001316
- 7: 0.00000094
- 8: 0.00000006
- more: less than 1 in ten million

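In short, choosing 0.75 is a trade-off between space and time. It does not have to be 0.75: HashMap also accepts a custom load factor through its HashMap(int initialCapacity, float loadFactor) constructor, and other programming languages use different values, such as 0.72.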
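The frequencies in the quoted comment are easy to recompute. This hypothetical BinSizePoisson class evaluates exp(-0.5) * pow(0.5, k) / factorial(k), the formula given in the comment, for k = 0 to 8.

```java
// Recomputes the bin-size frequencies from the JDK 1.8 HashMap comment:
// P(k) = exp(-lambda) * lambda^k / k!, a Poisson distribution with lambda = 0.5.
class BinSizePoisson {
    static double probability(int k, double lambda) {
        double factorial = 1;
        for (int i = 2; i <= k; i++) factorial *= i;
        return Math.exp(-lambda) * Math.pow(lambda, k) / factorial;
    }

    public static void main(String[] args) {
        for (int k = 0; k <= 8; k++) {
            System.out.printf("%d: %.8f%n", k, probability(k, 0.5));
        }
    }
}
```

The printed values agree with the quoted table up to rounding in the last digit.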

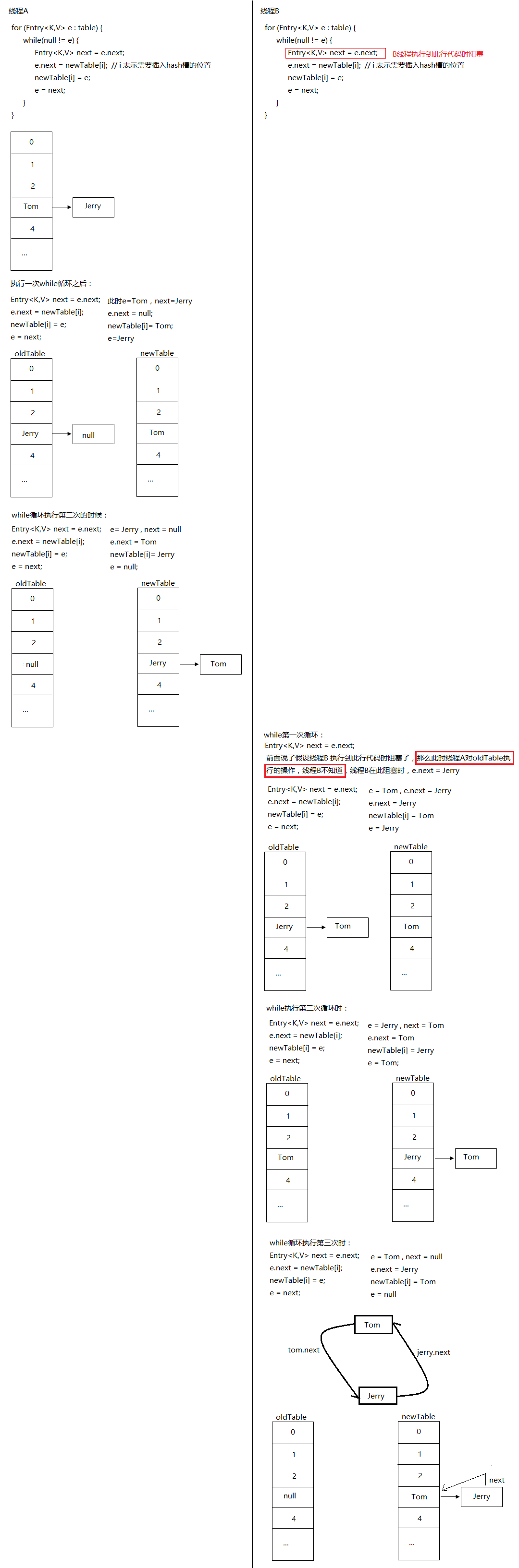

3. How can resizing in JDK 1.7 cause an infinite loop?

void transfer(Entry[] newTable, boolean rehash) {

int newCapacity = newTable.length;

for (Entry<K,V> e : table) {

while(null != e) {

Entry<K,V> next = e.next;

if (rehash) {

e.hash = null == e.key ? 0 : hash(e.key);

}

int i = indexFor(e.hash, newCapacity);

e.next = newTable[i];

newTable[i] = e;

e = next;

}

}

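}

When I first searched for blog posts on this topic, I couldn't understand them, so I tried to explain it with diagrams instead. The key point is that transfer uses head insertion (e.next = newTable[i]; newTable[i] = e;), so a resize reverses the order of the nodes that land in the same new bucket. Suppose two threads resize the same map at the same time. One thread may be suspended right after reading next = e.next, while the other thread completes the transfer and reverses the chain. When the first thread resumes, its saved next pointer is stale, and it can end up linking two nodes to each other, forming a cycle. Any later lookup that walks that bucket then loops forever.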
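A real race is hard to reproduce on demand, so the sketch below replays the interleaving deterministically in a single thread. Entry, transferChain and replayRace are simplified, hypothetical stand-ins for the JDK 1.7 code quoted above: the key serves as its own hash and no rehashing happens. Two keys, 3 and 7, share bucket 1 of a table of length 2, and both move to bucket 3 of a new table of length 4.

```java
// Single-threaded, deterministic replay of the classic JDK 1.7 resize race.
// Entry, transferChain and replayRace are simplified stand-ins, not JDK code.
class Jdk7ResizeRace {
    static final class Entry {
        final int key;   // the key doubles as its own hash in this sketch
        Entry next;
        Entry(int key, Entry next) { this.key = key; this.next = next; }
    }

    static int indexFor(int h, int length) {
        return h & (length - 1);
    }

    // The JDK 1.7 transfer loop for one chain, resumed at node e with a
    // `next` value that may have been read before the thread was paused.
    static void transferChain(Entry e, Entry next, Entry[] newTable) {
        while (e != null) {
            int i = indexFor(e.key, newTable.length);
            e.next = newTable[i];            // head insertion reverses the chain
            newTable[i] = e;
            e = next;
            if (e != null) next = e.next;    // "Entry next = e.next;" of the next round
        }
    }

    // Returns true if the replayed interleaving leaves a cycle in the chain.
    static boolean replayRace() {
        // Old table of length 2: keys 3 and 7 share bucket 1 as A -> B.
        Entry b = new Entry(7, null);
        Entry a = new Entry(3, b);

        // Thread 1 reads e = A and next = B, then is suspended.
        Entry t1e = a;
        Entry t1next = a.next;

        // Thread 2 performs the whole transfer into its own table of length 4.
        transferChain(a, a.next, new Entry[4]);   // now B -> A and A.next == null

        // Thread 1 resumes with its stale `next` and its own new table.
        Entry[] t1Table = new Entry[4];
        transferChain(t1e, t1next, t1Table);

        Entry head = t1Table[indexFor(3, 4)];
        return head.next != null && head.next.next == head;   // A -> B -> A
    }

    public static void main(String[] args) {
        System.out.println("cycle formed: " + replayRace());
    }
}
```

After thread 1 finishes, A.next points to B and B.next points back to A. Any traversal of that bucket, such as a lookup for a key that hashes there but is absent, would never terminate.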

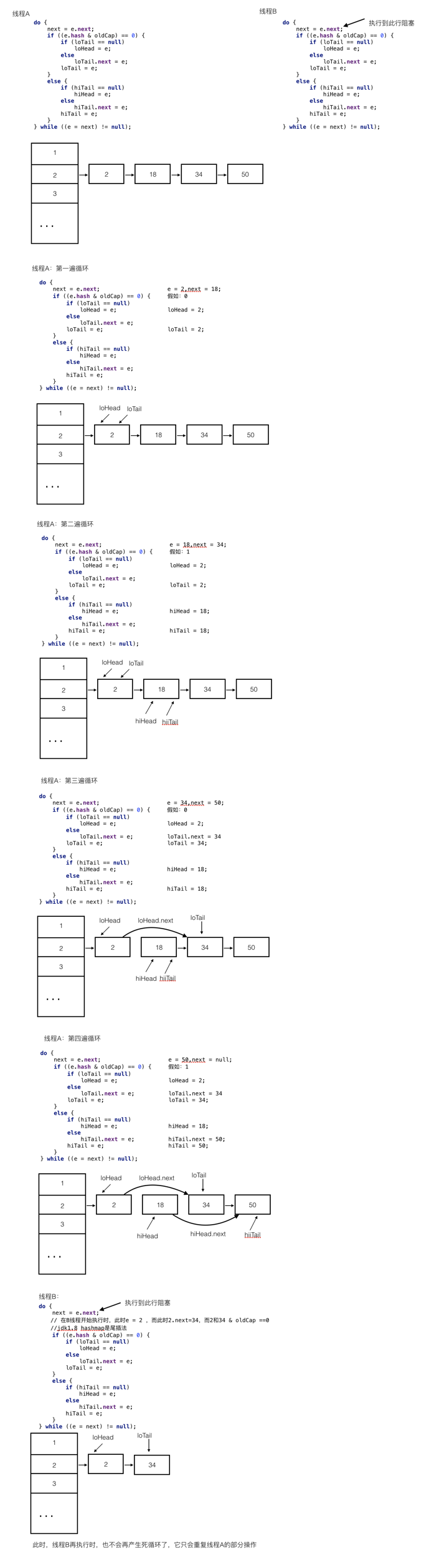

4. Can resizing in JDK 1.8 also cause an infinite loop?

Node<K,V> loHead = null, loTail = null;

Node<K,V> hiHead = null, hiTail = null;

Node<K,V> next;

do {

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

Look at the following graphical analysis:

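JDK 1.8 no longer uses head insertion. It splits each old bucket into two lists. The "lo" list holds the nodes with (e.hash & oldCap) == 0, which stay at index j. The "hi" list holds the other nodes, which move to index j + oldCap. Nodes are appended at the tail of each list, so their relative order is preserved, a chain is never reversed, and the cycle described for JDK 1.7 cannot form. Therefore, HashMap in JDK 1.8 will not fall into an infinite loop when resizing!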
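The split can be replayed on a small example. Node, split and hashes below are hypothetical stand-ins, and split copies the loop quoted above. A chain holding the hashes 1, 5, 9 and 13 in bucket 1 of a table of size 4 is split into a table of size 8.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified replay of the JDK 1.8 lo/hi split for one bucket.
// Node is a stand-in class here, not java.util.HashMap.Node.
class Jdk8Split {
    static final class Node {
        final int hash;
        Node next;
        Node(int hash, Node next) { this.hash = hash; this.next = next; }
    }

    // Splits the chain starting at e (bucket j of a table of size oldCap)
    // into newTab, preserving order via tail insertion.
    static void split(Node e, int j, int oldCap, Node[] newTab) {
        Node loHead = null, loTail = null;
        Node hiHead = null, hiTail = null;
        Node next;
        do {
            next = e.next;
            if ((e.hash & oldCap) == 0) {           // stays at index j
                if (loTail == null) loHead = e; else loTail.next = e;
                loTail = e;
            } else {                                // moves to index j + oldCap
                if (hiTail == null) hiHead = e; else hiTail.next = e;
                hiTail = e;
            }
        } while ((e = next) != null);
        if (loTail != null) { loTail.next = null; newTab[j] = loHead; }
        if (hiTail != null) { hiTail.next = null; newTab[j + oldCap] = hiHead; }
    }

    // Collects the hashes along a chain, in order.
    static List<Integer> hashes(Node head) {
        List<Integer> out = new ArrayList<>();
        for (Node n = head; n != null; n = n.next) out.add(n.hash);
        return out;
    }

    public static void main(String[] args) {
        // Bucket 1 of a table of size 4 holds hashes 1 -> 5 -> 9 -> 13.
        Node chain = new Node(1, new Node(5, new Node(9, new Node(13, null))));
        Node[] newTab = new Node[8];
        split(chain, 1, 4, newTab);
        System.out.println("index 1: " + hashes(newTab[1]));   // [1, 9]
        System.out.println("index 5: " + hashes(newTab[5]));   // [5, 13]
    }
}
```

Each new chain keeps the original relative order (1 before 9, 5 before 13). Every node ends up at hash & (newCap - 1), so the hashCode never has to be recomputed.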

5. Why is a linked list converted into a red-black tree when its length reaches 8?

First, a TreeNode occupies about twice the space of a regular list node, so a bin is converted into a red-black tree only when its list reaches 8 nodes (TREEIFY_THRESHOLD). Why 8? As explained in question 2, the Poisson distribution shows that a list of length 8 is extremely unlikely (probability about 0.00000006) when hash codes are well distributed. Only in such an exceptional case, typically caused by a poor hashCode implementation, is the linked list converted into a red-black tree. Lookups in that bin then take O(log n) instead of O(n) time.

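There is one more requirement for the conversion. The table capacity, meaning the length of the array rather than the number of elements in the HashMap, must be at least 64 (MIN_TREEIFY_CAPACITY). If the table is smaller, HashMap resizes it instead of converting the bin into a tree.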
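The two conditions can be summarized as a small decision function. This is a simplified, hypothetical model. Its constants mirror HashMap's TREEIFY_THRESHOLD (8) and MIN_TREEIFY_CAPACITY (64), but it ignores exactly where inside putVal the length check is made.

```java
// Simplified, hypothetical model of the JDK 1.8 treeify decision for one bin.
class TreeifyRule {
    static final int TREEIFY_THRESHOLD = 8;      // same value as in HashMap
    static final int MIN_TREEIFY_CAPACITY = 64;  // same value as in HashMap

    enum Action { KEEP_LIST, RESIZE, TREEIFY }

    static Action decide(int chainLength, int tableLength) {
        if (chainLength < TREEIFY_THRESHOLD) return Action.KEEP_LIST;
        // A long chain in a small table is handled by resizing, not by a tree.
        if (tableLength < MIN_TREEIFY_CAPACITY) return Action.RESIZE;
        return Action.TREEIFY;
    }

    public static void main(String[] args) {
        System.out.println(decide(5, 16));   // KEEP_LIST
        System.out.println(decide(8, 16));   // RESIZE
        System.out.println(decide(8, 64));   // TREEIFY
    }
}
```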

6. Is HashMap thread-safe in JDK 1.8?

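Although JDK 1.8 HashMap largely avoids the infinite loop that JDK 1.7 could hit during resizing, HashMap is still not thread-safe. For example, thread B may resize the table while thread A is putting an element. Or two threads may put keys that map to the same empty bucket at the same moment, and one write overwrites the other, so an entry is lost.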

The underlying reason is that multiple threads modify the same instance without any synchronization, which leaves its internal state (the table, the size counter, and so on) inconsistent.
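The lost-update problem is easy to observe. The sketch below, a hypothetical ConcurrentPutDemo class, lets several threads insert disjoint keys into one shared map. With a plain HashMap the final size may be smaller than expected and can vary between runs; because this is a race, the outcome is not guaranteed either way. ConcurrentHashMap always reports the full count, and it (or a map wrapped with Collections.synchronizedMap) is the usual choice for concurrent use.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical demo: several threads put disjoint keys into one shared map.
class ConcurrentPutDemo {
    static int fill(Map<Integer, Integer> map, int threads, int perThread) {
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread;          // each thread owns its own key range
            workers[t] = new Thread(() -> {
                for (int k = base; k < base + perThread; k++) {
                    map.put(k, k);
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();                            // wait until every writer is done
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting", e);
            }
        }
        return map.size();
    }

    public static void main(String[] args) {
        int threads = 4, perThread = 50_000;
        int expected = threads * perThread;
        // HashMap: the count is unpredictable and may be below `expected`.
        System.out.println("HashMap:           " + fill(new HashMap<>(), threads, perThread) + " / " + expected);
        // ConcurrentHashMap: always equals `expected`.
        System.out.println("ConcurrentHashMap: " + fill(new ConcurrentHashMap<>(), threads, perThread) + " / " + expected);
    }
}
```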