Thanks for sharing- http://bjbsair.com/2020-04-10/tech-info/53317.html

By now you have probably heard of gRPC (at least once, in the title of this article). In this article, I will focus on the benefits of using gRPC as the communication medium between microservices.

First, I will briefly introduce the history of architectural evolution. Second, I will discuss using REST as the communication medium and its possible problems. Third, I will introduce gRPC. Finally, I will walk through my own development workflow as an example.

Brief history of architecture development

This section lists the pros and cons of each architecture (focusing on web-based applications).

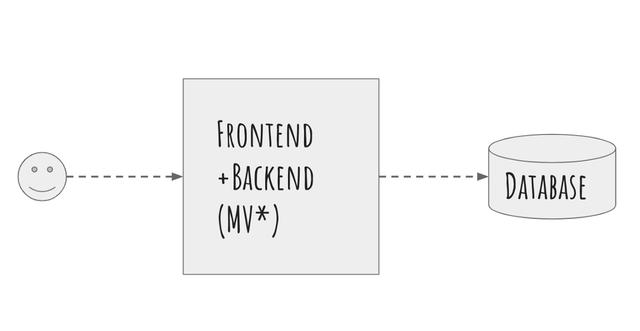

Monolith

Everything is in one package.

Advantages:

·Easy to use

·Single code base for all requirements

Disadvantages:

·Hard to scale individual parts

·Heavy load on the server (server-side rendering)

·Poor user experience (long load times)

·Hard to scale the development team

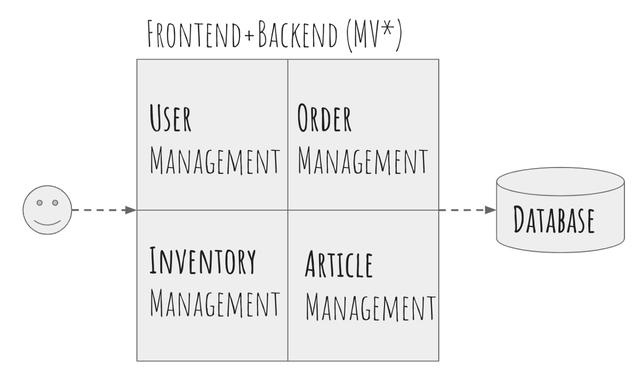

Monolith architecture

Inside monolith architecture

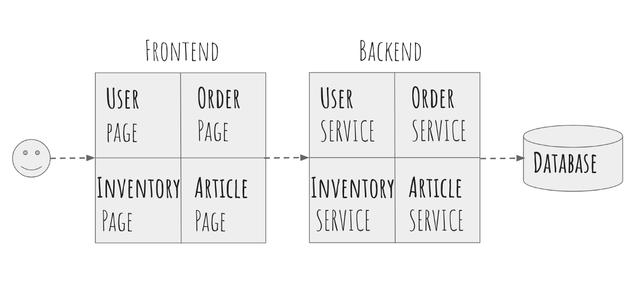

Monolith v2 (frontend + backend)

Clear separation between frontend logic and backend logic, but the backend is still huge.

Advantages:

·The team can be split into frontend and backend teams

·Better user experience (frontend logic runs in the client application)

Disadvantages:

·[still] hard to scale individual parts

·[still] hard to scale the development team

Frontend-Backend architecture

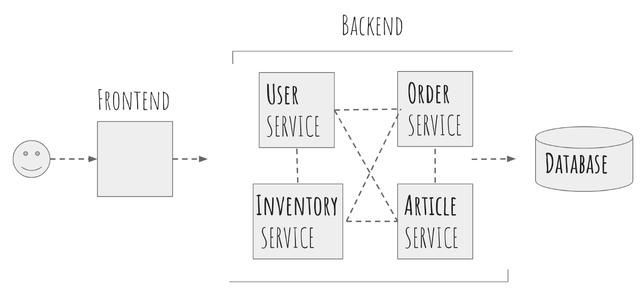

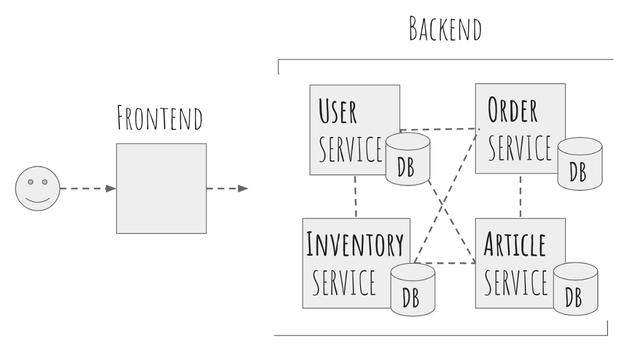

Microservices

Everything is a service (package), and the packages communicate with each other over the network.

Advantages:

·Scalable components

·Scalable team

·Flexible choice of language (as long as a standard communication protocol is used)

·Each package can be deployed / fixed independently

Disadvantages:

·Introduces network issues (latency between calls)

·Requires documentation and protocols for communication between services

·Hard to track down bugs if the services share a database

Micro-service architecture with shared database

Micro-service architecture with standalone database per service

REST (as a medium) and possible problems

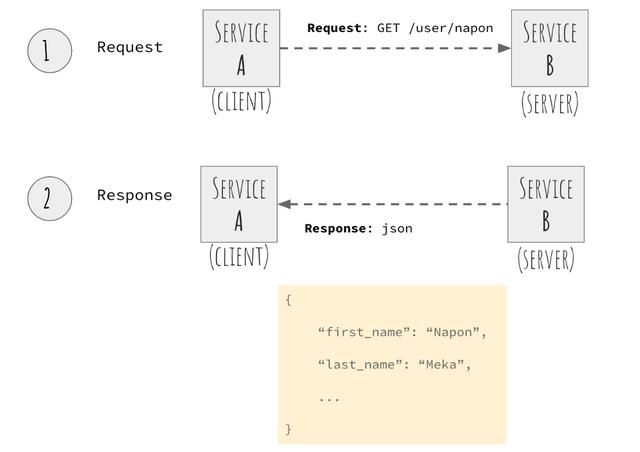

REST (JSON over HTTP) is one of the most popular ways for services to communicate because it is easy to use. REST also gives you the flexibility to use any language for each service.

Typical REST call
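To make the "format, parse" work of a REST call concrete, here is a minimal sketch using only Python's standard library. The /summary endpoint, its JSON field names (borrowed from the to-do example later in this article) and the canned counts are hypothetical; the point is that both sides serialize and parse JSON by hand.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import Request, urlopen


class SummaryHandler(BaseHTTPRequestHandler):
    """Hypothetical service B: POST /summary {"name": ...} -> task counts as JSON."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length))  # B parses the JSON by hand
        reply = {
            "employee": {"name": body["name"]},
            "summary": {"todoTasks": 2, "doingTasks": 1, "doneTasks": 0},  # canned data
        }
        data = json.dumps(reply).encode()  # ... and formats the JSON by hand
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):  # silence the default request log
        pass


def start_server():
    """Run service B on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), SummaryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_port}"


def get_summary(base_url, name):
    """Developer A's side: format the request, send it, parse the response."""
    request = Request(
        f"{base_url}/summary",
        data=json.dumps({"name": name}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urlopen(request) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    server, url = start_server()
    try:
        print(get_summary(url, "alice"))
    finally:
        server.shutdown()
        server.server_close()
```

Nothing in this exchange is checked by a machine: the URL, the field names and the response shape all live only in the developers' heads (or in documentation).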

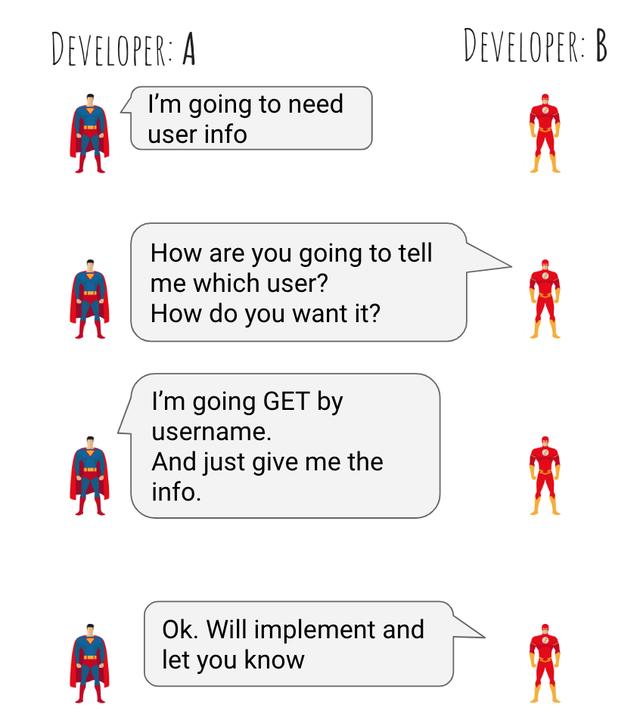

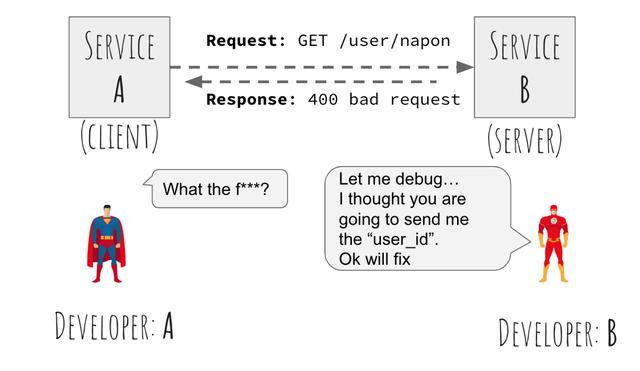

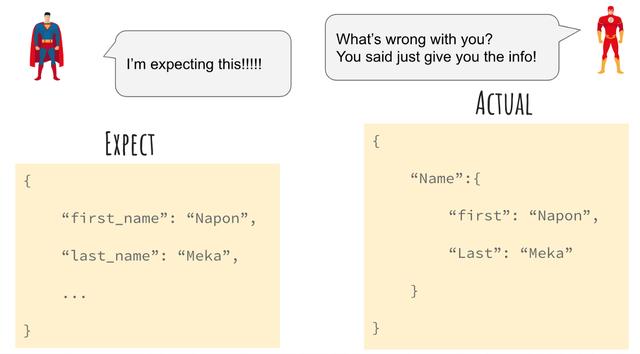

However, this flexibility comes with pitfalls: it requires a very strict agreement between developers. The following sketches show a very common scenario during development.

Developer A wants Developer B to build a service

Bad request

Expectation vs Actual

Problems:

·Relies on human agreement

·Depends on documentation (which must be maintained / kept up to date)

·Translating between the two services' representations requires a lot of formatting and parsing on both sides

·Much of the development time goes into protocols and formatting rather than business logic
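The "expectation vs actual" scenario can be reproduced in a few lines. In this sketch (the AddTask-style payload and both field layouts are hypothetical), developer B's hand-written parser expects one JSON shape while developer A sends another; the mismatch is only discovered when a request actually arrives.

```python
import json

# The contract developer B *implemented* for AddTask (hypothetical):
# {"employee": {"name": str}, "name": str, "status": "todo" | "doing" | "done"}
VALID_STATUSES = {"todo", "doing", "done"}


def parse_task(raw: bytes) -> dict:
    """Developer B's hand-written parser for an AddTask request body."""
    body = json.loads(raw)
    task = {
        "employee": body["employee"]["name"],  # KeyError if A used other field names
        "name": body["name"],
        "status": body["status"],
    }
    if task["status"] not in VALID_STATUSES:
        raise ValueError(f"unknown status {task['status']!r}")
    return task


def try_parse(raw: bytes) -> str:
    """Return "ok", or why the request would be rejected at runtime."""
    try:
        parse_task(raw)
        return "ok"
    except KeyError as missing:
        return f"missing field {missing}"
    except ValueError as err:
        return str(err)


# What B expected ...
expected = json.dumps(
    {"employee": {"name": "alice"}, "name": "write docs", "status": "todo"}).encode()
# ... and what A sends after reading the (informal) docs differently.
actual = json.dumps(
    {"employeeName": "alice", "task": "write docs", "status": "TODO"}).encode()

if __name__ == "__main__":
    print(try_parse(expected))  # ok
    print(try_parse(actual))    # missing field 'employee'
```

Both kinds of mismatch (field names and enum-like values) slip past every build step and only surface at runtime.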

Enter gRPC

gRPC is a modern open source high-performance RPC framework that can run in any environment.

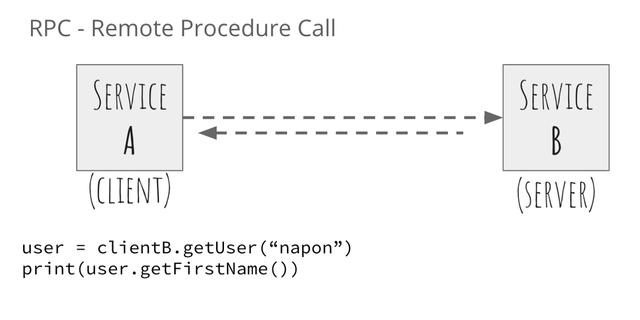

What is RPC? RPC stands for remote procedure call. It is a protocol that allows a program to request a service from a program on another computer in the network without having to understand the network's details.

Remote Procedure Call
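Python's standard library happens to ship a classic RPC implementation, XML-RPC. It is not gRPC (it encodes calls as XML over HTTP and has no schema), but it shows the core idea of RPC in a few lines: the caller invokes what looks like a local function, and a proxy takes care of the network. The add_task procedure is a hypothetical example.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer

TASKS = {}  # in-memory state on the "remote" side


def add_task(employee, task):
    """Remote procedure: store a task and return the employee's task count."""
    TASKS.setdefault(employee, []).append(task)
    return len(TASKS[employee])


def start_server():
    """Serve add_task over XML-RPC on a free local port, in a background thread."""
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(add_task, "add_task")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_server()
    host, port = server.server_address
    proxy = ServerProxy(f"http://{host}:{port}/")
    # Looks like a local function call; the proxy handles the network details.
    print(proxy.add_task("alice", "write docs"))  # prints 1
    server.shutdown()
    server.server_close()
```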

RPC based on REST

Using an RPC client/library provided by the service's creator ensures that the service is called correctly. If we want to use RPC with REST as the medium, developer B has to write client code for developer A to use. If the two developers use different languages, this becomes a major problem for developer B, who has to write an RPC client in a language he is not used to. Moreover, if other services also need to call service B, developer B has to spend a lot of time building RPC clients in different languages and maintaining them.
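A minimal sketch of the kind of client developer B ends up writing by hand, for one language (Python here). The endpoint paths and JSON fields are hypothetical, and the transport is injected so the sketch runs without a network; a real client would send HTTP requests instead.

```python
import json
from typing import Callable

# Hypothetical transport: (path, JSON request bytes) -> JSON response bytes.
Transport = Callable[[str, bytes], bytes]


class TaskManagerClient:
    """Hand-written REST client that developer B ships to Python callers.

    It hides paths, field names and JSON (de)serialization, so callers cannot
    get the contract wrong. The catch: B has to write and maintain an
    equivalent class in every language that calls the service.
    """

    def __init__(self, transport: Transport):
        self._transport = transport

    def _call(self, path: str, payload: dict) -> dict:
        raw = self._transport(path, json.dumps(payload).encode())
        return json.loads(raw)

    def get_summary(self, employee_name: str) -> dict:
        return self._call("/summary", {"name": employee_name})

    def add_task(self, employee_name: str, task_name: str, status: str = "todo") -> dict:
        return self._call("/tasks", {
            "employee": {"name": employee_name},
            "name": task_name,
            "status": status,
        })


def echo_transport(path: str, body: bytes) -> bytes:
    """Offline stand-in for HTTP: echoes back the path and the decoded body."""
    return json.dumps({"path": path, "received": json.loads(body)}).encode()


if __name__ == "__main__":
    client = TaskManagerClient(echo_transport)
    print(client.add_task("alice", "write docs"))
```

Every change to the service contract means touching this class, and its counterparts in Go, Java, and so on, by hand.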

Protobuf?

Protocol Buffers (protobuf) are Google's language-neutral, platform-neutral, extensible mechanism for serializing structured data. gRPC uses protobuf as the language for defining both the data structures and the services. You can think of a proto file as the rigorous documentation of a REST service, except that it is machine-readable: the protobuf syntax is so strict that it can be compiled by a machine.
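To see what that strictness buys at the byte level: on the wire, protobuf sends field numbers and wire types instead of field names. The sketch below hand-encodes two small messages purely for illustration; in practice generated code does this (in Python, something like `Employee(name="alice").SerializeToString()` on the generated class should produce the same bytes). The helper names are hypothetical, and only non-negative integers and strings are handled.

```python
VARINT, LEN = 0, 2  # protobuf wire types for integers and for strings/bytes/messages


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, least-significant group first,
    high bit set on every byte except the last. Non-negative values only."""
    if value < 0:
        raise ValueError("this sketch only encodes non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # continuation bit: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def field_key(field_number: int, wire_type: int) -> bytes:
    """Every field starts with (field_number << 3) | wire_type, as a varint."""
    return encode_varint((field_number << 3) | wire_type)


def encode_string_field(field_number: int, text: str) -> bytes:
    if not text:  # proto3 does not write scalar fields holding the default value
        return b""
    data = text.encode("utf-8")
    return field_key(field_number, LEN) + encode_varint(len(data)) + data


def encode_int_field(field_number: int, value: int) -> bytes:
    if value == 0:  # default value: omitted
        return b""
    return field_key(field_number, VARINT) + encode_varint(value)


def encode_employee(name: str) -> bytes:
    # message Employee { string name = 1; }
    return encode_string_field(1, name)


def encode_summary(todo: int, doing: int, done: int) -> bytes:
    # message Summary { int32 todoTasks = 1; int32 doingTasks = 2; int32 doneTasks = 3; }
    return encode_int_field(1, todo) + encode_int_field(2, doing) + encode_int_field(3, done)


if __name__ == "__main__":
    print(encode_employee("alice"))   # b'\n\x05alice'
    print(encode_summary(2, 1, 300))  # b'\x08\x02\x10\x01\x18\xac\x02'
```

Because field names never travel, both sides must agree on the numbering, which is exactly what the shared proto file guarantees.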

The following code block is a simple proto file that describes a simple to-do service and the data structures used for communication.

·"message" keyword, used to define a data structure

·"service" keyword, used to define a service

·"rpc" keyword, used to define the functions of a service

```proto
syntax = "proto3";

package gogrpcspec;

message Employee {
  string name = 1;
}

message Task {
  Employee employee = 1;
  string name = 2;
  string status = 3;
}

message Summary {
  int32 todoTasks = 1;
  int32 doingTasks = 2;
  int32 doneTasks = 3;
}

message SpecificSummary {
  Employee employee = 1;
  Summary summary = 2;
}

service TaskManager {
  rpc GetSummary(Employee) returns (SpecificSummary) {}
  rpc AddTask(Task) returns (SpecificSummary) {}
  rpc AddTasks(stream Task) returns (Summary) {}
  rpc GetTasks(Employee) returns (stream Task) {}
  rpc ChangeToDone(stream Task) returns (stream Task) {}
}
```

Compile the proto file into server code

Because protobuf is so strict, we can use protoc to compile proto files into server code. After compilation, you then implement the real logic behind it.

```shell
protoc --go_out=plugins=grpc:. $(pwd)/proto/*.proto \
  --proto_path=$(pwd)
```
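The generated code takes care of transport and serialization; what remains is the logic behind each rpc. A minimal sketch of that logic for the TaskManager service, in Python for brevity. The dataclasses are hypothetical stand-ins for the generated message classes (Employee is reduced to a plain name string). In a real Python server you would subclass the TaskManagerServicer class that the gRPC Python plugin generates in todo_pb2_grpc and have its methods delegate to something like this TaskStore.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, Iterator, List


# Plain stand-ins for the generated message classes (hypothetical).
@dataclass
class Task:
    employee: str
    name: str
    status: str = "todo"  # "todo" | "doing" | "done"


@dataclass
class Summary:
    todoTasks: int = 0
    doingTasks: int = 0
    doneTasks: int = 0


@dataclass
class SpecificSummary:
    employee: str
    summary: Summary


@dataclass
class TaskStore:
    """In-memory business logic that a generated servicer would delegate to."""
    tasks: Dict[str, List[Task]] = field(default_factory=dict)

    def get_summary(self, employee: str) -> SpecificSummary:  # rpc GetSummary
        counts = Counter(t.status for t in self.tasks.get(employee, []))
        return SpecificSummary(
            employee, Summary(counts["todo"], counts["doing"], counts["done"]))

    def add_task(self, task: Task) -> SpecificSummary:  # rpc AddTask
        self.tasks.setdefault(task.employee, []).append(task)
        return self.get_summary(task.employee)

    def get_tasks(self, employee: str) -> Iterator[Task]:  # rpc GetTasks (server stream)
        yield from self.tasks.get(employee, [])


if __name__ == "__main__":
    store = TaskStore()
    store.add_task(Task("alice", "write docs"))
    print(store.add_task(Task("alice", "review PR", status="done")))
```

Keeping this logic free of gRPC types makes it easy to unit-test without starting a server.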

Compile the proto file into client code

From the same proto files, protoc can also generate client code in many popular languages: C#, C++, Dart, Go, Java, JavaScript, Objective-C, PHP, Python, Ruby, etc.
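"One spec, many targets" is literally a matter of changing one output flag. A small sketch that builds protoc invocations for several languages; protoc_command is a hypothetical helper, and the flags listed are protoc's built-in generators, which produce the message classes only. Go, Dart and the gRPC service stubs come from separate protoc plugins (such as the grpc_python_plugin used later in this article).

```python
import shlex
from typing import List

# Generators built into protoc itself (they produce the message classes).
BUILTIN_OUT_FLAGS = {
    "cpp": "--cpp_out",
    "csharp": "--csharp_out",
    "java": "--java_out",
    "objc": "--objc_out",
    "php": "--php_out",
    "python": "--python_out",
    "ruby": "--ruby_out",
}


def protoc_command(language: str, out_dir: str, protos: List[str],
                   proto_path: str = ".") -> List[str]:
    """Build the protoc argument list that generates `language` code for `protos`."""
    if language not in BUILTIN_OUT_FLAGS:
        raise ValueError(f"{language!r} needs a protoc plugin in this sketch")
    flag = BUILTIN_OUT_FLAGS[language]
    return ["protoc", f"--proto_path={proto_path}", f"{flag}={out_dir}", *protos]


if __name__ == "__main__":
    # The same todo.proto feeds every target; only the output flag changes.
    for lang in ("python", "java", "cpp"):
        print(shlex.join(protoc_command(lang, f"gen/{lang}", ["proto/todo.proto"])))
```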

gRPC RPC types

gRPC supports several RPC types (I won't go into them in detail in this article):

·Unary RPC (request-response)

·Client streaming RPC

·Server streaming RPC

·Bidirectional streaming RPC
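The four types differ only in which side sends a stream. The sketch below models them with plain Python functions, where a stream is simply an iterator; it is a hypothetical illustration named after the TaskManager rpcs, with no gRPC involved. It loosely mirrors how gRPC's Python servicers work: client-streaming and bidirectional methods receive a request iterator, and server-streaming and bidirectional methods yield responses.

```python
from typing import Iterable, Iterator, Tuple

# Hypothetical stand-in for the Task message: (employee name, task name).
Task = Tuple[str, str]


def get_tasks(employee: str) -> Iterator[Task]:
    """Server streaming (like GetTasks): one request in, a stream of responses out."""
    for name in ("write docs", "review PR"):  # canned data for the sketch
        yield (employee, name)


def get_summary(employee: str) -> int:
    """Unary (like GetSummary): one request in, one response out."""
    return sum(1 for _ in get_tasks(employee))


def add_tasks(tasks: Iterable[Task]) -> int:
    """Client streaming (like AddTasks): a stream of requests in, one response out."""
    return sum(1 for _ in tasks)


def change_to_done(tasks: Iterable[Task]) -> Iterator[Tuple[str, str, str]]:
    """Bidirectional streaming (like ChangeToDone): streams in both directions;
    here each incoming task immediately produces an outgoing one."""
    for employee, name in tasks:
        yield (employee, name, "done")
```

For example, `list(change_to_done(get_tasks("bob")))` chains a server stream into a bidirectional one and marks both canned tasks as done.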

Development process

To adopt gRPC across teams, we need a few things:

·A centralized repository (holding the gRPC specifications for communication between services)

·Automatic code generation

·Service users (clients) can get the generated code for their language of choice through a package manager, e.g. go get / pip install

The code for this example can be found in this repository:

Repository structure

```text
.
├── HISTORY.md
├── Makefile
├── README.md
├── genpyinit.sh
├── gogrpcspec      // go generated code here
│   └── ...
├── proto
│   └── todo.proto
├── pygrpcspec      // python generated code here
│   └── ...
└── setup.py
```

Git hook

I'll set up a git hook that generates the code automatically before each commit. If it suits your setup better, you can use CI instead (Drone / GitLab / Jenkins / ...). (The drawback of a git hook is that every developer has to configure it first.)

You need a directory to keep the pre-commit script in. I call it ".githooks".

```shell
$ mkdir .githooks
$ cd .githooks/
$ cat <<EOF > pre-commit
#!/bin/sh
set -e
make generate
git add gogrpcspec pygrpcspec
EOF
$ chmod +x pre-commit
```

The script runs "make generate" and then stages the two generated directories (gogrpcspec, pygrpcspec) with git add.

For the git hook to work, developers must run the following git config command:

```shell
$ git config core.hooksPath .githooks
```

We add this command to the Makefile as an "init" target, so developers only need to run "make init". The Makefile should look like this:

```makefile
# content of: Makefile
init:
	git config core.hooksPath .githooks

generate:
	# TO BE CONTINUED
```

Generate code

We've set up the git hook to run "make generate". Let's dig into the commands that generate the code automatically. This article focuses on two languages: Go and Python.

Generate Go code

We can use protoc to compile .proto files into Go code:

```shell
protoc --go_out=plugins=grpc:. $(pwd)/proto/*.proto \
  --proto_path=$(pwd)
```

For the convenience of developers, we run protoc through Docker instead (${CURDIR} is make's built-in variable for the current directory, so this command lives in the Makefile):

```makefile
docker run --rm -v ${CURDIR}:${CURDIR} -w ${CURDIR} \
  znly/protoc \
  --go_out=plugins=grpc:. \
  ${CURDIR}/proto/*.proto \
  --proto_path=${CURDIR}
```

Here is the generate target (it deletes the old code, generates new code and moves it to the appropriate folder):

```makefile
# content of: Makefile
init:
	git config core.hooksPath .githooks

generate:
	# remove previously generated code
	rm -rf gogrpcspec/*
	# generate go code
	docker run --rm -v ${CURDIR}:${CURDIR} -w ${CURDIR} \
		znly/protoc \
		--go_out=plugins=grpc:. \
		${CURDIR}/proto/*.proto \
		--proto_path=${CURDIR}
	# move generated code into gogrpcspec folder
	mv proto/*.go gogrpcspec
```

Once the code is generated, developers who need the server stub, or the client code to call the service, can download it with go get:

```shell
go get -u github.com/redcranetech/grpcspec-example
```

Then import it:

```go
import pb "github.com/redcranetech/grpcspec-example/gogrpcspec"
```

Generate Python code

We can use protoc to compile .proto files into Python code:

```shell
protoc --plugin=protoc-gen-grpc=/usr/bin/grpc_python_plugin \
  --python_out=./pygrpcspec \
  --grpc_out=./pygrpcspec \
  $(pwd)/proto/*.proto \
  --proto_path=$(pwd)
```

Again, for the convenience of developers, we run protoc through Docker instead:

```makefile
docker run --rm -v ${CURDIR}:${CURDIR} -w ${CURDIR} \
  znly/protoc \
  --plugin=protoc-gen-grpc=/usr/bin/grpc_python_plugin \
  --python_out=./pygrpcspec \
  --grpc_out=./pygrpcspec \
  ${CURDIR}/proto/*.proto \
  --proto_path=${CURDIR}
```

To turn the generated code into a Python package that can be installed with pip, we need a few extra steps:

·Create a setup.py

·Modify the generated code (it imports modules by folder name; we change those imports to relative ones)

·Each folder must contain an `__init__.py` that exposes the generated code

Use the following template to create the setup.py file:

```python
# content of: setup.py
from setuptools import setup, find_packages

with open('README.md') as readme_file:
    README = readme_file.read()

with open('HISTORY.md') as history_file:
    HISTORY = history_file.read()

setup_args = dict(
    name='pygrpcspec',
    version='0.0.1',
    description='grpc spec',
    long_description_content_type="text/markdown",
    long_description=README + '\n\n' + HISTORY,
    license='MIT',
    packages=['pygrpcspec', 'pygrpcspec.proto'],
    author='Napon Mekavuthikul',
    author_email='napon@redcranetech.com',
    keywords=['grpc'],
    url='https://github.com/redcranetech/grpcspec-example',
    download_url=''
)

install_requires = [
    'grpcio>=1.21.0',
    'grpcio-tools>=1.21.0',
    'protobuf>=3.8.0'
]

if __name__ == '__main__':
    setup(**setup_args, install_requires=install_requires)
```

Generate `__init__.py`

The `__init__.py` of the pygrpcspec folder must be:

```python
# content of: pygrpcspec/__init__.py
from . import proto

__all__ = [
    'proto'
]
```

And the `__init__.py` of the pygrpcspec/proto folder must be:

```python
# content of: pygrpcspec/proto/__init__.py
from . import todo_pb2
from . import todo_pb2_grpc

__all__ = [
    'todo_pb2',
    'todo_pb2_grpc',
]
```

So that developers can add more .proto files and still get `__init__.py` generated automatically, a simple shell script does the job:

```shell
# content of: genpyinit.sh (plain POSIX sh, so "sh genpyinit.sh" works)
cat <<EOF >pygrpcspec/__init__.py
from . import proto

__all__ = [
    'proto'
]
EOF

# module names of the generated files, e.g. todo_pb2 todo_pb2_grpc
pyfiles=$(ls pygrpcspec/proto | grep '\.py$' | sed -e 's/\.py$//' | grep -v '^__init__$')

rm -f pygrpcspec/proto/__init__.py
for i in $pyfiles; do
    echo "from . import $i" >> pygrpcspec/proto/__init__.py
done

echo "__all__ = [" >> pygrpcspec/proto/__init__.py
for i in $pyfiles; do
    echo "    '$i'," >> pygrpcspec/proto/__init__.py
done
echo "]" >> pygrpcspec/proto/__init__.py
```

Modify generated code

(If you are not familiar with Python modules, you can skip this part.)

We want to change every "from proto import" into "from . import". The reason is that the message types and the service stubs live in the same directory, so for the package to be importable from outside, every import between its own modules must be relative.

```shell
sed -i -E 's/^from proto import/from . import/g' *.py
```
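If you would rather not depend on sed (BSD/macOS sed, for instance, parses -i differently from GNU sed), the same rewrite takes a few lines of Python. A sketch using the same ^from proto import pattern; make_imports_relative and rewrite_package are hypothetical helper names.

```python
import re
from pathlib import Path

# Same pattern as the sed command: lines that *start* with "from proto import".
ABSOLUTE_IMPORT = re.compile(r"^from proto import", re.MULTILINE)


def make_imports_relative(source: str) -> str:
    """Turn 'from proto import x' into 'from . import x' on every matching line."""
    return ABSOLUTE_IMPORT.sub("from . import", source)


def rewrite_package(directory: str) -> int:
    """Apply the rewrite in place to every .py file in `directory`.

    Returns the number of files that actually changed.
    """
    changed = 0
    for path in sorted(Path(directory).glob("*.py")):
        old = path.read_text()
        new = make_imports_relative(old)
        if new != old:
            path.write_text(new)
            changed += 1
    return changed
```

Calling rewrite_package("pygrpcspec/proto") after generation should have the same effect as the sed step, e.g. turning a generated line such as "from proto import todo_pb2 as proto_dot_todo__pb2" into "from . import todo_pb2 as proto_dot_todo__pb2".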

At this point, your Makefile should look like this:

```makefile
# content of: Makefile
init:
	git config core.hooksPath .githooks

generate:
	# remove previously generated code
	rm -rf gogrpcspec/*
	# generate go code
	docker run --rm -v ${CURDIR}:${CURDIR} -w ${CURDIR} \
		znly/protoc \
		--go_out=plugins=grpc:. \
		${CURDIR}/proto/*.proto \
		--proto_path=${CURDIR}
	# move generated code into gogrpcspec folder
	mv proto/*.go gogrpcspec
	# remove previously generated code
	rm -rf pygrpcspec/*
	# generate python code
	docker run --rm -v ${CURDIR}:${CURDIR} -w ${CURDIR} \
		znly/protoc \
		--plugin=protoc-gen-grpc=/usr/bin/grpc_python_plugin \
		--python_out=./pygrpcspec \
		--grpc_out=./pygrpcspec \
		${CURDIR}/proto/*.proto \
		--proto_path=${CURDIR}
	# generate __init__.py
	bash genpyinit.sh
	# modify import using sed
	docker run --rm -v ${CURDIR}:${CURDIR} -w ${CURDIR}/pygrpcspec/proto \
		frolvlad/alpine-bash \
		bash -c "sed -i -E 's/^from proto import/from . import/g' *.py"
```

Once the code is generated, developers who need the server stub, or the client code to call the service, can install it with pip:

```shell
pip install -e git+https://github.com/redcranetech/grpcspec-example.git#egg=pygrpcspec
```

Then import it:

```python
from pygrpcspec.proto import todo_pb2_grpc
from pygrpcspec.proto import todo_pb2
```

In conclusion, because protobuf's syntax is strict, a gRPC specification can be compiled into client code for many different languages, which makes gRPC an excellent way for microservices to communicate.

All the code in this article: