Background

The Consumer's message consumption process is relatively complex. Its most important modules are: maintaining the consumption progress, looking up messages, message filtering, load balancing, message processing, and send-back acknowledgment. For reasons of space, this article mainly introduces how the Consumer obtains and maintains the consumption progress. Because these steps are closely connected, the discussion occasionally touches on the others.

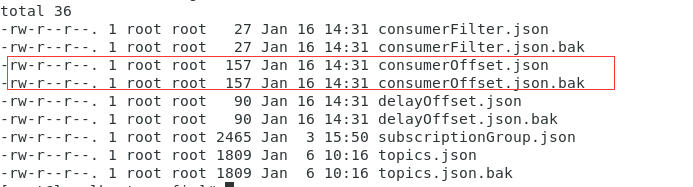

The consumption progress file

As mentioned in our previous article, How does RocketMQ build ConsumeQueue?, a service thread asynchronously reads newly written messages from the CommitLog and saves key information such as message position, size and tagsCode to the selected ConsumeQueue. A ConsumeQueue is an index file that stores where the messages of a topic are located in the CommitLog. When we consume messages, we first read the message position from the ConsumeQueue and then go to the CommitLog to retrieve the message itself. This is a typical space-for-time trade-off. We also need to record how far we have read, so that the next read continues where the last one stopped, and this requires a file that stores the consumption progress. In RocketMQ, this file is consumerOffset.json, located in the store/config directory, as follows:

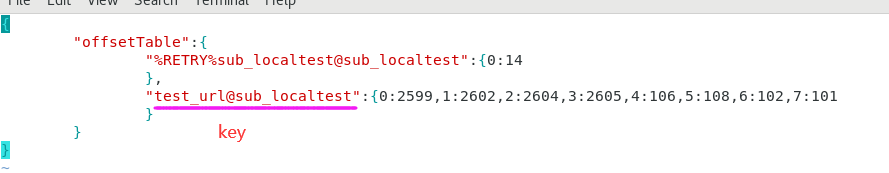

The content in consumerOffset.json is similar to this:

test_url@sub_localtest is the key, following the rule topic@consumerGroup, and the value is the consumption progress of each ConsumeQueue. For example, Queue 0 will next consume offset 2599, Queue 1 will next consume offset 2602, and Queue 6 will next consume offset 102. The progress is a logical index that increases by 1 per message, and each step corresponds to one fixed 20-byte entry in the ConsumeQueue.

Now let's do an experiment. First, send a message with a Producer (the code is very simple, so we omit it). The send result is as follows:

SendResult [sendStatus=SEND_OK, msgId=C0A84D05091058644D4664E1427C0000, offsetMsgId=C0A84D0000002A9F00000000001ED9BA, messageQueue=MessageQueue [topic=test_url, brokerName=broker-a, queueId=6], queueOffset=102]

As you can see from the result, the message is saved at offset 102 of MessageQueue 6 on broker-a. Then we start the Consumer and consume this message:

2020-01-20 14:08:04.230 INFO [MessageThread_3] c.c.r.example.simplest.Consumer - Receive message:topic=test_url,msgId=C0A84D05091058644D4664E1427C0000

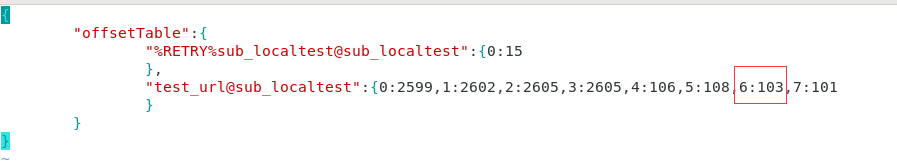

The message has been consumed successfully. Now let's look at the contents of the consumerOffset.json file again:

In the previous screenshot, the consumption progress of Queue 6 was 102. It is now 103, because one message has been consumed successfully.

Now let's dig deeper: how is the consumption progress obtained and maintained while messages are being consumed?

Where does consumption start?

When the Consumer calls

consumer.start();

to start up, the consumption progress is loaded as follows:

    // DefaultMQPushConsumerImpl.start() method
    if (this.defaultMQPushConsumer.getOffsetStore() != null) {
        // If an OffsetStore has already been set, use it directly
        this.offsetStore = this.defaultMQPushConsumer.getOffsetStore();
    } else {
        switch (this.defaultMQPushConsumer.getMessageModel()) {
            case BROADCASTING:
                this.offsetStore = new LocalFileOffsetStore(this.mQClientFactory, this.defaultMQPushConsumer.getConsumerGroup());
                break;
            case CLUSTERING:
                // In cluster mode, a RemoteBrokerOffsetStore is created, and the consumption progress is saved on the broker side
                this.offsetStore = new RemoteBrokerOffsetStore(this.mQClientFactory, this.defaultMQPushConsumer.getConsumerGroup());
                break;
            default:
                break;
        }
        this.defaultMQPushConsumer.setOffsetStore(this.offsetStore);
    }
    this.offsetStore.load(); // Load local progress

The offsetStore field above is declared with the interface type OffsetStore, which looks like this:

    public interface OffsetStore {
        void load() throws MQClientException;

        void updateOffset(final MessageQueue mq, final long offset, final boolean increaseOnly);

        long readOffset(final MessageQueue mq, final ReadOffsetType type);

        void persistAll(final Set<MessageQueue> mqs);

        void persist(final MessageQueue mq);

        void removeOffset(MessageQueue mq);

        Map<MessageQueue, Long> cloneOffsetTable(String topic);

        void updateConsumeOffsetToBroker(MessageQueue mq, long offset, boolean isOneway)
            throws RemotingException, MQBrokerException, InterruptedException, MQClientException;
    }

OffsetStore provides methods for working with the consumption progress, such as loading, reading and updating it. In cluster consumption mode, the consumption progress is not persisted on the Consumer side but saved on the remote Broker. This is why the RemoteBrokerOffsetStore class is used above:

    public class RemoteBrokerOffsetStore implements OffsetStore {
        private final static InternalLogger log = ClientLogger.getLog();
        private final MQClientInstance mQClientFactory; // Client instance
        private final String groupName; // Consumer group name
        private ConcurrentMap<MessageQueue, AtomicLong> offsetTable =
            new ConcurrentHashMap<MessageQueue, AtomicLong>(); // Consumption progress of each queue
    }

Because the consumption progress is saved on the broker side, the load method, which is meant to load local consumption progress, is empty in RemoteBrokerOffsetStore and does nothing. The consumption progress is actually read through the readOffset method.

So far, we know that the consumption progress is stored in the consumerOffset.json file on the broker side. Reading it through the readOffset method tells us where to start consuming.
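The role of the increaseOnly flag of updateOffset can be illustrated with a small in-memory table. This is a minimal sketch under stated assumptions, not RocketMQ's code: InMemoryOffsetTable is a hypothetical class, queues are identified by plain strings instead of MessageQueue objects, and returning -1 for an unknown queue is a choice made only for this illustration.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical, simplified stand-in for a client-side offset table.
class InMemoryOffsetTable {
    // Queue key (assumed to be a plain String here) -> cached progress.
    private final ConcurrentMap<String, AtomicLong> offsetTable = new ConcurrentHashMap<>();

    // Records the progress of a queue. When increaseOnly is true, a smaller
    // value never overwrites a larger value that is already cached.
    void updateOffset(String queueKey, long offset, boolean increaseOnly) {
        AtomicLong previous = offsetTable.putIfAbsent(queueKey, new AtomicLong(offset));
        if (previous == null) {
            return; // first value seen for this queue
        }
        if (increaseOnly) {
            previous.accumulateAndGet(offset, Math::max);
        } else {
            previous.set(offset);
        }
    }

    // Returns the cached progress, or -1 when nothing is known for the queue.
    long readOffset(String queueKey) {
        AtomicLong current = offsetTable.get(queueKey);
        return current == null ? -1L : current.get();
    }
}
```

With this sketch, updating a queue to 102 and then to 100 with increaseOnly set leaves the cached value at 102, while the same update without the flag moves it back to 100.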

A brief introduction to load balancing

Before looking more closely at the readOffset method that reads the consumption progress, you need a basic idea of when this method is called.

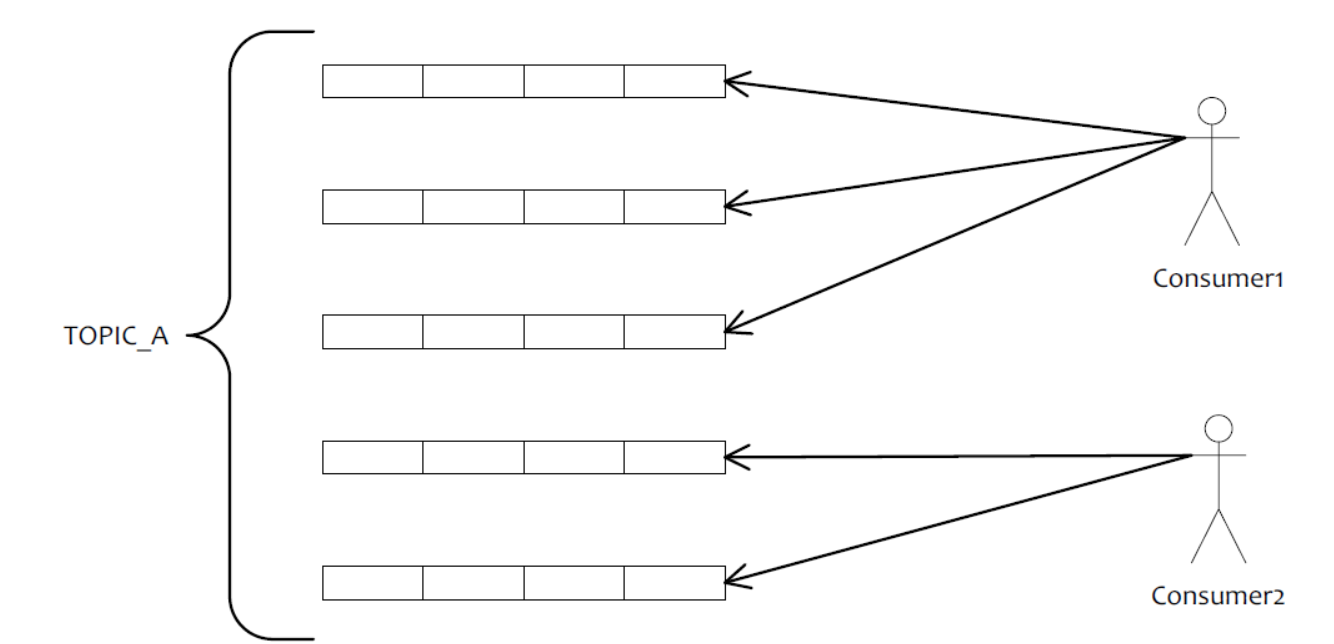

At the beginning of this article, we listed the modules involved when a Consumer consumes messages, and load balancing was one of them. A topic has multiple consumption queues, and several consumers in the same group may subscribe to the topic, so the queues have to be allocated to the consumers of that group according to some policy, such as the following average allocation:

In the figure above, topic UA has 5 queues and 2 consumers have subscribed. If the queues are distributed evenly, Consumer1 consumes three queues and Consumer2 consumes two. This is load balancing.
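The even split described above can be sketched in a few lines. This is only an illustration: AverageAllocation and allocate are hypothetical names, queues are represented by their ids, and RocketMQ's own allocation strategy may differ in detail.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of an "average" allocation policy.
class AverageAllocation {
    // Returns the queue ids assigned to the consumer at position consumerIndex
    // when queueCount queues are split as evenly as possible among
    // consumerCount consumers. Earlier consumers receive the extra queues.
    static List<Integer> allocate(int queueCount, int consumerCount, int consumerIndex) {
        List<Integer> result = new ArrayList<>();
        if (queueCount <= 0 || consumerCount <= 0
                || consumerIndex < 0 || consumerIndex >= consumerCount) {
            return result; // nothing to allocate
        }
        int base = queueCount / consumerCount;      // queues every consumer gets
        int remainder = queueCount % consumerCount; // queues left over
        int size = base + (consumerIndex < remainder ? 1 : 0);
        int start = consumerIndex * base + Math.min(consumerIndex, remainder);
        for (int i = 0; i < size; i++) {
            result.add(start + i);
        }
        return result;
    }
}
```

For 5 queues and 2 consumers, allocate(5, 2, 0) yields queues 0, 1 and 2, and allocate(5, 2, 1) yields queues 3 and 4, which matches the three-and-two split in the text.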

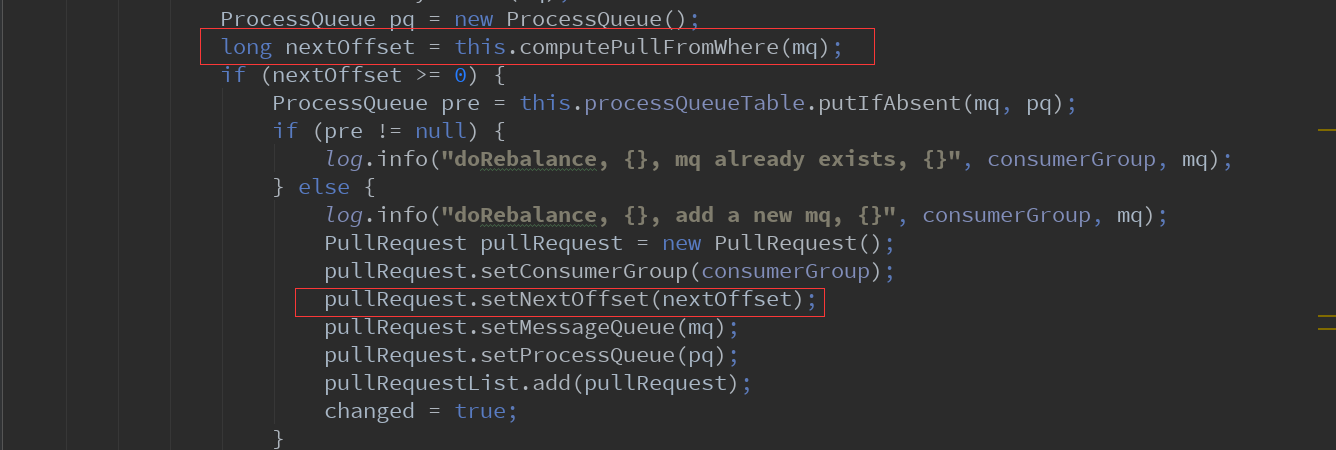

When a Consumer is assigned several message queues, a corresponding message processing queue (ProcessQueue, used when consuming messages) is created for each of them, and a pull request (PullRequest, used when requesting messages) is generated. This request is not itself a real request to fetch messages from the broker. It is placed in a blocking queue, and the dedicated service thread that pulls messages then reads it, assembles the actual pull request and sends it to the broker (the benefit, of course, is asynchrony). The next consumption progress is temporarily stored in this PullRequest.

A PullRequest is generated only here. All subsequent pulls for that queue under the consumerGroup reuse the same PullRequest and only update nextOffset and other parameters. The PullRequest class is as follows:

    public class PullRequest {
        private String consumerGroup; // Consumer group
        private MessageQueue messageQueue; // Message queue
        private ProcessQueue processQueue; // Message processing queue
        private long nextOffset; // Next offset to pull from
        private boolean lockedFirst = false; // Whether the queue has been locked
    }

Calculating the consumption progress

When a PullRequest is generated, the Consumer calculates where to start consuming (computePullFromWhere). RocketMQ's default starting point is CONSUME_FROM_LAST_OFFSET, so the computePullFromWhere method enters this case:

    case CONSUME_FROM_LAST_OFFSET: {
        long lastOffset = offsetStore.readOffset(mq, ReadOffsetType.READ_FROM_STORE); // Read the offset
        if (lastOffset >= 0) {
            // Normal case: a stored offset exists
            result = lastOffset;
        }
        // First start, no offset
        else if (-1 == lastOffset) {
            // On first start the broker has no offset, and readOffset returns -1
            if (mq.getTopic().startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {
                result = 0L; // Retry topic
            } else {
                try {
                    result = this.mQClientFactory.getMQAdminImpl().maxOffset(mq); // Get the maximum offset of the message queue
                } catch (MQClientException e) {
                    result = -1;
                }
            }
        } else {
            result = -1; // On an exception readOffset returns -2, and -1 is returned here
        }
        break;
    }

This is where the readOffset method mentioned above is called.
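The three outcomes of this case can be condensed into a small pure function. The sketch below is an illustration only: PullFromWhereSketch and fromLastOffset are hypothetical names, and the queue's maximum offset is passed in as a parameter instead of being fetched from the broker, so the failure path of that lookup is not modelled.

```java
// Hypothetical condensation of the CONSUME_FROM_LAST_OFFSET decision.
class PullFromWhereSketch {
    // lastOffset:     value returned by readOffset
    //                 (>= 0 stored progress, -1 no progress yet, otherwise an error)
    // retryTopic:     whether the queue belongs to a retry topic
    // queueMaxOffset: current maximum offset of the queue (assumed to be known)
    static long fromLastOffset(long lastOffset, boolean retryTopic, long queueMaxOffset) {
        if (lastOffset >= 0) {
            return lastOffset;                       // resume from stored progress
        }
        if (lastOffset == -1) {
            return retryTopic ? 0L : queueMaxOffset; // first start
        }
        return -1L;                                  // readOffset failed
    }
}
```

In this sketch a group that starts for the first time on a normal topic begins at the queue's maximum offset, so messages that were already in the queue before that point are skipped, while a retry topic starts from 0.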

readOffset

The input parameters of the readOffset method are the currently assigned MessageQueue and the fixed value ReadOffsetType.READ_FROM_STORE, which means that the consumption progress of the MessageQueue is read from the remote Broker. We therefore enter the READ_FROM_STORE branch, as follows:

    case READ_FROM_STORE: {
        try {
            long brokerOffset = this.fetchConsumeOffsetFromBroker(mq); // Get the consumption progress from the broker
            AtomicLong offset = new AtomicLong(brokerOffset);
            this.updateOffset(mq, offset.get(), false); // Update the progress in RemoteBrokerOffsetStore.offsetTable
            return brokerOffset;
        }
        // No offset in broker
        catch (MQBrokerException e) {
            return -1;
        }
        // Other exceptions
        catch (Exception e) {
            log.warn("fetchConsumeOffsetFromBroker exception, " + mq, e);
            return -2;
        }
    }

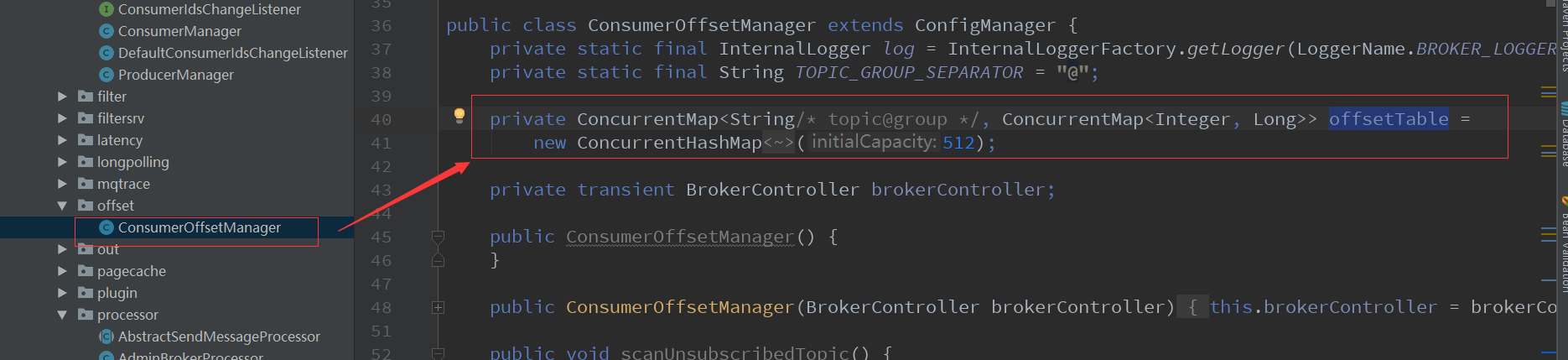

fetchConsumeOffsetFromBroker sends a request to the broker that hosts the MessageQueue to get the consumption progress. The previous article on the underlying communication already covered this, so we won't go into details here. On the broker side, the consumption progress is held in the ConsumerOffsetManager.

The key is topic@consumerGroup, and the value maps each queueId to its offset. The code that looks up the consumption progress is as follows:

    long offset = this.brokerController.getConsumerOffsetManager().queryOffset(
        requestHeader.getConsumerGroup(), requestHeader.getTopic(), requestHeader.getQueueId());

As the key shows, the consumption progress of a topic is kept per ConsumerGroup. The progress of the same MessageQueue under different ConsumerGroups does not affect each other, which is easy to understand.
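The nested mapping and its per-group isolation can be sketched as follows. BrokerOffsetTableSketch is a hypothetical class written for this article, not the ConsumerOffsetManager itself. The method names mirror the calls quoted in the text, but the bodies, and the choice to return -1 when no progress is stored, are assumptions.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical sketch of a broker-side table: topic@group -> (queueId -> offset).
class BrokerOffsetTableSketch {
    private final ConcurrentMap<String, ConcurrentMap<Integer, Long>> offsetTable =
        new ConcurrentHashMap<>();

    private static String key(String topic, String group) {
        return topic + "@" + group;
    }

    // Stores the progress of one queue for one consumer group.
    void commitOffset(String group, String topic, int queueId, long offset) {
        offsetTable
            .computeIfAbsent(key(topic, group), k -> new ConcurrentHashMap<>())
            .put(queueId, offset);
    }

    // Returns the stored progress, or -1 if the group has none for this queue.
    long queryOffset(String group, String topic, int queueId) {
        ConcurrentMap<Integer, Long> queues = offsetTable.get(key(topic, group));
        if (queues == null) {
            return -1L;
        }
        Long offset = queues.get(queueId);
        return offset == null ? -1L : offset;
    }
}
```

Committing offset 103 for queue 6 of test_url under one group leaves the same queue at -1 for any other group, because the group name is part of the key.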

Pulling messages and updating the consumption progress

So far, we know that the consumption progress of each MessageQueue is stored on the corresponding Broker. When the load balancing service rebalances each Topic, it creates a PullRequest and reads the consumption progress offset. It then puts the PullRequest into a PullRequest queue, from which the dedicated PullMessageService reads it and initiates the real pull request. Updating the consumption progress is closely tied to pulling messages, so this part also touches on how messages are pulled.

PullMessageService is a service thread dedicated to pulling messages. Its run method is as follows:

    @Override
    public void run() {
        log.info(this.getServiceName() + " service started");

        while (!this.isStopped()) {
            try {
                PullRequest pullRequest = this.pullRequestQueue.take(); // Take a PullRequest from the blocking queue
                this.pullMessage(pullRequest); // Pull messages
            } catch (InterruptedException ignored) {
            } catch (Exception e) {
                log.error("Pull Message Service Run Method exception", e);
            }
        }

        log.info(this.getServiceName() + " service end");
    }

First, a PullRequest is taken from the blocking queue, and then the pullMessage method is called to get messages. The call eventually reaches the pullMessage method of DefaultMQPushConsumerImpl. That method is long, and how messages are pulled is not the focus here, so we only show the last part, which sends the request, in order to understand its parameters.

    try {
        this.pullAPIWrapper.pullKernelImpl(
            pullRequest.getMessageQueue(), // Message queue
            subExpression, // Subscription expression, such as "tag a"
            subscriptionData.getExpressionType(), // Expression type, such as "TAG"
            subscriptionData.getSubVersion(), // Version number
            pullRequest.getNextOffset(), // Next offset to pull from
            this.defaultMQPushConsumer.getPullBatchSize(), // Number of messages pulled at a time, 32 by default
            sysFlag, // A set of flag bits, not relevant for now
            commitOffsetValue, // Consumption progress committed along with this pull
            BROKER_SUSPEND_MAX_TIME_MILLIS, // Hold time for long polling, 15 seconds by default
            CONSUMER_TIMEOUT_MILLIS_WHEN_SUSPEND, // Call timeout, 30 seconds by default
            CommunicationMode.ASYNC, // Asynchronous communication
            pullCallback // Callback
        );
    } catch (Exception e) {
        log.error("pullKernelImpl exception", e);
        this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_EXCEPTION);
    }

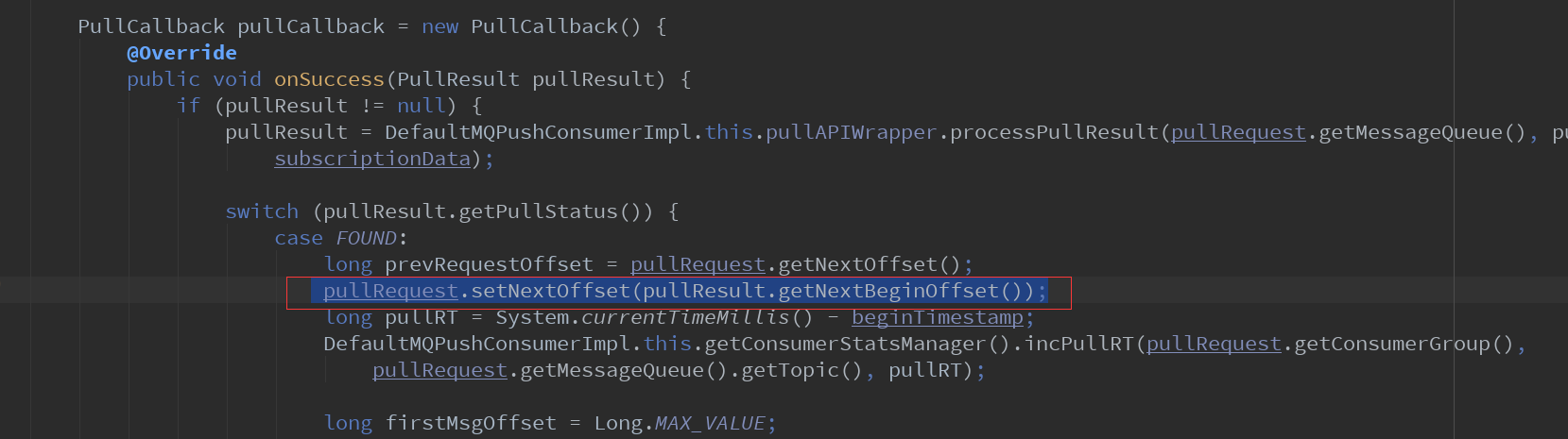

Updating the consumption progress on the Consumer side

Since the pull request is sent asynchronously and the response is handled in pullCallback, we can guess that the Consumer-side update of the consumption progress also happens there. Indeed, the next offset of the PullRequest is updated in pullCallback.
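The reuse of a single PullRequest, with the callback moving its next offset forward, can be sketched as below. This is not the code of DefaultMQPushConsumerImpl: PullLoopSketch, Request and Callback are hypothetical names, the "broker" is faked by assuming every pull returns exactly batchSize messages, and the callback runs synchronously so that the example stays deterministic.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch: one reusable request per queue, advanced by a callback.
class PullLoopSketch {
    static class Request {
        final String queueName;
        long nextOffset;

        Request(String queueName, long nextOffset) {
            this.queueName = queueName;
            this.nextOffset = nextOffset;
        }
    }

    interface Callback {
        void onSuccess(long nextBeginOffset);
    }

    private final BlockingQueue<Request> requests = new LinkedBlockingQueue<>();

    void submit(Request request) {
        requests.add(request);
    }

    // Fake broker call: pretends batchSize messages were returned and reports
    // the offset at which the following pull should begin.
    private void pull(Request request, int batchSize, Callback callback) {
        callback.onSuccess(request.nextOffset + batchSize);
    }

    // Handles one queued request; returns false when the queue is empty.
    boolean pullOnce(int batchSize) {
        Request request = requests.poll();
        if (request == null) {
            return false;
        }
        pull(request, batchSize, nextBeginOffset -> {
            request.nextOffset = nextBeginOffset; // progress kept in the request
            requests.add(request);                // same object is queued again
        });
        return true;
    }
}
```

Submitting a request that starts at offset 102 and calling pullOnce(1) twice leaves its nextOffset at 104, and the same Request object is back in the queue for the next round.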

Updating the consumption progress on the Broker side

Since message processing is not the focus here, we will not go deep into how the Broker handles a pull request. We only need to know that after the Broker has looked up the messages, it calculates nextBeginOffset, which is the next consumption progress, and returns it to the Consumer so that the Consumer can update its progress, as shown below:

nextBeginOffset = offset + (i / ConsumeQueue.CQ_STORE_UNIT_SIZE);
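The division converts bytes scanned in the ConsumeQueue back into a number of entries. A minimal worked sketch, assuming i is the number of bytes the broker has scanned and using the fixed 20-byte entry size mentioned earlier (NextBeginOffsetSketch is a hypothetical helper):

```java
// Hypothetical helper reproducing the arithmetic of the formula above.
class NextBeginOffsetSketch {
    // Size of one ConsumeQueue entry in bytes, as stated earlier in the article.
    static final int CQ_STORE_UNIT_SIZE = 20;

    // offset:       logical offset at which the pull started
    // bytesScanned: number of ConsumeQueue bytes covered by this pull
    static long nextBeginOffset(long offset, int bytesScanned) {
        return offset + (bytesScanned / CQ_STORE_UNIT_SIZE);
    }
}
```

In the experiment above, one entry (20 bytes) was read starting at offset 102, so the next begin offset is 102 + 20 / 20 = 103. A full batch of 32 entries (640 bytes) from the same starting point would give 134.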

In addition, after getting the messages, the broker updates the consumption progress as follows:

    boolean storeOffsetEnable = brokerAllowSuspend; // brokerAllowSuspend is true by default; if there is no message, the request is held
    storeOffsetEnable = storeOffsetEnable && hasCommitOffsetFlag; // hasCommitOffsetFlag is set when the progress may be committed while pulling messages
    storeOffsetEnable = storeOffsetEnable
        && this.brokerController.getMessageStoreConfig().getBrokerRole() != BrokerRole.SLAVE; // So this is true by default on the Broker master node
    if (storeOffsetEnable) {
        this.brokerController.getConsumerOffsetManager().commitOffset(RemotingHelper.parseChannelRemoteAddr(channel),
            requestHeader.getConsumerGroup(), requestHeader.getTopic(), requestHeader.getQueueId(),
            requestHeader.getCommitOffset()); // Save the consumption progress into offsetTable
    }

Persisting the consumption progress

When the Broker updates the consumption progress, it only updates the in-memory offsetTable, not the consumerOffset.json file. During broker initialization, a scheduled task is started that periodically saves offsetTable to the consumerOffset.json file, as shown below:

    this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
        @Override
        public void run() {
            try {
                BrokerController.this.consumerOffsetManager.persist(); // Save to file
            } catch (Throwable e) {
                log.error("schedule persist consumerOffset error.", e);
            }
        }
    }, 1000 * 10, this.brokerConfig.getFlushConsumerOffsetInterval(), TimeUnit.MILLISECONDS);

flushConsumerOffsetInterval is 5 s by default, which means the consumption progress is saved to the file every 5 seconds. When saving, the original file is first copied to consumerOffset.json.bak, and then the new content is written to consumerOffset.json.

At this point, the maintenance of the consumption progress is complete.
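The backup-then-replace step can be sketched with java.nio.file. This is an assumption-laden illustration rather than the broker's implementation: OffsetFilePersistSketch is a hypothetical class, and writing to a temporary .tmp file first is a design choice of this sketch so that a failed write cannot leave a half-written main file.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical sketch of "keep the old file as .bak, then store the new content".
class OffsetFilePersistSketch {
    static void persist(Path file, String json) throws IOException {
        Path tmp = file.resolveSibling(file.getFileName() + ".tmp");
        Path bak = file.resolveSibling(file.getFileName() + ".bak");

        // 1. Write the new content next to the target file.
        Files.write(tmp, json.getBytes(StandardCharsets.UTF_8));

        // 2. Keep the previous version, if there is one, as the backup.
        if (Files.exists(file)) {
            Files.copy(file, bak, StandardCopyOption.REPLACE_EXISTING);
        }

        // 3. Put the new content in place of the old file.
        Files.move(tmp, file, StandardCopyOption.REPLACE_EXISTING);
    }
}
```

Calling persist twice on the same path leaves the second content in the main file and the first content in the .bak file, so one previous version is always available if the latest file turns out to be damaged.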

Summary

For every Topic in RocketMQ, the consumption progress of each MessageQueue under each ConsumerGroup is stored in the consumerOffset.json file on the broker side. When the Consumer starts, a PullRequest is created, and at that point a request for the next consumption progress is sent to the broker. The broker reads the next consumption progress and returns it to the Consumer. A separate service thread on the Consumer then reads the PullRequest and pulls messages accordingly. After the broker has looked up the messages, it updates the consumption progress in memory. In addition, a separate scheduled task regularly saves the consumption progress to the file and backs up the original file.