01 MongoDB test program description

This post tests how efficiently MongoDB inserts data, using a secondary-development interface for MongoDB (a wrapper API) provided by a teacher.

The data structure is:

struct keyvalmeta {

char name[64];

int valType;

DateTime nStartTime;

DateTime nEndTime;

int nUserDefMetaSize;

char* pUserDefMetaDat;

};

The structure above is abbreviated and is provided for reference only.

Meaning of the structure's fields:

- valType represents the type of Value, which is an object in 3D space.

- nStartTime is the starting point of the valid time.

- nEndTime is the end point of the valid time.

- nUserDefMetaSize is the user-defined metadata length.

- pUserDefMetaDat is the user-defined metadata, for example in XML format.
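To make the field meanings concrete, here is a minimal sketch of how one record might be filled in. The post does not define DateTime, so it is assumed here to be an alias for std::time_t; the helper MakeRecord is hypothetical and is not part of the teacher's interface.

```cpp
#include <cassert>
#include <cstring>
#include <ctime>

typedef std::time_t DateTime;  // assumption: the post does not define DateTime

struct keyvalmeta {
    char     name[64];
    int      valType;
    DateTime nStartTime;
    DateTime nEndTime;
    int      nUserDefMetaSize;
    char*    pUserDefMetaDat;
};

// Hypothetical helper: sets every field so that nothing is left uninitialized.
// The record only borrows `meta`; the caller keeps ownership of that buffer.
keyvalmeta MakeRecord(const char* name, int valType,
                      DateTime start, DateTime end,
                      char* meta, int metaSize) {
    assert(name != nullptr && end >= start && metaSize >= 0);
    keyvalmeta kvm;
    std::memset(&kvm, 0, sizeof(kvm));
    // Copy at most 63 characters; the memset above guarantees the terminator.
    std::strncpy(kvm.name, name, sizeof(kvm.name) - 1);
    kvm.valType = valType;
    kvm.nStartTime = start;
    kvm.nEndTime = end;
    kvm.nUserDefMetaSize = metaSize;
    kvm.pUserDefMetaDat = meta;
    return kvm;
}
```

Filling the time fields and valType explicitly avoids passing indeterminate values to the insert call.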

The following is the test code. It first initializes the structure's data, then uses the clock() function (which returns processor time in clock ticks; dividing by CLOCKS_PER_SEC gives seconds) to record the start time start, inserts the data in a loop, and records the end time finish after the loop:

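// Requires <cstdio>, <cstring>, <ctime> and <iostream>.

CreateAndConnect(); // connect to MongoDB

InitOrOpenDb(); // initialize (or open) the database

OpenWorkspace(); // open a workspace

// initialize the data

const int nMaxSize = 32 * 1024 * 1024; // read buffer of 32 MB

char* pFileDat = new char[nMaxSize];

keyvalmeta kvm;

strcpy(kvm.name, "whu");

const int TESTNUM = 100000;

clock_t start, finish;

// read the file's data

FILE* fp = fopen("c:\\bin\\1.xml", "rb");

if (fp == NULL) { cerr << "cannot open 1.xml" << endl; return 1; } // assumes this code runs inside main()

int nFileSize = (int)fread(pFileDat, 1, nMaxSize, fp);

fclose(fp);

kvm.nUserDefMetaSize = nFileSize;

kvm.pUserDefMetaDat = new char[kvm.nUserDefMetaSize];

memcpy(kvm.pUserDefMetaDat, pFileDat, nFileSize); // copy the file contents into the record

// start the test

start = clock();

for (int i = 0; i < TESTNUM; i++)

{

InsertKeyVal(kvm); // insert the record

}

finish = clock(); // stop timing before cleanup

cout << "time:" << (double)(finish - start) / CLOCKS_PER_SEC << "S" << endl;

cout << "insert finished" << endl;

delete [] kvm.pUserDefMetaDat;

delete [] pFileDat;

getchar();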

The program has two variables: the number of loop iterations, TESTNUM, and the size of the file 1.xml that is read in.
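One caveat about the timing: clock() reports processor time on POSIX systems, whereas Microsoft's C runtime returns elapsed wall-clock time, so the same test can report different things on different platforms. As a hedged alternative, the loop can be timed with std::chrono::steady_clock, which measures elapsed time everywhere. In this sketch, TimeInserts is a hypothetical helper and insertOne is a placeholder for the post's InsertKeyVal(kvm) call.

```cpp
#include <cassert>
#include <chrono>

// Calls insertOne() n times and returns the elapsed wall-clock time in seconds.
// insertOne is a placeholder for whatever performs a single insert.
template <typename InsertFn>
double TimeInserts(int n, InsertFn insertOne) {
    assert(n >= 0);
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < n; ++i) {
        insertOne();
    }
    const auto finish = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(finish - start).count();
}
```

With the variables from the test code in scope, a call could look like TimeInserts(TESTNUM, [&] { InsertKeyVal(kvm); }).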

02 Data structure analysis

Before testing, here are a few general precautions:

- Prefer a desktop computer for the test, because it is more stable (heat dissipation usually has a large impact); a laptop is not recommended.

- Some servers and ordinary desktops still run 32-bit systems. Note that on a 32-bit system MongoDB can store only a little over 1 GB, so take care when inserting a large amount of data. A 64-bit system can store up to 2^32 times as much as a 32-bit system, about 4 EB (1 EB = 1024 PB, 1 PB = 1024 TB, 1 TB = 1024 GB).
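The capacity arithmetic in the second precaution can be checked with 64-bit integers. This is only a sketch of the calculation: the 1 GB starting point is the post's own figure, and ScaleTo64Bit is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstdint>

// Binary units, as in the text: 1 GB = 2^30 bytes, 1 EB = 2^60 bytes.
const std::uint64_t kGB = 1ULL << 30;
const std::uint64_t kEB = 1ULL << 60;

// Multiplies a 32-bit-system limit (in bytes) by the 2^32 factor from the text.
std::uint64_t ScaleTo64Bit(std::uint64_t limit32Bytes) {
    assert(limit32Bytes < (1ULL << 32));  // larger inputs would overflow 64 bits
    return limit32Bytes << 32;
}
```

ScaleTo64Bit(kGB) is 2^62 bytes, which equals 4 * kEB and matches the "about 4 EB" in the text.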

At first I used a laptop to test performance, inserting 1,000,000 records with an XML file of 258 bytes.

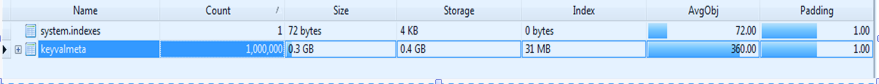

Viewing the collection in MongoVUE gives the result shown in the figure:

The UI shows only summary data. For the detailed figures, run db.keyvalmeta.stats() in the mongo shell.

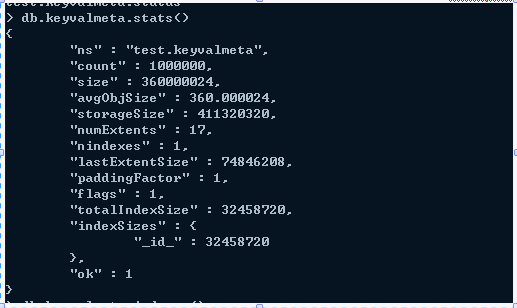

The output is as shown in the figure:

MongoDB storage analysis: each inserted record is 258 + 104 = 362 bytes, where 258 is the size of the inserted file and 104 is the size of the structure. The total size is therefore 362 * 1000000 = 362000000 bytes, which is almost the same as the 360000024 reported by the shell command. Storage utilization = (1 - (storageSize - size) / storageSize) * 100% = 87.6%, which is very high.
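The two calculations above can be written as small functions. This is a sketch of the arithmetic only; the function names are hypothetical, and because storageSize appears only in the screenshot, the values in the usage note are made up for illustration.

```cpp
#include <cassert>

// Total bytes for `records` inserts: (file bytes + structure bytes) per record.
long long TotalSize(long long fileBytes, long long structBytes, long long records) {
    return (fileBytes + structBytes) * records;
}

// Utilization in percent: (1 - (storageSize - size) / storageSize) * 100,
// which is the same as size / storageSize * 100.
double UtilizationPercent(double size, double storageSize) {
    assert(storageSize > 0.0 && size <= storageSize);
    return (1.0 - (storageSize - size) / storageSize) * 100.0;
}
```

TotalSize(258, 104, 1000000) gives 362000000, the figure in the text. With illustrative values, UtilizationPercent(350.0, 400.0) gives 87.5.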

03 Laptop test data

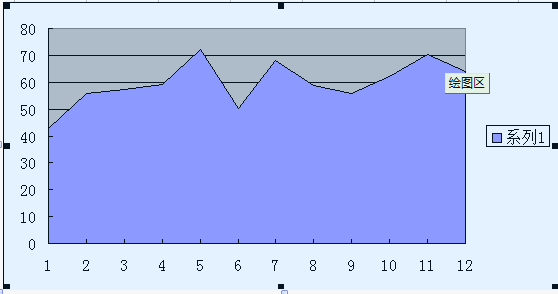

On a laptop with a 64-bit system, the elapsed times in seconds are listed below; they are not very stable from run to run.

29.54 50.3 42.66 55.96 57.48 59.26 72.19 89.75 67.92 58.82 55.83 61.96 70.2 64.12

After dropping the lowest value (29.54) and the highest value (89.75), the average time is 59.72 seconds, which is alarmingly slow: inserting 1,000,000 records takes about a minute.

The results fluctuate widely, so a laptop is not recommended for this test because of its poor stability.
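The trimmed average above (drop one minimum and one maximum, then average the rest) can be reproduced with a short function. TrimmedMean is a hypothetical helper name, and the sketch assumes C++11 or later.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Sorts a copy of the samples, drops the smallest and the largest value,
// and returns the mean of the remaining ones.
double TrimmedMean(std::vector<double> samples) {
    assert(samples.size() > 2);
    std::sort(samples.begin(), samples.end());
    const double sum =
        std::accumulate(samples.begin() + 1, samples.end() - 1, 0.0);
    return sum / static_cast<double>(samples.size() - 2);
}
```

Applied to the 14 timings listed above, the remaining 12 values sum to 716.70, so the result is 59.725, in line with the 59.72 quoted in the text.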

04 Server test data

The following results come from a desktop computer with a 32-bit system (the machine is a server with fast disk reads). The test method is the same as for the laptop above.

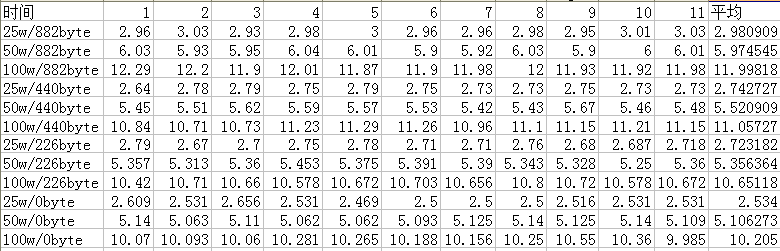

In A/B, A is the number of inserts and B is the file size; the values that follow are the total time spent inserting.

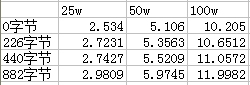

According to the above table, the following statistics can be obtained:

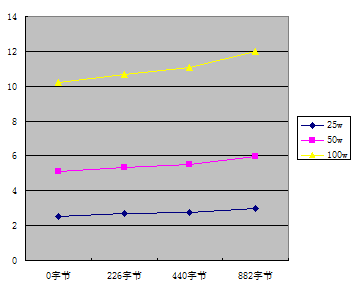

The following figure plots the time spent (vertical axis) against the file size (horizontal axis).

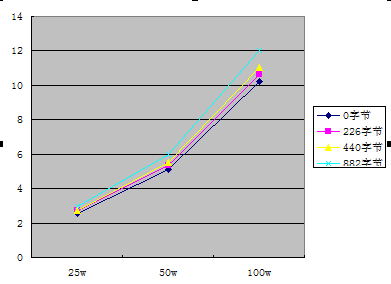

The following figure plots the time spent (vertical axis) against the number of inserted records (horizontal axis).

The charts show that insertion time varies relatively little with file size: the time grows with the file size, but the coefficient is small.

Insertion time is also directly proportional to the number of inserts, with a coefficient of about 2 (these results are easy to understand).
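The post does not say how the coefficient was obtained or in which units. One common way to estimate a proportionality coefficient from (number of inserts, time) pairs is a least-squares fit of a line through the origin, sketched below. SlopeThroughOrigin is a hypothetical helper, and the numbers in the usage note are synthetic, not the measurements from the table.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Least-squares slope k of the model y = k * x (a line through the origin):
// k = sum(x_i * y_i) / sum(x_i * x_i).
double SlopeThroughOrigin(const std::vector<double>& x,
                          const std::vector<double>& y) {
    assert(!x.empty() && x.size() == y.size());
    double sxy = 0.0;
    double sxx = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        sxy += x[i] * y[i];
        sxx += x[i] * x[i];
    }
    assert(sxx > 0.0);  // at least one x must be non-zero
    return sxy / sxx;
}
```

For the synthetic points x = {1, 2, 3} and y = {2, 4, 6}, the function returns 2.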

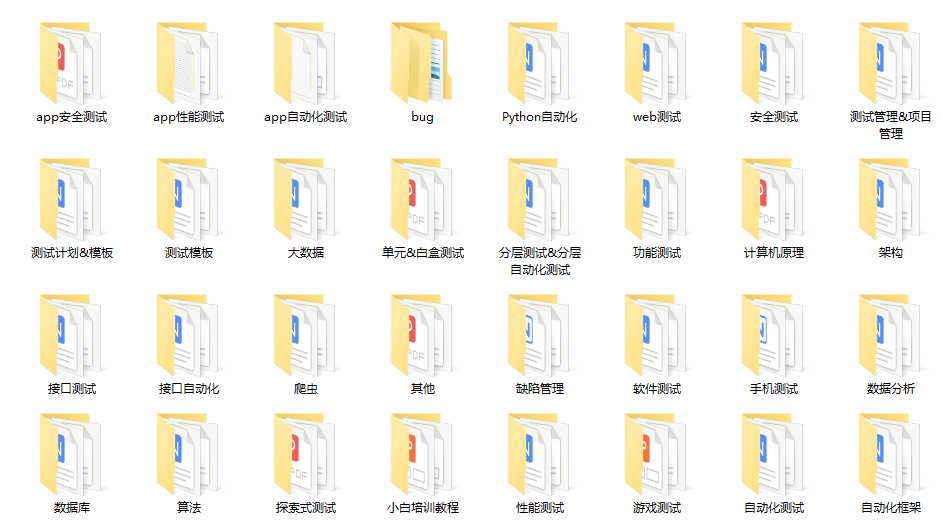
