Tags (space delimited): kubernetes series

- 1: What is Helm
- 2: Helm deployment

1: What is Helm

Before Helm, deploying an application to Kubernetes meant creating each Deployment, Service, and other resource by hand, which is cumbersome. As more projects move to microservices, deploying and managing complex containerized applications becomes harder still. Helm packages an application and supports release version management and control, which greatly simplifies deploying and managing Kubernetes applications.

In essence, Helm makes Kubernetes application management (Deployment, Service, and so on) configurable and dynamically generated: it renders the resource manifest files (deployment.yaml, service.yaml) from templates and then deploys those resources to the cluster automatically. Helm is a package manager for Kubernetes, comparable to YUM, and it also encapsulates the deployment workflow.

Helm has two important concepts: chart and release.

- A chart is the collection of information needed to create an application: configuration templates for the various Kubernetes objects, parameter definitions, dependencies, documentation, and so on. A chart is the self-contained logical unit of application deployment; think of it as the equivalent of a software package in apt or yum.
- A release is a running instance of a chart and represents a running application. Installing a chart into a Kubernetes cluster creates a release. The same chart can be installed into one cluster multiple times, and each installation is a separate release.

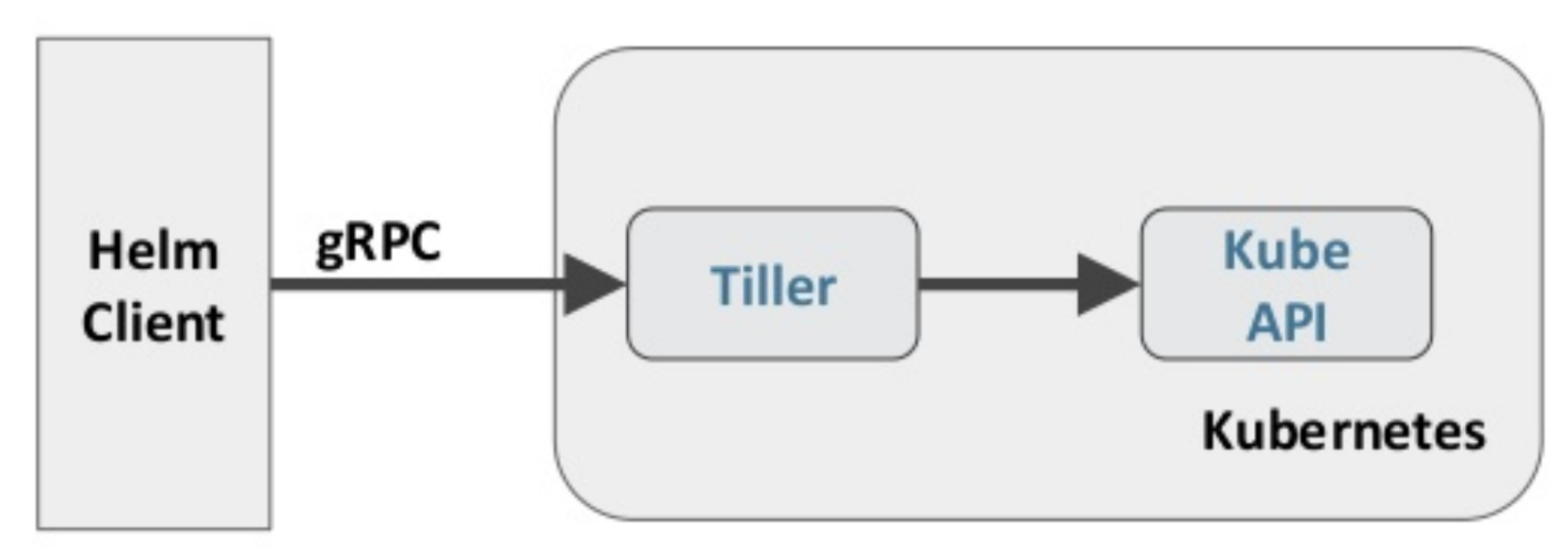

Helm contains two components: a Helm client and a Tiller server, as shown in the following figure

The Helm client is responsible for creating and managing charts and releases and for talking to Tiller. The Tiller server runs inside the Kubernetes cluster; it handles requests from the Helm client and interacts with the Kubernetes API server.

2: Helm deployment

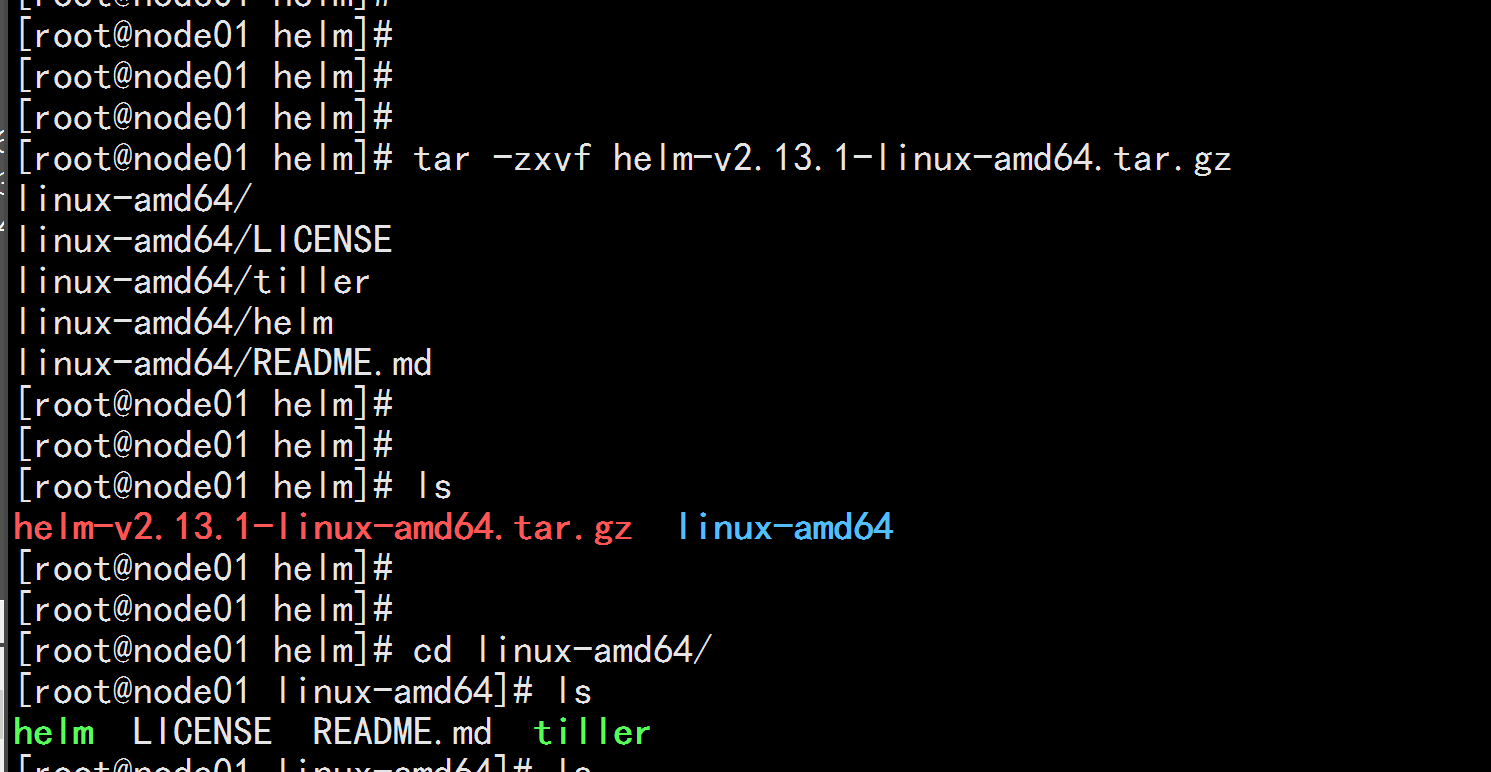

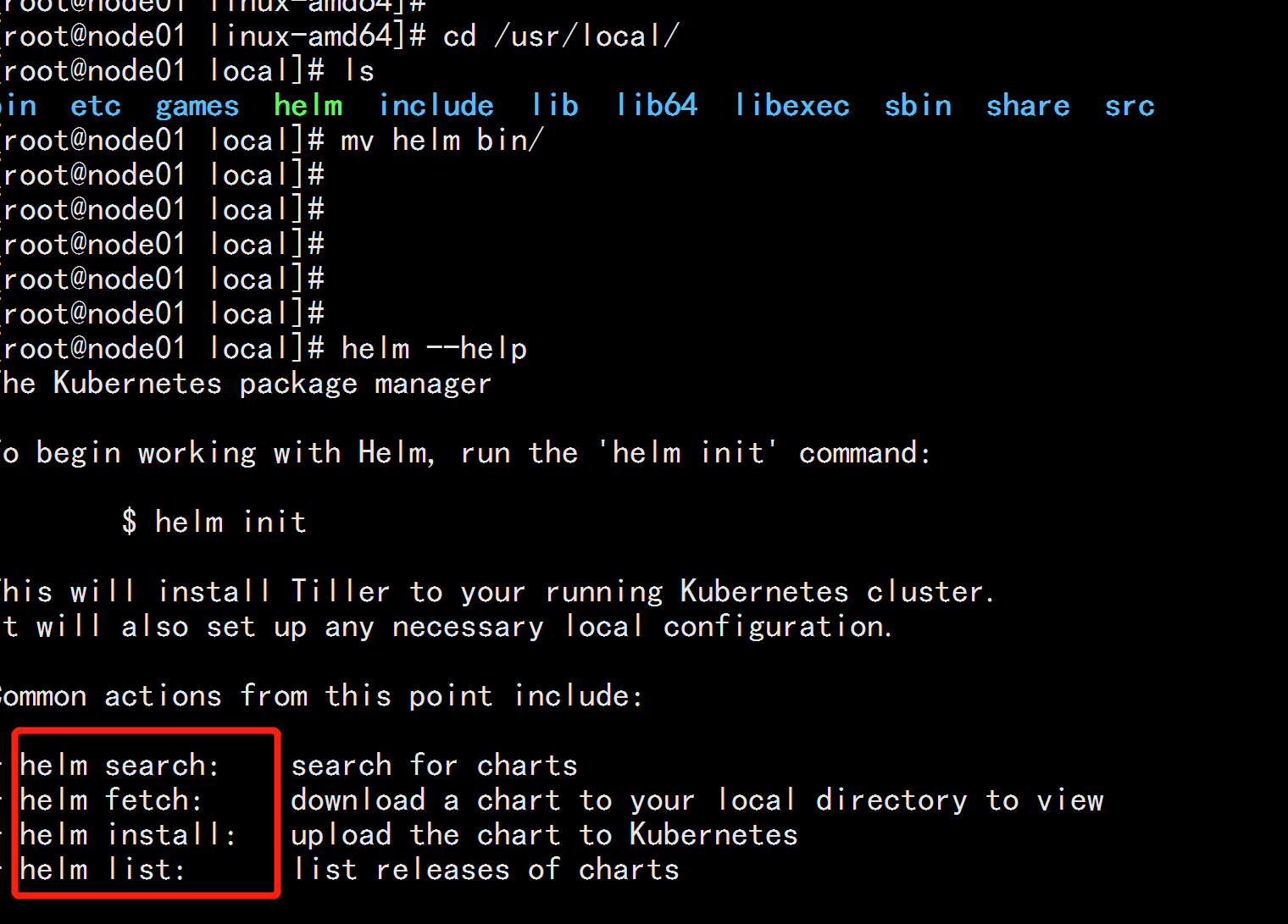

More and more companies and teams are adopting Helm, the package manager for Kubernetes, and we will also use it to install commonly used Kubernetes components. Helm consists of the client-side helm command-line tool and the server-side Tiller, and installing it is simple. Download the helm command-line tool to /usr/local/bin on the master node node1; version 2.13.1 is used here:

    wget https://storage.googleapis.com/kubernetes-helm/helm-v2.13.1-linux-amd64.tar.gz
    tar -zxvf helm-v2.13.1-linux-amd64.tar.gz
    cd linux-amd64/
    cp -p helm /usr/local/bin/
    chmod a+x /usr/local/bin/helm

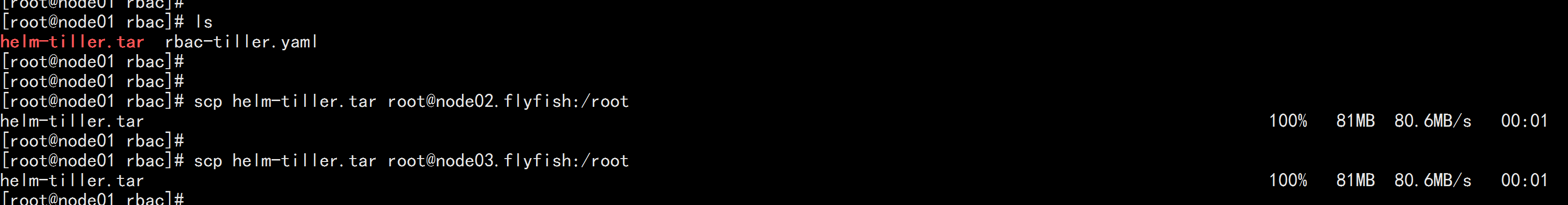

To install the server-side Tiller, kubectl and a kubeconfig file must also be set up on this machine so that kubectl can reach the API server and work normally. On node1 here, kubectl is already configured.

Because the Kubernetes API server has RBAC access control enabled, Tiller needs its own service account, tiller, bound to a suitable role. See Role-based Access Control in the Helm documentation for details. For simplicity, the built-in cluster-admin ClusterRole is assigned to it directly here. Create the rbac-tiller.yaml file:

vim rbac-tiller.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: tiller
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: tiller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
      - kind: ServiceAccount
        name: tiller
        namespace: kube-system

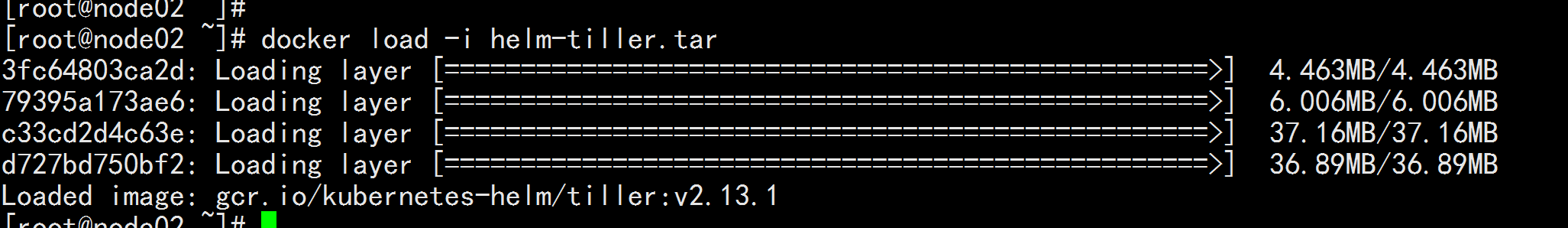

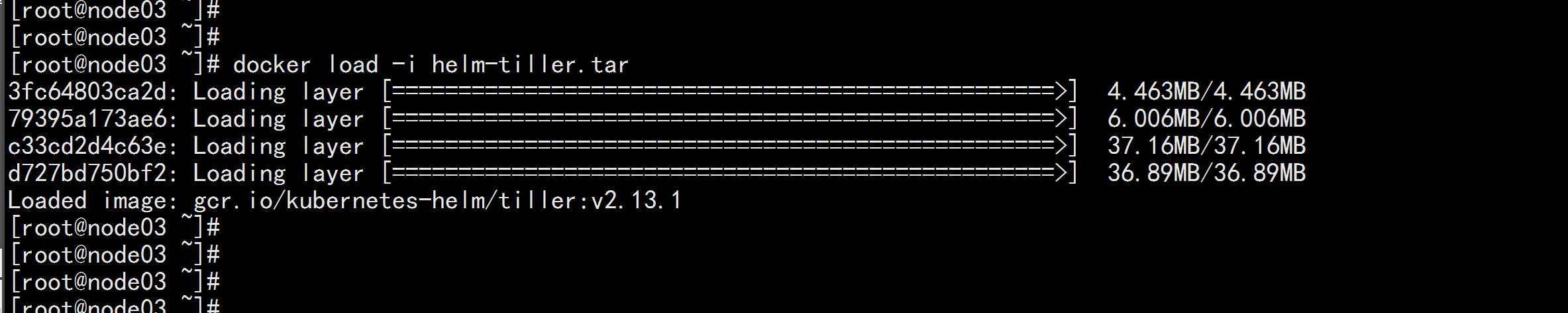

Upload the helm-tiller image archive to all nodes and load it on each one:

docker load -i helm-tiller.tar

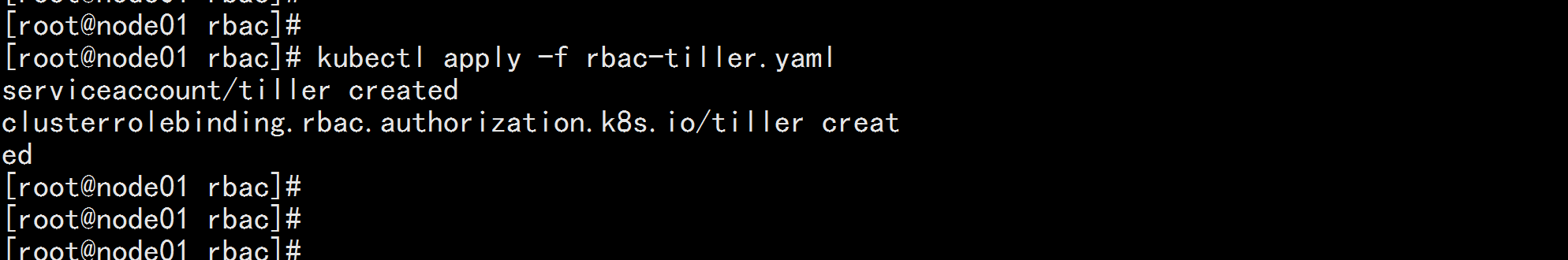

kubectl apply -f rbac-tiller.yaml

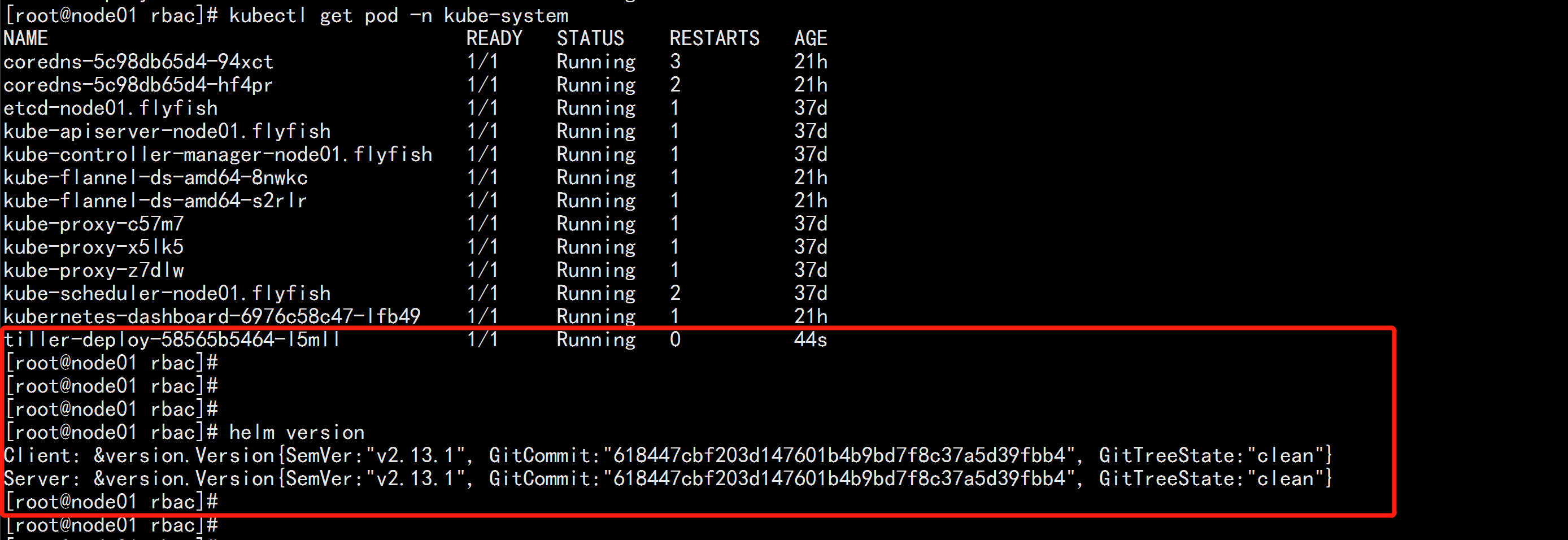

helm init --service-account tiller --skip-refresh

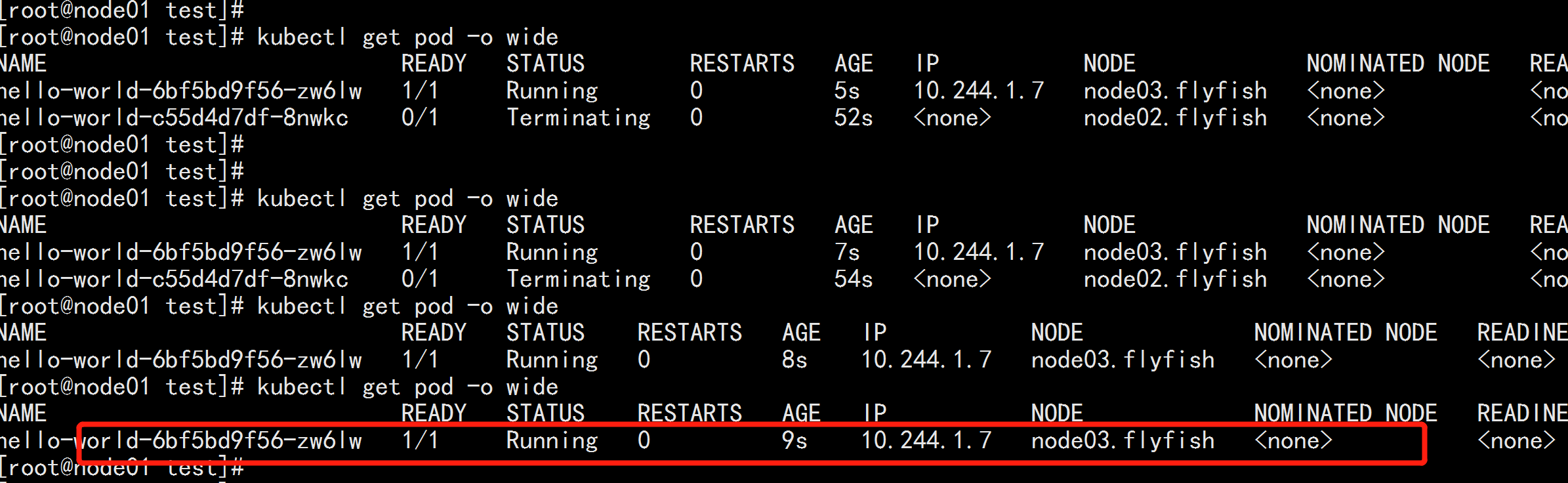

kubectl get pod -n kube-system

helm version
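Tiller may need a moment to start after `helm init`, so `helm version` can fail if it is run too early. The sketch below is one way to wait for it, assuming `helm init` created its default Deployment `tiller-deploy` in `kube-system`. The function name `wait_for_tiller` is illustrative and not part of Helm or kubectl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until the Tiller Deployment has rolled out,
# then print the client and server versions.
# It needs kubectl access to the cluster, so it is only defined here and
# has to be called explicitly on a node where kubectl is configured.
wait_for_tiller() {
  kubectl -n kube-system rollout status deployment/tiller-deploy \
    && helm version
}
```

On node1 this would be run as `wait_for_tiller` right after `helm init`.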

Custom template for helm

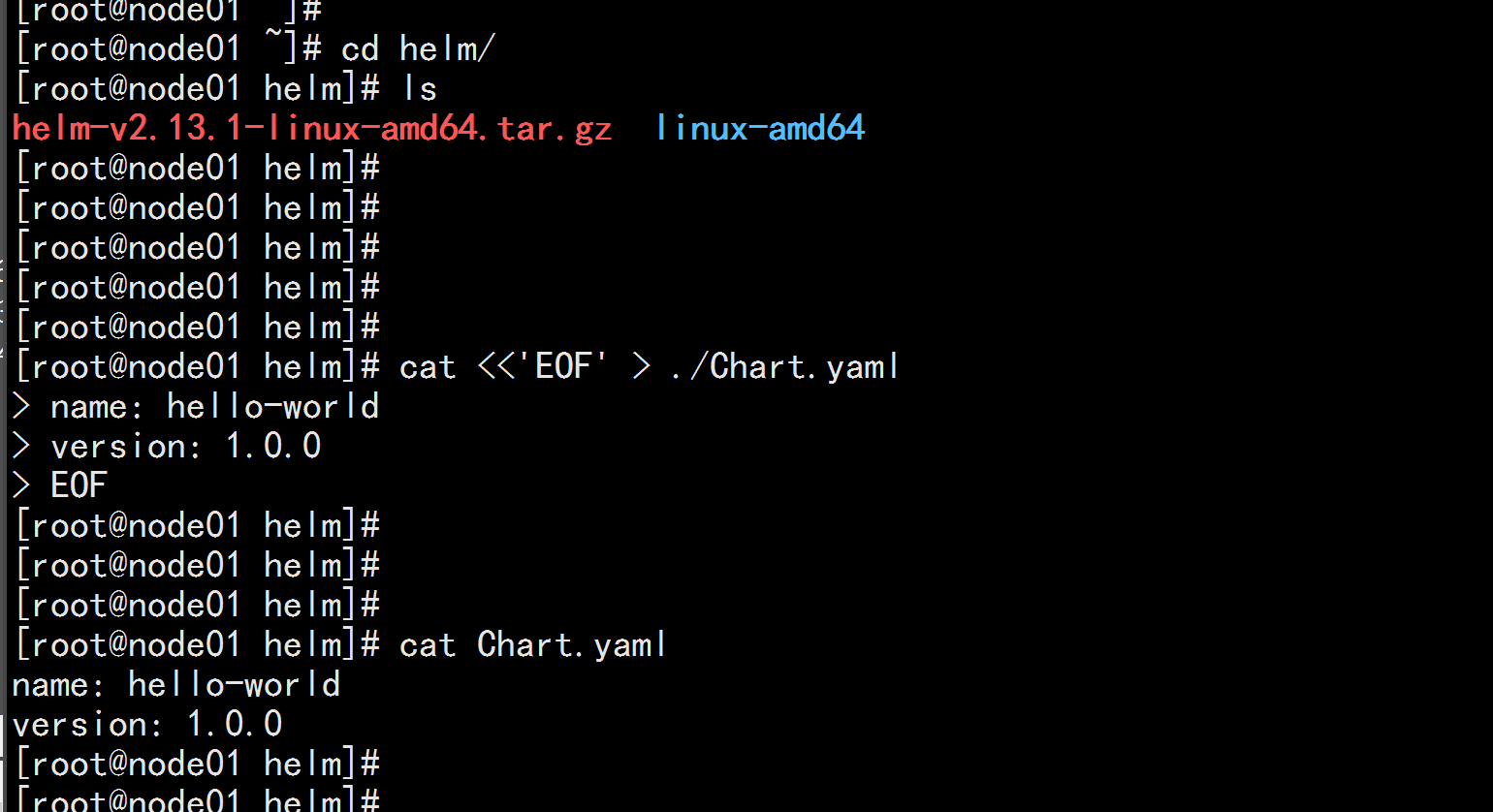

Create the self-describing file Chart.yaml; it must define name and version:

    cat <<'EOF' > ./Chart.yaml
    name: hello-world
    version: 1.0.0
    EOF
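Because Chart.yaml must define both name and version, a quick check before installing can catch a typo early. The following is a minimal sketch in plain shell; the function name `check_chart_yaml` and the temporary directory are assumptions for illustration, and the check only greps for top-level keys rather than parsing YAML.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if the given Chart.yaml has non-empty
# top-level "name:" and "version:" keys. Grep-based, not a YAML parser.
check_chart_yaml() {
  local file="$1"
  [ -f "$file" ] || { echo "missing: $file" >&2; return 1; }
  grep -Eq '^name:[[:space:]]*[^[:space:]]' "$file" \
    || { echo "no name in $file" >&2; return 1; }
  grep -Eq '^version:[[:space:]]*[^[:space:]]' "$file" \
    || { echo "no version in $file" >&2; return 1; }
}

# Demo in a throwaway directory with the same two fields as the chart above.
demo_dir="$(mktemp -d)"
cat <<'EOF' > "$demo_dir/Chart.yaml"
name: hello-world
version: 1.0.0
EOF

if check_chart_yaml "$demo_dir/Chart.yaml"; then
  chart_check="ok"
else
  chart_check="invalid"
fi
echo "Chart.yaml check: $chart_check"
```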

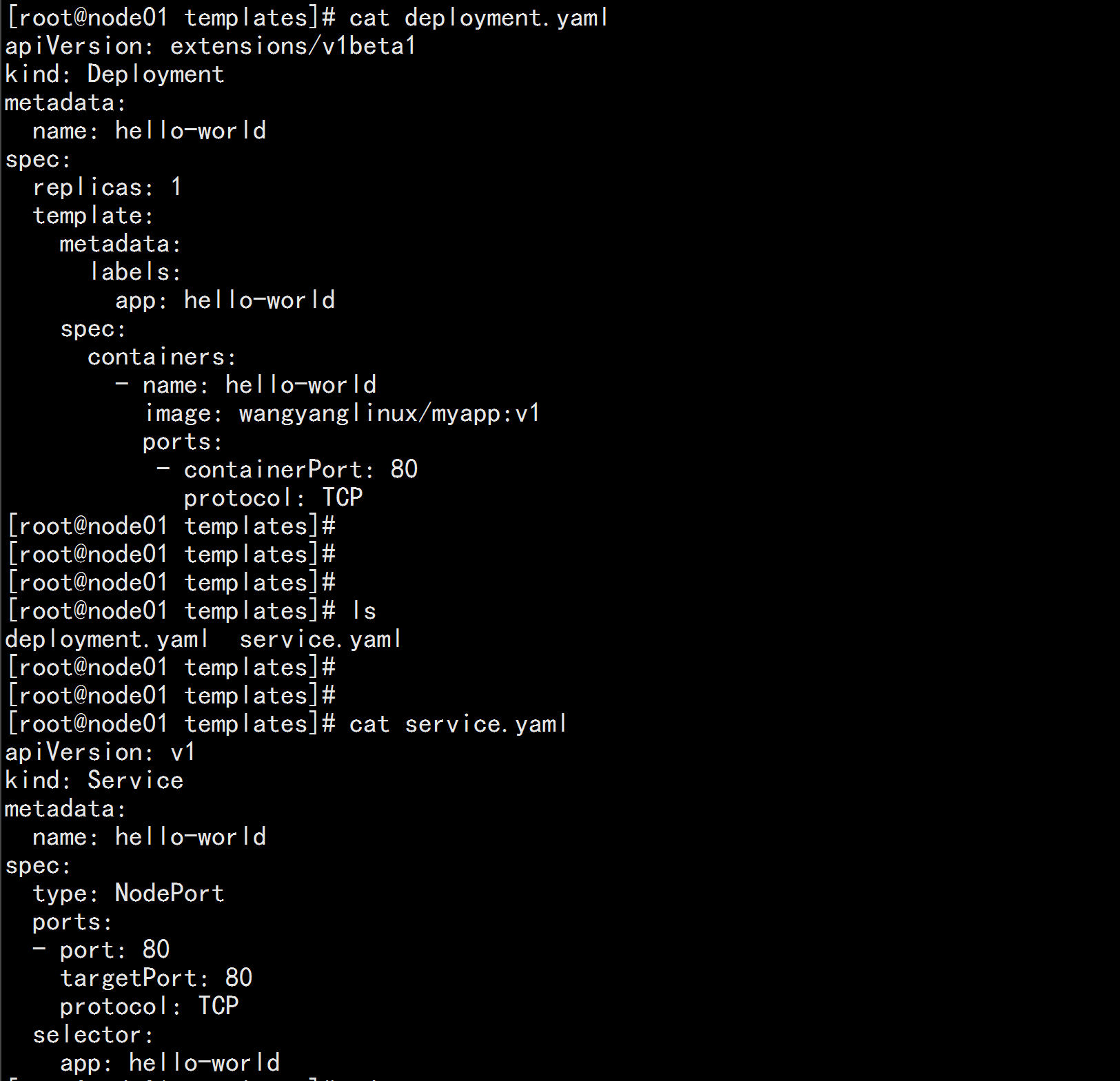

Create the template files used to generate the Kubernetes resource manifests:

    cat <<'EOF' > ./templates/deployment.yaml
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: hello-world
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: hello-world
        spec:
          containers:
            - name: hello-world
              image: wangyanglinux/myapp:v1
              ports:
                - containerPort: 80
                  protocol: TCP
    EOF

    cat <<'EOF' > ./templates/service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: hello-world
    spec:
      type: NodePort
      ports:
        - port: 80
          targetPort: 80
          protocol: TCP
      selector:
        app: hello-world
    EOF
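The `cat` commands above redirect into ./templates/, which the shell does not create on its own, so the directory has to exist first. The sketch below shows the layout this section ends up with. It works in a throwaway directory (an assumption, so nothing real is touched) and uses empty placeholder files instead of the actual contents.

```shell
#!/usr/bin/env bash
# Assumed location: a temporary directory, so the sketch never touches a real chart.
chart_root="$(mktemp -d)/hello-world"

# templates/ must exist before any "cat > ./templates/..." redirect can succeed.
mkdir -p "$chart_root/templates"

# Empty placeholders standing in for the three files written in this section.
: > "$chart_root/Chart.yaml"
: > "$chart_root/templates/deployment.yaml"
: > "$chart_root/templates/service.yaml"

# Print the resulting layout relative to the chart root.
chart_layout="$(cd "$chart_root" && find . -type f | LC_ALL=C sort)"
echo "$chart_layout"
```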

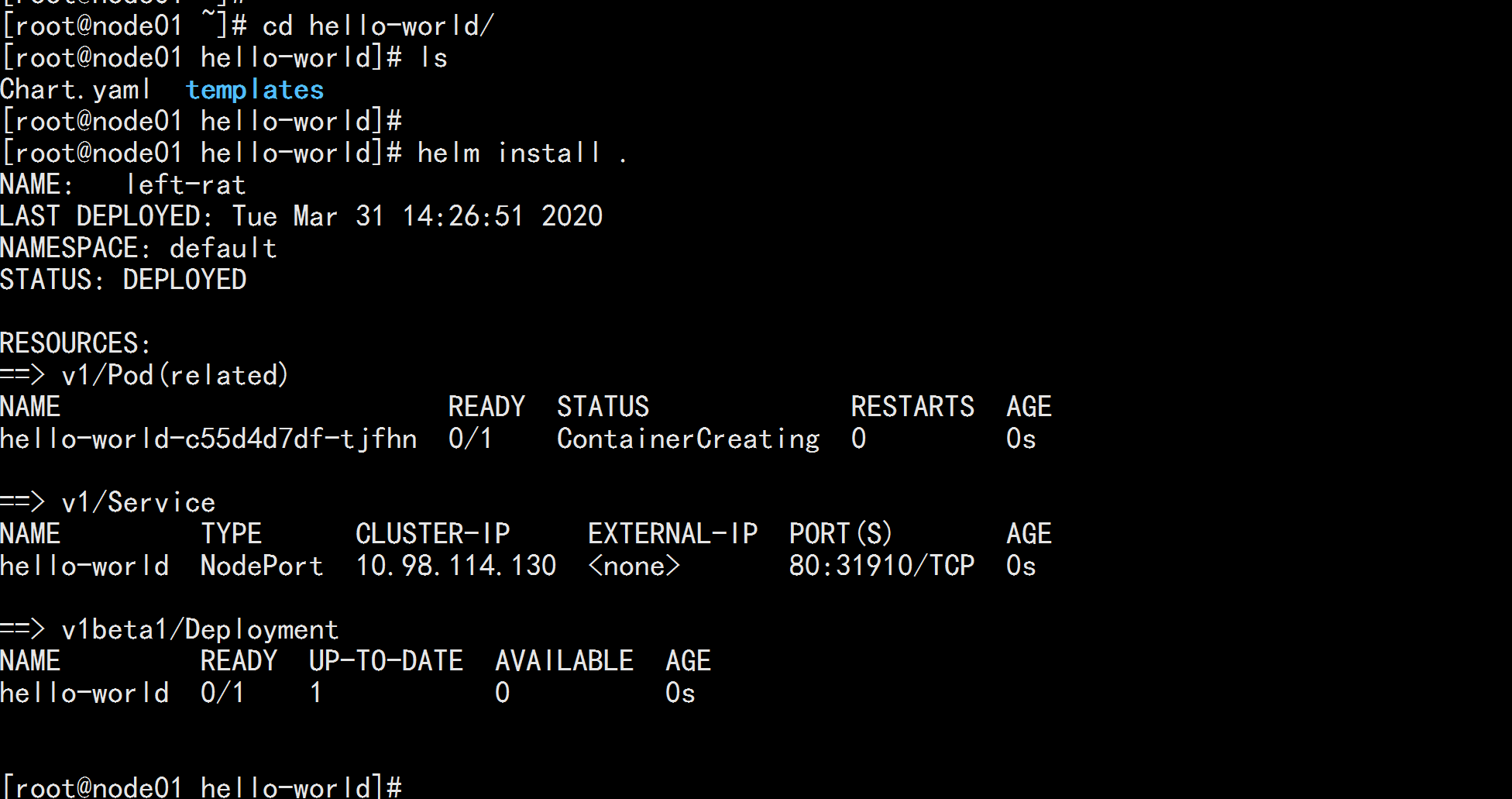

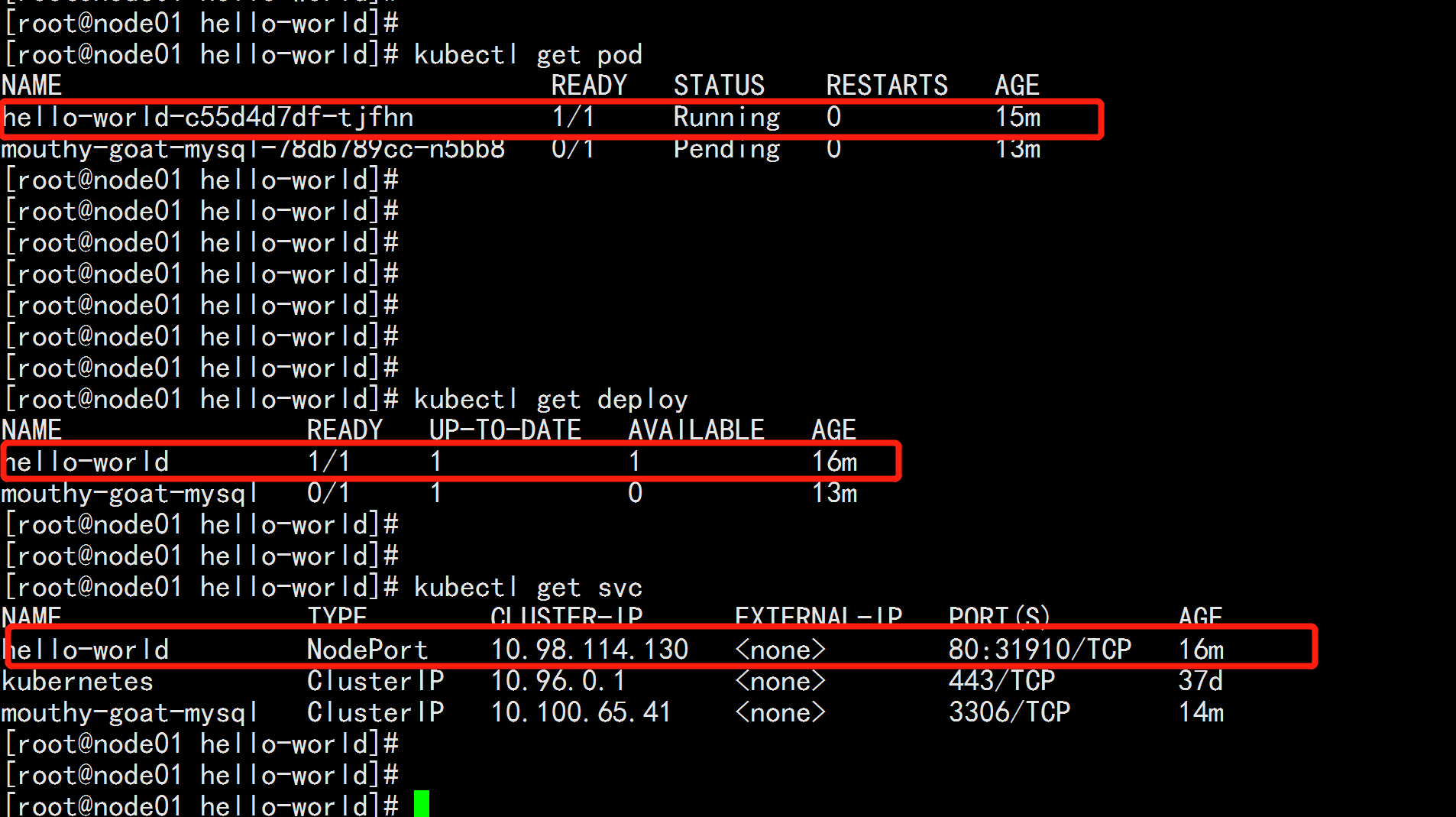

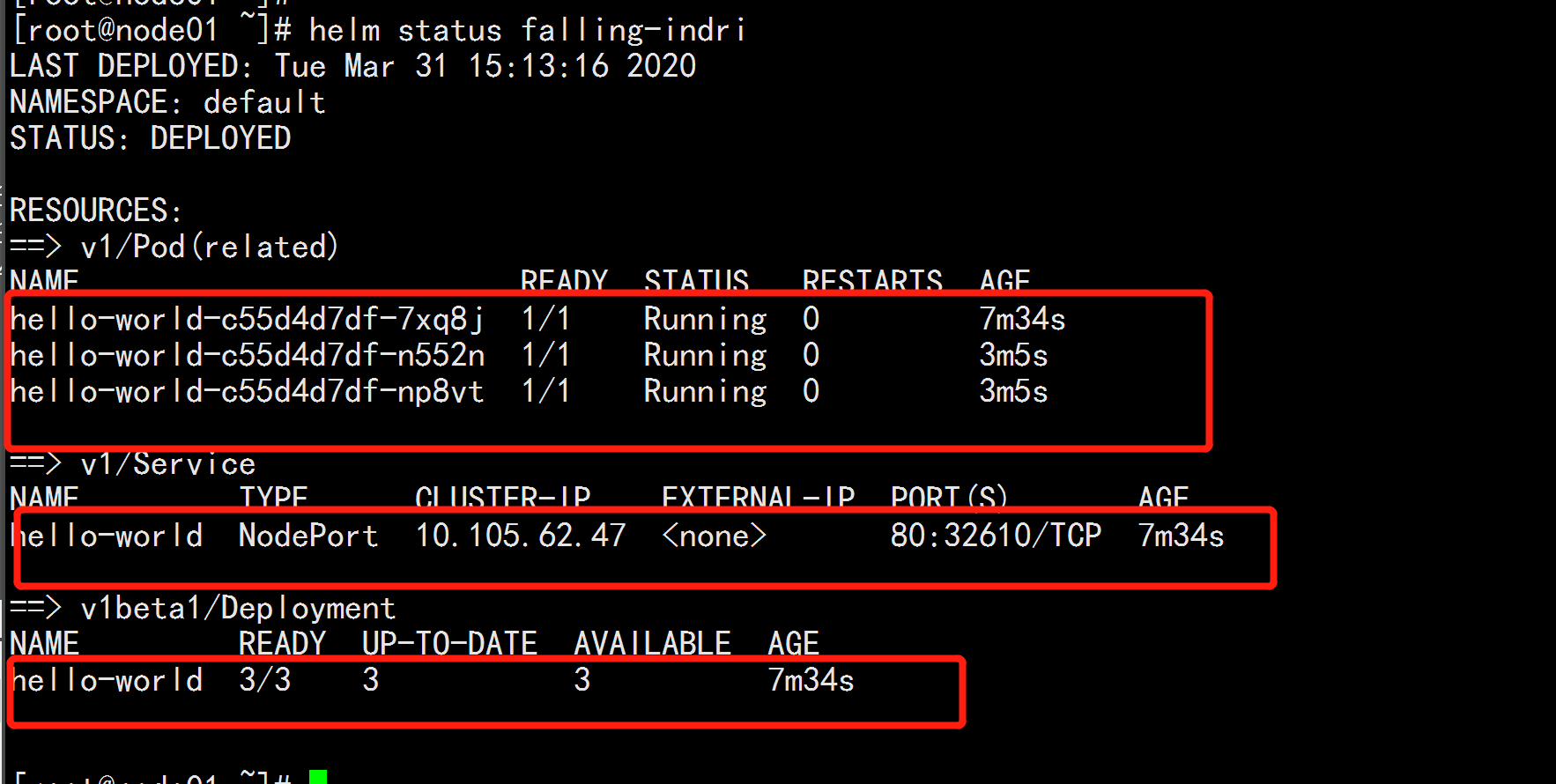

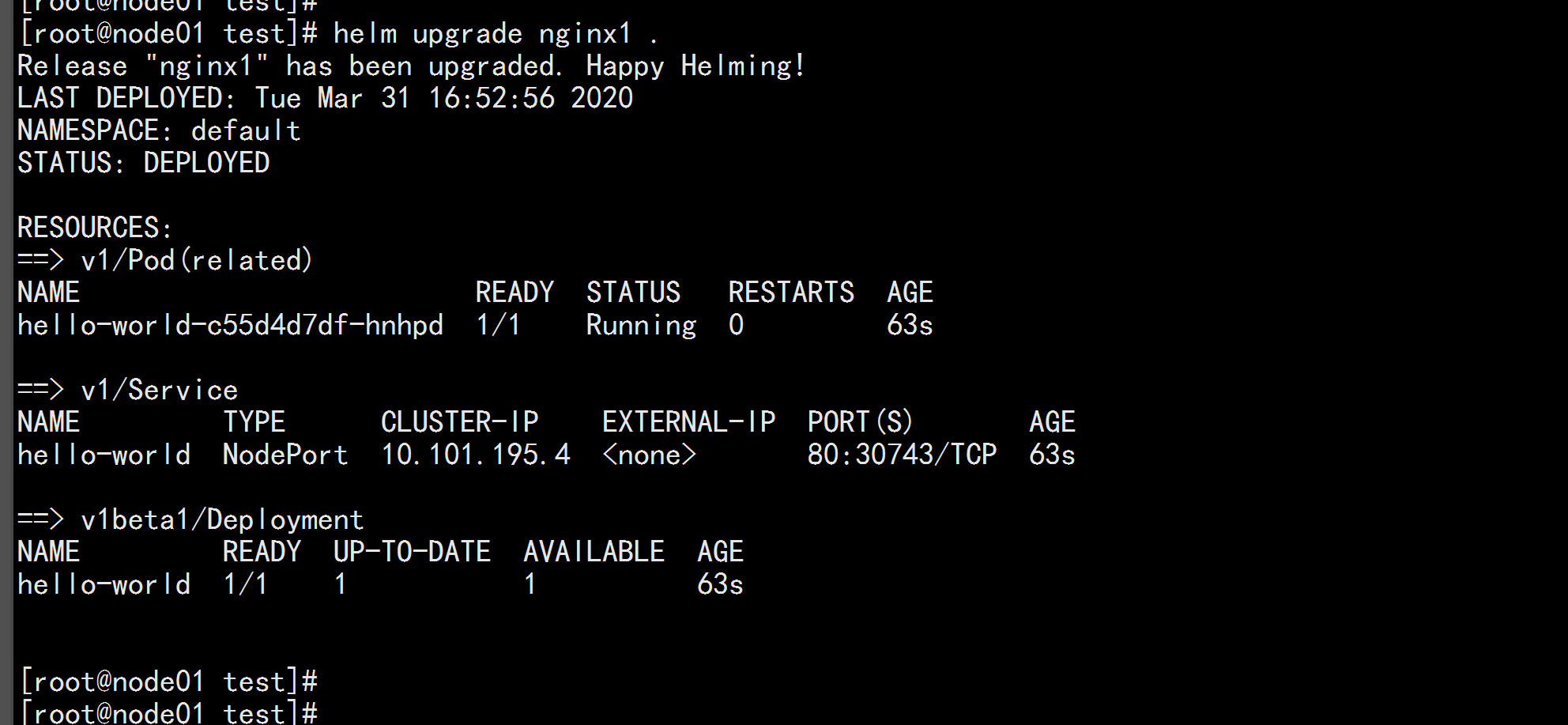

    cd /root/hello-world
    helm install .

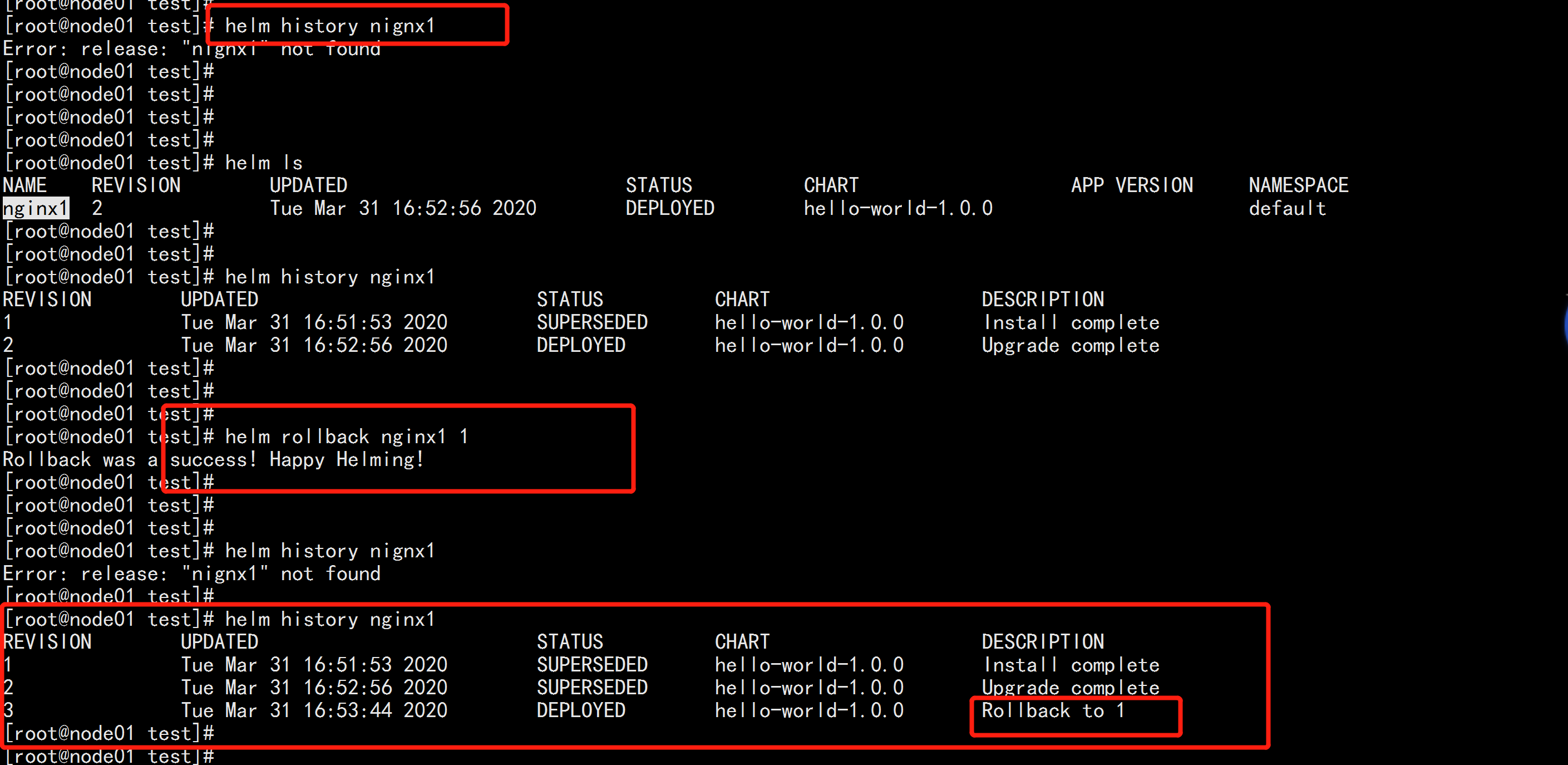

    # List deployed releases
    $ helm ls
    # Query the status of a particular release
    $ helm status RELEASE_NAME
    # Remove all Kubernetes resources associated with this release
    $ helm delete cautious-shrimp
    # helm rollback RELEASE_NAME REVISION_NUMBER
    $ helm rollback cautious-shrimp 1
    # Use helm delete --purge RELEASE_NAME to remove all Kubernetes resources
    # associated with the specified release together with all of its release records
    $ helm delete --purge cautious-shrimp
    $ helm ls --deleted

Configurable values are kept in the configuration file values.yaml:

vim values.yaml

    image:
      repository: wangyanglinux/myapp
      tag: 'v2'

cd templates

vim deployment.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: hello-world
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: hello-world
        spec:
          containers:
            - name: hello-world
              image: {{ .Values.image.repository }}:{{ .Values.image.tag }}
              ports:
                - containerPort: 80
                  protocol: TCP
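To make the substitution in the image line concrete, the sketch below imitates it with sed. This is only an illustration of the result: Helm renders templates with Go's template engine, not sed, and the variables `repo` and `tag` are assumptions standing in for the entries in values.yaml.

```shell
#!/usr/bin/env bash
# Values that would come from values.yaml (hard-coded here for illustration).
repo="wangyanglinux/myapp"
tag="v2"

# The templated line from templates/deployment.yaml.
template_line='image: {{ .Values.image.repository }}:{{ .Values.image.tag }}'

# Replace each placeholder with its value. "|" is used as the sed delimiter
# because the repository name contains "/".
rendered_line="$(printf '%s\n' "$template_line" \
  | sed -e "s|{{ \.Values\.image\.repository }}|$repo|" \
        -e "s|{{ \.Values\.image\.tag }}|$tag|")"

echo "$rendered_line"
# prints: image: wangyanglinux/myapp:v2
```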

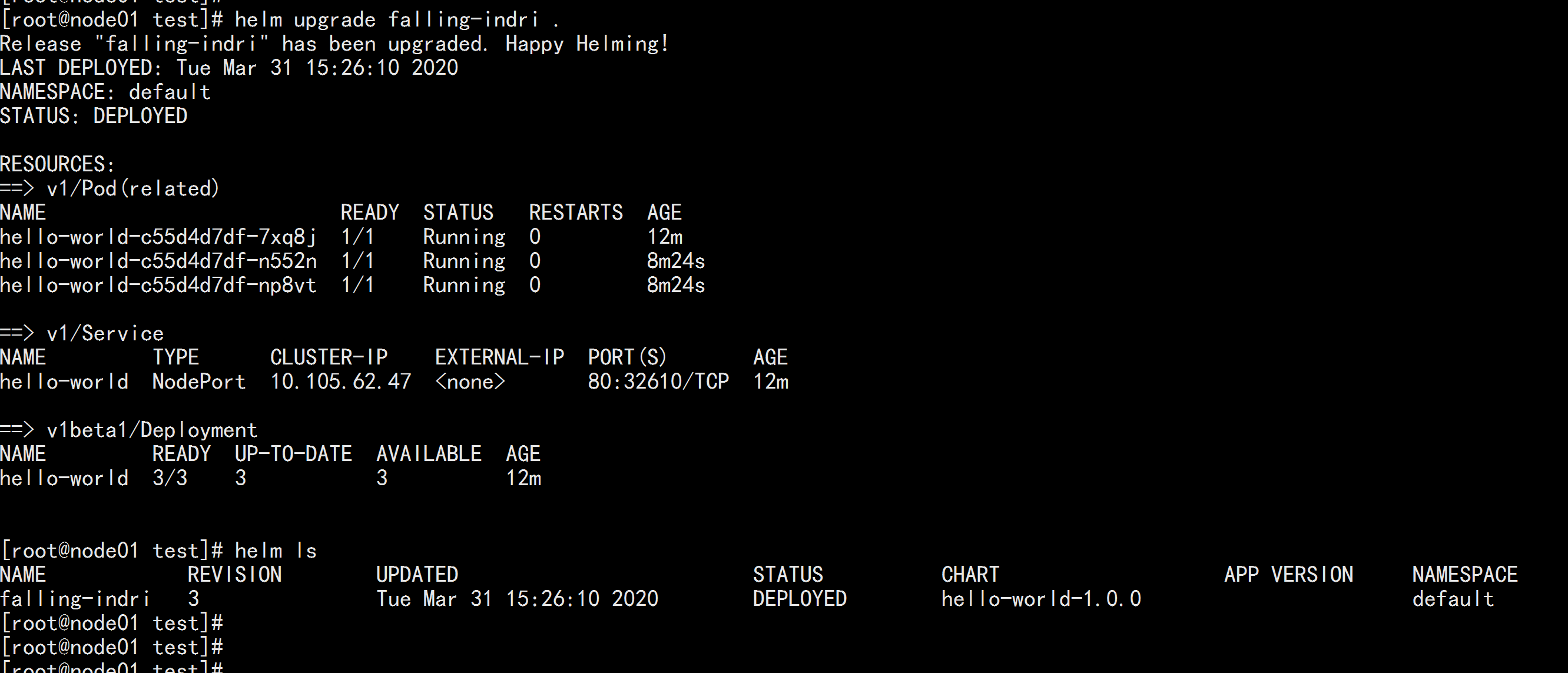

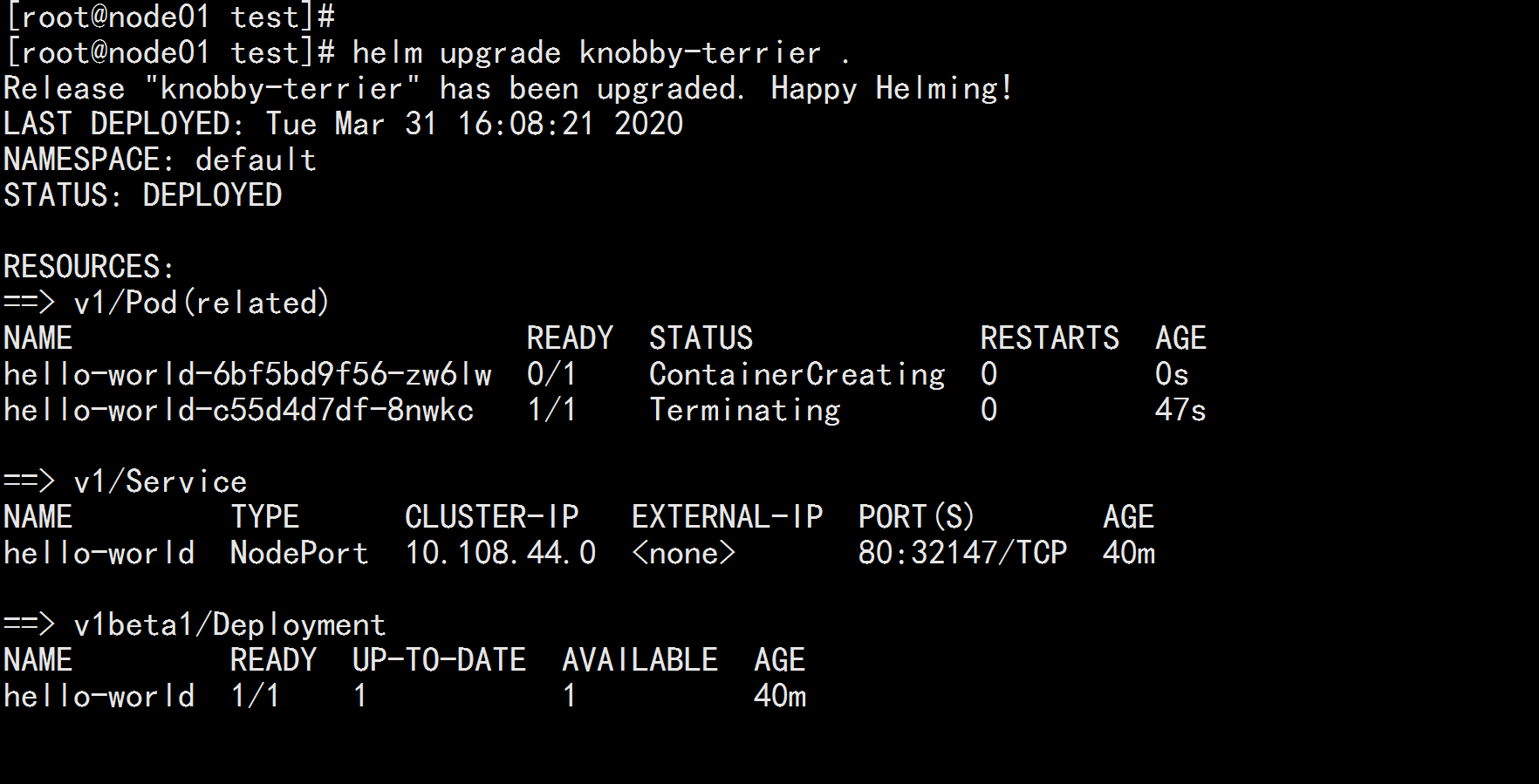

cd /root/test

helm ls

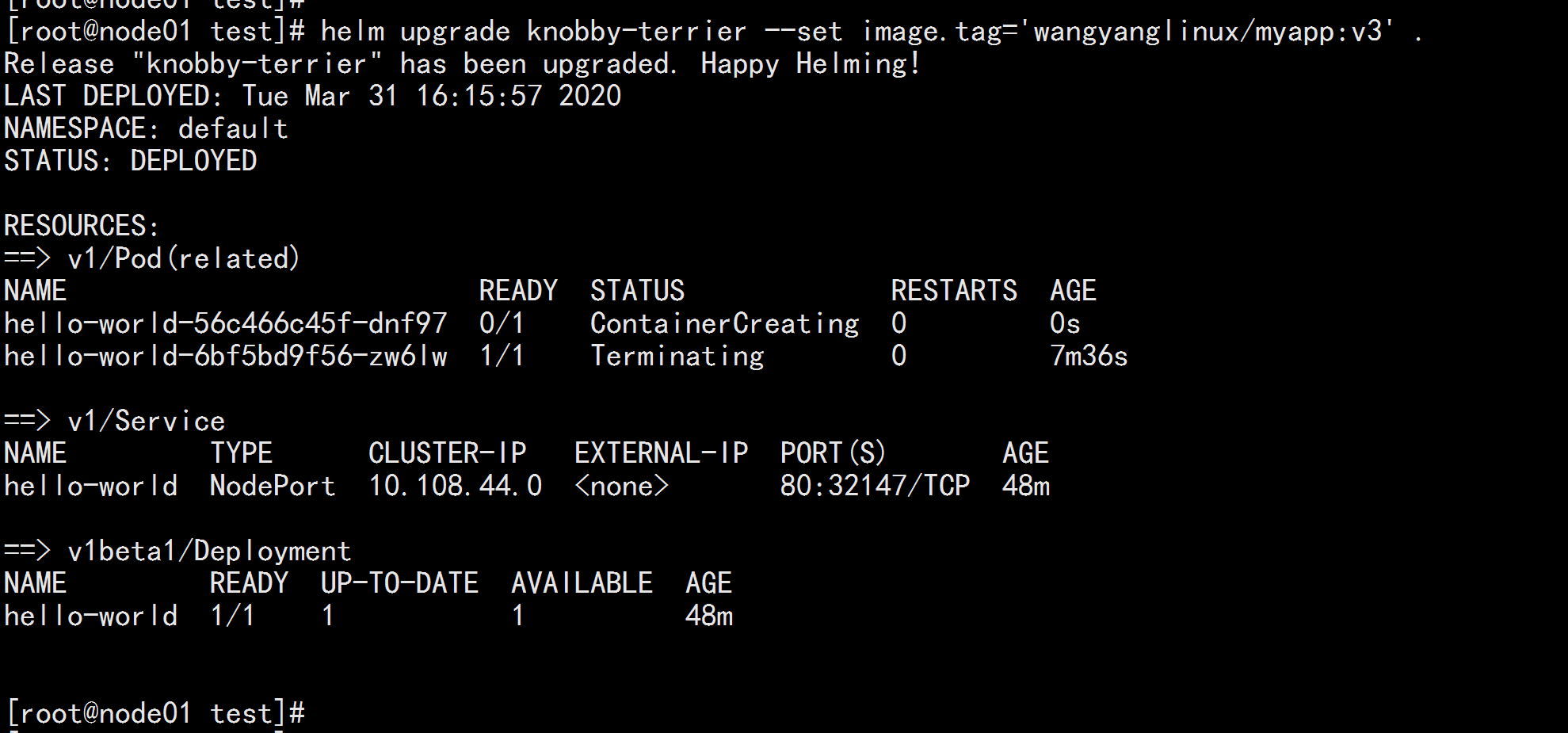

helm upgrade knobby-terrier .

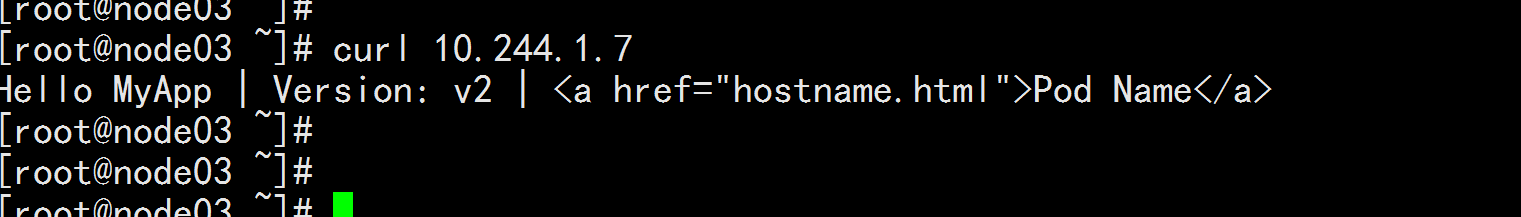

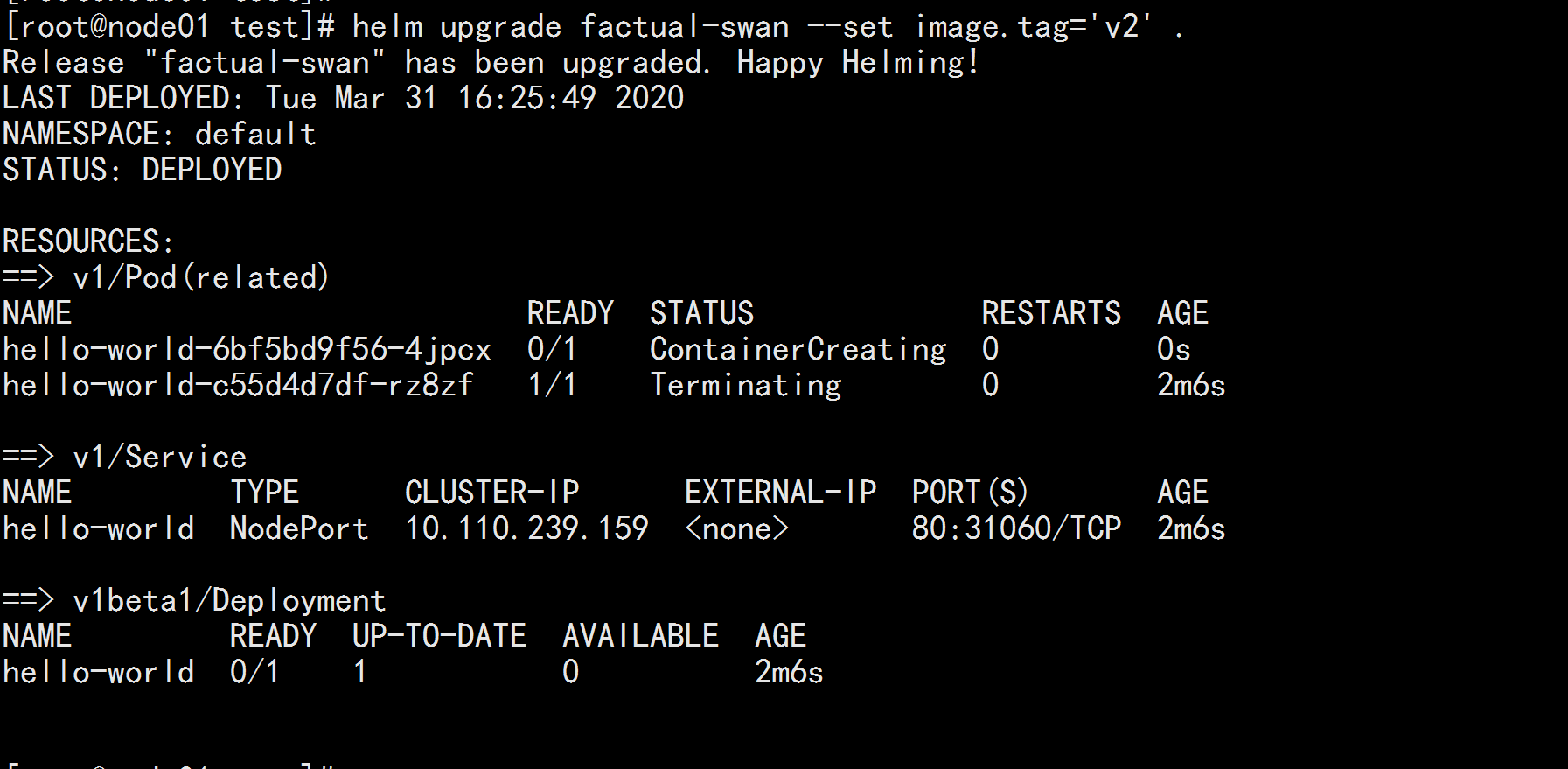

Values in values.yaml can be overridden when deploying a release, using either --values YAML_FILE_PATH or --set key1=value1,key2=value2:

    helm install --set image.tag='v3' .
    helm upgrade factual-swan --set image.tag='v2' .
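The --values form takes a YAML file with the same structure as values.yaml. The sketch below writes such a file and wraps the upgrade in a function; the temporary file and the function name `upgrade_with_overrides` are hypothetical, and the function is only defined, not run, because it needs a cluster with Tiller.

```shell
#!/usr/bin/env bash
# Hypothetical override file: only the keys listed here are meant to
# replace the chart defaults from values.yaml.
override_file="$(mktemp)"
cat <<'EOF' > "$override_file"
image:
  tag: 'v3'
EOF

# Wrapper around the --values flag. The release name is whatever
# "helm ls" reports; the chart directory defaults to the current one.
upgrade_with_overrides() {
  local release="$1" chart_dir="${2:-.}"
  helm upgrade "$release" "$chart_dir" --values "$override_file"
}

cat "$override_file"
```

With the release from the example above, the call would look like `upgrade_with_overrides factual-swan .`.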

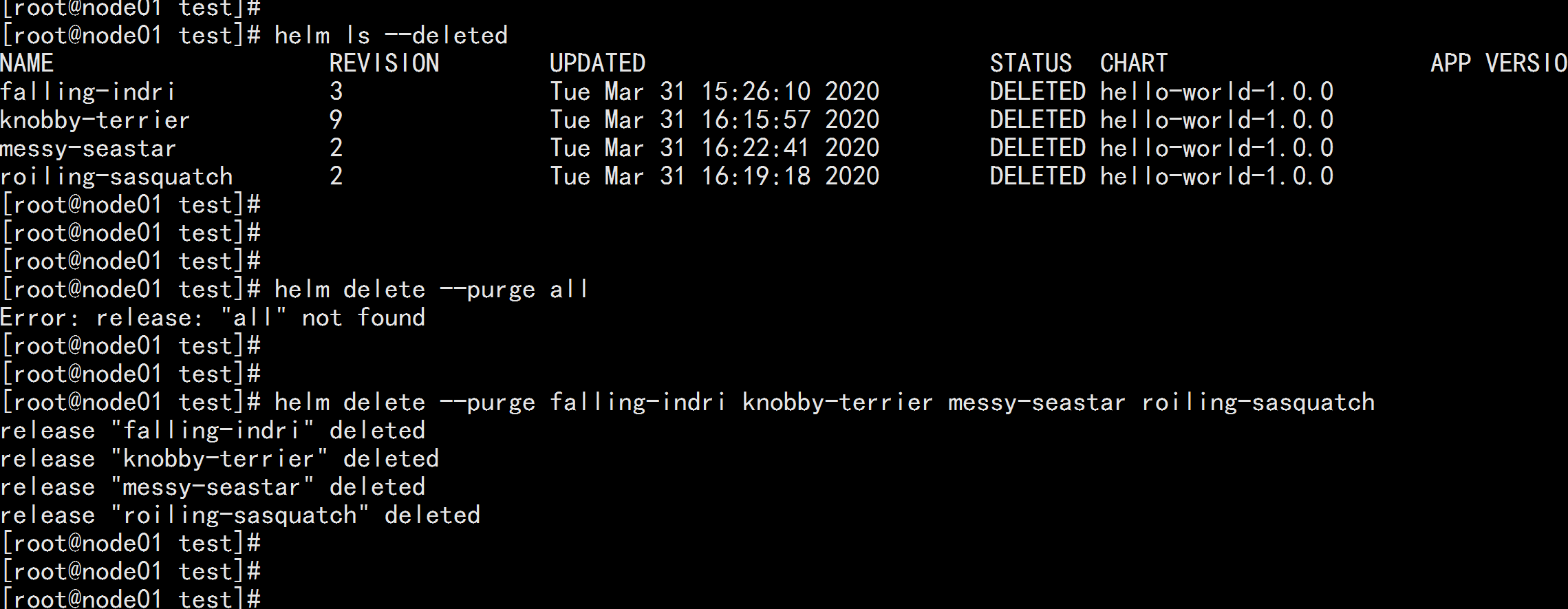

    helm ls --deleted
    helm delete --purge falling-indri knobby-terrier messy-seastar roiling-sasquatch

--purge deletes the releases completely, including their release records.

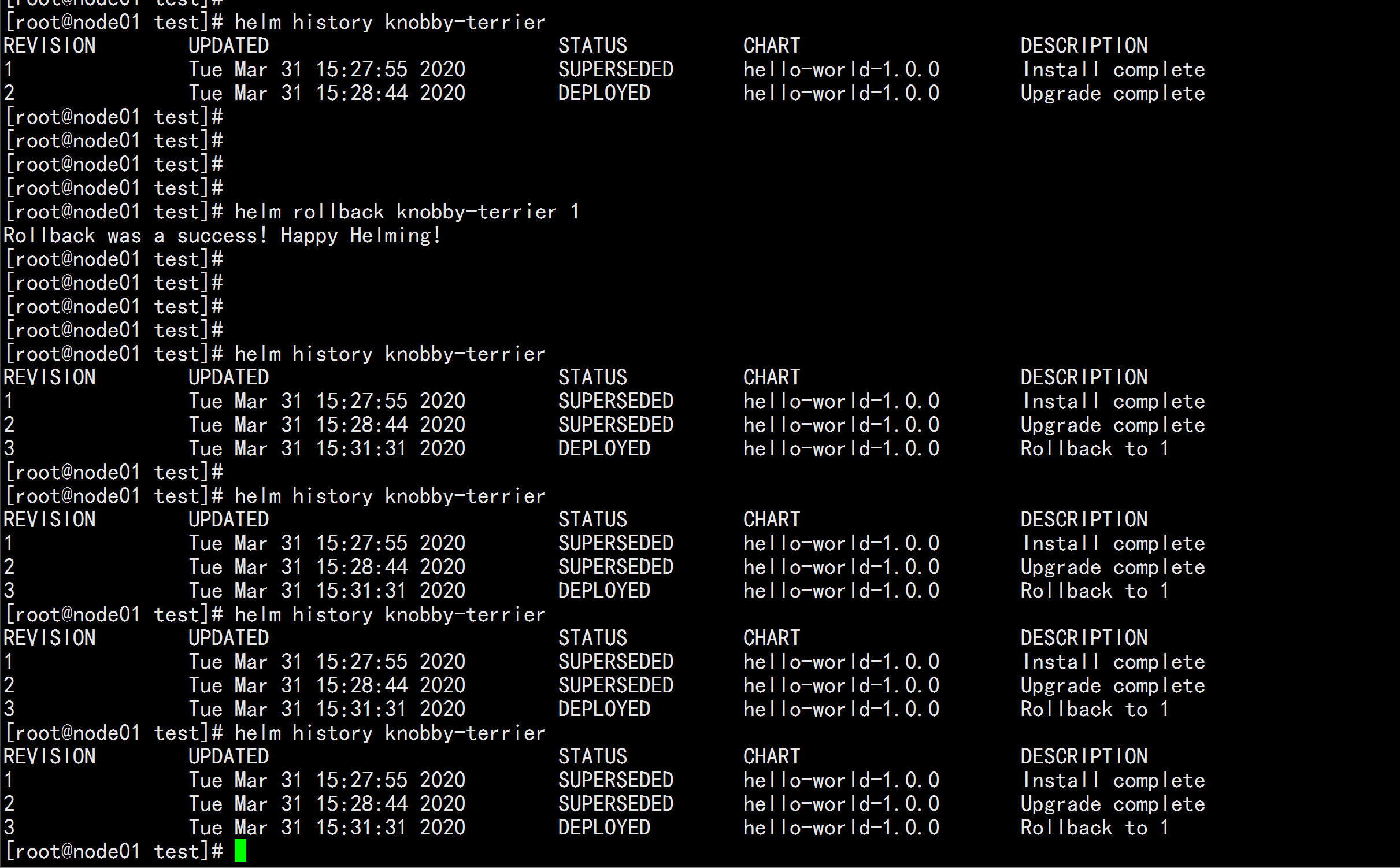

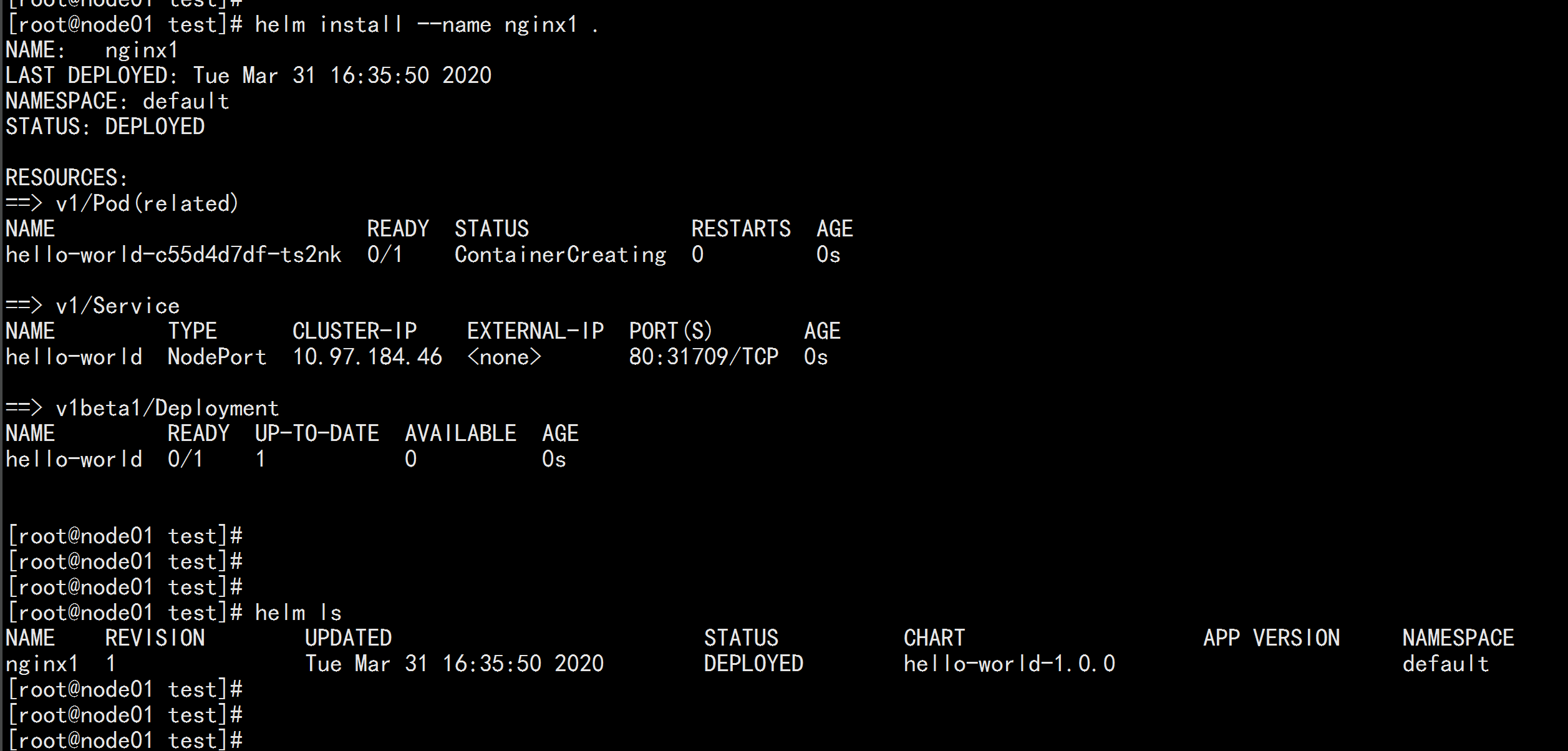

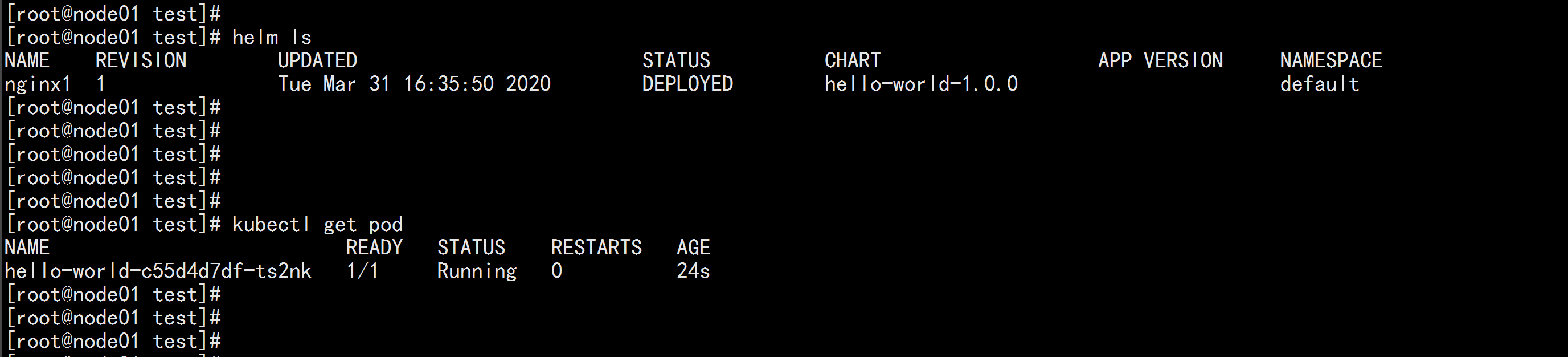

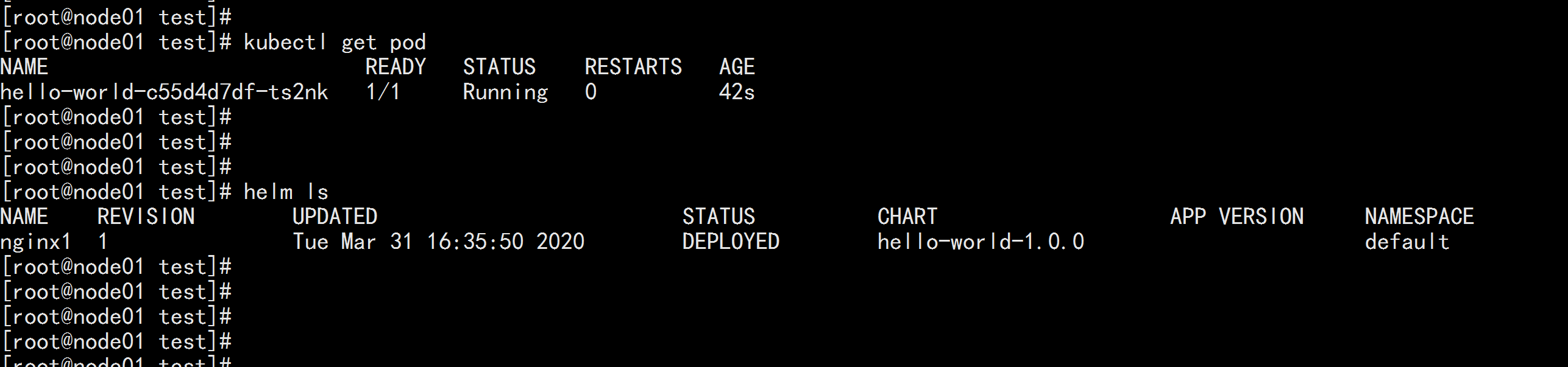

About rollback: helm rollback RELEASE_NAME REVISION_NUMBER

    helm install --name nginx1 .
    helm ls
    helm upgrade nginx1 .
    helm history nginx1
    helm rollback nginx1 1

Debug: when templates generate the Kubernetes resource manifests dynamically, it is very useful to preview the result ahead of time. Use the --dry-run --debug options to print the generated manifests instead of performing the deployment:

    helm install . --dry-run --debug --set image.tag=latest