Common HDFS commands

<path> ... One or more paths in HDFS. If omitted, the default is /user/<currentUser>.

<localsrc> ... One or more paths on the local file system.

<dst> The target path in HDFS.

View help

Command: hdfs dfs -help [cmd ...]

Parameters:

cmd ... One or more commands to look up

Create directory

Command: hdfs dfs -mkdir [-p] <path> ...

Parameters:

-p Create parent directories as needed

Create an empty file

Command: hdfs dfs -touchz <path> ...

View directories or files

Command: hdfs dfs -ls [-C] [-d] [-h] [-R] [-t] [-S] [-r] [-u] [<path> ...]

Parameters:

-C Show only the paths of files and directories

-d List directories as plain files instead of listing their contents

-h Format file sizes in a human-readable way rather than in bytes

-R List the contents of directories recursively

-t Sort by modification time, most recent first

-S Sort by file size

-r Reverse the sort order

-u Use the last access time instead of the modification time for display and sorting
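As a quick illustration of the three commands so far, here is a minimal sketch that creates a nested directory, drops an empty marker file into it, and lists the result. The `run` helper, the function name `make_and_list`, the marker name `_READY`, and the path `/tmp/hdfs-demo` are assumptions made for this example. By default the script only prints the commands; it runs them only when `DRY_RUN=0` is set on a machine with a configured hdfs client.

```shell
# Dry run by default: print each command instead of executing it.
# Set DRY_RUN=0 on a machine with a configured hdfs client to run for real.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create a nested directory, add an empty marker file, then list everything.
make_and_list() {
  base=$1
  run hdfs dfs -mkdir -p "$base/input/raw"    # -p creates missing parents
  run hdfs dfs -touchz "$base/input/_READY"   # zero-length file
  run hdfs dfs -ls -R -h "$base"              # recursive, human-readable sizes
}

make_and_list /tmp/hdfs-demo
```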

Upload directory or file

Note: put and copyFromLocal are copy operations; moveFromLocal is a cut operation (the local source is removed after the upload).

Command: hdfs dfs -put [-f] [-p] [-d] <localsrc> ... <dst>

Parameters:

-f Overwrite the target if it already exists

-p Preserve access and modification times, ownership, and mode

-d Skip creation of the temporary file

Command: hdfs dfs -copyFromLocal [-f] [-p] [-d] <localsrc> ... <dst>

Parameters: the same as for -put

Command: hdfs dfs -moveFromLocal <localsrc> ... <dst>

Download directory or file

Note: get and copyToLocal are copy operations; moveToLocal is intended as a cut operation. When downloading multiple files, the target must be a directory.

Command: hdfs dfs -get [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>

Parameters:

-f Overwrite the target if it already exists

-p Preserve access and modification times, ownership, and mode

-ignoreCrc Skip the CRC check on the downloaded files

-crc Also write CRC checksum files for the downloaded files

Command: hdfs dfs -copyToLocal [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>

Parameters: the same as for -get

Command: hdfs dfs -moveToLocal <src> <localdst>

Note: in many Hadoop releases, including the 2.x line, -moveToLocal is not implemented and only prints a message saying so.
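The upload and download commands can be combined into a simple round trip. The sketch below uploads a local file with `-put -f` and fetches it back with `-get`. The `run` helper, the function name `upload_and_fetch`, and all paths are hypothetical. As written the script only prints the commands; set `DRY_RUN=0` to execute them against a real cluster.

```shell
# Dry run by default: print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Upload one local file into an HDFS directory, then download it again
# into a local target directory.
upload_and_fetch() {
  local_file=$1
  hdfs_dir=$2
  local_target=$3
  name=$(basename "$local_file")
  run hdfs dfs -put -f "$local_file" "$hdfs_dir"     # -f overwrites an existing target
  run hdfs dfs -get "$hdfs_dir/$name" "$local_target"
}

upload_and_fetch data/report.csv /tmp/hdfs-demo ./downloads
```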

Copy directories or files in HDFS

Command: hdfs dfs -cp [-f] [-p | -p[topax]] [-d] <src> ... <dst>

Parameters:

-f Overwrite the target if it already exists

-p | -p[topax] Preserve attributes. The letters in [topax] select timestamps, ownership, permissions, ACLs, and XAttrs respectively. With -p alone, timestamps, ownership, and permissions are preserved; without -p, no attributes are preserved.

-d Skip creation of the temporary file

Move (cut) directories or files in HDFS

Command: hdfs dfs -mv <src> ... <dst>

Delete directories or files in HDFS

Command: hdfs dfs -rm [-f] [-r|-R] [-skipTrash] [-safely] <src> ...

Parameters:

-f Do not display a diagnostic message or change the exit status if the file does not exist

-r, -R Delete directories recursively

-skipTrash Delete immediately, bypassing the trash

-safely Require a safety confirmation before deleting large directories
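Because a recursive delete is easy to get wrong, it can help to wrap it in a small guard. The following sketch refuses a few obviously dangerous paths and uses the trash unless told otherwise. The `run` helper, the function name `safe_delete`, the `now` keyword, and the list of refused paths are all assumptions for illustration. The script prints commands by default and executes them only with `DRY_RUN=0`.

```shell
# Dry run by default: print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Recursively delete an HDFS path. Pass "now" as the second argument to
# bypass the trash; otherwise the path goes to the trash when it is enabled.
safe_delete() {
  path=$1
  mode=${2:-trash}
  case "$path" in
    ""|/|/user|/user/)
      echo "refusing to delete '$path'" >&2
      return 1
      ;;
  esac
  if [ "$mode" = "now" ]; then
    run hdfs dfs -rm -r -skipTrash "$path"
  else
    run hdfs dfs -rm -r "$path"
  fi
}

safe_delete /tmp/hdfs-demo/old
safe_delete /tmp/hdfs-demo/scratch now
```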

Append local files to a target file

Command: hdfs dfs -appendToFile <localsrc> ... <dst>

Show file contents

Command: hdfs dfs -cat [-ignoreCrc] <src> ...

Parameters:

-ignoreCrc Skip the CRC check

Modify directory or file permissions

Command: hdfs dfs -chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...

Parameters:

-R Apply the change recursively

<MODE> Symbolic mode, using the same syntax as the shell chmod command

<OCTALMODE> Numeric (octal) mode

Modify directory or file owners and groups

Command: hdfs dfs -chown [-R] [OWNER][:[GROUP]] PATH...

Parameters:

-R Apply the change recursively

OWNER The new owner

GROUP The new group

Command: hdfs dfs -chgrp [-R] GROUP PATH...

Parameters:

-R Apply the change recursively

GROUP The new group
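Ownership and permission changes usually go together. As a minimal sketch, the function below hands a directory tree to a given owner and group and then restricts access with an octal mode. The `run` helper, the function name `hand_over`, the user `etl`, the group `analysts`, and the mode 750 are illustrative assumptions. Commands are only printed unless `DRY_RUN=0` is set.

```shell
# Dry run by default: print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Give a directory tree to owner:group, then set its mode recursively.
hand_over() {
  dir=$1
  owner=$2
  group=$3
  mode=${4:-750}
  run hdfs dfs -chown -R "$owner:$group" "$dir"
  run hdfs dfs -chmod -R "$mode" "$dir"
}

hand_over /tmp/hdfs-demo etl analysts
```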

Count directories, files, bytes under specified paths

Command: hdfs dfs -count [-q] [-h] [-v] [-t [<storage type>]] [-u] [-x] <path> ...

Parameters:

-q Show quotas and quota usage

-h Format file sizes in a human-readable way rather than in bytes

-v Show a header row

-t [<storage type>] Show quotas by storage type; must be used together with -q or -u

-u Show quotas and quota usage without the detailed content summary

-x Exclude snapshots from the calculation

Show the capacity, free space, and used space of the file system

Command: hdfs dfs -df [-h] [<path> ...]

Parameters:

-h Format file sizes in a human-readable way rather than in bytes

Show usage of directories or files

Command: hdfs dfs -du [-s] [-h] [-x] <path> ...

Parameters:

-s Show the total usage rather than the usage of each individual directory or file

-h Format file sizes in a human-readable way rather than in bytes

-x Exclude snapshots from the calculation

Empty Trash

Command: hdfs dfs -expunge

Find directories or files

Finds all files that match the specified expression and applies the selected action to them. If no path is specified, the default is the current working directory. If no expression is specified, the default is -print.

Command: hdfs dfs -find <path> ... <expression> ...

Expressions:

-name <pattern> Match the file name against the pattern

-iname <pattern> Like -name, but case-insensitive

-print Write the current path name to standard output, followed by a newline

-print0 Like -print, but followed by an ASCII NULL character instead of a newline

Show the last kilobyte of a file

Command: hdfs dfs -tail [-f] <file>

Parameters:

-f Keep printing appended data as the file grows

Merge files and download the result

Command: hdfs dfs -getmerge [-nl] [-skip-empty-file] <src> <localdst>

Parameters:

-nl Add a newline character at the end of each file

-skip-empty-file Do not add newline characters for empty files

Note: Before a snapshot can be created, an administrator must allow snapshots on the directory.

Allow snapshots

Command: hdfs dfsadmin -allowSnapshot <path>

Parameters:

path Path of the directory to make snapshottable

Disallow snapshots

Command: hdfs dfsadmin -disallowSnapshot <path>

Parameters:

path Path of the snapshottable directory

Get all snapshottable directories where the current user has permission to take snapshots

Command: hdfs lsSnapshottableDir

Create Snapshot

Command: hdfs dfs -createSnapshot <snapshotDir> [<snapshotName>]

Parameters:

snapshotDir Path of the snapshottable directory

snapshotName Name of the snapshot (optional; a timestamp-based name is generated if omitted)

Rename snapshot

Command: hdfs dfs -renameSnapshot <snapshotDir> <oldName> <newName>

Parameters:

snapshotDir Path of the snapshottable directory

oldName Current name of the snapshot

newName New name of the snapshot

Delete snapshot

Command: hdfs dfs -deleteSnapshot <snapshotDir> <snapshotName>

Parameters:

snapshotDir Path of the snapshottable directory

snapshotName Name of the snapshot to delete

Compare the differences between two snapshots

Command: hdfs snapshotDiff <snapshotDir> <from> <to>

Parameters:

snapshotDir Path of the snapshottable directory

from The starting snapshot

to The snapshot to compare against
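Put together, the snapshot commands form a short lifecycle. The sketch below allows snapshots on a directory, takes two snapshots, compares them, renames one, and cleans up. Note the argument order it relies on: `-renameSnapshot` takes the old name followed by the new name, while `-deleteSnapshot` takes a single snapshot name. The `run` helper, the function name `snapshot_cycle`, the snapshot names, and the path are assumptions. Nothing is executed unless `DRY_RUN=0` is set.

```shell
# Dry run by default: print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Walk through the full snapshot lifecycle for one directory.
snapshot_cycle() {
  dir=$1
  run hdfs dfsadmin -allowSnapshot "$dir"             # admin step
  run hdfs dfs -createSnapshot "$dir" before
  # ... change files under $dir here ...
  run hdfs dfs -createSnapshot "$dir" after
  run hdfs snapshotDiff "$dir" before after           # what changed in between
  run hdfs dfs -renameSnapshot "$dir" after latest    # old name, then new name
  run hdfs dfs -deleteSnapshot "$dir" before
  run hdfs dfs -deleteSnapshot "$dir" latest
  # All snapshots must be deleted before snapshots can be disallowed.
  run hdfs dfsadmin -disallowSnapshot "$dir"
}

snapshot_cycle /tmp/hdfs-demo
```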

File quota operations

Set a name quota (limit on the number of files and directories)

Command: hdfs dfsadmin -setQuota <quota> <dirname>...

Parameters:

quota The number of names allowed. The directory itself counts toward the quota, so a quota of 2 allows only one file or subdirectory to be created under it.

dirname Paths to set the quota on

Clear the name quota

Command: hdfs dfsadmin -clrQuota <dirname>...

Parameters:

dirname Paths to clear the quota on

Set a space quota

Command: hdfs dfsadmin -setSpaceQuota <quota> [-storageType <storagetype>] <dirname>...

Parameters:

quota The space quota. Replicas count toward the quota: for example, 1 GB of data stored with three replicas consumes 3 GB of quota.

-storageType Set a quota specific to a storage type. Available storage types include RAM_DISK, DISK, SSD, and ARCHIVE.

dirname Paths to set the quota on

Clear the space quota

Command: hdfs dfsadmin -clrSpaceQuota [-storageType <storagetype>] <dirname>...

Parameters:

-storageType Clear the quota specific to a storage type. Available storage types include RAM_DISK, DISK, SSD, and ARCHIVE.

dirname Paths to clear the quota on
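The two quota rules above come down to simple arithmetic: a space quota is charged for the logical size times the replication factor, and a name quota counts the directory itself. The sketch below computes both and shows how the result could feed into the commands. The helper names `run`, `raw_bytes`, and `names_left`, and the path `/tmp/hdfs-demo`, are assumptions. The hdfs commands are only printed unless `DRY_RUN=0` is set.

```shell
# Dry run by default: print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Bytes charged against a space quota: logical size times replication factor.
raw_bytes() {
  echo $(( $1 * $2 ))
}

# Names that can still be created under a directory with name quota $1
# when $2 names (the directory itself included) are already in use.
names_left() {
  echo $(( $1 - $2 ))
}

one_gib=$(( 1024 * 1024 * 1024 ))
quota=$(raw_bytes "$one_gib" 3)
echo "space quota needed for 1 GiB at replication 3: $quota bytes"
echo "names left under an empty directory with name quota 2: $(names_left 2 1)"

run hdfs dfsadmin -setSpaceQuota "$quota" /tmp/hdfs-demo
run hdfs dfsadmin -setQuota 2 /tmp/hdfs-demo
run hdfs dfs -count -q -h -v /tmp/hdfs-demo   # inspect quotas and usage
```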

Backup and Restore of Metadata

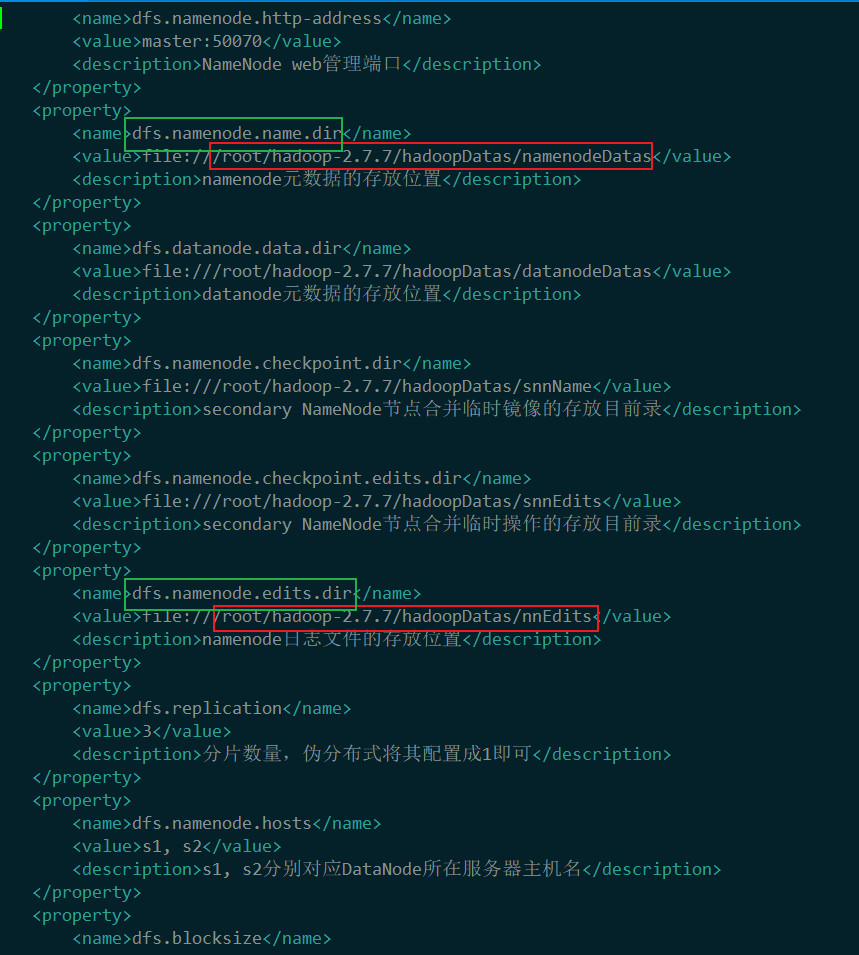

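Check hdfs-site.xml for the location of the NameNode data directories. Two directories matter here: the NameNode metadata directory and the edit log directory (namenodeDatas and nnEdits in this setup).

Backing up the metadata consists of copying the files in these directories.

View the files before the backup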

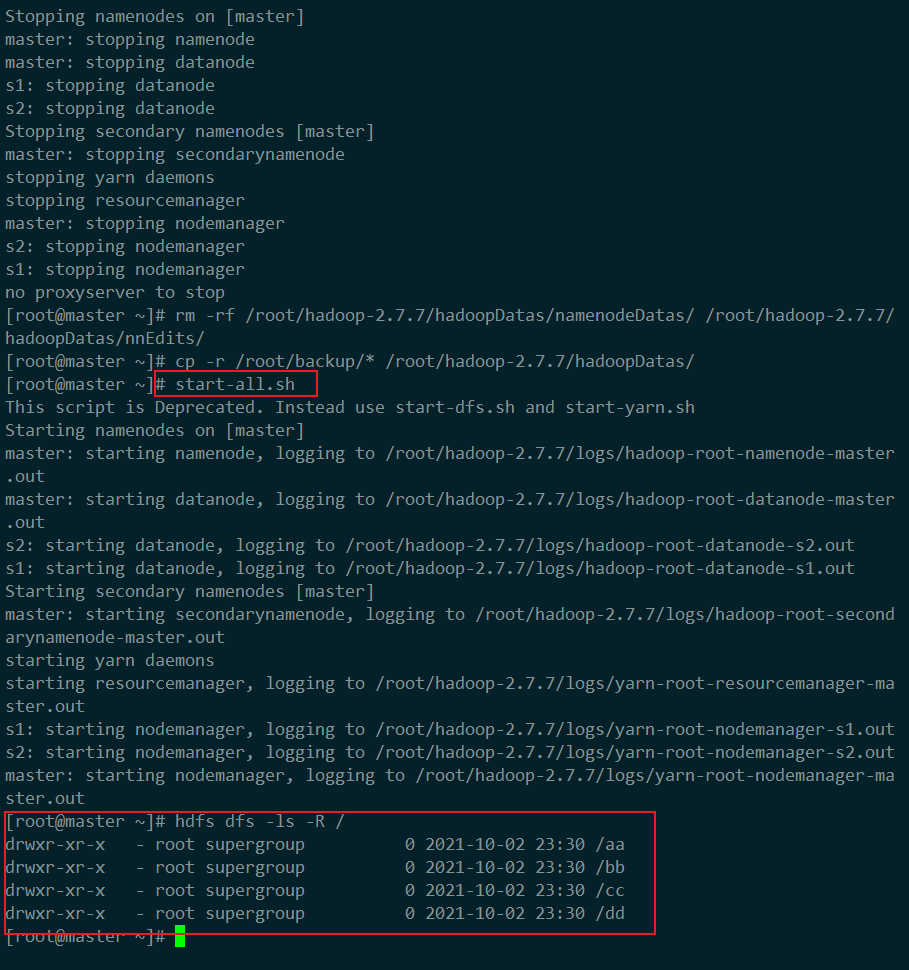

Stop the Hadoop services: stop-all.sh

Create a backup directory: mkdir /root/backup

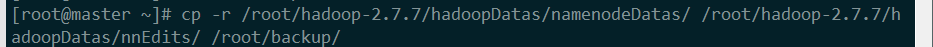

Copy the metadata directories: cp -r /root/hadoop-2.7.7/hadoopDatas/namenodeDatas /root/hadoop-2.7.7/hadoopDatas/nnEdits /root/backup/

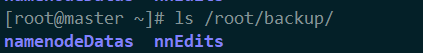

View the backed-up files: ls /root/backup/
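The backup steps so far can be collected into one small script. This is a minimal sketch that reuses the paths from this walkthrough; the `run` helper and the function name `backup_namenode` are assumptions, and `mkdir -p` is used so that an existing backup directory does not cause an error. The script prints the commands by default and executes them only with `DRY_RUN=0`.

```shell
# Dry run by default: print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Stop Hadoop, then copy both NameNode metadata directories to the backup.
backup_namenode() {
  data_root=$1
  backup_dir=$2
  run stop-all.sh
  run mkdir -p "$backup_dir"
  run cp -r "$data_root/namenodeDatas" "$data_root/nnEdits" "$backup_dir/"
  run ls "$backup_dir"
}

backup_namenode /root/hadoop-2.7.7/hadoopDatas /root/backup
```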

To demonstrate that the backup can be restored, start Hadoop, modify the files in HDFS, and then restore the metadata.

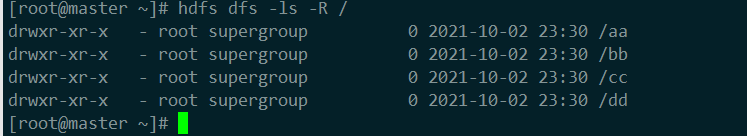

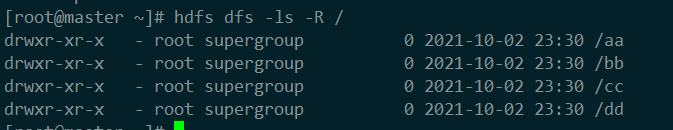

After startup, view the current files in HDFS.

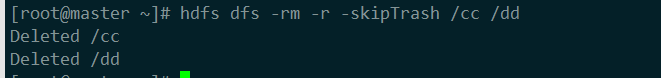

This is the state at the time of the backup. Now delete a few directories; the metadata backed up earlier will be restored later.

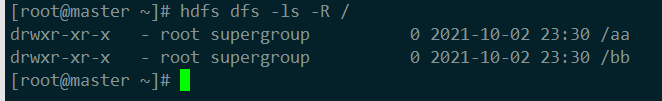

View the contents of HDFS again.

Now that the directories have been deleted, stop Hadoop and restore the metadata.

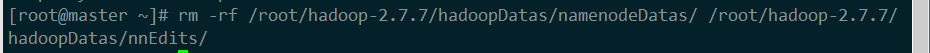

Delete the current metadata: rm -rf /root/hadoop-2.7.7/hadoopDatas/namenodeDatas /root/hadoop-2.7.7/hadoopDatas/nnEdits/

Restore the backup into the metadata directory: cp -r /root/backup/* /root/hadoop-2.7.7/hadoopDatas/

Start Hadoop again and view the files.

The files that existed at the time of the backup are back, and the file system has been restored to its state at backup time.
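The restore steps can be sketched the same way. Because the restore begins by deleting the live metadata, this version first checks that both backup directories exist and stops if either is missing. The `run` helper, the function name `restore_namenode`, the existence check, and the use of `start-all.sh` to bring Hadoop back up are assumptions added for this example. Commands are only printed unless `DRY_RUN=0` is set.

```shell
# Dry run by default: print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Replace the NameNode metadata directories with the copies from the backup.
restore_namenode() {
  data_root=$1
  backup_dir=$2
  # Never delete the live metadata unless the backup is actually there.
  for d in namenodeDatas nnEdits; do
    if [ ! -d "$backup_dir/$d" ]; then
      echo "missing backup directory: $backup_dir/$d" >&2
      return 1
    fi
  done
  run stop-all.sh
  run rm -rf "$data_root/namenodeDatas" "$data_root/nnEdits"
  run cp -r "$backup_dir/namenodeDatas" "$backup_dir/nnEdits" "$data_root/"
  run start-all.sh
}

# Example call with the paths used in this walkthrough:
# restore_namenode /root/hadoop-2.7.7/hadoopDatas /root/backup
```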