Handwriting recognition based on KNN

Task introduction

- This example uses sklearn to train a K-nearest neighbor (KNN) classifier to recognize handwritten digits in the data set DBRHD.

- The recognition performance of KNN is then compared with that of a multi-layer perceptron (MLP).

Input of KNN

- Each picture in the DBRHD data set is a 32 * 32 text matrix composed of 0s and 1s.

- The input of KNN is the 1024-dimensional vector obtained by flattening the picture matrix row by row.
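To make the flattening concrete, here is a minimal sketch on a made-up 4 * 4 matrix (the real pictures are 32 * 32). It only illustrates how pixel (i, j) maps to position i * cols + j of the vector; the variable names are chosen for this illustration.

```python
import numpy as np

# A 4 x 4 stand-in for the 32 x 32 picture (values are made up for illustration).
picture = np.array([
    [0, 0, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
    [0, 1, 1, 1],
])

rows, cols = picture.shape
vector = picture.reshape(-1)        # row-major flattening, length rows * cols

# Pixel (i, j) of the picture ends up at position i * cols + j of the vector.
i, j = 1, 2
same_pixel = vector[i * cols + j] == picture[i, j]
print(vector.shape, bool(same_pixel))
```

For a 32 * 32 picture the same rule gives the index i * 32 + j and a vector of length 1024.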


Experimental steps:

- Step 1: create the project and import the sklearn package

- Step 2: load training data

- Step 3: build KNN classifier

- Step 4: test set evaluation

Specific steps

Step 1: create the project and import the sklearn package

(1) Create the sklearnKNN.py file

(2) Import the sklearn-related packages in the sklearnKNN.py file

Step 2: load training data

(1) In the sklearnKNN.py file, define the img2vector function, which flattens a loaded 32 * 32 picture matrix into a 1024-dimensional vector.

(2) In the sklearnKNN.py file, define the readDataSet function to load the training data.

(3) In the sklearnKNN.py file, call readDataSet (which in turn calls img2vector) to load the data. The training pictures are stored in train_dataSet and the corresponding labels in train_hwLabels.
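The label of each picture comes from its file name: the part before the first underscore is the digit. The sketch below shows that parsing on its own. The helper name label_from_filename and the example file names are hypothetical, chosen only to match the "digit_index" naming pattern that the loader relies on.

```python
import os
import tempfile


def label_from_filename(file_name):
    """Return the digit encoded before the first underscore, e.g. '3_12.txt' -> 3."""
    return int(file_name.split('_')[0])


# Build a throw-away folder with empty, hypothetically named files to show the parsing.
with tempfile.TemporaryDirectory() as folder:
    for name in ("0_0.txt", "3_12.txt", "9_101.txt"):
        open(os.path.join(folder, name), "w").close()
    labels = sorted(label_from_filename(name) for name in os.listdir(folder))

print(labels)
```

If the data folder may contain other files, it is worth filtering the listing first, because int() raises a ValueError on a name that does not start with a number.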

Step 3: build KNN classifier

In the sklearnKNN.py file, build the KNN classifier: set the search algorithm and the number of neighbors (k).

- KNN is a lazy learning method: there is no real training process, and the nearest neighbors are only searched for at prediction time. Feeding in the data set is itself the process of constructing the KNN classifier.

- Even so, building the KNN classifier still requires calling the fit() function, which stores the training data (and, with algorithm='kd_tree', builds the search tree).
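The following minimal sketch shows this lazy behavior on six made-up 2-D points instead of the digit vectors. fit() only stores the samples and builds the tree, and the neighbor search happens when kneighbors() or predict() is called. The toy data and variable names are assumptions for illustration.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Six made-up 2-D training points forming two well-separated groups.
X_train = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]])
y_train = np.array([0, 0, 0, 1, 1, 1])

knn = KNeighborsClassifier(n_neighbors=3, algorithm='kd_tree')
knn.fit(X_train, y_train)           # stores the samples and builds the k-d tree

X_query = np.array([[0.5, 0.5], [5.5, 5.5]])
distances, indices = knn.kneighbors(X_query)   # the neighbor search happens here
predictions = knn.predict(X_query)             # majority vote among the 3 neighbors

print(indices)
print(predictions)
```

Each query point picks its three neighbors from its own group, so the first is classified as 0 and the second as 1.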

Step 4: test set evaluation

(1) Load test set

(2) The constructed KNN classifier is used to predict the test set, and the prediction error rate is calculated
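As a small worked example of the evaluation step, the sketch below computes the error count, error rate and accuracy for ten made-up predictions, and cross-checks the result against sklearn's accuracy_score. The labels are invented for illustration and are not results from the data set.

```python
import numpy as np
from sklearn.metrics import accuracy_score

# Made-up labels and predictions for ten test samples.
y_true = np.array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])
y_pred = np.array([0, 1, 2, 3, 4, 5, 6, 1, 8, 4])   # two mistakes: 7 -> 1 and 9 -> 4

error_num = int(np.sum(y_pred != y_true))    # number of misclassified samples
error_rate = error_num / len(y_true)         # fraction of wrong predictions
accuracy = 1 - error_rate                    # fraction of correct predictions

# accuracy_score computes the same fraction of correct predictions.
print(error_num, error_rate, accuracy, accuracy_score(y_true, y_pred))
```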

Specific code

    import numpy as np                  # numerical arrays
    from os import listdir              # list the files in a local folder
    from sklearn import neighbors


    def img2vector(fileName):
        """Flatten a 32 * 32 text picture of 0/1 characters into a 1024-dimensional vector."""
        retMat = np.zeros([1024], int)  # returned vector of length 1024
        with open(fileName) as fr:      # open a 32 * 32 digit file
            lines = fr.readlines()      # read all lines of the file
        for i in range(32):             # traverse the 32 rows
            for j in range(32):         # store each 0/1 character in retMat
                retMat[i * 32 + j] = int(lines[i][j])
        return retMat


    def readDataSet(path):
        """Load every digit file in a folder; return the vectors and their labels."""
        fileList = listdir(path)                    # get all files in the folder
        numFiles = len(fileList)                    # number of files to read
        dataSet = np.zeros([numFiles, 1024], int)   # one row per digit file
        hwLabels = np.zeros([numFiles], int)        # plain digit labels (unlike the neural network)
        for i in range(numFiles):                   # traverse all files
            filePath = fileList[i]                  # file name
            digit = int(filePath.split('_')[0])     # label is the part before '_'
            hwLabels[i] = digit                     # store the digit itself, not a one-hot vector
            dataSet[i] = img2vector(path + '/' + filePath)  # read file contents
        return dataSet, hwLabels


    # read the training data set
    train_dataSet, train_hwLabels = readDataSet('digits/trainingDigits')

    # build the KNN classifier: k-d tree search, k = 3 neighbors
    knn = neighbors.KNeighborsClassifier(algorithm='kd_tree', n_neighbors=3)
    knn.fit(train_dataSet, train_hwLabels)

    # read the test data set
    dataSet, hwLabels = readDataSet('digits/testDigits')

    res = knn.predict(dataSet)            # predict the test set
    error_num = np.sum(res != hwLabels)   # number of classification errors
    num = len(dataSet)                    # number of test samples
    print("Total num:", num, " Wrong num:", error_num,
          " TrueRate:", 1 - (error_num / float(num)))

Experimental results

Influence of the number of neighbors K: build KNN classifiers with K set to 1, 3, 5 and 7, and compare their results.

KNN classifier with K set to 1:

Total num: 946 Wrong num: 13 TrueRate: 0.9862579281183932

KNN classifier with K set to 3:

Total num: 946 Wrong num: 12 TrueRate: 0.9873150105708245

KNN classifier with K set to 5:

Total num: 946 Wrong num: 19 TrueRate: 0.9799154334038055

KNN classifier with K set to 7:

Total num: 946 Wrong num: 22 TrueRate: 0.9767441860465116
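The four runs can also be done in a single loop instead of editing n_neighbors by hand. The sketch below does this on scikit-learn's built-in 8 * 8 digits data as a stand-in, because the DBRHD files are not bundled with the library. The stand-in data, the 75/25 split and the random_state value are assumptions, so the numbers it prints will not match the results above.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Stand-in data: scikit-learn's built-in 8 x 8 digits, NOT the DBRHD files.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

results = {}
for k in (1, 3, 5, 7):
    knn = KNeighborsClassifier(algorithm='kd_tree', n_neighbors=k)
    knn.fit(X_train, y_train)
    wrong = int((knn.predict(X_test) != y_test).sum())   # misclassified test samples
    results[k] = 1 - wrong / len(y_test)                 # accuracy for this k
    print("K:", k, " Total num:", len(y_test), " Wrong num:", wrong,
          " TrueRate:", results[k])
```

To run the same loop on DBRHD, replace the stand-in arrays with the ones returned by readDataSet.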

Conclusion:

When K = 3, the accuracy is the highest. When K > 3, the accuracy begins to decline. This is because the data are sparse (the data set in this example is small; the test set has only 946 samples), so the K-th nearest neighbor may lie far away from the test point. Such a distant neighbor can cast a wrong vote, which affects the final prediction.
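The effect of distant neighbors can be seen in a tiny hand-made example. The sketch below is a plain-Python majority vote on 1-D points, not sklearn's implementation, and the data are invented so that only two training points lie near the query.

```python
from collections import Counter


def knn_vote(train_points, train_labels, query, k):
    """Classify a 1-D query point by majority vote among its k nearest training points."""
    order = sorted(range(len(train_points)),
                   key=lambda idx: abs(train_points[idx] - query))
    votes = Counter(train_labels[idx] for idx in order[:k])
    return votes.most_common(1)[0][0]


# Made-up sparse training set: two class-0 points near the query, three class-1 points far away.
train_points = [0.0, 0.2, 3.0, 3.5, 4.0]
train_labels = [0, 0, 1, 1, 1]
query = 0.1

for k in (1, 3, 5):
    print(k, knn_vote(train_points, train_labels, query, k))
```

With k = 1 and k = 3 the query is assigned to class 0. With k = 5 the three far-away class-1 points outvote the two nearby class-0 points and the prediction flips to class 1.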

Comparative experiment

KNN classifier vs. MLP multilayer perceptron:

The previous section ran comparison experiments on the number of hidden-layer neurons, the maximum number of iterations and the learning rate. From each of those experiments we take the MLP classifier with the highest accuracy (H) and the one with the lowest accuracy (L). The parameters of each MLP are set as follows:

| MLP code | Number of hidden layer neurons | Maximum number of iterations | Optimization method | Initial learning rate |
|---|---|---|---|---|
| MLP-YH | 200 | 2000 | adam | 0.0001 |
| MLP-YL | 50 | 2000 | adam | 0.0001 |
| MLP-DH | 100 | 2000 | adam | 0.0001 |
| MLP-DL | 100 | 500 | adam | 0.0001 |
| MLP-XH | 100 | 2000 | sgd | 0.1 |
| MLP-XL | 100 | 2000 | sgd | 0.0001 |
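For reference, the table can be written as sklearn MLPClassifier configurations roughly as follows. The mapping of columns to the parameters hidden_layer_sizes, max_iter, solver and learning_rate_init is an assumption, since the code of the previous experiment is not shown here. The sketch only constructs the classifiers and does not train them.

```python
from sklearn.neural_network import MLPClassifier

# (hidden neurons, max iterations, solver, initial learning rate), copied from the table.
settings = {
    "MLP-YH": (200, 2000, "adam", 0.0001),
    "MLP-YL": (50, 2000, "adam", 0.0001),
    "MLP-DH": (100, 2000, "adam", 0.0001),
    "MLP-DL": (100, 500, "adam", 0.0001),
    "MLP-XH": (100, 2000, "sgd", 0.1),
    "MLP-XL": (100, 2000, "sgd", 0.0001),
}

# Assumed mapping of the table columns to MLPClassifier parameters.
classifiers = {
    code: MLPClassifier(hidden_layer_sizes=(neurons,), max_iter=max_iter,
                        solver=solver, learning_rate_init=lr)
    for code, (neurons, max_iter, solver, lr) in settings.items()
}

for code, clf in classifiers.items():
    print(code, clf.hidden_layer_sizes, clf.max_iter, clf.solver, clf.learning_rate_init)
```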

Compare the best KNN classifier (K=3) and the worst KNN classifier (K=7) with each MLP classifier as follows:

(The MLP figures are taken from the previous experiment and are actual measured results.)

| Classifier | Metric | Number of neurons in MLP hidden layer (MLP-Y) | MLP iterations (MLP-D) | MLP learning rate (MLP-X) | Number of KNN neighbors |
|---|---|---|---|---|---|
| best | Number of errors | 37 | 33 | 33 | 12 |
| best | Accuracy | 0.9608 | 0.9651 | 0.9651 | 0.9873 |
| worst | Number of errors | 43 | 54 | 242 | 22 |
| worst | Accuracy | 0.9545 | 0.9429 | 0.7441 | 0.9767 |

Conclusion:

- In this experiment, the accuracy of KNN is clearly higher than that of every MLP classifier, probably because MLP overfits easily on small data sets.

- MLP is sensitive to parameter settings. Unreasonable parameters easily lead to poor classification results (for example, MLP-XL reaches only 0.7441), so parameter tuning is very important for MLP.

Last thought

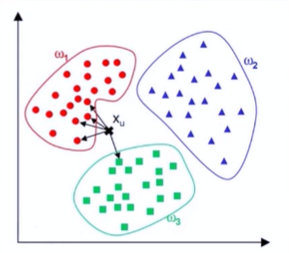

The principle behind this experiment, the K-nearest neighbor (KNN) algorithm, can be reviewed under the 12 basic classification models.

This time, the program needs the data set digits.rar, which must be extracted into the project directory so that the digits/trainingDigits and digits/testDigits folders are available.

The code is not very difficult and is commented. Unlike the original video, the final output has been changed to report the accuracy, so the printed result differs slightly from the one shown in the video.

For the experimental comparison, K is set to 1, 3, 5 and 7 in turn. The only thing that needs to be changed is the value of n_neighbors in KNeighborsClassifier().

In addition, because KNN has to be compared with the MLP (multi-layer perceptron), I made a table and went back to the previous experiment to collect the data.

The final conclusion is that the accuracy of KNN is much higher than that of the MLP classifier.

It's so cold. I want to eat hot pot and small cake.