Preface

The text and images in this article come from the Internet and are for learning and exchange only, not for any commercial use. Copyright belongs to the original author. If you have any concerns, please contact us promptly and we will deal with them.

Target website: Wanbang International Group. Founded in 2010 and headquartered in Zhengzhou, Henan Province, the group follows the tenet of "based on agriculture, rural areas and farmers, ensuring people's livelihood, and serving the whole country". Its business covers the agricultural industry chain: cold-chain logistics for agricultural products, efficient ecological agriculture, a chain of fresh-food supermarkets, cross-border e-commerce, import and export trade, and more. It has received honorary titles such as key leading enterprise, one of the national "top ten comprehensive markets" for agricultural products, "Star Creation World", and advanced private enterprise in the national "ten thousand enterprises help ten thousand villages" targeted poverty-alleviation campaign. The Wanbang agricultural products logistics park, built and operated by the group in Zhongmou County, represents a total investment of 10 billion yuan, covers 5,000 mu (about 333 hectares) with 3.5 million square meters of floor space, and hosts more than 6,000 permanent merchants. In 2017 it traded 17.2 million tons of agricultural and sideline products worth 91.3 billion yuan, ranking first in China and realizing its goal of "buying from the whole world and selling to the whole country".

The price-query page is fetched with a plain GET request, and its structure is fairly standard and unlikely to change much in the short term, so it is easy to parse. That is why we chose it.
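A minimal sketch of how that GET query string can be assembled with the standard library instead of string concatenation. The parameter names (`PageNo`, `ItemName`, `DateStart`, `DateEnd`) come from the URLs used in this article; `build_price_url` is a hypothetical helper. Non-ASCII product names are percent-encoded as UTF-8, which matters if the site expects Chinese names, as the Scrapy start URL later in this article suggests (its `ItemName` is the encoded form of 白菜). Passing the same dict as `params=` to `requests.get` lets requests do this encoding for you.

```python
from urllib.parse import urlencode

BASE_URL = "http://www.wbncp.com/PriceQuery.aspx"


def build_price_url(item_name: str, page_no: int = 1,
                    date_start: str = "2017/10/1",
                    date_end: str = "2020/3/31") -> str:
    """Build the price-query URL for one page of one product (hypothetical helper)."""
    params = {
        "PageNo": page_no,
        "ItemName": item_name,
        "DateStart": date_start,
        "DateEnd": date_end,
    }
    # safe="/" keeps the slashes in the dates readable; non-ASCII names
    # such as 白菜 are percent-encoded as UTF-8 bytes.
    return BASE_URL + "?" + urlencode(params, safe="/")


if __name__ == "__main__":
    print(build_price_url("白菜", page_no=2))
```

For example, `build_price_url("白菜", page_no=2)` returns `http://www.wbncp.com/PriceQuery.aspx?PageNo=2&ItemName=%E7%99%BD%E8%8F%9C&DateStart=2017/10/1&DateEnd=2020/3/31`.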

1. Crawling data with requests

```python
# _*_ coding:utf-8 _*_
# Developer: Weiyang
# Development time: 2020/4/12 16:03
# File name: Scrapy_lab1.py
# Development tool: PyCharm
import csv
import os
from datetime import datetime

import requests  # HTTP client
from bs4 import BeautifulSoup  # HTML parser

# Columns of the price table, in page order (same names as items.py)
FIELDS = ["name", "address", "norms", "unit", "high", "low", "price_ave", "price_date"]
DATA_DIR = r"D:\Data_pytorch"  # raw string, so the backslash is not treated as an escape


class Produce:
    def __init__(self, category):
        self.item_name = category  # product name used in the query
        self.price_data = []  # one list per table row

    # Read the data of one page; defaults to the first page
    def get_price_page_data(self, page_index=1):
        url = 'http://www.wbncp.com/PriceQuery.aspx'
        params = {'PageNo': page_index, 'ItemName': self.item_name,
                  'DateStart': '2017/10/1', 'DateEnd': '2020/3/31'}
        strhtml = requests.get(url, params=params, timeout=10)  # GET request
        strhtml.raise_for_status()
        soup = BeautifulSoup(strhtml.text, 'html.parser')  # parse the page
        # The price table was the 22nd <table> on the page when this was written;
        # print enumerate(soup.find_all('table')) to find it again if the layout changes.
        all_price_table = soup.find_all('table')[21]
        for tr in all_price_table.find_all('tr'):
            # strip() keeps each cell a plain string (split() would produce a list)
            cells = [td.get_text().strip() for td in tr.find_all('td')]
            if len(cells) < 8:
                continue  # skip header or empty rows
            # Columns 1-7: name, origin, specification, unit, highest, lowest, average price
            price_line = cells[:7]
            # Column 8: date such as 2020/3/31
            price_line.append(datetime.strptime(cells[7].replace('/', '-'), '%Y-%m-%d').date())
            self.price_data.append(price_line)

    # Get the data for all pages. The original looped 33 times; adjust page_count
    # to the number of result pages. Pages are numbered from 1 (PageNo=1 is the first).
    def get_price_data(self, page_count=33):
        for i in range(1, page_count + 1):
            self.get_price_page_data(i)

    # Write the crawled data to D:\Data_pytorch\<name>.csv
    def data_write_csv(self):
        self.get_price_data()
        file_address = os.path.join(DATA_DIR, self.item_name + ".csv")
        # 'w' overwrites the file; newline='' stops csv adding blank lines on Windows
        with open(file_address, 'w', encoding='utf-8', newline='') as file_csv:
            writer = csv.writer(file_csv)  # default comma delimiter, readable by DictReader
            writer.writerow(FIELDS)  # header row, used as dict keys when reading back
            writer.writerows(self.price_data)
        print(self.item_name + ": crawler data saved to file successfully!")

    # Read D:\Data_pytorch\<name>.csv back as a list of dicts
    def data_reader_csv(self):
        file_address = os.path.join(DATA_DIR, self.item_name + ".csv")
        with open(file_address, 'r', encoding='utf-8', newline='') as fp:
            # DictReader yields one dict per row, keyed by the header row
            data_list = list(csv.DictReader(fp))
        print(self.item_name + ": the data are as follows:")
        print(data_list)
        return data_list


if __name__ == "__main__":
    # The site appears to expect Chinese product names (e.g. 白菜 for Chinese cabbage);
    # the English names below are translations.
    item_names = ["Chinese cabbage", "Cabbage", "Potato", "Spinach", "Garlic sprouts"]
    for temp_name in item_names:
        produce = Produce(temp_name)
        produce.data_write_csv()
        data = produce.data_reader_csv()
```
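Two details of the row parsing and the CSV round trip are easy to get wrong: calling `.split()` on a cell's text returns a list, so each CSV cell would contain a list literal such as `['白菜']`, and a file written with a space delimiter cannot be read back correctly by a default (comma) `csv.DictReader`, which also takes the first line as its header. The sketch below isolates both steps without any network access. `parse_row` and `write_then_read` are hypothetical helpers; the field names follow items.py from the Scrapy version, and the sample row mentioned afterwards is made up.

```python
import csv
import io
from datetime import datetime

# Column order of the price table, named after the fields in items.py
FIELDS = ["name", "address", "norms", "unit", "high", "low", "price_ave", "price_date"]


def parse_row(cells):
    """Turn the 8 cell texts of one table row into a dict, or None for other rows."""
    values = [c.strip() for c in cells]  # strip() keeps a string; split() would give a list
    if len(values) != len(FIELDS):
        return None  # header or malformed row
    record = dict(zip(FIELDS, values))
    # Dates look like 2020/3/31; %m and %d also accept single-digit values
    record["price_date"] = datetime.strptime(record["price_date"], "%Y/%m/%d").date().isoformat()
    return record


def write_then_read(records):
    """Write records with a header row, then read them back with DictReader."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()  # DictReader uses this line as the dict keys
    writer.writerows(records)
    buf.seek(0)
    return list(csv.DictReader(buf))
```

With a made-up row, `parse_row([" 白菜 ", "Henan", "", "jin", "1.20", "0.80", "1.00", "2020/3/31"])` gives a dict whose `price_date` is `'2020-03-31'`, and `write_then_read` returns that dict unchanged.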

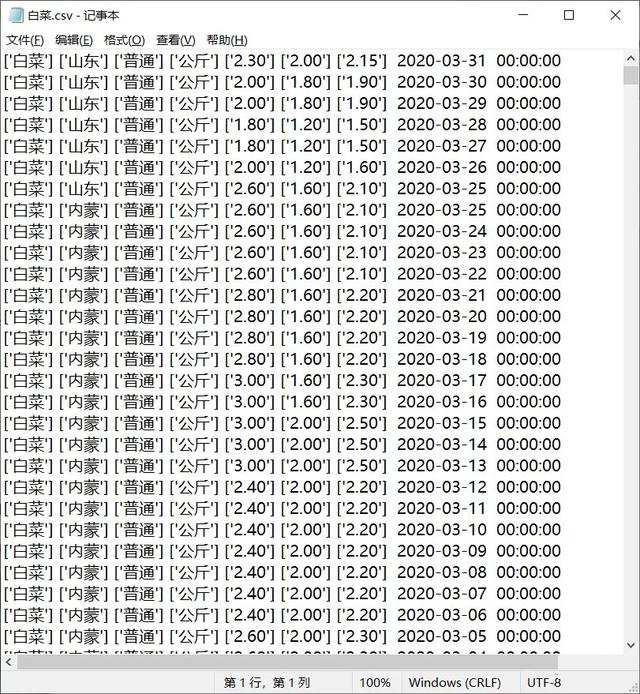

After running, the file displays as follows:

2. Crawling data with Scrapy

As in the previous case studies, I won't go through it step by step; here is the code directly:

The items.py code is as follows:

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy
from scrapy.loader import ItemLoader

try:
    from itemloaders.processors import MapCompose, TakeFirst  # newer Scrapy (separate itemloaders package)
except ImportError:
    from scrapy.loader.processors import MapCompose, TakeFirst  # older Scrapy


class PriceSpiderItemLoader(ItemLoader):
    # Custom ItemLoader: strip whitespace from every extracted string, then keep
    # only the first non-empty value, so each field holds a string instead of a list
    default_input_processor = MapCompose(str.strip)
    default_output_processor = TakeFirst()


class PriceSpiderItem(scrapy.Item):
    # define the fields for your item here
    name = scrapy.Field()  # product name
    address = scrapy.Field()  # place of origin
    norms = scrapy.Field()  # specification
    unit = scrapy.Field()  # unit of measure
    high = scrapy.Field()  # highest price
    low = scrapy.Field()  # lowest price
    price_ave = scrapy.Field()  # average price
    price_date = scrapy.Field()  # date
```
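An `ItemLoader` collects a list of extracted strings per field, passes it through the input processor, and then reduces it with the output processor. The plain-Python functions below only illustrate that behaviour; they are hypothetical stand-ins, not the Scrapy or itemloaders source. `TakeFirst` returns the first value that is neither `None` nor an empty string, so a whitespace-only text node such as `'\r\n   '` would be chosen unless the values are stripped first, which is what `MapCompose(str.strip)` does as an input processor.

```python
def strip_all(values):
    """Input step: strip whitespace from every extracted string (like MapCompose(str.strip))."""
    return [v.strip() for v in values]


def take_first(values):
    """Output step: return the first value that is neither None nor '' (like TakeFirst)."""
    for value in values:
        if value is not None and value != "":
            return value
    return None
```

Without stripping, `take_first(["\r\n   ", "白菜"])` returns the whitespace string; `take_first(strip_all(["\r\n   ", "白菜"]))` returns `"白菜"`.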

The settings.py code is as follows:

```python
# -*- coding: utf-8 -*-

# Scrapy settings for price_spider project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy.exporters import JsonLinesItemExporter


# By default, Chinese characters are exported as \uXXXX escapes, which are hard to read.
# Subclass the exporter and set ensure_ascii=False to keep the original characters.
class CustomJsonLinesItemExporter(JsonLinesItemExporter):
    def __init__(self, file, **kwargs):
        super(CustomJsonLinesItemExporter, self).__init__(file, ensure_ascii=False, **kwargs)


# Enable the newly defined exporter. Note that this maps the 'json' format to a
# JSON Lines exporter, so "-o items.json" writes one JSON object per line.
FEED_EXPORTERS = {
    'json': 'price_spider.settings.CustomJsonLinesItemExporter',
}

BOT_NAME = 'price_spider'

SPIDER_MODULES = ['price_spider.spiders']
NEWSPIDER_MODULE = 'price_spider.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
# USER_AGENT = 'price_spider (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
# CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
DOWNLOAD_DELAY = 3
```
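The effect of `ensure_ascii` can be seen with the standard `json` module alone: Scrapy's JSON exporters forward extra keyword arguments such as `ensure_ascii` to their JSON encoder, which subclasses `json.JSONEncoder`. The item below is made up for illustration.

```python
import json

item = {"name": "白菜", "price_ave": "1.00"}

escaped = json.dumps(item)                       # default ensure_ascii=True: \uXXXX escapes
readable = json.dumps(item, ensure_ascii=False)  # what the custom exporter asks for

print(escaped)
print(readable)
```

Recent Scrapy versions also provide the `FEED_EXPORT_ENCODING` setting; setting it to `'utf-8'` should give the same readable JSON without a custom exporter class. This only concerns JSON output: the run script in this article exports CSV, which Scrapy writes as UTF-8 by default.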

The spider logic (spider.py) is as follows:

```python
# _*_ coding:utf-8 _*_
# Developer: Weiyang
# Development time: 2020/4/16 14:55
# File name: spider.py
# Development tool: PyCharm
import scrapy

from price_spider.items import PriceSpiderItemLoader, PriceSpiderItem


class SpiderSpider(scrapy.Spider):
    name = 'spider'
    allowed_domains = ['www.wbncp.com']
    # One start URL per product. The first ItemName is the URL-encoded form of 白菜
    # (Chinese cabbage); "Tudou" (potato) and "Celery" are shown as in this article and
    # may need to be replaced by the URL-encoded Chinese names the site expects.
    start_urls = [
        'http://www.wbncp.com/PriceQuery.aspx?PageNo=1&ItemName=%e7%99%bd%e8%8f%9c'
        '&DateStart=2017/10/1&DateEnd=2020/3/31',
        'http://www.wbncp.com/PriceQuery.aspx?PageNo=1&ItemName=Tudou'
        '&DateStart=2017/10/1&DateEnd=2020/3/31',
        'http://www.wbncp.com/PriceQuery.aspx?PageNo=1&ItemName=Celery'
        '&DateStart=2017/10/1&DateEnd=2020/3/31',
    ]

    def parse(self, response):
        # Each data row of the price table has class "Center" or "Center Gray"
        item_nodes = response.xpath("//tr[@class='Center' or @class='Center Gray']")
        for item_node in item_nodes:
            item_loader = PriceSpiderItemLoader(item=PriceSpiderItem(), selector=item_node)
            # Column n of the row -> item field (" ::text" takes all text inside the cell)
            item_loader.add_css("name", "td:nth-child(1) ::text")
            item_loader.add_css("address", "td:nth-child(2) ::text")
            item_loader.add_css("norms", "td:nth-child(3) ::text")
            item_loader.add_css("unit", "td:nth-child(4) ::text")
            item_loader.add_css("high", "td:nth-child(5) ::text")
            item_loader.add_css("low", "td:nth-child(6) ::text")
            item_loader.add_css("price_ave", "td:nth-child(7) ::text")
            item_loader.add_css("price_date", "td:nth-child(8) ::text")
            yield item_loader.load_item()

        # Follow the "next page" link (the 10th <a> in the pager) while it exists;
        # Scrapy's duplicate filter drops a link that points back to a page already seen.
        next_page = response.xpath("//*[@id='cphRight_lblPage']/div/a[10]/@href").extract_first()
        if next_page is not None:
            yield scrapy.Request(response.urljoin(next_page), callback=self.parse)
```
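The eight `add_css` calls differ only in the column number, so one option is to derive the selectors from a list of field names. This is a minimal sketch: `FIELDS` and `column_selectors` are hypothetical names, and the field order assumes, as the original selectors do, that the table columns appear in the same order as the fields in items.py.

```python
# Table columns in page order, named after the PriceSpiderItem fields
FIELDS = ["name", "address", "norms", "unit", "high", "low", "price_ave", "price_date"]


def column_selectors(fields):
    """Map each field to a CSS selector for the n-th <td> of a row (nth-child is 1-based)."""
    return {field: f"td:nth-child({i}) ::text" for i, field in enumerate(fields, start=1)}


if __name__ == "__main__":
    for field, css in column_selectors(FIELDS).items():
        print(field, "->", css)
```

Inside `parse`, the eight calls would then become `for field, css in column_selectors(FIELDS).items(): item_loader.add_css(field, css)`. Keeping the column order in one list makes it a one-line change if the site ever reorders its columns.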

Instead of typing the crawl command in a terminal, the spider can be started from a script (price_scrapy_main.py):

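```python
# _*_ coding:utf-8 _*_
# Developer: Weiyang
# Development time: 2020/4/16 14:55
# File name: price_scrapy_main.py
# Development tool: PyCharm
from scrapy.cmdline import execute

# Same as running "scrapy crawl spider -o price_data.csv" in the project root
# (the directory containing scrapy.cfg); the output format is inferred from ".csv".
# -o appends to an existing file; Scrapy 2.4+ also accepts -O to overwrite it.
execute(["scrapy", "crawl", "spider", "-o", "price_data.csv"])
```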
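If Excel shows the Chinese text in `price_data.csv` as garbled characters when the file is opened directly (rather than imported with an explicit encoding), a common fix, offered here as an assumption rather than something the original article did, is to write the CSV with a UTF-8 byte-order mark, for example `FEED_EXPORT_ENCODING = 'utf-8-sig'` in settings.py. The standard-library sketch below shows what the `utf-8-sig` codec changes; the rows and the helper `write_csv` are made up for illustration.

```python
import codecs
import csv
import os
import tempfile


def write_csv(path, rows, encoding):
    """Write rows to a CSV file with the given text encoding."""
    with open(path, "w", encoding=encoding, newline="") as f:
        csv.writer(f).writerows(rows)


rows = [["name", "price_ave"], ["白菜", "1.00"]]
tmp_dir = tempfile.mkdtemp()
plain_path = os.path.join(tmp_dir, "plain.csv")
sig_path = os.path.join(tmp_dir, "sig.csv")
write_csv(plain_path, rows, "utf-8")
write_csv(sig_path, rows, "utf-8-sig")  # same content, prefixed with a BOM

with open(plain_path, "rb") as f:
    plain_bytes = f.read()
with open(sig_path, "rb") as f:
    sig_bytes = f.read()

print(sig_bytes[:3] == codecs.BOM_UTF8)
```

The only difference between the two files is the 3-byte BOM at the start, which Excel uses to detect UTF-8.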

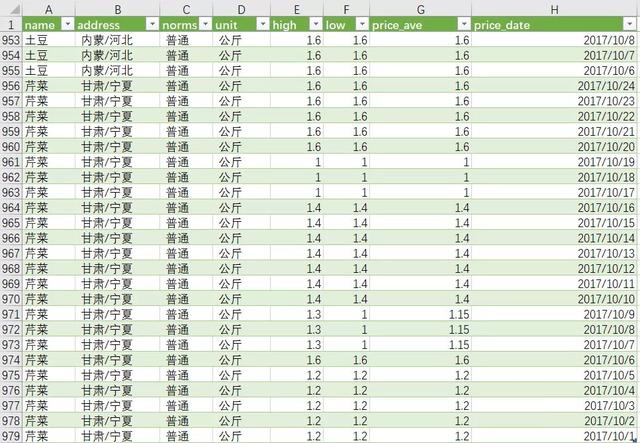

After the operation, the csv data is imported into excel, and the results are as follows:

3. Lessons learned

1. requests is very flexible, but once you need to crawl a lot of data the code gets long and inconvenient, and Scrapy is more convenient. This is especially true for crawling multiple pages and for combining horizontal (pagination) and vertical (detail page) crawling, where Scrapy is super smooth!

2. With Scrapy, most of the work is configuring the settings file (settings.py) and writing the crawl logic in the spider file (spider.py in this article). Writing the selectors is the troublesome part.
