Preface

In the last article, I used WordCount as an example to explain the code structure and execution mechanism of MapReduce. This article builds a deeper understanding of MapReduce through a few more simple examples.

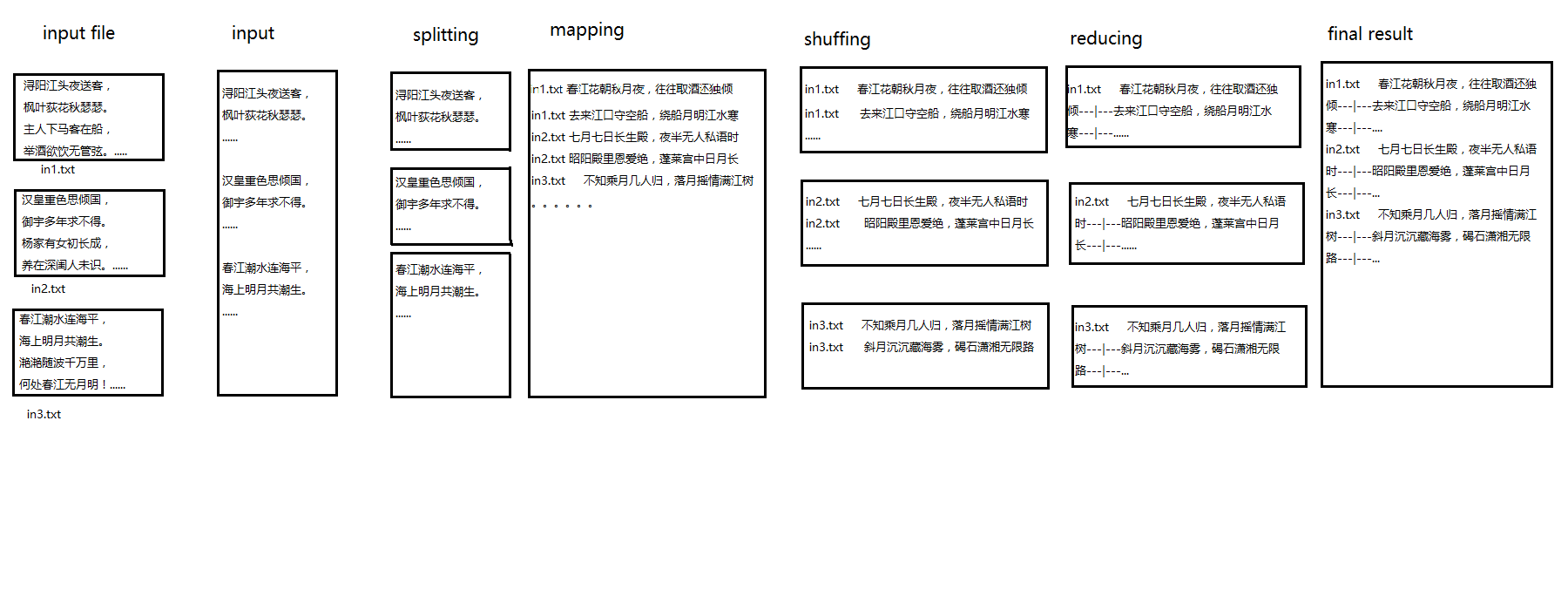

1. Data Retrieval

Problem description

Given a large amount of text data, find all sentences that contain a given string.

Solution

This problem is relatively simple. In map, the name of the file currently being read is obtained and used as the key. The data is split into sentences at periods, and each sentence is checked in turn; if it contains the specified string, it is output as the value. In reduce, the sentences that belong to the same file are concatenated and output.
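The same logic can be tried out locally before running the job. The sketch below is a plain-Java simulation, not Hadoop code: the class `SentenceSearchSketch`, its methods and the two sample file contents are made up for illustration, and a `LinkedHashMap` stands in for the grouping that the shuffle performs in the real job. The map step splits each file's text at periods and keeps the sentences that contain the search word; the reduce step joins the sentences of one file with the same `---|---` separator the job uses.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;

// Plain-Java sketch (not Hadoop) of the sentence search job.
final class SentenceSearchSketch {

    // Map + shuffle: file name -> sentences of that file containing the word.
    static Map<String, List<String>> mapAndGroup(Map<String, String> files, String word) {
        Map<String, List<String>> grouped = new LinkedHashMap<>();
        for (Map.Entry<String, String> file : files.entrySet()) {
            // Only '.' is a delimiter, so each token is a whole sentence.
            StringTokenizer st = new StringTokenizer(file.getValue(), ".");
            while (st.hasMoreTokens()) {
                String sentence = st.nextToken().trim();
                if (sentence.contains(word)) {
                    grouped.computeIfAbsent(file.getKey(), k -> new ArrayList<>()).add(sentence);
                }
            }
        }
        return grouped;
    }

    // Reduce: concatenate the sentences of one file.
    static String reduce(List<String> sentences) {
        StringBuilder sb = new StringBuilder();
        for (String s : sentences) {
            sb.append(s).append("---|---");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> files = new LinkedHashMap<>();
        files.put("a.txt", "The moon is bright. Rain falls. A month later the moon rose.");
        files.put("b.txt", "No match here. Next month we leave.");
        for (Map.Entry<String, List<String>> e : mapAndGroup(files, "month").entrySet()) {
            System.out.println(e.getKey() + "\t" + reduce(e.getValue()));
        }
    }
}
```

Files without any matching sentence produce no map output at all, so they do not appear in the result, which matches how the job behaves.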

Test data

Input:

in1.txt:

Xunyang Jiangtou Night Delivery, Maple Leaf Silver Flowers autumn rustle. The owner dismounted the passenger from the boat and drank without an orchestra. If you are drunk, you will be separated. If you are drunk, you will be separated from the moon in the vast river. Suddenly heard the sound of Pipa on the water, the host forgot to return to the guest. Who is the bomber? The Pipa stops appetite and speaks late. Ships were moved to invite each other, wine was added and the dinner was reopened. A thousand calls begin to come out, still holding the pipa half-covered. Rotating axle plucked three or two strings, not tuned first affectionate. The string conceals the voice and thinks, as if telling you that you have no ambition in your life. Low eyebrow letter formalities renewed, said the hearts of infinite things. Light and slow twist and wipe and pick again, first for six units after neon clothes. Big strings are noisy like rain, and small strings are whispering. Noise cuts the wrong bullets, big pearls and small pearls fall on the jade plate. The Ying dialect in Jiankuan is slippery, but it's hard for the Youyan Spring to flow under the ice. The cold and astringent strings of the ice spring are frozen, and the frozen spring will never rest silently for a while. Don't be sad and hate life. Silence is better than sound. Silver bottles burst through the water and iron horses protruded with knives and guns. At the end of the song, pay attention to the painting, and the four strings sound like cracked silk. The East ship and the West boat are silent, but the autumn moon is white in the heart of the river. Meditation in the plucking chord, straightening up clothes and restraining. I said that I was a Beijing woman and I lived under the shrimp and Toad mausoleum. Thirteen learnt to play pipa, which is the first part of the workshop. Quba once taught good people to wear clothes, dressed up as being envied by Qiu Niang. 
Five mausoleums are young and entangled, and a song of red tapestry is unknown. The silver grate of the head of the mole smashes, and the red skirt turns over the wine. This year laughs again next year, autumn, moon, spring breeze and other leisure. The younger brother went to the army and his aunt died, so he went to the evening and came to the morning. In front of the door, pommel horses are neglected. The eldest man marries a businesswoman. Businessmen pay more attention to profits than to departure. They went to Fuliang to buy tea the previous month. Going to Jiangkou to guard the empty boat, around the Yueming River cold water. Late at night, I suddenly dreamed of teenagers, and my eyes were red and dry. I heard the pipa sigh, and I heard the words heavy haw. The same is the end of the world, why have you ever known each other when you meet each other! I have been living in Xunyang City, a sick city, since I resigned from the emperor last year. Xunyang is a remote place without music and can't hear silk and bamboo all the year round. Living near the Lujiang River, low humidity, Huanglu bitter bamboo around the house. What did you hear in the twilight? The cuckoo crows and the blood ape mourns. In the autumn and moonlit night, the flowers of the Spring River tend to drink alone. Are there no folk songs and village flutes? Dumbness and mockery are hard to hear. Tonight, I heard the words of Junpipa, like listening to Xianle temporarily. Mo Cigeng sat down to play a song and remake Pipa Xing for Jun. I feel that I have spoken this word for a long time, but I am sitting in a hurry. Sorrow does not sound forward, full of heavy news are crying. Who weeps most in the seat? Jiangzhou Sima Qing Shirt is wet.

in2.txt:

The emperor of the Han Dynasty thought deeply about his country, but the imperial court couldn't ask for it for many years. Yang's family has a girl who has just grown up and is not known by a boudoir. Natural beauty is hard to abandon, once chosen by the king. Looking back and laughing at Baimeisheng, Six Palaces Pink Dai without color. Spring cold gives bath Huaqing Pool, hot spring water slips and washes and coagulates grease. The waiter's inability to support her is the beginning of the new ChengEnze. Cloud sideburns with golden faces and lotus tents warm for spring night. Spring Festival Night is bitter and short day rises, from then on the emperor does not early dynasty. Chenghuan serves dinner without leisure. Spring outing night is a special night. There are 3,000 beautiful people in the back palace, 3,000 favorites in one. Golden house dressed up as a charming night, Yulou banquet drunk and spring. Sisters and brothers all belong to the earth, poor and glorious. So the world's parents will not give birth to new boys and new daughters. Ligong high into the Qingyun, Xianle wind blowing everywhere. Slow singing and dancing are not enough for the emperor. Yuyang nipple comes agitatingly and breaks the feather song of neon clothes. Nine heavy cities are full of smoke and dust, and they ride thousands of miles southwest. Cuihua swayed back and went west for more than a hundred miles. The Six Armies died before they turned their heads. No one collects the flowers, and the flowers scratch their heads. The king could not save his face, but looked back at the blood and tears flowing. Huang Ai is scattered in the wind, and the cloud stack lingers in the Jiangdeng Jiange Pavilion. There are few pedestrians under Mount Emei and the flag is sunless and thin. Shu River is green, and the Lord is in the twilight. The palace sees the sad moonlight, and the rain hears the bell break at night. The sky turns back to the dragon's rein and hesitates to go there. 
In the soil below the Mawei slope, there is no death of Yuyan Empty. The monarchs and ministers cared for each other's clothes and looked eastward at Dumen and believed in Horses and Horses. Returning to Chiyuan is still the same, Taiye lotus not Yangliu. Hibiscus is like a willow on the face and a brow on the face. Spring wind, peach and plum blossom, autumn rain, when the leaves fall. There are many autumn grasses in the south of Xigong, and the leaves are red. Liyuan disciple Bai Haixin, Jiaofang Jianqing'e old. Night hall fluorescent thought quietly, lone lamp picked up not sleeping. Late bells and drums early night, Geng Geng Xinghe is about to dawn. The mandarin duck tiles are full of frost and cold, and the jadeite tiles are full of cold. Long life and death do not go through years, the soul has never come to dream. Linyong Taoist priest Hongduke, can be sincere soul. In order to impress the king, he taught the academicians to look for them diligently. Exhaust the air and control the air, run like electricity, ascend into the sky and seek everywhere. Upper poor green falls down to Huangquan, and both are missing. Suddenly I heard that there are fairy mountains in the sea, and the mountains are in the void. The Pavilion is exquisite and five clouds rise, among which there are many fairies. One of the characters is too true, and the appearance of snow skin is uneven. Jinque Xixiang Xiaoyu Zhao, a converted Xiaoyu newspaper double. When I heard of the emperor of the Han family, I was shocked by the dream in Jiuhua tent. The pillow of the package hovered and the Pearl foil screen came apart. Cloud sideburns half asleep, the Corolla is incomplete down the hall. The wind blows the fairy jacket and floats like the dance of neon clothes and feathers. Yurong lonely tears dried up, pear blossom with spring rain. Looking at King Xie affectionately, his voice and face are different. Zhaoyang Palace is full of love, and Penglai Palace is full of sunshine and moon. 
Looking back at the human world, there is no dust in Chang'an. Only the old things show affection and the neptunium alloy will be sent to us. Leave one share in one fan, and brace gold in one. But the heart of teaching is like gold, and heaven and earth meet. Farewell courteous re-send words, there are vows two hearts know. On July 7, the Hall of Eternal Life was silent at midnight. On high, we'd be two birds flying wing to wing. On earth, two trees with branches twined from spring to spring. This hatred lasts forever and lasts forever.

in3.txt:

The Spring River tide is even at sea level, and the bright moon and the sea are intertidal. There are thousands of miles of sunshine in the spring river, where there is no moon in the spring river! The river flows around Fangdian, and the moonlight flowers and forests look like grains. Flowing frost in the air can't fly, and white sand in Ting Shang can't be seen. The River and the sky are all clean and dusty, and the lone moon in the sky is bright. Who first saw the moon by the river? When did Jiang Yue shine at the beginning of the year? Life has lasted for generations, but the years are similar. I don't know who Jiangyue will be, but I see the Yangtze River delivering water. White clouds go for a long time, and Qingfengpu is full of sorrow. Whose family is Bianzhou tonight? Where is the Acacia Moon Tower? Poor upstairs hovering last month, should be taken away from the makeup mirror. Jade curtains can't be rolled up and brushed back on the pounding anvil. At this time, I don't know each other, and I would like to show you month by month. The wild goose flies far and wide, and the ichthyosaur diving is written. Last night, the idle pool dreamed of falling flowers, and the poor spring did not return home. The river is running out of spring, and the moon falls back to the west. The oblique moon deposits sea fog and the Jieshi Xiaoxiang Infinite Road. I don't know how many people return in a month, but the falling moon is full of trees.

Expected results:

in1.txt	Spring River flowers fall into the autumn moonlight night, often taking wine and leaning alone---|---to the river mouth empty boat, around the boat Yueming River cold water---|---businessmen pay more attention to profit than departure, the previous month Fuliang buy tea---|---laughter this year, return next year, autumn, spring breeze and other leisure---|---East boat West Boat silence, only to see the river heart of the autumn---|---will not be drunk sadly farewell, when the vast river soaks in the Moon---|---

in2.txt	On July 7, Changsheng Palace, when nobody whispered in the middle of the night---|---Zhaoyang Palace is full of love, and Penglai Palace is full of sun and moon---|---the palace sees the sad moonlight, and the rain hears the bell and bowel break at night---|---

in3.txt	I don't know how many people return in the month, and the moon falls in love with the trees of the river---|---the inclined moon sinks in sea fog, the infinite road of Xiaoxiang in Jieshi---|---the river flows away in spring, and the river pool falls back to the West---|---at this time, I don't know each other. I would like to see you---|---the pitiful building wandering in the month after month, who should leave the dressing mirror table---|---who's Bianzhou? Where is the Acacia Moon Tower?---|---I don't know who the river is waiting for, but I see the Yangtze River delivering water---|---When did Jiang Yue shine at the beginning of the year?---|---The river flows around Fangdian, and the moonlight blossoms and forests look like grains---|---The Spring River tide is at sea level, and the bright moon and the sea tide are at sea---|---

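The results above are the sentences in each file that contain the search word "month". In the original Chinese version of this example the search term is the character 月, which means both "month" and "moon"; that is why sentences about the moon also appear in the results.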

Illustration

The figure below illustrates the process.

Code

    package train;

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.FileSplit;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    /**
     * Find the sentences that contain a specified string
     * @author hadoop
     */
    public class Search {

        public static class Map extends Mapper<Object, Text, Text, Text> {

            // The string to search for
            private static final String word = "month";

            @Override
            public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
                // Name of the file this split comes from; used as the output key
                FileSplit fileSplit = (FileSplit) context.getInputSplit();
                String fileName = fileSplit.getPath().getName();

                // Split into sentences at periods. Every character of the delimiter
                // string is a delimiter, so "." (not ". ") keeps whole sentences.
                StringTokenizer st = new StringTokenizer(value.toString(), ".");
                while (st.hasMoreTokens()) {
                    String sentence = st.nextToken().trim();
                    if (sentence.contains(word)) {
                        context.write(new Text(fileName), new Text(sentence));
                    }
                }
            }
        }

        public static class Reduce extends Reducer<Text, Text, Text, Text> {

            @Override
            public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
                // Concatenate all matching sentences of one file
                StringBuilder lines = new StringBuilder();
                for (Text value : values) {
                    lines.append(value.toString()).append("---|---");
                }
                context.write(key, new Text(lines.toString()));
            }
        }

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            conf.set("mapred.job.tracker", "localhost:9001");
            // Input and output paths are hard-coded for this example
            args = new String[]{"hdfs://localhost:9000/user/hadoop/input/search_in", "hdfs://localhost:9000/user/hadoop/output/search_out"};

            // Check the command-line arguments
            String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: search <in> <out>");
                System.exit(2);
            }

            // Configure the job name
            Job job = new Job(conf, "search");

            // Configure the job classes
            job.setJarByClass(Search.class);
            job.setMapperClass(Map.class);
            job.setReducerClass(Reduce.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

In map, context.getInputSplit() returns the input split being processed, from which the name of the file containing the data is obtained. The data read is then split into sentences at periods and traversed; every sentence that contains the specified string "month" is written with the file name as the key and the sentence as the value.
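One detail in the mapper deserves a closer look: the second argument of `java.util.StringTokenizer` is a set of delimiter characters, not a delimiter string. Passing `". "` therefore splits at every period and at every space, which yields single words instead of sentences, so a period alone is the delimiter needed here. The small demo below (the class name `DelimiterDemo` is only illustrative) shows the difference.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringTokenizer;

// Each character of the delimiter argument is a separate delimiter.
final class DelimiterDemo {

    static List<String> tokens(String text, String delims) {
        List<String> out = new ArrayList<>();
        StringTokenizer st = new StringTokenizer(text, delims);
        while (st.hasMoreTokens()) {
            out.add(st.nextToken());
        }
        return out;
    }

    public static void main(String[] args) {
        String text = "Next month we leave. The moon rose.";
        // ". " splits at periods AND spaces: [Next, month, we, leave, The, moon, rose]
        System.out.println(tokens(text, ". "));
        // "." splits only at periods: two whole sentences
        System.out.println(tokens(text, "."));
    }
}
```

The sentence tokens keep their leading space, so trimming each one before use gives cleaner output.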

Reduce is a simple merging step: it concatenates the sentences of each file, separated by "---|---".

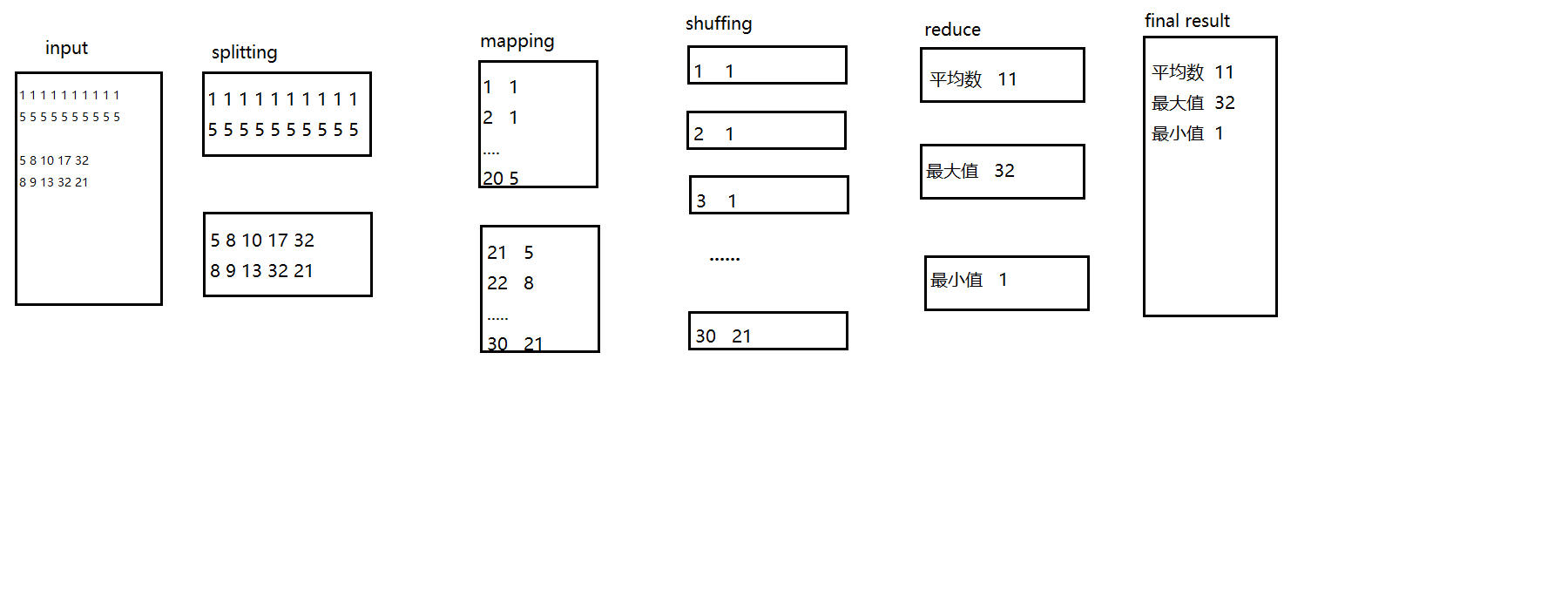

2. Maximum, Minimum and Average

Problem description

Given a set of numbers, find the maximum and the minimum, and compute the average.

Solution

This problem is also very simple. In map, read and split the data, and output every number as the value under the same constant key (1), so that all numbers reach a single reduce call. In reduce, traverse the values, counting and summing them while comparing sizes to track the maximum and minimum, and finally compute the average.
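The reduce step can be checked without a cluster. The sketch below is a plain-Java stand-in (the class `MinMaxAverageSketch` and its method are hypothetical, not Hadoop APIs) that makes the same single pass over all values: count, sum, maximum and minimum, followed by an integer division. It sums into a `long` to avoid overflow on large inputs and assumes at least one value. Fed the numbers of in1.txt and in2.txt as listed in the test data, it prints `Average 7 Maximum 32 Minimum 1`: the 30 numbers sum to 215, and 215 / 30 is 7 in integer arithmetic, so the published average of 11 does not follow from the sample input as reproduced here.

```java
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch (not Hadoop) of the single-key reduce.
final class MinMaxAverageSketch {

    // Returns {average, max, min}; average uses integer division like the job.
    static int[] reduce(List<Integer> values) {
        int count = 0;
        long sum = 0;
        int max = Integer.MIN_VALUE;
        int min = Integer.MAX_VALUE;
        for (int v : values) {
            max = Math.max(max, v);
            min = Math.min(min, v);
            sum += v;
            count++;
        }
        return new int[] {(int) (sum / count), max, min};
    }

    public static void main(String[] args) {
        List<Integer> all = Arrays.asList(
            1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, // in1.txt
            5, 8, 10, 17, 32, 8, 9, 13, 32, 21);                        // in2.txt
        int[] r = reduce(all);
        // Prints: Average 7 Maximum 32 Minimum 1
        System.out.println("Average " + r[0] + " Maximum " + r[1] + " Minimum " + r[2]);
    }
}
```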

Test data

Input

in1.txt

1 1 1 1 1 1 1 1 1 1 5 5 5 5 5 5 5 5 5 5

in2.txt

5 8 10 17 32 8 9 13 32 21

Expected results

    Average	11
    Maximum	32
    Minimum	1

Illustration

Code

    package train;

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    /**
     * Calculate the average, maximum and minimum of a set of numbers
     * @author hadoop
     */
    public class Average1 {

        public static class Map extends Mapper<Object, Text, IntWritable, IntWritable> {

            // Constant key: every number goes to the same reduce call
            private static final IntWritable no = new IntWritable(1);

            @Override
            public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
                // Split the line on whitespace and emit each number under the constant key
                StringTokenizer st = new StringTokenizer(value.toString());
                while (st.hasMoreTokens()) {
                    context.write(no, new IntWritable(Integer.parseInt(st.nextToken())));
                }
            }
        }

        public static class Reduce extends Reducer<IntWritable, IntWritable, Text, IntWritable> {

            @Override
            public void reduce(IntWritable key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
                int count = 0;                 // number of values
                long sum = 0;                  // sum of values (long avoids overflow)
                int max = Integer.MIN_VALUE;
                int min = Integer.MAX_VALUE;
                for (IntWritable val : values) {
                    int v = val.get();
                    max = Math.max(max, v);
                    min = Math.min(min, v);
                    count++;
                    sum += v;
                }
                int average = (int) (sum / count);  // integer average
                context.write(new Text("Average"), new IntWritable(average));
                context.write(new Text("Maximum"), new IntWritable(max));
                context.write(new Text("Minimum"), new IntWritable(min));
            }
        }

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            //conf.set("mapred.job.tracker", "localhost:9001");
            conf.addResource("config.xml");
            // Input and output paths are hard-coded for this example
            args = new String[]{"hdfs://localhost:9000/user/hadoop/input/average1_in", "hdfs://localhost:9000/user/hadoop/output/average1_out"};

            // Check the command-line arguments
            String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: average1 <in> <out>");
                System.exit(2);
            }

            // Configure the job name
            Job job = new Job(conf, "average1");

            // Configure the job classes
            job.setJarByClass(Average1.class);
            job.setMapperClass(Map.class);
            job.setReducerClass(Reduce.class);

            // The map output types (IntWritable, IntWritable) differ from the
            // reduce output types (Text, IntWritable), so set both explicitly
            job.setMapOutputKeyClass(IntWritable.class);
            job.setMapOutputValueClass(IntWritable.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

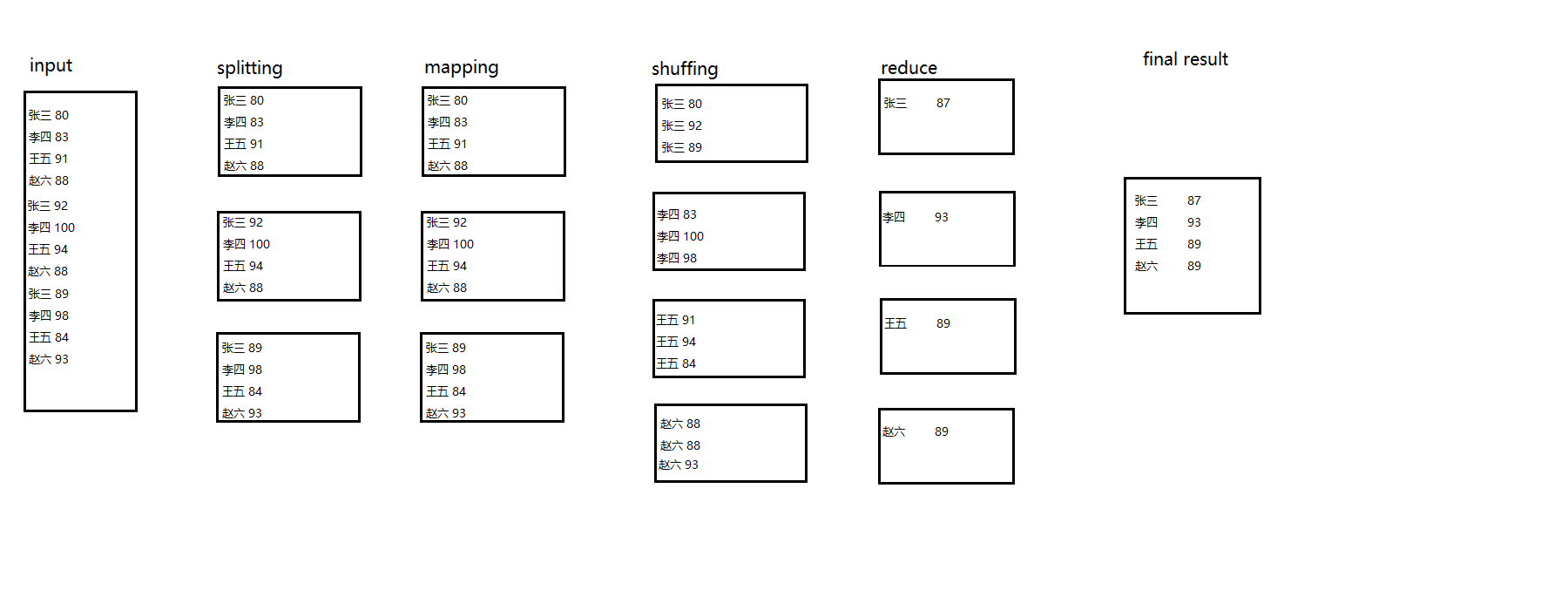

3. Average Scores

Problem description

Given three input files, each containing several students' scores for one subject, calculate each student's average score over the three subjects.

Solution

This problem is also very simple. In map, parse each line and output the student's name as the key and the score as the value. In reduce, add up each student's scores and divide by their number to get the average.
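Below is a plain-Java sketch of the same map, shuffle and reduce flow; the class `StudentAverageSketch` and its methods are hypothetical, and a `TreeMap` stands in for the shuffle. One assumption worth stating: each input line has the form `name score`, and because the translated names contain a space (for example `Zhang San`), the sketch takes the last space-separated field as the score and everything before it as the name, rather than simply taking the first two tokens. Grouping is by exact name, so every name is spelled the same way in all three files here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch (not Hadoop): per-student integer average.
final class StudentAverageSketch {

    // Map + shuffle: parse "name score" lines and group scores by name.
    // The score is the LAST space-separated field, so names with a space
    // stay intact. Assumes every non-blank line contains a space.
    static Map<String, List<Integer>> mapAndGroup(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            String trimmed = line.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            int cut = trimmed.lastIndexOf(' ');
            String name = trimmed.substring(0, cut).trim();
            int score = Integer.parseInt(trimmed.substring(cut + 1));
            grouped.computeIfAbsent(name, k -> new ArrayList<>()).add(score);
        }
        return grouped;
    }

    // Reduce: integer average of one student's scores.
    static int reduce(List<Integer> scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return sum / scores.size();
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
            "Zhang San 80", "Li Si 83", "Wang Wu 91", "Zhao Liu 88",   // in1.txt
            "Zhang San 92", "Li Si 100", "Wang Wu 94", "Zhao Liu 88",  // in2.txt
            "Zhang San 89", "Li Si 98", "Wang Wu 84", "Zhao Liu 93");  // in3.txt
        // Prints Li Si 93, Wang Wu 89, Zhang San 87, Zhao Liu 89 (sorted by name)
        for (Map.Entry<String, List<Integer>> e : mapAndGroup(lines).entrySet()) {
            System.out.println(e.getKey() + "\t" + reduce(e.getValue()));
        }
    }
}
```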

Test data

Input:

in1.txt

    Zhang San 80
    Li Si 83
    Wang Wu 91
    Zhao Liu 88

in2.txt

    Zhang San 92
    Li Si 100
    Wang Wu 94
    Zhao Liu 88

in3.txt

    Zhang San 89
    Li Si 98
    Wang Wu 84
    Zhao Liu 93

Expected results

    Zhang San	87
    Li Si	93
    Wang Wu	89
    Zhao Liu	89

Illustration

Code

    package train;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    /**
     * Calculate the average score of each student
     * @author hadoop
     */
    public class Average2 {

        public static class Map extends Mapper<Object, Text, Text, IntWritable> {

            @Override
            public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
                // TextInputFormat passes one line per call, e.g. "Zhang San 80"
                String line = value.toString().trim();
                // The score is the last field; everything before it is the name,
                // which may itself contain a space
                int cut = Math.max(line.lastIndexOf(' '), line.lastIndexOf('\t'));
                if (cut < 0) {
                    return;  // skip blank or malformed lines
                }
                String name = line.substring(0, cut).trim();
                String score = line.substring(cut + 1);
                // Output: name -> score
                context.write(new Text(name), new IntWritable(Integer.parseInt(score)));
            }
        }

        public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
                int count = 0;  // number of scores
                int sum = 0;    // sum of scores
                for (IntWritable val : values) {
                    count++;
                    sum += val.get();
                }
                int average = sum / count;  // integer average
                context.write(key, new IntWritable(average));
            }
        }

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            //conf.set("mapred.job.tracker", "localhost:9001");
            conf.addResource("config.xml");
            // Input and output paths are hard-coded for this example
            args = new String[]{"hdfs://localhost:9000/user/hadoop/input/average2_in", "hdfs://localhost:9000/user/hadoop/output/average2_out"};

            // Check the command-line arguments
            String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: average2 <in> <out>");
                System.exit(2);
            }

            // Configure the job name
            Job job = new Job(conf, "average2");

            // Configure the job classes
            job.setJarByClass(Average2.class);
            job.setMapperClass(Map.class);
            job.setReducerClass(Reduce.class);

            // Map and reduce output types are the same (Text, IntWritable)
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

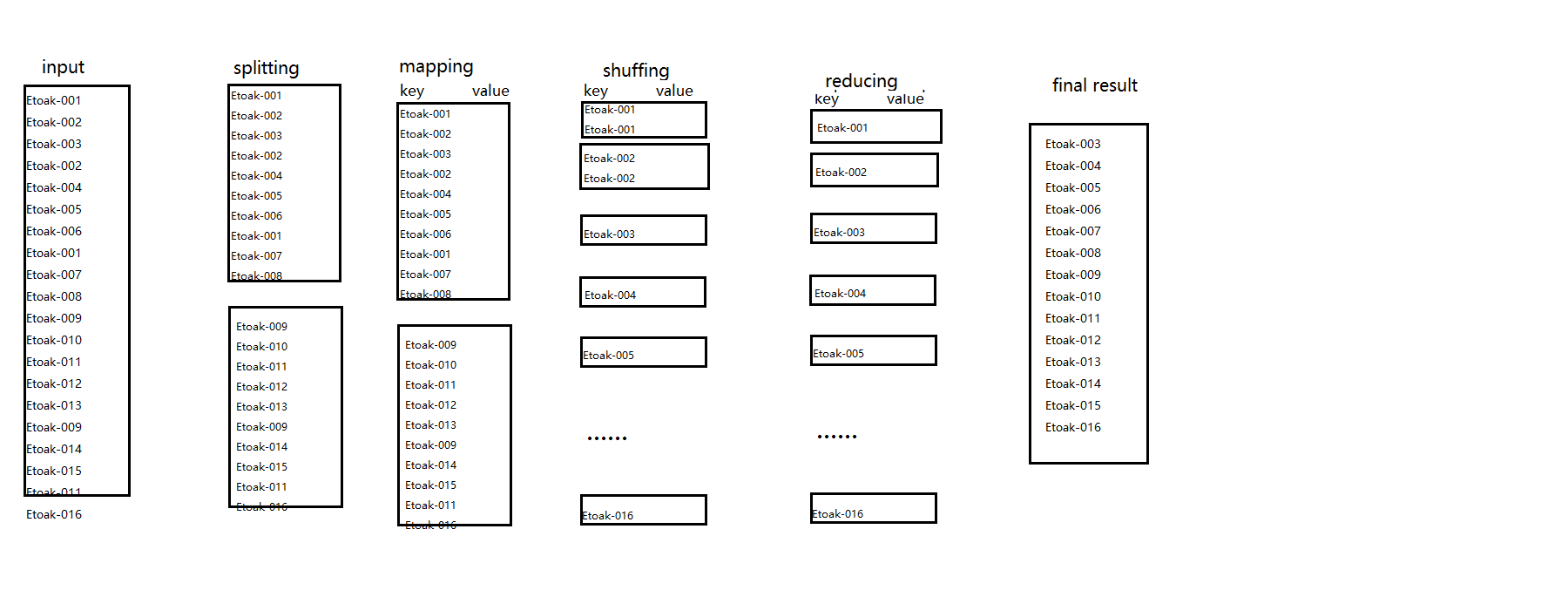

4. Data Deduplication

Problem description

Given several sets of data, remove duplicate records and output each distinct record once.

Solution

During the shuffle stage, records are grouped by key, so each distinct key reaches the reduce method exactly once. It is therefore enough for map to output every line read from the file as the key with an empty value, and for reduce to write each key it receives, again with an empty value.
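The effect of the shuffle can be imitated locally with a sorted set. In the plain-Java sketch below (the class `DedupSketch` is hypothetical, not part of Hadoop), adding a line to a `TreeSet` plays the role of emitting it as a key: duplicates collapse into a single entry, and iteration returns the keys in ascending order, which is also the order a single reducer sees after the shuffle's sort.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Plain-Java sketch (not Hadoop) of deduplication through key grouping.
final class DedupSketch {

    static Set<String> dedup(List<String> lines) {
        // A TreeSet keeps one copy of each key, in ascending order,
        // like the keys a single reducer receives after the shuffle.
        Set<String> keys = new TreeSet<>();
        for (String line : lines) {
            keys.add(line);   // map: emit (line, "")
        }
        return keys;          // reduce: write each distinct key once
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
            "Etoak-001", "Etoak-002", "Etoak-003", "Etoak-002", "Etoak-001");
        dedup(lines).forEach(System.out::println); // Etoak-001, Etoak-002, Etoak-003
    }
}
```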

Test data

Input

in1.txt

    Etoak-001
    Etoak-002
    Etoak-003
    Etoak-002
    Etoak-004
    Etoak-005
    Etoak-006
    Etoak-001
    Etoak-007
    Etoak-008

in2.txt

    Etoak-009
    Etoak-010
    Etoak-011
    Etoak-012
    Etoak-013
    Etoak-009
    Etoak-014
    Etoak-015
    Etoak-011
    Etoak-016

Expected results:

    Etoak-001
    Etoak-002
    Etoak-003
    Etoak-004
    Etoak-005
    Etoak-006
    Etoak-007
    Etoak-008
    Etoak-009
    Etoak-010
    Etoak-011
    Etoak-012
    Etoak-013
    Etoak-014
    Etoak-015
    Etoak-016

Illustration

Code

    package train;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    /**
     * Data deduplication
     * @author hadoop
     */
    public class Duplicate {

        // Map: write each input line directly as the output key, with an empty value
        public static class Map extends Mapper<Object, Text, Text, Text> {

            private static final Text EMPTY = new Text("");

            @Override
            public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
                context.write(value, EMPTY);
            }
        }

        // The shuffle groups identical keys, so reduce receives each distinct line
        // once and writes it as the output key, again with an empty value
        public static class Reduce extends Reducer<Text, Text, Text, Text> {

            private static final Text EMPTY = new Text("");

            @Override
            public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
                context.write(key, EMPTY);
            }
        }

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            conf.set("mapred.job.tracker", "localhost:9001");
            // Input and output paths are hard-coded for this example
            args = new String[]{"hdfs://localhost:9000/user/hadoop/input/duplicate_in", "hdfs://localhost:9000/user/hadoop/output/duplicate_out"};

            // Check the command-line arguments
            String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: duplicate <in> <out>");
                System.exit(2);
            }

            // Configure the job name
            Job job = new Job(conf, "duplicate");

            // Configure the job classes
            job.setJarByClass(Duplicate.class);
            job.setMapperClass(Map.class);
            // Reduce also works as a combiner: removing duplicates twice gives
            // the same result as removing them once
            job.setCombinerClass(Reduce.class);
            job.setReducerClass(Reduce.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

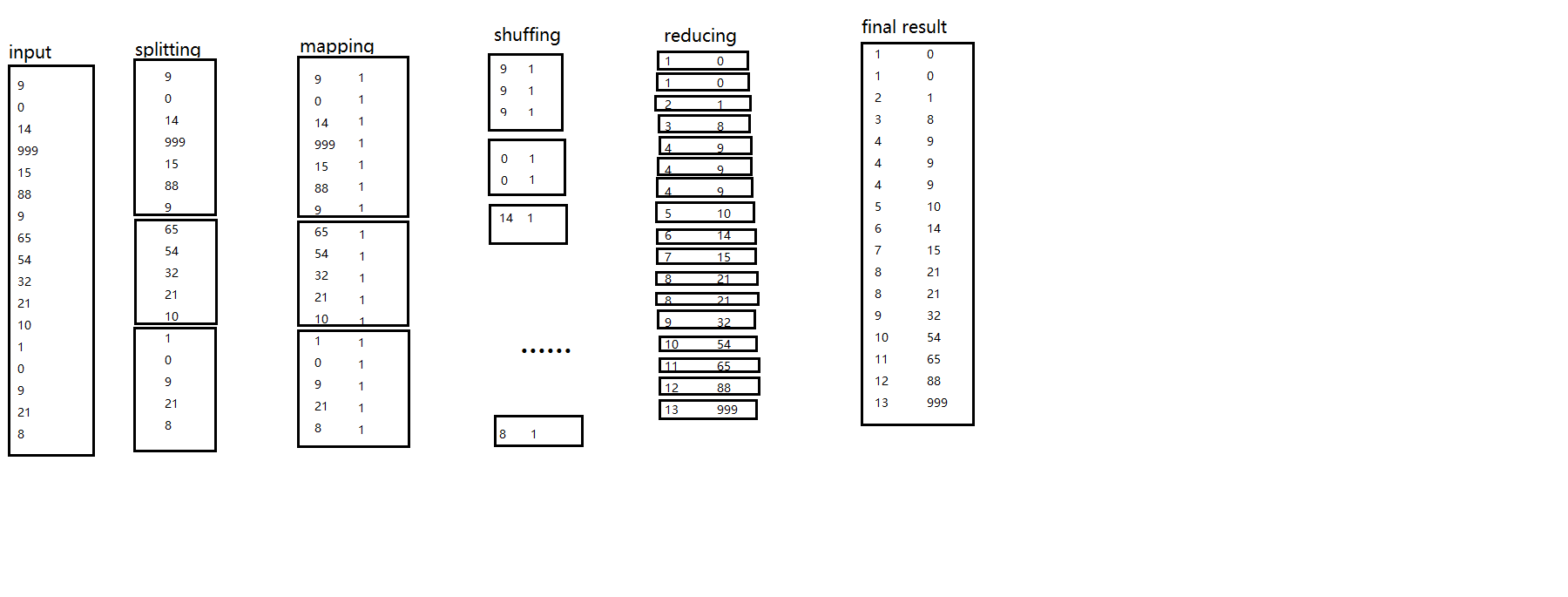

5. Ranking

Problem description

Sort a given set of numbers in ascending order and output each number together with its rank.

Solution

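Use the default sorting of MapReduce: map output is sorted by key during the shuffle, and for IntWritable keys this means ascending numeric order. Map therefore emits each number as the key, and reduce, which receives the keys in ascending order, assigns them consecutive ranks; equal numbers share a rank.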
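The sketch below reproduces this on the sample numbers without Hadoop (the class `RankSketch` and its method are hypothetical). A `TreeMap` stands in for the shuffle: it groups equal numbers and returns them in ascending key order, as the framework does for `IntWritable` keys. The ranking then mirrors the reducer: one output line per occurrence, with the rank advanced once per distinct number. As in the job, this relies on every key reaching one reducer; with several reducers, each would number its own keys starting from 1.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch (not Hadoop) of the ranking job.
final class RankSketch {

    // Returns "rank number" lines; equal numbers share a rank.
    static List<String> rank(List<Integer> numbers) {
        // Map + shuffle: emit (n, 1) and group by n in ascending key order.
        Map<Integer, List<Integer>> shuffled = new TreeMap<>();
        for (int n : numbers) {
            shuffled.computeIfAbsent(n, k -> new ArrayList<>()).add(1);
        }
        // Reduce: one line per occurrence, rank advances per distinct key.
        List<String> out = new ArrayList<>();
        int rank = 1;
        for (Map.Entry<Integer, List<Integer>> e : shuffled.entrySet()) {
            for (int i = 0; i < e.getValue().size(); i++) {
                out.add(rank + " " + e.getKey());
            }
            rank++;
        }
        return out;
    }

    public static void main(String[] args) {
        List<Integer> input = Arrays.asList(
            9, 0, 14, 999, 15, 88, 9,  // in1.txt
            65, 54, 32, 21, 10,        // in2.txt
            1, 0, 9, 21, 8);           // in3.txt
        rank(input).forEach(System.out::println);
    }
}
```

Running `main` prints the same 17 rank/number pairs as the expected results, from `1 0` to `13 999`.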

Test data

Input:

in1.txt:

    9
    0
    14
    999
    15
    88
    9

in2.txt:

    65
    54
    32
    21
    10

in3.txt:

    1
    0
    9
    21
    8

Expected results:

    1	0
    1	0
    2	1
    3	8
    4	9
    4	9
    4	9
    5	10
    6	14
    7	15
    8	21
    8	21
    9	32
    10	54
    11	65
    12	88
    13	999

Illustration

Code

    package train;

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    /**
     * Ascending sort (using the default sort rule provided by MapReduce)
     * IntWritable keys are sorted by numeric value
     * @author hadoop
     */
    public class Sort {

        // Map: parse each number in the input and output it as the key (value 1)
        public static class Map extends Mapper<Object, Text, IntWritable, IntWritable> {

            private final IntWritable number = new IntWritable();
            private static final IntWritable one = new IntWritable(1);

            @Override
            public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
                // Tokenizing also copes with blank lines and several numbers per line
                StringTokenizer st = new StringTokenizer(value.toString());
                while (st.hasMoreTokens()) {
                    number.set(Integer.parseInt(st.nextToken()));
                    context.write(number, one);
                }
            }
        }

        // Reduce: keys arrive in ascending order. rank holds the rank of the
        // current key; the key is written once per occurrence, then rank advances.
        public static class Reduce extends Reducer<IntWritable, IntWritable, IntWritable, IntWritable> {

            private int rank = 1;

            @Override
            public void reduce(IntWritable key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
                for (IntWritable ignored : values) {
                    context.write(new IntWritable(rank), key);
                }
                rank++;
            }
        }

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            conf.set("mapred.job.tracker", "localhost:9001");
            // Input and output paths are hard-coded for this example
            args = new String[]{"hdfs://localhost:9000/user/hadoop/input/sort_in", "hdfs://localhost:9000/user/hadoop/output/sort_out"};

            // Check the command-line arguments
            String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: sort <in> <out>");
                System.exit(2);
            }

            // Configure the job name
            Job job = new Job(conf, "sort");

            // Configure the job classes (no combiner, see the note below)
            job.setJarByClass(Sort.class);
            job.setMapperClass(Map.class);
            job.setReducerClass(Reduce.class);
            // A single reducer sees all keys, so the ranks are global
            job.setNumReduceTasks(1);
            job.setOutputKeyClass(IntWritable.class);
            job.setOutputValueClass(IntWritable.class);

            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

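Note that this job must not set a combiner. If Reduce were also used as the combiner, it would run separately on the output of each map task and replace the (number, 1) pairs with locally numbered (rank, number) pairs; the reducer would then rank those local ranks instead of the numbers, and the results would be wrong.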
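To see why, the hypothetical simulation below (plain Java, not Hadoop; the class `CombinerPitfall` and the two input splits are made up) runs the ranking reduce logic once per map task, as a combiner would, and then once more as the reducer. It assumes the combiner runs exactly once per map task; in Hadoop it may run zero or more times, which is a further reason a combiner must not change the meaning of the data.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical simulation: the ranking reducer misused as a combiner.
final class CombinerPitfall {

    // Map: (number, 1) for each number of one split.
    static List<int[]> mapOutput(List<Integer> split) {
        List<int[]> pairs = new ArrayList<>();
        for (int n : split) {
            pairs.add(new int[] {n, 1});
        }
        return pairs;
    }

    // Ranking reduce: group by key (ascending), emit (rank, key) per value.
    static List<int[]> rankReduce(List<int[]> pairs) {
        Map<Integer, List<Integer>> grouped = new TreeMap<>();
        for (int[] p : pairs) {
            grouped.computeIfAbsent(p[0], k -> new ArrayList<>()).add(p[1]);
        }
        List<int[]> out = new ArrayList<>();
        int rank = 1;
        for (Map.Entry<Integer, List<Integer>> e : grouped.entrySet()) {
            for (int i = 0; i < e.getValue().size(); i++) {
                out.add(new int[] {rank, e.getKey()});
            }
            rank++;
        }
        return out;
    }

    // Runs the whole job, optionally applying rankReduce per split first.
    static String run(List<List<Integer>> splits, boolean useCombiner) {
        List<int[]> shuffleInput = new ArrayList<>();
        for (List<Integer> split : splits) {
            List<int[]> mapped = mapOutput(split);
            shuffleInput.addAll(useCombiner ? rankReduce(mapped) : mapped);
        }
        StringBuilder sb = new StringBuilder();
        for (int[] p : rankReduce(shuffleInput)) {
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(p[0]).append(' ').append(p[1]);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        List<List<Integer>> splits = Arrays.asList(Arrays.asList(9, 0, 14), Arrays.asList(1, 0, 9));
        System.out.println("without combiner: " + run(splits, false)); // 1 0,1 0,2 1,3 9,3 9,4 14
        System.out.println("with combiner:    " + run(splits, true));  // 1 1,1 1,2 2,2 2,3 3,3 3
    }
}
```

With the combiner, the reducer's keys are the local ranks 1, 2 and 3 produced by each map task, so the final output ranks ranks instead of numbers.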

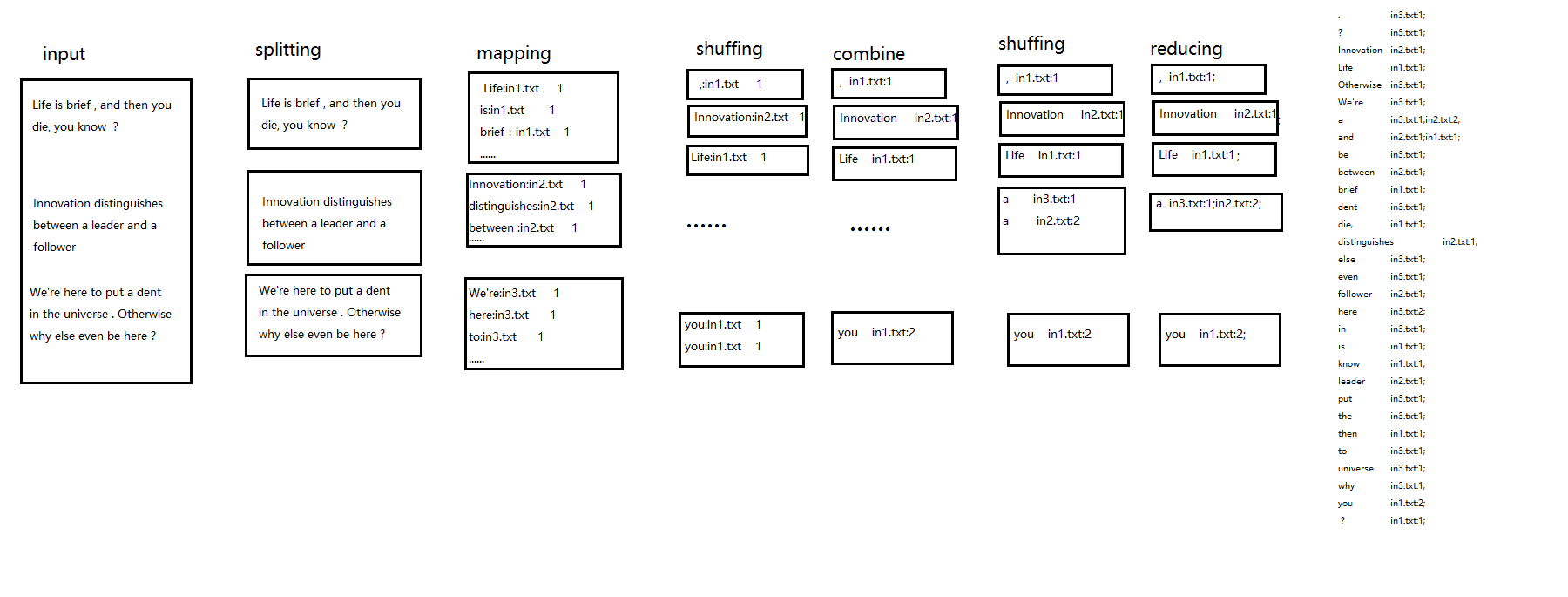

6. Inverted Index

Problem description

Given many records, group them by attribute value. For a collection of text files this means indexing words: for every word, list the files that contain it and how many times it occurs in each file.
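A compact way to picture the target structure is a nested map from word to (file, count). The plain-Java sketch below (the class `InvertedIndexSketch` is hypothetical, not Hadoop code) builds it from the three sample sentences, splitting on whitespace as the mapper does, so punctuation such as `,` and `?` becomes a word of its own. The files of each word are listed in sorted order here; in the MapReduce job the order within a line depends on the order in which values reach the reducer, unless the reducer sorts them.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Plain-Java sketch (not Hadoop) of an inverted index: word -> (file -> count).
final class InvertedIndexSketch {

    static Map<String, Map<String, Integer>> build(Map<String, String> files) {
        // Outer TreeMap: words in sorted order, like the shuffled keys.
        Map<String, Map<String, Integer>> index = new TreeMap<>();
        for (Map.Entry<String, String> file : files.entrySet()) {
            StringTokenizer st = new StringTokenizer(file.getValue()); // whitespace split
            while (st.hasMoreTokens()) {
                index.computeIfAbsent(st.nextToken(), w -> new TreeMap<>())
                     .merge(file.getKey(), 1, Integer::sum);
            }
        }
        return index;
    }

    // Formats one posting list as "file:count;file:count;".
    static String postings(Map<String, Integer> perFile) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, Integer> e : perFile.entrySet()) {
            sb.append(e.getKey()).append(':').append(e.getValue()).append(';');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> files = new LinkedHashMap<>();
        files.put("in1.txt", "Life is brief , and then you die, you know ?");
        files.put("in2.txt", "Innovation distinguishes between a leader and a follower");
        files.put("in3.txt", "We're here to put a dent in the universe . Otherwise why else even be here ?");
        build(files).forEach((word, perFile) -> System.out.println(word + "\t" + postings(perFile)));
    }
}
```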

Test data

Input:

in1.txt:

Life is brief , and then you die, you know ?

in2.txt:

Innovation distinguishes between a leader and a follower

in3.txt:

We're here to put a dent in the universe . Otherwise why else even be here ?

Expected results:

, in1.txt:1;

. in3.txt:1;

? in1.txt:1;in3.txt:1;

Innovation in2.txt:1;

Life in1.txt:1;

Otherwise in3.txt:1;

We're in3.txt:1;

a in3.txt:1;in2.txt:2;

and in2.txt:1;in1.txt:1;

be in3.txt:1;

between in2.txt:1;

brief in1.txt:1;

dent in3.txt:1;

die, in1.txt:1;

distinguishes in2.txt:1;

else in3.txt:1;

even in3.txt:1;

follower in2.txt:1;

here in3.txt:2;

in in3.txt:1;

is in1.txt:1;

know in1.txt:1;

leader in2.txt:1;

put in3.txt:1;

the in3.txt:1;

then in1.txt:1;

to in3.txt:1;

universe in3.txt:1;

why in3.txt:1;

you in1.txt:2;

Illustration

Code

    package train;

    import java.io.IOException;
    import java.util.StringTokenizer;
    import java.util.TreeMap;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.FileSplit;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    /**
     * Inverted index
     * @author hadoop
     */
    public class InvertedIndex {

        // Map output: key = word, value = "fileName:1"
        public static class Map extends Mapper<Object, Text, Text, Text> {

            private final Text keyStr = new Text();
            private final Text valueStr = new Text();

            @Override
            public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
                // Get the name of the input file
                FileSplit fileSplit = (FileSplit) context.getInputSplit();
                String fileName = fileSplit.getPath().getName();
                // Split on whitespace
                StringTokenizer st = new StringTokenizer(value.toString());
                while (st.hasMoreTokens()) {
                    keyStr.set(st.nextToken());
                    valueStr.set(fileName + ":1");
                    context.write(keyStr, valueStr);
                }
            }
        }

        // Add up the "fileName:count" values of one word per file (sorted by file name)
        static TreeMap<String, Integer> sumPerFile(Iterable<Text> values) {
            TreeMap<String, Integer> counts = new TreeMap<String, Integer>();
            for (Text value : values) {
                String v = value.toString();
                int index = v.lastIndexOf(':');
                String file = v.substring(0, index);
                int n = Integer.parseInt(v.substring(index + 1));
                Integer old = counts.get(file);
                counts.put(file, old == null ? n : old + n);
            }
            return counts;
        }

        // Combiner: merge frequencies per file. The key stays the word and the
        // value keeps the "fileName:count" format, so the combiner may safely run
        // any number of times, including not at all.
        public static class Combine extends Reducer<Text, Text, Text, Text> {

            private final Text newValue = new Text();

            @Override
            public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
                TreeMap<String, Integer> counts = sumPerFile(values);
                for (String file : counts.keySet()) {
                    newValue.set(file + ":" + counts.get(file));
                    context.write(key, newValue);
                }
            }
        }

        // Reduce: put all files and frequencies of a word on one line
        public static class Reduce extends Reducer<Text, Text, Text, Text> {

            private final Text newValue = new Text();

            @Override
            public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
                TreeMap<String, Integer> counts = sumPerFile(values);
                StringBuilder files = new StringBuilder();
                for (String file : counts.keySet()) {
                    files.append(file).append(':').append(counts.get(file)).append(';');
                }
                newValue.set(files.toString());
                context.write(key, newValue);
            }
        }

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            conf.set("mapred.job.tracker", "localhost:9001");
            // Input and output paths are hard-coded for this example
            args = new String[]{"hdfs://localhost:9000/user/hadoop/input/invertedIndex_in", "hdfs://localhost:9000/user/hadoop/output/invertedIndex_out"};

            // Check the command-line arguments
            String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: invertedIndex <in> <out>");
                System.exit(2);
            }

            // Configure the job name
            Job job = new Job(conf, "invertedIndex");

            // Configure the job classes
            job.setJarByClass(InvertedIndex.class);
            job.setMapperClass(Map.class);
            job.setCombinerClass(Combine.class);
            job.setReducerClass(Reduce.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }