This paper introduces GuassDB's Cross cluster access to remote oracle and remote MPPDB database. Due to the limited environment, docking HDFS and docking Spark functions are not listed here.

I. docking with oracle Database

1. Restriction

- Only connection from Oracle11.2.0.1 to Oracle12.2.0.1 is supported.

- The Oracle-ODBC driver version is required to be 12.2.0.1.0.

- The version of UNIX ODBC requires 2.3.6.

- Supports up to 20 remote Oracle configurations.

- Please download the required Oracle ODBC from the Oracle official website. Take Oracle 11.2.0.4 as an example, you need to download: "oracle-instantclient-basic-linux.x64-12.2.0.1.0.zip", "oracle-instantclient-sqlplus-linux.x64-12.2.0.1.0.zip", "oracle-instantclient-odbc-linux.x64-12.2.0.1.0.zip". And compress the three zip files into "package.zip".

2. Preconditions

- Oracle database and gausdb 200 cluster are in normal state.

- The Oracle database physical nodes and the gausdb 200 cluster can communicate with each other.

3. Install unixODBC

Gaussdb requires a UNIX ODBC version of 2.3.6. The version of the operating system cannot be used normally. It needs to be downloaded and compiled separately from the official website.

[root@hd06 ~]# tar -xzf unixODBC-2.3.6.tar.gz [root@hd06 ~]# cd unixODBC-2.3.6 [root@hd06 unixODBC-2.3.6]# ./configure [root@hd06 unixODBC-2.3.6]# make [root@hd06 unixODBC-2.3.6]# make install

After the installation, you need to specify the environment variable of the library file, otherwise an error will be reported during the use.

[root@hd06 ~]# vi /etc/profile export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/usr/local/lib [root@hd06 ~]# isql --version unixODBC 2.3.6 [root@hd06 ~]# su - omm Last login: Tue Oct 29 16:35:31 CST 2019 [omm@hd06 ~]$ isql --version unixODBC 2.3.6After saving, you must make the environment variable effective.

4. Create package.zip

Download the following three compressed packages from the official oracle website, and then compress them into the package.zip file.

[root@hd06 tmp]# zip package.zip instantclient-*.zip adding: instantclient-basic-linux.x64-12.2.0.1.0.zip (stored 0%) adding: instantclient-odbc-linux.x64-12.2.0.1.0-2.zip (stored 0%) adding: instantclient-sqlplus-linux.x64-12.2.0.1.0.zip (stored 0%)

5. Create docking oracle on Gassdb management page

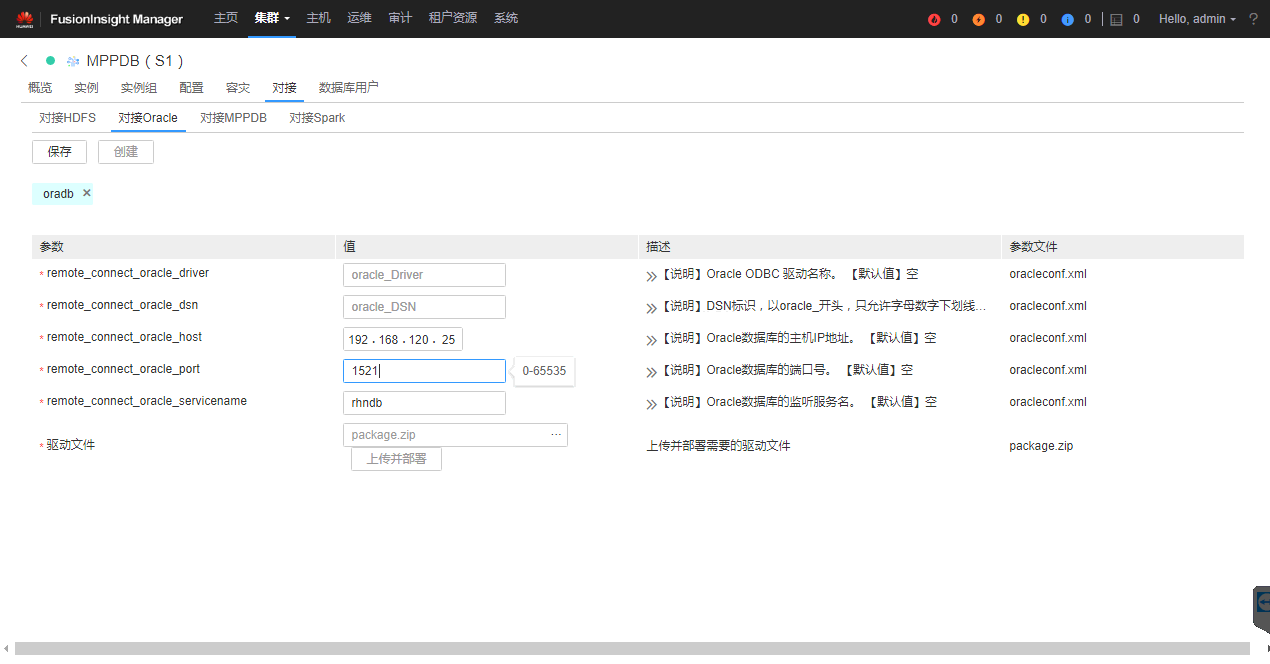

Log in to fusion Insight Manager > select cluster > cluster name to be operated > Services > mppdb > docking > docking Oracle, click create, fill in the configuration group name, and click OK. As shown in the figure below:

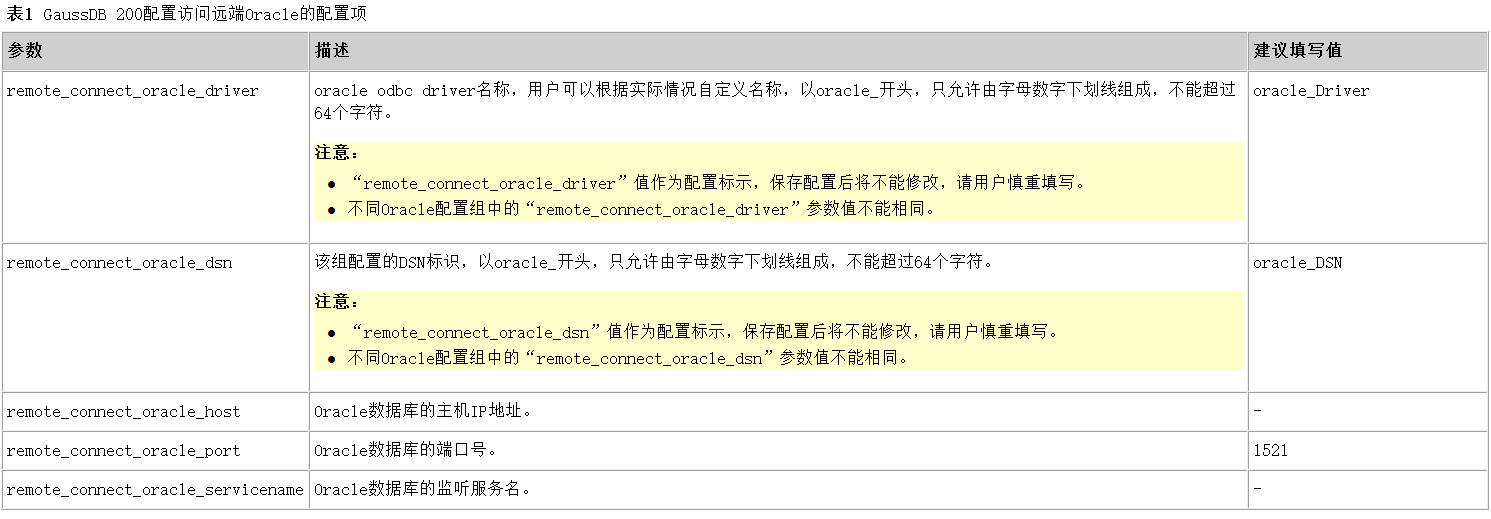

See the table below for Parameter Description:

Enter the relevant configuration parameters and upload the driver file "package.zip". Click "upload and deploy" and wait for the deployment to complete. When the deployment is complete, click save. Wait for the configuration to be saved successfully, and click finish. Then on the "Overview" page of the cluster, select "more > Restart", and perform the corresponding operation to restart the service.

Note: in the process of service restart, the system will distribute the ODBC driver to each node to complete the deployment. The deployment directory of ODBC is: / opt / Huawei / bigdata / mppdb / core / utilslib / FC? Conf.6. Create Data Source and connect to oracle Database

Switch to the omm user from the host, connect to the mppdb database, and execute the following statement to create:

[root@hd06 ~]# su - omm Last login: Tue Oct 29 16:43:45 CST 2019 [omm@hd06 ~]$ gsql -d postgres -p 25308 gsql ((GaussDB Kernel V300R002C00 build 8a9c1eb6) compiled at 2019-08-01 18:47:38 commit 6093 last mr 10175 ) Non-SSL connection (SSL connection is recommended when requiring high-security) Type "help" for help. postgres=#CREATE DATA SOURCE ds_oracle TYPE 'ORACLE' OPTIONS (DSN 'oracle_DSN', USERNAME 'spwuser', PASSWORD 'redhat', ENCODING 'UTF8'); CREATE DATA SOURCE

Use the following statement to verify that you can connect to the remote oracle Database:

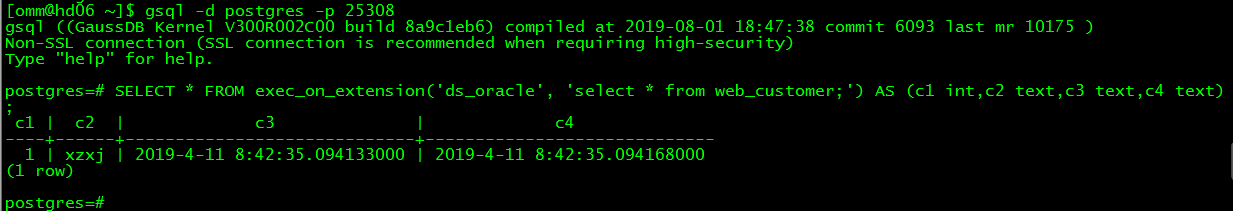

postgres=# SELECT * FROM exec_on_extension('ds_oracle', 'select * from web_customer;') AS (c1 int,c2 text,c3 text,c4 text);As shown in the figure below:

Note: you need to create a Data Source object before using "exec on extension".

The following example imports the remote query results into the local table:

[omm@hd06 ~]$ gsql -d postgres -p 25308

gsql ((GaussDB Kernel V300R002C00 build 8a9c1eb6) compiled at 2019-08-01 18:47:38 commit 6093 last mr 10175 )

Non-SSL connection (SSL connection is recommended when requiring high-security)

Type "help" for help.

postgres=#\c rhnschema

rhnschema=# CREATE TABLE dd AS SELECT * FROM exec_on_extension('ds_oracle', 'select * from rhnpackagefile;') AS (package_id numeric,capability_id numeric,device numeric,

inode numeric,file_mode numeric,username character varying(32),groupname character varying(32),rdev numeric,file_size numeric,mtime timestamp with time zone,

checksum_id numeric,linkto character varying(256),flags numeric,verifyflags numeric,lang character varying(32),created timestamp with time zone,modified timestamp with time zone);

NOTICE: The 'DISTRIBUTE BY' clause is not specified. Using 'package_id' as the distribution column by default.

HINT: Please use 'DISTRIBUTE BY' clause to specify suitable data distribution column.

INSERT 0 6304854

rhnschema=# select count(*) from dd;

count

---------

6304854

(1 row)II. Docking remote MPPDB

Gausdb 200 supports two ways to access the remote MPPDB database cluster, including the way to configure through fusioniinsight manager and the way to create a Foreign Table.

2.1. Configuration through fusioninspight Manager

- create profile

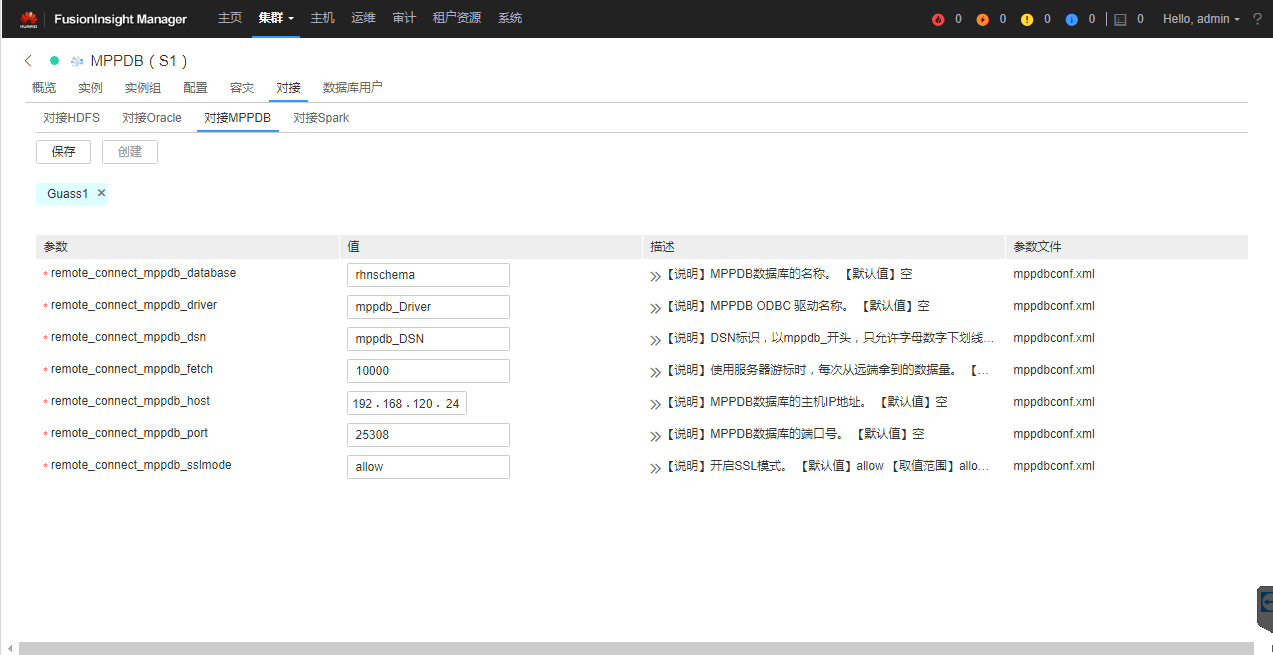

Log in to fusion Insight Manager > select cluster > cluster name to be operated > Services > MPPDB > docking > docking MPPDB, click create, fill in the configuration group name, and click OK. As shown in the figure below:

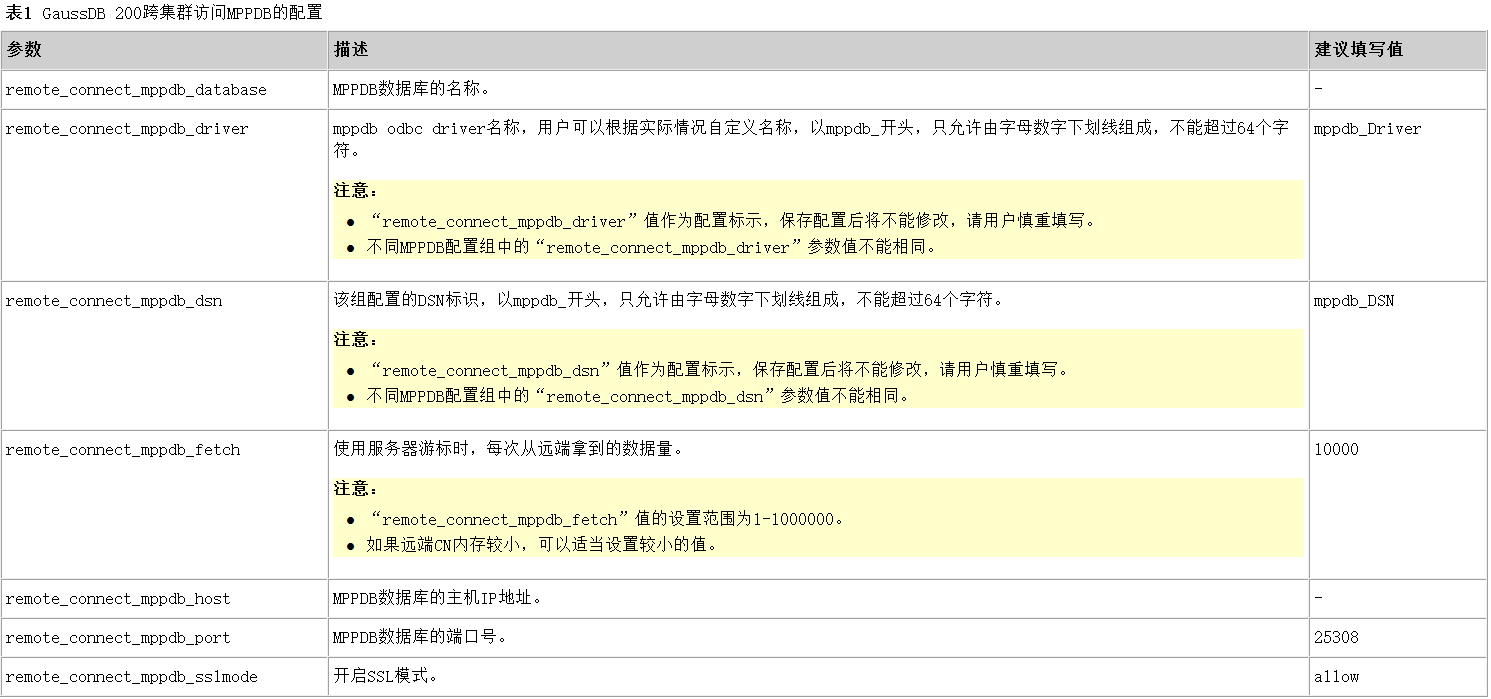

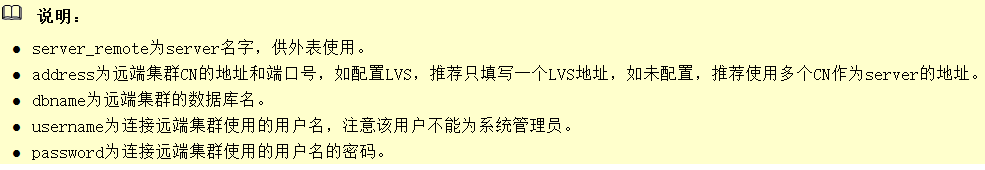

Parameter description is shown in the figure below:

Click save. Wait for the configuration to be saved successfully, and click finish. Then select "more > Restart" on the "Overview" page of the cluster to restart the service. - Configure cluster interconnection

Set the listening IP of the remote cluster

By default, the cluster system only listens to localhost and business IP. You can use the following command to query:

[omm@hd03 ~]$ gs_guc check -Z coordinator -I all -c "listen_addresses"

expected guc information: hd02: listen_addresses=NULL: [/srv/BigData/mppdb/data1/coordinator/postgresql.conf]

gs_guc check: hd02: listen_addresses='localhost,192.168.110.24': [/srv/BigData/mppdb/data1/coordinator/postgresql.conf]

Total GUC values: 1. Failed GUC values: 0.

The value of parameter listen_addresses is same on all instances.

listen_addresses='localhost,192.168.110.24'If there is no monitoring service IP, you can use the following methods to add it to the monitoring:

[omm@hd03 ~]$ gs_guc set -I all -Z coordinator -c "listen_addresses='localhost,192.168.110.24'" expected instance path: [/srv/BigData/mppdb/data1/coordinator/postgresql.conf] gs_guc set: listen_addresses='*': [/srv/BigData/mppdb/data1/coordinator/postgresql.conf] Total instances: 1. Failed instances: 0. Success to perform gs_guc!

The above is only valid in one node of the cluster, and other nodes need to operate separately. Separate multiple IP S with commas.

Setting authentication mode in remote cluster CN

By default, the system only allows each node of the cluster to communicate with each other. If other network segments need access, the authentication mode must be set, which can be executed at any node.

[omm@hd01 ~]$ gs_guc reload -Z coordinator -N all -I all -h "host all all 192.168.120.0/24 sha256" Begin to perform gs_guc for all coordinators. Total instances: 3. Failed instances: 0. Success to perform gs_guc!

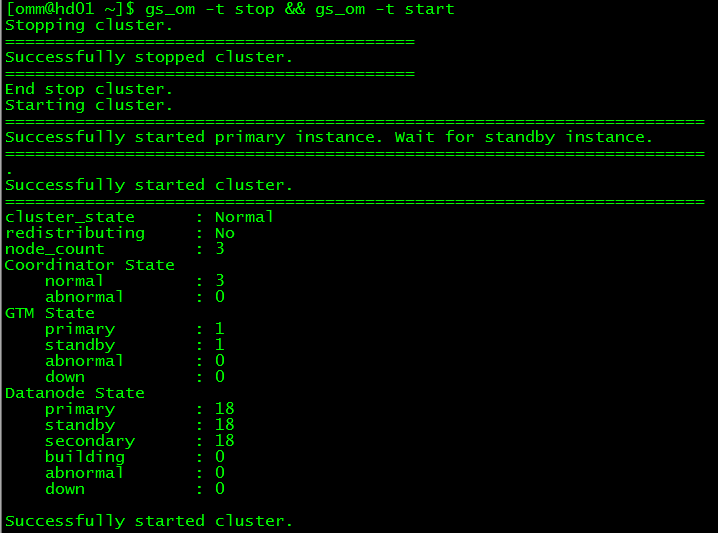

After setting, restart the cluster:

[omm@hd01 ~]$ gs_om -t stop && gs_om -t start

- Create a Data Source object

As follows, create the DS? Mppdb object and use the exec? On? Extension function to connect.

[omm@hd06 ~]$ gsql -d postgres -p 25308

gsql ((GaussDB Kernel V300R002C00 build 8a9c1eb6) compiled at 2019-08-01 18:47:38 commit 6093 last mr 10175 )

Non-SSL connection (SSL connection is recommended when requiring high-security)

Type "help" for help.

postgres=# CREATE DATA SOURCE ds_mppdb type 'MPPDB' OPTIONS (DSN 'mppdb_DSN', USERNAME 'spwuser', PASSWORD 'abcABC12', ENCODING 'UTF8');

CREATE DATA SOURCE

postgres=# SELECT * FROM exec_on_extension('ds_oracle', 'select * from web_customer;') AS (c1 int,c2 text,c3 text,c4 text);

c1 | c2 | c3 | c4

----+------+-----------------------------+-----------------------------

1 | xzxj | 2019-4-11 8:42:35.094133000 | 2019-4-11 8:42:35.094168000

(1 row)2.2 using Foreign Table

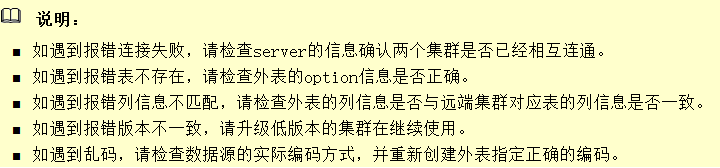

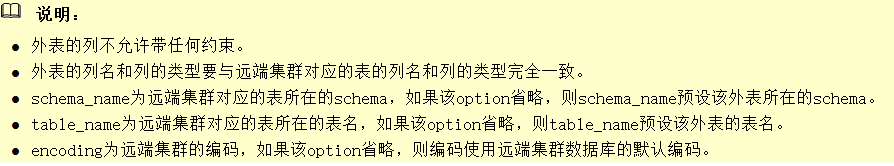

matters needing attention:

- The created appearance should be exactly the same as the column name and type name of its corresponding remote table, and the type of remote table is row save table or column save table, hash table or replication table.

- If the associated tables are replicated in another cluster or data skew exists, the performance may be poor.

- The status of both clusters should be "Normal" during use.

- Both clusters need to have the data processing function of SQL on other GaussDB based on Foreign Table.

It is recommended to configure LVS. If it is not configured, it is recommended to use multiple CN as the server address. It is forbidden to write CN addresses of multiple clusters together. - Please make sure that the codes of the databases at both ends are the same as much as possible, otherwise errors may occur or the data received is garbled.

- If the remote table has done statistics collection, you can perform analyze on the external table to get a better execution plan.

Before configuration, you need to ensure that the two clusters can access each other. Please refer to the above steps for cluster mutual access.

Create Serverpostgres=# CREATE SERVER Gauss1 FOREIGN DATA WRAPPER GC_FDW OPTIONS (address '192.168.110.22:25308,192.168.110.23:25308,192.168.110.24:25308' , dbname 'rhnschema', username 'spwuser', password 'abcABC12');

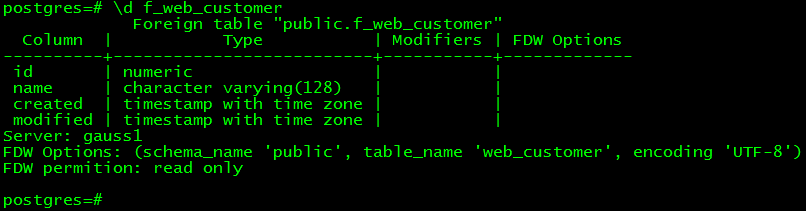

Create appearance and viewpostgres=# CREATE FOREIGN TABLE f_web_customer ( ID NUMERIC, NAME character varying(128), CREATED timestamp with time zone, MODIFIED timestamp with time zone ) SERVER Gauss1 OPTIONS ( schema_name 'public', table_name 'web_customer', encoding 'UTF-8' ); postgres=# \d f_web_customer;

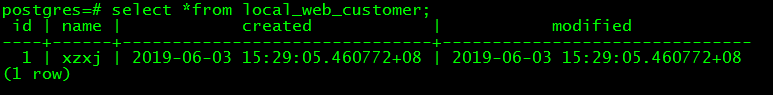

Importing data using appearancespostgres=# CREATE TABLE local_web_customer ( ID NUMERIC, NAME character varying(128), CREATED timestamp with time zone, MODIFIED timestamp with time zone ); postgres=# INSERT INTO local_web_customer SELECT * FROM f_web_customer; INSERT 0 1 postgres=# select *from local_web_customer;