Apache Dubbo offers an injvm communication mode that avoids network latency and does not occupy a local port, which makes it a convenient RPC mode for testing and local verification.

While reading the containerd source code recently, I noticed that it has a similar requirement, so I looked into whether gRPC has a comparable memory-based communication mode. It turns out that a pipe works very well for this, so I am recording the approach here.

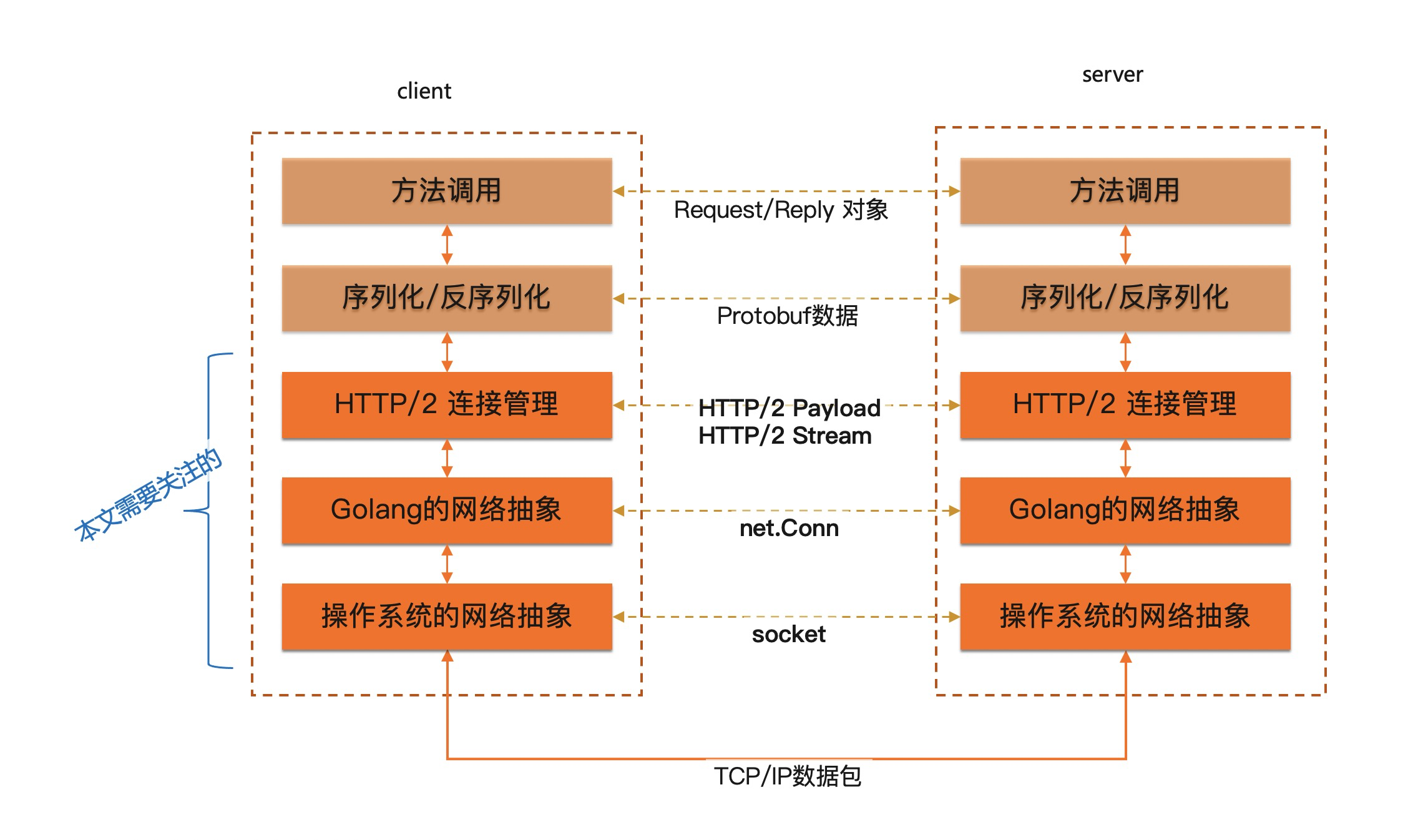

Golang/gRPC's abstraction of network

First, let's take a look at the architecture diagram of gRPC one call. Of course, this architecture diagram only focuses on the abstract distribution of the network.

We focus on the network.

Operating system abstraction

At the bottom, the operating system abstracts the network as a socket, which represents a virtual connection. For UDP, this virtual connection is unreliable; for TCP, it is as reliable as possible.

For network programming, having a connection alone is not enough: developers also need a way to create and close connections.

On the server side, the system provides the accept method to receive connections.

On the client side, the system provides the connect method to establish a connection to the server.

Golang abstraction

In Golang, the equivalent of a socket is net.Conn, which represents a virtual connection.

On the server side, the accept behavior is wrapped in the net.Listener interface; on the client side, Golang provides the net.Dial function, which is built on connect.
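To make the two halves concrete, here is a minimal sketch of net.Listen and net.Dial working together over TCP on the loopback interface. The helper name echoOnce and the one-line echo protocol are my own assumptions for illustration; they are not part of gRPC or of the code later in this article.

```go
package main

import (
	"bufio"
	"fmt"
	"net"
)

// echoOnce (hypothetical helper) starts a TCP listener on a loopback port
// chosen by the OS, accepts one connection, echoes one line back, and
// returns the line the client received.
func echoOnce(msg string) (string, error) {
	// Server side: net.Listen returns a net.Listener.
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	defer ln.Close()

	go func() {
		conn, err := ln.Accept() // blocks until a client connects
		if err != nil {
			return
		}
		defer conn.Close()
		line, err := bufio.NewReader(conn).ReadString('\n')
		if err != nil {
			return
		}
		fmt.Fprint(conn, line) // echo the line back unchanged
	}()

	// Client side: net.Dial returns a net.Conn.
	conn, err := net.Dial("tcp", ln.Addr().String())
	if err != nil {
		return "", err
	}
	defer conn.Close()
	if _, err := fmt.Fprintf(conn, "%s\n", msg); err != nil {
		return "", err
	}
	return bufio.NewReader(conn).ReadString('\n')
}

func main() {
	reply, err := echoOnce("ping")
	if err != nil {
		panic(err)
	}
	fmt.Print(reply)
}
```

Note that this version occupies a real local port, which is exactly what the pipe-based approach later in this article avoids. The net.Listener interface that ln satisfies is small: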

```go
type Listener interface {
	// Accept receives network connections from clients
	Accept() (Conn, error)
	Close() error
	Addr() Addr
}
```

gRPC usage

So how does gRPC use Listener and Dial?

On the server side, the Serve method of a gRPC server takes a Listener and serves requests on it.

On the client side, the network is essentially something that can connect to a given address, so all gRPC needs is a dialer function of type func(context.Context, string) (net.Conn, error).
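The following sketch illustrates why these two hooks are enough to swap the transport. It is not gRPC's implementation: serve is a toy accept loop that depends only on net.Listener, and contextDialer is a hypothetical type I introduce here with the same shape as the dialer function gRPC expects. TCP is plugged in for now; anything else that satisfies the same two shapes would work too.

```go
package main

import (
	"bufio"
	"context"
	"fmt"
	"io"
	"net"
	"strings"
)

// contextDialer (hypothetical name) has the same shape as gRPC's dialer function.
type contextDialer func(ctx context.Context, addr string) (net.Conn, error)

// serve is a toy accept loop: it only needs a net.Listener, and answers
// each connection with the upper-cased version of the first line it reads.
func serve(ln net.Listener) {
	for {
		conn, err := ln.Accept()
		if err != nil {
			return // listener closed
		}
		go func(c net.Conn) {
			defer c.Close()
			line, err := bufio.NewReader(c).ReadString('\n')
			if err != nil {
				return
			}
			io.WriteString(c, strings.ToUpper(line))
		}(conn)
	}
}

// call only needs a dialer function; it never asks what the transport is.
func call(ctx context.Context, dial contextDialer, addr, msg string) (string, error) {
	conn, err := dial(ctx, addr)
	if err != nil {
		return "", err
	}
	defer conn.Close()
	if _, err := io.WriteString(conn, msg+"\n"); err != nil {
		return "", err
	}
	reply, err := bufio.NewReader(conn).ReadString('\n')
	return strings.TrimSuffix(reply, "\n"), err
}

// demo wires both sides together over TCP on the loopback interface.
func demo() (string, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	defer ln.Close()
	go serve(ln)

	var d net.Dialer
	tcp := func(ctx context.Context, addr string) (net.Conn, error) {
		return d.DialContext(ctx, "tcp", addr)
	}
	return call(context.Background(), tcp, ln.Addr().String(), "hello")
}

func main() {
	reply, err := demo()
	if err != nil {
		panic(err)
	}
	fmt.Println(reply) // prints "HELLO"
}
```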

What is pipe

At the operating system level, a pipe is a data channel whose two ends can both live in the same program, which fits our requirement well: memory-based network communication.

Golang offers the pipe-like net.Pipe() function, which creates a bidirectional, in-memory connection. In terms of capability, it satisfies what gRPC needs from the underlying transport.

However, net.Pipe only produces two net.Conn values, that is, the two ends of a single connection. It provides neither the Listener mentioned earlier nor a Dial method.
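Before wrapping it, it helps to see net.Pipe on its own. The sketch below writes a message into one end and reads it back from the other; the helper name roundTrip is an assumption for illustration.

```go
package main

import (
	"fmt"
	"io"
	"net"
)

// roundTrip (hypothetical helper) sends msg through an in-memory pipe
// and returns what arrives at the other end.
func roundTrip(msg string) (string, error) {
	c0, c1 := net.Pipe()
	defer c1.Close()

	// net.Pipe is synchronous and unbuffered: Write blocks until the peer
	// reads, so the writer runs in its own goroutine.
	go func() {
		defer c0.Close() // closing this end makes the reader see io.EOF
		io.WriteString(c0, msg)
	}()

	data, err := io.ReadAll(c1) // reads until the writer closes its end
	return string(data), err
}

func main() {
	got, err := roundTrip("hello")
	if err != nil {
		panic(err)
	}
	fmt.Println(got) // prints "hello"
}
```

No port is opened and no system call touches the network stack; both ends are ordinary net.Conn values inside the same process.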

Therefore, we combine net.Pipe with a Golang channel to build a Listener, and provide a Dial method as well:

- Listener.Accept() only needs to listen on a channel; when a client connects, the connection is delivered through that channel

- The Dial method calls net.Pipe, hands one end to the server through the channel (as the server-side connection), and returns the other end as the client-side connection

The code is as follows:

```go
package main

import (
	"context"
	"errors"
	"net"
	"sync"
	"sync/atomic"
)

var ErrPipeListenerClosed = errors.New(`pipe listener already closed`)

type PipeListener struct {
	ch    chan net.Conn
	close chan struct{}
	done  uint32
	m     sync.Mutex
}

func ListenPipe() *PipeListener {
	return &PipeListener{
		ch:    make(chan net.Conn),
		close: make(chan struct{}),
	}
}

// Accept waits for a client connection
func (l *PipeListener) Accept() (c net.Conn, e error) {
	select {
	case c = <-l.ch:
	case <-l.close:
		e = ErrPipeListenerClosed
	}
	return
}

// Close closes the listener; calling it again returns ErrPipeListenerClosed
func (l *PipeListener) Close() (e error) {
	if atomic.LoadUint32(&l.done) == 0 {
		l.m.Lock()
		defer l.m.Unlock()
		if l.done == 0 {
			defer atomic.StoreUint32(&l.done, 1)
			close(l.close)
			return
		}
	}
	e = ErrPipeListenerClosed
	return
}

// Addr returns the address of the listener
func (l *PipeListener) Addr() net.Addr {
	return pipeAddr(0)
}

// Dial connects to the listener without a deadline
func (l *PipeListener) Dial(network, addr string) (net.Conn, error) {
	return l.DialContext(context.Background(), network, addr)
}

// DialContext connects to the listener, giving up when ctx is done
func (l *PipeListener) DialContext(ctx context.Context, network, addr string) (conn net.Conn, e error) {
	// Check whether the PipeListener is already closed
	if atomic.LoadUint32(&l.done) != 0 {
		e = ErrPipeListenerClosed
		return
	}

	// Create the pipe
	c0, c1 := net.Pipe()
	// Wait until the server side accepts the connection
	select {
	case <-ctx.Done():
		e = ctx.Err()
	case l.ch <- c0:
		conn = c1
	case <-l.close:
		c0.Close()
		c1.Close()
		e = ErrPipeListenerClosed
	}
	return
}

type pipeAddr int

func (pipeAddr) Network() string {
	return `pipe`
}

func (pipeAddr) String() string {
	return `pipe`
}
```

How to use pipe as gRPC connection

With the above packaging, we can create a gRPC server and client based on this for memory based RPC communication.
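The listener itself is not tied to gRPC. As a quick sanity check before bringing gRPC in, the same pattern can be exercised with the standard library's net/http, which also takes a net.Listener on the server side (http.Serve) and a dial function on the client side (http.Transport.DialContext). In the sketch below, memListener is a condensed, hypothetical stand-in for the PipeListener above so that the example is self-contained; the host name in-memory is a placeholder that is never resolved.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"io"
	"net"
	"net/http"
	"sync"
)

var errClosed = errors.New("listener closed")

// memListener is a condensed stand-in for the PipeListener above (assumption).
type memListener struct {
	ch   chan net.Conn
	done chan struct{}
	once sync.Once
}

type memAddr struct{}

func (memAddr) Network() string { return "pipe" }
func (memAddr) String() string  { return "pipe" }

func newMemListener() *memListener {
	return &memListener{ch: make(chan net.Conn), done: make(chan struct{})}
}

func (l *memListener) Accept() (net.Conn, error) {
	select {
	case c := <-l.ch:
		return c, nil
	case <-l.done:
		return nil, errClosed
	}
}

// Close is safe to call more than once (http.Serve also closes the listener).
func (l *memListener) Close() error {
	l.once.Do(func() { close(l.done) })
	return nil
}

func (l *memListener) Addr() net.Addr { return memAddr{} }

// DialContext hands one end of a fresh pipe to Accept and returns the other.
func (l *memListener) DialContext(ctx context.Context, network, addr string) (net.Conn, error) {
	server, client := net.Pipe()
	select {
	case l.ch <- server:
		return client, nil
	case <-ctx.Done():
		server.Close()
		client.Close()
		return nil, ctx.Err()
	case <-l.done:
		server.Close()
		client.Close()
		return nil, errClosed
	}
}

// fetch serves one HTTP handler over the in-memory listener and calls it.
func fetch() (string, error) {
	l := newMemListener()
	defer l.Close()

	mux := http.NewServeMux()
	mux.HandleFunc("/hello", func(w http.ResponseWriter, r *http.Request) {
		io.WriteString(w, "hello over pipe")
	})
	go http.Serve(l, mux)

	client := &http.Client{Transport: &http.Transport{DialContext: l.DialContext}}
	defer client.CloseIdleConnections()
	resp, err := client.Get("http://in-memory/hello")
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	body, err := fetch()
	if err != nil {
		panic(err)
	}
	fmt.Println(body)
}
```

gRPC is wired up in the same way in the rest of this section, with Serve on one side and a context dialer on the other.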

First, we simply create a service that contains four invocation methods:

```protobuf
syntax = "proto3";

option go_package = "google.golang.org/grpc/examples/helloworld/helloworld";
option java_multiple_files = true;
option java_package = "io.grpc.examples.helloworld";
option java_outer_classname = "HelloWorldProto";

package helloworld;

// The greeting service definition.
service Greeter {
  // Unary call
  rpc SayHello(HelloRequest) returns (HelloReply) {}

  // Server-side streaming call
  rpc SayHelloReplyStream(HelloRequest) returns (stream HelloReply);

  // Client-side streaming call
  rpc SayHelloRequestStream(stream HelloRequest) returns (HelloReply);

  // Bidirectional streaming call
  rpc SayHelloBiStream(stream HelloRequest) returns (stream HelloReply);
}

// The request message containing the user's name.
message HelloRequest {
  string name = 1;
}

// The response message containing the greetings
message HelloReply {
  string message = 1;
}
```

Then generate the relevant stub code:

```shell
protoc --go_out=. --go_opt=paths=source_relative \
    --go-grpc_out=. --go-grpc_opt=paths=source_relative \
    helloworld/helloworld.proto
```

Next comes the server code, which is a demo implementation of the Greeter service:

```go
package main

import (
	"context"
	"log"

	pb "github.com/robberphex/grpc-in-memory/helloworld"
)

// server implements helloworld.GreeterServer
type server struct {
	// For forward compatibility, UnimplementedGreeterServer must be embedded.
	// This way, when a new method is added to the proto file in the future,
	// this code will at least still compile.
	pb.UnimplementedGreeterServer
}

// Server code of the unary call
func (s *server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {
	log.Printf("Received: %v", in.GetName())
	return &pb.HelloReply{Message: "Hello " + in.GetName()}, nil
}

// Server code of the client-side streaming call.
// Receives two requests and returns one response.
func (s *server) SayHelloRequestStream(streamServer pb.Greeter_SayHelloRequestStreamServer) error {
	req, err := streamServer.Recv()
	if err != nil {
		log.Printf("error receiving: %v", err)
		return err
	}
	log.Printf("Received: %v", req.GetName())
	req, err = streamServer.Recv()
	if err != nil {
		log.Printf("error receiving: %v", err)
		return err
	}
	log.Printf("Received: %v", req.GetName())
	// Propagate a failed send instead of silently dropping it
	return streamServer.SendAndClose(&pb.HelloReply{Message: "Hello " + req.GetName()})
}

// Server code of the server-side streaming call.
// Receives one request and then sends two responses.
func (s *server) SayHelloReplyStream(req *pb.HelloRequest, streamServer pb.Greeter_SayHelloReplyStreamServer) error {
	log.Printf("Received: %v", req.GetName())
	err := streamServer.Send(&pb.HelloReply{Message: "Hello " + req.GetName()})
	if err != nil {
		log.Printf("error Send: %+v", err)
		return err
	}
	err = streamServer.Send(&pb.HelloReply{Message: "Hello " + req.GetName() + "_dup"})
	if err != nil {
		log.Printf("error Send: %+v", err)
		return err
	}
	return nil
}

// Server code of the bidirectional streaming call
func (s *server) SayHelloBiStream(streamServer pb.Greeter_SayHelloBiStreamServer) error {
	req, err := streamServer.Recv()
	if err != nil {
		log.Printf("error receiving: %+v", err)
		// Return the error to the client promptly; the same applies below
		return err
	}
	log.Printf("Received: %v", req.GetName())
	err = streamServer.Send(&pb.HelloReply{Message: "Hello " + req.GetName()})
	if err != nil {
		log.Printf("error Send: %+v", err)
		return err
	}
	// Once this function returns, the stream is closed, so sending
	// messages from a separate goroutine is not recommended
	return nil
}

// NewServerImpl creates a new server implementation
func NewServerImpl() *server {
	return &server{}
}
```

Next, we create a client over a pipe connection to call the server.

It includes the following steps:

- Create server implementation

- Create a listener based on pipe, and then create a gRPC server based on it

- Create a client connection based on pipe, then create gRPC client and call the service

The code is as follows:

```go
package main

import (
	"context"
	"fmt"
	"log"
	"net"

	pb "github.com/robberphex/grpc-in-memory/helloworld"
	"google.golang.org/grpc"
)

// serverToClient turns a service implementation into a client
func serverToClient(svc *server) pb.GreeterClient {
	// Create a pipe-based Listener
	pipe := ListenPipe()

	s := grpc.NewServer()
	// Register the Greeter service with the gRPC server
	pb.RegisterGreeterServer(s, svc)
	// Serve blocks until the listener is closed, so it runs in its own goroutine
	go func() {
		if err := s.Serve(pipe); err != nil {
			log.Fatalf("failed to serve: %v", err)
		}
	}()

	// The client uses the pipe as its network connection.
	// Note: grpc.WithInsecure is deprecated in newer grpc-go releases in favor of
	// grpc.WithTransportCredentials(insecure.NewCredentials()).
	clientConn, err := grpc.Dial(`pipe`,
		grpc.WithInsecure(),
		grpc.WithContextDialer(func(ctx context.Context, addr string) (net.Conn, error) {
			return pipe.DialContext(ctx, `pipe`, addr)
		}),
	)
	if err != nil {
		log.Fatalf("did not connect: %v", err)
	}
	// Create the gRPC client on top of the pipe connection
	c := pb.NewGreeterClient(clientConn)
	return c
}

func main() {
	svc := NewServerImpl()
	c := serverToClient(svc)

	ctx := context.Background()

	// Unary call
	for i := 0; i < 5; i++ {
		r, err := c.SayHello(ctx, &pb.HelloRequest{Name: fmt.Sprintf("world_unary_%d", i)})
		if err != nil {
			log.Fatalf("could not greet: %v", err)
		}
		log.Printf("Greeting: %s", r.GetMessage())
	}

	// Client-side streaming call
	for i := 0; i < 5; i++ {
		streamClient, err := c.SayHelloRequestStream(ctx)
		if err != nil {
			log.Fatalf("could not SayHelloRequestStream: %v", err)
		}
		err = streamClient.Send(&pb.HelloRequest{Name: fmt.Sprintf("SayHelloRequestStream_%d", i)})
		if err != nil {
			log.Fatalf("could not Send: %v", err)
		}
		err = streamClient.Send(&pb.HelloRequest{Name: fmt.Sprintf("SayHelloRequestStream_%d_dup", i)})
		if err != nil {
			log.Fatalf("could not Send: %v", err)
		}
		reply, err := streamClient.CloseAndRecv()
		if err != nil {
			log.Fatalf("could not Recv: %v", err)
		}
		log.Println(reply.GetMessage())
	}

	// Server-side streaming call
	for i := 0; i < 5; i++ {
		streamClient, err := c.SayHelloReplyStream(ctx, &pb.HelloRequest{Name: fmt.Sprintf("SayHelloReplyStream_%d", i)})
		if err != nil {
			log.Fatalf("could not SayHelloReplyStream: %v", err)
		}
		reply, err := streamClient.Recv()
		if err != nil {
			log.Fatalf("could not Recv: %v", err)
		}
		log.Println(reply.GetMessage())
		reply, err = streamClient.Recv()
		if err != nil {
			log.Fatalf("could not Recv: %v", err)
		}
		log.Println(reply.GetMessage())
	}

	// Bidirectional streaming call
	for i := 0; i < 5; i++ {
		streamClient, err := c.SayHelloBiStream(ctx)
		if err != nil {
			log.Fatalf("could not SayHelloStream: %v", err)
		}
		err = streamClient.Send(&pb.HelloRequest{Name: fmt.Sprintf("world_stream_%d", i)})
		if err != nil {
			log.Fatalf("could not Send: %v", err)
		}
		reply, err := streamClient.Recv()
		if err != nil {
			log.Fatalf("could not Recv: %v", err)
		}
		log.Println(reply.GetMessage())
	}
}
```

Summary

Of course, there are other ways to make in-memory RPC calls, such as passing objects directly to the server and communicating through plain local calls.

However, that approach breaks many conventions: for example, the client and the server end up sharing the same object addresses, and gRPC connection parameters do not take effect.
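The object-address point can be illustrated with a small sketch. Everything here is an assumption made for illustration: Request and handle are hypothetical, and encoding/gob stands in for protobuf only to show the effect of a serialization boundary. With a direct call, a handler that modifies its input changes the caller's object; after a serialization round trip, it only changes a copy.

```go
package main

import (
	"bytes"
	"encoding/gob"
	"fmt"
)

// Request is a hypothetical request type.
type Request struct{ Name string }

// handle plays the role of a server method with a side effect on its input.
func handle(req *Request) string {
	reply := "Hello " + req.Name
	req.Name = "overwritten"
	return reply
}

// callDirect hands the caller's object straight to the handler.
func callDirect(req *Request) string { return handle(req) }

// callSerialized encodes the request and decodes it into a fresh object,
// roughly what happens when a request crosses an RPC boundary.
func callSerialized(req *Request) (string, error) {
	var buf bytes.Buffer
	if err := gob.NewEncoder(&buf).Encode(req); err != nil {
		return "", err
	}
	var decoded Request
	if err := gob.NewDecoder(&buf).Decode(&decoded); err != nil {
		return "", err
	}
	return handle(&decoded), nil
}

// compare reports what the caller's request looks like after each kind of call.
func compare() (afterDirect, afterSerialized string, err error) {
	a := &Request{Name: "world"}
	callDirect(a)

	b := &Request{Name: "world"}
	if _, err = callSerialized(b); err != nil {
		return "", "", err
	}
	return a.Name, b.Name, nil
}

func main() {
	direct, serialized, err := compare()
	if err != nil {
		panic(err)
	}
	fmt.Println("after direct call:", direct)         // after direct call: overwritten
	fmt.Println("after serialized call:", serialized) // after serialized call: world
}
```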

The pipe-based communication mode introduced in this article behaves just like normal RPC communication, including serialization and HTTP/2 flow control; the only difference is that the network layer is replaced by in-memory transfer. The performance is somewhat worse than a native call, but for testing and verification scenarios, consistent behavior matters more.

The code for this article is hosted on GitHub: https://github.com/robberphex/grpc-in-memory.

This article was first published at https://robberphex.com/grpc-in-memory/ .