Foreword

You may be tired of Python crawlers by now. Let's play with crawlers written in Golang instead!

This article will be continuously updated!

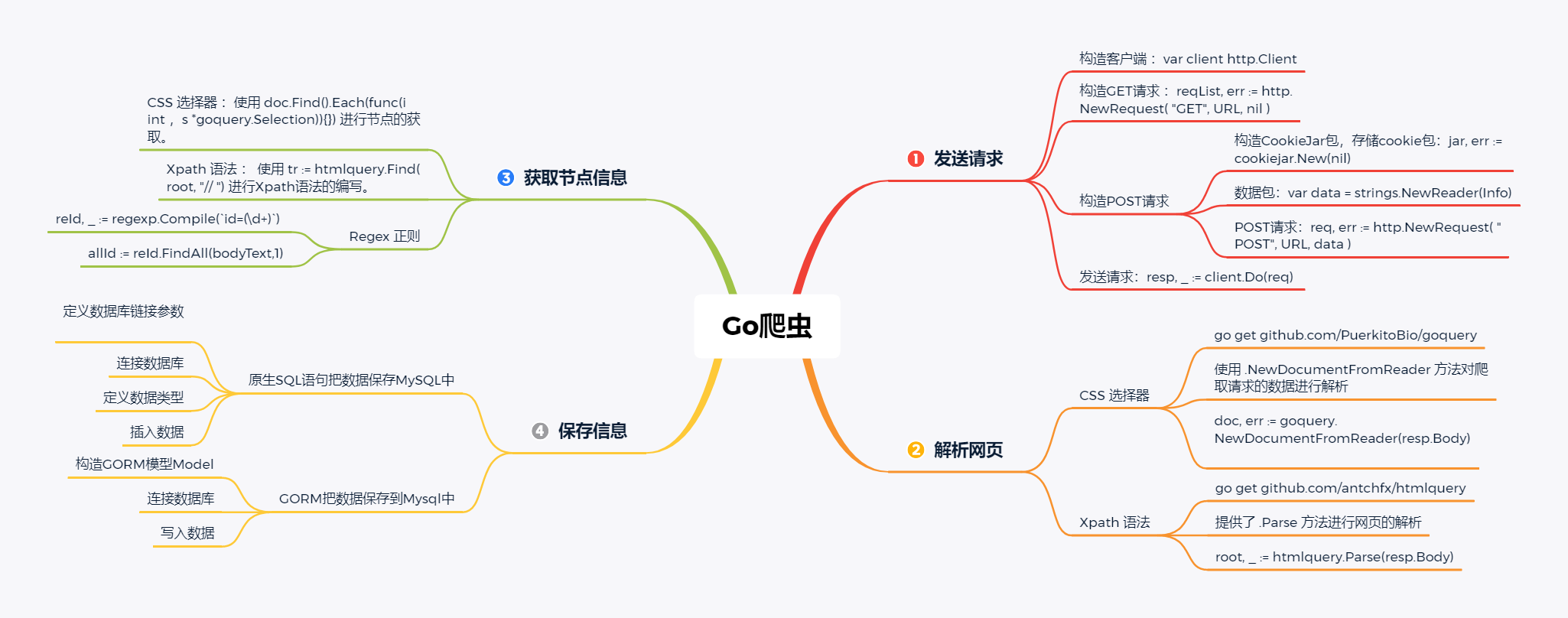

Mind map


Go's standard library provides the net/http package, which handles HTTP requests and responses out of the box.

1. Send request

- Construct client

var client http.Client

- Construct GET request:

reqList, err := http.NewRequest("GET", URL, nil)
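In practice a crawler usually wants a client that gives up on slow servers, and a GET request that carries query parameters. The sketch below assumes a hypothetical search page `https://example.com/search` with made-up parameters `q` and `page`; the helper names `newClient` and `newSearchRequest` are also hypothetical. `http.Client.Timeout`, `http.NewRequest` and `url.Values` are standard library APIs.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"time"
)

// newClient returns a client that aborts any request (connecting, waiting
// for headers and reading the body) that takes longer than timeout.
func newClient(timeout time.Duration) *http.Client {
	return &http.Client{Timeout: timeout}
}

// newSearchRequest builds a GET request for a hypothetical search page.
// url.Values percent-encodes the values and sorts the keys.
func newSearchRequest(base, keyword string, page int) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodGet, base, nil)
	if err != nil {
		return nil, err
	}
	q := url.Values{}
	q.Set("q", keyword)
	q.Set("page", fmt.Sprint(page))
	req.URL.RawQuery = q.Encode() // replaces any query string already in base
	return req, nil
}

func main() {
	client := newClient(10 * time.Second)
	req, err := newSearchRequest("https://example.com/search", "go crawler", 2)
	if err != nil {
		panic(err)
	}
	fmt.Println(client.Timeout, req.Method, req.URL.String())
}
```

Building the query with `url.Values` rather than by string concatenation means spaces and symbols in the keyword are escaped correctly.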

- Construct POST request

Go provides cookiejar.New (in the net/http/cookiejar package), which creates a jar that keeps the cookies the server sends back. This matters for websites that can only be accessed after logging in: logging in produces a cookie that identifies the user, so the server knows who is making each request. For example, to crawl your timetable from the school's Academic Affairs Office, you have to log in first, because every student's timetable is different and the server needs to know whose timetable to return. The crawler therefore has to carry that cookie in its request headers to pass as a logged-in user.

jar, err := cookiejar.New(nil)
if err != nil {
	panic(err)
}
client.Jar = jar // attach the jar to the client declared above so it stores and re-sends cookies
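A self-contained sketch of the login flow, assuming a stand-in site: an `httptest` server whose `/login` path sets a session cookie and whose `/timetable` path only answers when that cookie comes back. The paths, the cookie value and the helper names are hypothetical (the cookie name is borrowed from the ASP.NET example later in this article). It shows that once the jar is attached, the same client re-sends the cookie automatically.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/cookiejar"
	"net/http/httptest"
)

// newTestServer stands in for a site that requires login.
func newTestServer() *httptest.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/login", func(w http.ResponseWriter, r *http.Request) {
		// The login response sets the session cookie.
		http.SetCookie(w, &http.Cookie{Name: "ASP.NET_SessionId", Value: "abc123", Path: "/"})
		fmt.Fprint(w, "logged in")
	})
	mux.HandleFunc("/timetable", func(w http.ResponseWriter, r *http.Request) {
		c, err := r.Cookie("ASP.NET_SessionId")
		if err != nil {
			http.Error(w, "please log in", http.StatusUnauthorized)
			return
		}
		fmt.Fprint(w, "timetable for session "+c.Value)
	})
	return httptest.NewServer(mux)
}

// loginAndFetch logs in and then requests the protected page with the
// same client; the jar supplies the session cookie on the second request.
func loginAndFetch(baseURL string) (string, error) {
	jar, err := cookiejar.New(nil)
	if err != nil {
		return "", err
	}
	client := &http.Client{Jar: jar}

	resp, err := client.Get(baseURL + "/login")
	if err != nil {
		return "", err
	}
	resp.Body.Close() // the cookie is already in the jar

	resp, err = client.Get(baseURL + "/timetable")
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	srv := newTestServer()
	defer srv.Close()
	page, err := loginAndFetch(srv.URL)
	if err != nil {
		panic(err)
	}
	fmt.Println(page)
}
```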

When constructing a POST request, encode the form data to be sent, wrap it in an io.Reader, and pass it to http.NewRequest together with the URL:

info := "muser=" + url.QueryEscape(muserid) + "&passwd=" + url.QueryEscape(password) // escape values so characters like & or = cannot break the form body
data := strings.NewReader(info)
req, err := http.NewRequest("POST", URL, data)
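The same POST can be built with `url.Values`, which encodes every field and joins them with `&`. This is a sketch: the helper names `postLogin` and `newEchoServer` and the sample credentials are hypothetical, and the `httptest` server simply echoes the decoded `muser` and `passwd` fields so the round trip can be checked without a real login page.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/url"
	"strings"
)

// postLogin sends the credentials as an application/x-www-form-urlencoded
// body and returns the response text.
func postLogin(client *http.Client, loginURL, user, password string) (string, error) {
	form := url.Values{}
	form.Set("muser", user)
	form.Set("passwd", password)

	req, err := http.NewRequest(http.MethodPost, loginURL, strings.NewReader(form.Encode()))
	if err != nil {
		return "", err
	}
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")

	resp, err := client.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

// newEchoServer replies with the decoded form fields as "user:password".
func newEchoServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintf(w, "%s:%s", r.FormValue("muser"), r.FormValue("passwd"))
	}))
}

func main() {
	srv := newEchoServer()
	defer srv.Close()
	out, err := postLogin(srv.Client(), srv.URL, "20210001", "p&ss=word")
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```

A password containing `&` or `=` survives the round trip here, which plain string concatenation without escaping would not guarantee.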

- Add request header

req.Header.Set("Connection", "keep-alive")
req.Header.Set("Pragma", "no-cache")
req.Header.Set("Cache-Control", "no-cache")
req.Header.Set("Upgrade-Insecure-Requests", "1")
req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
req.Header.Set("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36")
req.Header.Set("Accept", "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9")
req.Header.Set("Accept-Language", "zh-CN,zh;q=0.9")
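When many requests need the same browser-like headers, they can be kept in one map and applied in a loop. A minimal sketch: `browserHeaders` and `newBrowserRequest` are hypothetical names, and the values are a subset of those listed above. `Header.Set` canonicalizes the key, so `Get("user-agent")` finds the `User-Agent` entry.

```go
package main

import (
	"fmt"
	"net/http"
)

// browserHeaders holds illustrative headers copied from a desktop Chrome
// request; adjust them to whatever the target site expects.
var browserHeaders = map[string]string{
	"User-Agent":      "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36",
	"Accept-Language": "zh-CN,zh;q=0.9",
	"Cache-Control":   "no-cache",
}

// newBrowserRequest builds a GET request and copies every entry of
// browserHeaders into it. Header.Set stores keys in canonical form.
func newBrowserRequest(rawURL string) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodGet, rawURL, nil)
	if err != nil {
		return nil, err
	}
	for k, v := range browserHeaders {
		req.Header.Set(k, v)
	}
	return req, nil
}

func main() {
	req, err := newBrowserRequest("https://example.com/")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Header.Get("user-agent")) // lookup is case-insensitive
}
```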

- Send request

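resp, err := client.Do(req) // send the request
if err != nil {
	panic(err)
}
defer resp.Body.Close() // close the body when done so the connection can be reused
bodyText, _ := io.ReadAll(resp.Body) // read the page content (ioutil.ReadAll in Go versions before 1.16)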
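A crawler should also notice when the server answers with an error page instead of content. The sketch below wraps sending in a hypothetical `fetch` helper that always closes the body and treats any non-2xx status as an error; the `httptest` server (serving `hello` on `/` and 404 elsewhere) is only a stand-in for a real site.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// fetch sends req and returns the body. The body is closed in every case,
// and a status outside 200-299 is reported as an error.
func fetch(client *http.Client, req *http.Request) ([]byte, error) {
	resp, err := client.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode < 200 || resp.StatusCode > 299 {
		return nil, fmt.Errorf("unexpected status %s for %s", resp.Status, req.URL)
	}
	return io.ReadAll(resp.Body)
}

// fetchURL is a convenience wrapper that builds a plain GET request.
func fetchURL(client *http.Client, rawURL string) ([]byte, error) {
	req, err := http.NewRequest(http.MethodGet, rawURL, nil)
	if err != nil {
		return nil, err
	}
	return fetch(client, req)
}

// newPageServer serves "hello" on / and 404 on every other path.
func newPageServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/" {
			http.NotFound(w, r)
			return
		}
		fmt.Fprint(w, "hello")
	}))
}

func main() {
	srv := newPageServer()
	defer srv.Close()
	for _, path := range []string{"/", "/missing"} {
		body, err := fetchURL(srv.Client(), srv.URL+path)
		fmt.Printf("%s -> %q, err=%v\n", path, body, err)
	}
}
```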

- About cookies

As mentioned above, once a cookie jar is attached to the client, the cookies the server sets in its response are stored in client.Jar after the request is sent.

myStr := fmt.Sprintf("%s", client.Jar) // dump the jar's internal state as a string for inspection (formatting, not a type conversion)

After printing client.Jar, we can pick out the session cookie from the output and set it on the request header of later requests. This is how we deal with cookies on pages that require login:

req.Header.Set("Cookie", "ASP.NET_SessionId="+cook)
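Instead of printing the whole jar and reading the value by eye, the `http.CookieJar` interface exposes `Cookies(u)`, which returns the name and value of each cookie the jar would send to that URL. A sketch, assuming a hypothetical host `jwc.example.edu` and page `kb.aspx`, with hypothetical helpers `newJarWithSession` (which fakes the cookie a login response would have stored) and `sessionCookie`. When the same client already carries the jar, the jar attaches the cookie by itself, so this manual step is mainly useful for requests sent through a different client.

```go
package main

import (
	"errors"
	"fmt"
	"net/http"
	"net/http/cookiejar"
	"net/url"
)

// newJarWithSession simulates a jar after login by storing one cookie
// for rawURL. In a real crawl the login response fills the jar.
func newJarWithSession(rawURL, name, value string) (http.CookieJar, error) {
	jar, err := cookiejar.New(nil)
	if err != nil {
		return nil, err
	}
	u, err := url.Parse(rawURL)
	if err != nil {
		return nil, err
	}
	jar.SetCookies(u, []*http.Cookie{{Name: name, Value: value}})
	return jar, nil
}

// sessionCookie returns the value of the named cookie that the jar would
// send to rawURL.
func sessionCookie(jar http.CookieJar, rawURL, name string) (string, error) {
	u, err := url.Parse(rawURL)
	if err != nil {
		return "", err
	}
	for _, c := range jar.Cookies(u) {
		if c.Name == name {
			return c.Value, nil
		}
	}
	return "", errors.New("cookie " + name + " not found")
}

func main() {
	const page = "http://jwc.example.edu/kb.aspx" // hypothetical timetable page
	jar, err := newJarWithSession("http://jwc.example.edu/", "ASP.NET_SessionId", "abc123")
	if err != nil {
		panic(err)
	}
	cook, err := sessionCookie(jar, page, "ASP.NET_SessionId")
	if err != nil {
		panic(err)
	}
	req, err := http.NewRequest(http.MethodGet, page, nil)
	if err != nil {
		panic(err)
	}
	req.AddCookie(&http.Cookie{Name: "ASP.NET_SessionId", Value: cook}) // same effect as setting the Cookie header by hand
	fmt.Println(req.Header.Get("Cookie"))
}
```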

That completes the request-sending part!

2. Parse web pages

2.1 CSS selector

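github.com/PuerkitoBio/goquery provides the NewDocumentFromReader function, which parses a page from any io.Reader such as resp.Body. Note that resp.Body can only be read once: if it has already been consumed by io.ReadAll, pass bytes.NewReader(bodyText) instead.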

doc, err := goquery.NewDocumentFromReader(resp.Body)

2.2 XPath syntax

github.com/antchfx/htmlquery provides the Parse function, which parses a page into an *html.Node tree that can then be queried with XPath.

root, err := htmlquery.Parse(resp.Body)
if err != nil {
	panic(err)
}

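2.3 Regular expressions

reId := regexp.MustCompile(`id=(\d+)`) // capture the digits after "id="
allId := reId.FindAllSubmatch(bodyText, 1) // n = 1 keeps only the first match; pass -1 to get all of them
for _, item := range allId {
	id = string(item[1]) // item[0] is the whole match "id=...", item[1] is the captured digits
}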
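A self-contained sketch that extracts every ID from a page: `FindAllSubmatch` with n = -1 returns all matches, and index 1 of each match holds the text captured by `(\d+)`. The helper name `extractIDs` and the sample HTML are hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
)

// reID captures the digits after "id=". MustCompile panics on an invalid
// pattern, which is acceptable for a constant expression.
var reID = regexp.MustCompile(`id=(\d+)`)

// extractIDs returns the captured digits of every match in body.
func extractIDs(body []byte) []string {
	var ids []string
	for _, m := range reID.FindAllSubmatch(body, -1) { // -1: no limit on matches
		ids = append(ids, string(m[1])) // m[0] is "id=101", m[1] is "101"
	}
	return ids
}

func main() {
	page := []byte(`<a href="info.jsp?id=101">A</a> <a href="info.jsp?id=202">B</a>`)
	fmt.Println(extractIDs(page))
}
```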

3. Get node information

3.1 CSS selector

With the doc parsed in 2.1, we can select nodes using CSS selector syntax:

doc.Find("#main > div.right > div.detail_main_content").
	Each(func(i int, s *goquery.Selection) {
		Data.title = s.Find("p").Text()
		Data.time = s.Find("#fbsj").Text()
		Data.author = s.Find("#author").Text()
		Data.count = Read_Count(Read_Id) // Read_Count and Read_Id are helpers not shown in this article
		fmt.Println(Data.title, Data.time, Data.author, Data.count)
	})
doc.Find("#news_content_display").Each(func(i int, s *goquery.Selection) {
	Data.content = s.Find("p").Text()
	fmt.Println(Data.content)
})

3.2 XPath syntax

With the root parsed in 2.2, we can write XPath expressions to select nodes.

tr := htmlquery.Find(root, "//*[@id='LB_kb']/table/tbody/tr/td") // use XPath to obtain the timetable cells
for _, row := range tr { // len(tr)=13
	classNames := htmlquery.Find(row, "./font")
	classPosistions := htmlquery.Find(row, "./text()[4]")
	classTeachers := htmlquery.Find(row, "./text()[5]")
	// check every result before indexing [0]; an empty cell would otherwise panic
	if len(classNames) != 0 && len(classPosistions) != 0 && len(classTeachers) != 0 {
		className = htmlquery.InnerText(classNames[0])
		classPosistion = htmlquery.InnerText(classPosistions[0])
		classTeacher = htmlquery.InnerText(classTeachers[0])
		fmt.Println(className)
		fmt.Println(classPosistion)
		fmt.Println(classTeacher)
	}
}

4. Save information

4.1 Saving data to MySQL with native SQL statements

- Define database connection parameters

const (
	usernameClass = "root"
	passwordClass = "root"
	ipClass       = "127.0.0.1"
	portClass     = "3306"
	dbnameClass   = "class"
)

- Connect to database

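var DB *sql.DB

// InitDB needs a MySQL driver registered, e.g. import _ "github.com/go-sql-driver/mysql"
func InitDB() {
	path := strings.Join([]string{usernameClass, ":", passwordClass, "@tcp(", ipClass, ":", portClass, ")/", dbnameClass, "?charset=utf8"}, "")
	var err error
	DB, err = sql.Open("mysql", path) // only validates the arguments; no connection is made yet
	if err != nil {
		fmt.Println("open database failed:", err)
		return
	}
	DB.SetConnMaxLifetime(10 * time.Second) // takes a time.Duration: a bare 10 would mean 10 ns (10 seconds assumed here)
	DB.SetMaxIdleConns(5)
	if err := DB.Ping(); err != nil { // Ping actually connects to the server
		fmt.Println("open database failed:", err)
		return
	}
	fmt.Println("connect success")
}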
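The DSN above is assembled with strings.Join. A sketch of the same string built with `fmt.Sprintf` and `net.JoinHostPort`, which also adds the square brackets an IPv6 host needs; the function name `mysqlDSN` is hypothetical, and the layout follows the `user:password@tcp(host:port)/dbname?params` form used in this article.

```go
package main

import (
	"fmt"
	"net"
)

// mysqlDSN builds a DSN of the form user:password@tcp(host:port)/dbname?charset=utf8.
func mysqlDSN(user, password, host, port, dbname string) string {
	addr := net.JoinHostPort(host, port) // "127.0.0.1:3306", or "[::1]:3306" for IPv6
	return fmt.Sprintf("%s:%s@tcp(%s)/%s?charset=utf8", user, password, addr, dbname)
}

func main() {
	fmt.Println(mysqlDSN("root", "root", "127.0.0.1", "3306", "class"))
}
```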

- Define data type

type Class struct {
	classData   string
	teacherName string
	position    string
}

- Insert data

func InsertData(Data Class) bool {
	tx, err := DB.Begin()
	if err != nil {
		fmt.Println("tx fail", err)
		return false
	}
	defer tx.Rollback() // undo the transaction on any early return; a no-op after a successful Commit
	stmt, err := tx.Prepare("INSERT INTO class_data (`class`,`teacher`,`position`) VALUES (?, ?, ?)")
	if err != nil {
		fmt.Println("Prepare fail", err)
		return false
	}
	defer stmt.Close()
	if _, err = stmt.Exec(Data.classData, Data.teacherName, Data.position); err != nil { // run the INSERT inside the transaction
		fmt.Println("Exec fail", err)
		return false
	}
	if err = tx.Commit(); err != nil { // commit the transaction
		fmt.Println("Commit fail", err)
		return false
	}
	return true
}

4.2 Saving data to MySQL with GORM

- Construct GORM model

type NewD struct {
	gorm.Model
	Title   string `gorm:"type:varchar(255);not null;"`
	Time    string `gorm:"type:varchar(256);not null;"`
	Author  string `gorm:"type:varchar(256);not null;"`
	Count   string `gorm:"type:varchar(256);not null;"`
	Content string `gorm:"type:longtext;not null;"`
}

- Connect to database

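// gorm.Open("mysql", path) and a single-value db.DB() are the GORM v1 API
// (github.com/jinzhu/gorm plus _ "github.com/jinzhu/gorm/dialects/mysql"); GORM v2 (gorm.io/gorm) uses different signatures
var db *gorm.DB

func Init() {
	var err error
	path := strings.Join([]string{userName_New, ":", password_New, "@tcp(", ip_New, ":", port_New, ")/", dbName_New, "?charset=utf8"}, "")
	db, err = gorm.Open("mysql", path)
	if err != nil {
		panic(err)
	}
	fmt.Println("SUCCESS")
	if err := db.AutoMigrate(&NewD{}).Error; err != nil { // create or update the table for NewD
		panic(err)
	}
	sqlDB := db.DB() // the underlying *sql.DB, used to tune the connection pool
	sqlDB.SetMaxIdleConns(10)
	sqlDB.SetMaxOpenConns(100)
}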

- Write data

NewA := NewD{
	Title:   Data.title,
	Time:    Data.time,
	Author:  Data.author,
	Count:   Data.count,
	Content: Data.content,
}
if err := db.Create(&NewA).Error; err != nil { // insert one row into the database
	fmt.Println("create failed:", err)
}