Preface

Networking is an old topic. Any discussion of network programming comes back to the five classic I/O models: blocking I/O, non-blocking I/O, I/O multiplexing, signal-driven I/O and asynchronous I/O. High-performance network libraries are generally built on I/O multiplexing (epoll, kqueue) or asynchronous I/O, and once the I/O is done, the data is processed by a thread pool. Netpoll, the network poller built into the Go runtime, follows the same overall design on the I/O side: underneath, it wraps the operating system's multiplexing and asynchronous I/O facilities, such as epoll, kqueue and IOCP. For processing the data, however, it does not use a thread pool. Because goroutines are lightweight and cheap to schedule, Go uses its own one-goroutine-per-connection model, in which each connection is handled by a goroutine of its own. This article analyzes the design and encapsulation of go netpoll from its source code (using epoll as the example), explains how it works along with the advantages and disadvantages of one goroutine per connection, and then, starting from netpoll's shortcomings, summarizes the master-slave Reactor model, which suits large-scale services better. Along the way it touches on network programming (mainly I/O multiplexing) and on the basics of Go and its runtime scheduler, so some background knowledge is assumed.

1. Basic programming model and data structures

First, let us compare network programming with Go's net package against programming directly with epoll. For epoll basics and I/O processing models, see [1] [2]. A typical server written with the net package looks like this:

```go
package main

import (
	"log"
	"net"
)

func main() {
	listener, err := net.Listen("tcp", "localhost:9000")
	if err != nil {
		log.Println("Listen err: ", err)
		return
	}
	for {
		conn, err := listener.Accept()
		if err != nil {
			log.Println("Accept err: ", err)
			continue
		}
		go HandleConn(conn) // one goroutine per connection
	}
}

func HandleConn(conn net.Conn) {
	defer conn.Close()
	// Handler: call Read() -> run the business logic -> call Write() to send the reply
}
```
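To make HandleConn concrete, here is a minimal runnable sketch that fills it in with a line-based echo protocol (an assumption for illustration; the original leaves the business logic open). The helpers `serve` and `echoOnce` are hypothetical names introduced here, and the server listens on an ephemeral loopback port instead of 9000 so the example can run anywhere.

```go
package main

import (
	"bufio"
	"fmt"
	"log"
	"net"
)

// HandleConn echoes each newline-terminated message back to the client
// until the client closes the connection or an error occurs.
func HandleConn(conn net.Conn) {
	defer conn.Close()
	r := bufio.NewReader(conn)
	for {
		line, err := r.ReadString('\n') // parks this goroutine until data arrives
		if err != nil {
			return // io.EOF or a network error ends the connection
		}
		if _, err := conn.Write([]byte(line)); err != nil {
			return
		}
	}
}

// serve is the accept loop from above: one goroutine per connection.
// Unlike the original loop it returns on error, so closing the listener stops it.
func serve(ln net.Listener) {
	for {
		conn, err := ln.Accept()
		if err != nil {
			return
		}
		go HandleConn(conn)
	}
}

// echoOnce starts a server on an ephemeral loopback port, sends msg and
// returns the echoed line.
func echoOnce(msg string) (string, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	defer ln.Close()
	go serve(ln)

	c, err := net.Dial("tcp", ln.Addr().String())
	if err != nil {
		return "", err
	}
	defer c.Close()
	if _, err := fmt.Fprintf(c, "%s\n", msg); err != nil {
		return "", err
	}
	return bufio.NewReader(c).ReadString('\n')
}

func main() {
	reply, err := echoOnce("ping")
	if err != nil {
		log.Fatal(err)
	}
	fmt.Print(reply) // prints "ping" followed by a newline
}
```

Every blocking call in this sketch (Accept, ReadString, Write) goes through the netpoll machinery described in the rest of this article.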

As you can see, network programming in Go is extremely simple. Behind these few lines, the runtime performs every operation an epoll-based server needs, mainly:

- Call socket() to create the server socket, then bind() and listen() on it;

- Call epoll_create to create an epoll instance epfd, and register the server socket with epfd so that it is monitored;

- Whenever a new connection arrives, call accept to obtain the client socket and register it with epfd as well.
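As a point of comparison, the sketch below performs the same epoll steps by hand through Go's `syscall` package (Linux only). It is illustrative, not the runtime's code: a pipe stands in for a client socket to keep it short, and `epollDemo` is a hypothetical name.

```go
package main

import (
	"fmt"
	"syscall"
)

// epollDemo performs the epoll steps listed above with raw Linux syscalls.
// A pipe stands in for the client socket so the example needs no network.
// It returns the pipe's read fd and the fds that epoll_wait reported ready.
func epollDemo() (readFD int, ready []int, err error) {
	var p [2]int
	if err = syscall.Pipe2(p[:], syscall.O_NONBLOCK|syscall.O_CLOEXEC); err != nil {
		return -1, nil, err
	}
	defer syscall.Close(p[0])
	defer syscall.Close(p[1])

	// epoll_create: one epoll instance (the runtime keeps a single global epfd)
	epfd, err := syscall.EpollCreate1(syscall.EPOLL_CLOEXEC)
	if err != nil {
		return -1, nil, err
	}
	defer syscall.Close(epfd)

	// epoll_ctl(EPOLL_CTL_ADD): watch the read end for input
	ev := syscall.EpollEvent{Events: syscall.EPOLLIN, Fd: int32(p[0])}
	if err = syscall.EpollCtl(epfd, syscall.EPOLL_CTL_ADD, p[0], &ev); err != nil {
		return -1, nil, err
	}

	// Make the read end readable, then collect the events with epoll_wait.
	if _, err = syscall.Write(p[1], []byte{1}); err != nil {
		return -1, nil, err
	}
	events := make([]syscall.EpollEvent, 8)
	n := 0
	for {
		n, err = syscall.EpollWait(epfd, events, 1000) // wait at most 1 s
		if err != syscall.EINTR {
			break // retry only when a signal interrupted the wait
		}
	}
	if err != nil {
		return -1, nil, err
	}
	for _, e := range events[:n] {
		ready = append(ready, int(e.Fd))
	}
	return p[0], ready, nil
}

func main() {
	fd, ready, err := epollDemo()
	fmt.Println(fd, ready, err)
}
```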

All of these operations revolve around two kinds of descriptors: the socket descriptor and the epoll descriptor epfd. Go's netpoll wraps them in the following main structures (netFD in package net, FD in internal/poll, pollDesc in the runtime):

```go
// netFD: the network descriptor of package net
type netFD struct {
	pfd poll.FD // wraps the underlying socket descriptor

	// immutable until Close
	family      int
	sotype      int
	isConnected bool // handshake completed or use of association with peer
	net         string
	laddr       Addr
	raddr       Addr
}

// FD (package internal/poll)
type FD struct {
	// Lock sysfd and serialize access to Read and Write methods.
	fdmu fdMutex

	// System file descriptor. Immutable until Close.
	Sysfd int // the Linux fd of the socket

	// I/O poller.
	pd pollDesc // internal/poll's pollDesc; its runtimeCtx field points to the runtime pollDesc below

	// Writev cache.
	iovecs *[]syscall.Iovec

	// Semaphore signaled when file is closed.
	csema uint32

	// Non-zero if this file has been set to blocking mode.
	isBlocking uint32

	// Whether this is a streaming descriptor, as opposed to a
	// packet-based descriptor like a UDP socket. Immutable.
	IsStream bool

	// Whether a zero byte read indicates EOF. This is false for a
	// message based socket connection.
	ZeroReadIsEOF bool

	// Whether this is a file rather than a network socket.
	isFile bool
}

// pollDesc (package runtime)
type pollDesc struct {
	link *pollDesc // in pollcache, protected by pollcache.lock; chains free pollDescs

	// The lock protects pollOpen, pollSetDeadline, pollUnblock and deadlineimpl operations.
	// This fully covers seq, rt and wt variables. fd is constant throughout the PollDesc lifetime.
	// pollReset, pollWait, pollWaitCanceled and runtime·netpollready (IO readiness notification)
	// proceed w/o taking the lock. So closing, everr, rg, rd, wg and wd are manipulated
	// in a lock-free way by all operations.
	// TODO(golang.org/issue/49008): audit these lock-free fields for continued correctness.
	// NOTE(dvyukov): the following code uses uintptr to store *g (rg/wg),
	// that will blow up when GC starts moving objects.
	lock    mutex     // protects the following fields
	fd      uintptr   // the socket's file descriptor
	closing bool
	everr   bool      // marks event scanning error happened
	user    uint32    // user settable cookie
	rseq    uintptr   // protects from stale read timers
	rg      uintptr   // pdReady, pdWait, G waiting for read or nil. Accessed atomically.
	rt      timer     // read deadline timer (set if rt.f != nil)
	rd      int64     // read deadline
	wseq    uintptr   // protects from stale write timers
	wg      uintptr   // pdReady, pdWait, G waiting for write or nil. Accessed atomically.
	wt      timer     // write deadline timer
	wd      int64     // write deadline
	self    *pollDesc // storage for indirect interface. See (*pollDesc).makeArg.
}
```

netFD can be regarded as Go's network descriptor and contains the underlying socket. pollDesc is a lower-level runtime structure: every socket registered with the poller gets its own pollDesc, taken from pollcache, which keeps unused pollDescs on a free list linked through the link field. While a goroutine waits for its socket to become readable or writable, pollDesc.rg or pollDesc.wg stores a pointer to that goroutine's g structure. Both the Listener returned by net.Listen and the net.Conn returned by Listener.Accept contain a netFD:

```go
// TCPListener is a TCP network listener. Clients should typically
// use variables of type Listener instead of assuming TCP.
type TCPListener struct {
	fd *netFD
	lc ListenConfig
}

// TCPConn is an implementation of the Conn interface for TCP network
// connections.
type TCPConn struct {
	conn
}

type conn struct {
	fd *netFD
}
```
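Code outside the net package cannot reach `netFD` directly, but the descriptor it wraps is observable through the public `syscall.Conn` interface that `*net.TCPListener` and `*net.TCPConn` implement. A small sketch; the helper names `sysfd` and `descriptors` are hypothetical:

```go
package main

import (
	"fmt"
	"net"
	"syscall"
)

// sysfd returns the OS file descriptor behind a TCP listener or connection
// (the value the text calls netFD.pfd.Sysfd) using the public
// syscall.Conn / syscall.RawConn interfaces.
func sysfd(c syscall.Conn) (int, error) {
	rc, err := c.SyscallConn()
	if err != nil {
		return -1, err
	}
	fd := -1
	if err := rc.Control(func(s uintptr) { fd = int(s) }); err != nil {
		return -1, err
	}
	return fd, nil
}

// descriptors opens a listener, a client connection and the accepted server
// connection, and returns the descriptor of each while all three are open.
func descriptors() (lfd, cfd, sfd int, err error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return
	}
	defer ln.Close()
	c, err := net.Dial("tcp", ln.Addr().String())
	if err != nil {
		return
	}
	defer c.Close()
	s, err := ln.Accept()
	if err != nil {
		return
	}
	defer s.Close()

	if lfd, err = sysfd(ln.(*net.TCPListener)); err != nil {
		return
	}
	if cfd, err = sysfd(c.(*net.TCPConn)); err != nil {
		return
	}
	sfd, err = sysfd(s.(*net.TCPConn))
	return
}

func main() {
	fmt.Println(descriptors())
}
```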

Next, we look at how the net package carries out all of the operations above inside net.Listen and Listener.Accept.

2. The waiting end (blocking side)

The waiting end parks the current goroutine until the read or write event that epoll is monitoring arrives; when the event arrives, the pending call (Accept, Read or Write) continues. In epoll terms, this covers the following:

- Creating and binding the server socket, initializing epoll, and adding the server socket to epoll's interest list: all of this is done by net.Listen.

- Calling Accept parks the server goroutine until a client connection arrives, then adds the new connection to epoll's interest list (the same procedure as for the server socket; both reuse pollDesc.init).

- Calling Read or Write parks the connection's goroutine until the socket becomes readable or writable, after which the business logic runs (the same logic as Accept on the server socket; all of them reuse pollDesc.waitRead and pollDesc.waitWrite).

The corresponding implementations in the net package are analyzed below.

2.1 net.Listen

2.1.1 Creating servsock

```go
// In C on Linux:
//   servsock = socket(PF_INET, SOCK_STREAM, 0); // create the socket

// In Go's net package.
// Call chain: Listen() -> ListenConfig.Listen() -> listenTCP() -> internetSocket() -> socket()
// socket() is as follows:
func socket(ctx context.Context, net string, family, sotype, proto int, ipv6only bool, laddr, raddr sockaddr, ctrlFn func(string, string, syscall.RawConn) error) (fd *netFD, err error) {
	s, err := sysSocket(family, sotype, proto) // create servsock
	if err != nil {
		return nil, err
	}
	if err = setDefaultSockopts(s, family, sotype, ipv6only); err != nil {
		poll.CloseFunc(s)
		return nil, err
	}
	// initialize the netFD
	if fd, err = newFD(s, family, sotype, net); err != nil {
		poll.CloseFunc(s)
		return nil, err
	}
	...
	// listening socket: call listenStream()
	if laddr != nil && raddr == nil {
		switch sotype {
		case syscall.SOCK_STREAM, syscall.SOCK_SEQPACKET:
			if err := fd.listenStream(laddr, listenerBacklog(), ctrlFn); err != nil {
				fd.Close()
				return nil, err
			}
			return fd, nil
		case syscall.SOCK_DGRAM:
			if err := fd.listenDatagram(laddr, ctrlFn); err != nil {
				fd.Close()
				return nil, err
			}
			return fd, nil
		}
	}
	...
}
```

2.1.2 Binding servsock

```go
// In C on Linux:
//   bind(servsock, (struct sockaddr*)&servaddr, sizeof(servaddr)); // bind servsock to a sockaddr

// In Go's net package:
func (fd *netFD) listenStream(laddr sockaddr, backlog int, ctrlFn func(string, string, syscall.RawConn) error) error {
	var err error
	if err = setDefaultListenerSockopts(fd.pfd.Sysfd); err != nil {
		return err
	}
	var lsa syscall.Sockaddr
	if lsa, err = laddr.sockaddr(fd.family); err != nil {
		return err
	}
	if ctrlFn != nil {
		c, err := newRawConn(fd)
		if err != nil {
			return err
		}
		if err := ctrlFn(fd.ctrlNetwork(), laddr.String(), c); err != nil {
			return err
		}
	}
	if err = syscall.Bind(fd.pfd.Sysfd, lsa); err != nil {
		return os.NewSyscallError("bind", err)
	}
	if err = listenFunc(fd.pfd.Sysfd, backlog); err != nil {
		return os.NewSyscallError("listen", err)
	}
	// fd.init creates epfd (on first use) and registers servsock with it
	if err = fd.init(); err != nil {
		return err
	}
	lsa, _ = syscall.Getsockname(fd.pfd.Sysfd)
	fd.setAddr(fd.addrFunc()(lsa), nil)
	return nil
}
```
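The `ctrlFn` that listenStream calls just before `syscall.Bind` is exposed publicly as `net.ListenConfig.Control`. The sketch below uses it to inspect the socket at that moment: on Linux, `setDefaultListenerSockopts` has already set `SO_REUSEADDR`, and the network name passed in comes from `fd.ctrlNetwork()`. `inspectBeforeBind` is a hypothetical helper.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"syscall"
)

// inspectBeforeBind listens through net.ListenConfig. Its Control field is
// the ctrlFn seen in listenStream: it runs after setDefaultListenerSockopts
// and before syscall.Bind.
func inspectBeforeBind() (network string, reuseAddr int, err error) {
	lc := net.ListenConfig{
		Control: func(nw, address string, c syscall.RawConn) error {
			network = nw
			var optErr error
			if cerr := c.Control(func(fd uintptr) {
				reuseAddr, optErr = syscall.GetsockoptInt(int(fd), syscall.SOL_SOCKET, syscall.SO_REUSEADDR)
			}); cerr != nil {
				return cerr
			}
			return optErr
		},
	}
	ln, err := lc.Listen(context.Background(), "tcp4", "127.0.0.1:0")
	if err != nil {
		return "", 0, err
	}
	ln.Close()
	return network, reuseAddr, nil
}

func main() {
	fmt.Println(inspectBeforeBind())
}
```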

2.1.3 Creating the epoll instance epfd

```go
// In C on Linux:
//   struct epoll_event event;
//   event.events = EPOLLIN;   // monitor input
//   event.data.fd = servsock;
//   epfd = epoll_create(20);  // create the epoll instance

// In Go.
// Call chain: netFD.init() -> pollDesc.init() -> runtime_pollServerInit() -> netpollinit()

// pollDesc.init()
func (pd *pollDesc) init(fd *FD) error {
	// serverInit is declared as `var serverInit sync.Once`, so runtime_pollServerInit
	// runs only once. Later calls (Accept also goes through pollDesc.init) only call
	// runtime_pollOpen, to add the client connection to epfd.
	serverInit.Do(runtime_pollServerInit) // creates epfd via the OS-specific netpollinit()
	ctx, errno := runtime_pollOpen(uintptr(fd.Sysfd)) // register the socket (here servsock) with epfd
	if errno != 0 {
		if ctx != 0 {
			runtime_pollUnblock(ctx)
			runtime_pollClose(ctx)
		}
		return errnoErr(syscall.Errno(errno))
	}
	pd.runtimeCtx = ctx
	return nil
}

// netpollinit creates epfd plus a pipe used to wake up epoll_wait;
// it is the equivalent of epoll_create on Linux.
func netpollinit() {
	epfd = epollcreate1(_EPOLL_CLOEXEC) // create the epfd instance
	if epfd < 0 {
		epfd = epollcreate(1024)
		if epfd < 0 {
			println("runtime: epollcreate failed with", -epfd)
			throw("runtime: netpollinit failed")
		}
		closeonexec(epfd)
	}
	r, w, errno := nonblockingPipe() // pipe used to interrupt a blocked epoll_wait
	if errno != 0 {
		println("runtime: pipe failed with", -errno)
		throw("runtime: pipe failed")
	}
	ev := epollevent{
		events: _EPOLLIN,
	}
	*(**uintptr)(unsafe.Pointer(&ev.data)) = &netpollBreakRd
	errno = epollctl(epfd, _EPOLL_CTL_ADD, r, &ev) // watch the pipe's read end for input
	if errno != 0 {
		println("runtime: epollctl failed with", -errno)
		throw("runtime: epollctl failed")
	}
	netpollBreakRd = uintptr(r)
	netpollBreakWr = uintptr(w)
}
```

netpollinit has a separate implementation for each operating system: on Linux it calls epoll_create, on macOS kqueue(), and on Windows it uses IOCP. The other low-level functions (netpollopen, netpoll and so on) are likewise bound to the OS-specific implementation at build time.

2.1.4 Adding servsock to epfd's interest list

```go
// In C on Linux:
//   epoll_ctl(epfd, EPOLL_CTL_ADD, servsock, &event); // add an input event for the fd to epfd

// In Go.
// Call chain: pollDesc.init() -> runtime_pollOpen() -> poll_runtime_pollOpen() -> netpollopen()
func poll_runtime_pollOpen(fd uintptr) (*pollDesc, int) {
	pd := pollcache.alloc() // take a pollDesc from pollcache (pre-allocated in blocks, kept on a free list)
	lock(&pd.lock)
	wg := atomic.Loaduintptr(&pd.wg)
	if wg != 0 && wg != pdReady {
		throw("runtime: blocked write on free polldesc")
	}
	rg := atomic.Loaduintptr(&pd.rg)
	if rg != 0 && rg != pdReady {
		throw("runtime: blocked read on free polldesc")
	}
	pd.fd = fd // bind servsock to this pollDesc
	pd.closing = false
	pd.everr = false
	pd.rseq++
	atomic.Storeuintptr(&pd.rg, 0)
	pd.rd = 0
	pd.wseq++
	atomic.Storeuintptr(&pd.wg, 0)
	pd.wd = 0
	pd.self = pd
	unlock(&pd.lock)
	// pd.rg and pd.wg are now reset. They record whether pd.fd is already
	// readable/writable, or which goroutine is blocked waiting on it.

	errno := netpollopen(fd, pd) // add servsock to epfd's interest list
	if errno != 0 {
		pollcache.free(pd)
		return nil, int(errno)
	}
	return pd, 0
}

// The epoll implementation of netpollopen
func netpollopen(fd uintptr, pd *pollDesc) int32 {
	var ev epollevent
	ev.events = _EPOLLIN | _EPOLLOUT | _EPOLLRDHUP | _EPOLLET
	*(**pollDesc)(unsafe.Pointer(&ev.data)) = pd // store the pollDesc pointer in epoll_event.data
	return -epollctl(epfd, _EPOLL_CTL_ADD, int32(fd), &ev) // call epoll_ctl
}
```


2.2 Listener.Accept

- Accept the client connection, which yields the client socket, and initialize a netFD for it.

- Call netFD.init(). As in the analysis of net.Listen, this step mainly adds the client socket to epfd.

```go
// Listener.Accept ends up calling netFD.accept.
func (fd *netFD) accept() (netfd *netFD, err error) {
	d, rsa, errcall, err := fd.pfd.Accept() // calls Linux accept underneath to get the client socket
	if err != nil {
		if errcall != "" {
			err = wrapSyscallError(errcall, err)
		}
		return nil, err
	}

	// Initialize the netFD
	if netfd, err = newFD(d, fd.family, fd.sotype, fd.net); err != nil {
		poll.CloseFunc(d)
		return nil, err
	}
	// The same netFD.init() as in net.Listen: it mainly adds the socket to epfd
	if err = netfd.init(); err != nil {
		netfd.Close()
		return nil, err
	}
	lsa, _ := syscall.Getsockname(netfd.pfd.Sysfd)
	netfd.setAddr(netfd.addrFunc()(lsa), netfd.addrFunc()(rsa))
	return netfd, nil
}
```

```go
// Accept wraps the accept network call.
func (fd *FD) Accept() (int, syscall.Sockaddr, string, error) {
	if err := fd.readLock(); err != nil { // take the FD's read lock
		return -1, nil, "", err
	}
	defer fd.readUnlock()

	// prepareRead mainly checks whether the read deadline has expired (or the fd is closing)
	if err := fd.pd.prepareRead(fd.isFile); err != nil {
		return -1, nil, "", err
	}
	for {
		// The server socket is non-blocking, so accept returns immediately;
		// hence the loop.
		s, rsa, errcall, err := accept(fd.Sysfd)
		if err == nil {
			return s, rsa, "", err
		}
		switch err {
		case syscall.EINTR:
			continue
		case syscall.EAGAIN:
			if fd.pd.pollable() {
				// waitRead parks the current goroutine until a read event
				// arrives; the loop then retries accept
				if err = fd.pd.waitRead(fd.isFile); err == nil {
					continue
				}
			}
		case syscall.ECONNABORTED:
			// This means that a socket on the listen
			// queue was closed before we Accept()ed it;
			// it's a silly error, so try again.
			continue
		}
		return -1, nil, errcall, err
	}
}
```

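Question: why does each socket have a read lock and a write lock? A pollDesc can record only one goroutine waiting to read (rg) and one waiting to write (wg); if a second goroutine tries to park on a slot that is already taken, the runtime throws "double wait". fd.readLock and fd.writeLock (both built on fdMutex) serialize concurrent Accept/Read calls and concurrent Write calls on the same FD, so at most one goroutine parks in each slot. The write lock is also held for the whole Write call, so the bytes of concurrent Writes are not interleaved, and fdMutex tracks outstanding references so the descriptor is not closed while a Read or Write is still using it.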
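The effect of the write lock can be observed from user code. In the sketch below several goroutines Write 256 KiB messages to the same TCP connection at once, and the reader checks that no message arrives interleaved with another. This relies on the current implementation holding the write lock for the whole Write call; the net.Conn documentation itself only promises that concurrent calls are safe. `interleaved` is a hypothetical helper.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net"
	"sync"
)

const (
	writers = 8
	msgSize = 256 << 10 // 256 KiB: a single Write may need several write(2) calls
)

// interleaved has several goroutines Write to one TCP connection at once and
// reports whether any message arrived mixed with bytes from another Write.
func interleaved() (bool, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return false, err
	}
	defer ln.Close()
	c, err := net.Dial("tcp", ln.Addr().String())
	if err != nil {
		return false, err
	}
	defer c.Close()
	s, err := ln.Accept()
	if err != nil {
		return false, err
	}
	defer s.Close()

	var wg sync.WaitGroup
	for i := 0; i < writers; i++ {
		wg.Add(1)
		go func(b byte) {
			defer wg.Done()
			c.Write(bytes.Repeat([]byte{b}, msgSize)) // concurrent Writes on one conn
		}(byte('a' + i))
	}

	buf := make([]byte, writers*msgSize)
	if _, err := io.ReadFull(s, buf); err != nil {
		return false, err
	}
	wg.Wait()
	// If each Write is contiguous, every msgSize-aligned chunk holds one byte value.
	for off := 0; off < len(buf); off += msgSize {
		chunk := buf[off : off+msgSize]
		if bytes.Count(chunk, chunk[:1]) != msgSize {
			return true, nil
		}
	}
	return false, nil
}

func main() {
	fmt.Println(interleaved())
}
```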

3. The ready execution end

With the waiting end covered, the ready execution end is simple: after epoll_wait returns, it takes the pollDesc stored in each event's ev.data, extracts the goroutine recorded in pd.rg (or pd.wg), and puts it back into the runtime's run queue. This is implemented by netpoll, whose source is as follows:

```go
// netpoll checks for ready network connections.
// Returns list of goroutines that become runnable.
// delay < 0: blocks indefinitely
// delay == 0: does not block, just polls
// delay > 0: block for up to that many nanoseconds
// The returned gList holds every goroutine that became ready.
func netpoll(delay int64) gList {
	if epfd == -1 {
		return gList{}
	}
	// compute the epoll_wait timeout
	var waitms int32
	if delay < 0 {
		waitms = -1
	} else if delay == 0 {
		waitms = 0
	} else if delay < 1e6 {
		waitms = 1
	} else if delay < 1e15 {
		waitms = int32(delay / 1e6)
	} else {
		// An arbitrary cap on how long to wait for a timer.
		// 1e9 ms == ~11.5 days.
		waitms = 1e9
	}
	var events [128]epollevent
retry:
	// call epoll_wait
	n := epollwait(epfd, &events[0], int32(len(events)), waitms)
	if n < 0 {
		if n != -_EINTR {
			println("runtime: epollwait on fd", epfd, "failed with", -n)
			throw("runtime: netpoll failed")
		}
		// If a timed sleep was interrupted, just return to
		// recalculate how long we should sleep now.
		if waitms > 0 {
			return gList{}
		}
		goto retry
	}
	var toRun gList
	for i := int32(0); i < n; i++ {
		ev := &events[i]
		if ev.events == 0 {
			continue
		}

		// Is this the wakeup pipe used to interrupt epoll_wait?
		if *(**uintptr)(unsafe.Pointer(&ev.data)) == &netpollBreakRd {
			if ev.events != _EPOLLIN {
				println("runtime: netpoll: break fd ready for", ev.events)
				throw("runtime: netpoll: break fd ready for something unexpected")
			}
			if delay != 0 {
				// netpollBreak could be picked up by a
				// nonblocking poll. Only read the byte
				// if blocking.
				var tmp [16]byte
				read(int32(netpollBreakRd), noescape(unsafe.Pointer(&tmp[0])), int32(len(tmp)))
				atomic.Store(&netpollWakeSig, 0)
			}
			continue
		}

		var mode int32
		if ev.events&(_EPOLLIN|_EPOLLRDHUP|_EPOLLHUP|_EPOLLERR) != 0 {
			mode += 'r'
		}
		if ev.events&(_EPOLLOUT|_EPOLLHUP|_EPOLLERR) != 0 {
			mode += 'w'
		}
		if mode != 0 {
			// The pollDesc is stored in epoll_event.data and the blocked goroutine
			// in pollDesc.rg / pollDesc.wg, so ev.data leads to the goroutine.
			pd := *(**pollDesc)(unsafe.Pointer(&ev.data))
			pd.everr = false
			if ev.events == _EPOLLERR {
				pd.everr = true
			}
			// put the ready goroutines on the toRun list
			netpollready(&toRun, pd, mode)
		}
	}
	return toRun
}

// netpollready wakes the goroutines that are ready
func netpollready(toRun *gList, pd *pollDesc, mode int32) {
	var rg, wg *g
	if mode == 'r' || mode == 'r'+'w' {
		// netpollunblock takes the goroutine (g) out of the pollDesc
		rg = netpollunblock(pd, 'r', true)
	}
	if mode == 'w' || mode == 'r'+'w' {
		wg = netpollunblock(pd, 'w', true)
	}
	if rg != nil {
		toRun.push(rg)
	}
	if wg != nil {
		toRun.push(wg)
	}
}

// netpollunblock takes the goroutine (g) out of the pollDesc
func netpollunblock(pd *pollDesc, mode int32, ioready bool) *g {
	gpp := &pd.rg
	if mode == 'w' {
		gpp = &pd.wg
	}

	for {
		old := atomic.Loaduintptr(gpp)
		// Already marked ready and nobody is waiting: nothing to wake
		if old == pdReady {
			return nil
		}
		if old == 0 && !ioready {
			// Only set pdReady for ioready. runtime_pollWait
			// will check for timeout/cancel before waiting.
			return nil
		}
		var new uintptr
		if ioready {
			new = pdReady
		}
		// set pd.rg (or pd.wg) to pdReady and return the g that was stored there
		if atomic.Casuintptr(gpp, old, new) {
			if old == pdWait {
				old = 0
			}
			return (*g)(unsafe.Pointer(old))
		}
	}
}
```

Compared with the waiting end, the ready execution end is much more direct: epoll_wait reports the ready sockets, netpoll gets each socket's pollDesc from ev.data, takes the waiting goroutine out of pd.rg (or pd.wg), and returns all of them as a gList.

3.1 When netpoll is called

netpoll only returns the list of ready goroutines; something else has to call it and put that list back into the runtime's run queues. In other words, waking a goroutine is part of runtime scheduling. netpoll is called mainly from two places.

1. Called from findrunnable(). When schedule() looks for a goroutine to run, it calls findrunnable(), which polls the network without blocking first and, if there is still nothing to run, blocks in netpoll:

```go
// schedule() calls findrunnable() to find a ready goroutine to run on the P
func findrunnable() (gp *g, inheritTime bool) {
	...
	// When the local P has no runnable G, check for ready network I/O before
	// stealing from other Ps; if there is any, wake that goroutine and
	// schedule it. Hence netpoll(0), a non-blocking call.
	// Poll network.
	// This netpoll is only an optimization before we resort to stealing.
	// We can safely skip it if there are no waiters or a thread is blocked
	// in netpoll already. If there is any kind of logical race with that
	// blocked thread (e.g. it has already returned from netpoll, but does
	// not set lastpoll yet), this thread will do blocking netpoll below
	// anyway.
	if netpollinited() && atomic.Load(&netpollWaiters) > 0 && atomic.Load64(&sched.lastpoll) != 0 {
		// get the ready goroutines; injectglist() puts them on run queues
		if list := netpoll(0); !list.empty() { // non-blocking
			gp := list.pop()
			injectglist(&list)
			casgstatus(gp, _Gwaiting, _Grunnable)
			if trace.enabled {
				traceGoUnpark(gp, 0)
			}
			return gp, false
		}
	}
	...
	// Call netpoll again, this time blocking
	if netpollinited() && (atomic.Load(&netpollWaiters) > 0 || pollUntil != 0) && atomic.Xchg64(&sched.lastpoll, 0) != 0 {
		atomic.Store64(&sched.pollUntil, uint64(pollUntil))
		if _g_.m.p != 0 {
			throw("findrunnable: netpoll with p")
		}
		if _g_.m.spinning {
			throw("findrunnable: netpoll with spinning")
		}
		delay := int64(-1)
		if pollUntil != 0 {
			if now == 0 {
				now = nanotime()
			}
			delay = pollUntil - now
			if delay < 0 {
				delay = 0
			}
		}
		if faketime != 0 {
			// When using fake time, just poll.
			delay = 0
		}
		list := netpoll(delay) // block until new work is available
		atomic.Store64(&sched.pollUntil, 0)
		atomic.Store64(&sched.lastpoll, uint64(nanotime()))
		if faketime != 0 && list.empty() {
			// Using fake time and nothing is ready; stop M.
			// When all M's stop, checkdead will call timejump.
			stopm()
			goto top
		}
		lock(&sched.lock)
		_p_ = pidleget()
		unlock(&sched.lock)
		if _p_ == nil {
			injectglist(&list)
		} else {
			acquirep(_p_)
			if !list.empty() {
				gp := list.pop()
				injectglist(&list)
				casgstatus(gp, _Gwaiting, _Grunnable)
				if trace.enabled {
					traceGoUnpark(gp, 0)
				}
				return gp, false
			}
			if wasSpinning {
				_g_.m.spinning = true
				atomic.Xadd(&sched.nmspinning, 1)
			}
			goto top
		}
	}
}
```
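In the blocking call above, findrunnable passes `delay = pollUntil - now` (clamped at 0, or -1 when no timer is pending), and netpoll turns that nanosecond delay into epoll_wait's millisecond timeout. That conversion, lifted out of netpoll as a standalone function (`delayToWaitMS` is a hypothetical name):

```go
package main

import "fmt"

// delayToWaitMS reproduces the conversion at the top of netpoll: the delay
// in nanoseconds chosen by findrunnable becomes epoll_wait's millisecond timeout.
func delayToWaitMS(delay int64) int32 {
	switch {
	case delay < 0:
		return -1 // block indefinitely
	case delay == 0:
		return 0 // poll without blocking
	case delay < 1e6:
		return 1 // sub-millisecond delays become 1 ms, epoll_wait's resolution
	case delay < 1e15:
		return int32(delay / 1e6)
	default:
		return 1e9 // arbitrary cap: 1e9 ms is about 11.5 days
	}
}

func main() {
	for _, d := range []int64{-1, 0, 500, 2_500_000, 2e15} {
		fmt.Println(d, "ns ->", delayToWaitMS(d), "ms")
	}
}
```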

2. Called from sysmon. sysmon is the runtime's monitoring thread (a system-level daemon). It sits outside the G-M-P model: it runs directly on an M without being bound to a P. If the network has not been polled for more than 10 ms, sysmon polls it without blocking and injects any ready goroutines:

```go
func sysmon() {
	...
	// poll network if not polled for more than 10ms
	lastpoll := int64(atomic.Load64(&sched.lastpoll))
	if netpollinited() && lastpoll != 0 && lastpoll+10*1000*1000 < now {
		atomic.Cas64(&sched.lastpoll, uint64(lastpoll), uint64(now))
		list := netpoll(0) // non-blocking - returns list of goroutines
		if !list.empty() {
			// Need to decrement number of idle locked M's
			// (pretending that one more is running) before injectglist.
			// Otherwise it can lead to the following situation:
			// injectglist grabs all P's but before it starts M's to run the P's,
			// another M returns from syscall, finishes running its G,
			// observes that there is no work to do and no other running M's
			// and reports deadlock.
			incidlelocked(-1)
			injectglist(&list)
			incidlelocked(1)
		}
	}
	...
}
```

Note: the waiting end and the ready end are connected through Go's runtime scheduler (the G-M-P model): the waiting end parks the goroutine, and the ready end puts it back on a run queue. Following the whole process requires a basic understanding of G-M-P scheduling; see [3].
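To see the two ends working together without the scheduler, here is a hypothetical user-space miniature (Linux only): `waitRead` is the waiting end, `pollOnce` is the ready end, and closing a channel stands in for putting a goroutine back on a run queue. The real runtime parks the goroutine itself and stores a `*pollDesc` in `ev.data`; this sketch uses the fd as a map key instead, and all names in it are hypothetical.

```go
package main

import (
	"fmt"
	"sync"
	"syscall"
)

// miniPoller: waiters[fd] plays the role of pollDesc.rg, and EpollEvent.Fd
// plays the role of the *pollDesc the runtime stores in ev.data.
type miniPoller struct {
	epfd    int
	mu      sync.Mutex
	waiters map[int32]chan struct{}
}

func newMiniPoller() (*miniPoller, error) {
	epfd, err := syscall.EpollCreate1(syscall.EPOLL_CLOEXEC)
	if err != nil {
		return nil, err
	}
	return &miniPoller{epfd: epfd, waiters: make(map[int32]chan struct{})}, nil
}

// waitRead is the waiting end: it registers fd for a one-shot read event and
// returns a channel closed once fd is readable. (A second wait on the same fd
// would need EPOLL_CTL_MOD; omitted here.)
func (p *miniPoller) waitRead(fd int) (<-chan struct{}, error) {
	ch := make(chan struct{})
	p.mu.Lock()
	p.waiters[int32(fd)] = ch
	p.mu.Unlock()
	ev := syscall.EpollEvent{Events: syscall.EPOLLIN | syscall.EPOLLONESHOT, Fd: int32(fd)}
	if err := syscall.EpollCtl(p.epfd, syscall.EPOLL_CTL_ADD, fd, &ev); err != nil {
		return nil, err
	}
	return ch, nil
}

// pollOnce is the ready end: one epoll_wait, then wake every reported waiter.
func (p *miniPoller) pollOnce(timeoutMs int) (int, error) {
	events := make([]syscall.EpollEvent, 64)
	n, err := syscall.EpollWait(p.epfd, events, timeoutMs)
	if err != nil {
		return 0, err
	}
	p.mu.Lock()
	defer p.mu.Unlock()
	woken := 0
	for _, e := range events[:n] {
		if ch, ok := p.waiters[e.Fd]; ok {
			delete(p.waiters, e.Fd)
			close(ch) // compare netpollready pushing the g onto toRun
			woken++
		}
	}
	return woken, nil
}

// demo parks a reader on a pipe, writes to the pipe, and lets pollOnce wake
// the reader. It returns what the reader read.
func demo() (string, error) {
	p, err := newMiniPoller()
	if err != nil {
		return "", err
	}
	defer syscall.Close(p.epfd)
	var fds [2]int
	if err := syscall.Pipe2(fds[:], syscall.O_NONBLOCK|syscall.O_CLOEXEC); err != nil {
		return "", err
	}
	defer syscall.Close(fds[0])
	defer syscall.Close(fds[1])
	ready, err := p.waitRead(fds[0])
	if err != nil {
		return "", err
	}
	result := make(chan string, 1)
	go func() {
		<-ready // "parked" until the poller reports fds[0] readable
		buf := make([]byte, 16)
		n, _ := syscall.Read(fds[0], buf)
		if n < 0 {
			n = 0
		}
		result <- string(buf[:n])
	}()
	if _, err := syscall.Write(fds[1], []byte("hello")); err != nil {
		return "", err
	}
	for {
		n, err := p.pollOnce(1000)
		if err != nil && err != syscall.EINTR {
			return "", err
		}
		if n > 0 {
			break
		}
	}
	return <-result, nil
}

func main() {
	fmt.Println(demo())
}
```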

4. Summary

4.1 Go netpoll execution flow

- Goroutines are Go's lightweight threads, scheduled in user space. Together with the runtime scheduler, a goroutine costs far less than an OS thread, which makes the per-connection concurrency style, previously too expensive with OS threads, practical again.

- Through this encapsulation, Go uses its runtime scheduler to separate waiting for I/O from performing it. pollDesc.waitRead() and pollDesc.waitWrite() park a goroutine until epoll reports its socket ready, which is the waiting end; netpoll() takes the goroutine back from the waiting end and makes it runnable again. What ties waiting and execution together is Go's G-M-P scheduling model.

4.2 Lessons from go netpoll

```c
#include <stdio.h>
#include <stdlib.h>
#include <sys/epoll.h>
#include <sys/socket.h>

#define EPOLL_SIZE 50 /* events fetched per epoll_wait call (illustrative value) */

/* servsock: the server socket from step 1, already created, bound and listening */
void ListenAndServe(int servsock)
{
    // 1. Create and bind the server socket servsock (done by the caller)
    // 2. Register servsock with epoll
    struct epoll_event event;
    event.events = EPOLLIN;      // monitor input
    event.data.fd = servsock;
    int epfd = epoll_create(20); // create the epoll instance
    epoll_ctl(epfd, EPOLL_CTL_ADD, servsock, &event); // add an input event for servsock to epfd

    // array that receives the ready events
    struct epoll_event *events = malloc(sizeof(struct epoll_event) * EPOLL_SIZE);
    if (events == NULL)
        return;

    // 3. Event loop
    while (1) {
        int event_cnt = epoll_wait(epfd, events, EPOLL_SIZE, -1); // wait indefinitely
        if (event_cnt == -1) {
            perror("epoll_wait() error");
            break;
        }
        for (int i = 0; i < event_cnt; i++) {
            // 4. A connection request on the server socket
            if (events[i].data.fd == servsock) {
                // accept the connection and register the new socket with epoll
                int clntsock = accept(servsock, NULL, NULL);
                if (clntsock < 0)
                    continue;
                event.events = EPOLLIN;
                event.data.fd = clntsock; // event.data.fd records which socket the event belongs to
                epoll_ctl(epfd, EPOLL_CTL_ADD, clntsock, &event);
            } else {
                // 5. Read/write events on a client socket: handle them here
            }
        }
    }
    free(events);
}
```

4.3 Analysis of advantages and disadvantages

4.4 Master-slave Reactor model

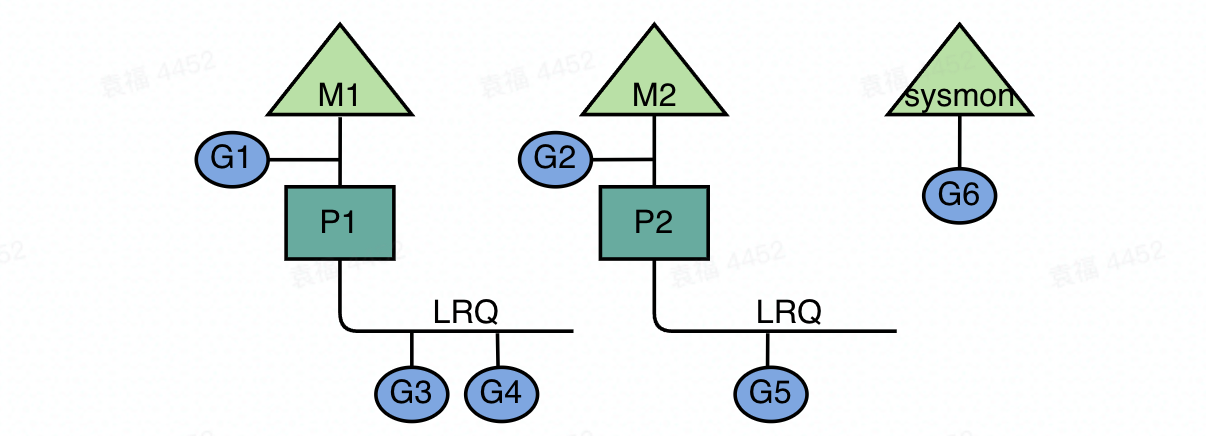

go netpoll is more than enough for writing small and medium-sized network applications. For the huge volume of HTTP and RPC requests at large Internet companies, however, starting goroutines without limit and without any service degradation can be fatal: when a flood of requests arrives at once, it can overwhelm Go's runtime scheduler. Large companies therefore often build their own network libraries directly on the operating system's multiplexing facilities, and the classic model is the master-slave Reactor. It usually consists of one main reactor thread, one or more sub-reactor threads, and a worker thread pool. The main reactor listens for events on the server socket, wraps each accepted client socket into a connection and hands it to a sub-reactor; each sub-reactor handles read/write events for its connections and passes the received data to the workers; the workers, a thread pool (or goroutine pool), run the business logic once the data has been read. The figure illustrates this structure.
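In Go, the worker layer of this design can be a goroutine pool with a bounded queue, which is what supplies the missing service degradation. The sketch below shows only that layer, not the reactors themselves (a real master-slave implementation runs its own epoll loops); `workerPool`, `newWorkerPool` and `TrySubmit` are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
)

// workerPool is a hypothetical fixed-size pool with a bounded queue: the
// worker layer of a master-slave Reactor. TrySubmit rejects work when the
// queue is full instead of starting one more goroutine.
type workerPool struct {
	jobs chan func()
	wg   sync.WaitGroup
}

func newWorkerPool(workers, queue int) *workerPool {
	p := &workerPool{jobs: make(chan func(), queue)}
	for i := 0; i < workers; i++ {
		p.wg.Add(1)
		go func() {
			defer p.wg.Done()
			for job := range p.jobs {
				job()
			}
		}()
	}
	return p
}

// TrySubmit enqueues job if there is room and reports whether it did, so the
// caller can degrade (for example, answer "server busy") under overload.
func (p *workerPool) TrySubmit(job func()) bool {
	select {
	case p.jobs <- job:
		return true
	default:
		return false
	}
}

// Close stops accepting jobs and waits for the queued ones to finish.
func (p *workerPool) Close() {
	close(p.jobs)
	p.wg.Wait()
}

func main() {
	p := newWorkerPool(1, 1)
	started, release := make(chan struct{}), make(chan struct{})
	fmt.Println(p.TrySubmit(func() { close(started); <-release })) // true: runs on the only worker
	<-started
	fmt.Println(p.TrySubmit(func() {})) // true: waits in the queue
	fmt.Println(p.TrySubmit(func() {})) // false: queue full, request shed
	close(release)
	p.Close()
}
```

The sizes (workers, queue length) are what turn "one goroutine per request" into a bounded resource; choosing them is a capacity-planning decision outside the scope of this sketch.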

References

[1] Concurrent programming 3: IO multiplexing technology (2)

[2] If this article can't explain the essence of epoll, come and strangle me!

[3] Scheduling In Go : Part II - Go Scheduler

[4] Full disclosure of the source code of go netpoller's native network model

[5] Go Language Design and Implementation, Section 6.6