1. Preparation

1.1 Basic Knowledge

Using the GlusterFS file system in Kubernetes usually involves these steps:

Create brick-->Create volume-->Create PV-->Create PVC-->Pod Mount PVC

To create multiple PVs this way, the steps must be repeated manually for each one. Heketi can manage GlusterFS to avoid this.

Heketi manages the life cycle of GlusterFS volumes and provides a RESTful API that Kubernetes can call. GlusterFS itself offers no such API, which is why Heketi is used. With Heketi, Kubernetes can provision GlusterFS volumes dynamically: Heketi decides where in the cluster to create the bricks a volume needs, so that the copies of the data are spread across different failure domains. Heketi also supports managing multiple GlusterFS clusters, which simplifies administration.

Heketi requires a bare disk on each GlusterFS node, on which it creates LVM physical volumes (PVs) and volume groups (VGs). With Heketi, Kubernetes uses PVs in the following steps:

Create StorageClass-->Create PVC-->Pod Mount PVC

This is called StorageClass-based dynamic provisioning.

Tip: In this experiment GlusterFS is deployed on Kubernetes, and Heketi, the GlusterFS management component, also runs on Kubernetes.

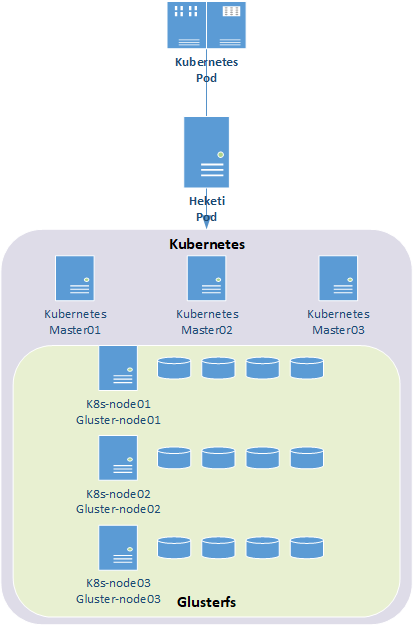

1.2 Architecture

Tip: Kubernetes deployment is not covered in this experiment; for Kubernetes deployment, see 001-019.

1.3 Related Planning

| Host | IP | Disk | Remarks |
| --- | --- | --- | --- |
| k8smaster01 | 172.24.8.71 | | Kubernetes master node |
| k8smaster02 | 172.24.8.72 | | Kubernetes master node |
| k8smaster03 | 172.24.8.73 | | Kubernetes master node |
| k8snode01 | 172.24.8.74 | sdb | Kubernetes node, glusterfs node |
| k8snode02 | 172.24.8.75 | sdb | Kubernetes node, glusterfs node |
| k8snode03 | 172.24.8.76 | sdb | Kubernetes node, glusterfs node |

Disk Planning

| | k8snode01 | k8snode02 | k8snode03 |
| --- | --- | --- | --- |
| PV | sdb1 | sdb1 | sdb1 |

1.4 Deployment Conditions

A hyper-converged deployment requires administrative access to an already deployed Kubernetes cluster. If the Kubernetes nodes meet the following requirements, GlusterFS can be deployed as a hyper-converged service:

- There must be at least three nodes for GlusterFS;

- Each node must have at least one bare disk device attached for heketi to use. These devices must not contain any data; heketi will format and partition them;

- Each node must open the following ports for GlusterFS communication:

- 2222: sshd port of the GlusterFS pod;

- 24007: GlusterFS daemon;

- 24008: GlusterFS management;

- 49152-49251: each brick of each volume on the host needs its own port. Every new brick takes the next port, starting at 49152. The recommended default range per host is 49152-49251; adjust it as needed.

- The following kernel modules must be loaded:

- dm_snapshot

- dm_mirror

- dm_thin_pool

- To check whether a kernel module is loaded, use lsmod | grep <name>; to load it, use modprobe <name>.

- The mount.glusterfs command must be available on each node. On all Red Hat-based operating systems it is provided by the glusterfs-fuse package.

Note: The GlusterFS client version installed on the nodes should be as close as possible to the server version. Check the installed version with glusterfs --version, or with kubectl exec <pod> -- glusterfs --version.
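If the firewall stays enabled instead of being turned off (see 1.5), the ports listed above must be opened on every glusterfs node. The function below is a minimal sketch assuming firewalld is the active firewall; the function name and the DRY_RUN switch are illustrative additions, not part of gluster-kubernetes.

```shell
# Open the GlusterFS ports from 1.4 with firewalld (sketch; assumes firewalld).
# Set DRY_RUN=1 to print the commands instead of executing them.
open_gluster_ports() {
  local run=""
  [ -n "${DRY_RUN:-}" ] && run="echo"
  local port
  # sshd of the GlusterFS pod, glusterd, management, and the brick port range.
  for port in 2222/tcp 24007/tcp 24008/tcp 49152-49251/tcp; do
    $run firewall-cmd --permanent --add-port="$port"
  done
  # Permanent rules only take effect after a reload.
  $run firewall-cmd --reload
}
```

Run `DRY_RUN=1 open_gluster_ports` first to review the commands, then `open_gluster_ports` as root on k8snode01, k8snode02 and k8snode03.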

1.5 Additional preparations

Configure NTP on all nodes.

Add the following host name resolution entries on all nodes:

    172.24.8.71 k8smaster01
    172.24.8.72 k8smaster02
    172.24.8.73 k8smaster03
    172.24.8.74 k8snode01
    172.24.8.75 k8snode02
    172.24.8.76 k8snode03
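Appending these lines by hand on six nodes is easy to get wrong when repeated. As a sketch, the hypothetical helper below appends only the entries that are not already present, so it can be re-run safely. It compares whole lines exactly, so an existing entry written with different spacing would be added again.

```shell
# Hypothetical helper: append the planned host entries to a hosts file,
# skipping lines that already exist. Defaults to /etc/hosts.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  while read -r entry; do
    [ -z "$entry" ] && continue
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards).
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || printf '%s\n' "$entry" >> "$hosts_file"
  done <<'EOF'
172.24.8.71 k8smaster01
172.24.8.72 k8smaster02
172.24.8.73 k8smaster03
172.24.8.74 k8snode01
172.24.8.75 k8snode02
172.24.8.76 k8snode03
EOF
}
```

Run `add_cluster_hosts` as root on each node, or `add_cluster_hosts /tmp/hosts.test` to try it on a scratch file first.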

Note: Unless they are needed, it is recommended to turn off the firewall and SELinux.

2. Bare Disk Preparation

2.1 Confirm the Disk

    [root@k8snode01 ~]# fdisk -l /dev/sdb    #Check whether sdb is a bare disk

3. Install glusterfs-fuse

3.1 Install the Corresponding RPM Repository

    [root@k8snode01 ~]# yum -y install centos-release-gluster
    [root@k8snode01 ~]# yum -y install glusterfs-fuse    #Install glusterfs-fuse

Tip: Perform the same steps on k8snode01, k8snode02 and k8snode03 to install the glusterfs-fuse component required in 1.4.

After the repository package is installed, the file CentOS-Storage-common.repo is added to the /etc/yum.repos.d/ directory, for example:

    # CentOS-Storage.repo
    #
    # Please see http://wiki.centos.org/SpecialInterestGroup/Storage for more
    # information

    [centos-storage-debuginfo]
    name=CentOS-$releasever - Storage SIG - debuginfo
    baseurl=http://debuginfo.centos.org/$contentdir/$releasever/storage/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-Storage

3.2 Load the Required Modules

    [root@k8snode01 ~]# cat > /etc/sysconfig/modules/glusterfs.modules <<EOF
    #!/bin/bash

    for kernel_module in dm_snapshot dm_mirror dm_thin_pool;do
        /sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1
        if [ \$? -eq 0 ]; then
            /sbin/modprobe \${kernel_module}
        fi
    done;
    EOF
    [root@k8snode01 ~]# chmod +x /etc/sysconfig/modules/glusterfs.modules
    [root@k8snode01 ~]# bash /etc/sysconfig/modules/glusterfs.modules    #Load the modules now
    [root@k8snode01 ~]# lsmod | egrep "dm_snapshot|dm_mirror|dm_thin_pool"    #Check on all glusterfs nodes

Tip: A single module can also be loaded with modprobe <name>.
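To run the same check on every node without reading the lsmod output by eye, the hypothetical helper below reads lsmod output on stdin and prints whichever of the three required modules is missing.

```shell
# Hypothetical helper: print the heketi-required kernel modules that are NOT
# loaded, given `lsmod` output on stdin (the first line is lsmod's header).
missing_gluster_modules() {
  local loaded mod
  loaded="$(awk 'NR > 1 { print $1 }')"
  for mod in dm_snapshot dm_mirror dm_thin_pool; do
    printf '%s\n' "$loaded" | grep -qx "$mod" || printf '%s\n' "$mod"
  done
}
```

`lsmod | missing_gluster_modules` prints nothing when all three modules are loaded.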

4. Deploying GlusterFS on Kubernetes

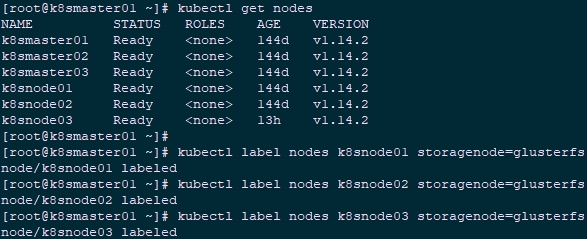

4.1 Label the Nodes

    [root@k8smaster01 ~]# kubectl label nodes k8snode01 storagenode=glusterfs
    [root@k8smaster01 ~]# kubectl label nodes k8snode02 storagenode=glusterfs
    [root@k8smaster01 ~]# kubectl label nodes k8snode03 storagenode=glusterfs

Tip: When the DaemonSet is deployed later, kube-templates/glusterfs-daemonset.yaml selects nodes by this label:

    spec:
      nodeSelector:
        storagenode: glusterfs
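The three label commands can also be written as one loop. In this sketch (the function name and DRY_RUN switch are illustrative), `--overwrite` lets the command be re-run safely when a node already carries the label.

```shell
# Label each node given as an argument with storagenode=glusterfs.
# Set DRY_RUN=1 to print the kubectl commands instead of running them.
label_gluster_nodes() {
  local run=""
  [ -n "${DRY_RUN:-}" ] && run="echo"
  local node
  for node in "$@"; do
    $run kubectl label nodes "$node" storagenode=glusterfs --overwrite
  done
}
```

Usage: `label_gluster_nodes k8snode01 k8snode02 k8snode03`.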

4.2 Download gluster-kubernetes

    [root@k8smaster01 ~]# yum -y install git
    [root@k8smaster01 ~]# git clone https://github.com/gluster/gluster-kubernetes.git

4.3 Modify the glusterfs Topology

    [root@k8smaster01 ~]# cd gluster-kubernetes/deploy/
    [root@k8smaster01 deploy]# cp topology.json.sample topology.json
    [root@k8smaster01 deploy]# vi topology.json

    {
      "clusters": [
        {
          "nodes": [
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8snode01"
                  ],
                  "storage": [
                    "172.24.8.74"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8snode02"
                  ],
                  "storage": [
                    "172.24.8.75"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "k8snode03"
                  ],
                  "storage": [
                    "172.24.8.76"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdb"
              ]
            }
          ]
        }
      ]
    }
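For larger clusters, writing topology.json by hand is tedious. The hypothetical generator below prints a file with the same structure as the one above from hostname:ip pairs. It is a sketch that uses one device and one zone for every node; per-node devices or zones are not covered.

```shell
# Hypothetical generator for topology.json (same structure as the file above).
# Usage: gen_topology <device> <zone> <hostname:storage-ip>...
gen_topology() {
  local device="$1" zone="$2"
  shift 2
  local first=1 pair host ip
  printf '{\n  "clusters": [\n    {\n      "nodes": [\n'
  for pair in "$@"; do
    host="${pair%%:*}"   # text before the first ":" -> manage hostname
    ip="${pair#*:}"      # text after the first ":"  -> storage IP
    # Put a comma between node objects, but not before the first one.
    if [ "$first" -eq 0 ]; then printf ',\n'; fi
    first=0
    printf '        {\n'
    printf '          "node": {\n'
    printf '            "hostnames": {\n'
    printf '              "manage": ["%s"],\n' "$host"
    printf '              "storage": ["%s"]\n' "$ip"
    printf '            },\n'
    printf '            "zone": %s\n' "$zone"
    printf '          },\n'
    printf '          "devices": ["%s"]\n' "$device"
    printf '        }'
  done
  printf '\n      ]\n    }\n  ]\n}\n'
}
```

Usage: `gen_topology /dev/sdb 1 k8snode01:172.24.8.74 k8snode02:172.24.8.75 k8snode03:172.24.8.76 > topology.json`.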

Tip: For the heketi configuration file and its description, see 009. Kubernetes Persistent Storage: Standalone GlusterFS Deployment.

To change how heketi is exposed, for example as a NodePort service, refer to https://lichi6174.github.io/glusterfs-heketi/.

All deployment-related YAML files are located in /root/gluster-kubernetes/deploy/kube-templates; this experiment uses the default parameters.

4.4 Configure heketi

    [root@k8smaster01 deploy]# cp heketi.json.template heketi.json
    [root@k8smaster01 deploy]# vi heketi.json
    {
      "_port_comment": "Heketi Server Port Number",
      "port" : "8080",

      "_use_auth": "Enable JWT authorization. Please enable for deployment",
      "use_auth" : true,

      "_jwt" : "Private keys for access",
      "jwt" : {
        "_admin" : "Admin has access to all APIs",
        "admin" : {
          "key" : "admin123"
        },
        "_user" : "User only has access to /volumes endpoint",
        "user" : {
          "key" : "xianghy"
        }
      },

      "_glusterfs_comment": "GlusterFS Configuration",
      "glusterfs" : {

        "_executor_comment": "Execute plugin. Possible choices: mock, kubernetes, ssh",
        "executor" : "${HEKETI_EXECUTOR}",

        "_db_comment": "Database file name",
        "db" : "/var/lib/heketi/heketi.db",

        "kubeexec" : {
          "rebalance_on_expansion": true
        },

        "sshexec" : {
          "rebalance_on_expansion": true,
          "keyfile" : "/etc/heketi/private_key",
          "port" : "${SSH_PORT}",
          "user" : "${SSH_USER}",
          "sudo" : ${SSH_SUDO}
        }
      },

      "backup_db_to_kube_secret": false
    }

The relevant settings (JSON does not allow comments, so keep these notes out of the file itself):

- "use_auth": true turns on user authentication;

- "admin" > "key": the administrator password (admin123);

- "user" > "key": the user password (xianghy);

- "executor": the ${HEKETI_EXECUTOR} placeholder; this experiment uses the kubernetes executor;

- "db": where heketi stores its data.

4.5 Corrections

In newer Kubernetes versions, kubectl get pod no longer has the --show-all option, so the gk-deploy deployment script must be corrected. Around lines 924-925 of gk-deploy, comment out the original line and add the same command without --show-all:

    [root@k8smaster01 deploy]# vi gk-deploy
    #heketi_pod=$(${CLI} get pod --no-headers --show-all --selector="heketi" | awk '{print $1}')
    heketi_pod=$(${CLI} get pod --no-headers --selector="heketi" | awk '{print $1}')
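The same fix can be applied non-interactively. This sketch removes the --show-all flag in place instead of keeping a commented-out copy of the line; `sed -i.bak` keeps the original file as gk-deploy.bak. The function name is illustrative.

```shell
# Remove the --show-all flag from gk-deploy (or the script given as $1).
fix_show_all() {
  local script="${1:-gk-deploy}"
  # Show the affected lines with their line numbers; nothing to do if none.
  grep -n -- '--show-all' "$script" || return 0
  # Edit in place, keeping a backup copy with a .bak suffix.
  sed -i.bak 's/ --show-all//g' "$script"
}
```

Usage: run `fix_show_all` from the deploy/ directory.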

Because the glusterfs images may fail to pull from within mainland China, it is recommended to pull them in advance through a VPN and then upload them to all glusterfs nodes.

    [root@VPN ~]# docker pull gluster/gluster-centos:latest
    [root@VPN ~]# docker pull heketi/heketi:dev
    [root@VPN ~]# docker save -o gluster_latest.tar gluster/gluster-centos:latest
    [root@VPN ~]# docker save -o heketi_dev.tar heketi/heketi:dev
    #Copy both .tar files to every glusterfs node, then load them on each node:
    [root@k8snode01 ~]# docker load -i gluster_latest.tar
    [root@k8snode01 ~]# docker load -i heketi_dev.tar
    [root@k8snode01 ~]# docker images
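The copy-and-load step can be scripted from the VPN host. This sketch assumes password-less SSH as root from the VPN host to each node and copies the tarballs to /root/; both are assumptions, not part of the original setup, and the function name is illustrative.

```shell
# Copy the saved image tarballs to each node given as an argument and load
# them there. Set DRY_RUN=1 to print the scp/ssh commands instead.
distribute_images() {
  local run=""
  [ -n "${DRY_RUN:-}" ] && run="echo"
  local node tar
  for node in "$@"; do
    for tar in gluster_latest.tar heketi_dev.tar; do
      $run scp "$tar" "root@${node}:/root/"
      $run ssh "root@${node}" docker load -i "/root/${tar}"
    done
  done
}
```

Usage: `distribute_images k8snode01 k8snode02 k8snode03`.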

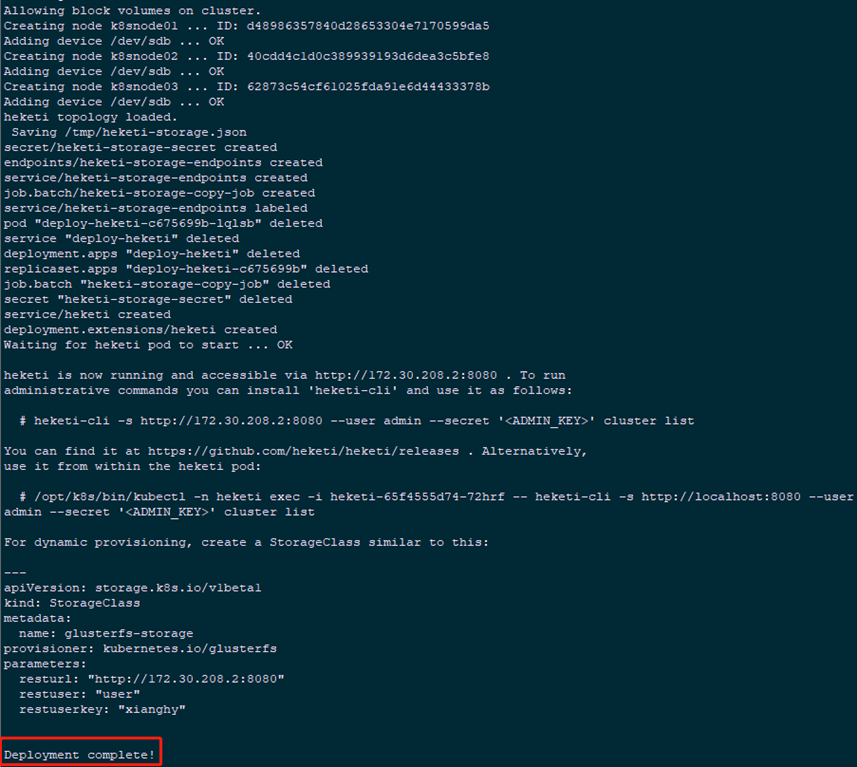

4.6 Deploy

    [root@k8smaster01 deploy]# ./gk-deploy -h    #View deployment parameters
    [root@k8smaster01 deploy]# kubectl create ns heketi    #Deploying into a separate namespace is recommended
    [root@k8smaster01 deploy]# ./gk-deploy -g -n heketi topology.json --admin-key admin123 --user-key xianghy
    ......
    Do you wish to proceed with deployment?

    [Y]es, [N]o? [Default: Y]: y

Tip: For more deployment script parameters, see: https://github.com/gluster/gluster-kubernetes/blob/master/deploy/gk-deploy.

Note: If the deployment fails, remove it completely before redeploying:

    [root@k8smaster01 deploy]# ./gk-deploy --abort --admin-key admin123 --user-key xianghy -y -n heketi
    [root@k8smaster01 deploy]# kubectl delete -f kube-templates/ -n heketi

The glusterfs nodes also need to be cleaned up thoroughly:

    [root@k8snode01 ~]# dmsetup ls
    [root@k8snode01 ~]# dmsetup remove_all
    [root@k8snode01 ~]# rm -rf /var/log/glusterfs/
    [root@k8snode01 ~]# rm -rf /var/lib/heketi
    [root@k8snode01 ~]# rm -rf /var/lib/glusterd/
    [root@k8snode01 ~]# rm -rf /etc/glusterfs/
    [root@k8snode01 ~]# dd if=/dev/zero of=/dev/sdb bs=512k count=1
    [root@k8snode01 ~]# wipefs -af /dev/sdb
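Before redeploying, it can help to confirm that /dev/sdb really is bare again. The hypothetical helper below reads the output of `lsblk -rn -o NAME,TYPE,FSTYPE /dev/sdb` and succeeds only when the disk has no partitions, no child devices (such as heketi's LVM volumes) and no filesystem or LVM signature. It only sees what lsblk reports, so `wipefs /dev/sdb` (which just lists signatures when run without -a) remains a useful second check.

```shell
# Succeed (exit 0) only if the lsblk output on stdin describes a single disk
# with no children and no filesystem/LVM signature.
disk_is_bare() {
  awk '
    { lines++ }
    # Anything other than the disk itself, or any signature on it, is dirty.
    $2 != "disk" || $3 != "" { dirty = 1 }
    END {
      if (lines == 1 && !dirty) exit 0
      exit 1
    }
  '
}
```

Usage: `lsblk -rn -o NAME,TYPE,FSTYPE /dev/sdb | disk_is_bare && echo "sdb is bare"`.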

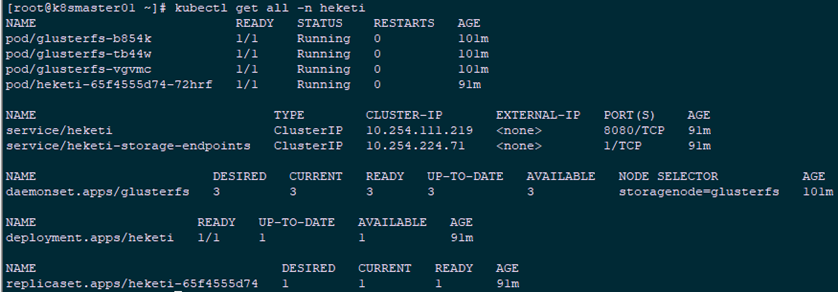

4.7 Verify in the Kubernetes Cluster

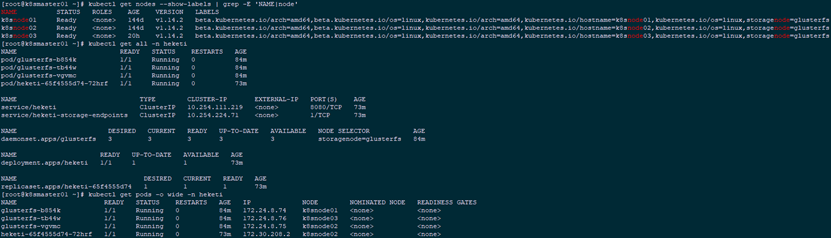

    [root@k8smaster01 ~]# kubectl get nodes --show-labels | grep -E 'NAME|node'
    [root@k8smaster01 ~]# kubectl get all -n heketi

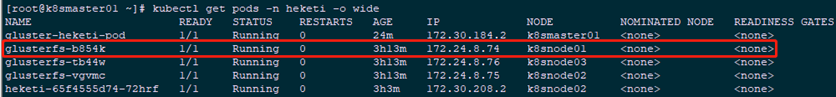

    [root@k8smaster01 ~]# kubectl get pods -o wide -n heketi

4.8 Verify the Gluster Cluster

    [root@k8smaster01 ~]# kubectl exec -it heketi-65f4555d74-72hrf -n heketi -- heketi-cli cluster list --user admin --secret admin123    #List clusters
    [root@k8smaster01 ~]# kubectl -n heketi exec -ti heketi-65f4555d74-72hrf -- /bin/bash    #Or enter the heketi container and run it there
    [root@heketi-65f4555d74-72hrf /]# heketi-cli cluster list --user admin --secret admin123
    [root@k8smaster01 ~]# curl http://10.254.111.219:8080/hello
    Hello from Heketi

Note: Deployment can be done in one step with the script from 4.6, or step by step with the files in the gluster-kubernetes/deploy/ directory. The step-by-step approach is:

- Deploy the glusterfs DaemonSet with glusterfs-daemonset.json;

- Label the nodes;

- Create Heketi's service account with heketi-service-account.json;

- Grant permissions to the service account created for Heketi;

- Create the secret;

- Forward local port 8080 to deploy-heketi.

For the full step-by-step (standalone) deployment process, see: https://jimmysong.io/kubernetes-handbook/practice/using-heketi-gluster-for-persistent-storage.html.

5. Install heketi-cli

Managing heketi from the master node otherwise means entering the heketi container or using kubectl exec -ti, so it is recommended to install the heketi client directly on the master node for direct management.

5.1 Install the heketi Client

    [root@k8smaster01 ~]# yum -y install centos-release-gluster
    [root@k8smaster01 ~]# yum -y install heketi-client

5.2 Configuring heketi

    [root@k8smaster01 ~]# echo "export HEKETI_CLI_SERVER=http://$(kubectl get svc heketi -n heketi -o go-template='{{.spec.clusterIP}}'):8080" >> /etc/profile.d/heketi.sh
    [root@k8smaster01 ~]# echo "alias heketi-cli='heketi-cli --user admin --secret admin123'" >> ~/.bashrc
    [root@k8smaster01 ~]# source /etc/profile.d/heketi.sh
    [root@k8smaster01 ~]# source ~/.bashrc
    [root@k8smaster01 ~]# echo $HEKETI_CLI_SERVER
    http://heketi:8080
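As an alternative to the alias, heketi-cli can also read the user and key from the HEKETI_CLI_USER and HEKETI_CLI_KEY environment variables (check `heketi-cli --help` on the installed version). This sketch writes all three variables to a file readable only by its owner; the default path ~/.heketi_env and the function name are illustrative.

```shell
# Hypothetical helper: store heketi-cli connection settings as environment
# variables (HEKETI_CLI_SERVER / HEKETI_CLI_USER / HEKETI_CLI_KEY).
# Usage: write_heketi_env <server-url> <user> <key> [file]
write_heketi_env() {
  local server="$1" user="$2" key="$3" file="${4:-$HOME/.heketi_env}"
  # Values are double-quoted; keys containing " $ ` or \ would need escaping.
  cat > "$file" <<EOF
export HEKETI_CLI_SERVER="${server}"
export HEKETI_CLI_USER="${user}"
export HEKETI_CLI_KEY="${key}"
EOF
  # The file contains the admin key, so keep it readable by its owner only.
  chmod 600 "$file"
}
```

For example, call it with the heketi service URL built from the clusterIP as above, `admin` and `admin123`, then add `source ~/.heketi_env` to ~/.bashrc.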

5.3 Cluster Management

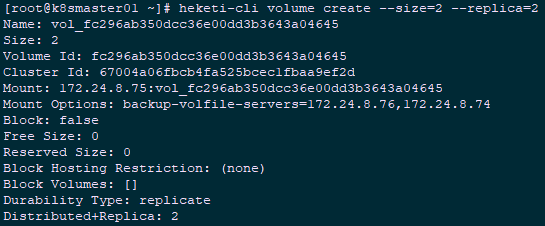

    [root@k8smaster01 ~]# heketi-cli cluster list
    Clusters:
    Id:67004a06fbcb4fa525bcec1fbaa9ef2d [file][block]
    [root@k8smaster01 ~]# heketi-cli cluster info 67004a06fbcb4fa525bcec1fbaa9ef2d    #Cluster details
    Cluster id: 67004a06fbcb4fa525bcec1fbaa9ef2d
    Nodes:
    40cdd4c1d0c389939193d6dea3c5bfe8
    62873c54cf61025fda91e6d44433378b
    d48986357840d28653304e7170599da5
    Volumes:
    5f15f201d623e56b66af56313a1975e7
    Block: true

    File: true
    [root@k8smaster01 ~]# heketi-cli topology info 67004a06fbcb4fa525bcec1fbaa9ef2d    #View topology information
    [root@k8smaster01 ~]# heketi-cli node list    #View all nodes
    Id:40cdd4c1d0c389939193d6dea3c5bfe8 Cluster:67004a06fbcb4fa525bcec1fbaa9ef2d
    Id:62873c54cf61025fda91e6d44433378b Cluster:67004a06fbcb4fa525bcec1fbaa9ef2d
    Id:d48986357840d28653304e7170599da5 Cluster:67004a06fbcb4fa525bcec1fbaa9ef2d
    [root@k8smaster01 ~]# heketi-cli node info 40cdd4c1d0c389939193d6dea3c5bfe8    #Node information
    [root@k8smaster01 ~]# heketi-cli volume create --size=2 --replica=2    #Create a 2 GiB volume with 2 replicas (the default is 3)

    [root@k8smaster01 ~]# heketi-cli volume list    #List all volumes
    [root@k8smaster01 ~]# heketi-cli volume info fc296ab350dcc36e00dd3b3643a04645    #Volume information
    [root@k8smaster01 ~]# heketi-cli volume delete fc296ab350dcc36e00dd3b3643a04645    #Delete the volume
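The IDs printed by `heketi-cli node list` can be fed straight into the info commands. The hypothetical helper below extracts the IDs from lines that start with `Id:`, as in the node list output above; it assumes `heketi-cli volume list` uses the same prefix, which is worth checking on your version.

```shell
# Print the ID from every line of the form "Id:<hex-id> ..." on stdin.
heketi_ids() {
  sed -n 's/^Id:\([0-9a-f]*\).*/\1/p'
}
```

Usage: `for id in $(heketi-cli node list | heketi_ids); do heketi-cli node info "$id"; done`.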

6. Dynamically Mounting GlusterFS in Kubernetes

6.1 StorageClass Dynamic Storage

Kubernetes provides shared storage in two modes:

Static mode: the cluster administrator creates PVs manually and sets the back-end storage characteristics when defining each PV;

Dynamic mode: the cluster administrator does not create PVs manually but describes the back-end storage with a StorageClass, marking it as a "class". A PVC specifies the class of storage it needs, and the system automatically creates a PV and binds it to the PVC. A PVC can declare its class as "" (an empty string) to disable dynamic provisioning for that PVC.

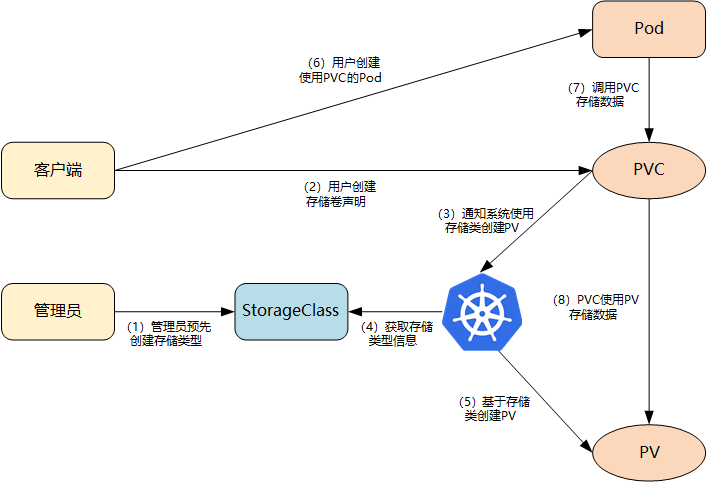

The overall flow of StorageClass-based dynamic provisioning is as follows:

- The cluster administrator creates storage classes (StorageClasses) in advance;

- A user creates a persistent volume claim (PVC: PersistentVolumeClaim) that uses a storage class;

- The PVC tells the system that it needs a persistent volume (PV: PersistentVolume);

- The system reads the storage class information;

- Based on that information, the system automatically creates the PV the PVC needs in the background;

- The user creates a Pod that uses the PVC;

- The application in the Pod uses the PVC for data persistence;

- The PVC uses the PV for the final data persistence.

Tip: For Kubernetes deployment, see Appendix 003. Kubeadm Deployment of Kubernetes.

6.2 Define StorageClass

Parameter descriptions:

- provisioner: the storage provisioner, which depends on the back-end storage;

- reclaimPolicy: defaults to "Delete", meaning that when the PVC is deleted, the corresponding PV and the back-end volume and bricks (LVM) are deleted with it. With "Retain", the data is kept and must be removed manually if it is no longer needed;

- resturl: URL of the heketi REST API service;

- restauthenabled: optional, defaults to "false"; must be set to "true" when heketi has authentication enabled;

- restuser: optional; the user name used when authentication is enabled;

- secretNamespace: optional; when authentication is enabled, the namespace of the secret that stores the heketi password;

- secretName: optional; when authentication is enabled, the name of the secret resource in which the heketi password is stored;

- clusterid: optional; a cluster ID, or a list of cluster IDs in the format "id1,id2";

- volumetype: optional; the volume type and its parameters. If it is not set, the provisioner decides the volume type. For example, "volumetype: replicate:3" is a replicated volume with three copies; "volumetype: disperse:4:2" is a dispersed volume where 4 is the number of data bricks and 2 the redundancy count; "volumetype: none" is a distributed volume.

Tip: For the different GlusterFS volume types, see 004. RHGS - Creating Volumes.
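The volumetype choice determines how much raw disk a claim consumes. As a rough worked example following the definitions above: replicate:3 stores three full copies, so a 1Gi claim uses about 3 GiB of bricks; disperse:4:2 stores 4 data and 2 redundancy fragments, so it uses (4+2)/4 = 1.5 times the claim size. The hypothetical helper below does this arithmetic. It ignores LVM and thin-pool overhead, so treat the result as a lower bound.

```shell
# Estimate raw brick capacity (GiB) consumed by a volume of <size> GiB.
# Usage: raw_capacity_gib <size> <volumetype>
raw_capacity_gib() {
  local size="$1" vtype="$2"
  case "$vtype" in
    replicate:*)
      # N full copies of the data.
      echo $(( size * ${vtype#replicate:} ))
      ;;
    disperse:*:*)
      # D data fragments plus R redundancy fragments, each 1/D of the data.
      local rest="${vtype#disperse:}"
      local data="${rest%%:*}" redundancy="${rest#*:}"
      echo $(( size * (data + redundancy) / data ))
      ;;
    none)
      # Distributed volume: a single copy.
      echo "$size"
      ;;
    *)
      echo "unsupported volumetype: $vtype" >&2
      return 1
      ;;
  esac
}
```

For example, `raw_capacity_gib 8 disperse:4:2` gives 12 (integer arithmetic rounds down).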

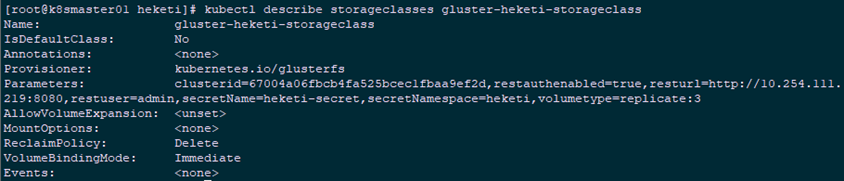

    [root@k8smaster01 ~]# echo -n "admin123" | base64    #Base64-encode the password
    YWRtaW4xMjM=
    [root@k8smaster01 ~]# mkdir -p heketi
    [root@k8smaster01 ~]# cd heketi/
    [root@k8smaster01 heketi]# vi heketi-secret.yaml    #Create a secret to store the password
    apiVersion: v1
    kind: Secret
    metadata:
      name: heketi-secret
      namespace: heketi
    data:
      # base64 encoded password. E.g.: echo -n "mypassword" | base64
      key: YWRtaW4xMjM=
    type: kubernetes.io/glusterfs
    [root@k8smaster01 heketi]# kubectl create -f heketi-secret.yaml    #Create the secret
    [root@k8smaster01 heketi]# kubectl get secrets -n heketi
    NAME                                 TYPE                                  DATA   AGE
    default-token-6n746                  kubernetes.io/service-account-token   3      144m
    heketi-config-secret                 Opaque                                3      142m
    heketi-secret                        kubernetes.io/glusterfs               1      3m1s
    heketi-service-account-token-ljlkb   kubernetes.io/service-account-token   3      143m
    [root@k8smaster01 heketi]# vim gluster-heketi-storageclass.yaml    #Create the StorageClass
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: gluster-heketi-storageclass
    parameters:
      resturl: "http://10.254.111.219:8080"
      clusterid: "67004a06fbcb4fa525bcec1fbaa9ef2d"
      restauthenabled: "true"    #Must be "true" here if heketi has authentication enabled
      restuser: "admin"
      secretName: "heketi-secret"    #Must match the name/namespace of the secret resource
      secretNamespace: "heketi"
      volumetype: "replicate:3"
    provisioner: kubernetes.io/glusterfs
    reclaimPolicy: Delete
    [root@k8smaster01 heketi]# kubectl create -f gluster-heketi-storageclass.yaml
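The encoding step can be checked before the secret is created. This sketch uses printf instead of echo -n to avoid a trailing newline, and strips the line wrapping that GNU base64 adds to long output; the function name is illustrative.

```shell
# Base64-encode a heketi key for the Secret's data.key field, on one line.
encode_heketi_key() {
  printf '%s' "$1" | base64 | tr -d '\n'
}
```

`encode_heketi_key admin123` prints YWRtaW4xMjM=, the value used above. After the secret exists, `kubectl get secret heketi-secret -n heketi -o jsonpath='{.data.key}' | base64 -d` should print the original password.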

Note: A StorageClass cannot be modified after it is created; to change it, delete it and create it again.

    [root@k8smaster01 heketi]# kubectl get storageclasses    #Confirm
    NAME                          PROVISIONER               AGE
    gluster-heketi-storageclass   kubernetes.io/glusterfs   85s
    [root@k8smaster01 heketi]# kubectl describe storageclasses gluster-heketi-storageclass

6.3 Define a PVC

    [root@k8smaster01 heketi]# vi gluster-heketi-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: gluster-heketi-pvc
      annotations:
        volume.beta.kubernetes.io/storage-class: gluster-heketi-storageclass
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

Note: accessModes values have the following abbreviations:

- ReadWriteOnce (RWO): read-write, can be mounted by a single node only;

- ReadOnlyMany (ROX): read-only, can be mounted by multiple nodes;

- ReadWriteMany (RWX): read-write, can be mounted by multiple nodes.
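The PVC above selects its class through the volume.beta.kubernetes.io/storage-class annotation. On newer Kubernetes versions the same request is usually written with spec.storageClassName. The hypothetical helper below prints such a PVC, with defaults matching gluster-heketi-pvc.yaml.

```shell
# Print a PVC manifest that uses spec.storageClassName.
# Usage: write_pvc [name] [storage-class] [size]
write_pvc() {
  local name="${1:-gluster-heketi-pvc}"
  local class="${2:-gluster-heketi-storageclass}"
  local size="${3:-1Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: ${class}
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}
```

Usage: `write_pvc | kubectl create -f - -n heketi`.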

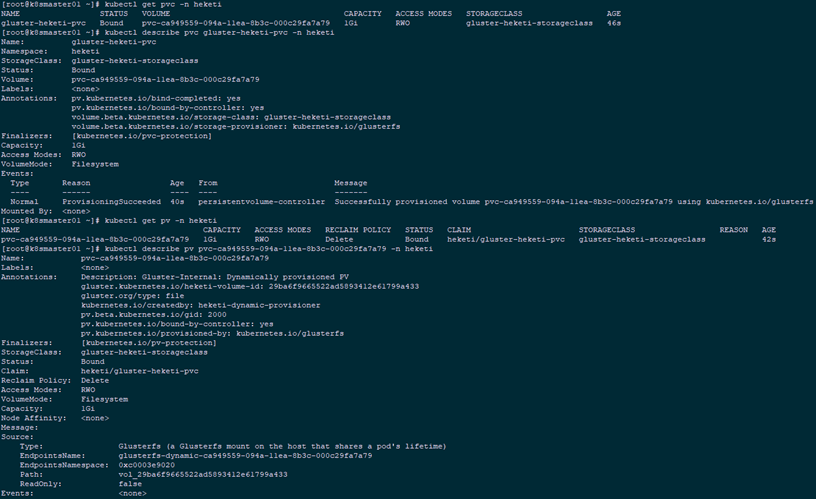

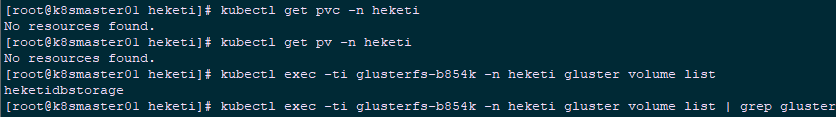

    [root@k8smaster01 heketi]# kubectl create -f gluster-heketi-pvc.yaml -n heketi
    [root@k8smaster01 heketi]# kubectl get pvc -n heketi
    [root@k8smaster01 heketi]# kubectl describe pvc gluster-heketi-pvc -n heketi
    [root@k8smaster01 heketi]# kubectl get pv -n heketi
    [root@k8smaster01 heketi]# kubectl describe pv pvc-ca949559-094a-11ea-8b3c-000c29fa7a79 -n heketi

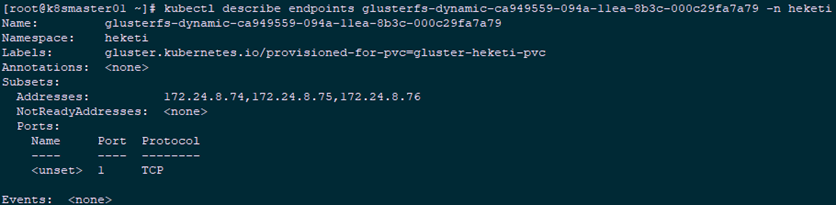

    [root@k8smaster01 heketi]# kubectl describe endpoints glusterfs-dynamic-ca949559-094a-11ea-8b3c-000c29fa7a79 -n heketi

Tip: The output above shows that the PVC is Bound with a capacity of 1Gi. Besides the capacity, the PV details show the referenced StorageClass, the status, the reclaim policy and so on, as well as the GlusterFS endpoints and path. The endpoints name has a fixed format, glusterfs-dynamic-<suffix>, where in this example the suffix is the PV name without its pvc- prefix; the endpoints resource specifies the addresses at which the storage is mounted.
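Given the naming seen above (PV pvc-ca949559-... and endpoints glusterfs-dynamic-ca949559-...), the endpoints name can be derived from the PV name. This is a sketch based only on that observed pattern; other Kubernetes versions may name the endpoints differently.

```shell
# Derive the glusterfs endpoints name from a dynamically provisioned PV name
# by stripping the "pvc-" prefix (pattern observed in this experiment).
gluster_ep_name() {
  printf 'glusterfs-dynamic-%s\n' "${1#pvc-}"
}
```

Usage: `kubectl describe endpoints "$(gluster_ep_name pvc-ca949559-094a-11ea-8b3c-000c29fa7a79)" -n heketi`.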

6.4 Check the Created Volume

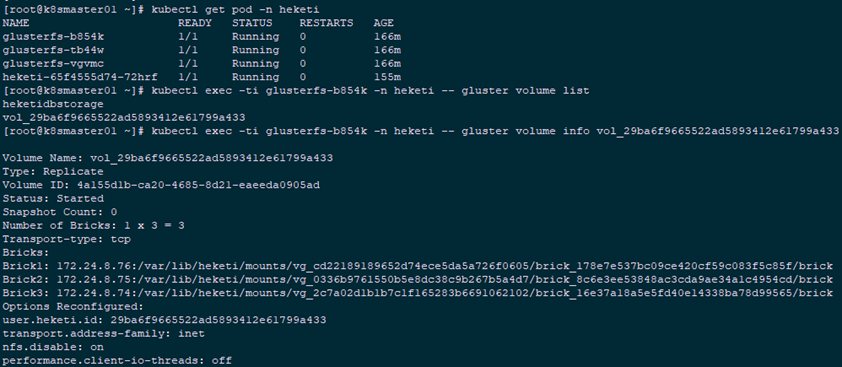

Viewing the created resources with the commands from 5.3 shows:

- The volume and bricks have been created;

- The primary mount point (used for communication) is the 172.24.8.41 node, and the other two nodes are backups;

- With three replicas, every node has a brick.

    [root@k8smaster01 ~]# kubectl get pod -n heketi
    [root@k8smaster01 ~]# kubectl exec -ti glusterfs-b854k -n heketi -- lsblk    #View on the glusterfs node
    [root@k8smaster01 ~]# kubectl exec -ti glusterfs-b854k -n heketi -- df -hT    #View on the glusterfs node
    [root@k8smaster01 ~]# kubectl exec -ti glusterfs-b854k -n heketi -- gluster volume list
    [root@k8smaster01 ~]# kubectl exec -ti glusterfs-b854k -n heketi -- gluster volume info vol_29ba6f9665522ad5893412e61799a433    #View on the glusterfs node

6.5 Pod Mount Test

    [root@xxx ~]# yum -y install centos-release-gluster
    [root@xxx ~]# yum -y install glusterfs-fuse    #Install glusterfs-fuse

Tip: In this environment the master nodes can also run pods, so glusterfs-fuse must be installed on all masters as well for mounting to work, and its version must match the glusterfs nodes.

    [root@k8smaster01 heketi]# vi gluster-heketi-pod.yaml
    kind: Pod
    apiVersion: v1
    metadata:
      name: gluster-heketi-pod
    spec:
      containers:
      - name: gluster-heketi-container
        image: busybox
        command:
        - sleep
        - "3600"
        volumeMounts:
        - name: gluster-heketi-volume    #Must match the name under volumes
          mountPath: "/pv-data"
          readOnly: false
      volumes:
      - name: gluster-heketi-volume
        persistentVolumeClaim:
          claimName: gluster-heketi-pvc    #Must match the name of the PVC created in 6.3
    [root@k8smaster01 heketi]# kubectl create -f gluster-heketi-pod.yaml -n heketi    #Create the Pod

6.6 Verify the Mount

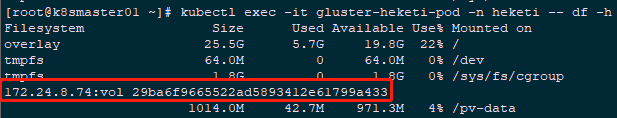

    [root@k8smaster01 ~]# kubectl get pod -n heketi | grep gluster-heketi
    gluster-heketi-pod     1/1     Running   0     4m58s
    [root@k8smaster01 ~]# kubectl exec -it gluster-heketi-pod -n heketi -- /bin/sh    #Enter the Pod to write test files
    / # cd /pv-data/
    /pv-data # echo "This is a file!" >> a.txt
    /pv-data # echo "This is b file!" >> b.txt
    /pv-data # ls
    a.txt  b.txt
    [root@k8smaster01 ~]# kubectl exec -it gluster-heketi-pod -n heketi -- df -h    #View the mounted glusterfs volume

    [root@k8smaster01 ~]# kubectl get pods -n heketi -o wide    #Find the corresponding glusterfs node
    [root@k8smaster01 ~]# kubectl exec -ti glusterfs-b854k -n heketi -- cat /var/lib/heketi/mounts/vg_2c7a02d1b1b7c1f165283b6691062102/brick_16e37a18a5e5fd40e14338ba78d99565/brick/a.txt
    This is a file!

Tip: Write test files through the Pod, then check on the glusterfs node that they exist.

6.7 Delete Resources

    [root@k8smaster01 ~]# cd heketi/
    [root@k8smaster01 heketi]# kubectl delete -f gluster-heketi-pod.yaml -n heketi
    [root@k8smaster01 heketi]# kubectl delete -f gluster-heketi-pvc.yaml -n heketi
    [root@k8smaster01 heketi]# kubectl get pvc -n heketi
    [root@k8smaster01 heketi]# kubectl get pv -n heketi
    [root@k8smaster01 heketi]# kubectl exec -ti glusterfs-b854k -n heketi -- gluster volume list | grep gluster

References:

- https://www.linuxba.com/archives/8152

- https://www.cnblogs.com/blackmood/p/11389811.html