Introduction to file system (FS)

File system composition

① File system interface

② Software that manages objects

③ Objects and their attributes

Role of the file system

From the system's point of view, a file system organizes and allocates the space on a storage device, stores files, and protects and retrieves the stored files.

Specifically, it creates files for users; stores, reads, modifies, and dumps them; and controls access to them.

Mounting and using a file system

Any file system other than the root file system can be accessed only after it is mounted on a mount point. A mount point is a directory associated with the partition's device file.

Analogy: NFS

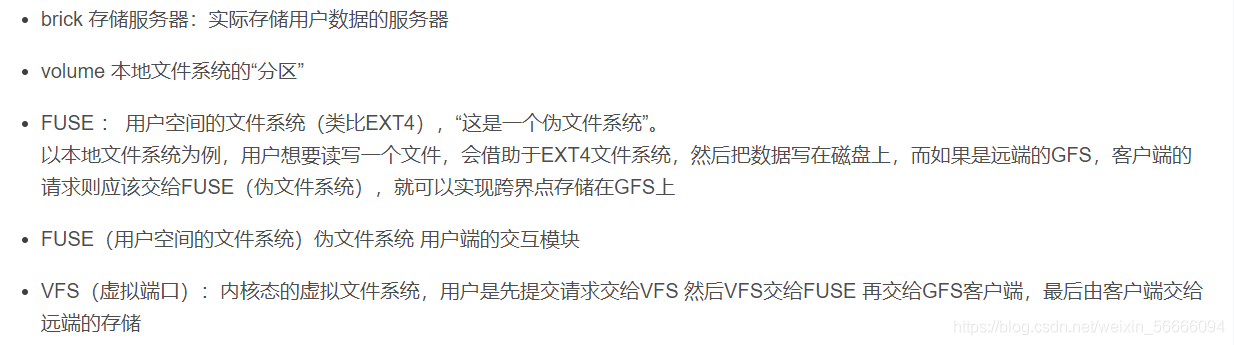

GlusterFS (GFS) consists of three components:

① Storage server

② Client

③ NFS/Samba storage gateway

The client's role is especially important, because GlusterFS has no metadata server.

PS: A metadata server stores metadata and helps users locate files (their location, index, and other information).

In a file system that relies on a metadata server, damaged metadata makes the whole file system unavailable: the metadata server is a single point of failure.

GlusterFS composition

GlusterFS modular stacked architecture

Workflow

The client issues a read or write request locally, and the VFS API accepts it. The request is then handed to FUSE (a kernel pseudo file system). FUSE lets a user-space program act as a file system, so it can forward the request through the device file /dev/fuse (a virtual device used for this transfer) to the GlusterFS client. The client processes the data according to its configuration file and sends it over a TCP, InfiniBand (IB), or RDMA network to the GlusterFS server, which writes it to the server's storage device.

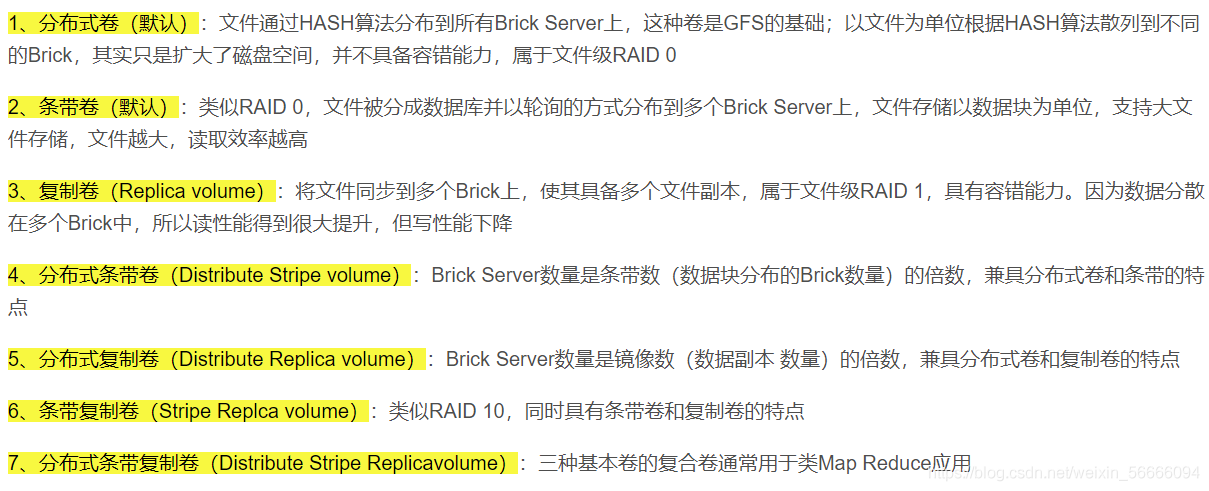

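Seven volume types supported by GFS

① Distributed volume

② Striped volume

③ Replicated volume

④ Distributed striped volume

⑤ Distributed replicated volume

⑥ Striped replicated volume

⑦ Distributed striped replicated volume

How the back-end storage locates files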

Elastic HASH algorithm

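A hash algorithm turns input data into a fixed-length value (here, a 32-bit integer).

Different inputs usually produce different results.

To avoid the complexity of indexing and locating file data in a distributed file system, GlusterFS uses this hash instead of a central index: each brick is assigned a range of the 32-bit hash space, and a file is stored on the brick whose range contains the hash of its file name.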
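The placement idea can be illustrated with a small shell sketch. It is only an illustration under stated assumptions: `cksum` (CRC-32) stands in for GlusterFS's own 32-bit hash function, the 32-bit space is split into equal ranges, and `name_hash`/`hash_to_brick` are hypothetical helper names. Real GlusterFS stores each brick's range per directory, so actual placement will differ.

```shell
#!/usr/bin/env bash
# Sketch of hash-based placement without a metadata server.
# ASSUMPTION: cksum (CRC-32) stands in for GlusterFS's real hash function.

# Print the 32-bit hash of a file name (first field of cksum output).
name_hash() {
  printf '%s' "$1" | cksum | cut -d ' ' -f 1
}

# Print the 0-based brick index whose range contains the name's hash.
# With N bricks, range i covers [i * 2^32 / N, (i + 1) * 2^32 / N).
hash_to_brick() {
  local name=$1 bricks=$2
  local h
  h=$(name_hash "$name")
  echo $(( h * bricks / 4294967296 ))
}

# Every client computes the same answer, so no central lookup is needed.
for f in a.txt b.txt c.txt d.txt; do
  echo "$f -> hash $(name_hash "$f") -> brick $(hash_to_brick "$f" 4)"
done
```

Because the brick is derived from the name alone, any client can locate a file without asking a metadata server.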

Deployment

First, add four new disks to each node.

Then restart the server so the new disks are detected.

Node plan:

node1: 192.168.142.140

node2: 192.168.142.141

node3: 192.168.142.142

node4: 192.168.142.143

client: 192.168.142.144

Configure /etc/hosts

Run the following on every node (node1, node2, node3, and node4):

```shell
echo "192.168.142.140 node1" >> /etc/hosts
echo "192.168.142.141 node2" >> /etc/hosts
echo "192.168.142.142 node3" >> /etc/hosts
echo "192.168.142.143 node4" >> /etc/hosts
echo "192.168.142.144 client" >> /etc/hosts
```
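Appending with `echo >>` adds duplicate lines if the step is re-run on a node. A minimal sketch of an idempotent alternative, assuming the same five address/name pairs; `add_cluster_hosts` is a hypothetical helper, not part of GlusterFS:

```shell
#!/usr/bin/env bash
# Append the cluster's name mappings to a hosts file, skipping lines that
# are already present, so the step can be re-run safely.
# ASSUMPTION: add_cluster_hosts is a hypothetical helper name.
add_cluster_hosts() {
  local hosts_file=$1
  local entry
  for entry in \
    "192.168.142.140 node1" \
    "192.168.142.141 node2" \
    "192.168.142.142 node3" \
    "192.168.142.143 node4" \
    "192.168.142.144 client"; do
    # -q quiet, -x match the whole line, -F fixed string (no regex)
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
  done
}

# Usage on each node (as root):
#   add_cluster_hosts /etc/hosts
```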

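Partition the disks and mount them (on every node)

Create the script:

```shell
vim /opt/fdisk.sh
```

```shell
#!/bin/bash
# Partition, format (XFS), and mount every new disk sdb..sdz under /data
NEWDEV=$(ls /dev/sd* | grep -o 'sd[b-z]' | uniq)
for VAR in $NEWDEV
do
  # n = new, p = primary, three Enters accept the default
  # partition number, first sector, and last sector; w = write
  echo -e "n\np\n\n\n\nw\n" | fdisk /dev/$VAR &> /dev/null
  mkfs.xfs /dev/${VAR}1 &> /dev/null
  mkdir -p /data/${VAR}1 &> /dev/null
  # Mount the new partition automatically at boot
  echo "/dev/${VAR}1 /data/${VAR}1 xfs defaults 0 0" >> /etc/fstab
done
mount -a &> /dev/null
```

Then run it:

```shell
[root@node1 ~]# chmod +x /opt/fdisk.sh
[root@node1 ~]# cd /opt/
[root@node1 opt]# ./fdisk.sh    # run the script
```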
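The detection line of fdisk.sh decides which disks get repartitioned, so it is worth checking before running the script. A minimal sketch, assuming sda is the system disk as in this setup; `list_new_disks` and `preview_fstab` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# The device-detection step of fdisk.sh as a function, so it can be tested
# against a plain list of paths before touching real disks.
# Keeps sdb..sdz (skipping sda) and collapses sdb/sdb1 into one "sdb";
# uniq only removes adjacent duplicates, which works because ls output is sorted.
list_new_disks() {
  grep -o 'sd[b-z]' | uniq
}

# Print the /etc/fstab lines fdisk.sh would append, without writing anything.
preview_fstab() {
  local dev
  for dev in "$@"; do
    echo "/dev/${dev}1 /data/${dev}1 xfs defaults 0 0"
  done
}

# Dry run on a node:
#   preview_fstab $(ls /dev/sd* | list_new_disks)
```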

Install and start GFS (on every node)

```shell
[root@node1 ~]# yum -y install glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma
[root@node1 opt]# systemctl start glusterd.service     # start the service
[root@node1 opt]# systemctl enable glusterd.service    # start automatically at boot
[root@node1 opt]# ntpdate ntp1.aliyun.com              # synchronize the clock
```

Add the nodes to the trusted storage pool

Run this on one node only (here, node1):

```shell
[root@node1 ~]# gluster peer probe node1
peer probe: success. Probe on localhost not needed
[root@node1 ~]# gluster peer probe node2
peer probe: success.
[root@node1 ~]# gluster peer probe node3
peer probe: success.
[root@node1 ~]# gluster peer probe node4
peer probe: success.
[root@node1 ~]# gluster peer status
Number of Peers: 3

Hostname: node2
Uuid: 2ee63a35-6e83-4a35-8f54-c9c0137bc345
State: Peer in Cluster (Connected)

Hostname: node3
Uuid: e63256a9-6700-466f-9279-3e3efa3617ec
State: Peer in Cluster (Connected)

Hostname: node4
Uuid: 9931effa-92a6-40c7-ad54-7361549dd96d
State: Peer in Cluster (Connected)
```
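A quick way to confirm that every probed node actually joined is to count the connected peers in the status output. A minimal sketch, assuming the `gluster peer status` format matches the GlusterFS 3.10 sample in this step; `count_connected_peers` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Count "Peer in Cluster (Connected)" lines in `gluster peer status` output.
# ASSUMPTION: output format as in the GlusterFS 3.10 sample in this step.
count_connected_peers() {
  # In a basic regex, parentheses are literal characters
  grep -c 'State: Peer in Cluster (Connected)'
}

# On node1 (expects 3 peers: node2, node3, node4):
#   n=$(gluster peer status | count_connected_peers)
#   [ "$n" -eq 3 ] && echo "all peers connected" || echo "only $n peers connected"
```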

Create the volumes

Volume plan:

| Volume name | Volume type | Bricks |
|---|---|---|
| dis-volume | Distributed volume | node1:/data/sdb1, node2:/data/sdb1 |
| stripe-volume | Striped volume | node1:/data/sdc1, node2:/data/sdc1 |
| rep-volume | Replicated volume | node3:/data/sdb1, node4:/data/sdb1 |
| dis-stripe | Distributed striped volume | node1:/data/sdd1, node2:/data/sdd1, node3:/data/sdd1, node4:/data/sdd1 |
| dis-rep | Distributed replicated volume | node1:/data/sde1, node2:/data/sde1, node3:/data/sde1, node4:/data/sde1 |

Create the distributed volume

Per the plan, dis-volume uses node1:/data/sdb1 and node2:/data/sdb1 (node3:/data/sdb1 is reserved for rep-volume):

```shell
[root@node1 opt]# gluster volume create dis-volume node1:/data/sdb1 node2:/data/sdb1 force
volume create: dis-volume: success: please start the volume to access data
[root@node1 opt]# gluster volume list
dis-volume
[root@node1 opt]# gluster volume start dis-volume
volume start: dis-volume: success
[root@node1 opt]# gluster volume info dis-volume

Volume Name: dis-volume
Type: Distribute
Volume ID: dcee994f-a69a-49dd-8799-241259f2991d
Status: Started
Snapshot Count: 0
Number of Bricks: 2
Transport-type: tcp
Bricks:
Brick1: node1:/data/sdb1
Brick2: node2:/data/sdb1
Options Reconfigured:
transport.address-family: inet
nfs.disable: on
```
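The remaining four volumes in the plan are created the same way, with the volume type given before the brick list. A minimal sketch that only prints the commands for review, assuming GlusterFS 3.x CLI syntax (`stripe N` / `replica N`; striped volumes were removed in later releases, and newer releases may ask for confirmation on `replica 2`). `print_volume_cmds` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print (do not run) the gluster commands for the other four planned volumes.
# ASSUMPTION: GlusterFS 3.x syntax; consecutive bricks form one stripe/replica set.
print_volume_cmds() {
  local bricks_sdd="node1:/data/sdd1 node2:/data/sdd1 node3:/data/sdd1 node4:/data/sdd1"
  local bricks_sde="node1:/data/sde1 node2:/data/sde1 node3:/data/sde1 node4:/data/sde1"
  local v
  echo "gluster volume create stripe-volume stripe 2 node1:/data/sdc1 node2:/data/sdc1 force"
  echo "gluster volume create rep-volume replica 2 node3:/data/sdb1 node4:/data/sdb1 force"
  # 4 bricks, stripe 2 -> 2 stripe sets; files are distributed across the sets
  echo "gluster volume create dis-stripe stripe 2 $bricks_sdd force"
  # 4 bricks, replica 2 -> 2 replica pairs; files are distributed across the pairs
  echo "gluster volume create dis-rep replica 2 $bricks_sde force"
  for v in stripe-volume rep-volume dis-stripe dis-rep; do
    echo "gluster volume start $v"
  done
}

# On node1: write to a file, review it, then run it (keeps stdin free for prompts):
#   print_volume_cmds > /tmp/create-volumes.sh && bash /tmp/create-volumes.sh
```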

List the volumes

```shell
[root@node1 opt]# gluster volume list
dis-rep
dis-stripe
dis-volume
rep-volume
stripe-volume
```

Deploy the Gluster client

```shell
[root@promote ~]# systemctl stop firewalld
[root@promote ~]# setenforce 0
[root@promote ~]# cd /opt
[root@promote opt]# ls
rh
[root@promote opt]# rz -E
rz waiting to receive.
[root@promote opt]# ls
gfsrepo.zip  rh
[root@promote opt]# unzip gfsrepo.zip
[root@promote opt]# cd /etc/yum.repos.d/
[root@promote yum.repos.d]# ls
local.repo  repos.bak
[root@promote yum.repos.d]# mv * repos.bak/
mv: cannot move 'repos.bak' to a subdirectory of itself, 'repos.bak/repos.bak'
[root@promote yum.repos.d]# ls
repos.bak
[root@promote yum.repos.d]# vim glfs.repo
# contents of glfs.repo (save and quit with :wq):
[glfs]
name=glfs
baseurl=file:///opt/gfsrepo
gpgcheck=0
enabled=1
[root@promote yum.repos.d]# yum clean all && yum makecache
[root@promote yum.repos.d]# yum -y install glusterfs glusterfs-fuse
Loaded plugins: fastestmirror, langpacks
Loading mirror speeds from cached hostfile
Package matching glusterfs-3.10.2-1.el7.x86_64 already installed. Checking for update.
Package matching glusterfs-fuse-3.10.2-1.el7.x86_64 already installed. Checking for update.
```
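The repository file can also be written non-interactively instead of in vim, which is handy when scripting the client setup. A minimal sketch, assuming the package tree was unpacked to /opt/gfsrepo as in the transcript; `write_glfs_repo` is a hypothetical helper and the file contents are the same as in the step above:

```shell
#!/usr/bin/env bash
# Write the local yum repository definition for the GlusterFS packages.
# ASSUMPTION: write_glfs_repo is a hypothetical helper; packages in /opt/gfsrepo.
write_glfs_repo() {
  local repo_dir=${1:-/etc/yum.repos.d}
  # Quoted 'EOF' keeps the text literal (no variable expansion)
  cat > "$repo_dir/glfs.repo" <<'EOF'
[glfs]
name=glfs
baseurl=file:///opt/gfsrepo
gpgcheck=0
enabled=1
EOF
}

# On the client (as root):
#   write_glfs_repo && yum clean all && yum makecache
```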

Create a mount point to mount the gluster file system

```shell
[root@client opt]# mkdir -p /test/{dis,stripe,rep,dis_stripe,dis_rep}
[root@client opt]# ls /test
dis  dis_rep  dis_stripe  rep  stripe
[root@client opt]# mount.glusterfs node1:dis-volume /test/dis
[root@client opt]# mount.glusterfs node1:stripe-volume /test/stripe
[root@client opt]# mount.glusterfs node1:rep-volume /test/rep
[root@client opt]# mount.glusterfs node1:dis-stripe /test/dis_stripe
[root@client opt]# mount.glusterfs node1:dis-rep /test/dis_rep
[root@client opt]# df -hT
Filesystem           Type            Size  Used Avail Use% Mounted on
/dev/sda3            xfs             197G  4.5G  193G   3% /
devtmpfs             devtmpfs        895M     0  895M   0% /dev
tmpfs                tmpfs           910M     0  910M   0% /dev/shm
tmpfs                tmpfs           910M   11M  900M   2% /run
tmpfs                tmpfs           910M     0  910M   0% /sys/fs/cgroup
/dev/sda1            xfs            1014M  174M  841M  18% /boot
tmpfs                tmpfs           182M   12K  182M   1% /run/user/42
tmpfs                tmpfs           182M     0  182M   0% /run/user/0
node1:dis-volume     fuse.glusterfs  8.0G   65M  8.0G   1% /test/dis
node1:stripe-volume  fuse.glusterfs  8.0G   65M  8.0G   1% /test/stripe
node1:rep-volume     fuse.glusterfs  4.0G   33M  4.0G   1% /test/rep
node1:dis-stripe     fuse.glusterfs   16G  130M   16G   1% /test/dis_stripe
node1:dis-rep        fuse.glusterfs  8.0G   65M  8.0G   1% /test/dis_rep
```
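Mounts made with mount.glusterfs do not survive a reboot. A minimal sketch that prints matching /etc/fstab entries, assuming the volume names and mount points used in this step; `_netdev` delays mounting until the network is up, and `print_gluster_fstab` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print /etc/fstab entries that remount the five Gluster volumes at boot.
# ASSUMPTION: print_gluster_fstab is a hypothetical helper; names as in this step.
print_gluster_fstab() {
  local server=${1:-node1}
  local pair vol dir
  for pair in dis-volume:/test/dis stripe-volume:/test/stripe rep-volume:/test/rep \
              dis-stripe:/test/dis_stripe dis-rep:/test/dis_rep; do
    vol=${pair%%:*}   # text before the first colon: volume name
    dir=${pair#*:}    # text after the first colon: mount point
    echo "$server:$vol $dir glusterfs defaults,_netdev 0 0"
  done
}

# Review, then append on the client (as root):
#   print_gluster_fstab >> /etc/fstab && mount -a
```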

Configure the hosts file on the client

Do this before running the mount commands above, since they refer to the servers by the host name node1:

```shell
[root@client ~]# echo "192.168.142.140 node1" >> /etc/hosts
[root@client ~]# echo "192.168.142.141 node2" >> /etc/hosts
[root@client ~]# echo "192.168.142.142 node3" >> /etc/hosts
[root@client ~]# echo "192.168.142.143 node4" >> /etc/hosts
[root@client ~]# echo "192.168.142.144 client" >> /etc/hosts
```