1, GFS (GlusterFS) overview

1. File system composition

File system interface (API)

The software collection that manages objects

Objects and their attributes

2. Role of a file system

From a system point of view, a file system organizes and allocates the space of a file storage device

It is the system responsible for storing files and for protecting and retrieving the stored files

Specifically, it creates files for users; stores, reads, modifies and dumps files; and controls access to them

3. GFS components

Brick (storage block): a directory, usually on its own partition, on a server in the trusted storage pool that actually stores user data (comparable to a PE in LVM)

Volume (logical volume): a collection of bricks that acts as the "partition" clients see (comparable to a logical volume in LVM)

FUSE (Filesystem in Userspace): a file system type, like EXT4, but a pseudo file system that runs in user space; on the client it is the module that exchanges requests with the kernel

VFS (virtual file system): the kernel's virtual file system interface. A user request goes to VFS, which passes it to FUSE, then to the GFS client, and finally the client sends it to the remote storage

Glusterd (service): the management daemon that runs on every storage node (clients run the Gluster client instead). While GFS is in use, all exchanges within GFS take place between the Gluster client and glusterd

4. GFS features

Scalability and high performance

High availability

Global unified namespace

Elastic volume management

Standards-based protocols

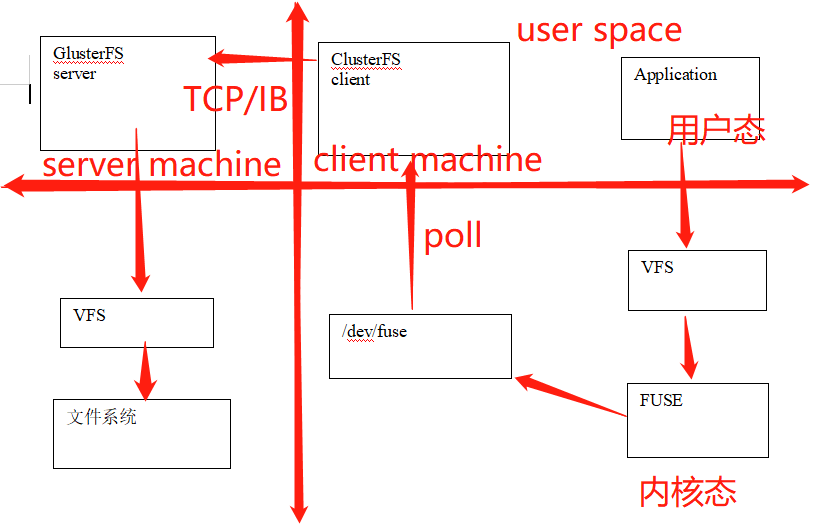

5. Working principle

First, an external request enters the Linux system through the mount point and the VFS interface, which hands it to FUSE

FUSE then passes the data to the /dev/fuse device in memory, from which the GFS client reads it for processing

The GFS client processes the data and sends it to the GFS server over TCP, InfiniBand (RDMA) or another transport

After receiving the data, the GFS server passes it through its own VFS interface to the local file system of the brick
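On the client, the FUSE leg of this path needs the `/dev/fuse` character device. A minimal pre-flight check could look like the sketch below; `fuse_ready` is a hypothetical helper, not part of GlusterFS, and the `modprobe fuse` hint assumes FUSE is built as a kernel module on your system.

```shell
#!/bin/bash
# Hypothetical pre-flight check (not part of GlusterFS): succeed only if
# the given path, default /dev/fuse, exists and is a character device.
# The client-side path VFS -> FUSE -> /dev/fuse -> GFS client relies on it.
fuse_ready() {
    local dev="${1:-/dev/fuse}"
    if [[ -c "$dev" ]]; then
        echo "ok: $dev is a character device"
        return 0
    fi
    echo "missing: $dev (assumption: FUSE is a module, try 'modprobe fuse')" >&2
    return 1
}

# Usage on a client:
# fuse_ready || echo "FUSE is not available"
```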

2, GFS volume

1. GFS volume type

Distributed volume

Strip roll

Copy volume

Distributed striped volume

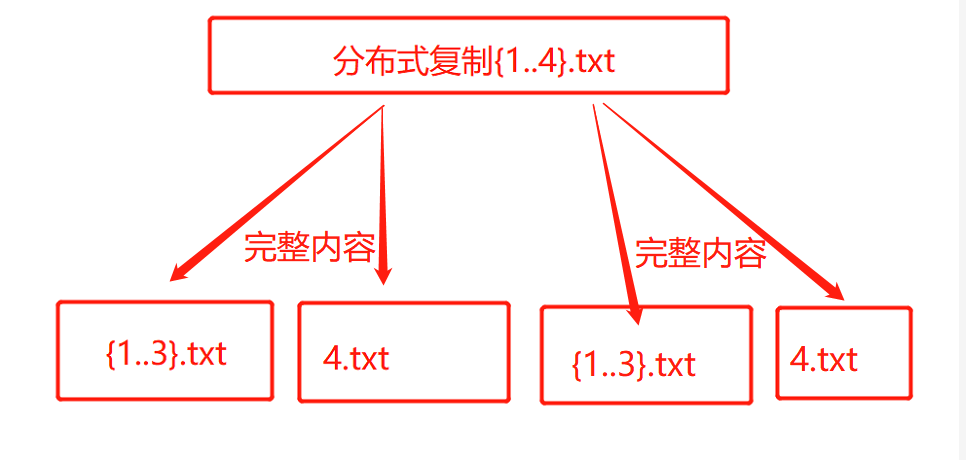

Distributed replication volume

Striped copy volume

Distributed striped data volume

2. Characteristics of the three basic volumes

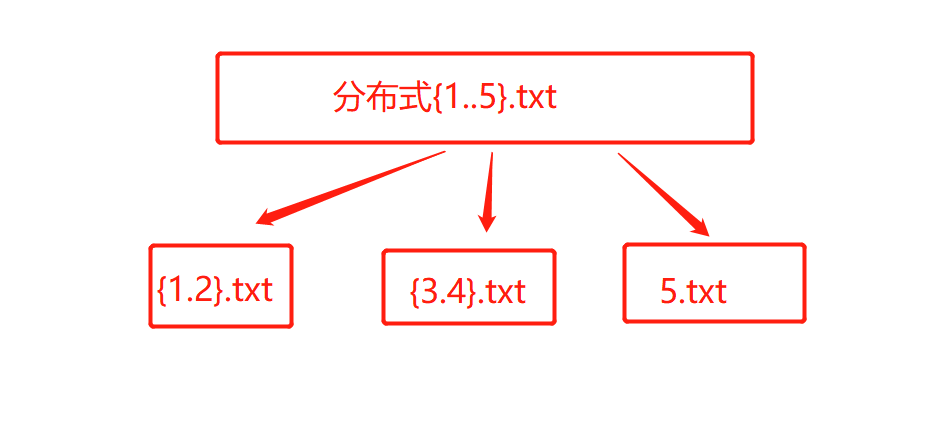

Distributed volume

Files are distributed across different servers, with no redundancy

Volumes can be expanded easily and cheaply

A single point of failure can cause data loss

Relies on the underlying storage for data protection
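To make "files are distributed across servers" concrete, here is a deliberately simplified placement sketch. It is a stand-in, not the real algorithm: GlusterFS's DHT hashes the file name into hash ranges assigned to each brick, whereas the hypothetical `pick_brick` below just takes `cksum` of the name modulo the brick count. What it shares with the real behavior is that each whole file lands on exactly one brick, with no copy elsewhere.

```shell
#!/bin/bash
# Simplified stand-in for distributed-volume placement. This is NOT the
# real DHT algorithm: it hashes the file name with cksum and maps it to
# exactly one brick with a modulo.
# Usage: pick_brick FILENAME BRICK...
pick_brick() {
    local name="$1"; shift
    (( $# > 0 )) || return 1
    local -a bricks=("$@")
    local hash idx
    # cksum prints "<crc> <byte count>"; keep only the CRC
    hash=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
    idx=$(( hash % ${#bricks[@]} ))
    echo "${bricks[idx]}"
}

# Each file lands on one brick only, so losing that brick loses the file
for f in file1 file2 file3 file4; do
    echo "$f -> $(pick_brick "$f" node1:/data/sdb1 node2:/data/sdb1)"
done
```

The same name always maps to the same brick, which is why no lookup table is needed to find a file again.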

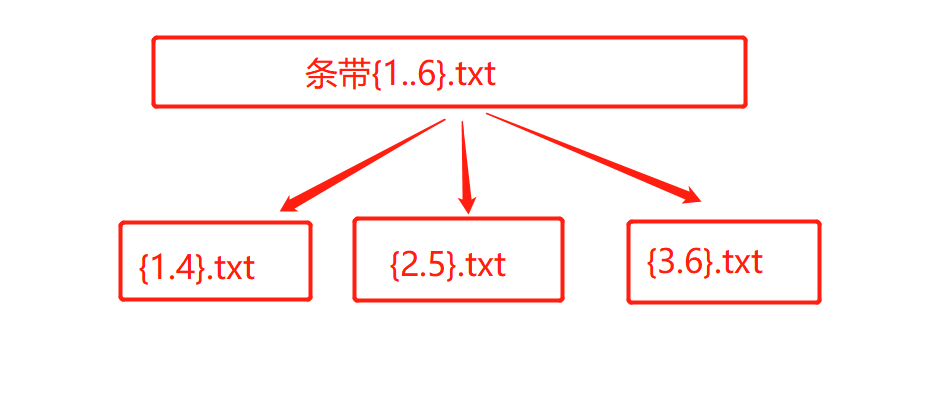

Striped volume

The data is divided into smaller chunks that are distributed across the stripes of the brick server cluster

Spreading the chunks lowers the load on each server, and the smaller pieces speed up access

No data redundancy
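A rough way to picture striping is round-robin placement of fixed-size chunks. The sketch below assumes a 128 KB chunk size purely for illustration and uses a hypothetical `stripe_index` helper that reports which brick (counted from 0) holds a given byte offset. It models only the arithmetic behind the idea; it does not talk to GlusterFS.

```shell
#!/bin/bash
# Round-robin striping sketch: a file is cut into fixed-size chunks and
# chunk k is stored on brick (k mod stripe_count).
# BLOCK_SIZE is an assumed illustrative value, not read from any volume.
BLOCK_SIZE=$((128 * 1024))

# Usage: stripe_index OFFSET STRIPE_COUNT [BLOCK_SIZE]
stripe_index() {
    local offset="$1" count="$2" bs="${3:-$BLOCK_SIZE}"
    echo $(( (offset / bs) % count ))
}

# A 512 KB file on a "stripe 2" volume alternates between brick 0 and brick 1
for off in 0 131072 262144 393216; do
    echo "offset $off -> brick $(stripe_index "$off" 2)"
done
```

With two stripes the chunks alternate between brick 0 and brick 1, so neither brick holds the whole file; losing one brick damages any file larger than one chunk.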

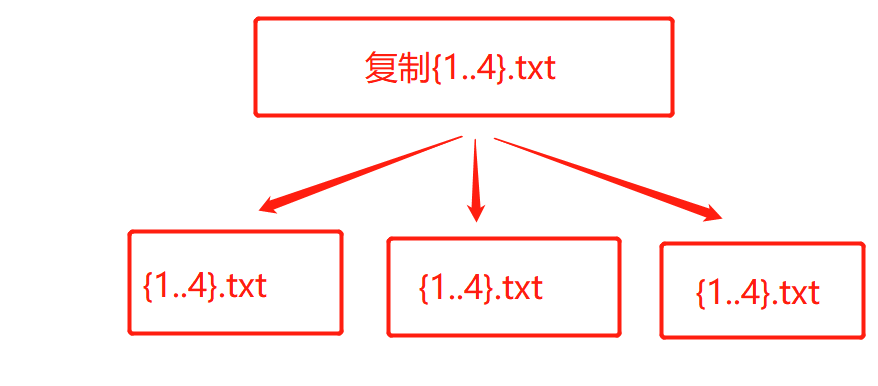

Replicated volume

Every brick in the volume keeps a complete copy of the data

The number of copies is chosen by the user when the volume is created

Requires at least two brick servers

Provides redundancy
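The "complete copy on every brick" idea can be simulated locally with plain directories standing in for bricks. The hypothetical `replicated_write` helper below is only an illustration of the outcome of a replicated write; it does not reflect how the GlusterFS replication translator actually works internally.

```shell
#!/bin/bash
# Local simulation of a replicated volume write: the same file is copied
# into every "brick" directory, so each brick holds a complete copy.
# Plain directories stand in for bricks; no GlusterFS involved.
# Usage: replicated_write SRC_FILE BRICK_DIR...
replicated_write() {
    local src="$1"; shift
    if (( $# < 2 )); then
        echo "a replicated volume needs at least 2 bricks" >&2
        return 1
    fi
    local brick
    for brick in "$@"; do
        mkdir -p "$brick" && cp -- "$src" "$brick/" || return 1
    done
}
```

Any one brick directory can be deleted afterwards and the file still survives in the others, which is the redundancy the list above describes.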

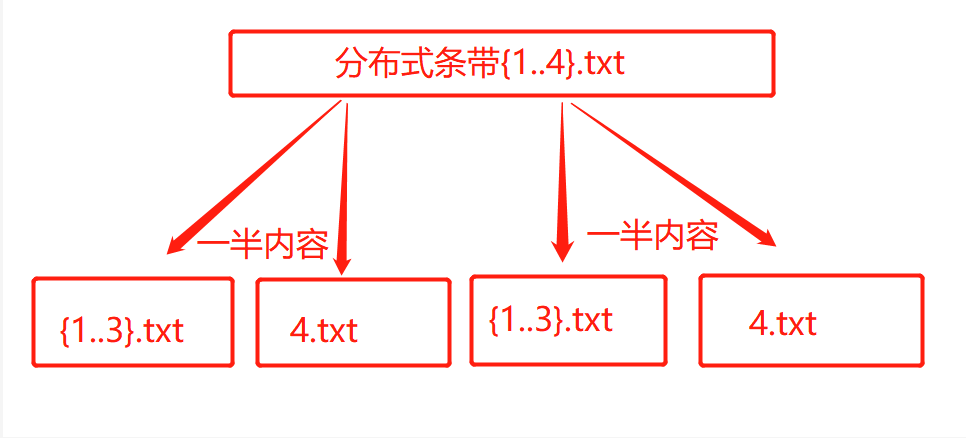

Distributed striped volume

Distributed replicated volume

3, Server configuration (four nodes)

#Turn off the firewall and SELinux (all nodes; setenforce 0 only lasts until the next reboot)

systemctl stop firewalld

setenforce 0

#Partition the disk and mount it

vim /opt/fdisk.sh

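#!/bin/bash
# Partition, format (XFS) and mount every additional disk sdb..sdz under /data.
# WARNING: every matching disk is repartitioned without confirmation.
NEWDEV=$(ls /dev/sd* | grep -o 'sd[b-z]' | uniq)
for VAR in $NEWDEV
do
    # n = new partition, p = primary, three Enters accept the default
    # partition number, first sector and last sector, w = write the table
    echo -e "n\np\n\n\n\nw\n" | fdisk /dev/$VAR &> /dev/null
    mkfs.xfs /dev/${VAR}1 &> /dev/null
    mkdir -p /data/${VAR}1 &> /dev/null
    echo "/dev/${VAR}1 /data/${VAR}1 xfs defaults 0 0" >> /etc/fstab
done
mount -a &> /dev/null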
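Because the script repartitions every disk from sdb to sdz without asking, it can be worth previewing what it will touch before running it. The hypothetical `preview_new_disks` filter below applies the same `sd[b-z]` selection to a device list read on stdin and only prints the disk names.

```shell
#!/bin/bash
# Dry run for fdisk.sh: list the disks the script would repartition,
# using the same sd[b-z] filter, without touching any device.
# Usage: ls /dev/sd* | preview_new_disks
preview_new_disks() {
    grep -o 'sd[b-z]' | sort -u
}
```

Partitions such as `/dev/sdc1` collapse into their disk name, so each disk appears once.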

cd /opt

chmod +x fdisk.sh

./fdisk.sh

#Configure the /etc/hosts file on each node (all nodes)

echo "192.168.255.142 node1" >> /etc/hosts

echo "192.168.255.150 node2" >> /etc/hosts

echo "192.168.255.180 node3" >> /etc/hosts

echo "192.168.255.200 node4" >> /etc/hosts
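Appending with `echo ... >>` adds duplicate lines every time this step is repeated. A hypothetical `add_host` helper that only appends a mapping when the name is not already present is sketched below; it is an illustration, not a standard tool.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file only when NAME is
# not already listed as a host name there, so re-running setup is safe.
# Usage: add_host IP NAME [HOSTS_FILE]   (HOSTS_FILE defaults to /etc/hosts)
add_host() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # awk exits 0 if a non-comment line has NAME in a host-name field (2..NF)
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    echo "$ip $name" >> "$file"
}

# Usage, same entries as above (run as root):
# add_host 192.168.255.142 node1
# add_host 192.168.255.150 node2
# add_host 192.168.255.180 node3
# add_host 192.168.255.200 node4
```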

#Install and start GFS (all nodes)

#Unpack the local package repository in /opt, where glfs.repo points
cd /opt

unzip gfsrepo.zip

cd /etc/yum.repos.d/

mkdir repos.bak

mv *.repo repos.bak/

vim glfs.repo #Add the following content

[glfs]

name=glfs

baseurl=file:///opt/gfsrepo

gpgcheck=0

enabled=1

yum clean all && yum makecache
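The repository file can also be written without an editor, which helps when the same step has to be repeated on all four nodes and the client. The hypothetical `write_glfs_repo` function below writes exactly the `[glfs]` section shown above using a heredoc.

```shell
#!/bin/bash
# Non-interactive alternative to "vim glfs.repo": write the same [glfs]
# definition, pointing yum at the local repository unpacked in /opt/gfsrepo.
# Usage: write_glfs_repo [REPO_DIR]   (REPO_DIR defaults to /etc/yum.repos.d)
write_glfs_repo() {
    local dir="${1:-/etc/yum.repos.d}"
    cat > "$dir/glfs.repo" <<'EOF'
[glfs]
name=glfs
baseurl=file:///opt/gfsrepo
gpgcheck=0
enabled=1
EOF
}

# Then: write_glfs_repo && yum clean all && yum makecache
```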

yum -y install glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma

systemctl start glusterd.service

systemctl enable glusterd.service

systemctl status glusterd.service

#Add a DNS server, then synchronize the time

echo "nameserver 114.114.114.114" >> /etc/resolv.conf

ntpdate ntp1.aliyun.com

#Add the nodes to the trusted storage pool (run on any one node)

gluster peer probe node1

gluster peer probe node2

gluster peer probe node3

gluster peer probe node4

#Check the cluster status on each node

gluster peer status
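`gluster peer status` lists the other members of the pool rather than the node itself, so on a healthy four-node pool each node should report three peers. Assuming the usual `State: Peer in Cluster (Connected)` wording (worth confirming against the output of your GlusterFS version), a hypothetical one-line filter can count them:

```shell
#!/bin/bash
# Count peers reported as connected in `gluster peer status` output.
# Assumes the "State: Peer in Cluster (Connected)" wording; verify it
# against the output of your GlusterFS version.
# Usage: gluster peer status | connected_peers
connected_peers() {
    grep -c 'State: Peer in Cluster (Connected)'
}
```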

========Create volumes according to the following plan=========

| Volume name | Volume type | Bricks |
|---|---|---|
| dis-volume | Distributed volume | node1(/data/sdb1), node2(/data/sdb1) |
| stripe-volume | Striped volume | node1(/data/sdc1), node2(/data/sdc1) |
| rep-volume | Replicated volume | node3(/data/sdb1), node4(/data/sdb1) |
| dis-stripe | Distributed striped volume | node1(/data/sdd1), node2(/data/sdd1), node3(/data/sdd1), node4(/data/sdd1) |
| dis-rep | Distributed replicated volume | node1(/data/sde1), node2(/data/sde1), node3(/data/sde1), node4(/data/sde1) |
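The plan follows GlusterFS's rule that the number of bricks must be a multiple of the stripe or replica count: equal counts give a plain striped or replicated volume, and larger multiples give the distributed variant. A hypothetical `volume_layout` helper makes that arithmetic explicit; it only models the rule and does not call gluster.

```shell
#!/bin/bash
# Derive the layout expected from "TYPE COUNT" and the number of bricks,
# following the rule that the brick count must be a multiple of the
# stripe/replica count. Pure arithmetic; gluster is not invoked.
# Usage: volume_layout TYPE COUNT BRICKS   (TYPE: none | stripe | replica)
volume_layout() {
    local type="$1" count="$2" bricks="$3"
    if [[ "$type" == none ]]; then
        echo "distributed"
        return 0
    fi
    if (( count < 2 || bricks % count != 0 )); then
        echo "invalid: $bricks bricks is not a multiple of $type $count" >&2
        return 1
    fi
    if (( bricks == count )); then
        echo "$type"
    else
        echo "distributed-$type ($((bricks / count)) sets of $count)"
    fi
}
```

For example, `volume_layout stripe 2 4` prints `distributed-stripe (2 sets of 2)`, which corresponds to dis-stripe in the plan.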

#Create a distributed volume. When no type is specified, a distributed volume is created by default. "force" is appended because each brick is the root of a mount point, which gluster otherwise rejects

gluster volume create dis-volume node1:/data/sdb1 node2:/data/sdb1 force

gluster volume list

gluster volume start dis-volume

gluster volume info dis-volume

#The type is stripe with a count of 2, followed by 2 bricks, so a striped volume is created

gluster volume create stripe-volume stripe 2 node1:/data/sdc1 node2:/data/sdc1 force

gluster volume start stripe-volume

gluster volume info stripe-volume

#The type is replica with a count of 2, followed by 2 bricks, so a replicated volume is created

gluster volume create rep-volume replica 2 node3:/data/sdb1 node4:/data/sdb1 force

gluster volume start rep-volume

gluster volume info rep-volume

#Create a distributed striped volume: stripe 2 with 4 bricks gives 2 striped sets, and files are distributed across the sets

gluster volume create dis-stripe stripe 2 node1:/data/sdd1 node2:/data/sdd1 node3:/data/sdd1 node4:/data/sdd1 force

gluster volume start dis-stripe

gluster volume info dis-stripe

#Create a distributed replicated volume: replica 2 with 4 bricks gives 2 replica pairs, and files are distributed across the pairs

gluster volume create dis-rep replica 2 node1:/data/sde1 node2:/data/sde1 node3:/data/sde1 node4:/data/sde1 force

gluster volume start dis-rep

gluster volume info dis-rep
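For replica volumes, the order of bricks on the command line matters: consecutive bricks form one replica set, and files are then distributed across the sets. The hypothetical `replica_sets` helper below prints that grouping for a given replica count so it can be checked before running `gluster volume create`.

```shell
#!/bin/bash
# Show how bricks are grouped for "replica N": consecutive bricks in the
# order given form one replica set; files are distributed across sets.
# Usage: replica_sets N BRICK...
replica_sets() {
    local n="$1"; shift
    if (( n < 1 || $# % n != 0 )); then
        echo "brick count $# is not a multiple of $n" >&2
        return 1
    fi
    local -a bricks=("$@")
    local i k=0
    for (( i = 0; i < ${#bricks[@]}; i += n )); do
        k=$((k + 1))
        echo "set $k: ${bricks[*]:i:n}"
    done
}

replica_sets 2 node1:/data/sde1 node2:/data/sde1 node3:/data/sde1 node4:/data/sde1
# set 1: node1:/data/sde1 node2:/data/sde1
# set 2: node3:/data/sde1 node4:/data/sde1
```

Here each pair spans two servers. Listing two bricks from the same server next to each other would place both copies on one machine, and recent gluster releases warn about that layout when the volume is created.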

4, Client configuration

cd /opt

unzip gfsrepo.zip

cd /etc/yum.repos.d/

mkdir repos.bak

mv *.repo repos.bak/

vim glfs.repo

[glfs]

name=glfs

baseurl=file:///opt/gfsrepo

gpgcheck=0

enabled=1

yum clean all && yum makecache

yum -y install glusterfs glusterfs-fuse

mkdir -p /test/{dis,stripe,rep,dis_stripe,dis_rep}

echo "192.168.255.142 node1" >> /etc/hosts

echo "192.168.255.150 node2" >> /etc/hosts

echo "192.168.255.180 node3" >> /etc/hosts

echo "192.168.255.200 node4" >> /etc/hosts

mount.glusterfs node1:dis-volume /test/dis

mount.glusterfs node1:stripe-volume /test/stripe

mount.glusterfs node1:rep-volume /test/rep

mount.glusterfs node1:dis-stripe /test/dis_stripe

mount.glusterfs node1:dis-rep /test/dis_rep

df -h
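`df -h` lets you check the mounts by eye. To check them in a script, one option is to read `/proc/mounts`, where GlusterFS FUSE mounts normally appear with the file system type `fuse.glusterfs`. The hypothetical `check_gluster_mounts` helper below takes the mounts table as its first argument, so it can also be pointed at a saved copy.

```shell
#!/bin/bash
# Check that each directory is mounted with type fuse.glusterfs according
# to a mounts table in /proc/mounts format (device dir type options ...).
# Usage: check_gluster_mounts MOUNTS_FILE DIR...
check_gluster_mounts() {
    local table="$1"; shift
    local dir rc=0
    for dir in "$@"; do
        if awk -v d="$dir" '$2 == d && $3 == "fuse.glusterfs" { found = 1 }
                            END { exit !found }' "$table"; then
            echo "mounted: $dir"
        else
            echo "NOT mounted: $dir" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Usage on the client:
# check_gluster_mounts /proc/mounts /test/{dis,stripe,rep,dis_stripe,dis_rep}
```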

5, Maintenance commands for the GFS file system

1, View the GlusterFS volumes

[root@node1 ~]# gluster volume list

2, View information about all volumes

[root@node1 ~]# gluster volume info

3, View the status of all volumes

[root@node1 ~]# gluster volume status

4, Stop a volume

[root@node1 ~]# gluster volume stop dis-stripe

5, Delete a volume (the volume must be stopped first)

[root@node1 ~]# gluster volume delete dis-stripe

6, Allow and deny lists (auth.allow sets the allow list; auth.reject sets the deny list)

[root@node1 ~]# gluster volume set dis-rep auth.allow 192.168.255.* ##Allow every IP address in the 192.168.255.0 segment to access the dis-rep volume (the distributed replicated volume)

volume set: success
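`auth.allow` accepts a comma-separated list of addresses in which `*` acts as a wildcard. To reason about which clients a value such as `192.168.255.*` admits, the matching can be approximated with shell globs. The hypothetical `ip_allowed` helper below is only that approximation, not GlusterFS code, and it expects the list without spaces after the commas.

```shell
#!/bin/bash
# Approximate how a wildcard list such as "192.168.255.*" matches a client
# address: split the value on commas and try each entry as a glob.
# Illustration only; this is not how GlusterFS evaluates auth.allow.
# Usage: ip_allowed IP PATTERN_LIST
ip_allowed() {
    local ip="$1" list="$2" pat
    local -a pats
    IFS=',' read -r -a pats <<< "$list"
    for pat in "${pats[@]}"; do
        # unquoted $pat on the right of == is treated as a glob pattern
        if [[ "$ip" == $pat ]]; then
            return 0
        fi
    done
    return 1
}
```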

Summary

Workflow of GFS: when a client issues a request, VFS hands it to the user-space FUSE file system, which passes it to /dev/fuse in memory. The GFS client then picks it up and sends it to the GFS server over TCP, InfiniBand or another transport. On the server, depending on the volume type, the request is handed through VFS to the brick's local file system. In the same way, data that is read back is placed in memory, and the user's request is served directly from memory

Comparison of GFS volume types

| Type | Redundancy | File integrity | Number of disks | Disk utilization | Characteristics |
|---|---|---|---|---|---|
| Distributed volume | No | Yes | N | N | Fast reads and writes |
| Striped volume | No | No | N | N | Fast reads and writes |
| Replicated volume | Yes | Yes | N>=2 | N/2 (replica 2) | Safe |
| Distributed striped volume | No | No | N>=4 | N | Fast reads and writes |
| Distributed replicated volume | Yes | Yes | N>=4 | N/2 (replica 2) | Relatively safe and fast |
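The "Disk utilization" column is simple arithmetic on the raw capacity. The hypothetical `usable_gb` helper below reproduces it, assuming every brick has the same size (with unequal bricks, a replica set is limited by its smallest member).

```shell
#!/bin/bash
# Usable capacity of a volume built from BRICKS bricks of SIZE_GB each,
# assuming equal-sized bricks. Replicated layouts divide raw capacity by
# the replica count (N/2 for replica 2); the others expose all of it.
# Usage: usable_gb TYPE BRICKS SIZE_GB [REPLICA]
usable_gb() {
    local type="$1" bricks="$2" size="$3" replica="${4:-2}"
    case "$type" in
        distributed|stripe|distributed-stripe)
            echo $(( bricks * size )) ;;
        replica|distributed-replica)
            echo $(( bricks * size / replica )) ;;
        *)
            echo "unknown type: $type" >&2
            return 1 ;;
    esac
}
```

For example, four 20 GB bricks give 80 GB as a distributed striped volume but 40 GB as a distributed replicated volume with replica 2.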