Netty programming

NIO programming

There are many articles about NIO online, so this section does not attempt a detailed, in-depth analysis. Instead, let's briefly describe how NIO solves three problems of the IO model: limited thread resources, inefficient thread switching, and byte-by-byte reading and writing.

1. Solving the thread resource limitation

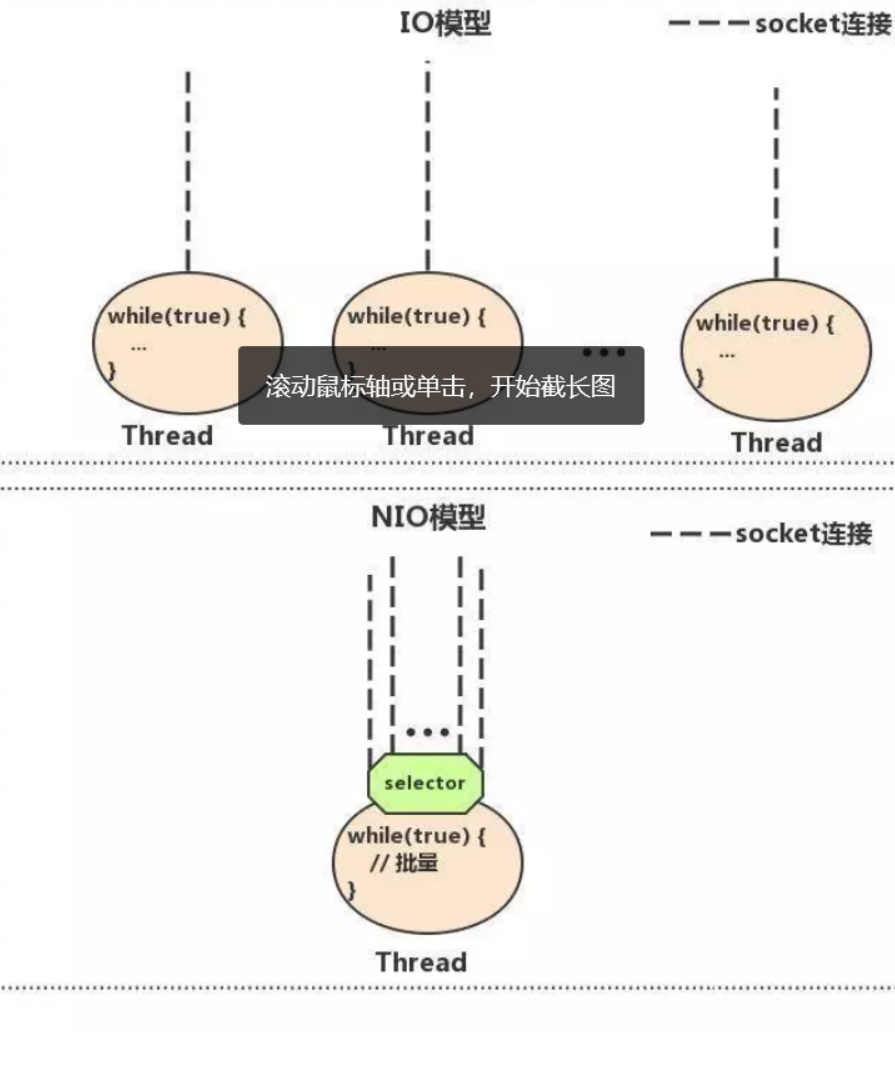

In the NIO programming model, a new connection no longer gets its own new thread. Instead, the connection is bound to a fixed thread, and that thread handles all reading and writing on the connection. How does this work? The figure above compares IO with NIO.

As shown in the figure above, in the IO model a new thread is created for each incoming connection, and each thread runs a while loop whose purpose is to keep checking whether the connection has data to read. In most cases, out of 10,000 connections only a small number have readable data at any given moment, so most of those while loops are wasted: they have nothing to read.

The NIO model replaces all of these while loops with a single loop run by one thread. How can one thread and one while loop monitor 10,000 connections for readable data? That is the role of the selector in the NIO model. When a connection arrives, instead of creating a while loop to watch it, you register the connection with the selector. By checking the selector, you can then find, in one batch, all the connections that have readable data, and read from them. Here is a simple real-life example that illustrates the difference between IO and NIO.

In a kindergarten, the children need to go to the toilet, but they are too young to say so on their own, so someone has to ask them. There are 100 children in the kindergarten, and there are two ways to handle their toilet trips:

- Each child has a teacher. Each teacher keeps asking their child whether they need to go to the toilet, so 100 teachers are needed, and every child is led to the toilet by their own teacher. This is the IO model: one connection corresponds to one thread.

- All the children share one teacher. Every so often, the teacher asks all the children whether anyone needs to go, then leads everyone who does to the toilet as a group. This is the NIO model: all the children are registered with the same teacher, just as all the connections are registered with one thread, which polls them in batches.
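The one-teacher model can be sketched with the JDK's own Selector. This is a minimal illustration under stated assumptions, not code from this article: to stay self-contained without opening sockets, it uses Pipe source channels as stand-ins for client connections (a real server would register SocketChannels accepted from a ServerSocketChannel), and SelectorDemo and pollOnce are hypothetical names. One thread registers every channel with a single selector and then reads only from the channels that actually have data.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.TimeUnit;

public class SelectorDemo {

    // Hypothetical helper: opens `connections` pipes (stand-ins for client connections),
    // writes a message into the pipes listed in `active`, then uses ONE thread and
    // ONE Selector to read from whichever pipes are readable.
    public static Map<Integer, String> pollOnce(int connections, int[] active) throws IOException {
        Pipe[] pipes = new Pipe[connections];
        Map<Integer, String> received = new TreeMap<>();
        try (Selector selector = Selector.open()) {
            // Register every "connection" with the same selector (the single teacher)
            for (int i = 0; i < connections; i++) {
                pipes[i] = Pipe.open();
                pipes[i].source().configureBlocking(false);                    // required before register()
                pipes[i].source().register(selector, SelectionKey.OP_READ, i); // attachment = index
            }
            // Only a few connections actually have data to read
            int expectedBytes = 0;
            for (int i : active) {
                byte[] msg = ("msg-" + i).getBytes(StandardCharsets.UTF_8);
                pipes[i].sink().write(ByteBuffer.wrap(msg));
                expectedBytes += msg.length;
            }
            ByteBuffer buf = ByteBuffer.allocate(64);
            int readBytes = 0;
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
            // One thread, one loop: batch-poll until all the data has been read
            while (readBytes < expectedBytes && System.nanoTime() < deadline) {
                if (selector.select(100) == 0) {
                    continue; // nobody is ready yet
                }
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove(); // handled keys must be removed manually
                    if (!key.isReadable()) {
                        continue;
                    }
                    buf.clear();
                    int n = ((Pipe.SourceChannel) key.channel()).read(buf);
                    if (n > 0) {
                        buf.flip();
                        String chunk = StandardCharsets.UTF_8.decode(buf).toString();
                        received.merge((Integer) key.attachment(), chunk, String::concat);
                        readBytes += n;
                    }
                }
            }
        } finally {
            for (Pipe p : pipes) {
                if (p != null) {
                    p.sink().close();
                    p.source().close();
                }
            }
        }
        return received;
    }

    public static void main(String[] args) throws IOException {
        // 10 registered connections, but only 3 have data
        System.out.println(pollOnce(10, new int[]{2, 5, 7})); // prints {2=msg-2, 5=msg-5, 7=msg-7}
    }
}
```

With 10 registered channels and data on only 3 of them, the single loop only ever reads from those 3, which mirrors the teacher asking everyone at once and then leading only the children who need to go.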

2. Solving inefficient thread switching

Because the NIO model uses far fewer threads, much less time is spent switching between threads, so thread-switching efficiency improves greatly.

3. Solving byte-by-byte reading and writing

NIO solves this problem by reading and writing data in blocks rather than single bytes. In the IO model, data is read from the underlying operating system one byte at a time, whereas NIO maintains a buffer and can read a whole block of data from it at a time. Imagine a plate of delicious beans in front of you: picking them up one by one with chopsticks is certainly less efficient than scooping up a spoonful at a time.
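The chopsticks-versus-spoon contrast can be sketched with the JDK alone. This is an illustration, not a benchmark: ChopsticksVsSpoon and its methods are hypothetical names, an in-memory ByteArrayInputStream stands in for the operating system, and the 1024-byte block size is arbitrary. Note that java.io streams can also read into a byte[]; the sketch only contrasts the two calling styles described above, one InputStream.read() call per byte versus one ReadableByteChannel.read(ByteBuffer) call per block.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.ReadableByteChannel;

public class ChopsticksVsSpoon {

    // "Chopsticks": one read() call per byte. Returns how many calls delivered data.
    public static int readByteByByte(byte[] data, ByteArrayOutputStream out) throws IOException {
        int calls = 0;
        try (InputStream in = new ByteArrayInputStream(data)) {
            int b;
            while ((b = in.read()) != -1) { // each call returns a single byte
                out.write(b);
                calls++;
            }
        }
        return calls;
    }

    // "Spoon": each call fills a ByteBuffer with up to blockSize bytes.
    public static int readInBlocks(byte[] data, int blockSize, ByteArrayOutputStream out) throws IOException {
        int calls = 0;
        try (ReadableByteChannel ch = Channels.newChannel(new ByteArrayInputStream(data))) {
            ByteBuffer buf = ByteBuffer.allocate(blockSize);
            while (ch.read(buf) != -1) {
                buf.flip();                              // switch from filling to draining
                out.write(buf.array(), 0, buf.limit()); // copy the whole block at once
                buf.clear();                             // make room for the next block
                calls++;
            }
        }
        return calls;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[10_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = (byte) i;
        }
        ByteArrayOutputStream a = new ByteArrayOutputStream();
        ByteArrayOutputStream b = new ByteArrayOutputStream();
        System.out.println("byte-by-byte read calls: " + readByteByByte(data, a)); // prints 10000
        System.out.println("block read calls:        " + readInBlocks(data, 1024, b));
    }
}
```

Both methods copy the same 10,000 bytes, but the block version needs only a handful of calls, which is where the efficiency gain in the analogy comes from.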

Having briefly introduced how JDK NIO solves these problems, we can replace the IO solution with an NIO one. Let's first look at how to implement the server with native JDK NIO.

Netty programming

1. Introduction to Netty

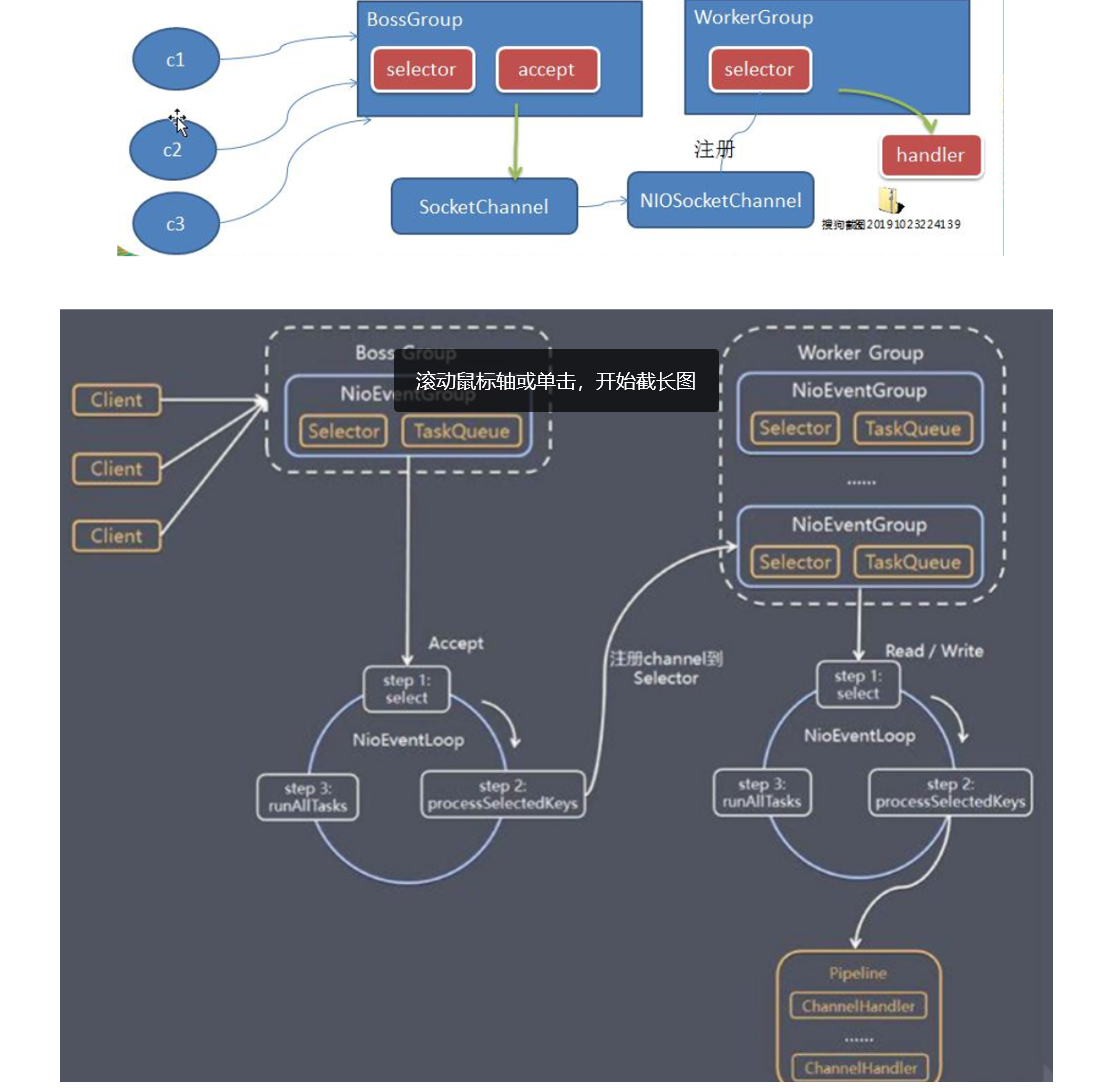

Put simply, Netty wraps JDK NIO so that it is easier to use, and you don't need to write a lot of complicated code. In the project's own words, Netty is "an asynchronous event-driven network application framework for rapid development of maintainable high performance protocol servers & clients."

Here is my summary of why you should use Netty instead of native JDK NIO:

- Using JDK NIO directly requires understanding too many concepts, the programming is complicated, and bugs creep in easily.

- Netty's underlying IO model can be switched at will with only minor changes: by changing a few parameters, Netty can switch directly from the NIO model to the IO model.

- Netty's built-in unpacking, exception detection, and other mechanisms free you from the tedious details of NIO, so you only need to care about business logic.

- Netty works around many JDK bugs, including the empty-polling bug.

- Netty's internals heavily optimize threads and selectors, and its well-designed reactor thread model achieves very efficient concurrent processing.

- Netty ships with many protocol stacks, so you can handle almost any common protocol with very little code of your own.

- The Netty community is active. If you run into problems, you can ask on the mailing list or open an issue.

- Netty has been widely proven in RPC frameworks, message middleware, and distributed communication middleware, and it is extremely robust.

2. Using Netty

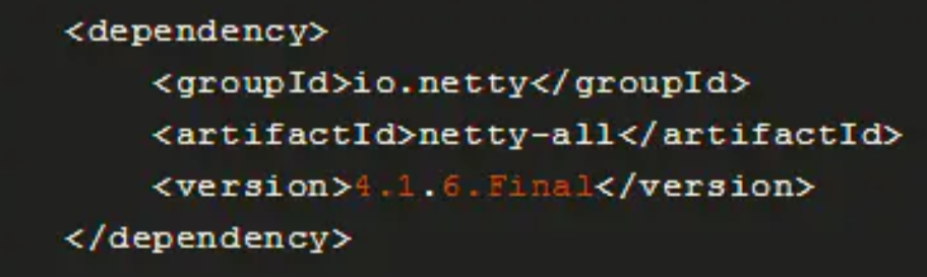

- First, add the Maven dependency io.netty:netty-all.

You can also add it through the IDE: go to File -> Project Structure -> Modules -> Dependencies, click the + sign on the right, then choose Library -> New Library -> From Maven and enter io.netty:netty-all.

- Server implementation:

NettyServer.java

```java
package java_netty.simple;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;

public class NettyServer {
    public static void main(String[] args) throws InterruptedException {
        // Create the BossGroup and WorkerGroup:
        // 1. Two thread groups are created: bossGroup and workerGroup
        // 2. bossGroup only handles connection requests
        // 3. workerGroup does the actual business processing with clients
        // 4. Both run infinite event loops
        // 5. By default each group contains (CPU cores * 2) NioEventLoop threads;
        //    here bossGroup is explicitly limited to 1
        EventLoopGroup bossGroup = new NioEventLoopGroup(1);
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            // Create the server-side bootstrap object and configure its parameters
            ServerBootstrap bootstrap = new ServerBootstrap();
            // Configure using method chaining
            bootstrap.group(bossGroup, workerGroup)                // set the two thread groups
                    .channel(NioServerSocketChannel.class)         // use NioServerSocketChannel as the server channel
                    .option(ChannelOption.SO_BACKLOG, 128)         // size of the queue of pending connections
                    .childOption(ChannelOption.SO_KEEPALIVE, true) // keep accepted connections alive
                    .childHandler(new ChannelInitializer<SocketChannel>() { // channel initializer (anonymous class)
                        // Set up the handlers in the pipeline of each accepted channel
                        @Override
                        protected void initChannel(SocketChannel socketChannel) throws Exception {
                            // Append our NettyServerHandler to the end of the pipeline
                            socketChannel.pipeline().addLast(new NettyServerHandler());
                        }
                    }); // these handlers serve the channels run by workerGroup
            System.out.println("The server is ready");
            // Bind the port and wait synchronously; this returns a ChannelFuture.
            // In effect, this starts the server on port 6668
            ChannelFuture channelFuture = bootstrap.bind(6668).sync();
            // Wait until the server channel is closed
            channelFuture.channel().closeFuture().sync();
        } finally {
            bossGroup.shutdownGracefully();   // graceful shutdown
            workerGroup.shutdownGracefully(); // graceful shutdown
        }
    }
}
```

- bossGroup corresponds to the thread in IOServer.java that accepts new connections; it is mainly responsible for creating new connections.

- workerGroup corresponds to the threads in IOServer.java that read data; it is mainly used for reading data and handling business logic.


- Server handler:

NettyServerHandler.java

```java
package java_netty.simple;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;

/*
 Notes:
 1. A custom handler must extend one of Netty's handler adapter classes
    (here ChannelInboundHandlerAdapter).
 2. Only then will Netty treat the class as a handler.
 */
public class NettyServerHandler extends ChannelInboundHandlerAdapter {

    // Called when data is read (here, the message sent by the client)
    /*
     1. ChannelHandlerContext ctx: context object giving access to the pipeline, the channel, the remote address, etc.
     2. Object msg: the data sent by the client, declared as Object
     */
    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        System.out.println("Server read thread : " + Thread.currentThread().getName());
        System.out.println("Server ctx==" + ctx + " msg==" + msg);
        // Cast msg to a ByteBuf.
        // ByteBuf is provided by Netty; it is not NIO's ByteBuffer
        ByteBuf buf = (ByteBuf) msg;
        try {
            System.out.println("The information sent by the client is:" + buf.toString(CharsetUtil.UTF_8));
            System.out.println("Client address:" + ctx.channel().remoteAddress());
        } finally {
            // ByteBuf is reference-counted: release it, since this handler consumes the message
            ReferenceCountUtil.release(msg);
        }
    }

    // Called once the current batch of reads has completed
    @Override
    public void channelReadComplete(ChannelHandlerContext ctx) throws Exception {
        // writeAndFlush = write + flush: write to the outbound buffer, then flush it to the socket.
        // Data to be sent is usually encoded first
        ctx.writeAndFlush(Unpooled.copiedBuffer("hello,Client", CharsetUtil.UTF_8));
    }

    // Handle exceptions: close the channel when one occurs
    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```

- Client

NettyClient.java

```java
package java_netty.simple;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;

public class NettyClient {
    public static void main(String[] args) throws InterruptedException {
        // The client needs only one event loop group
        EventLoopGroup group = new NioEventLoopGroup();
        try {
            // Create the client bootstrap object.
            // Note: the client uses Bootstrap, while the server uses ServerBootstrap
            Bootstrap bootstrap = new Bootstrap();
            // Configure using method chaining
            bootstrap.group(group)                   // set the thread group
                    .channel(NioSocketChannel.class) // client channel implementation class (created via reflection)
                    .handler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        protected void initChannel(SocketChannel ch) throws Exception {
                            ch.pipeline().addLast(new NettyClientHandler()); // add our own handler
                        }
                    });
            System.out.println("Client ok..");
            // Connect to the server. connect() returns a ChannelFuture,
            // which is part of Netty's asynchronous model
            ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 6668).sync();
            // Wait until the channel is closed
            channelFuture.channel().closeFuture().sync();
        } finally {
            group.shutdownGracefully();
        }
    }
}
```

In the client program, group corresponds to the thread started in the main function of IOClient.java.

- Client handler:

NettyClientHandler.java

```java
package java_netty.simple;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;

// Inbound handler: reacts to events and data arriving from the server
public class NettyClientHandler extends ChannelInboundHandlerAdapter {

    // Triggered when the channel becomes active (the connection is established)
    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("Client " + ctx);
        ctx.writeAndFlush(Unpooled.copiedBuffer("hello,server", CharsetUtil.UTF_8));
    }

    // Triggered when the channel has a read event
    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        ByteBuf buf = (ByteBuf) msg;
        try {
            System.out.println("Information replied by the server:" + buf.toString(CharsetUtil.UTF_8));
            System.out.println("Address of the server:" + ctx.channel().remoteAddress());
        } finally {
            ReferenceCountUtil.release(msg); // release the reference-counted buffer
        }
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```

Netty wraps NIO cleanly and lets you write elegant code. With Netty, you also rarely have to worry about the performance of the network communication layer yourself.

Packet sticking and unpacking in Netty:

1. Sticking problem:

TCP is a stream protocol. A stream is a continuous run of data with no boundaries: think of the water in a river, which flows as one piece with no divisions. The TCP layer does not know what the upper layer's business data means; it divides the data into packets according to the actual state of the TCP buffer. In other words, one complete business message may be split by TCP into several packets, or several small messages may be combined into one large packet and sent together. This is the so-called packet sticking problem.

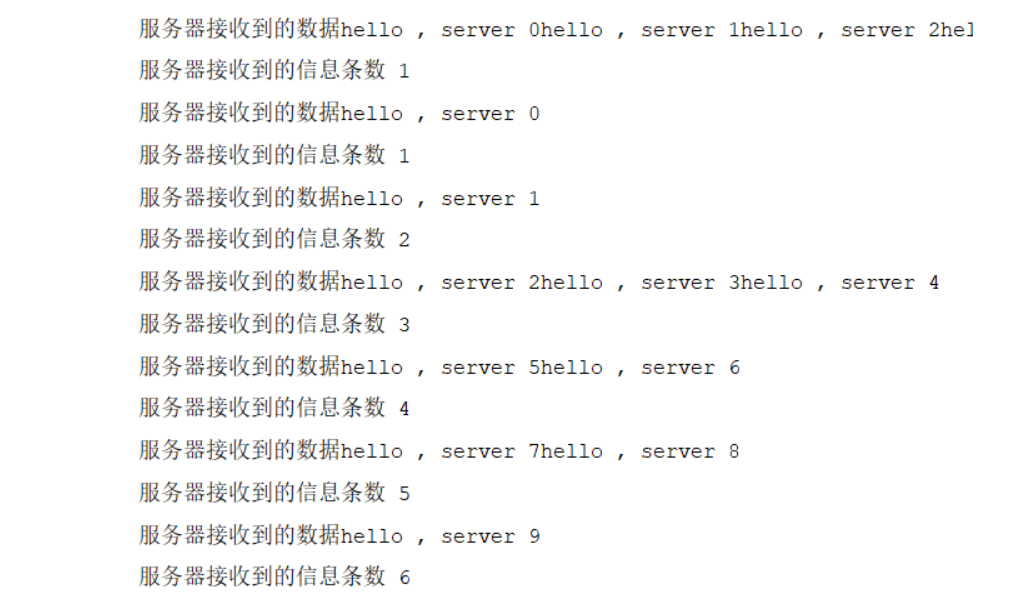

For example, run the client in the tcp package and have it send 10 "Hello, Server" messages to the server.

The ten messages arrive at the server in only six packets.

This is the sticking problem.
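The effect can be reproduced without a network. The sketch below is a simulation, not real TCP: StickyPacketDemo is a hypothetical class, and the chunk sizes are made up to imitate how the stack might split and merge data. Ten "Hello, Server" messages (13 bytes each) are concatenated into one 130-byte stream, which the receiver gets back as six chunks that do not line up with the message boundaries.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class StickyPacketDemo {

    // Simulated receiver: the sender's messages are concatenated into one byte stream
    // (TCP keeps no message boundaries), then handed back in chunks whose sizes the
    // receiver does not control. The chunk sizes are hypothetical.
    public static List<String> receive(List<String> sent, int[] chunkSizes) {
        byte[] stream = String.join("", sent).getBytes(StandardCharsets.UTF_8);
        List<String> chunks = new ArrayList<>();
        int pos = 0;
        for (int size : chunkSizes) {
            if (pos >= stream.length) {
                break;
            }
            int end = Math.min(pos + size, stream.length);
            chunks.add(new String(stream, pos, end - pos, StandardCharsets.UTF_8));
            pos = end;
        }
        if (pos < stream.length) { // whatever is left arrives as one final chunk
            chunks.add(new String(stream, pos, stream.length - pos, StandardCharsets.UTF_8));
        }
        return chunks;
    }

    public static void main(String[] args) {
        List<String> sent = new ArrayList<>();
        for (int i = 0; i < 10; i++) {
            sent.add("Hello, Server"); // 13 bytes each, 130 bytes in total
        }
        List<String> received = receive(sent, new int[]{13, 39, 8, 31, 26});
        for (String chunk : received) {
            System.out.println("[" + chunk + "]");
        }
        // last line printed: "10 messages sent, 6 chunks received"
        System.out.println(sent.size() + " messages sent, " + received.size() + " chunks received");
    }
}
```

The second chunk holds three messages stuck together, while the third chunk ("Hello, S") and the start of the fourth ("erver...") are the two halves of one split message. Without extra information in the data itself, the receiver cannot tell where one message ends and the next begins.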

2. Unpacking

Here we solve the problem with a custom protocol package plus an encoder and a decoder.

The code that implements unpacking:

Core code: protocol package, codec

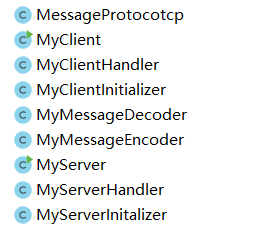

directory structure

- Server (MyServer.java)

```java
package java_netty.protocoltcp;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;

public class MyServer {
    public static void main(String[] args) throws InterruptedException {
        EventLoopGroup bossGroup = new NioEventLoopGroup(1);
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap bootstrap = new ServerBootstrap();
            bootstrap.group(bossGroup, workerGroup)          // set the two thread groups
                    .channel(NioServerSocketChannel.class)   // use NioServerSocketChannel as the server channel
                    .childHandler(new MyServerInitalizer()); // custom initializer class
            ChannelFuture channelFuture = bootstrap.bind(7000).sync();
            channelFuture.channel().closeFuture().sync();
        } finally {
            bossGroup.shutdownGracefully();   // graceful shutdown
            workerGroup.shutdownGracefully(); // graceful shutdown
        }
    }
}
```

- Server handler (MyServerHandler.java)

```java
package java_netty.protocoltcp;

import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import java.nio.charset.StandardCharsets;

// Business-logic handler; it receives already-decoded MessageProtocotcp objects
public class MyServerHandler extends SimpleChannelInboundHandler<MessageProtocotcp> {
    private int count;

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        ctx.close();
    }

    @Override
    protected void channelRead0(ChannelHandlerContext channelHandlerContext, MessageProtocotcp msg) throws Exception {
        // Receive the data and process it
        int len = msg.getLen();
        byte[] content = msg.getContent();
        System.out.println("The information received by the server is as follows:");
        System.out.println("Length:" + len);
        System.out.println("content:" + new String(content, StandardCharsets.UTF_8));
        System.out.println("Number of message packets (protocol packets) received by the server: " + (++this.count));

        // Reply to the client
        String responseContent = "Hello client, the message you sent has been received";
        byte[] responseContentBytes = responseContent.getBytes(StandardCharsets.UTF_8);
        // Use the byte length, not responseContent.length(): they differ for non-ASCII text
        int responselen = responseContentBytes.length;
        // Build a protocol package
        MessageProtocotcp messageProtocotcp = new MessageProtocotcp();
        messageProtocotcp.setLen(responselen);
        messageProtocotcp.setContent(responseContentBytes);
        // Send the protocol package. This requires an encoder in the server pipeline
        // and a decoder in the client pipeline
        channelHandlerContext.writeAndFlush(messageProtocotcp);
    }
}
```

- Custom initializer class (MyServerInitalizer.java): configures the decoder, encoder and handler in the pipeline of each channel the server accepts

```java
package java_netty.protocoltcp;

import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.socket.SocketChannel;

public class MyServerInitalizer extends ChannelInitializer<SocketChannel> {
    @Override
    protected void initChannel(SocketChannel socketChannel) throws Exception {
        ChannelPipeline pipeline = socketChannel.pipeline();
        pipeline.addLast(new MyMessageDecoder()); // decoder (inbound): bytes -> MessageProtocotcp
        pipeline.addLast(new MyMessageEncoder()); // encoder (outbound): MessageProtocotcp -> bytes
        pipeline.addLast(new MyServerHandler());  // business logic
    }
}
```

- Client:

```java
package java_netty.protocoltcp;

import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioSocketChannel;

public class MyClient {
    public static void main(String[] args) throws InterruptedException {
        EventLoopGroup groups = new NioEventLoopGroup();
        try {
            Bootstrap bootstrap = new Bootstrap();
            bootstrap.group(groups)
                    .channel(NioSocketChannel.class)     // client channel implementation class (created via reflection)
                    .handler(new MyClientInitializer()); // custom initializer class
            ChannelFuture channelFuture = bootstrap.connect("localhost", 7000).sync();
            // Wait until the channel is closed
            channelFuture.channel().closeFuture().sync();
        } finally {
            groups.shutdownGracefully();
        }
    }
}
```

- Client's handler

```java
package java_netty.protocoltcp;

import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import java.nio.charset.StandardCharsets;

public class MyClientHandler extends SimpleChannelInboundHandler<MessageProtocotcp> {
    private int count;

    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        // Send 5 messages to the server back to back
        for (int i = 0; i < 5; i++) {
            String mes = " Hello, server";
            byte[] content = mes.getBytes(StandardCharsets.UTF_8);
            int length = content.length;
            // Create the protocol package object
            MessageProtocotcp messageProtocotcp = new MessageProtocotcp();
            messageProtocotcp.setLen(length);
            messageProtocotcp.setContent(content);
            ctx.writeAndFlush(messageProtocotcp);
        }
    }

    @Override
    protected void channelRead0(ChannelHandlerContext channelHandlerContext, MessageProtocotcp msg) throws Exception {
        int len = msg.getLen();
        byte[] content = msg.getContent();
        System.out.println("The information received by the client is as follows:");
        System.out.println("Length:" + len);
        System.out.println("content:" + new String(content, StandardCharsets.UTF_8));
        System.out.println("Number of message packets (protocol packets) received by the client: " + (++this.count));
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        System.out.println("Exception information: " + cause.getMessage());
        ctx.close();
    }
}
```

- Client initialization class

```java
package java_netty.protocoltcp;

import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.socket.SocketChannel;

public class MyClientInitializer extends ChannelInitializer<SocketChannel> {
    @Override
    protected void initChannel(SocketChannel socketChannel) throws Exception {
        ChannelPipeline pipeline = socketChannel.pipeline();
        pipeline.addLast(new MyMessageEncoder()); // add encoder (outbound)
        pipeline.addLast(new MyMessageDecoder()); // add decoder (inbound)
        pipeline.addLast(new MyClientHandler());
    }
}
```

Encoder

- MyMessageEncoder.java: writes the length as a 4-byte int, followed by the content bytes

```java
package java_netty.protocoltcp;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.MessageToByteEncoder;

// Encoder: MessageProtocotcp -> [4-byte length][content bytes]
public class MyMessageEncoder extends MessageToByteEncoder<MessageProtocotcp> {
    @Override
    protected void encode(ChannelHandlerContext channelHandlerContext, MessageProtocotcp messageProtocotcp, ByteBuf byteBuf) throws Exception {
        System.out.println("MyMessageEncoder encode method called");
        byteBuf.writeInt(messageProtocotcp.getLen());       // length field
        byteBuf.writeBytes(messageProtocotcp.getContent()); // message body
    }
}
```

Decoder

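- MyMessageDecoder.java: readInt() reads the 4-byte length field first. Because the class extends ReplayingDecoder, if not enough bytes have arrived yet, decode() is simply replayed once more data comes in.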

```java
package java_netty.protocoltcp;

import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelHandlerContext;
import io.netty.handler.codec.ReplayingDecoder;
import java.util.List;

// Decoder: [4-byte length][content bytes] -> MessageProtocotcp
public class MyMessageDecoder extends ReplayingDecoder<Void> {
    @Override
    protected void decode(ChannelHandlerContext channelHandlerContext, ByteBuf byteBuf, List<Object> list) throws Exception {
        System.out.println("MyMessageDecoder decode called");
        // Turn the binary bytes into a MessageProtocotcp object.
        // If fewer bytes than needed have arrived, ReplayingDecoder aborts this call
        // and replays decode() when more data comes in
        int length = byteBuf.readInt();
        byte[] content = new byte[length];
        byteBuf.readBytes(content);
        // Wrap the data in a MessageProtocotcp and add it to the list,
        // which passes it on to the next handler for business processing
        MessageProtocotcp messageProtocotcp = new MessageProtocotcp();
        messageProtocotcp.setLen(length);
        messageProtocotcp.setContent(content);
        list.add(messageProtocotcp);
    }
}
```
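To see what the encoder and decoder above accomplish without Netty, here is a plain-JDK sketch of the same length-prefixed format (a 4-byte int length followed by the content bytes). It is an illustration, not the article's code: the class LengthPrefixedCodec and its methods are hypothetical, and the 7-byte chunking in main is an arbitrary stand-in for TCP splitting the stream. The decoder buffers incoming bytes and only emits a message once all of its bytes have arrived, which is roughly what ReplayingDecoder achieves by replaying decode().

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LengthPrefixedCodec {

    // Encoder: [4-byte big-endian length][content bytes], the same layout that
    // writeInt + writeBytes produce in MyMessageEncoder
    public static byte[] encode(String message) {
        byte[] content = message.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + content.length)
                .putInt(content.length)
                .put(content)
                .array();
    }

    // Bytes received so far that do not yet form a complete frame
    private ByteBuffer pending = ByteBuffer.allocate(0);

    // Decoder: feed one arbitrary chunk of the stream, get back every message it completes
    public List<String> decode(byte[] chunk) {
        ByteBuffer merged = ByteBuffer.allocate(pending.remaining() + chunk.length);
        merged.put(pending).put(chunk);
        merged.flip();

        List<String> messages = new ArrayList<>();
        while (merged.remaining() >= 4) {      // enough bytes for the length field?
            merged.mark();
            int length = merged.getInt();
            if (length < 0) {
                throw new IllegalStateException("corrupt frame, negative length: " + length);
            }
            if (merged.remaining() < length) { // body incomplete: rewind and wait for more
                merged.reset();
                break;
            }
            byte[] content = new byte[length];
            merged.get(content);
            messages.add(new String(content, StandardCharsets.UTF_8));
        }
        pending = merged.slice();              // keep the unconsumed tail for next time
        return messages;
    }

    public static void main(String[] args) {
        // Sender: five frames written back to back into one stream
        ByteArrayOutputStream stream = new ByteArrayOutputStream();
        for (int i = 0; i < 5; i++) {
            byte[] frame = encode("Hello, server " + i);
            stream.write(frame, 0, frame.length);
        }
        byte[] all = stream.toByteArray();

        // Receiver: the stream arrives in 7-byte chunks that ignore frame boundaries
        LengthPrefixedCodec decoder = new LengthPrefixedCodec();
        for (int pos = 0; pos < all.length; pos += 7) {
            byte[] chunk = Arrays.copyOfRange(all, pos, Math.min(pos + 7, all.length));
            decoder.decode(chunk).forEach(System.out::println); // prints "Hello, server 0" ... "Hello, server 4"
        }
    }
}
```

A production decoder should also reject absurdly large length values so a corrupt or malicious peer cannot make it buffer unbounded data; Netty's built-in LengthFieldBasedFrameDecoder takes a maxFrameLength parameter for this purpose.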

Protocol package

- MessageProtocotcp.java: defines the length and the data sent (usually a byte array)

```java
package java_netty.protocoltcp;

// Protocol package
public class MessageProtocotcp {
    // Length of the content; this is the key to unpacking
    private int len;
    // The data sent, usually a byte array
    private byte[] content;

    public int getLen() {
        return len;
    }

    public void setLen(int len) {
        this.len = len;
    }

    public byte[] getContent() {
        return content;
    }

    public void setContent(byte[] content) {
        this.content = content;
    }
}
```