Usually we only look at the data that comes out of the end of a neural network.

So what does the data look like inside a neural network?

The experiment uses code from https://www.jianshu.com/p/df98fcc832ed

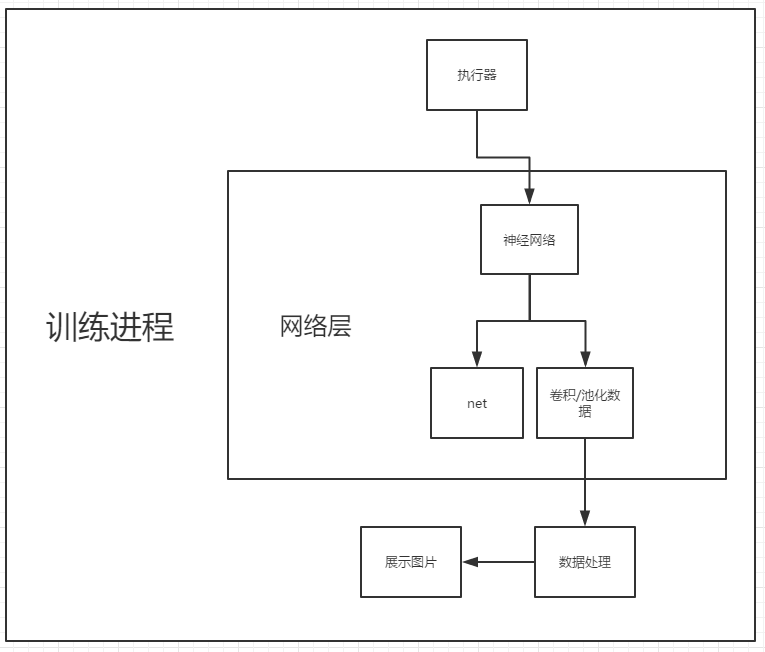

Procedure

1. Modify the structure of the original neural network classifier

```python
import paddle.fluid as fluid


def convolutional_neural_network(img):
    """
    Define a convolutional neural network classifier:
    the input 2-D image passes through two convolution-pooling layers,
    and a fully connected layer with softmax activation is the output layer.

    Return:
        prediction -- classification result
        conv_pool_0 -- output of the first convolution-pooling layer
    """
    # First convolution-pooling layer
    # 20 filters of 5*5, pooling size 2, pooling stride 2, ReLU activation
    conv_pool_0 = fluid.nets.simple_img_conv_pool(
        input=img,
        filter_size=5,
        num_filters=20,
        pool_size=2,
        pool_stride=2,
        act="relu")
    conv_pool_1 = fluid.layers.batch_norm(conv_pool_0)
    # Second convolution-pooling layer
    # 50 filters of 5*5, pooling size 2, pooling stride 2, ReLU activation
    conv_pool_2 = fluid.nets.simple_img_conv_pool(
        input=conv_pool_1,
        filter_size=5,
        num_filters=50,
        pool_size=2,
        pool_stride=2,
        act="relu")
    # Fully connected output layer with softmax activation; the output size must be 10 (one per class)
    prediction = fluid.layers.fc(input=conv_pool_2, size=10, act='softmax')
    return prediction, conv_pool_0
```

The network is again taken from PaddlePaddle Book.

Repository: https://github.com/PaddlePaddle/book

The return value and some variable names have been changed here. Most importantly, the function now also returns `conv_pool_0`, the output of the first convolution-pooling layer.

This pooled-layer data is what we will render as images.
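To anticipate how large each of these images will be, the shape of `conv_pool_0` can be worked out by hand. The sketch below assumes the defaults of `fluid.nets.simple_img_conv_pool` (convolution stride 1, no convolution or pooling padding); the helper `conv_pool_output_shape` is hypothetical and not part of Paddle. For the 1x30x15 grayscale input used in the full code, it gives 20 feature maps of only 13x5 pixels each.

```python
def conv_pool_output_shape(in_shape, num_filters, filter_size, pool_size, pool_stride):
    """Output shape (C, H, W) of a convolution followed by pooling.

    Assumes convolution stride 1 with no padding, and pooling with no padding,
    matching the defaults assumed for fluid.nets.simple_img_conv_pool.
    """
    _, h, w = in_shape
    # A "valid" convolution shrinks each side by filter_size - 1
    h, w = h - filter_size + 1, w - filter_size + 1
    # Pooling without padding: floor((size - pool_size) / stride) + 1
    h = (h - pool_size) // pool_stride + 1
    w = (w - pool_size) // pool_stride + 1
    return (num_filters, h, w)


# First convolution-pooling layer of the network above
print(conv_pool_output_shape((1, 30, 15), num_filters=20, filter_size=5,
                             pool_size=2, pool_stride=2))
```

The height goes 30 -> 26 -> 13 and the width 15 -> 11 -> 5, so each visualized map is a very small image.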

Accordingly, the network is now obtained with `net, conv0 = convolutional_neural_network(x)` instead of `net = convolutional_neural_network(x)`, since the function returns two values. `conv0` is then passed in `fetch_list` during training.

2. Obtaining pooled layer data during training

```python
# Training loop
for batch_id, data in enumerate(batch_reader()):
    outs = exe.run(
        feed=feeder.feed(data),
        fetch_list=[label, avg_cost, conv0])
```

`conv0` is added to `fetch_list=[label, avg_cost, conv0]`, so its value is returned in `outs[2]` (the fetched results come back in the same order as `fetch_list`).
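With the default `return_numpy=True`, `exe.run` returns one NumPy array per `fetch_list` entry, so for this network `outs[2]` is a 4-D array shaped [batch size, 20, 13, 5]. The sketch below makes no Paddle call: it uses a random stand-in array (an assumed batch of 4) just to show what the indices select.

```python
import numpy as np

# Stand-in for outs[2]: assumed batch of 4 images, 20 filters, 13x5 feature maps
conv0_batch = np.random.rand(4, 20, 13, 5).astype(np.float32)

i = 0                          # index of the image within the batch
one_map = conv0_batch[i][0]    # feature map of filter 0 for image i -> shape (13, 5)
all_maps = conv0_batch[i]      # all 20 feature maps for image i -> shape (20, 13, 5)
print(one_map.shape, all_maps.shape)
```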

3. Processing the acquired data

The values in the pooled layer are not confined to 0-255 the way 8-bit RGB pixel values are, so treating the array directly as a picture gives a wrong result.

Instead, stretch the values linearly onto 0-255 so that the outline in the feature map becomes visible when the image is displayed.
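A minimal float-based sketch of this stretch (min-max normalization) is shown below; the function name `stretch_to_uint8` is hypothetical. It returns a `uint8` array that Pillow can display directly, and it maps a constant feature map (for example one that ReLU zeroed out) to all black, since there is no contrast to stretch.

```python
import numpy as np


def stretch_to_uint8(feature_map):
    """Linearly map the values of a 2-D feature map onto 0-255 (min-max normalization)."""
    fmap = np.asarray(feature_map, dtype=np.float64)
    lo, hi = fmap.min(), fmap.max()
    if hi == lo:
        # Constant map: nothing to stretch, return an all-black image
        return np.zeros(fmap.shape, dtype=np.uint8)
    scaled = (fmap - lo) / (hi - lo) * 255.0
    return np.round(scaled).astype(np.uint8)


print(stretch_to_uint8([[0.0, 0.5], [1.0, 2.0]]))
```

Working in floating point avoids the precision lost by scaling and casting to `int` before stretching.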

First, take one feature map and convert it to a NumPy integer array:

```python
# Feature map of filter 0 for image i of the batch, scaled by 255 and converted to int
pic0 = (outs[2][i][0] * 255).astype(int)
```

Next, stretch the values to the full 0-255 range:

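```python
import numpy as np

picMax = np.max(pic0)  # Maximum value
picMin = np.min(pic0)  # Minimum value
# Stretch to 0-255: subtract the minimum and multiply by 255 before the floor division
# (integer division rounds down); max(..., 1) avoids dividing by zero for a constant map
pic1 = (pic0 - picMin) * 255 // max(picMax - picMin, 1)
```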
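Because `//` rounds down, the order of operations decides whether the stretch keeps any gray levels. The toy values below are made up purely for illustration: floor-dividing the raw values by the range first leaves only 0 or 255, while subtracting the minimum and multiplying by 255 first keeps the intermediate level.

```python
import numpy as np

pic0 = np.array([0, 40, 80])            # toy integer activations
picMax, picMin = pic0.max(), pic0.min()

# Divide first: everything below the range floors to 0, so only 0 and 255 survive
divide_first = pic0 // (picMax - picMin) * 255
# Subtract the minimum and multiply first, then floor-divide: gray levels survive
multiply_first = (pic0 - picMin) * 255 // (picMax - picMin)

print(divide_first.tolist(), multiply_first.tolist())
```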

Finally, convert the array into an image object Pillow can handle and display it:

```python
from PIL import Image

# Convert to a PIL image (a 2-D uint8 array becomes a grayscale image)
pic = Image.fromarray(pic1.reshape(pic1.shape[-2], pic1.shape[-1]).astype('uint8'))
# Display the image
pic.show()
```
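Showing one 13x5 map at a time makes comparison hard. One option, sketched below, is to tile all 20 feature maps of an image into a single grid before handing it to Pillow. The helper `tile_feature_maps` is hypothetical, and it assumes each map has already been stretched to `uint8`; the demo uses random stand-in data rather than real network output.

```python
import numpy as np


def tile_feature_maps(maps, cols, pad=1):
    """Arrange a stack of uint8 feature maps (N, H, W) into one 2-D grid image.

    Cells are separated by `pad` black pixels; unused cells stay black.
    """
    n, h, w = maps.shape
    rows = -(-n // cols)  # ceiling division
    grid = np.zeros((rows * (h + pad) - pad, cols * (w + pad) - pad), dtype=np.uint8)
    for k in range(n):
        r, c = divmod(k, cols)
        top, left = r * (h + pad), c * (w + pad)
        grid[top:top + h, left:left + w] = maps[k]
    return grid


# Stand-in data shaped like one image's conv0 output: 20 maps of 13x5
maps = np.random.randint(0, 256, size=(20, 13, 5), dtype=np.uint8)
grid = tile_feature_maps(maps, cols=10)
print(grid.shape)
```

The resulting array can then be passed to `Image.fromarray(grid)` and shown or saved like a single feature map.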

Three images were output as a test.

The result is not as intuitive as you might expect; treat it as a reference only.

Full code

```python
import os

import numpy as np
import paddle
import paddle.fluid as fluid
from PIL import Image

# Dataset root folder (assumption: not defined in the original listing; it must contain data/)
path = "./"

# Output path for the saved inference model
params_dirname = "./test02.inference.model"
print("Folder path after training: " + params_dirname)

# Parameter initialization
place = fluid.CUDAPlace(0)
# place = fluid.CPUPlace()
exe = fluid.Executor(place)

# Load the labels: one character per image
datatype = 'float32'
with open(path + "data/ocrData.txt", 'rt') as f:
    a = f.read()


def dataReader():
    def reader():
        for i in range(1, 1501):
            im = Image.open(path + "data/" + str(i) + ".jpg").convert('L')
            im = np.array(im).reshape(1, 30, 15).astype(np.float32)
            im = im / 255.0
            # Alternative: img = paddle.dataset.image.load_image(path + "data/" + str(i+1) + ".jpg")
            labelInfo = int(a[i - 1])  # the 'label' input is int64, so convert the character
            yield im, labelInfo
    return reader


def testReader():
    def reader():
        for i in range(1501, 1951):
            im = Image.open(path + "data/" + str(i) + ".jpg").convert('L')
            im = np.array(im).reshape(1, 30, 15).astype(np.float32)
            im = im / 255.0  # same normalization as the training reader
            labelInfo = int(a[i - 1])
            yield im, labelInfo
    return reader


# Define network
x = fluid.layers.data(name="x", shape=[1, 30, 15], dtype=datatype)
label = fluid.layers.data(name='label', shape=[1], dtype='int64')


def convolutional_neural_network(img):
    """
    Define a convolutional neural network classifier:
    the input 2-D image passes through two convolution-pooling layers,
    and a fully connected layer with softmax activation is the output layer.

    Return:
        prediction -- classification result
        conv_pool_0 -- output of the first convolution-pooling layer
    """
    # First convolution-pooling layer: 20 filters of 5*5, pooling size 2, stride 2, ReLU
    conv_pool_0 = fluid.nets.simple_img_conv_pool(
        input=img, filter_size=5, num_filters=20,
        pool_size=2, pool_stride=2, act="relu")
    conv_pool_1 = fluid.layers.batch_norm(conv_pool_0)
    # Second convolution-pooling layer: 50 filters of 5*5, pooling size 2, stride 2, ReLU
    conv_pool_2 = fluid.nets.simple_img_conv_pool(
        input=conv_pool_1, filter_size=5, num_filters=50,
        pool_size=2, pool_stride=2, act="relu")
    # Fully connected output layer with softmax activation; the output size must be 10
    prediction = fluid.layers.fc(input=conv_pool_2, size=10, act='softmax')
    return prediction, conv_pool_0


net, conv0 = convolutional_neural_network(x)  # Official CNN

# Define loss function
cost = fluid.layers.cross_entropy(input=net, label=label)
avg_cost = fluid.layers.mean(cost)
acc = fluid.layers.accuracy(input=net, label=label, k=1)

# Clone a test program before adding the optimizer, so testing does not update the weights
test_program = fluid.default_main_program().clone(for_test=True)

# Define optimization method
sgd_optimizer = fluid.optimizer.SGD(learning_rate=0.01)
sgd_optimizer.minimize(avg_cost)

# Data input settings
batch_reader = paddle.batch(reader=dataReader(), batch_size=1024)
testb_reader = paddle.batch(reader=testReader(), batch_size=1024)
feeder = fluid.DataFeeder(place=place, feed_list=[x, label])
prog = fluid.default_startup_program()
exe.run(prog)

trainNum = 30
for i in range(trainNum):
    for batch_id, data in enumerate(batch_reader()):
        outs = exe.run(
            feed=feeder.feed(data),  # feed the image and label data
            fetch_list=[label, avg_cost, conv0])
        # Print the loss (the original logged it with a VisualDL scalar, trainTag, not defined here)
        print(str(i + 1) + " Loss value after training is: " + str(outs[1]))
        if i == 0:  # Output images only during the first epoch
            for ii in range(5):
                # Feature map of filter 0 for image ii of the batch
                pic0 = (outs[2][ii][0] * 255).astype(int)
                picMax = np.max(pic0)  # Maximum
                picMin = np.min(pic0)  # Minimum
                # Stretch to 0-255; multiply before the floor division (integer division rounds down)
                pic1 = (pic0 - picMin) * 255 // max(picMax - picMin, 1)
                # Debug: print(str(picMax) + "-" + str(picMin))
                # Convert the feature map to a PIL image and display it
                pic = Image.fromarray(pic1.reshape(pic1.shape[-2], pic1.shape[-1]).astype('uint8'))
                pic.show()

    test_costs = []
    for batch_id, data in enumerate(testb_reader()):
        test_acc, test_cost = exe.run(
            program=test_program,
            feed=feeder.feed(data),  # feed the image and label data
            fetch_list=[acc, avg_cost])
        test_costs.append(test_cost[0])
    testcost = sum(test_costs) / len(test_costs)
    # The original logged this with a VisualDL scalar, testTag, not defined here
    print("Predicted loss is:", testcost, "\n")

    pross = float(i + 1) / trainNum
    print("The current percentage of training progress is: " + str(int(pross * 100)) + "%")


# Recursively delete every file under a directory (not called in this script)
def del_file(path):
    for name in os.listdir(path):
        path_file = os.path.join(path, name)
        if os.path.isfile(path_file):
            os.remove(path_file)
        else:
            del_file(path_file)


# Save the inference model
fluid.io.save_inference_model(params_dirname, ['x'], [net], exe)
print(params_dirname)
```