1 The problem

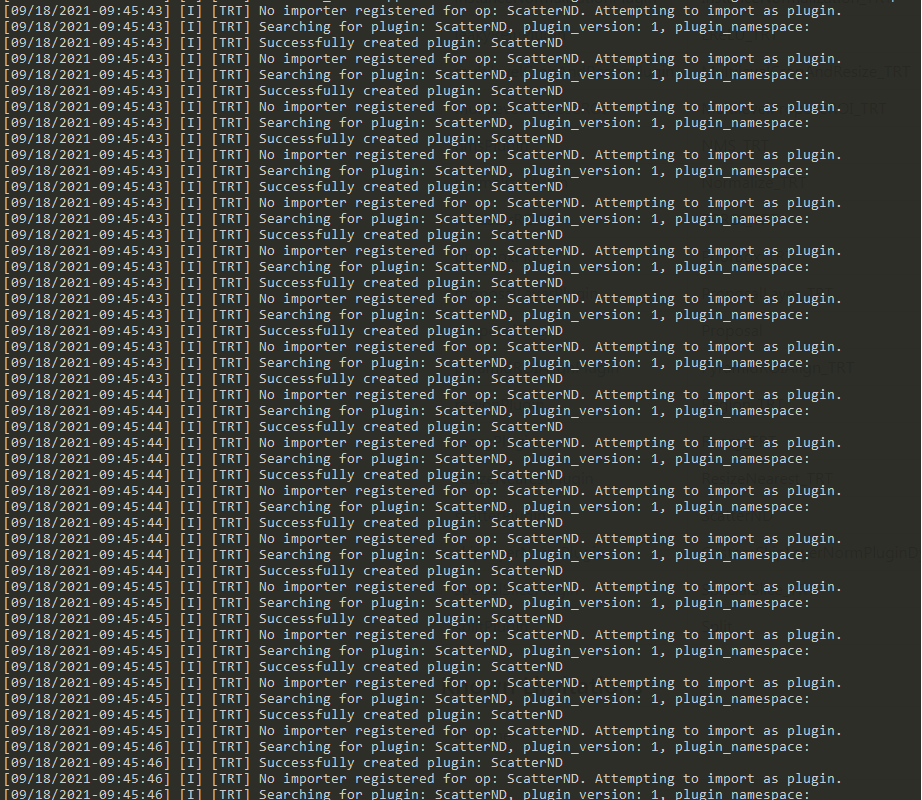

Recently, when I used the trtexec tool bundled with TensorRT 7.2.3.4 to convert a YOLOv3-SPP ONNX model into a TensorRT engine file, the conversion failed because the ScatterND plug-in could not be found. The error was as follows:

ModelImporter.cpp:135: No importer registered for op: ScatterND. Attempting to import as plugin.
builtin_op_importers.cpp:3771: Searching for plugin: ScatterND, plugin_version: 1, plugin_namespace:
INVALID_ARGUMENT: getPluginCreator could not find plugin ScatterND version 1
ERROR: builtin_op_importers.cpp:3773 In function importFallbackPluginImporter:

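In other words, the ONNX parser has no built-in importer for the ScatterND operator, it falls back to looking for a plug-in named ScatterND (version 1), and this TensorRT release does not provide one.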
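When the verbose log is long, it can help to pull the missing plug-in's name and version out of the log automatically. The following is only a minimal sketch: `MissingPlugin` and `findMissingPlugin` are hypothetical helper names, not part of TensorRT, and the pattern assumes the message wording stays the same as in the log lines quoted in this post.

```cpp
#include <regex>
#include <string>

// Name and version of a plug-in that the log reports as missing.
// Hypothetical helper type, not part of TensorRT.
struct MissingPlugin {
    std::string name;
    int version = 0;
};

// Scans one log line for the "could not find plugin" message.
// The optional colons cover both spellings quoted in this post:
//   "could not find plugin ScatterND version 1"
//   "could not find plugin: ScatterND version: 1"
// Returns true and fills `out` when the message is present.
bool findMissingPlugin(const std::string& line, MissingPlugin& out) {
    static const std::regex pattern(
        R"(could not find plugin:?\s+(\w+)\s+version:?\s+(\d+))");
    std::smatch match;
    if (!std::regex_search(line, match, pattern)) {
        return false;
    }
    out.name = match[1].str();
    out.version = std::stoi(match[2].str());
    return true;
}
```

Feeding each line of the trtexec output to `findMissingPlugin` and collecting the hits gives a short list of the plug-ins that need to be supplied.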

2 Solutions

2.1 Solution 1

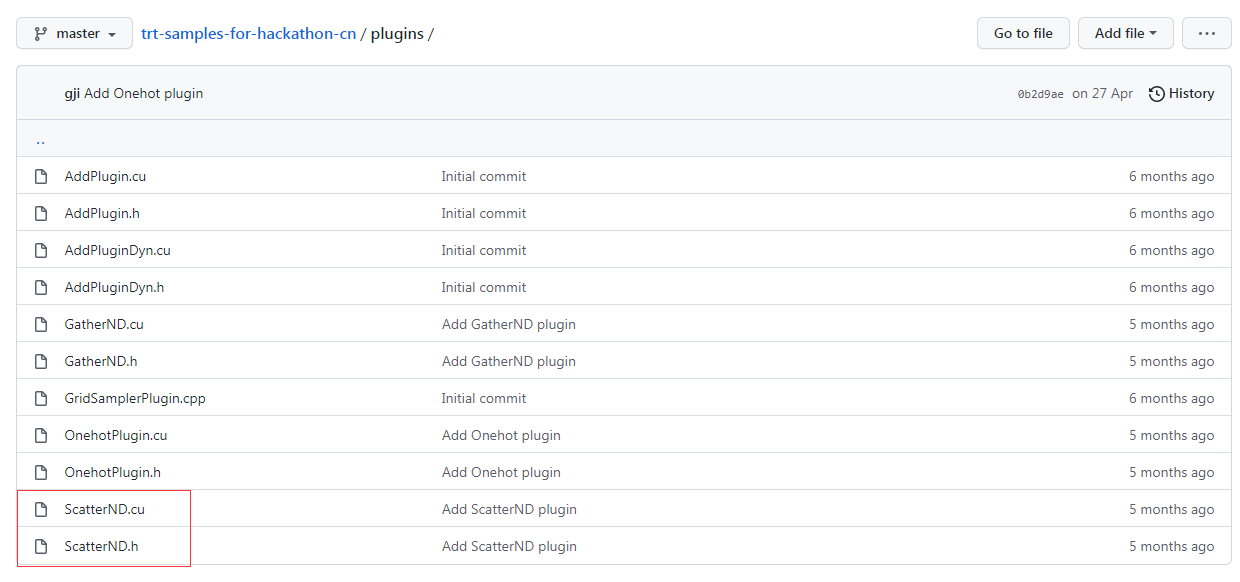

Compile the plug-in yourself: the Plugins directory of https://github.com/NVIDIA/trt-samples-for-hackathon-cn contains a ScatterND plug-in.

Compiling it produces the dynamic library ScatterND.so. When converting the ONNX model to a TensorRT engine, load this plug-in manually on the trtexec command line:

Example command line:

trtexec --onnx=yolov3_spp.onnx --explicitBatch --saveEngine=yolov3_spp.engine --workspace=10240 --fp16 --verbose --plugins=ScatterND.so

This method comes from https://github.com/oreo-lp/AlphaPose_TRT. I did not test it, because building with a Makefile on Windows is troublesome.

2.2 Solution 2

2.2.1 Official documents: searching for pearls in the sea

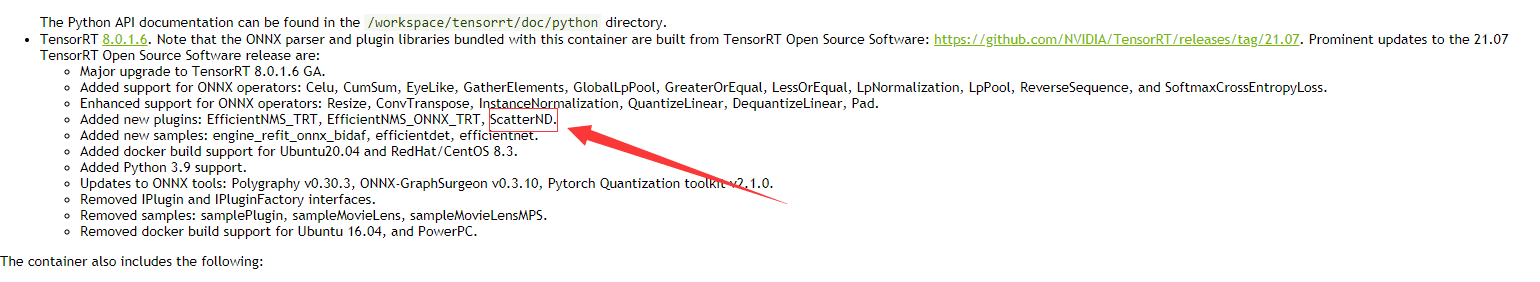

Since the first method was troublesome, I kept looking for a less laborious one. While carefully reading NVIDIA's official documentation, I came across the 21.07 container release notes ( https://docs.nvidia.com/deeplearning/tensorrt/container-release-notes/rel_21-07.html#rel_21-07 ).

The notes for the 21.07 TensorRT container say:

The Python API documentation can be found in the /workspace/tensorrt/doc/python directory.

TensorRT 8.0.1.6. Note that the ONNX parser and plugin libraries bundled with this container are built from TensorRT Open Source Software: https://github.com/NVIDIA/TensorRT/releases/tag/21.07.

Prominent updates to the 21.07 TensorRT Open Source Software release are:

- Major upgrade to TensorRT 8.0.1.6 GA.
- Added support for ONNX operators: Celu, CumSum, EyeLike, GatherElements, GlobalLpPool, GreaterOrEqual, LessOrEqual, LpNormalization, LpPool, ReverseSequence, and SoftmaxCrossEntropyLoss.
- Enhanced support for ONNX operators: Resize, ConvTranspose, InstanceNormalization, QuantizeLinear, DequantizeLinear, Pad.
- Added new plugins: EfficientNMS_TRT, EfficientNMS_ONNX_TRT, ScatterND.
- Added new samples: engine_refit_onnx_bidaf, efficientdet, efficientnet.
- Added docker build support for Ubuntu20.04 and RedHat/CentOS 8.3.
- Added Python 3.9 support.
- Updates to ONNX tools: Polygraphy v0.30.3, ONNX-GraphSurgeon v0.3.10, Pytorch Quantization toolkit v2.1.0.
- Removed IPlugin and IPluginFactory interfaces.
- Removed samples: samplePlugin, sampleMovieLens, sampleMovieLensMPS.
- Removed docker build support for Ubuntu 16.04, and PowerPC.

The key point is that this release is a major upgrade to TensorRT 8.0.1.6 GA and adds three new plug-ins: EfficientNMS_TRT, EfficientNMS_ONNX_TRT, and ScatterND.

Bingo!

This means that with TensorRT 8.0.1.6 or later, ScatterND already ships with TensorRT, so we don't have to compile it ourselves.
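If a build script or tool needs to decide whether a given TensorRT installation already ships ScatterND, the rule above can be written as a version comparison. This is only a sketch: `parseVersion` and `bundlesScatterND` are hypothetical helpers, the version string is assumed to be dotted and numeric such as 8.0.3.4, and the 8.0.1.6 threshold is taken from the release notes quoted above, not verified against every build.

```cpp
#include <array>
#include <cstddef>
#include <sstream>
#include <string>

// Parses a dotted version such as "8.0.3.4" into four numeric fields.
// Missing trailing fields are treated as 0. std::stoi throws if a field
// is not numeric, so callers should pass a clean version string.
std::array<int, 4> parseVersion(const std::string& text) {
    std::array<int, 4> fields{{0, 0, 0, 0}};
    std::istringstream stream(text);
    std::string part;
    for (std::size_t i = 0;
         i < fields.size() && std::getline(stream, part, '.'); ++i) {
        fields[i] = std::stoi(part);
    }
    return fields;
}

// True when `version` is 8.0.1.6 or later, the release whose notes list
// ScatterND among the newly added plug-ins (threshold is an assumption
// based on those notes).
bool bundlesScatterND(const std::string& version) {
    const std::array<int, 4> firstWithScatterND{{8, 0, 1, 6}};
    // std::array compares lexicographically: major, then minor, and so on.
    return parseVersion(version) >= firstWithScatterND;
}
```

With the two versions used in this post, `bundlesScatterND("7.2.3.4")` is false and `bundlesScatterND("8.0.3.4")` is true, which matches what happened in practice.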

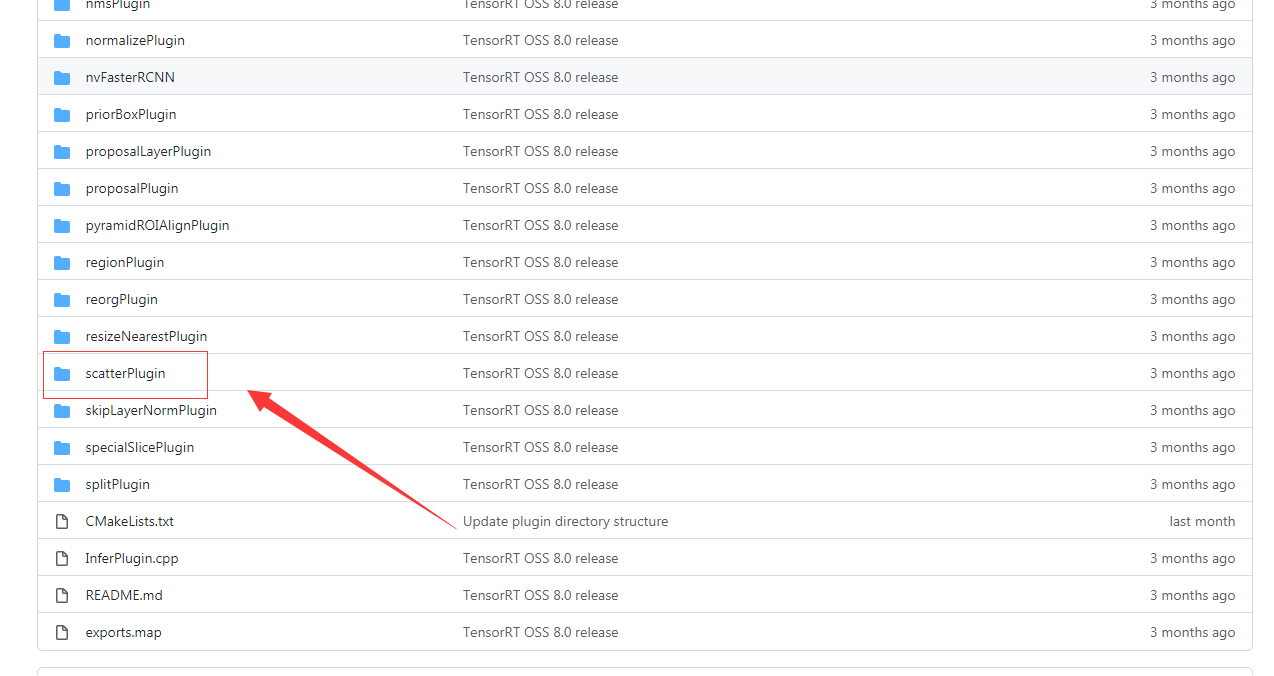

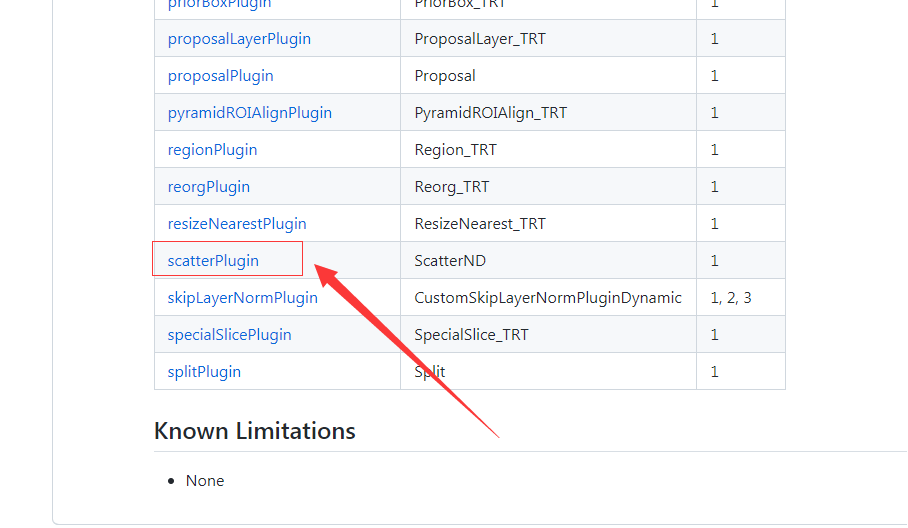

The official TensorRT repository confirms this: the plug-in list at https://github.com/NVIDIA/TensorRT/tree/master/plugin includes ScatterPlugin, and it is the version 1 plug-in we need.

2.2.2 Conversion succeeded

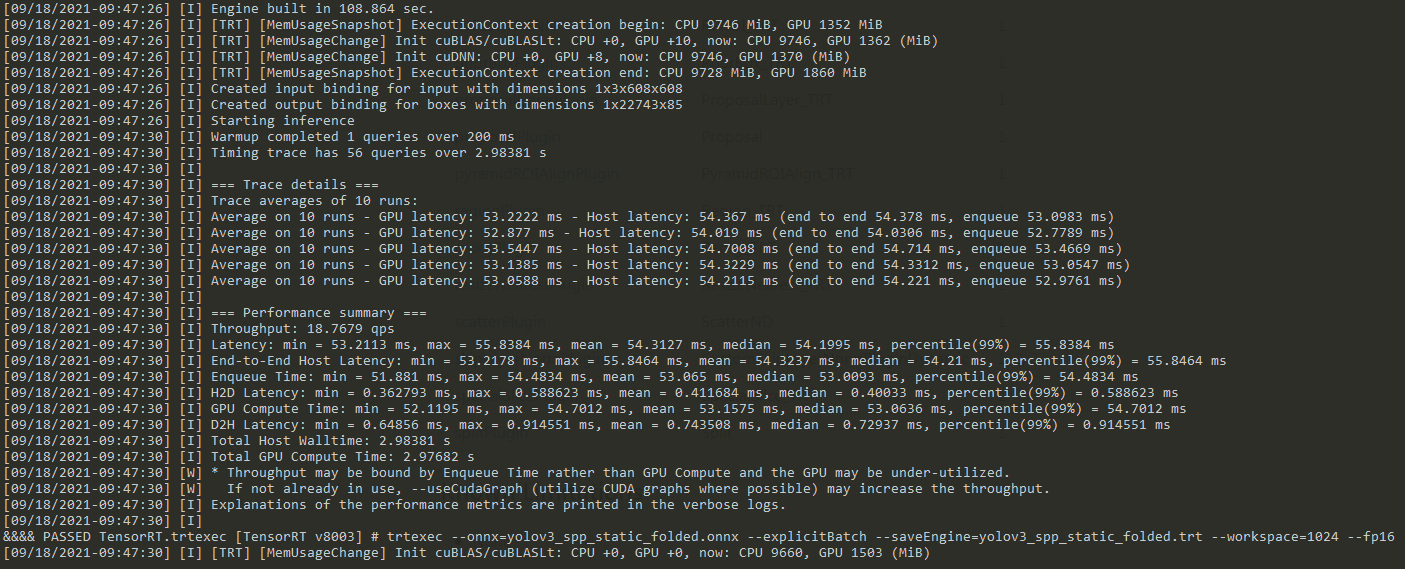

After reading the documentation above, I downloaded the latest release, TensorRT-8.0.3.4, from the official website, replaced the original TensorRT-7.2.3.4 with it, and reran the ONNX model conversion with the trtexec tool from TensorRT-8.0.3.4. The conversion succeeded!

The log shows that the ScatterND plug-in was created successfully.

The conversion takes a while. Here is a screenshot of the successful result:

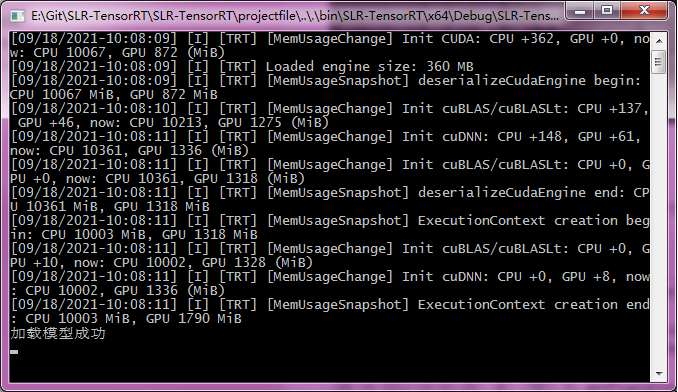

2.2.3 Using the ScatterND plug-in in the C++ API

After the conversion finished, I loaded the engine in a C++ program with the TensorRT C++ API, and this error still appeared:

getPluginCreator could not find plugin: ScatterND version: 1

[09/18/2021-10:02:19] [I] [TRT] [MemUsageChange] Init CUDA: CPU +345, GPU +0, now: CPU 10718, GPU 827 (MiB)
[09/18/2021-10:02:19] [I] [TRT] Loaded engine size: 360 MB
[09/18/2021-10:02:19] [I] [TRT] [MemUsageSnapshot] deserializeCudaEngine begin: CPU 10719 MiB, GPU 827 MiB
[09/18/2021-10:02:20] [E] [TRT] 3: getPluginCreator could not find plugin: ScatterND version: 1
[09/18/2021-10:02:20] [E] [TRT] 1: [pluginV2Runner.cpp::nvinfer1::rt::load::292] Error Code 1: Serialization (Serialization assertion creator failed.Cannot deserialize plugin since corresponding IPluginCreator not found in Plugin Registry)
[09/18/2021-10:02:20] [E] [TRT] 4: [runtime.cpp::nvinfer1::Runtime::deserializeCudaEngine::76] Error Code 4: Internal Error (Engine deserialization failed.)
Failed to load model

This was puzzling. trtexec had converted the model successfully and the log showed the ScatterND plug-in, so why couldn't the plug-in be found when loading the model in C++? Had I missed a step?

After going through the official documentation, I found that a C++ program that uses plug-ins must initialize the plug-in library before it deserializes the engine. The initialization call can be found at line 123 of sampleSSD.cpp in the sampleSSD project of the official TensorRT 8.0.3.4 samples:

initLibNvInferPlugins(&sample::gLogger.getTRTLogger(), "");

After adding this line to my project, before the engine is deserialized, the model loaded successfully.
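Why the order matters can be shown with a toy model. The code below is not TensorRT code and does not call TensorRT. `ToyPluginRegistry`, `toyInitPlugins`, and `toyDeserialize` are hypothetical stand-ins for the plug-in registry, `initLibNvInferPlugins`, and engine deserialization. It only illustrates the behavior described by the error log: deserialization looks up each plug-in by name and version, and fails if nothing has registered it yet.

```cpp
#include <set>
#include <string>
#include <utility>
#include <vector>

using PluginKey = std::pair<std::string, int>;  // (plug-in name, version)

// Toy stand-in for a plug-in registry. NOT TensorRT's implementation.
class ToyPluginRegistry {
public:
    void registerCreator(const std::string& name, int version) {
        creators_.emplace(name, version);
    }
    bool hasCreator(const std::string& name, int version) const {
        return creators_.count(PluginKey(name, version)) > 0;
    }

private:
    std::set<PluginKey> creators_;
};

// Plays the role of initLibNvInferPlugins: registers the bundled plug-ins.
// Only ScatterND is modeled here.
void toyInitPlugins(ToyPluginRegistry& registry) {
    registry.registerCreator("ScatterND", 1);
}

// Plays the role of engine deserialization: every plug-in the engine
// refers to must already have a creator in the registry.
bool toyDeserialize(const ToyPluginRegistry& registry,
                    const std::vector<PluginKey>& pluginsUsedByEngine) {
    for (const PluginKey& key : pluginsUsedByEngine) {
        if (!registry.hasCreator(key.first, key.second)) {
            // Corresponds to "could not find plugin: ScatterND version: 1".
            return false;
        }
    }
    return true;
}
```

In this model, `toyDeserialize` fails on a fresh registry and succeeds once `toyInitPlugins` has run. That mirrors what I saw: trtexec could create the plug-in, but my own program could not until it called the initialization function itself.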

If this post helped you, please give it a like!

If you are interested, visit my website, https://www.stubbornhuang.com/ , for more practical content.