This was a painful lesson. The code itself doesn't take long to write, but the environment setup really wears people down. Here I'll go through the pitfalls I hit and how I got out of them.

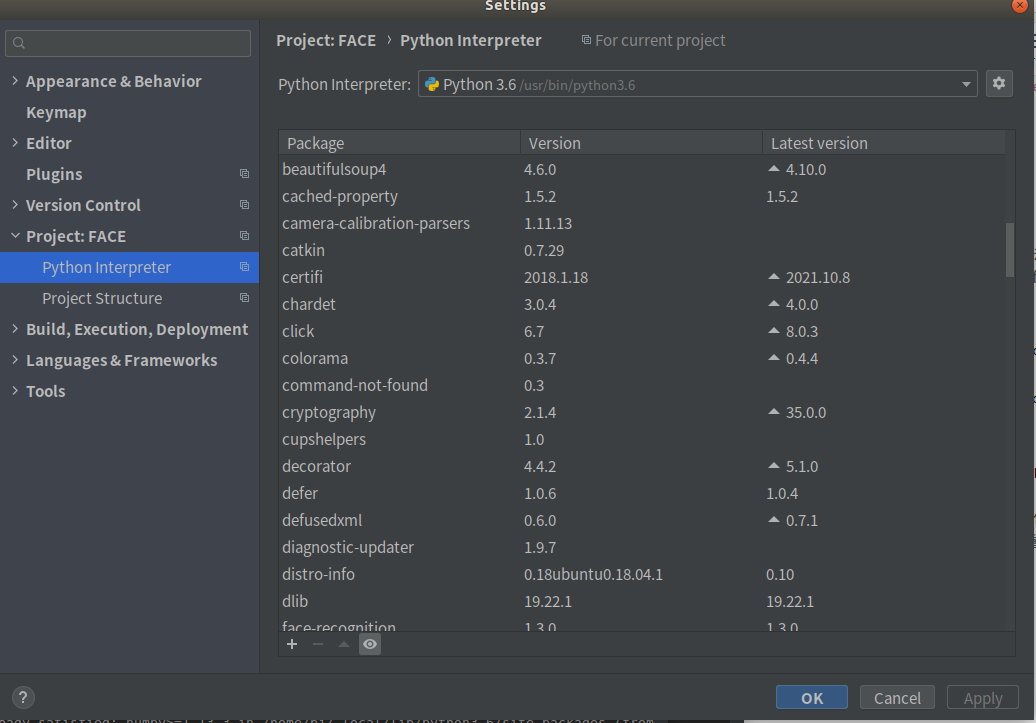

My environment: Ubuntu 18.04 + PyCharm

When I was a freshman, the lab required me to learn TensorFlow, and I took over a project from senior students in which I was responsible for the vision part. I didn't know much at the time, so I downloaded lots of libraries and environments onto my computer. Environments of various versions got intertwined, and folders ended up scattered in random corners. My machine's environment became very messy, and since I had no concept of environment management, I never cleaned any of it up afterwards. That habit is what caused all my trouble this time.

The problem started with one line:

```python
recognizer = cv2.face.LBPHFaceRecognizer_create()
```

No matter what I did, it kept raising this error:

```text
AttributeError: module 'cv2' has no attribute 'face'
```

I searched Chinese and English-language websites and tried every solution I could find. They all boil down to installing the extension package opencv-contrib-python, which is where the `face` module lives:

```shell
pip install opencv-python -i http://pypi.douban.com/simple --trusted-host pypi.douban.com
pip install opencv-contrib-python -i http://pypi.douban.com/simple --trusted-host pypi.douban.com
pip install opencv-contrib-python-headless -i http://pypi.douban.com/simple --trusted-host pypi.douban.com
```
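The commands above install three different pip packages. According to the opencv-python packaging notes, opencv-python, opencv-contrib-python and their -headless variants all ship the same top-level `cv2` module and should not be installed side by side; to get `cv2.face` you need exactly one contrib variant. Below is a small sketch, assuming Python 3.8+ for `importlib.metadata`, that lists which of these packages are present in the current interpreter. The helper names are made up for this example.

```python
# Sketch: list the OpenCV pip distributions installed in this interpreter.
# All of these packages provide the same "cv2" module, so only one should be installed.
from importlib import metadata

OPENCV_DISTS = {
    "opencv-python",
    "opencv-contrib-python",
    "opencv-python-headless",
    "opencv-contrib-python-headless",
}


def opencv_dists(names):
    """Return the OpenCV distribution names found in `names`, normalized and sorted."""
    found = set()
    for name in names:
        normalized = name.lower().replace("_", "-")
        if normalized in OPENCV_DISTS:
            found.add(normalized)
    return sorted(found)


def installed_opencv_dists():
    """Scan every installed distribution of the running interpreter."""
    names = []
    for dist in metadata.distributions():
        name = dist.metadata["Name"]  # may be None for a broken install
        if name:
            names.append(name)
    return opencv_dists(names)


if __name__ == "__main__":
    dists = installed_opencv_dists()
    print("OpenCV packages:", dists or "none")
    if len(dists) > 1:
        print("Warning: more than one OpenCV package is installed; keep only one")
```

If it reports more than one package, uninstall all of them and reinstall only `opencv-contrib-python`.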

In principle the function should be usable once the extension package is installed, but I still got the error. After three or four days of failures, I decided to delete cv2 completely and rebuild the environment. First, I ran:

```shell
pip uninstall opencv-python
pip uninstall opencv-contrib-python
pip uninstall opencv-python-headless
```

Then I checked that they were gone.

I was sure everything had been removed, yet when I hovered over `import cv2` in the code, PyCharm could still navigate to it. That's when I realized a cv2 path still existed in the environment. I followed it to the cv2 source and found that this OpenCV came from the OpenVINO I had installed earlier. After deleting OpenVINO, every copy of OpenCV was finally gone from the environment, and I reinstalled it using the method above.
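Hovering in the IDE is one way to find a stray cv2; a more direct check is to ask Python itself. The sketch below uses only the standard library and reports where `cv2` would be imported from, its version, and whether the `face` module is present. If `origin` points outside your site-packages (for example into another toolkit's install folder), that copy is probably shadowing the pip one. `describe_cv2` is a hypothetical helper name.

```python
# Sketch: find out which "cv2" Python will import and whether it has cv2.face.
import importlib
import importlib.util


def describe_cv2():
    """Return where cv2 would be imported from and whether cv2.face exists."""
    spec = importlib.util.find_spec("cv2")
    if spec is None:
        return {"found": False, "origin": None, "version": None, "has_face": False}
    info = {"found": True, "origin": spec.origin, "version": None, "has_face": False}
    try:
        cv2 = importlib.import_module("cv2")
    except ImportError as exc:  # e.g. a broken or half-removed install
        info["error"] = str(exc)
        return info
    info["origin"] = getattr(cv2, "__file__", spec.origin)
    info["version"] = getattr(cv2, "__version__", None)
    # cv2.face is only available in the contrib builds
    info["has_face"] = hasattr(cv2, "face")
    return info


if __name__ == "__main__":
    for key, value in describe_cv2().items():
        print(f"{key}: {value}")
```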

However, a new problem appeared: functions in the cv2 library don't autocomplete in the editor, although they run normally. I still haven't solved this... If you have a solution, please send me a private message. Thank you very much!

Once the environment was ready, the next step was face recognition. I used two methods.

Method 1: Using the face_recognition library

This recognizes faces from the camera; if a face is not in the face database, you can choose whether to add it.

```python
# -*- coding: utf-8 -*-
import os
import time

import cv2
import face_recognition
import numpy as np

cap = cv2.VideoCapture(0)

new = '/home/bj/PycharmProjects/facefound/drivers'  # folder that stores the known faces' photos

Know_face_encodings = []
Know_face_names = []
face_locations = []
face_names = []
process_this_frame = True


def add_Driver():
    """Take a photo, save it as <name>.jpg and register its face encoding."""
    print("welcome, new driver")
    name = input("please input your name, then look at the camera; a photo is taken after 3s: ")
    time.sleep(3)
    ret, frame = cap.read()
    if not ret:
        print("could not read from the camera")
        return
    cv2.imshow('cap', frame)
    output_path = os.path.join(new, "%s.jpg" % name)
    cv2.imwrite(output_path, frame)
    encodings = face_recognition.face_encodings(face_recognition.load_image_file(output_path))
    if not encodings:  # no face found in the photo
        print("no face detected, please try again")
        return
    Know_face_encodings.append(encodings[0])
    Know_face_names.append(name)


# Load every stored photo and remember its encoding and name (file name without extension)
for filename in os.listdir(new):
    image = face_recognition.load_image_file(os.path.join(new, filename))
    encodings = face_recognition.face_encodings(image)
    if encodings:  # skip photos without a detectable face
        Know_face_encodings.append(encodings[0])
        Know_face_names.append(os.path.splitext(filename)[0])

while True:
    ret, frame = cap.read()
    if not ret:
        break
    flag = cv2.waitKey(1)
    # Shrink the frame to 1/4 size so recognition runs faster
    small_frame = cv2.resize(frame, (0, 0), fx=0.25, fy=0.25)
    # OpenCV uses BGR, face_recognition expects RGB
    rgb_small_frame = cv2.cvtColor(small_frame, cv2.COLOR_BGR2RGB)

    # Only process every other frame to save time
    if process_this_frame:
        # Find all faces and their encodings in the frame
        # (model="cnn" is more accurate but slow without a GPU; "hog" is the default)
        face_locations = face_recognition.face_locations(rgb_small_frame, model="cnn")
        face_encodings = face_recognition.face_encodings(rgb_small_frame, face_locations)
        face_names = []
        for face_encoding in face_encodings:
            name = "Unknown"
            if Know_face_encodings:
                # See whether the face matches a known face and take the closest one
                matchs = face_recognition.compare_faces(Know_face_encodings, face_encoding)
                face_distances = face_recognition.face_distance(Know_face_encodings, face_encoding)
                best_match_index = np.argmin(face_distances)
                if matchs[best_match_index]:
                    name = Know_face_names[best_match_index]
            if name == "Unknown":
                # Not in the face database: ask whether to add this person
                while True:
                    a = input("do you want to join in? (y/n) ")
                    if a == 'y':
                        add_Driver()
                        break
                    if a == 'n':
                        break
            face_names.append(name)
    process_this_frame = not process_this_frame

    # Display the results, scaling the coordinates back up by 4
    for (top, right, bottom, left), name in zip(face_locations, face_names):
        top *= 4
        right *= 4
        bottom *= 4
        left *= 4
        # Draw a box around the face
        cv2.rectangle(frame, (left - 10, top - 100), (right + 10, bottom + 50), (0, 0, 225), 2)
        # Draw a filled label with the name below the face
        cv2.rectangle(frame, (left - 10, bottom + 15), (right + 10, bottom + 50), (0, 0, 255), cv2.FILLED)
        # Set the font
        font = cv2.FONT_HERSHEY_DUPLEX
        cv2.putText(frame, name, (left + 6, bottom + 44), font, 1.0, (255, 255, 255), 1)

    cv2.imshow('cap', frame)
    if flag == 27:  # Esc quits
        break

cap.release()
cv2.destroyAllWindows()
```
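The matching step in the loop (compare_faces plus face_distance, then argmin) can be sketched with NumPy alone, which makes the role of the tolerance easier to see. As far as I know, face_recognition's face_distance is the Euclidean distance between 128-dimensional encodings, and compare_faces counts a distance at or below `tolerance` (default 0.6) as a match. The helper name `best_match` is hypothetical.

```python
# Sketch of face_recognition's matching rule, written with NumPy only.
import numpy as np


def best_match(known_encodings, known_names, encoding, tolerance=0.6):
    """Return (name, distance) of the closest known face, or ("Unknown", distance)."""
    if len(known_encodings) == 0:
        return "Unknown", None
    known = np.asarray(known_encodings, dtype=float)
    # Euclidean distance from the new encoding to every known encoding
    distances = np.linalg.norm(known - np.asarray(encoding, dtype=float), axis=1)
    idx = int(np.argmin(distances))
    if distances[idx] <= tolerance:  # close enough to count as the same person
        return known_names[idx], float(distances[idx])
    return "Unknown", float(distances[idx])
```

Lowering `tolerance` makes matching stricter (fewer false matches, more "Unknown"); raising it does the opposite.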

Method 2: LBPHFaceRecognizer (Local Binary Patterns Histograms) recognition

1. Build a face database by capturing 50 face photos from the current camera as training material
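Roughly, LBPH works like this: each pixel is compared with its 8 neighbours to form an 8-bit code, the code image is split into a grid, each cell is summarized as a histogram of codes, and two faces are compared by the distance between their concatenated histograms; the recognizer's predict() reports that distance as its "confidence". The NumPy sketch below is illustrative only and is not OpenCV's implementation. Radius 1, 8 neighbours and an 8x8 grid mirror the defaults of `cv2.face.LBPHFaceRecognizer_create`, while the chi-square distance and all function names are assumptions made for this example.

```python
# Illustrative LBPH-style descriptor in NumPy (not OpenCV's code).
import numpy as np


def lbp_codes(gray):
    """8-neighbour, radius-1 LBP codes for the interior pixels of a 2-D array."""
    g = np.asarray(gray, dtype=np.int32)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Neighbours clockwise from the top-left; each one contributes one bit
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes


def lbph_descriptor(gray, grid=(8, 8)):
    """Concatenate normalized 256-bin histograms of LBP codes over a grid of cells."""
    codes = lbp_codes(gray)
    hists = []
    for band in np.array_split(codes, grid[0], axis=0):
        for cell in np.array_split(band, grid[1], axis=1):
            hist = np.bincount(cell.ravel(), minlength=256).astype(float)
            hists.append(hist / max(cell.size, 1))
    return np.concatenate(hists)


def chi_square(h1, h2, eps=1e-10):
    """Chi-square distance between two histograms; 0 means identical."""
    return float(np.sum((h1 - h2) ** 2 / (h1 + h2 + eps)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    face_a = rng.integers(0, 256, size=(64, 64))
    face_b = np.clip(face_a + rng.integers(-5, 6, size=face_a.shape), 0, 255)  # slightly noisy copy
    face_c = rng.integers(0, 256, size=(64, 64))  # unrelated image
    print("similar pair:", chi_square(lbph_descriptor(face_a), lbph_descriptor(face_b)))
    print("different pair:", chi_square(lbph_descriptor(face_a), lbph_descriptor(face_c)))
```

Because each cell is summarized by a histogram rather than compared pixel by pixel, small shifts and lighting changes move the descriptor much less than they move the raw pixels.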

```python
# -*- coding: utf-8 -*-
import os

import cv2

cap = cv2.VideoCapture(0)
face_detector = cv2.CascadeClassifier('/home/bj/PycharmProjects/FACE/haarcascade_frontalface_default.xml')

drivers_id = input('write down your name: ')
path = '/home/bj/PycharmProjects/FACE/drivers'
person_dir = os.path.join(path, drivers_id)  # one folder per person
os.makedirs(person_dir, exist_ok=True)

count = 0
while count < 50:
    success, img = cap.read()
    if not success:
        break
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    faces = face_detector.detectMultiScale(gray, 1.3, 5)
    for (x, y, w, h) in faces:
        cv2.rectangle(img, (x, y), (x + w, y + h), (225, 0, 0), 2)
        count += 1
        # Save the grayscale face crop as drivers/<name>/<name><count>.jpg
        cv2.imwrite(os.path.join(person_dir, "%s%d.jpg" % (drivers_id, count)), gray[y:y + h, x:x + w])
    cv2.imshow('image', img)
    if cv2.waitKey(1) == 27:  # Esc stops early
        break

cap.release()
cv2.destroyAllWindows()
```
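If you want to save a crop with some margin around the detected face rather than the tight `gray[y:y+h, x:x+w]`, clamp the expanded box to the frame first. `cv2.rectangle` clips drawing to the image by itself, but NumPy slicing treats a negative start index as counting from the end, so an unclamped negative start silently selects the wrong rows or columns. The helper names and the 20-pixel default margin are hypothetical choices for this sketch.

```python
# Sketch: pad a detected face box by a margin and clamp it to the frame.
import numpy as np


def padded_box(x, y, w, h, margin, frame_w, frame_h):
    """Return (x0, y0, x1, y1) expanded by `margin` pixels and clipped to the frame."""
    x0 = max(x - margin, 0)
    y0 = max(y - margin, 0)
    x1 = min(x + w + margin, frame_w)
    y1 = min(y + h + margin, frame_h)
    return x0, y0, x1, y1


def crop_face(gray, box, margin=20):
    """Crop a face box (x, y, w, h) from a grayscale frame with a clamped margin."""
    frame_h, frame_w = gray.shape[:2]
    x0, y0, x1, y1 = padded_box(*box, margin, frame_w, frame_h)
    return gray[y0:y1, x0:x1]
```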

2. Train the face model

```python
# -*- coding: utf-8 -*-
import os

import cv2
import numpy as np

face_detector = cv2.CascadeClassifier("/home/bj/PycharmProjects/FACE/haarcascade_frontalface_default.xml")


def face_detect(image):
    """Return the first detected face as a grayscale crop plus its (x, y, w, h)."""
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    faces = face_detector.detectMultiScale(gray, 1.2, 6)
    if len(faces) == 0:
        return None, None
    (x, y, w, h) = faces[0]
    return gray[y:y + h, x:x + w], faces[0]


def ReFileName(dirPath):
    """Load every image in dirPath and return the faces found in them."""
    faces = []
    for file in os.listdir(dirPath):
        name = os.path.join(dirPath, file)
        if not os.path.isfile(name):
            continue
        img = cv2.imread(name)
        if img is None:  # not a readable image
            continue
        face, rect = face_detect(img)
        if face is not None:
            faces.append(face)
    return faces


slx = ReFileName(r"drivers/slx")
print(len(slx))
yg = ReFileName(r"drivers/yg")
print(len(yg))

# Label 0 = slx, label 1 = yg (must match facelabel in the recognition script).
# The face crops have different sizes, so keep them in a plain list instead of
# stacking them into one NumPy array; LBPH also does not need shuffled data.
train_data = slx + yg
train_label = np.array([0] * len(slx) + [1] * len(yg), dtype=np.int32)

recognizer = cv2.face.LBPHFaceRecognizer_create()
recognizer.train(train_data, train_label)

# Save the trained model
recognizer.write('train.yml')
```
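The training script hard-codes one block per person. Below is a sketch of a more general loader, assuming the `drivers/<name>/*.jpg` layout created in step 1: each subfolder becomes one label, numbered in sorted order. `collect_dataset` is a hypothetical helper, not part of OpenCV.

```python
# Sketch: derive training labels from the folder layout drivers/<name>/*.jpg.
# Folder names become display names; label i corresponds to names[i].
import os

IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def collect_dataset(root):
    """Return (image_paths, labels, names) for every person folder under root."""
    names = sorted(d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d)))
    paths, labels = [], []
    for label, name in enumerate(names):
        person_dir = os.path.join(root, name)
        for fname in sorted(os.listdir(person_dir)):
            if os.path.splitext(fname)[1].lower() in IMAGE_EXTS:
                paths.append(os.path.join(person_dir, fname))
                labels.append(label)
    return paths, labels, names
```

Each path can then be read with `cv2.imread(path, cv2.IMREAD_GRAYSCALE)` (or passed through `face_detect`), the images given to `recognizer.train` together with `np.array(labels, dtype=np.int32)`, and `names` used in place of a hard-coded name list. With the folders slx and yg, sorting gives slx = 0 and yg = 1, the same labels as in the script above.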

3. Recognize faces from the camera in real time

```python
# -*- coding: utf-8 -*-
import cv2

cap = cv2.VideoCapture(0)
face_detector = cv2.CascadeClassifier("haarcascade_frontalface_default.xml")
facelabel = ["slx", "yg"]  # person names for label 0 and label 1


def face_detect_demo(image):
    """Detect a face; return the grayscale face crop and (x, y, w, h), or (None, None)."""
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    faces = face_detector.detectMultiScale(gray, 1.2, 6)
    if len(faces) == 0:
        return None, None
    # Assume there is only one face: (x, y) is the top-left corner, w and h the width and height
    (x, y, w, h) = faces[0]
    return gray[y:y + h, x:x + w], faces[0]


# Load the model trained in the previous step
recognizer = cv2.face.LBPHFaceRecognizer_create()
recognizer.read('train.yml')


def draw_rectangle(img, rect):
    """Draw a box around the face at rect = (x, y, w, h)."""
    (x, y, w, h) = rect
    cv2.rectangle(img, (x, y), (x + w, y + h), (255, 255, 0), 2)


def draw_text(img, text, x, y):
    """Write the person's name at (x, y)."""
    cv2.putText(img, text, (x, y), cv2.FONT_HERSHEY_COMPLEX, 1, (128, 128, 0), 2)


def predict(img):
    """Recognize the face in img, then draw its box and name onto img."""
    face, rect = face_detect_demo(img)
    if face is None:  # no face in this frame
        return img
    # label[0] is the predicted label; label[1] is a distance, so the LOWER it is, the more reliable
    label = recognizer.predict(face)
    print(label)
    if label[1] <= 70:
        label_text = facelabel[label[0]]
    else:
        label_text = "not found"
    draw_rectangle(img, rect)
    draw_text(img, label_text, rect[0], rect[1])
    return img


while True:
    success, img = cap.read()
    if not success:
        break
    pred_img = predict(img)
    cv2.imshow('result', pred_img)
    if cv2.waitKey(1) == 27:  # Esc quits
        break

cap.release()
cv2.destroyAllWindows()
```
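The accept/reject decision in predict() can be isolated into a tiny function, which makes the threshold easy to tune. `LBPHFaceRecognizer.predict()` returns a `(label, confidence)` pair where the confidence is a distance, so smaller means a closer match. The cut-off of 70 is the value used above and is an empirical choice, not a library default. The function name `decide` is hypothetical.

```python
# Sketch of predict()'s decision rule: accept the label only if the LBPH distance
# is small enough and the label has a known name.
def decide(prediction, names, threshold=70.0, unknown="unknown"):
    """Map an LBPH (label, distance) pair to a display name."""
    label, distance = prediction
    if distance <= threshold and 0 <= label < len(names):
        return names[label]
    return unknown
```

Printing the distances you observe for known and unknown people is the simplest way to pick a threshold for your own camera and lighting.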

With each part working, I combined them. The final code is as follows: