2021SC@SDUSC

In the last blog, we looked at the `RawField` class. Besides `__init__`, it contains two functions: `preprocess(self, x)`, which preprocesses data, and `process(self, batch, *args, **kwargs)`, which processes it.

First, let's look at the preprocess function.

```python
def preprocess(self, x):
    # Apply the user-supplied preprocessing pipeline to one example, if any
    if self.preprocessing is not None:
        return self.preprocessing(x)
    else:
        return x
```

The `preprocess` function preprocesses a single example: if a preprocessing pipeline was supplied, it is applied to the example; otherwise the example is returned unchanged. `self.preprocessing` is an attribute of the `RawField` class that is set in `__init__`, as shown here:

```python
def __init__(self, preprocessing=None, postprocessing=None, is_target=False):
    self.preprocessing = preprocessing
    self.postprocessing = postprocessing
    self.is_target = is_target
```

Next, let's look at the `process` function.

```python
def process(self, batch, *args, **kwargs):
    # Apply the user-supplied postprocessing pipeline to the whole batch, if any
    if self.postprocessing is not None:
        batch = self.postprocessing(batch)
    return batch
```

The `process` function turns a list of examples into a batch and postprocesses it with the user-provided postprocessing pipeline. Its parameter `batch` (list(object)) is a list of objects from a batch of examples. It returns the object produced by passing that input through the custom postprocessing pipeline, or the batch unchanged if no pipeline was given.
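To see the two functions side by side, here is a minimal, self-contained sketch that mirrors the `RawField` logic shown above. `MiniRawField` is a hypothetical stand-in written only for illustration (it does not import torchtext), and `str.lower` and `sorted` are arbitrary example pipelines.

```python
from typing import Any, Callable, List, Optional


class MiniRawField:
    """Hypothetical stand-in mirroring RawField's preprocess/process logic."""

    def __init__(self,
                 preprocessing: Optional[Callable[[Any], Any]] = None,
                 postprocessing: Optional[Callable[..., Any]] = None,
                 is_target: bool = False) -> None:
        self.preprocessing = preprocessing
        self.postprocessing = postprocessing
        self.is_target = is_target

    def preprocess(self, x: Any) -> Any:
        # Called on a single example; identity when no pipeline is given
        if self.preprocessing is not None:
            return self.preprocessing(x)
        return x

    def process(self, batch: List[Any], *args: Any, **kwargs: Any) -> Any:
        # Called on a whole batch (a list of examples)
        if self.postprocessing is not None:
            batch = self.postprocessing(batch)
        return batch


field = MiniRawField(preprocessing=str.lower, postprocessing=sorted)
examples = [field.preprocess(s) for s in ["Banana", "apple", "Cherry"]]
print(examples)                 # ['banana', 'apple', 'cherry']
print(field.process(examples))  # ['apple', 'banana', 'cherry']
```

Because `preprocess` sees one example at a time while `process` receives the whole batch, only the postprocessing step in this sketch can do something that spans examples, such as sorting.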

Having covered the two functions of the `RawField` class, let's look at the `Field` class.

```python
class Field(RawField):
```

This class inherits from `RawField` and defines a datatype together with instructions for converting it to a Tensor. The `Field` class models common text-processing datatypes that can be represented by tensors. It holds a `Vocab` object that defines the set of possible values for elements of the field and their corresponding numerical representations. A `Field` object also holds other parameters describing how the datatype should be numericalized, such as the tokenization method and the kind of Tensor that should be produced. If a field is shared between two columns of a dataset (for example, questions and answers in a QA dataset), the two columns share a vocabulary. That is the general role of the `Field` class; next, I will go through its parameters and functions in detail, starting with the constructor `__init__`:

```python
def __init__(self, sequential=True, use_vocab=True, init_token=None,
             eos_token=None, fix_length=None, dtype=torch.long,
             preprocessing=None, postprocessing=None, lower=False,
             tokenize=None, tokenizer_language='en', include_lengths=False,
             batch_first=False, pad_token="<pad>", unk_token="<unk>",
             pad_first=False, truncate_first=False, stop_words=None,
             is_target=False):
    self.sequential = sequential
    self.use_vocab = use_vocab
    self.init_token = init_token
    self.eos_token = eos_token
    self.unk_token = unk_token
    self.fix_length = fix_length
    self.dtype = dtype
    self.preprocessing = preprocessing
    self.postprocessing = postprocessing
    self.lower = lower
    # store params to construct tokenizer for serialization
    # in case the tokenizer isn't picklable (e.g. spacy)
    self.tokenizer_args = (tokenize, tokenizer_language)
    self.tokenize = get_tokenizer(tokenize, tokenizer_language)
    self.include_lengths = include_lengths
    self.batch_first = batch_first
    self.pad_token = pad_token if self.sequential else None
    self.pad_first = pad_first
    self.truncate_first = truncate_first
    try:
        self.stop_words = set(stop_words) if stop_words is not None else None
    except TypeError:
        raise ValueError("Stop words must be convertible to a set")
    self.is_target = is_target
```

Let's introduce these 19 parameters one by one.

`sequential`: whether the datatype represents sequential data. If False, no tokenization is applied. The default is True.

`use_vocab`: whether to use a `Vocab` object. If False, the data in this field should already be numerical. The default is True.

`init_token`: a token that will be prepended to every example using this field, or None for no initial token. The default is None.

`eos_token`: a token that will be appended to every example using this field, or None for no end-of-sentence token. The default is None.

`fix_length`: a fixed length that all examples using this field will be padded to, or None for flexible sequence lengths. The default is None.

`dtype`: the `torch.dtype` class that represents a batch of examples of this kind of data. The default is `torch.long`.

`preprocessing`: the pipeline applied to examples using this field after tokenizing but before numericalizing. Many datasets replace this attribute with a custom preprocessor. The default is None.

`postprocessing`: a pipeline applied to examples using this field after numericalizing but before the numbers are turned into a Tensor. The pipeline function takes the batch as a list, together with the field's vocab. The default is None.

`lower`: whether to lowercase the text in this field. The default is False.

`tokenize`: the function used to tokenize strings using this field into sequential examples. If it is "spacy", the SpaCy tokenizer is used. If a non-serializable function is passed as an argument, the field cannot be serialized. The default is `string.split`.

`tokenizer_language`: the language of the tokenizer to be constructed. Various languages are currently supported only through SpaCy.

`include_lengths`: whether to return a tuple of a padded minibatch and a list containing the length of each example, or just the padded minibatch. The default is False.

`batch_first`: whether to produce tensors with the batch dimension first. The default is False.

`pad_token`: the string token used as padding. The default is `"<pad>"`.

`unk_token`: the string token used to represent out-of-vocabulary (OOV) words. The default is `"<unk>"`.

`pad_first`: whether to pad the sequence at the beginning. The default is False.

`truncate_first`: whether to truncate the sequence at the beginning. The default is False.

`stop_words`: tokens to discard during the preprocessing step. The default is None.

`is_target`: whether this field is a target variable; this affects iteration over batches. The default is False.
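Several of these parameters only make sense in combination, so the sketch below shows one plausible way they interact. `MiniField` is a hypothetical, simplified class written for illustration, not torchtext's code. It models only `lower`, `stop_words`, `init_token`, `eos_token`, `fix_length`, `pad_token`, `pad_first`, `truncate_first` and `include_lengths`. It also assumes whitespace tokenization, the order tokenize, lowercase, then drop stop words, and that `fix_length` includes the init and eos tokens.

```python
from typing import Iterable, List, Optional, Sequence, Tuple, Union


class MiniField:
    """Hypothetical, simplified field illustrating a few Field parameters.

    Not torchtext's implementation; tokenization is plain str.split().
    """

    def __init__(self, lower: bool = False,
                 stop_words: Optional[Iterable[str]] = None,
                 init_token: Optional[str] = None,
                 eos_token: Optional[str] = None,
                 fix_length: Optional[int] = None,
                 pad_token: str = "<pad>",
                 pad_first: bool = False,
                 truncate_first: bool = False,
                 include_lengths: bool = False) -> None:
        self.lower = lower
        self.stop_words = set(stop_words) if stop_words is not None else None
        self.init_token = init_token
        self.eos_token = eos_token
        self.fix_length = fix_length
        self.pad_token = pad_token
        self.pad_first = pad_first
        self.truncate_first = truncate_first
        self.include_lengths = include_lengths

    def preprocess(self, text: str) -> List[str]:
        tokens = text.split()                      # assumed whitespace tokenizer
        if self.lower:
            tokens = [t.lower() for t in tokens]   # lower=True
        if self.stop_words is not None:            # drop stop words
            tokens = [t for t in tokens if t not in self.stop_words]
        return tokens

    def pad(self, batch: Sequence[List[str]]
            ) -> Union[List[List[str]], Tuple[List[List[str]], List[int]]]:
        # Number of special tokens wrapped around each example
        n_special = int(self.init_token is not None) + int(self.eos_token is not None)
        if self.fix_length is None:
            max_len = max(len(x) for x in batch)            # pad to the longest example
        else:
            max_len = max(0, self.fix_length - n_special)   # room left for real tokens
        padded: List[List[str]] = []
        lengths: List[int] = []
        for x in batch:
            if self.truncate_first:
                body = list(x[max(0, len(x) - max_len):])   # keep the last tokens
            else:
                body = list(x[:max_len])                     # keep the first tokens
            seq = (([self.init_token] if self.init_token is not None else [])
                   + body
                   + ([self.eos_token] if self.eos_token is not None else []))
            pads = [self.pad_token] * (max_len - len(body))
            padded.append(pads + seq if self.pad_first else seq + pads)
            lengths.append(len(seq))                         # length without padding
        if self.include_lengths:
            return padded, lengths
        return padded


field = MiniField(lower=True, stop_words={"the"}, init_token="<sos>",
                  eos_token="<eos>", include_lengths=True)
batch = [field.preprocess("The cat sat down"), field.preprocess("A dog")]
print(field.pad(batch))
# ([['<sos>', 'cat', 'sat', 'down', '<eos>'], ['<sos>', 'a', 'dog', '<eos>', '<pad>']], [5, 4])

fixed = MiniField(fix_length=4, pad_first=True, truncate_first=True)
print(fixed.pad([list("abcdef"), ["x"]]))
# [['c', 'd', 'e', 'f'], ['<pad>', '<pad>', '<pad>', 'x']]
```

In the second call, `truncate_first=True` keeps the last four tokens of the long example, and `pad_first=True` puts the padding in front of the short one.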

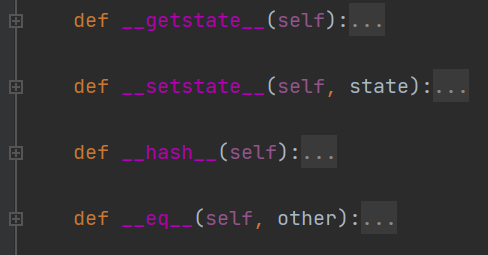

Besides `__init__()`, the `Field` class defines four other special (double-underscore) methods. They are not key code, so they are not covered here.

The next blog will continue with the other key functions of the `Field` class.