Environment information

Hadoop cluster installation and configuration process

You need to deploy the Hadoop cluster before installing HBase

Fully Distributed Cluster (II) Hadoop 2.6.5 Installation and Deployment

HBase Cluster Installation and Deployment

Download hbase-1.2.6.1-bin.tar.gz, upload it to the server with an FTP tool, and extract it

    [root@node222 ~]# ls /home/hadoop/hbase-1.2.6.1-bin.tar.gz
    /home/hadoop/hbase-1.2.6.1-bin.tar.gz
    [root@node222 ~]# gtar -zxf /home/hadoop/hbase-1.2.6.1-bin.tar.gz -C /usr/local/
    [root@node222 ~]# ls /usr/local/hbase-1.2.6.1/
    bin  CHANGES.txt  conf  docs  hbase-webapps  LEGAL  lib  LICENSE.txt  NOTICE.txt  README.txt

Configure HBase

1. Configure hbase-env.sh

    # Remove the comment symbol "#" before the corresponding environment variables
    # and set JAVA_HOME according to the server environment
    export JAVA_HOME=/usr/local/jdk1.8.0_66
    # Disable HBase's built-in ZooKeeper
    export HBASE_MANAGES_ZK=false

2. Create the HBase temporary file directory

    [root@node222 ~]# mkdir -p /usr/local/hbase-1.2.6.1/hbaseData

3. Configure hbase-site.xml, referring to Hadoop's core-site.xml and ZooKeeper's zoo.cfg

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://ns1/user/hbase/hbase_db</value>
        <!-- Directory where HBase stores its data; the machine name and port follow the master node's core-site.xml -->
      </property>
      <property>
        <name>hbase.tmp.dir</name>
        <value>/usr/local/hbase-1.2.6.1/hbaseData</value>
      </property>
      <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
        <!-- Whether this is a distributed deployment -->
      </property>
      <property>
        <name>hbase.zookeeper.quorum</name>
        <value>node222:2181,node224:2181,node225:2181</value>
        <!-- Addresses and ports of the ZooKeeper cluster nodes -->
      </property>
      <property>
        <name>hbase.zookeeper.property.dataDir</name>
        <value>/usr/local/zookeeper-3.4.10/zkdata</value>
        <!-- ZooKeeper storage location for configuration, logs, etc.; see zoo.cfg -->
      </property>
    </configuration>

After the configuration above is complete, and because this is a cluster deployment, copy the Hadoop cluster's core-site.xml and hdfs-site.xml into the hbase/conf directory so that HBase can correctly resolve the HDFS cluster directory. Otherwise the master node starts normally, but HRegionServer fails to start. The error log is as follows:

    2018-10-16 11:40:46,252 ERROR [main] regionserver.HRegionServerCommandLine: Region server exiting
    java.lang.RuntimeException: Failed construction of Regionserver: class org.apache.hadoop.hbase.regionserver.HRegionServer
        at org.apache.hadoop.hbase.regionserver.HRegionServer.constructRegionServer(HRegionServer.java:2682)
        at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.start(HRegionServerCommandLine.java:64)
        at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.run(HRegionServerCommandLine.java:87)
        at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
        at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:126)
        at org.apache.hadoop.hbase.regionserver.HRegionServer.main(HRegionServer.java:2697)
    Caused by: java.lang.reflect.InvocationTargetException
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:422)
        at org.apache.hadoop.hbase.regionserver.HRegionServer.constructRegionServer(HRegionServer.java:2680)
        ... 5 more
    Caused by: java.lang.IllegalArgumentException: java.net.UnknownHostException: ns1
        at org.apache.hadoop.security.SecurityUtil.buildTokenService(SecurityUtil.java:373)
        at org.apache.hadoop.hdfs.NameNodeProxies.createNonHAProxy(NameNodeProxies.java:258)
        at org.apache.hadoop.hdfs.NameNodeProxies.createProxy(NameNodeProxies.java:153)
        at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:602)
        at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:547)
        at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:139)
        at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2591)
        at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:89)
        at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2625)
        at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2607)
        at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:368)
        at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)
        at org.apache.hadoop.hbase.util.FSUtils.getRootDir(FSUtils.java:1003)
        at org.apache.hadoop.hbase.regionserver.HRegionServer.initializeFileSystem(HRegionServer.java:609)
        at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:564)
        ... 10 more
    Caused by: java.net.UnknownHostException: ns1
        ... 25 more
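The copy step that avoids this error can be sketched as a small shell function. This is only a sketch: the function name copy_hdfs_conf is made up for illustration, and the Hadoop configuration directory used in the usage note (etc/hadoop under /usr/local/hadoop-2.6.5) is an assumption based on the standard Hadoop 2.x layout, so adjust it to your installation.

```shell
# Copy the HDFS client configuration into the HBase conf directory so that
# the nameservice used in hbase.rootdir (ns1 here) can be resolved.
# Usage: copy_hdfs_conf <hadoop_conf_dir> <hbase_conf_dir>
copy_hdfs_conf() {
  local src="$1" dst="$2" f
  for f in core-site.xml hdfs-site.xml; do
    # Stop early if one of the two files is missing in the Hadoop conf dir.
    if [ ! -f "$src/$f" ]; then
      echo "missing $src/$f" >&2
      return 1
    fi
    cp "$src/$f" "$dst/$f" || return 1
  done
}
```

On this cluster the call would look like `copy_hdfs_conf /usr/local/hadoop-2.6.5/etc/hadoop /usr/local/hbase-1.2.6.1/conf`, run before the HBase directory is copied to the other nodes.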

Configure the regionservers file with the host names of the nodes that will run HRegionServer

    # node224 and node225 will be used as HRegionServer nodes this time.
    [root@node222 ~]# vi /usr/local/hbase-1.2.6.1/conf/regionservers
    node224
    node225

Configure the backup-master node

There are currently two ways to configure backup-master nodes. One is to skip the backup-masters file entirely: once the cluster configuration is complete, start a backup master directly on a chosen node with local-master-backup.sh.

    # Start directly on the node without configuring the backup-masters file.
    # The number after start is a port offset; it becomes the last digit of the web port.
    [hadoop@node225 ~]$ /usr/local/hbase-1.2.6.1/bin/local-master-backup.sh start 1
    starting master, logging to /usr/local/hbase-1.2.6.1/logs/hbase-hadoop-1-master-node225.out
    [hadoop@node225 ~]$ jps
    2883 JournalNode
    2980 NodeManager
    2792 DataNode
    88175 HMaster
    88239 Jps
    87966 HRegionServer
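To see which ports such a backup master ends up on, the arithmetic can be sketched as below. The helper name backup_master_ports is made up for illustration, and the sketch assumes the HBase 1.x default ports of 16000 (master RPC) and 16010 (master web UI) have not been changed in hbase-site.xml.

```shell
# Print the RPC port and web UI port of a backup master started with
# "local-master-backup.sh start <offset>", assuming the HBase 1.x
# defaults of 16000 and 16010.
backup_master_ports() {
  local offset="$1"
  echo "$((16000 + offset)) $((16010 + offset))"
}

backup_master_ports 1   # prints: 16001 16011
```

With offset 1, the web interface of the backup master on node225 would therefore be expected on port 16011.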

To make it easier to manage multiple master nodes in one place, this time a backup-masters file is added to the $HBASE_HOME/conf directory (the file does not exist by default). The servers that should act as backup-master nodes are listed in this file.

    [root@node222 ~]# vi /usr/local/hbase-1.2.6.1/conf/backup-masters
    node225
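If you prefer not to edit the two files by hand in vi, they can also be written non-interactively. The following is a minimal sketch using the host names from this article; the function name write_node_lists is made up for illustration.

```shell
# Write the regionservers and backup-masters files, one host name per line,
# into the given HBase conf directory.
write_node_lists() {
  local conf_dir="$1"
  printf '%s\n' node224 node225 > "$conf_dir/regionservers"
  printf '%s\n' node225 > "$conf_dir/backup-masters"
}
```

For this deployment it would be called as `write_node_lists /usr/local/hbase-1.2.6.1/conf`. Note that it overwrites any existing content of both files.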

When the above configuration is complete, copy the HBase directory to the other two nodes

    [root@node222 ~]# scp -r /usr/local/hbase-1.2.6.1 root@node224:/usr/local
    [root@node222 ~]# scp -r /usr/local/hbase-1.2.6.1 root@node225:/usr/local

Configure the environment variables on all three nodes

    vi /etc/profile
    # Add the following
    export HBASE_HOME=/usr/local/hbase-1.2.6.1
    export PATH=$HBASE_HOME/bin:$PATH
    # Make the configuration take effect
    source /etc/profile
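Because this step is repeated on three nodes, it may be convenient to script it so that running it twice does not add duplicate lines. A minimal sketch, where the function name add_hbase_env is made up for illustration:

```shell
# Append the HBase environment variables to a profile file, but only if
# HBASE_HOME has not been exported there already.
add_hbase_env() {
  local profile="$1" hbase_home="$2"
  if grep -q '^export HBASE_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  {
    echo "export HBASE_HOME=$hbase_home"
    # Single quotes keep $HBASE_HOME and $PATH literal in the profile.
    echo 'export PATH=$HBASE_HOME/bin:$PATH'
  } >> "$profile"
}
```

Run as root it would be called as `add_hbase_env /etc/profile /usr/local/hbase-1.2.6.1`, followed by `source /etc/profile` as above.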

Grant ownership of the HBase directory to the hadoop user (because the password-free login configured for the Hadoop cluster uses the hadoop user). Run this on all three nodes.

    [root@node222 ~]# chown -R hadoop:hadoop /usr/local/hbase-1.2.6.1
    [root@node224 ~]# chown -R hadoop:hadoop /usr/local/hbase-1.2.6.1
    [root@node225 ~]# chown -R hadoop:hadoop /usr/local/hbase-1.2.6.1
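The copy and ownership steps for the remote nodes can also be driven from node222 in a loop. The sketch below is an assumption-laden illustration: the names run and distribute_hbase are made up, it assumes root can reach the other nodes over ssh (as the scp commands above already do), and by default it only prints the commands instead of executing them.

```shell
# Print the command when DRY_RUN is 1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Copy the HBase directory to each remote node and hand it to the hadoop user.
# Usage: distribute_hbase <hbase_dir> <node>...
distribute_hbase() {
  local hbase_dir="$1" node
  shift
  for node in "$@"; do
    run scp -r "$hbase_dir" "root@$node:/usr/local"
    run ssh "root@$node" chown -R hadoop:hadoop "$hbase_dir"
  done
}
```

Calling `distribute_hbase /usr/local/hbase-1.2.6.1 node224 node225` prints the four commands; setting `DRY_RUN=0` first would execute them. The chown on node222 itself still has to be run locally.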

Start HBase on the primary node and check that the HBase service processes have started on each node

    [hadoop@node222 ~]$ /usr/local/hbase-1.2.6.1/bin/start-hbase.sh
    starting master, logging to /usr/local/hbase-1.2.6.1/logs/hbase-hadoop-master-node222.out
    Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0
    Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
    node224: starting regionserver, logging to /usr/local/hbase-1.2.6.1/bin/../logs/hbase-hadoop-regionserver-node224.out
    node225: starting regionserver, logging to /usr/local/hbase-1.2.6.1/bin/../logs/hbase-hadoop-regionserver-node225.out
    node224: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0
    node224: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
    node225: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0
    node225: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
    node225: starting master, logging to /usr/local/hbase-1.2.6.1/bin/../logs/hbase-hadoop-master-node225.out
    node225: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0
    node225: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0
    [hadoop@node222 ~]$ jps
    4037 NameNode
    4597 NodeManager
    4327 JournalNode
    129974 HMaster
    4936 DFSZKFailoverController
    4140 DataNode
    4495 ResourceManager
    130206 Jps
    [hadoop@node224 ~]$ jps
    3555 JournalNode
    3683 NodeManager
    3907 DFSZKFailoverController
    116853 HRegionServer
    3464 DataNode
    45724 ResourceManager
    3391 NameNode
    117006 Jps
    [hadoop@node225 ~]$ jps
    88449 HRegionServer
    88787 Jps
    2883 JournalNode
    2980 NodeManager
    88516 HMaster
    2792 DataNode

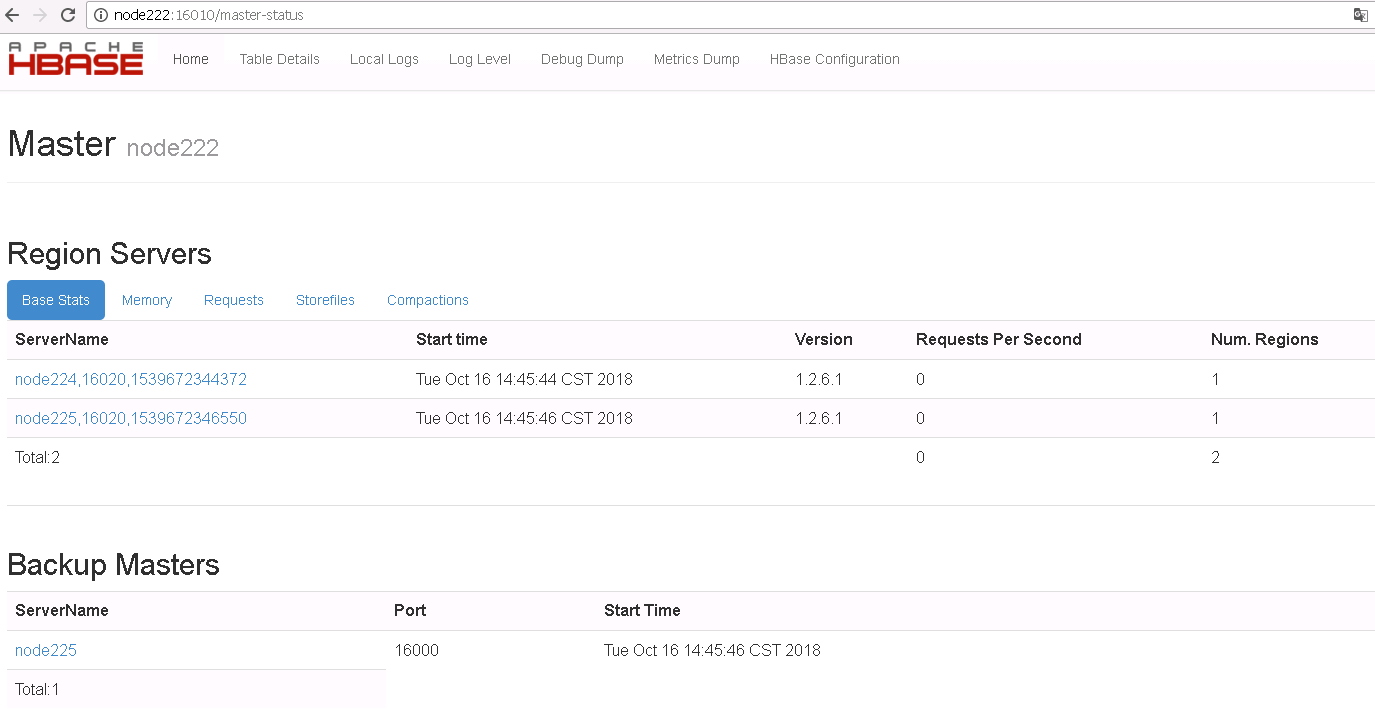

View the status of the current cluster through the hbase shell, 1 active master, 1 backup masters, 2 servers

    [hadoop@node222 ~]$ /usr/local/hbase-1.2.6.1/bin/hbase shell
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/usr/local/hbase-1.2.6.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    HBase Shell; enter 'help<RETURN>' for list of supported commands.
    Type "exit<RETURN>" to leave the HBase Shell
    Version 1.2.6.1, rUnknown, Sun Jun 3 23:19:26 CDT 2018

    hbase(main):001:0> status
    1 active master, 1 backup masters, 2 servers, 0 dead, 1.0000 average load
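If you want to check this status line from a script rather than by eye, it can be parsed with sed. The sketch below only works on a line in exactly the format printed above; the function name check_status_line is made up for illustration.

```shell
# Succeed when a status line such as
#   1 active master, 1 backup masters, 2 servers, 0 dead, 1.0000 average load
# reports the expected number of region servers and no dead servers.
check_status_line() {
  local line="$1" expected_servers="$2" servers dead
  servers=$(echo "$line" | sed -n 's/.*, \([0-9][0-9]*\) servers,.*/\1/p')
  dead=$(echo "$line" | sed -n 's/.*, \([0-9][0-9]*\) dead,.*/\1/p')
  [ "$servers" = "$expected_servers" ] && [ "$dead" = "0" ]
}
```

For this cluster the expected number of servers is 2. The line itself could be captured, for example, by piping `status` into the hbase shell and filtering for the text "average load".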

Viewing the status of the cluster through the web interface

Problems this configuration may run into in a cluster

Many guides on the Internet set hbase.rootdir in hbase-site.xml to the machine name of the master node. This usually works, but when the active and standby NameNodes of the Hadoop cluster's HDFS switch over, HBase can no longer start normally, and neither can HRegionServer. The error message is shown below. For the fix, refer to the configuration in this article: hbase.rootdir points to the nameservice ns1, and core-site.xml and hdfs-site.xml are copied into the hbase/conf directory.

activeMasterManager] master.HMaster: Failed to become active master
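A quick way to catch this misconfiguration before starting HBase is to check that the host part of hbase.rootdir is an HDFS nameservice rather than a single machine name. The sketch below is a rough illustration: the function names get_prop and rootdir_uses_nameservice are made up, the XML is parsed with sed rather than a real XML parser, and it assumes the nameservice is declared through the dfs.nameservices property in hdfs-site.xml, which this article does not show.

```shell
# Print the value of a property from a Hadoop-style XML file.
# Assumes <value> directly follows <name>, separated only by whitespace.
get_prop() {
  local file="$1" name="$2"
  tr -d '\n' < "$file" |
    sed -n "s|.*<name>$name</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# Succeed when the authority in hbase.rootdir (ns1 in hdfs://ns1/...) is one
# of the comma-separated nameservices listed in dfs.nameservices.
rootdir_uses_nameservice() {
  local hbase_site="$1" hdfs_site="$2" rootdir authority services
  rootdir=$(get_prop "$hbase_site" hbase.rootdir)
  authority=$(echo "$rootdir" | sed -n 's|^hdfs://\([^/]*\)/.*|\1|p')
  services=$(get_prop "$hdfs_site" dfs.nameservices)
  [ -n "$authority" ] || return 1
  case ",$services," in
    *",$authority,"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

With the files from this article it would be called as `rootdir_uses_nameservice /usr/local/hbase-1.2.6.1/conf/hbase-site.xml /usr/local/hbase-1.2.6.1/conf/hdfs-site.xml`. A rootdir such as hdfs://node222:9000/... would make it fail, which is the situation described above.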