Kaggle hosts an image classification contest, Digit Recognizer, whose data set is the well-known MNIST. Each picture is a segmented 28×28 grayscale image: the handwritten digit pixels have gray levels in the range 0-255, and the background is 0.

from keras.datasets import mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train[0] # .shape = 28*28

"""

[[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0]

...

[ 0 0 0 0 0 0 0 0 0 0 0 0 3 18 18 18 126 136

175 26 166 255 247 127 0 0 0 0]

[ 0 0 0 0 0 0 0 0 30 36 94 154 170 253 253 253 253 253

225 172 253 242 195 64 0 0 0 0]

...

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0]]

"""

The handwritten digit images look like this:

import matplotlib.pyplot as plt

plt.subplot(1, 3, 1)

plt.imshow(x_train[0], cmap='gray')

plt.subplot(1, 3, 2)

plt.imshow(x_train[1], cmap='gray')

plt.subplot(1, 3, 3)

plt.imshow(x_train[2], cmap='gray')

plt.show()

Handwritten digit recognition can be treated as an image classification problem: classifying grayscale images stored as two-dimensional arrays.

2. Recognition

Rodrigo Benenson has compiled the error rates of 50 methods on MNIST. This article moves from traditional methods to deep learning and compares their accuracy. The code below is based on Python 3.6 + sklearn 0.18.1 + keras 2.0.4.

Traditional methods

kNN

The idea is simple: flatten each two-dimensional array into a one-dimensional vector and judge the similarity between vectors with a distance measure. This approach does no feature extraction, so it loses the most important information in the image, the spatial relationship between adjacent pixels. Even so, kNN performs well on a relatively clean data set like MNIST: the score is 96.927% (computed below as macro-averaged precision). In addition, the kNN model is slow; most of the cost is at prediction time, when each test image is compared against the training images.
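The flatten-then-compare idea can be sketched with NumPy alone. This is a minimal illustration, not the sklearn implementation: the function `knn_predict`, the toy 2×2 "images", and the choice of `k=3` are all assumptions made for the example (sklearn's `KNeighborsClassifier` defaults to 5 neighbors with Euclidean distance).

```python
import numpy as np

def knn_predict(train_x, train_y, query, k=3):
    """Classify one flattened image by majority vote among its k nearest neighbours."""
    # Euclidean distance from the query to every training vector
    dists = np.sqrt(((train_x - query) ** 2).sum(axis=1))
    nearest = np.argsort(dists)[:k]          # indices of the k closest samples
    votes = np.bincount(train_y[nearest])    # count the labels among them
    return int(votes.argmax())

# Toy 2x2 "images" (hypothetical data, not MNIST): dark ones are class 0, bright ones class 1
images = np.array([
    [[0, 0], [0, 0]],
    [[0, 10], [0, 0]],
    [[255, 255], [255, 255]],
    [[250, 255], [240, 255]],
], dtype=np.float32)
labels = np.array([0, 0, 1, 1])

# Flatten every 2-D image into a 1-D vector: (4, 2, 2) -> (4, 4)
flat = images.reshape(len(images), -1)

query = np.array([[245, 250], [250, 250]], dtype=np.float32).reshape(-1)
prediction = knn_predict(flat, labels, query, k=3)
print(prediction)
```

Note that the flattened vector has no notion of which pixels were neighbors in the image, which is the information loss described above.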

from sklearn import neighbors

from sklearn.metrics import precision_score

num_pixels = x_train[0].shape[0] * x_train[0].shape[1]

x_train = x_train.reshape((x_train.shape[0], num_pixels))

x_test = x_test.reshape((x_test.shape[0], num_pixels))

knn = neighbors.KNeighborsClassifier()

knn.fit(x_train, y_train)

pred = knn.predict(x_test)

precision_score(y_test, pred, average='macro') # 0.96927533865705706

MLP

An MLP (multilayer perceptron) is used here as a three-layer feed-forward neural network. It takes the same features as the kNN method: the gray value of each pixel feeds one neuron in the input layer. The hidden layer has 700 neurons (generally a number between the sizes of the input and output layers). The number of iterations is set to 100 epochs. sklearn's MLPClassifier implements MLP classification; the code below is a Keras-based implementation instead. Without careful tuning, the accuracy is about 98.530%.
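To make the layer sizes concrete, here is a rough NumPy sketch of the forward pass of such a network (784 inputs, 700 ReLU hidden units, 10 softmax outputs). The weights are random and untrained, the standard deviation of 0.05 is an arbitrary choice, and the input batch is random noise standing in for normalized images, so the outputs are meaningless as predictions; the point is only the shapes and the parameter count.

```python
import numpy as np

rng = np.random.default_rng(0)
num_pixels, num_hidden, num_classes = 28 * 28, 700, 10

# Randomly initialised, untrained weights (illustration only)
w1 = rng.normal(0, 0.05, size=(num_pixels, num_hidden))
b1 = np.zeros(num_hidden)
w2 = rng.normal(0, 0.05, size=(num_hidden, num_classes))
b2 = np.zeros(num_classes)

def forward(x):
    """Input layer -> ReLU hidden layer -> softmax output layer."""
    hidden = np.maximum(0, x @ w1 + b1)           # ReLU activation
    logits = hidden @ w2 + b2
    logits -= logits.max(axis=1, keepdims=True)   # subtract the row max for numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)   # each row sums to 1

batch = rng.random((5, num_pixels))  # stand-in for 5 flattened images scaled to [0, 1]
probs = forward(batch)

num_params = w1.size + b1.size + w2.size + b2.size
print(probs.shape, num_params)  # (5, 10) 556510
```

The parameter count, 784·700 + 700 + 700·10 + 10 = 556,510, should match the total that `model.summary()` reports for a two-`Dense`-layer model of these sizes.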

from keras.layers import Dense

from keras.models import Sequential

from keras.utils import np_utils

# normalization

num_pixels = 28 * 28

x_train = x_train.reshape(x_train.shape[0], num_pixels).astype('float32') / 255

x_test = x_test.reshape(x_test.shape[0], num_pixels).astype('float32') / 255

# one-hot encode the class labels

y_train = np_utils.to_categorical(y_train)

y_test = np_utils.to_categorical(y_test)

num_classes = y_train.shape[1]

model = Sequential([

Dense(700, input_dim=num_pixels, activation='relu', kernel_initializer='normal'), # hidden layer

Dense(num_classes, activation='softmax', kernel_initializer='normal') # output layer

])

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

model.summary()

model.fit(x_train, y_train, validation_data=(x_test, y_test), epochs=600, batch_size=200, verbose=2)

model.evaluate(x_test, y_test, verbose=0) # [0.10381294689745164, 0.98529999999999995]

Deep learning

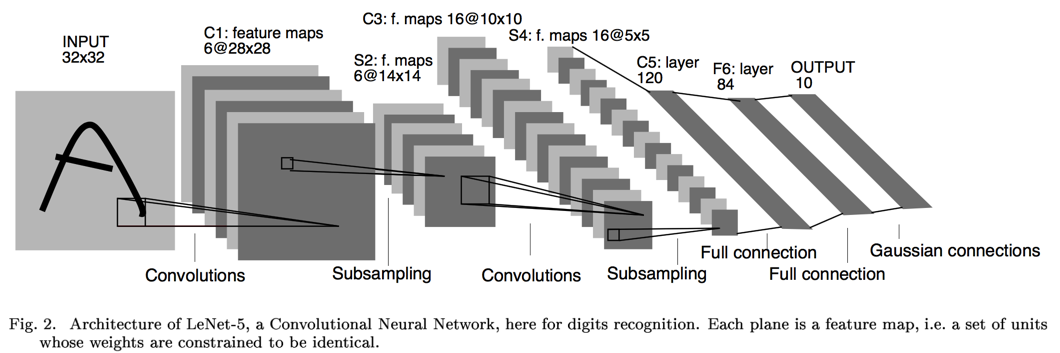

LeCun proposed using CNN (Convolutional Neural Networks) to recognize handwritten digits in his paper [1] published in 1989. Later [2] improved to Lenet-5. Its network structure is shown in the following figure:

Convolution, pooling, convolution, pooling, and then set up two full connection layers, and finally a Guassian connection layer. As we all know, CNN has its own feature extraction function, which does not require deliberate design of feature extractors. Based on keras, Lenet-5 is implemented informally as follows:
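The convolution/pooling sequence is easier to follow when the feature-map sizes are traced by hand. The sketch below assumes the settings used in the Keras code of this section: a 28×28 input, 'valid' convolutions with stride 1, and non-overlapping 2×2 pooling (the original LeNet-5 used a 32×32 input, so its sizes differ). The helper names `conv_valid` and `pool` are made up for this example.

```python
def conv_valid(size, kernel):
    """Output side length of a stride-1 convolution with 'valid' padding."""
    return size - kernel + 1

def pool(size, window):
    """Output side length of non-overlapping pooling."""
    return size // window

size = 28
trace = [size]
for op, arg in [(conv_valid, 5), (pool, 2),   # first conv + pool
                (conv_valid, 5), (pool, 2),   # second conv + pool
                (conv_valid, 1)]:             # 1x1 conv with 120 filters
    size = op(size, arg)
    trace.append(size)

flattened = size * size * 120  # length of the vector passed to the dense layers
print(trace, flattened)  # [28, 24, 12, 8, 4, 4] 1920
```

Under these assumptions the `Flatten` layer yields a 1920-dimensional vector, which can be checked against the output shapes printed by `model.summary()`.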

import keras

from keras.layers import Conv2D, MaxPooling2D

from keras.layers import Dense, Dropout, Flatten, Activation

from keras.models import Sequential

from keras.utils import np_utils

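img_rows, img_cols = 28, 28

# reload the raw data: the arrays used above were already reshaped, normalized and one-hot encoded

(x_train, y_train), (x_test, y_test) = mnist.load_data()

# TensorFlow backend: image_data_format() == 'channels_last'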

x_train = x_train.reshape(x_train.shape[0], img_rows, img_cols, 1).astype('float32') / 255

x_test = x_test.reshape(x_test.shape[0], img_rows, img_cols, 1).astype('float32') / 255

# one-hot encode the class labels

y_train = np_utils.to_categorical(y_train)

y_test = np_utils.to_categorical(y_test)

num_classes = y_train.shape[1]

model = Sequential()

model.add(Conv2D(filters=6, kernel_size=(5, 5), padding='valid', input_shape=(28, 28, 1)))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Activation("sigmoid"))

model.add(Conv2D(16, kernel_size=(5, 5), padding='valid'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Activation("sigmoid"))

model.add(Dropout(0.25))

# full connection

model.add(Conv2D(120, kernel_size=(1, 1), padding='valid'))

model.add(Flatten())

# full connection

model.add(Dense(84, activation='sigmoid'))

model.add(Dense(num_classes, activation='softmax'))

model.compile(loss=keras.losses.categorical_crossentropy,

optimizer=keras.optimizers.SGD(lr=0.08, momentum=0.9),

metrics=['accuracy'])

model.summary()

model.fit(x_train, y_train, batch_size=32, epochs=8,

verbose=1, validation_data=(x_test, y_test))

model.evaluate(x_test, y_test, verbose=0)

Comparing the accuracy of the three methods above gives the following summary:

| Features | Classifier | Accuracy |
|---|---|---|
| gray | kNN | 96.927% |
| gray | 3-layer neural network | 98.530% |
| learned by the CNN | LeNet-5 | 98.640% |
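One caveat when reading these numbers: the kNN figure comes from `precision_score(..., average='macro')`, whereas `model.evaluate` reports accuracy for the two networks. The two metrics are usually close on a balanced data set but are not the same quantity. A small pure-Python sketch of the difference, using made-up labels (the helper functions are illustrative, not the sklearn implementations):

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_precision(y_true, y_pred):
    """Unweighted mean over classes of (correct predictions of c) / (all predictions of c)."""
    classes = sorted(set(y_true) | set(y_pred))
    per_class = []
    for c in classes:
        truths_where_predicted_c = [t for t, p in zip(y_true, y_pred) if p == c]
        if truths_where_predicted_c:
            hits = sum(t == c for t in truths_where_predicted_c)
            per_class.append(hits / len(truths_where_predicted_c))
        else:
            per_class.append(0.0)  # class never predicted
    return sum(per_class) / len(per_class)

# Hypothetical labels: one sample of class 1 is misclassified as class 0
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1]

acc = accuracy(y_true, y_pred)           # 5 of 6 correct
prec = macro_precision(y_true, y_pred)   # mean of 4/5 and 1/1
print(round(acc, 4), round(prec, 4))  # 0.8333 0.9
```

For a like-for-like comparison, the kNN predictions could be scored with accuracy as well (for example with `sklearn.metrics.accuracy_score`).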

3. References

[1] LeCun, Yann, et al. "Backpropagation applied to handwritten zip code recognition." Neural computation 1.4 (1989): 541-551.

[2] LeCun, Yann, et al. "Gradient-based learning applied to document recognition." Proceedings of the IEEE 86.11 (1998): 2278-2324.

[3] Taylor B. Arnold, Computer vision: LeNet-5, AlexNet, VGG-19, GoogLeNet.