Configuration introduction

In general:

A source receives data.

A sink sends data out.

A channel caches (buffers) data between the source and the sink.
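The division of labour can be illustrated with a toy model in plain Java. This is a sketch, not Flume's API: the class `AgentPipelineSketch`, its `run` method and the `__END__` marker are hypothetical. A bounded `BlockingQueue` stands in for the channel, so the source thread blocks while the channel is full and the sink drains it at its own pace.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Toy model of one agent: a source thread feeds a bounded channel, a sink drains it.
// Hypothetical names; this is not Flume's API.
class AgentPipelineSketch {
    private static final String END = "__END__"; // hypothetical end-of-stream marker for the demo

    static List<String> run(List<String> input, int channelCapacity) {
        // Channel: a bounded buffer, comparable to a memory channel's capacity
        BlockingQueue<String> channel = new ArrayBlockingQueue<>(channelCapacity);

        // Source: receives data and puts it into the channel (blocks while the channel is full)
        Thread source = new Thread(() -> {
            try {
                for (String line : input) {
                    channel.put(line);
                }
                channel.put(END);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        source.start();

        // Sink: takes events from the channel and "sends" them (here it only collects them)
        List<String> delivered = new ArrayList<>();
        try {
            for (String event = channel.take(); !event.equals(END); event = channel.take()) {
                delivered.add(event);
            }
            source.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while draining the channel", e);
        }
        return delivered;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("a", "b", "c"), 2)); // prints [a, b, c]
    }
}
```

The channel is deliberately smaller than the input, so the source has to wait for the sink. That decoupling is the reason Flume puts a channel between the two.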

The following are some related types that will be used later.

1. Source component types (receive data from an external origin)

Netcat Source

Listens on a port and turns each line of text sent by a client into an event; often used for testing and development

Exec Source

Runs a given Unix command and uses the command's output as the data source

Spooling Directory Source

Monitors a specified directory for new files and parses events from each new file as it appears

Kafka Source

Reads data from a Kafka cluster

Sequence Generator Source

A sequence generator: the counter starts at 0 and increases by 1 for each event, up to Long.MAX_VALUE

Avro Source

Accepts data sent by an Avro client, similar to the Netcat Source

Commonly used to chain agents into a Flume cluster, with RPC communication between them

2. Channel component types (buffer data between source and sink)

Memory Channel

Caches Event objects in memory

Advantages: fast

Disadvantages: risk of data loss, because events held in memory are lost if the agent stops or crashes

JDBC Channel

Saves Event objects to a database. Currently only Derby is supported

Advantages: safe (events are persisted)

Disadvantages: low efficiency

File Channel

Saves Event objects to files on local disk

Advantages: safe (events survive an agent restart)

Disadvantages: low efficiency

Kafka Channel

Writes Events to a Kafka cluster

Advantages: high availability, data backup
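Every memory-channel configuration below uses the capacity/transactionCapacity pair. Its effect can be illustrated with a toy model in plain Java. This is a sketch under assumptions, not Flume's MemoryChannel: the class `MemoryChannelSketch` and its methods are hypothetical. The model shows four things:

- A put is all-or-nothing.
- A batch may not exceed transactionCapacity.
- The channel refuses events once capacity is reached.
- A rolled-back take returns its events to the head of the queue.

Everything lives in the JVM heap, so a crash loses the queue. That is the data-loss risk of the memory channel.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Toy model of memory-channel semantics (capacity vs. transactionCapacity).
// Not Flume's MemoryChannel; all names are hypothetical.
class MemoryChannelSketch {
    private final int capacity;            // max events held at once (like ...c1.capacity)
    private final int transactionCapacity; // max events per put/take batch (like ...c1.transactionCapacity)
    private final Deque<String> queue = new ArrayDeque<>();

    MemoryChannelSketch(int capacity, int transactionCapacity) {
        if (transactionCapacity > capacity) {
            throw new IllegalArgumentException("transactionCapacity must not exceed capacity");
        }
        this.capacity = capacity;
        this.transactionCapacity = transactionCapacity;
    }

    /** A put transaction: the whole batch is committed, or nothing is. */
    boolean putBatch(List<String> batch) {
        if (batch.size() > transactionCapacity) {
            return false; // batch too large for one transaction
        }
        if (queue.size() + batch.size() > capacity) {
            return false; // channel full: the source has to retry later
        }
        queue.addAll(batch);
        return true;
    }

    /** A take transaction: removes up to transactionCapacity events. */
    List<String> takeBatch() {
        List<String> taken = new ArrayList<>();
        while (!queue.isEmpty() && taken.size() < transactionCapacity) {
            taken.add(queue.pollFirst());
        }
        return taken;
    }

    /** Rolling back a take puts the events back at the head, in their original order. */
    void rollbackTake(List<String> taken) {
        for (int i = taken.size() - 1; i >= 0; i--) {
            queue.addFirst(taken.get(i));
        }
    }

    int size() {
        return queue.size();
    }
}
```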

3. Sink component types (write data out to files, to other hosts, or to other systems)

Logger Sink

Writes the collected data to the log (at INFO level), which is handy for testing

HDFS Sink

Writes the collected data to the HDFS distributed file system. The file type is set with hdfs.fileType: SequenceFile (the default), DataStream (plain data) or CompressedStream

Note: with the DataStream file type the data is written as-is, without being wrapped in a SequenceFile or compressed

Output directories can be bucketed by time, for example one new directory every ten minutes. To do this, put time escape sequences in hdfs.path and set hdfs.round = true, hdfs.roundValue = 10 and hdfs.roundUnit = minute

File Roll Sink

Writes the collected data to the local file system, rolling over to a new file periodically

Null Sink

Discards all collected data

HBase Sinks

Writes the collected data to the HBase non-relational database
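The ten-minute bucketing can be sketched in plain Java. With rounding enabled, the event time is rounded down to the previous multiple of roundValue minutes before the time escapes in hdfs.path are filled in. The sketch makes these assumptions:

- The class `HdfsBucketSketch` is hypothetical.
- The layout `/flume/logs/yyyyMMdd/HHmm` stands in for an hdfs.path such as `/flume/logs/%Y%m%d/%H%M`.
- The event time is taken as given. In Flume it comes from the event's timestamp header (for example from the timestamp interceptor) or from the local clock when hdfs.useLocalTimeStamp = true.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Sketch of time-bucketed output directories, in the spirit of
// hdfs.round = true, hdfs.roundValue = 10, hdfs.roundUnit = minute.
// The path layout is a hypothetical example.
class HdfsBucketSketch {
    private static final DateTimeFormatter LAYOUT = DateTimeFormatter.ofPattern("yyyyMMdd/HHmm");

    static String bucketPath(LocalDateTime eventTime, int roundMinutes) {
        // Round the minute down to the previous multiple of roundMinutes
        int minute = eventTime.getMinute() - (eventTime.getMinute() % roundMinutes);
        LocalDateTime bucket = eventTime.withMinute(minute).withSecond(0).withNano(0);
        return "/flume/logs/" + bucket.format(LAYOUT);
    }

    public static void main(String[] args) {
        // prints /flume/logs/20210915/1030
        System.out.println(bucketPath(LocalDateTime.of(2021, 9, 15, 10, 37, 12), 10));
    }
}
```

Every event from 10:30:00 to 10:39:59 lands in the same directory, which is how "one directory every ten minutes" comes about.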

Install Flume (version 1.9 is used here)

This guide is recommended for Flume 1.6 and later. Some features have to be worked out on your own, and older versions are more troublesome.

1. Before installation, install nc (netcat), which is used below to test Flume:

yum install -y nc

Download the installation package from the official Flume download page.

Upload the Flume package to the server and extract it. If you are not familiar with these steps, look them up online.

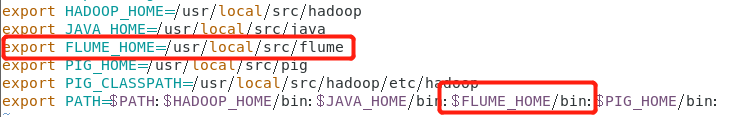

2. Configure the environment

```shell
export FLUME_HOME=<your Flume installation path>
export PATH=$PATH:$FLUME_HOME/bin
```

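3. Start Hadoop. A fully distributed cluster is recommended, because several of the examples involve multiple agents.

4. Start Flume with a configuration file

[root@master conf]# flume-ng agent -n a1 -c ./ -f ./example.conf -Dflume.root.logger=INFO,console

Here -n is the agent name and must match the prefix used in the file (a1), -c is the configuration directory, and -f is the configuration file to load.

Alternatively, add the following line to conf/flume-env.sh (create it from flume-env.sh.template if it does not exist). The logger option then does not have to be passed every time:

export JAVA_OPTS="-Dflume.root.logger=INFO,console"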

Configuration examples

In the multi-machine examples, File 1 and File 2 must run on different machines. Check the IP addresses in each file.

1. Tip:

I use two machines, both running Hadoop 3.x and Flume 1.9:

192.168.120.129 is my master host

192.168.120.134 is my secondary machine

2. Configuration examples

Put the configuration files in the conf directory of the Flume installation.

1. Non-persistent data (file name: example.conf)

```properties
# Define the agent name as a1
# Set the names of the 3 components
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Sink type is logger
a1.sinks.k1.type = logger

# Channel type is memory: the queue holds at most 1000 events, and at most 100 events
# are received from the source or sent to the sink in one transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

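Open a new session window and connect to Flume:

nc localhost 44444

(44444 is the port configured for the netcat source.) Then type some text. Each line becomes an event and is printed in the Flume console.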
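Where nc is not available, a few lines of Java can play the same role. This is a sketch under assumptions: the class `NetcatClientSketch` is hypothetical. It relies on the netcat source splitting events on newlines and, with its default settings, answering each event with an "OK" line.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Minimal stand-in for `nc localhost 44444`: sends one line and returns the reply line.
class NetcatClientSketch {
    static String sendLine(String host, int port, String line) throws IOException {
        try (Socket socket = new Socket(host, port);
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8));
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8))) {
            out.print(line + "\n"); // the netcat source ends an event at each newline
            out.flush();
            return in.readLine();   // e.g. "OK" when per-event acknowledgements are on
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(sendLine("localhost", 44444, "hello flume"));
    }
}
```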

2. Persistent data (the file channel stores events on disk, so they survive an agent restart)

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1
a1.channels = c2

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Sink type is logger
a1.sinks.k1.type = logger

# File channel: checkpoints and event data are written to local disk
a1.channels.c2.type = file
a1.channels.c2.checkpointDir = /usr/local/src/flume/checkpoint
a1.channels.c2.dataDirs = /usr/local/src/flume/data

# Bind the source and sink to the file channel. A second memory channel is not
# needed: a channel that no sink reads from fills up and then blocks the source
a1.sources.r1.channels = c2
a1.sinks.k1.channel = c2
```

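Open a new session window and connect to Flume:

nc localhost 44444

(44444 is the port configured for the netcat source.) Then type some text. Each line becomes an event and is printed in the Flume console.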

3. Monitoring a single log file

```properties
# Define the agent name as a1
# Set the names of the 3 components
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Source type is exec: run tail -F and turn each new line of app.log into an event
# (use an absolute path if flume-ng is not started from the directory containing app.log)
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F app.log

# Sink type is logger
a1.sinks.k1.type = logger

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

The exec source does not listen on a port. To test it, open a new session window and append lines to the monitored file. Each new line is printed in the Flume console:

echo "hello flume" >> app.log

4. Monitoring multiple log files

```properties
# Define the agent name as a1
# Set the names of the 3 components
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Source type is TAILDIR: it tails every file in the file groups and records the
# read position of each file in positionFile, so it can resume after a restart
a1.sources.r1.type = TAILDIR
a1.sources.r1.filegroups = f1 f2
a1.sources.r1.positionFile = /usr/local/src/flume/conf/position.json
# f1 is a single file; for f2 the file-name part is a regular expression
a1.sources.r1.filegroups.f1 = /usr/local/src/flume/conf/app.log
a1.sources.r1.filegroups.f2 = /usr/local/src/flume/conf/logs/.*log

# Sink type is logger
a1.sinks.k1.type = logger

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

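The TAILDIR source does not listen on a port either. Open a new session window and append lines to app.log, or to a file in the logs directory whose name matches .*log. Each new line is printed in the Flume console:

echo "hello flume" >> /usr/local/src/flume/conf/app.log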
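For longer tests of the exec and TAILDIR sources, a small Java helper can append test lines to a watched file. This is a sketch: the class `LogAppenderSketch` is hypothetical, and the default path is taken from the TAILDIR configuration above. The file is opened in append mode and never truncated, so the tailing source only sees the new lines.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;
import java.time.LocalDateTime;
import java.util.ArrayList;
import java.util.List;

// Hypothetical test helper: appends numbered lines to a log file watched by
// `tail -F` (exec source) or by the TAILDIR source.
class LogAppenderSketch {
    static void appendLines(Path file, int count) throws IOException {
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            lines.add(LocalDateTime.now() + " test line " + i);
        }
        // CREATE + APPEND: create the file if needed and never truncate it
        Files.write(file, lines, StandardCharsets.UTF_8,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
    }

    public static void main(String[] args) throws IOException {
        Path target = Paths.get(args.length > 0 ? args[0] : "/usr/local/src/flume/conf/app.log");
        appendLines(target, 5);
    }
}
```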

5. Multi-agent monitoring

File 1 (on 192.168.120.134; it sends events to 192.168.120.129 through an Avro sink):

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1 k2
a1.channels = c1 c2

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# k1 prints events locally; k2 forwards them to the Avro source on 192.168.120.129:55555
a1.sinks.k1.type = logger
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.120.129
a1.sinks.k2.port = 55555

# Memory channels: c1 holds at most 1000 events with at most 100 per transaction;
# c2 uses the default sizes
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
a1.channels.c2.type = memory

# Bind the source to both channels (each event is copied to c1 and c2)
a1.sources.r1.channels = c1 c2
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
```

File 2 (on 192.168.120.129; its Avro source receives the events from File 1):

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1 r2
a1.sinks = k1
a1.channels = c1

# r1: TAILDIR source watching the local log files
a1.sources.r1.type = TAILDIR
a1.sources.r1.filegroups = f1 f2
a1.sources.r1.positionFile = /usr/local/src/flume/conf/position.json
a1.sources.r1.filegroups.f1 = /usr/local/src/flume/conf/app.log
a1.sources.r1.filegroups.f2 = /usr/local/src/flume/conf/logs/.*log

# r2: Avro source receiving events from File 1
a1.sources.r2.type = avro
a1.sources.r2.bind = 192.168.120.129
a1.sources.r2.port = 55555

# Sink type is logger
a1.sinks.k1.type = logger

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Both sources write into c1, and the logger sink reads from it
a1.sources.r1.channels = c1
a1.sources.r2.channels = c1
a1.sinks.k1.channel = c1
```

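Start File 2 first so that its Avro source is listening, then start File 1. On the machine running File 1, open a new session window and connect to Flume:

nc localhost 44444

Then type some text. It is printed by File 1's logger sink and, after travelling over Avro, by File 2's logger sink.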

6. Interceptors:

File 1 (on 192.168.120.134):

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1 k2
a1.channels = c1 c2

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Host interceptor: adds a header named host with this machine's IP address
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = host

# k1 prints events locally; k2 forwards them to 192.168.120.129:55555
a1.sinks.k1.type = logger
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.120.129
a1.sinks.k2.port = 55555

# Memory channels: c1 holds at most 1000 events with at most 100 per transaction;
# c2 uses the default sizes
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
a1.channels.c2.type = memory

# Bind the source and sinks to the channels (k2 must be bound too, or it never runs)
a1.sources.r1.channels = c1 c2
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
```

File 2 (on 192.168.120.129):

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1 r2
a1.sinks = k1
a1.channels = c1

# r1: TAILDIR source watching the local log files
a1.sources.r1.type = TAILDIR
a1.sources.r1.filegroups = f1 f2
a1.sources.r1.positionFile = /usr/local/src/flume/conf/position.json
a1.sources.r1.filegroups.f1 = /usr/local/src/flume/conf/app.log
a1.sources.r1.filegroups.f2 = /usr/local/src/flume/conf/logs/.*log

# r2: Avro source receiving events from File 1
a1.sources.r2.type = avro
a1.sources.r2.bind = 192.168.120.129
a1.sources.r2.port = 55555

# Sink type is logger
a1.sinks.k1.type = logger

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Both sources write into c1, and the logger sink reads from it
a1.sources.r1.channels = c1
a1.sources.r2.channels = c1
a1.sinks.k1.channel = c1
```

On the machine running File 1, open a new session window and connect to Flume:

nc localhost 44444

Then type some text. In File 2's console, each forwarded event now carries a host header.

7. Using more interceptors:

This is File 1 from configuration 6 with more interceptors added. File 2 from configuration 6 can be used unchanged as the receiver.

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1 k2
a1.channels = c1 c2

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Interceptors are applied in the order listed
a1.sources.r1.interceptors = i1 i2 i3 i4 i5
# Host interceptor: adds a host header with this machine's IP address
a1.sources.r1.interceptors.i1.type = host
# Timestamp interceptor: adds a timestamp header (milliseconds since the epoch)
a1.sources.r1.interceptors.i2.type = timestamp
# Static interceptor: adds the fixed header datacenter = beijing
a1.sources.r1.interceptors.i3.type = static
a1.sources.r1.interceptors.i3.key = datacenter
a1.sources.r1.interceptors.i3.value = beijing
# UUID interceptor: adds an id header with a random UUID
a1.sources.r1.interceptors.i4.type = org.apache.flume.sink.solr.morphline.UUIDInterceptor$Builder
# Search-and-replace interceptor: masks every run of six digits in the body.
# In a properties file \\ stands for one backslash, so the regex is \d{6}
a1.sources.r1.interceptors.i5.type = search_replace
a1.sources.r1.interceptors.i5.searchPattern = \\d{6}
a1.sources.r1.interceptors.i5.replaceString = ******

# k1 prints events locally; k2 forwards them to 192.168.120.129:55555
a1.sinks.k1.type = logger
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.120.129
a1.sinks.k2.port = 55555

# Memory channels: c1 holds at most 1000 events with at most 100 per transaction;
# c2 uses the default sizes
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
a1.channels.c2.type = memory

# Bind the source and sinks to the channels
a1.sources.r1.channels = c1 c2
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
```

Open a new session window and connect to Flume:

nc localhost 44444

Then type some text. Each event is printed with the added headers, and any six-digit run in the text is masked.

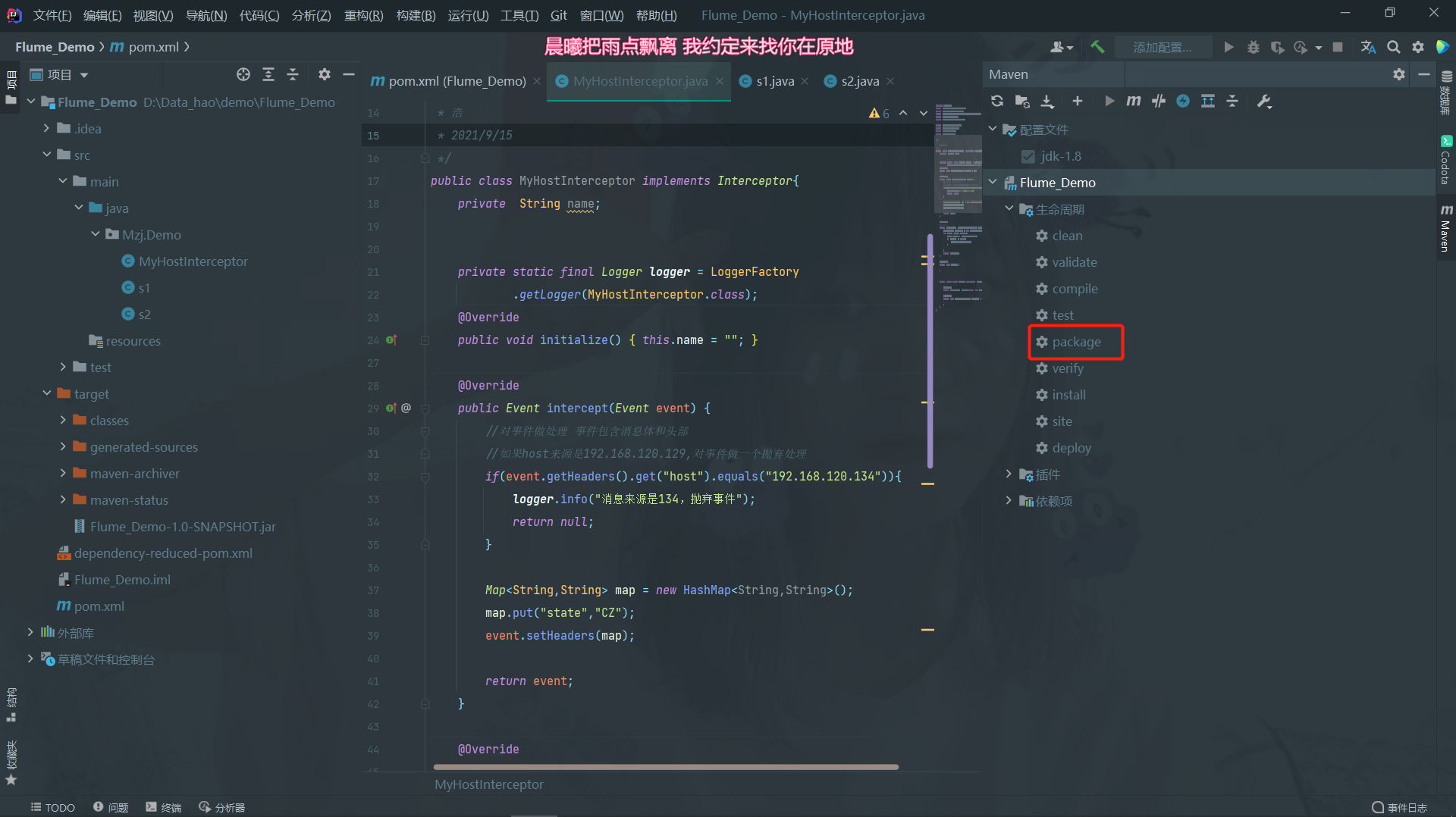

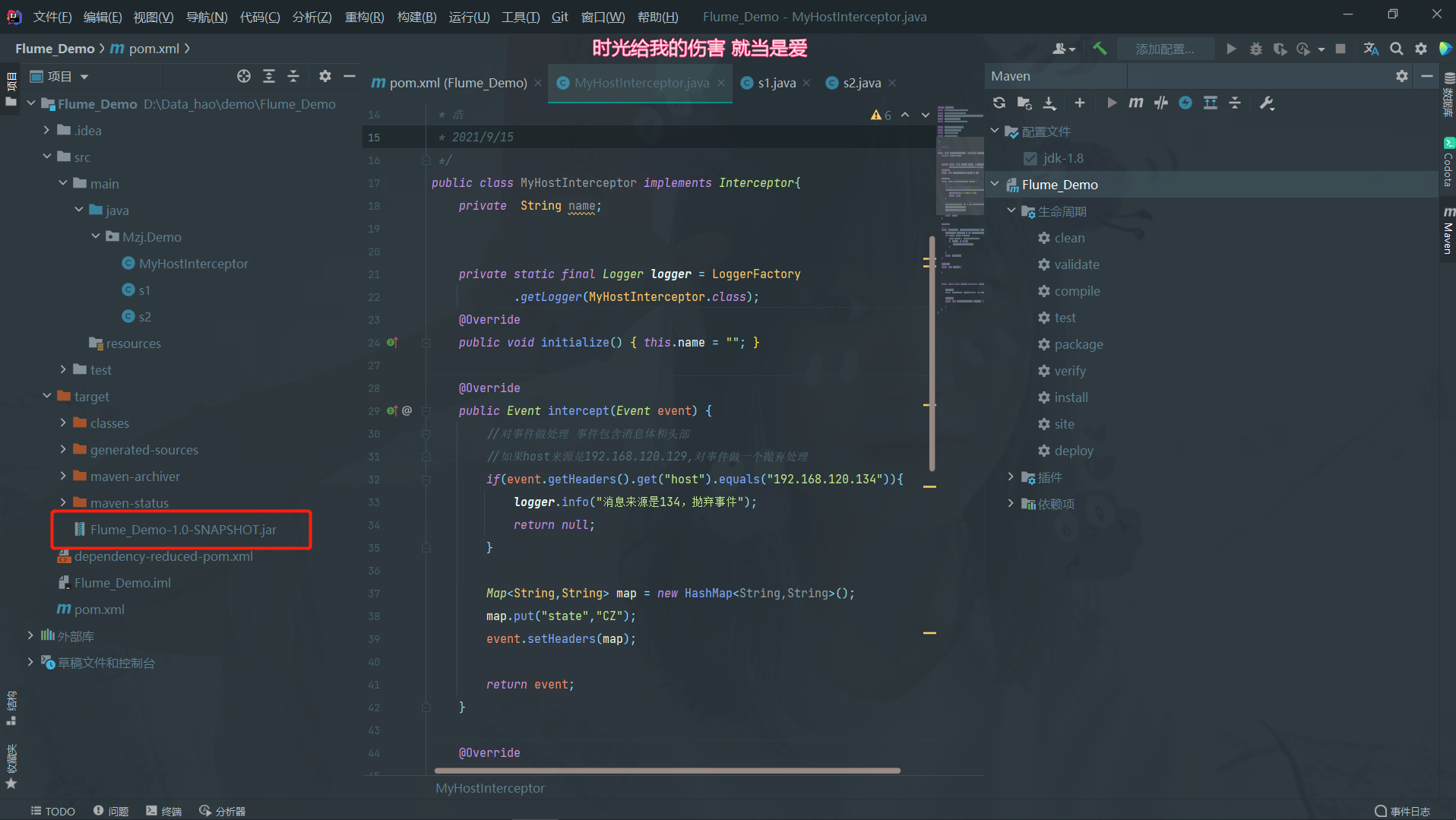
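What the interceptor chain above does to a single event can be modelled in plain Java. This is a sketch under assumptions. The class `InterceptorChainSketch` and its nested `Event` type are hypothetical and are not Flume's interfaces. The header names host, timestamp and id are the defaults of the host, timestamp and UUID interceptors, and datacenter comes from the static interceptor's key. The regex is the same `\d{6}` that the properties file spells as `\\d{6}`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Toy model of configuration 7's interceptor chain applied to one event.
// Hypothetical types; not Flume's Event/Interceptor API.
class InterceptorChainSketch {
    static final class Event {
        final Map<String, String> headers = new HashMap<>();
        String body;

        Event(String body) {
            this.body = body;
        }
    }

    static Event intercept(Event e, String hostIp, long nowMillis) {
        e.headers.put("host", hostIp);                        // i1: host
        e.headers.put("timestamp", Long.toString(nowMillis)); // i2: timestamp
        e.headers.put("datacenter", "beijing");               // i3: static key/value
        e.headers.put("id", UUID.randomUUID().toString());    // i4: UUID
        e.body = e.body.replaceAll("\\d{6}", "******");       // i5: search_replace
        return e;
    }

    public static void main(String[] args) {
        Event ev = intercept(new Event("card 12345678"), "192.168.120.129", System.currentTimeMillis());
        System.out.println(ev.body); // prints card ******78
    }
}
```

Only the first six digits of "12345678" match `\d{6}`; the remaining two are too short for a second match, so they stay visible.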

8. Custom interceptor

Maven is used here.

The source code is listed in the "Code test resources" section at the end.

MyHostInterceptor.java is used.

The interceptor type is the package name plus the class name plus the builder:

Mzj.Demo.MyHostInterceptor$Builder

Put the packaged jar into the flume/lib directory.

File 1 (on 192.168.120.129):

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1 r2
a1.sinks = k1
a1.channels = c1

# r1: TAILDIR source watching the local log files
a1.sources.r1.type = TAILDIR
a1.sources.r1.filegroups = f1 f2
a1.sources.r1.positionFile = /usr/local/src/flume/conf/position.json
a1.sources.r1.filegroups.f1 = /usr/local/src/flume/conf/app.log
a1.sources.r1.filegroups.f2 = /usr/local/src/flume/conf/logs/.*log

# r2: Avro source receiving events from File 2
a1.sources.r2.type = avro
a1.sources.r2.bind = 192.168.120.129
a1.sources.r2.port = 55555

# Custom interceptor on r2 (package name + class name + $Builder)
a1.sources.r2.interceptors = i1
a1.sources.r2.interceptors.i1.type = Mzj.Demo.MyHostInterceptor$Builder

a1.sinks.k1.type = logger

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the sources and sink to the channel
a1.sources.r1.channels = c1
a1.sources.r2.channels = c1
a1.sinks.k1.channel = c1
```

File 2 (on 192.168.120.134):

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1 k2
a1.channels = c1 c2

a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Host interceptor: stamps each event with this machine's IP in the host header
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = host

a1.sinks.k1.type = logger
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.120.129
a1.sinks.k2.port = 55555

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
a1.channels.c2.type = memory

# Bind the source and sinks to the channels
a1.sources.r1.channels = c1 c2
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
```

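On the machine running File 2, open a new session window and connect to Flume:

nc localhost 44444

Then type some text. File 2 prints each line locally, but File 1 drops the forwarded events. File 2's host interceptor sets host = 192.168.120.134 (assuming that is the address the machine resolves to), and MyHostInterceptor discards events from that host. Lines appended to app.log on the master host still get through, because r1 has no interceptor.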

9. Channel selector

All of these agents run on the same machine (192.168.120.129). agent2, agent3 and agent4 all use the agent name a2, so each one is started from its own file with -n a2.

agent1:

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1 k2 k3 k4
a1.channels = c1 c2 c3 c4

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Sink k1 is a logger
a1.sinks.k1.type = logger
# Sinks k2, k3 and k4 are Avro sinks pointing at agent2, agent3 and agent4
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.120.129
a1.sinks.k2.port = 4040
a1.sinks.k3.type = avro
a1.sinks.k3.hostname = 192.168.120.129
a1.sinks.k3.port = 4041
a1.sinks.k4.type = avro
a1.sinks.k4.hostname = 192.168.120.129
a1.sinks.k4.port = 4042

# Memory channels: at most 1000 events each, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
a1.channels.c2.type = memory
a1.channels.c2.capacity = 1000
a1.channels.c2.transactionCapacity = 100
a1.channels.c3.type = memory
a1.channels.c3.capacity = 1000
a1.channels.c3.transactionCapacity = 100
a1.channels.c4.type = memory
a1.channels.c4.capacity = 1000
a1.channels.c4.transactionCapacity = 100

# Bind the source and sinks to the channels
a1.sources.r1.channels = c1 c2 c3 c4
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
a1.sinks.k3.channel = c3
a1.sinks.k4.channel = c4

# Multiplexing channel selector: route by the value of the state header
a1.sources.r1.selector.type = multiplexing
a1.sources.r1.selector.header = state
a1.sources.r1.selector.mapping.CZ = c1 c2
a1.sources.r1.selector.mapping.US = c1 c3
a1.sources.r1.selector.default = c1 c4

# Static interceptor: sets state = US on every event
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = static
a1.sources.r1.interceptors.i1.key = state
a1.sources.r1.interceptors.i1.value = US
```

agent2:

```properties
a2.sources = r1
a2.sinks = k1
a2.channels = c1

# Avro source listening on port 4040
a2.sources.r1.type = avro
a2.sources.r1.bind = 192.168.120.129
a2.sources.r1.port = 4040

a2.sinks.k1.type = logger

a2.channels.c1.type = memory
a2.channels.c1.capacity = 1000
a2.channels.c1.transactionCapacity = 100

a2.sources.r1.channels = c1
a2.sinks.k1.channel = c1
```

agent3:

```properties
a2.sources = r1
a2.sinks = k1
a2.channels = c1

# Avro source listening on port 4041
a2.sources.r1.type = avro
a2.sources.r1.bind = 192.168.120.129
a2.sources.r1.port = 4041

a2.sinks.k1.type = logger

a2.channels.c1.type = memory
a2.channels.c1.capacity = 1000
a2.channels.c1.transactionCapacity = 100

a2.sources.r1.channels = c1
a2.sinks.k1.channel = c1
```

agent4:

```properties
a2.sources = r1
a2.sinks = k1
a2.channels = c1

# Avro source listening on port 4042
a2.sources.r1.type = avro
a2.sources.r1.bind = 192.168.120.129
a2.sources.r1.port = 4042

a2.sinks.k1.type = logger

a2.channels.c1.type = memory
a2.channels.c1.capacity = 1000
a2.channels.c1.transactionCapacity = 100

a2.sources.r1.channels = c1
a2.sinks.k1.channel = c1
```

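Start agent2, agent3 and agent4 first, then agent1. Open a new session window and connect to agent1:

nc localhost 44444

Then type some text. The static interceptor sets state = US, so each line is printed by agent1's logger sink (via c1) and forwarded to agent3 (via c3, port 4041).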
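The routing rule of the multiplexing selector in agent1 can be written out in a few lines of plain Java. This is a sketch: the class `MultiplexingSelectorSketch` is hypothetical and only mirrors the selector.header, selector.mapping.* and selector.default properties above. An event whose state header is missing or unmapped falls back to the default channels.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch of agent1's multiplexing channel selector: route on the "state" header.
class MultiplexingSelectorSketch {
    private static final Map<String, List<String>> MAPPING = new HashMap<>();
    private static final List<String> DEFAULT = Arrays.asList("c1", "c4"); // selector.default

    static {
        MAPPING.put("CZ", Arrays.asList("c1", "c2")); // selector.mapping.CZ
        MAPPING.put("US", Arrays.asList("c1", "c3")); // selector.mapping.US
    }

    static List<String> select(Map<String, String> headers) {
        String state = headers.get("state");          // selector.header = state
        List<String> channels = MAPPING.get(state);   // HashMap.get(null) simply returns null
        return channels != null ? channels : DEFAULT;
    }
}
```

With the static interceptor always setting state = US, every event takes the US branch. Swapping the interceptor value to CZ (or removing it) is an easy way to watch the other branches.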

10. Sink failover:

agent1:

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1 k2 k3 k4
a1.channels = c1

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Sink group with a failover processor: the available sink with the highest
# priority (here k4) receives the events; a failed sink is penalized for at most
# maxpenalty milliseconds before it is tried again
a1.sinkgroups = g1
a1.sinkgroups.g1.sinks = k1 k2 k3 k4
a1.sinkgroups.g1.processor.type = failover
a1.sinkgroups.g1.processor.priority.k1 = 5
a1.sinkgroups.g1.processor.priority.k2 = 10
a1.sinkgroups.g1.processor.priority.k3 = 15
a1.sinkgroups.g1.processor.priority.k4 = 20
a1.sinkgroups.g1.processor.maxpenalty = 10000

# Sink k1 is a logger
a1.sinks.k1.type = logger
# Sinks k2, k3 and k4 are Avro sinks pointing at agent2, agent3 and agent4
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.120.129
a1.sinks.k2.port = 4040
a1.sinks.k3.type = avro
a1.sinks.k3.hostname = 192.168.120.129
a1.sinks.k3.port = 4041
a1.sinks.k4.type = avro
a1.sinks.k4.hostname = 192.168.120.129
a1.sinks.k4.port = 4042

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# All four sinks read from the same channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c1
a1.sinks.k3.channel = c1
a1.sinks.k4.channel = c1
```

The other agents (agent2, agent3 and agent4) use the same files as in configuration 9; only agent1 changes.

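Open a new session window and connect to agent1:

nc localhost 44444

Then type some text. It is delivered to agent4, because k4 has the highest priority (20). If agent4 is stopped, the events fail over to agent3 (k3), and so on.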

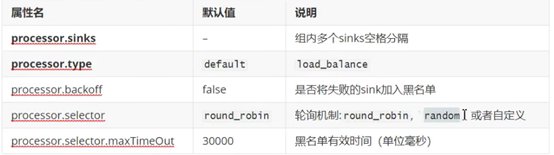
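The failover choice can be sketched in plain Java. This is a simplification under stated assumptions. The class `FailoverSketch` is hypothetical. Flume's processor grows a failed sink's penalty step by step up to maxpenalty; here the penalty is simply fixed at maxpenalty. The rule itself is unchanged: the highest-priority sink that is not currently penalized wins.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of failover selection: the live sink with the highest priority wins.
// Simplification: a failed sink is penalized for a fixed maxPenaltyMillis.
class FailoverSketch {
    private final Map<String, Integer> priority = new LinkedHashMap<>();
    private final Map<String, Long> penalizedUntil = new HashMap<>();
    private final long maxPenaltyMillis;

    FailoverSketch(long maxPenaltyMillis) {
        this.maxPenaltyMillis = maxPenaltyMillis;
    }

    void addSink(String name, int prio) {
        priority.put(name, prio);
    }

    /** Returns the sink that should receive the next batch at time nowMillis, or null if none is live. */
    String choose(long nowMillis) {
        String best = null;
        for (Map.Entry<String, Integer> e : priority.entrySet()) {
            Long until = penalizedUntil.get(e.getKey());
            if (until != null && until > nowMillis) {
                continue; // still inside its penalty window
            }
            if (best == null || e.getValue() > priority.get(best)) {
                best = e.getKey();
            }
        }
        return best;
    }

    /** Marks a sink as failed, e.g. because the agent behind its Avro port is down. */
    void fail(String sink, long nowMillis) {
        penalizedUntil.put(sink, nowMillis + maxPenaltyMillis);
    }
}
```

With the priorities from agent1 (k1 = 5 up to k4 = 20) and maxpenalty = 10000, k4 is chosen first. After k4 fails, k3 takes over until k4's penalty window ends.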

11. Sink processor: load balancing

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1 k2 k3 k4
a1.channels = c1

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Sink group with a load-balancing processor: each batch goes to a randomly chosen
# sink; with backoff = true a failed sink is temporarily excluded
a1.sinkgroups = g1
a1.sinkgroups.g1.sinks = k1 k2 k3 k4
a1.sinkgroups.g1.processor.type = load_balance
a1.sinkgroups.g1.processor.backoff = true
a1.sinkgroups.g1.processor.selector = random

# Sink k1 is a logger
a1.sinks.k1.type = logger
# Sinks k2, k3 and k4 are Avro sinks pointing at agent2, agent3 and agent4
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = 192.168.120.129
a1.sinks.k2.port = 4040
a1.sinks.k3.type = avro
a1.sinks.k3.hostname = 192.168.120.129
a1.sinks.k3.port = 4041
a1.sinks.k4.type = avro
a1.sinks.k4.hostname = 192.168.120.129
a1.sinks.k4.port = 4042

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# All four sinks read from the same channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c1
a1.sinks.k3.channel = c1
a1.sinks.k4.channel = c1
```

The other agents (agent2, agent3 and agent4) use the same files as in configuration 9; only agent1 changes.

Open a new session window and connect to agent1:

nc localhost 44444

Then type some text. The events are spread across agent1's logger sink and agents 2 to 4.

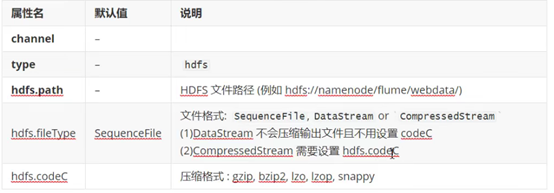
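The random selector with backoff can be sketched the same way. This is a simplification: the class `LoadBalanceSketch` is hypothetical. In Flume a backed-off sink returns automatically after a timeout; here that is modelled by an explicit `recover` call. The fixed random seed only makes the demo repeatable.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Random;
import java.util.Set;

// Sketch of load_balance with selector = random and backoff = true:
// pick a random sink among those that are not currently backed off.
class LoadBalanceSketch {
    private final List<String> sinks;
    private final Set<String> backedOff = new HashSet<>();
    private final Random random;

    LoadBalanceSketch(List<String> sinks, long seed) {
        this.sinks = new ArrayList<>(sinks);
        this.random = new Random(seed); // fixed seed only to make the demo repeatable
    }

    /** Returns a random live sink, or null if every sink is backed off. */
    String choose() {
        List<String> live = new ArrayList<>();
        for (String s : sinks) {
            if (!backedOff.contains(s)) {
                live.add(s);
            }
        }
        if (live.isEmpty()) {
            return null;
        }
        return live.get(random.nextInt(live.size()));
    }

    /** A failed sink is excluded from selection... */
    void backOff(String sink) {
        backedOff.add(sink);
    }

    /** ...until it is considered healthy again. */
    void recover(String sink) {
        backedOff.remove(sink);
    }
}
```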

12. Export data to HDFS

```properties
# Define the agent name as a1
# Set the names of the 3 components
a1.sources = r1
a1.sinks = k1
a1.channels = c1

a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Sink type is hdfs. 192.168.120.129:9000 is the NameNode address and should match
# fs.defaultFS in core-site.xml; the directory in hdfs.path is created automatically
# when data is written
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://192.168.120.129:9000/user/flume/logs
# DataStream writes the data as-is instead of wrapping it in a SequenceFile
a1.sinks.k1.hdfs.fileType = DataStream

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

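Open a new session window and connect to Flume:

nc localhost 44444

Then type some text. The files appear under /user/flume/logs (check with hadoop fs -ls /user/flume/logs). A file that is still being written carries a .tmp suffix.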

13. Multiple agents uploading to HDFS

File 1 (on 192.168.120.129; receives Avro events and writes them to HDFS):

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Source type is avro, listening on 192.168.120.129, port 4040
a1.sources.r1.type = avro
a1.sources.r1.bind = 192.168.120.129
a1.sources.r1.port = 4040

# Sink type is hdfs
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://192.168.120.129:9000/user/flume/logs
a1.sinks.k1.hdfs.fileType = DataStream

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

File 2 (on 192.168.120.134; reads text from netcat and forwards it over Avro):

```properties
# Define the agent name as a1
# Set the names of the components
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Source type is netcat, listening on localhost, port 44444
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Sink k1 is an Avro sink pointing at File 1
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = 192.168.120.129
a1.sinks.k1.port = 4040

# Channel type is memory: at most 1000 events, at most 100 per transaction
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

On the machine running File 2, open a new session window and connect to Flume:

nc localhost 44444

Then type some text. On the master host, view the written data with:

hadoop fs -cat <file path under /user/flume/logs>

14. Custom source

Maven is used here.

The source code is listed in the "Code test resources" section at the end.

The source type is the package name plus the class name (Mzj.Demo.s1).

s1.java is used.

Put the packaged jar into the flume/lib directory.

```properties
# Define the agent name as a1
# Set the names of the 3 components
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Custom source, referenced by its fully qualified class name
a1.sources.r1.type = Mzj.Demo.s1
a1.sinks.k1.type = logger

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

15. Custom sink

Maven is used here.

The source code is listed in the "Code test resources" section at the end.

The sink type is the package name plus the class name (Mzj.Demo.s2).

s2.java is used (this example also reuses the custom source s1).

Put the packaged jar into the flume/lib directory.

```properties
# Define the agent name as a1
# Set the names of the 3 components
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Custom source and custom sink, referenced by their fully qualified class names
a1.sources.r1.type = Mzj.Demo.s1
a1.sinks.k1.type = Mzj.Demo.s2

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

Code test resources

Download link (extraction code: 6666)

Packaging:

In the IDE's Maven panel, double-click the package lifecycle goal (or run mvn package in the project directory).

Copy the generated jar into flume/lib.

Maven resources:

pom.xml:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>Mzj_baby</groupId>
    <artifactId>Flume_Demo</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.flume</groupId>
            <artifactId>flume-ng-core</artifactId>
            <version>1.9.0</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
            <version>1.7.32</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>8</source>
                    <target>8</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

MyHostInterceptor.java:

```java
package Mzj.Demo;

import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.interceptor.Interceptor;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Vast
 * 2021/9/15
 */
public class MyHostInterceptor implements Interceptor {

    private static final Logger logger = LoggerFactory.getLogger(MyHostInterceptor.class);

    private String name;

    @Override
    public void initialize() {
        this.name = "";
    }

    @Override
    public Event intercept(Event event) {
        // Handle one event. An event contains the message body and the headers.
        // If the host header (set upstream by the host interceptor) is 192.168.120.134,
        // discard the event. Calling equals on the constant avoids a
        // NullPointerException when the header is missing.
        if ("192.168.120.134".equals(event.getHeaders().get("host"))) {
            logger.info("The source is 134, discarding the event");
            return null;
        }
        // Replace all headers with state=CZ (this also removes the host header)
        Map<String, String> map = new HashMap<String, String>();
        map.put("state", "CZ");
        event.setHeaders(map);
        return event;
    }

    // Handle a batch of events, dropping those for which intercept(Event) returned null
    @Override
    public List<Event> intercept(List<Event> events) {
        List<Event> eventList = new ArrayList<Event>();
        for (Event event : events) {
            Event event1 = intercept(event);
            if (event1 != null) {
                eventList.add(event1);
            }
        }
        return eventList;
    }

    @Override
    public void close() {
    }

    // Flume creates the interceptor through this builder (configured as MyHostInterceptor$Builder)
    public static class Builder implements Interceptor.Builder {

        @Override
        public Interceptor build() {
            return new MyHostInterceptor();
        }

        @Override
        public void configure(Context context) {
        }
    }
}
```

s1.java:

```java
package Mzj.Demo;

import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.PollableSource;
import org.apache.flume.conf.Configurable;
import org.apache.flume.event.SimpleEvent;
import org.apache.flume.source.AbstractSource;

import java.nio.charset.StandardCharsets;

/**
 * Vast
 * 2021/9/20
 */
public class s1 extends AbstractSource implements Configurable, PollableSource {

    // Called repeatedly by Flume to produce data
    @Override
    public Status process() throws EventDeliveryException {
        Status status;
        try {
            // Simulate data: emit "data:0" to "data:9", one event every 5 seconds
            for (int i = 0; i < 10; i++) {
                Event event = new SimpleEvent();
                event.setBody(("data:" + i).getBytes(StandardCharsets.UTF_8));
                // Hand the event to the channel(s) bound to this source
                getChannelProcessor().processEvent(event);
                Thread.sleep(5000);
            }
            // Data was produced and is ready to be consumed
            status = Status.READY;
        } catch (InterruptedException e) {
            // Keep the interrupt flag so the agent can shut the source down
            Thread.currentThread().interrupt();
            status = Status.BACKOFF;
        } catch (Exception e) {
            e.printStackTrace();
            status = Status.BACKOFF;
        }
        return status;
    }

    @Override
    public long getBackOffSleepIncrement() {
        return 0;
    }

    @Override
    public long getMaxBackOffSleepInterval() {
        return 0;
    }

    @Override
    public void configure(Context context) {
    }
}
```

s2.java:

```java
package Mzj.Demo;

import org.apache.flume.Channel;
import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.Transaction;
import org.apache.flume.conf.Configurable;
import org.apache.flume.sink.AbstractSink;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.nio.charset.StandardCharsets;

/**
 * Vast
 * 2021/9/21
 */
public class s2 extends AbstractSink implements Configurable {

    private static final Logger logger = LoggerFactory.getLogger(s2.class);

    // Called repeatedly by Flume to drain the channel
    @Override
    public Status process() throws EventDeliveryException {
        Status status;
        // Get the channel bound to this sink and a transaction on it
        Channel ch = getChannel();
        Transaction transaction = ch.getTransaction();
        try {
            transaction.begin();
            // Take one event from the channel (null if the channel is empty)
            Event event = ch.take();
            if (event == null) {
                status = Status.BACKOFF;
            } else {
                // Data could be sent to external storage here; this example only logs it
                logger.info(new String(event.getBody(), StandardCharsets.UTF_8));
                status = Status.READY;
            }
            transaction.commit();
        } catch (Exception e) {
            // Roll back so the event stays in the channel and can be retried
            transaction.rollback();
            logger.error(e.getMessage(), e);
            status = Status.BACKOFF;
        } finally {
            transaction.close();
        }
        return status;
    }

    @Override
    public void configure(Context context) {
    }
}
```