A Brief Introduction to Flume

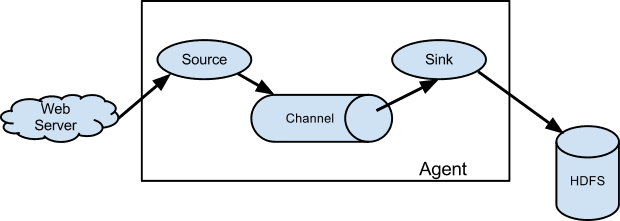

This article assumes a general familiarity with Flume, but for beginners here is a quick overview. When you first use Flume you don't need to understand much of its internals. It is enough to understand the figure above: a source collects events (here, lines from a log file), a channel buffers them, and a sink delivers them to the destination. With that, you can use Flume to ship log data into Kafka. The HDFS sink in the figure is just a representative example; in this article the sink is Kafka.
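To make the source, channel, sink split concrete, here is a toy model in plain Java. It is a hypothetical sketch, not Flume's API: the class name MiniAgent is made up, a bounded ArrayBlockingQueue stands in for the memory channel, and a list stands in for the Kafka topic.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical toy model of one agent (source -> channel -> sink); not Flume's API.
class MiniAgent {
    // Stand-in for a memory channel: a bounded buffer that lives only in the JVM heap.
    private final BlockingQueue<String> channel;

    MiniAgent(int channelCapacity) {
        this.channel = new ArrayBlockingQueue<>(channelCapacity);
    }

    // "Source": turns each log line into an event and puts it into the channel.
    // offer() refuses events once the channel is full; returns how many were accepted.
    int source(List<String> logLines) {
        int accepted = 0;
        for (String line : logLines) {
            if (!channel.offer(line)) {
                break; // channel full: a real source would block or retry here
            }
            accepted++;
        }
        return accepted;
    }

    // "Sink": takes up to batchSize events and delivers them to the destination
    // (a list standing in for a Kafka topic); returns how many were delivered.
    int sink(List<String> destination, int batchSize) {
        List<String> batch = new ArrayList<>();
        channel.drainTo(batch, batchSize);
        destination.addAll(batch);
        return batch.size();
    }
}
```

Because the source only writes to the channel and the sink only reads from it, swapping the HDFS sink in the figure for a Kafka sink does not touch the source side at all.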

Flume installation

Flume environment preparation

- CentOS 6.5

- JDK 1.7+

Flume download and installation

- Download Flume 1.7 (apache-flume-1.7.0-bin.tar.gz)

- Install Flume

1. tar -zxvf apache-flume-1.7.0-bin.tar.gz

2. mv apache-flume-1.7.0-bin flume

3. cd flume && cp conf/flume-conf.properties.template conf/flume-conf.properties # flume-conf.properties configures the sources, channels, sinks, etc.

4. cp conf/flume-env.sh.template conf/flume-env.sh # flume-env.sh configures agent startup options, Java environment variables, etc.

Flume configuration

- Configure flume-conf.properties

agent.sources=r1

agent.sinks=k1

agent.channels=c1

agent.sources.r1.type=exec

agent.sources.r1.command=tail -F /data/logs/access.log

agent.sources.r1.restart=true

agent.sources.r1.batchSize=1000

agent.sources.r1.batchTimeout=3000

agent.sources.r1.channels=c1

agent.channels.c1.type=memory

agent.channels.c1.capacity=102400

agent.channels.c1.transactionCapacity=1000

agent.channels.c1.byteCapacity=134217728

agent.channels.c1.byteCapacityBufferPercentage=80

agent.sinks.k1.channel=c1

agent.sinks.k1.type=org.apache.flume.sink.kafka.KafkaSink

agent.sinks.k1.kafka.topic=xxxxx-kafka

agent.sinks.k1.kafka.bootstrap.servers=x.x.x.x:9092,x.x.x.x:9092

agent.sinks.k1.serializer.class=kafka.serializer.StringEncoder

agent.sinks.k1.flumeBatchSize=1000

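agent.sinks.k1.useFlumeEventFormat=true

Naming convention: r1 is the source, k1 is the sink, and c1 is the channel. The leading "agent" is the agent name, which must match the value passed with -n when the agent is started. Also note that useFlumeEventFormat=true writes events to Kafka in Flume's Avro event format (keeping the headers), so consumers have to decode that format; keep the default (false) if they expect the raw log lines.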
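The naming rule can be checked mechanically before starting the agent. Below is a minimal sketch of a hypothetical helper, FlumeConfCheck (not part of Flume), that loads the file with java.util.Properties, looks up components under the agent-name prefix, and checks that every source and sink points at a declared channel and that batch sizes fit in the channel's transactionCapacity (a source or sink batch is put or taken in one channel transaction; 100 is the memory channel's documented default). It also estimates the memory channel's budget for event bodies, assuming, as the Flume user guide describes it, that byteCapacityBufferPercentage (default 20) is the share of byteCapacity reserved for header overhead.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper (not part of Flume): sanity-checks flume-conf.properties for one
// agent name, i.e. the value later passed to flume-ng with -n.
class FlumeConfCheck {

    // Returns the problems found; an empty list means the wiring looks consistent.
    static List<String> check(String propertiesText, String agentName) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(propertiesText));
        } catch (IOException e) {
            // StringReader does no real I/O; this only satisfies the checked exception.
            throw new IllegalArgumentException(e);
        }
        String a = agentName + ".";
        List<String> problems = new ArrayList<>();

        List<String> channels = names(p, a + "channels");
        if (channels.isEmpty()) {
            problems.add("no channels under '" + a + "channels'; does -n match the key prefix?");
        }
        // A source lists its channels (plural key); a sink names exactly one (singular key).
        for (String r : names(p, a + "sources")) {
            int batch = intProp(p, a + "sources." + r + ".batchSize", 0);
            for (String c : names(p, a + "sources." + r + ".channels")) {
                checkChannel(p, a, "source " + r, c, batch, channels, problems);
            }
        }
        for (String k : names(p, a + "sinks")) {
            int batch = intProp(p, a + "sinks." + k + ".flumeBatchSize", 0);
            String c = p.getProperty(a + "sinks." + k + ".channel", "").trim();
            checkChannel(p, a, "sink " + k, c, batch, channels, problems);
        }
        return problems;
    }

    // The channel must be declared, and one batch must fit in one channel transaction.
    private static void checkChannel(Properties p, String a, String who, String c, int batch,
                                     List<String> channels, List<String> problems) {
        if (!channels.contains(c)) {
            problems.add(who + " uses undeclared channel '" + c + "'");
            return;
        }
        int tx = intProp(p, a + "channels." + c + ".transactionCapacity", 100);
        if (batch > tx) {
            problems.add(who + " batch " + batch + " exceeds " + c + ".transactionCapacity " + tx);
        }
    }

    // Component names are whitespace-separated, e.g. "agent.sources = r1 r2".
    private static List<String> names(Properties p, String key) {
        List<String> out = new ArrayList<>();
        for (String s : p.getProperty(key, "").trim().split("\\s+")) {
            if (!s.isEmpty()) out.add(s);
        }
        return out;
    }

    private static int intProp(Properties p, String key, int dflt) {
        String v = p.getProperty(key);
        return v == null ? dflt : Integer.parseInt(v.trim());
    }

    // Approximate bytes left for event bodies once bufferPercentage of byteCapacity
    // is reserved for headers (assumption based on the user guide's description).
    static long bodyBytes(long byteCapacity, int bufferPercentage) {
        return byteCapacity * (100 - bufferPercentage) / 100;
    }
}
```

For the values used in this article, bodyBytes(134217728, 80) comes to 26843545 bytes, about 25.6 MiB. If that reading of the parameter is right, a setting of 80 leaves only a fifth of the 128 MiB for event bodies, so a value closer to the default may be what was intended.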

- Configure flume-env.sh

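export JAVA_HOME=/data/java/jdk1.8.0_102/

Only JAVA_HOME is configured here. Other agent startup settings, such as JMX options, are not added; set them (for example through JAVA_OPTS) according to your own needs. Because the memory channel keeps events in the JVM heap, the heap size (-Xmx in JAVA_OPTS) should be large enough for the channel's byteCapacity.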

- Start flume-agent


./bin/flume-ng agent -c conf -f conf/flume-conf.properties -n agent -Dflume.root.logger=INFO,console

-c specifies the configuration directory, -f the Flume configuration file, and -n the agent name (the prefix used in flume-conf.properties); -Dflume.root.logger=INFO,console prints INFO-level logs to the console at startup.

Summary

1. Flume lets you write your own sources and sinks, or modify the built-in ones to suit your needs: pull the source code from GitHub. If you only change the code of one module, you just need to delete that module's old jar and drop in the newly compiled one. For other ways to extend Flume, see the official documentation.

2. With a memory channel, events still buffered in the channel are lost when the agent is killed and cannot be recovered. If that is not acceptable, use a file channel instead.

3. Flume is very flexible for log aggregation and can be wired together in many ways, for example sending data from one agent over a TCP port to another Flume agent.

4. The official Flume documentation is highly recommended reading.
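Items 1 and 2 meet in the way sinks use channel transactions. Flume's Developer Guide shows sinks taking events inside a channel transaction, committing after successful delivery and rolling back on failure. The sketch below reproduces that pattern with hypothetical stand-in classes (TxChannel, Delivery and SketchSink are made-up names, not Flume classes) so it runs without Flume jars. A rollback keeps undelivered events in the channel, but that channel is only an object in the heap, which is why a killed agent loses them.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical stand-in for a memory channel with Flume-style take transactions.
// Everything lives in the JVM heap, so a killed agent loses whatever is still here.
class TxChannel {
    private final Deque<String> queue = new ArrayDeque<>();
    private final List<String> taken = new ArrayList<>(); // taken in the open transaction

    void put(String event) { queue.addLast(event); }

    int size() { return queue.size(); }

    String take() {
        String e = queue.pollFirst();
        if (e != null) taken.add(e);
        return e;
    }

    // Delivery succeeded: forget the taken events for good.
    void commit() { taken.clear(); }

    // Delivery failed: push the taken events back to the head, preserving their order.
    void rollback() {
        for (int i = taken.size() - 1; i >= 0; i--) queue.addFirst(taken.get(i));
        taken.clear();
    }
}

// Hypothetical destination, e.g. a Kafka producer call that may fail.
interface Delivery {
    void send(List<String> batch) throws Exception;
}

class SketchSink {
    // Take up to batchSize events, commit only after the destination accepted them,
    // otherwise roll back. true roughly corresponds to READY, false to BACKOFF.
    static boolean process(TxChannel ch, Delivery out, int batchSize) {
        List<String> batch = new ArrayList<>();
        try {
            while (batch.size() < batchSize) {
                String e = ch.take();
                if (e == null) break; // channel drained
                batch.add(e);
            }
            if (!batch.isEmpty()) out.send(batch);
            ch.commit();
            return true;
        } catch (Exception ex) {
            ch.rollback();
            return false;
        }
    }
}
```

A file channel follows the same transactional contract but persists events to disk, so they survive an agent restart.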