Flume collects Log4j logs and sends them to Kafka for storage

Environment preparation

- Download Flume: http://flume.apache.org/

- Install: extract the downloaded package to a directory of your choice

- Configure agent

      # Purpose: strip everything before the JSON payload and store the result in Kafka
      agent.sources = s1
      agent.channels = c1
      agent.sinks = k1

      agent.sources.s1.type = avro
      agent.sources.s1.channels = c1
      agent.sources.s1.bind = centos
      agent.sources.s1.port = 4444
      agent.sources.s1.interceptors = search-replace
      agent.sources.s1.interceptors.search-replace.type = search_replace
      agent.sources.s1.interceptors.search-replace.searchPattern = [^{]*(?=\\{)
      agent.sources.s1.interceptors.search-replace.replaceString =

      agent.channels.c1.type = memory
      agent.channels.c1.capacity = 100
      agent.channels.c1.transactionCapacity = 10

      agent.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
      agent.sinks.k1.kafka.bootstrap.servers = centos:9092
      agent.sinks.k1.channel = c1
      agent.sinks.k1.kafka.topic = flume
      agent.sinks.k1.kafka.flumeBatchSize = 1
      agent.sinks.k1.batchSize = 5
      agent.sinks.k1.kafka.producer.acks = 1
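The double backslash in searchPattern is easy to get wrong. The agent file follows the Java properties format, so escapes are resolved before the value reaches the regex engine. The sketch below uses only java.util.Properties to show what survives; the class and method names are hypothetical, and it illustrates the file format rather than Flume's own loading code.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class PatternEscapingDemo {
    // Parses properties-format text and returns the unescaped value of one key.
    static String load(String text, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        // File content: searchPattern = [^{]*(?=\\{)
        String asInFile = "searchPattern = [^{]*(?=\\\\{)";
        // The doubled backslash collapses to one, giving the regex [^{]*(?=\{)
        System.out.println(load(asInFile, "searchPattern"));

        // With a single backslash in the file, the backslash is silently dropped.
        System.out.println(load("searchPattern = [^{]*(?=\\{)", "searchPattern"));
    }
}
```

With only one backslash in the file the brace would arrive unescaped, which is why the configuration doubles it.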

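Explanation:

- sources: the avro source receives the Log4j logs

- interceptors: search_replace matches each event body against a regular expression and replaces the match; here it removes everything before the first { so that only the JSON remains

- channels: the memory channel buffers events in memory, which gives high throughput

- sinks: the Kafka sink writes events to the topic flume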
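To see what the interceptor rule does to a log line, the pattern can be tried with plain java.util.regex. This is a minimal sketch, assuming the interceptor replaces every match in the event body the way Java's replaceAll does; the class name and the sample log lines are made up for illustration.

```java
import java.util.regex.Pattern;

public class SearchReplaceDemo {
    // Same regex as searchPattern in the agent config, written as a Java string literal.
    static final Pattern SEARCH = Pattern.compile("[^{]*(?=\\{)");

    // Mimics an empty replaceString: every match is removed from the body.
    static String strip(String body) {
        return SEARCH.matcher(body).replaceAll("");
    }

    public static void main(String[] args) {
        // Hypothetical event body in the shape "date level [class:line] - message".
        String body = "2020-01-01 12:00:00 INFO [demo.App:12] - {'name':'Adam', 'age':'26'}";
        System.out.println(strip(body)); // {'name':'Adam', 'age':'26'}

        // Caveat: text before a nested '{' also matches and is removed.
        System.out.println(strip("INFO - {'a':{'b':1}}")); // {{'b':1}}

        // A body without any '{' is left unchanged.
        System.out.println(strip("plain text")); // plain text
    }
}
```

The second call suggests the rule suits flat JSON objects only; nested objects would need a stricter pattern.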

- Start the Flume agent: `flume-ng agent -c conf -f ./conf/flume-kafka.conf -n agent -Dflume.root.logger=DEBUG,console`

Note: set the log level to DEBUG at first, which makes problems easier to locate. Once the environment is stable, change it to INFO.

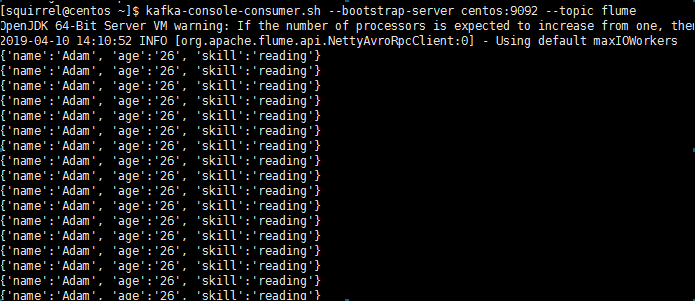

- Start Kafka and listen on the topic

Start Kafka: `kafka-server-start.sh server.properties`

Listen on the topic: `kafka-console-consumer.sh --bootstrap-server centos:9092 --topic flume`

Test code

pom:

    <dependency>
        <groupId>org.apache.flume</groupId>
        <artifactId>flume-ng-sdk</artifactId>
        <version>1.9.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flume.flume-ng-clients</groupId>
        <artifactId>flume-ng-log4jappender</artifactId>
        <version>1.9.0</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
        <version>1.7.24</version>
    </dependency>

log4j.properties

    log4j.rootLogger=debug,stdout,flume

    log4j.appender.flume=org.apache.flume.clients.log4jappender.Log4jAppender
    log4j.appender.flume.Hostname=192.168.72.129
    log4j.appender.flume.Port=4444
    log4j.appender.flume.layout=org.apache.log4j.PatternLayout
    log4j.appender.flume.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss} %p [%c:%L] - %m

    ### Output information to console ###
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.Target=System.out
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=%d{hh:mm:ss,SSS} [%t] %-5p [%c:%L] %x - %m%n

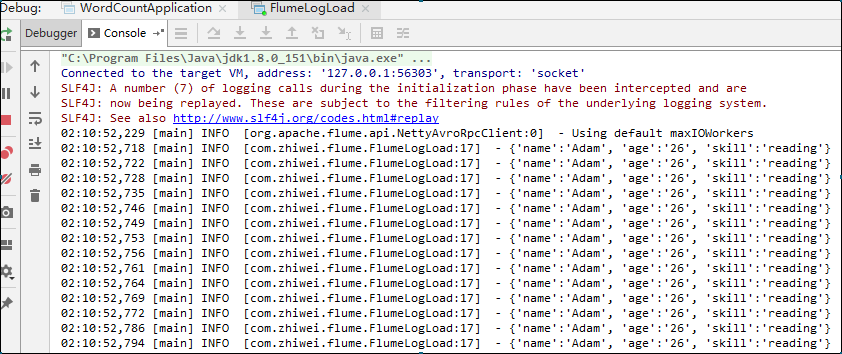

    for (int i = 0; i < 100; i++) {
        log.info("{'name':'Adam', 'age':'26', 'skill':'reading'}");
    }
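The test message uses single quotes, which strict JSON parsers reject. If whatever reads the Kafka topic expects valid JSON, the payload could be built with double quotes instead. The helper below is a hypothetical sketch, not part of the original test code: it escapes only backslashes and double quotes, so a real project would more likely use a JSON library.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class JsonMessageDemo {
    // Wraps a value in double quotes, escaping backslashes and double quotes only.
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // Renders a flat map of strings as a JSON object, keeping insertion order.
    static String toJson(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (sb.length() > 1) {
                sb.append(",");
            }
            sb.append(quote(e.getKey())).append(":").append(quote(e.getValue()));
        }
        return sb.append("}").toString();
    }

    public static void main(String[] args) {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("name", "Adam");
        fields.put("age", "26");
        fields.put("skill", "reading");
        // The resulting string could be passed to log.info(...) in the loop above.
        System.out.println(toJson(fields));
    }
}
```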

Effect