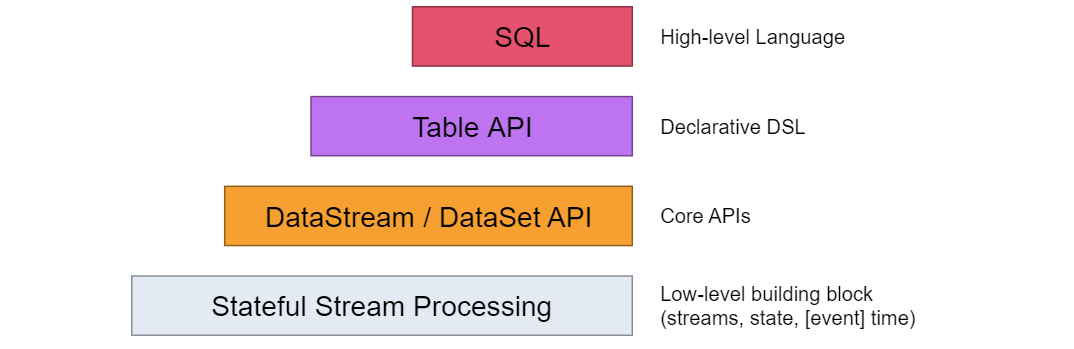

preface

Relationship between Table and SQL: SQL is the inheritance encapsulation of Table (this point is in Flink concept For example, StreamTableEnvironment can be embodied by inheriting from TableEnvironment. Therefore, the use of tables in official documents can be reflected in SQL, such as user-defined functions, Table API & custom functions under SQL Only the Table environment creation custom function in Table mode is given in. We can modify it to ste object and implement it in SQL.

Application scenario

Use FlinkSQL to convert redis dimension table information. Redis obtains the dimension table information and stores it in the function.

code

producer code reference: FlinkSQL uses DDL to create Kafka production and consumers The production data type is changed from json to csv (supplemented in this article).

Or use Table: Flink SQL & Table simple instance

Simulated production data

Producer DDL:

String ddl = "CREATE TABLE CbryProduce(\n" + "phoneNum STRING,\n" + "rechargeNum STRING,\n" + "provinceCode STRING,\n" + "cityCode STRING,\n" + "rechargeChannelCode STRING\n" + ") WITH(\n" + "'connector.type'='kafka',\n" + "'connector.version'='universal',\n" + "'connector.properties.bootstrap.servers'='KafkaClusterURL:ip:port,ip2:port',\n" + //"'connector.properties.bootstrap.servers'='localhost:9092',\n" + "'connector.topic'='event_topic_1',\n" + "'format.type'='csv',\n" + "'format.field-delimiter'='|'\n" + ")\n" ;

DML:

String insert2 = "insert into CbryProduce(phoneNum,rechargeNum,provinceCode,cityCode,rechargeChannelCode)" +

"values('1024','100','051','0750','2')";

Generate Redis dimension table information

How to generate JedisCluster object insert data:

Redis (I) Jedis single machine and cluster connection

Redis (III) redisTemplate operation and five basic data types

// Simulation data creation

// Map<String, String> cityDimensionMap = new HashedMap();

// cityDimensionMap.put("0020", "Guangzhou");

// cityDimensionMap.put("0750", "Shenzhen");

//

// Map<String, String> rechargeChannelsMap = new HashedMap();

// rechargeChannelsMap.put("1", "mobile app recharge");

// rechargeChannelsMap.put("2", "business hall recharge");

//

// jedisCluster.hmset("CityCode", cityDimensionMap);

// jedisCluster.hmset("RechargeChannels", rechargeChannelsMap);

// System.out.println(jedisCluster.hgetAll("CityCode"));

// System.out.println(jedisCluster.hgetAll("RechargeChannels"));

// System.out.println(jedisCluster.get("testttt")); // Null value returns null

Custom SQL functions

How to use FlinkSQL: FlinkSQL uses DDL to create Kafka production and consumers Or use Table: Flink SQL & Table simple instance

Here, in ScalarFunction, we only need to customize / overload an eval method:

As follows: we pass in a cityNum to the custom function and return the value corresponding to cityCodeMap.

// define function logic

// Custom SQL functions

public static class cityCodeTranslateFunction extends ScalarFunction{

Map<String, String> cityCodeMap = jedisCluster.hgetAll("CityCode");

public String eval(String cityNum) {

String res = cityCodeMap.get(cityNum);

return res == null ? "Error" : res;

}

}

Introducing custom functions

Introduce our custom functions into the StreamTableEnvironment execution environment of SQL

//StreamTableEnvironment inherits from TableEnvironment

ste.createTemporarySystemFunction("cityTranslate", cityCodeTranslateFunction.class);

ste.createTemporarySystemFunction("rechargeChannelTranslate", rechargeChannelTranslateFunction.class);

Execute printing

Table queryTable = ste.sqlQuery("select phoneNum,rechargeNum,cityCode,cityTranslate(cityCode), provinceCode,rechargeChannelCode, rechargeChannelTranslate(rechargeChannelCode)"

+ " from CbryConsumer");

DataStream<Row> result = ste.toAppendStream(queryTable, Row.class);

result.printToErr();

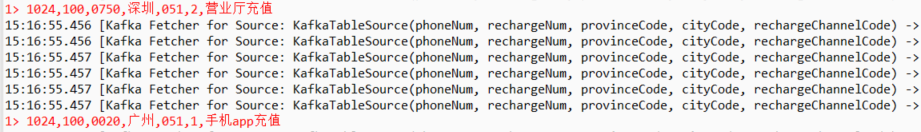

Output results

1> 10241000750, Shenzhen, 051,2, business hall recharge

1> 10241000020, Guangzhou, 051,1, mobile app recharge

Overall code

public class UserDefinedFuctions {

static JedisCluster jedisCluster;

private static GenericObjectPoolConfig getGenericObjectPoolConfig() {

GenericObjectPoolConfig genericObjectPool = new GenericObjectPoolConfig();

genericObjectPool.setMaxIdle(10);

genericObjectPool.setMaxTotal(100);

genericObjectPool.setMinEvictableIdleTimeMillis(30000); // The minimum idle time for evicting a connection is 30s

genericObjectPool.setSoftMinEvictableIdleTimeMillis(60000); // Idle eviction time 1 minute

return genericObjectPool;

}

static {

HostAndPort hostAndPort = new HostAndPort("ip", 7000);

HostAndPort hostAndPort2 = new HostAndPort("ip", 7001);

HostAndPort hostAndPort3 = new HostAndPort("ip", 7000);

HostAndPort hostAndPort4 = new HostAndPort("ip", 7001);

HostAndPort hostAndPort5 = new HostAndPort("ip", 7000);

HostAndPort hostAndPort6 = new HostAndPort("ip", 7001);

Set<HostAndPort> hostAndPortSet = new HashSet<>();

hostAndPortSet.add(hostAndPort);

hostAndPortSet.add(hostAndPort2);

hostAndPortSet.add(hostAndPort3);hostAndPortSet.add(hostAndPort4);hostAndPortSet.add(hostAndPort5);hostAndPortSet.add(hostAndPort6);

jedisCluster = new JedisCluster(hostAndPortSet, 6000, 6000, 10, password,UserDefinedFuctions.getGenericObjectPoolConfig());

// Simulation data creation

// Map<String, String> cityDimensionMap = new HashedMap();

// cityDimensionMap.put("0020", "Guangzhou");

// cityDimensionMap.put("0750", "Shenzhen");

//

// Map<String, String> rechargeChannelsMap = new HashedMap();

// rechargeChannelsMap.put("1", "mobile app recharge");

// rechargeChannelsMap.put("2", "business hall recharge");

//

// jedisCluster.hmset("CityCode", cityDimensionMap);

// jedisCluster.hmset("RechargeChannels", rechargeChannelsMap);

// System.out.println(jedisCluster.hgetAll("CityCode"));

// System.out.println(jedisCluster.hgetAll("RechargeChannels"));

// System.out.println(jedisCluster.get("testttt")); // Null value returns null

}

// define function logic

// Custom SQL functions

public static class cityCodeTranslateFunction extends ScalarFunction{

Map<String, String> cityCodeMap = jedisCluster.hgetAll("CityCode");

public String eval(String cityNum) {

String res = cityCodeMap.get(cityNum);

return res == null ? "Error" : res;

}

}

public static class rechargeChannelTranslateFunction extends ScalarFunction{

Map<String, String> rechargeChannelsMap = jedisCluster.hgetAll("RechargeChannels");

public String eval(String rechargeChannel) {

String res = rechargeChannelsMap.get(rechargeChannel);

return res == null ? "Error" : res;

}

}

public static void main(String[] args) {

final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

EnvironmentSettings settings = EnvironmentSettings.newInstance().inStreamingMode()

// .useOldPlanner() // flink

.useBlinkPlanner() // blink

.build();

StreamTableEnvironment ste = StreamTableEnvironment.create(env, settings);

String ddl = "CREATE TABLE CbryConsumer(\n" +

"phoneNum String,\n" +

"rechargeNum String,\n" +

"provinceCode String,\n" +

"cityCode String,\n" +

"rechargeChannelCode String\n" +

") WITH(\n" + "'connector.type'='kafka',\n"

+ "'connector.version'='universal',\n" + "'connector.properties.group.id'='g2_group',\n"

+ "'connector.properties.bootstrap.servers'='KafkaClusterURL:ip:port,ip2:port',\n"

+ "'connector.topic'='event_topic_1',\n" + "'connector.startup-mode' = 'latest-offset',\n"

+ "'format.type'='csv',\n"

+ "'format.field-delimiter'='|'\n" +

")\n"

;

ste.executeSql(ddl);

//StreamTableEnvironment inherits from TableEnvironment

ste.createTemporarySystemFunction("cityTranslate", cityCodeTranslateFunction.class);

ste.createTemporarySystemFunction("rechargeChannelTranslate", rechargeChannelTranslateFunction.class);

Table queryTable = ste.sqlQuery("select phoneNum,rechargeNum,cityCode,cityTranslate(cityCode), provinceCode,rechargeChannelCode, rechargeChannelTranslate(rechargeChannelCode)"

+ " from CbryConsumer");

DataStream<Row> result = ste.toAppendStream(queryTable, Row.class);

result.printToErr();

try {

env.execute();

} catch (Exception e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

}

Implementation with interaction

In practical use, it is impossible to write code once to implement a function. How to implement the user-defined function in the form of "interaction"? Answer: overloaded constructor using java polymorphism:

// define function logic

// Custom SQL functions

public static class AutoAdaptaMapDefineFunction extends ScalarFunction {

Map<String, String> redisMap;

public AutoAdaptaMapDefineFunction(String dimensionName) {

redisMap = jedisCluster.hgetAll(dimensionName);

}

public String eval(String dimensionKey) {

String res = redisMap.get(dimensionKey);

return res == null ? "Error" : res;

}

}

//ste.createTemporarySystemFunction("cityTranslate", new AutoAdaptaMapDefineFunction("CityCodeDimensionMapKey"));

For interactive sessions, you can also parameterize functions before using or registering them. In this case, you can use a function instance instead of a function class as a temporary function.

It requires parameters to be serializable in order to pass function instances to the cluster.

PS: there are two ways to inject functions: one is to pass objects, and the other is to pass class objects

void createTemporarySystemFunction(String name, Class<? extends UserDefinedFunction> functionClass);

void createTemporarySystemFunction(String name, UserDefinedFunction functionInstance);

This also provides us with the possibility of custom class loader to specify a specific class object for function injection Flink job.