Operators transform one or more DataStreams into a new DataStream. Programs can combine multiple transformations into sophisticated dataflow topologies.

This section describes the basic transformations, the effective physical partitioning after applying them, and Flink's operator chaining.

DataStream Transformations

| Transformation | Description and code samples |

Map (DataStream → DataStream)

Takes one element and produces one element. For example, a map function could double the values of the input stream.
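The doubling example below is a minimal plain-Java sketch of the one-element-in, one-element-out contract. It is not Flink code. A `java.util.List` stands in for the stream, and the class name `MapSketch` is hypothetical. In a Flink job, the same lambda would be passed to `dataStream.map(...)` as a `MapFunction<Integer, Integer>`.

```java
import java.util.List;
import java.util.stream.Collectors;

// Plain-Java model of map semantics: exactly one output element per input element.
// A java.util.List stands in for the stream; this is not Flink API code.
final class MapSketch {
    static List<Integer> doubled(List<Integer> input) {
        return input.stream()
                .map(value -> 2 * value) // the "map function": doubles each value
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(doubled(List.of(1, 2, 3))); // prints [2, 4, 6]
    }
}
```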

FlatMap (DataStream → DataStream)

Takes one element and produces zero, one, or more elements. For example, a flatMap function could split sentences into words.
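Here is a minimal plain-Java sketch of the sentence-splitting example. It makes two assumptions: a `java.util.List` stands in for the stream, and words are separated by single spaces. `FlatMapSketch` is a hypothetical name. In Flink, the loop body would live in a `FlatMapFunction` and emit each word through its `Collector`.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of flatMap semantics: each input element yields zero, one,
// or more output elements. This is not Flink API code.
final class FlatMapSketch {
    static List<String> words(List<String> sentences) {
        List<String> out = new ArrayList<>();
        for (String sentence : sentences) {
            for (String word : sentence.split(" ")) {
                if (!word.isEmpty()) { // an empty sentence contributes no words
                    out.add(word);     // plays the role of emitting one output element
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(words(List.of("to be or", "", "not"))); // prints [to, be, or, not]
    }
}
```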

Filter (DataStream → DataStream)

Evaluates a boolean function for each element and retains those for which the function returns true. For example, a filter could drop zero values.
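Below is a plain-Java sketch of the zero-dropping filter. Again, a `java.util.List` stands in for the stream, and `FilterSketch` is a hypothetical name. In Flink, the predicate would be supplied to `dataStream.filter(...)` as a `FilterFunction`.

```java
import java.util.List;
import java.util.stream.Collectors;

// Plain-Java model of filter semantics: keep an element only if the predicate is true.
final class FilterSketch {
    static List<Integer> nonZero(List<Integer> input) {
        return input.stream()
                .filter(value -> value != 0) // retained only when the predicate returns true
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(nonZero(List.of(0, 3, 0, -1))); // prints [3, -1]
    }
}
```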

KeyBy (DataStream → KeyedStream)

Logically partitions a stream into disjoint partitions. All records with the same key are assigned to the same partition. Internally, keyBy() is implemented with hash partitioning. There are different ways to specify keys. This transformation returns a KeyedStream, which is required in order to use keyed state. Note: a type cannot be used as a key if it is a POJO type that does not override hashCode() and relies on the Object.hashCode() implementation, or if it is an array of any type.
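The following plain-Java sketch illustrates the hash-partitioning idea behind keyBy(). It assumes a simplified scheme, `partition = floorMod(key.hashCode(), parallelism)`, and hypothetical `"key,value"` string records. Flink's actual assignment goes through key groups, so the concrete partition indices differ. The guarantee the sketch demonstrates is the same in both cases: every record with a given key lands in one partition.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of keyBy's hash partitioning. Assumption: partition =
// floorMod(key.hashCode(), parallelism). Flink's real assignment first maps each
// key to a key group (a murmur hash of hashCode() modulo maxParallelism) and then
// maps key groups to subtasks, so actual indices differ.
final class KeyBySketch {
    static int partitionFor(Object key, int parallelism) {
        return Math.floorMod(key.hashCode(), parallelism); // never negative
    }

    // Records are hypothetical "key,value" strings; the key is the first field.
    static List<List<String>> partitionByKey(List<String> records, int parallelism) {
        List<List<String>> partitions = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            partitions.add(new ArrayList<>());
        }
        for (String record : records) {
            String key = record.split(",")[0];
            partitions.get(partitionFor(key, parallelism)).add(record);
        }
        return partitions;
    }

    public static void main(String[] args) {
        System.out.println(partitionByKey(List.of("a,1", "b,2", "a,3"), 3));
    }
}
```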

Reduce (KeyedStream → DataStream)

A rolling reduce on a KeyedStream: combines the current element with the last reduced value for its key and emits the new value.
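Here is a minimal sketch of a rolling sum per key. It assumes a `HashMap` stands in for Flink's per-key state and `Map.Entry<String, Integer>` for keyed records; both are illustrative choices, and `RollingReduceSketch` is hypothetical. Note that one result is emitted for every input element, not one per key.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BinaryOperator;

// Plain-Java model of a rolling reduce on a keyed stream. A HashMap stands in for
// Flink's per-key state; the input list stands in for the stream.
final class RollingReduceSketch {
    static List<String> rollingSum(List<Map.Entry<String, Integer>> input) {
        BinaryOperator<Integer> reduceFunction = Integer::sum; // combines last reduced value with the new element
        Map<String, Integer> lastReduced = new HashMap<>();    // last reduced value per key
        List<String> emitted = new ArrayList<>();
        for (Map.Entry<String, Integer> element : input) {
            // merge() stores the value as-is for a new key, otherwise applies reduceFunction
            Integer updated = lastReduced.merge(element.getKey(), element.getValue(), reduceFunction);
            emitted.add(element.getKey() + "=" + updated);     // one output per input element
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> input =
                List.of(Map.entry("a", 1), Map.entry("b", 5), Map.entry("a", 2), Map.entry("a", 3));
        System.out.println(rollingSum(input)); // prints [a=1, b=5, a=3, a=6]
    }
}
```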

Fold (KeyedStream → DataStream)

A rolling fold on a KeyedStream with an initial value: combines the current element with the last folded value for its key and emits the new value. For example, a fold function that, when applied to the sequence (1,2,3,4,5), emits the sequence "start-1", "start-1-2", "start-1-2-3", ...
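Below is a plain-Java sketch of the rolling fold for a single key. It reproduces the `"start-1"`, `"start-1-2"`, ... sequence. The generic `rollingFold` helper is hypothetical. On a keyed stream, the accumulator would be kept separately for each key.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

// Plain-Java model of a rolling fold for a single key: start from an initial
// value, fold each element into it, and emit the accumulator after every element.
final class RollingFoldSketch {
    static <T, A> List<A> rollingFold(List<T> input, A initial, BiFunction<A, T, A> foldFunction) {
        List<A> emitted = new ArrayList<>();
        A accumulator = initial;
        for (T element : input) {
            accumulator = foldFunction.apply(accumulator, element);
            emitted.add(accumulator);
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<String> result = rollingFold(List.of(1, 2, 3, 4, 5), "start", (acc, value) -> acc + "-" + value);
        System.out.println(result);
        // prints [start-1, start-1-2, start-1-2-3, start-1-2-3-4, start-1-2-3-4-5]
    }
}
```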

Aggregations (KeyedStream → DataStream)

Rolling aggregations on a KeyedStream. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in the given field (the same holds for max and maxBy).
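To show the difference between min and minBy, the sketch below computes both over the records of a single key after all of them have arrived. A rolling aggregation would instead emit an updated result after each record. `Reading` is a hypothetical record type. As an assumption of the sketch, min copies the non-aggregated fields from the first record. The point is that only the aggregated field of a min result is meaningful, whereas minBy returns an actual input record.

```java
import java.util.List;

// Plain-Java model contrasting min and minBy over the records of ONE key.
// Reading is a hypothetical record type; "temperature" is the aggregated field.
final class MinVsMinBySketch {
    static final class Reading {
        final String sensor;
        final int temperature;

        private Reading(String sensor, int temperature) {
            this.sensor = sensor;
            this.temperature = temperature;
        }

        static Reading of(String sensor, int temperature) {
            return new Reading(sensor, temperature);
        }

        @Override
        public String toString() {
            return sensor + ":" + temperature;
        }
    }

    // min: only the aggregated field is the minimum. In this sketch the other
    // fields are copied from the first record; do not rely on their values.
    static Reading min(List<Reading> readings) {
        Reading first = readings.get(0);
        int minimum = first.temperature;
        for (Reading r : readings) {
            minimum = Math.min(minimum, r.temperature);
        }
        return Reading.of(first.sensor, minimum);
    }

    // minBy: returns the complete record holding the minimum (the first one on ties).
    static Reading minBy(List<Reading> readings) {
        Reading best = readings.get(0);
        for (Reading r : readings) {
            if (r.temperature < best.temperature) {
                best = r;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Reading> readings = List.of(Reading.of("s1", 20), Reading.of("s2", 15), Reading.of("s3", 18));
        System.out.println(min(readings));   // prints s1:15
        System.out.println(minBy(readings)); // prints s2:15
    }
}
```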

Window (KeyedStream → WindowedStream)

Windows can be defined on already partitioned KeyedStreams. Windows group the data in each key according to some characteristic, for example the data that arrived in the last five seconds. (Window details: //TODO)
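To illustrate how a time-based window groups data, here is a sketch of tumbling-window assignment. This is the kind of window created by `TumblingEventTimeWindows.of(Time.seconds(5))`. The sketch assumes windows aligned to the epoch with no offset, and the helper names are hypothetical. In Flink this grouping happens per key, and an event-time window fires when the watermark passes its end.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java model of tumbling-window assignment (no window offset assumed).
final class TumblingWindowSketch {
    // Start of the window of length sizeMillis that contains timestampMillis;
    // floorMod keeps this correct for negative timestamps as well.
    static long windowStart(long timestampMillis, long sizeMillis) {
        return timestampMillis - Math.floorMod(timestampMillis, sizeMillis);
    }

    // Groups event timestamps by the start of their window, in window order.
    static Map<Long, List<Long>> assign(List<Long> timestamps, long sizeMillis) {
        Map<Long, List<Long>> windows = new TreeMap<>();
        for (long ts : timestamps) {
            windows.computeIfAbsent(windowStart(ts, sizeMillis), start -> new ArrayList<>()).add(ts);
        }
        return windows;
    }

    public static void main(String[] args) {
        System.out.println(assign(List.of(1000L, 4999L, 5000L, 12000L), 5000L));
        // prints {0=[1000, 4999], 5000=[5000], 10000=[12000]}
    }
}
```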

WindowAll (DataStream → AllWindowedStream)

Windows can be defined on regular DataStreams. Windows group all the stream events according to some characteristic, for example the data that arrived in the last five seconds. Note: in most cases this is a non-parallel transformation; all records are gathered in a single task for the windowAll operator.

```java
dataStream.windowAll(TumblingEventTimeWindows.of(Time.seconds(5))); // Last 5 seconds of data
```

Window Apply (WindowedStream → DataStream, AllWindowedStream → DataStream)

Applies a general function to the window as a whole, for example a function that manually sums the elements of a window. Note: if you are using a windowAll transformation, you need to use an AllWindowFunction instead.

Window Reduce (WindowedStream → DataStream)

Applies a reduce function to the window and returns the reduced value.

Window Fold (WindowedStream → DataStream)

Applies a fold function to the window and returns the folded value. For example, a fold function applied to the sequence (1,2,3,4,5) folds it into the string "start-1-2-3-4-5".

Aggregations on windows (WindowedStream → DataStream)

Aggregates the contents of a window. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in the given field (the same holds for max and maxBy).

Union (DataStream* → DataStream)

Union of two or more data streams, creating a new stream that contains all the elements from all the streams. Note: if you union a data stream with itself, you get each element twice in the resulting stream.

```java
dataStream.union(otherStream1, otherStream2, ...);
```
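Here is a plain-Java sketch of the union semantics, including the self-union note above. It simply concatenates the inputs. A real union interleaves elements from its inputs in no guaranteed order. `UnionSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of union: the result contains every element of every input.
// A real union interleaves elements in no guaranteed order; this sketch concatenates.
final class UnionSketch {
    @SafeVarargs
    static <T> List<T> union(List<T>... streams) {
        List<T> result = new ArrayList<>();
        for (List<T> stream : streams) {
            result.addAll(stream);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Integer> stream = List.of(1, 2);
        System.out.println(union(stream, List.of(3))); // prints [1, 2, 3]
        System.out.println(union(stream, stream));     // prints [1, 2, 1, 2]: self-union duplicates every element
    }
}
```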

Window Join (DataStream, DataStream → DataStream)

Joins two data streams on a given key and a common window.

Interval Join (KeyedStream, KeyedStream → DataStream)

Joins two elements e1 and e2 of two keyed streams with a common key over a given time interval, such that e1.timestamp + lowerBound <= e2.timestamp <= e1.timestamp + upperBound.
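Below is a naive plain-Java sketch of the interval-join condition, with both bounds inclusive as in the inequality above. `Event` is a hypothetical record type. The nested loop is for clarity only. Flink's interval join (`keyedStream.intervalJoin(otherKeyedStream).between(...)`) buffers elements in state instead of comparing whole lists.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of an interval join between two keyed inputs.
final class IntervalJoinSketch {
    // Hypothetical event: a key plus an event timestamp in milliseconds.
    static final class Event {
        final String key;
        final long timestamp;

        private Event(String key, long timestamp) {
            this.key = key;
            this.timestamp = timestamp;
        }

        static Event of(String key, long timestamp) {
            return new Event(key, timestamp);
        }

        @Override
        public String toString() {
            return key + "@" + timestamp;
        }
    }

    // Emits every pair (e1, e2) with equal keys and
    // e1.timestamp + lowerBound <= e2.timestamp <= e1.timestamp + upperBound.
    static List<String> join(List<Event> left, List<Event> right, long lowerBound, long upperBound) {
        List<String> pairs = new ArrayList<>();
        for (Event e1 : left) {
            for (Event e2 : right) {
                boolean sameKey = e1.key.equals(e2.key);
                boolean inInterval = e1.timestamp + lowerBound <= e2.timestamp
                        && e2.timestamp <= e1.timestamp + upperBound;
                if (sameKey && inInterval) {
                    pairs.add(e1 + "|" + e2);
                }
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        List<Event> left = List.of(Event.of("a", 10));
        List<Event> right = List.of(Event.of("a", 8), Event.of("a", 12), Event.of("a", 20), Event.of("b", 11));
        System.out.println(join(left, right, -2, 5)); // prints [a@10|a@8, a@10|a@12]
    }
}
```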

Window CoGroup (DataStream, DataStream → DataStream)

Cogroups two data streams on a given key and a common window.

Connect (DataStream, DataStream → ConnectedStreams)

Connects two data streams, retaining their types. Connect allows the two streams to share state.

CoMap, CoFlatMap (ConnectedStreams → DataStream)

Similar to map and flatMap, but on a connected data stream.

Split (DataStream → SplitStream)

Splits the stream into two or more streams according to some criterion.

Select (SplitStream → DataStream)

Selects one or more streams from a split stream.
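Split and select work as a pair. The sketch below models them in plain Java with a hypothetical even/odd criterion. `outputNames` plays the role of the split criterion; in Flink, an `OutputSelector` returned the names for each element. `select` keeps the elements tagged with any of the requested names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of split/select: split tags each element with output names,
// select keeps the elements carrying any requested name. Not Flink API code.
final class SplitSelectSketch {
    // The hypothetical "split" criterion: even numbers go to "even", the rest to "odd".
    static List<String> outputNames(int value) {
        return value % 2 == 0 ? List.of("even") : List.of("odd");
    }

    static List<Integer> select(List<Integer> input, String... names) {
        List<String> wanted = Arrays.asList(names);
        List<Integer> selected = new ArrayList<>();
        for (int value : input) {
            for (String name : outputNames(value)) {
                if (wanted.contains(name)) {
                    selected.add(value);
                    break; // add each element at most once, even if several names match
                }
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        List<Integer> input = List.of(1, 2, 3, 4);
        System.out.println(select(input, "even"));        // prints [2, 4]
        System.out.println(select(input, "odd"));         // prints [1, 3]
        System.out.println(select(input, "even", "odd")); // prints [1, 2, 3, 4]
    }
}
```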

Iterate (DataStream → IterativeStream → DataStream)

Creates a "feedback" loop in the flow by redirecting the output of one operator to some previous operator. This is especially useful for defining algorithms that continuously update a model. A typical iteration starts from a stream and applies the iteration body continuously: elements greater than 0 are sent back to the feedback channel, and the remaining elements are forwarded downstream. (Iteration details: //TODO)
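The feedback loop can be simulated in plain Java with a queue. This sketch assumes a hypothetical iteration body `v -> v - 1`. Results greater than 0 are fed back to the head of the iteration, and the others are emitted downstream. In Flink, the same structure is built with `iterate()`, which returns an `IterativeStream`, and `closeWith(...)` for the feedback stream. The loop only terminates if every element eventually reaches a value of at most 0.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.LongUnaryOperator;

// Plain-Java simulation of an iteration with a feedback channel.
final class IterateSketch {
    static List<Long> run(List<Long> initial, LongUnaryOperator body) {
        Deque<Long> pending = new ArrayDeque<>(initial); // initial input plus fed-back elements
        List<Long> output = new ArrayList<>();
        while (!pending.isEmpty()) {
            long value = body.applyAsLong(pending.poll()); // apply the iteration body
            if (value > 0) {
                pending.add(value);  // feedback channel: back to the head of the iteration
            } else {
                output.add(value);   // forwarded downstream
            }
        }
        return output;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of(3L, 0L, 1L), v -> v - 1)); // prints [-1, 0, 0]
    }
}
```

In a real job the order of the downstream output is not guaranteed; the queue here only makes the simulation deterministic.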

Extract Timestamps (DataStream → DataStream)

Extracts timestamps from records in order to work with windows that use event-time semantics. (Event time details: //TODO)

Project (DataStream → DataStream)

This transformation is only available on DataStreams of tuples. It selects a subset of fields from each tuple:

```java
DataStream<Tuple3<Integer, Double, String>> in = // [...]
DataStream<Tuple2<String, Integer>> out = in.project(2, 0);
```

Physical Partitioning

If required, Flink also gives low-level control over the exact stream partitioning after a transformation through the following functions.

| Method | Description and code samples |

Custom partitioning (DataStream → DataStream)

Uses a user-defined Partitioner to select the target task for each element.

Random partitioning (DataStream → DataStream)

Partitions elements randomly according to a uniform distribution.

Rebalancing (Round-robin partitioning) (DataStream → DataStream)

Partitions elements round-robin, creating equal load per partition. This is useful for performance optimization in the presence of data skew.

```java
dataStream.rebalance();
```
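Here is a plain-Java sketch of round-robin distribution. `RebalanceSketch` is a hypothetical name. It starts at partition 0 for readability. A real partitioner may start at a different channel, but either way the resulting partition sizes differ by at most one element.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java model of round-robin (rebalance) partitioning: the i-th record goes
// to partition i % parallelism, so partition sizes differ by at most one.
final class RebalanceSketch {
    static List<List<String>> roundRobin(List<String> records, int parallelism) {
        List<List<String>> partitions = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            partitions.add(new ArrayList<>());
        }
        int next = 0; // assumption: start at partition 0
        for (String record : records) {
            partitions.get(next).add(record);
            next = (next + 1) % parallelism;
        }
        return partitions;
    }

    public static void main(String[] args) {
        List<String> records = List.of("r1", "r2", "r3", "r4", "r5", "r6", "r7");
        System.out.println(roundRobin(records, 3)); // prints [[r1, r4, r7], [r2, r5], [r3, r6]]
    }
}
```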

|

|

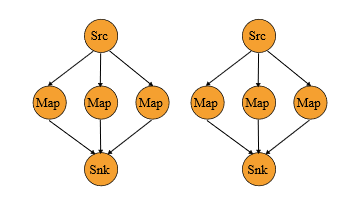

Rescaling DataStream → DataStream |

Divide elements (loops) into subsets of downstream operations.This is useful if you want pipelines, for example, to spread the load from each parallel instance of a source to a subset of several mappers, but you don't want rebalance() to result in a complete rebalance of the data.This will require only local data transfer, not over the network, depending on other configuration values, such as the number of slots in TaskManagers. The subset of downstream operations to which an upstream operation sends elements depends on the degree of parallelism between the upstream and downstream operations.For example, if an upstream operation has parallelism 2 and a downstream operation has parallelism 6, one upstream operation will assign elements to three downstream operations and the other upstream operation to three other downstream operations.On the other hand, if the parallelism of the downstream operation is 2 and that of the upstream operation is 6, the parallelism of the upstream operation is 3. One or more downstream operations have a different number of inputs than upstream operations when different degrees of parallelism are not multiples of each other.
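The connection pattern described above can be written down as index arithmetic. The sketch below is a simplified model that assumes contiguous ranges computed with integer division. It reproduces the 2→6 and 6→2 examples, but Flink's exact assignment for parallelisms that are not multiples of each other may differ. `RescaleSketch.targets` is a hypothetical helper.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of the rescale connection pattern (an assumption, not Flink's code):
// which downstream subtasks a given upstream subtask sends elements to.
final class RescaleSketch {
    static List<Integer> targets(int up, int upstreamParallelism, int downstreamParallelism) {
        List<Integer> result = new ArrayList<>();
        if (upstreamParallelism >= downstreamParallelism) {
            // Several upstream subtasks share a single downstream subtask.
            result.add(up * downstreamParallelism / upstreamParallelism);
        } else {
            // One upstream subtask fans out, round-robin, to a contiguous range of downstream subtasks.
            int from = up * downstreamParallelism / upstreamParallelism;
            int to = (up + 1) * downstreamParallelism / upstreamParallelism;
            for (int d = from; d < to; d++) {
                result.add(d);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        for (int up = 0; up < 2; up++) {
            System.out.println("2->6, upstream " + up + " -> " + targets(up, 2, 6));
        }
        for (int up = 0; up < 6; up++) {
            System.out.println("6->2, upstream " + up + " -> " + targets(up, 6, 2));
        }
    }
}
```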

Broadcasting (DataStream → DataStream)

Broadcasts elements to every partition.

```java
dataStream.broadcast();
```

Task Chaining and Resource Groups

Chaining two subsequent transformations means co-locating them in the same thread for better performance. Flink chains operators by default where possible (for example, two subsequent map transformations). The API gives fine-grained control over chaining if needed:

Use StreamExecutionEnvironment.disableOperatorChaining() to disable chaining for the whole job. For finer-grained control, the following functions are available. Note that they can only be used right after a DataStream transformation, because they refer to the previous transformation. For example, you can use someStream.map(...).startNewChain(), but you cannot use someStream.startNewChain().

A resource group is a slot in Flink (see Slot //TODO). If needed, operators can be manually isolated in separate slots.

| Method | Description and code samples |

Start new chain

Begins a new chain, starting with this operator. In the following example the two mappers are chained, while the filter is not chained to the first mapper.

```java
someStream.filter(...).map(...).startNewChain().map(...);
```

Disable chaining

Prevents the map operator from being chained.

Set slot sharing group

Sets the slot sharing group of an operation. Flink puts operations with the same slot sharing group into the same slot, while keeping operations without that slot sharing group in other slots. This can be used to isolate slots. If all input operations are in the same slot sharing group, the slot sharing group is inherited from them. The default slot sharing group is named "default", and operations can be explicitly put into it by calling slotSharingGroup("default").
