This article describes how Flink reads data from Kafka and sinks it to Redis in real time. According to the Flink official documents, the Redis sink only provides an at-least-once fault-tolerance guarantee: after a failure, some records may be written to Redis more than once. We therefore make the writes idempotent. For the same key, newer data simply overwrites older data, so writing a record a second time does not change the result, which gives effectively exactly-once results.
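The idempotency argument can be illustrated with a small pure-Java sketch. No Redis client is involved: a `HashMap` stands in for one Redis hash, `overwrite` mimics `HSET` (last write wins) and `increment` mimics `HINCRBY` (every write adds). The class and method names are hypothetical. Delivering the same writes twice, as an at-least-once sink may do after a failure, leaves the overwrite result unchanged but double-counts with increments.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical demo class: a HashMap stands in for one Redis hash (field -> value).
public class IdempotentWriteDemo {

    // Overwrite semantics (like HSET): the last write for a field wins.
    static Map<String, Long> overwrite(List<Map.Entry<String, Long>> writes, int deliveries) {
        Map<String, Long> hash = new HashMap<>();
        for (int d = 0; d < deliveries; d++) {          // deliveries > 1 simulates a replay
            for (Map.Entry<String, Long> w : writes) {
                hash.put(w.getKey(), w.getValue());
            }
        }
        return hash;
    }

    // Increment semantics (like HINCRBY): every delivery adds to the stored value.
    static Map<String, Long> increment(List<Map.Entry<String, Long>> writes, int deliveries) {
        Map<String, Long> hash = new HashMap<>();
        for (int d = 0; d < deliveries; d++) {
            for (Map.Entry<String, Long> w : writes) {
                hash.merge(w.getKey(), w.getValue(), Long::sum);
            }
        }
        return hash;
    }

    public static void main(String[] args) {
        // Input "hello hello world": an overwriting sink receives running totals,
        // an incrementing sink would receive per-record deltas.
        List<Map.Entry<String, Long>> totals = List.of(
                Map.entry("hello", 1L), Map.entry("hello", 2L), Map.entry("world", 1L));
        List<Map.Entry<String, Long>> deltas = List.of(
                Map.entry("hello", 1L), Map.entry("hello", 1L), Map.entry("world", 1L));

        System.out.println(overwrite(totals, 1).equals(overwrite(totals, 2))); // true
        System.out.println(increment(deltas, 1).equals(increment(deltas, 2))); // false
    }
}
```

This is why the sink later in this article stores absolute counts with `HSET` rather than adding deltas.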

1. config.properties configuration file

```properties
bootstrap.server=192.168.204.210:9092,192.168.204.211:9092,192.168.204.212:9092
group.id=testGroup
auto.offset.reset=earliest
enable.auto.commit=false
topics=words
redis.host=192.168.204.210
redis.port=6379
redis.password=123456
redis.timeout=5000
redis.db=0
```
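These keys are consumed through Flink's `ParameterTool`, using `getRequired` for mandatory keys and `get`/`getLong`/`getInt` with a default value otherwise. The sketch below mimics that lookup pattern with plain `java.util.Properties` so it runs without Flink; the class and helper names are hypothetical. Note that `checkpoint.interval` is not in the file, so the job falls back to its 5000 ms default.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

// Hypothetical demo: reads config keys the way the job does, but with java.util.Properties.
public class ConfigDemo {

    // Fail fast on a missing mandatory key, similar in spirit to ParameterTool.getRequired.
    static String required(Properties p, String key) {
        String v = p.getProperty(key);
        if (v == null) {
            throw new IllegalArgumentException("Missing required key: " + key);
        }
        return v;
    }

    static Properties load(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for an in-memory reader
        }
        return p;
    }

    public static void main(String[] args) {
        Properties p = load("bootstrap.server=192.168.204.210:9092,192.168.204.211:9092\n"
                + "group.id=testGroup\n"
                + "topics=words\n");
        // "topics" may list several topics separated by commas
        List<String> topics = Arrays.asList(required(p, "topics").split(","));
        // Optional key with a default, like parameters.getLong("checkpoint.interval", 5000L)
        long interval = Long.parseLong(p.getProperty("checkpoint.interval", "5000"));
        System.out.println(topics + " " + interval); // [words] 5000
    }
}
```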

2. FlinkUtils tool class

```java
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.api.common.serialization.DeserializationSchema;
import org.apache.flink.api.common.time.Time;
import org.apache.flink.api.java.utils.ParameterTool;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.CheckpointConfig;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;
import org.apache.kafka.clients.consumer.ConsumerConfig;

/**
 * FlinkUtils tool class (continuously updated)
 *
 * @author liuzebiao
 * @Date 2020-2-18 9:11
 */
public class FlinkUtils {

    private static final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

    /**
     * Returns the Flink streaming environment.
     */
    public static StreamExecutionEnvironment getEnv() {
        return env;
    }

    /**
     * Creates a stream that reads data from Kafka (exactly once, with checkpointing enabled).
     *
     * @param parameters job parameters loaded from config.properties
     * @param clazz      DeserializationSchema implementation with a public no-arg constructor
     * @param <T>        type of the deserialized records
     */
    public static <T> DataStream<T> createKafkaStream(ParameterTool parameters,
            Class<? extends DeserializationSchema<T>> clazz)
            throws IllegalAccessException, InstantiationException {
        // Register the parameters globally so operators (e.g. the Redis sink) can read them
        env.getConfig().setGlobalJobParameters(parameters);

        // 1. Enable checkpointing in EXACTLY_ONCE mode (required for exactly once with Kafka).
        //    Without checkpointing, the default restart strategy is "no restart".
        env.enableCheckpointing(parameters.getLong("checkpoint.interval", 5000L), CheckpointingMode.EXACTLY_ONCE);

        // 2. With checkpointing enabled, the default restart strategy is fixed delay with unlimited retries.
        //    Here: restart at most 10 times, 20 seconds apart (overrides the setting in flink-conf.yaml).
        env.getConfig().setRestartStrategy(RestartStrategies.fixedDelayRestart(10, Time.seconds(20)));

        // Keep the externalized checkpoint data when the job is cancelled manually
        // (it is also kept when the job fails), so the job can be resumed from it
        env.getCheckpointConfig().enableExternalizedCheckpoints(
                CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

        /* Source: read messages from Kafka */
        Properties properties = new Properties();
        // Kafka broker addresses
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, parameters.getRequired("bootstrap.server"));
        // Consumer group ID
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, parameters.getRequired("group.id"));
        // If no offset has been recorded, start consuming from the earliest message
        properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, parameters.get("auto.offset.reset", "earliest"));
        // Do not let the Kafka consumer auto-commit offsets; Flink tracks them in its checkpoints
        properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, parameters.get("enable.auto.commit", "false"));

        String topics = parameters.getRequired("topics");
        List<String> topicList = Arrays.asList(topics.split(","));
        FlinkKafkaConsumer<T> kafkaConsumer = new FlinkKafkaConsumer<>(topicList, clazz.newInstance(), properties);
        return env.addSource(kafkaConsumer);
    }
}
```
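The restart settings determine how long an outage (for example of Redis) the job can survive. The sketch below is a hypothetical, simplified model of a fixed-delay policy, not Flink's own implementation: each failure consumes one attempt, and once the budget is spent the job fails permanently. With `fixedDelayRestart(10, Time.seconds(20))` that is roughly 10 × 20 s = 200 s of tolerated downtime, somewhat more in practice because each restart attempt itself takes time.

```java
import java.time.Duration;

// Hypothetical model of a fixed-delay restart policy (not Flink's implementation).
public class FixedDelayRestartDemo {

    private final int maxAttempts;
    private final Duration delay;
    private int attempts = 0;

    FixedDelayRestartDemo(int maxAttempts, Duration delay) {
        this.maxAttempts = maxAttempts;
        this.delay = delay;
    }

    // Called on each job failure: returns true if another restart is allowed.
    boolean onFailure() {
        if (attempts >= maxAttempts) {
            return false; // budget exhausted: the job fails permanently
        }
        attempts++;
        return true;
    }

    // Approximate outage a dependency may have before the budget runs out,
    // ignoring the time each restart attempt itself takes.
    Duration minimumToleratedOutage() {
        return delay.multipliedBy(maxAttempts);
    }

    public static void main(String[] args) {
        FixedDelayRestartDemo policy = new FixedDelayRestartDemo(10, Duration.ofSeconds(20));
        int restarts = 0;
        while (policy.onFailure()) { // simulate a sink that fails on every attempt
            restarts++;
        }
        System.out.println(restarts);                        // 10
        System.out.println(policy.minimumToleratedOutage()); // PT3M20S
    }
}
```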

3. Flink integrates Kafka

How Flink integrates with Kafka to achieve exactly once is not covered in this article. You can refer to: Flink integrates Kafka (to realize exactly once)

4. Customize Redis Sink

Additional streaming connectors for Flink (including ActiveMQ, Flume, Redis, Akka and Netty) are released through Apache Bahir. The official link is: Flink official Apache Bahir sink link

The official Redis sink is not very convenient for our use case, so here we write a custom Redis sink.

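```java
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.api.java.utils.ParameterTool;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;
import redis.clients.jedis.Jedis;

/**
 * Custom Redis Sink
 *
 * @author liuzebiao
 * @Date 2020-2-18 10:26
 */
public class MyRedisSink extends RichSinkFunction<Tuple3<String, String, Integer>> {

    private transient Jedis jedis;

    @Override
    public void open(Configuration config) {
        // Read the Redis settings from the global job parameters registered in FlinkUtils
        ParameterTool parameters = (ParameterTool) getRuntimeContext().getExecutionConfig().getGlobalJobParameters();
        String host = parameters.getRequired("redis.host");
        String password = parameters.get("redis.password", "");
        int port = parameters.getInt("redis.port", 6379);
        int timeout = parameters.getInt("redis.timeout", 5000);
        int db = parameters.getInt("redis.db", 0);
        jedis = new Jedis(host, port, timeout);
        // Only authenticate when a password is configured; AUTH fails on a server without one
        if (!password.isEmpty()) {
            jedis.auth(password);
        }
        jedis.select(db);
    }

    @Override
    public void invoke(Tuple3<String, String, Integer> value, Context context) throws Exception {
        if (!jedis.isConnected()) {
            jedis.connect();
        }
        // HSET key field value: overwrites the previous count for this word (idempotent)
        jedis.hset(value.f0, value.f1, String.valueOf(value.f2));
    }

    @Override
    public void close() throws Exception {
        if (jedis != null) {
            jedis.close();
        }
    }
}
```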

5. Code

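```java
import java.util.Arrays;

import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.api.common.typeinfo.Types;
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.api.java.utils.ParameterTool;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

/**
 * Reads words from Kafka in real time, counts them and saves the results to Redis
 *
 * @author liuzebiao
 * @Date 2020-2-18 9:50
 */
public class Test {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = FlinkUtils.getEnv();
        // Replace with the path of your config.properties file
        ParameterTool parameters = ParameterTool.fromPropertiesFile("/path/to/config.properties");
        DataStream<String> kafkaStream = FlinkUtils.createKafkaStream(parameters, SimpleStringSchema.class);

        // Split each line into words (skipping empty tokens) and emit ("WordCount", word, 1)
        SingleOutputStreamOperator<Tuple3<String, String, Integer>> tuple3Operator = kafkaStream
                .flatMap((String line, Collector<Tuple3<String, String, Integer>> out) ->
                        Arrays.stream(line.split("\\s+"))
                                .filter(word -> !word.isEmpty())
                                .forEach(word -> out.collect(Tuple3.of("WordCount", word, 1))))
                // Lambdas lose generic type information, so declare the output type explicitly
                .returns(Types.TUPLE(Types.STRING, Types.STRING, Types.INT));

        // Group by word (field 1) and keep a running sum of the counts (field 2)
        SingleOutputStreamOperator<Tuple3<String, String, Integer>> sum = tuple3Operator.keyBy(1).sum(2);

        // Write each updated count to the Redis hash "WordCount"
        sum.addSink(new MyRedisSink());
        env.execute("Test");
    }
}
```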
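A rolling `keyBy(...).sum(...)` emits one updated total for every input record rather than a single final result, so the latest `HSET` for a word always carries that word's full count. The plain-Java simulation below illustrates this, assuming words are separated by whitespace; the class name is hypothetical and a `HashMap` stands in for Flink's keyed state.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical simulation of flatMap -> keyBy(word) -> sum(count); no Flink classes are used.
public class RunningWordCountDemo {

    // Returns the "word=count" updates the sink would receive, in arrival order.
    static List<String> process(List<String> lines) {
        Map<String, Integer> keyedState = new HashMap<>(); // stands in for Flink keyed state
        List<String> emitted = new ArrayList<>();
        for (String line : lines) {
            // flatMap: one (word, 1) per whitespace-separated token, skipping empty tokens
            Arrays.stream(line.split("\\s+"))
                  .filter(word -> !word.isEmpty())
                  .forEach(word -> {
                      // sum: add 1 to the word's running total and emit the new total
                      int total = keyedState.merge(word, 1, Integer::sum);
                      emitted.add(word + "=" + total);
                  });
        }
        return emitted;
    }

    public static void main(String[] args) {
        System.out.println(process(List.of("hello flink", "hello redis")));
        // [hello=1, flink=1, hello=2, redis=1]
    }
}
```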

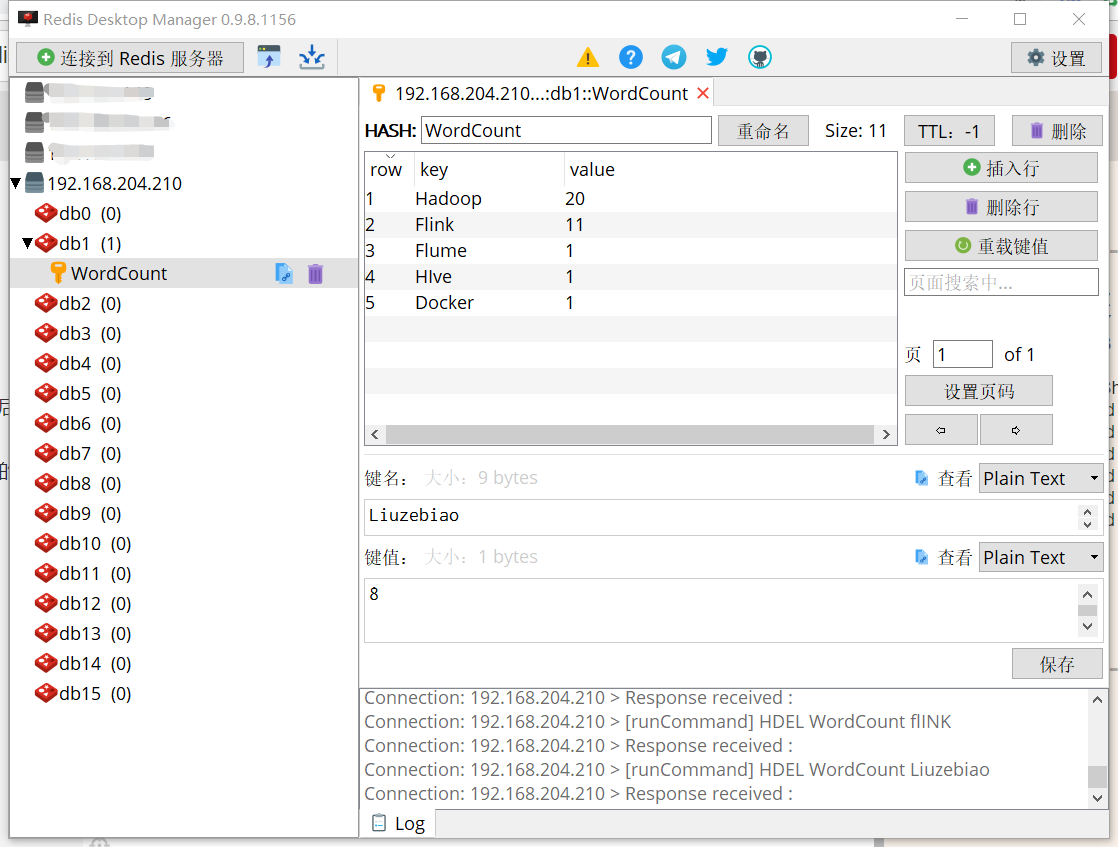

6. Test results

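- The job reads data from Kafka and saves the computed results to Redis in real time.

- If the Redis service fails while Kafka keeps receiving messages, the job restarts from its last successful checkpoint once Redis is available again (provided this happens before the configured restart attempts are used up) and recomputes the data from the outage period, so no data is lost.

- Data is saved to Redis by overwriting: a later write for the same key and field replaces the earlier one, so records replayed after a recovery simply overwrite earlier results. This keeps the stored results as close to exactly once as possible; during a replay, a count can briefly fall back to an older value before catching up.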
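The recovery behaviour described in these results can be illustrated with a simplified pure-Java simulation. It assumes that a checkpoint captures the Kafka offset and the keyed counts at the same point, that a failure discards in-memory state while Redis keeps what was already written, and that recovery replays from the checkpointed offset. The class and method names are hypothetical; a `List` stands in for one Kafka partition and `HashMap`s stand in for keyed state and the Redis hash.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical simulation: checkpoint, failure, restore and replay with an overwriting sink.
public class ReplayRecoveryDemo {

    // Processes log[from, to) on top of state, writing each running total to redis (HSET-like).
    static void run(List<String> log, int from, int to,
                    Map<String, Integer> state, Map<String, String> redis) {
        for (int offset = from; offset < to; offset++) {
            String word = log.get(offset);
            int total = state.merge(word, 1, Integer::sum);
            redis.put(word, String.valueOf(total)); // overwrite, never increment
        }
    }

    // Checkpoint after `checkpointAt` records, crash after `failAt` records,
    // then restore the checkpoint and replay to the end of the log.
    static Map<String, String> runWithFailure(List<String> log, int checkpointAt, int failAt) {
        Map<String, Integer> state = new HashMap<>();
        Map<String, String> redis = new HashMap<>();
        run(log, 0, checkpointAt, state, redis);
        Map<String, Integer> checkpointState = new HashMap<>(state); // snapshot of keyed state
        int checkpointOffset = checkpointAt;                         // snapshot of the Kafka offset
        run(log, checkpointAt, failAt, state, redis);                // work done after the checkpoint
        // Failure: in-memory state is lost, Redis keeps whatever was already written
        state = new HashMap<>(checkpointState);
        run(log, checkpointOffset, log.size(), state, redis);        // replay from the checkpoint
        return redis;
    }

    public static void main(String[] args) {
        List<String> log = List.of("hello", "flink", "hello", "redis", "hello");
        Map<String, String> clean = new HashMap<>();
        run(log, 0, log.size(), new HashMap<>(), clean);
        System.out.println(clean.equals(runWithFailure(log, 2, 4))); // true
    }
}
```

Replacing `put` with an additive update in `run` would make the failure run disagree with the clean run, which is the double counting the overwrite strategy avoids.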

The data saved to Redis is as follows:

That is all for reading data from Kafka with Flink and saving it to Redis.
