Preface

In the previous article, "Learn Flink from 0 to 1: Introduction to Data Source", I briefly introduced Flink data sources and user-defined data sources. In this article, I will cover them in more detail and write a demo to help you understand.

Flink Kafka source

Preparation

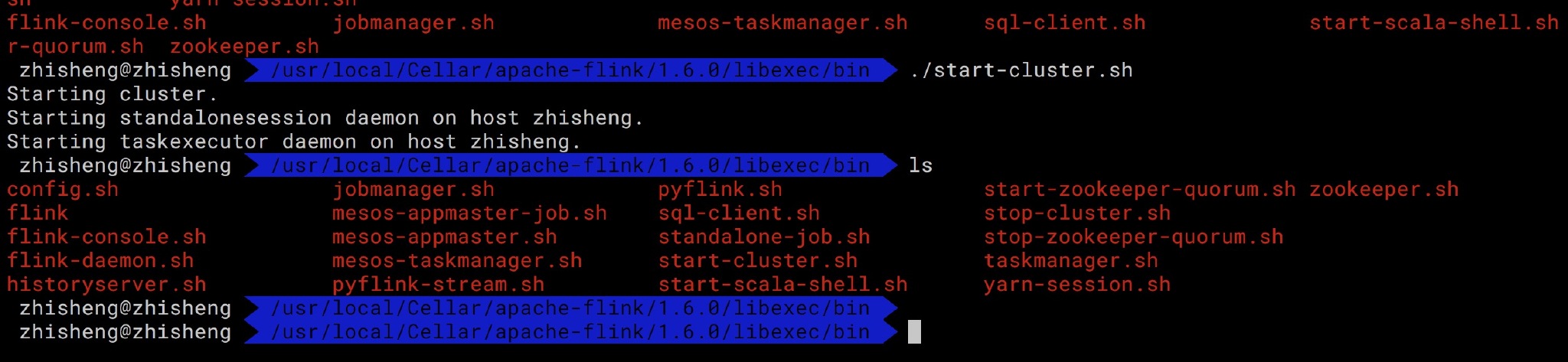

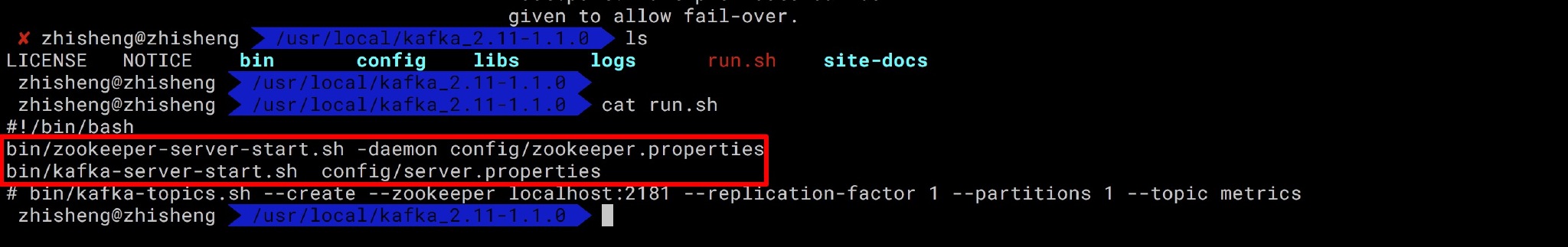

Let's look at a demo in which Flink reads data from a Kafka topic. First, you need to install Flink and Kafka.

Then start Flink, ZooKeeper and Kafka.

All right, it's on!

Maven dependencies

    <!-- flink java -->
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>${flink.version}</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
        <scope>provided</scope>
    </dependency>

    <!-- logging -->
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
        <version>1.7.7</version>
        <scope>runtime</scope>
    </dependency>
    <dependency>
        <groupId>log4j</groupId>
        <artifactId>log4j</artifactId>
        <version>1.2.17</version>
        <scope>runtime</scope>
    </dependency>

    <!-- flink kafka connector -->
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-kafka-0.11_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>

    <!-- alibaba fastjson -->
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.51</version>
    </dependency>

Send data to Kafka

Entity class Metric.java

    package com.zhisheng.flink.model;

    import java.util.Map;

    /**
     * Desc:
     * weixin: zhisheng_tian
     * blog: http://www.54tianzhisheng.cn/
     */
    public class Metric {
        public String name;
        public long timestamp;
        public Map<String, Object> fields;
        public Map<String, String> tags;

        public Metric() {
        }

        public Metric(String name, long timestamp, Map<String, Object> fields, Map<String, String> tags) {
            this.name = name;
            this.timestamp = timestamp;
            this.fields = fields;
            this.tags = tags;
        }

        @Override
        public String toString() {
            return "Metric{" +
                    "name='" + name + '\'' +
                    ", timestamp='" + timestamp + '\'' +
                    ", fields=" + fields +
                    ", tags=" + tags +
                    '}';
        }

        public String getName() { return name; }

        public void setName(String name) { this.name = name; }

        public long getTimestamp() { return timestamp; }

        public void setTimestamp(long timestamp) { this.timestamp = timestamp; }

        public Map<String, Object> getFields() { return fields; }

        public void setFields(Map<String, Object> fields) { this.fields = fields; }

        public Map<String, String> getTags() { return tags; }

        public void setTags(Map<String, String> tags) { this.tags = tags; }
    }

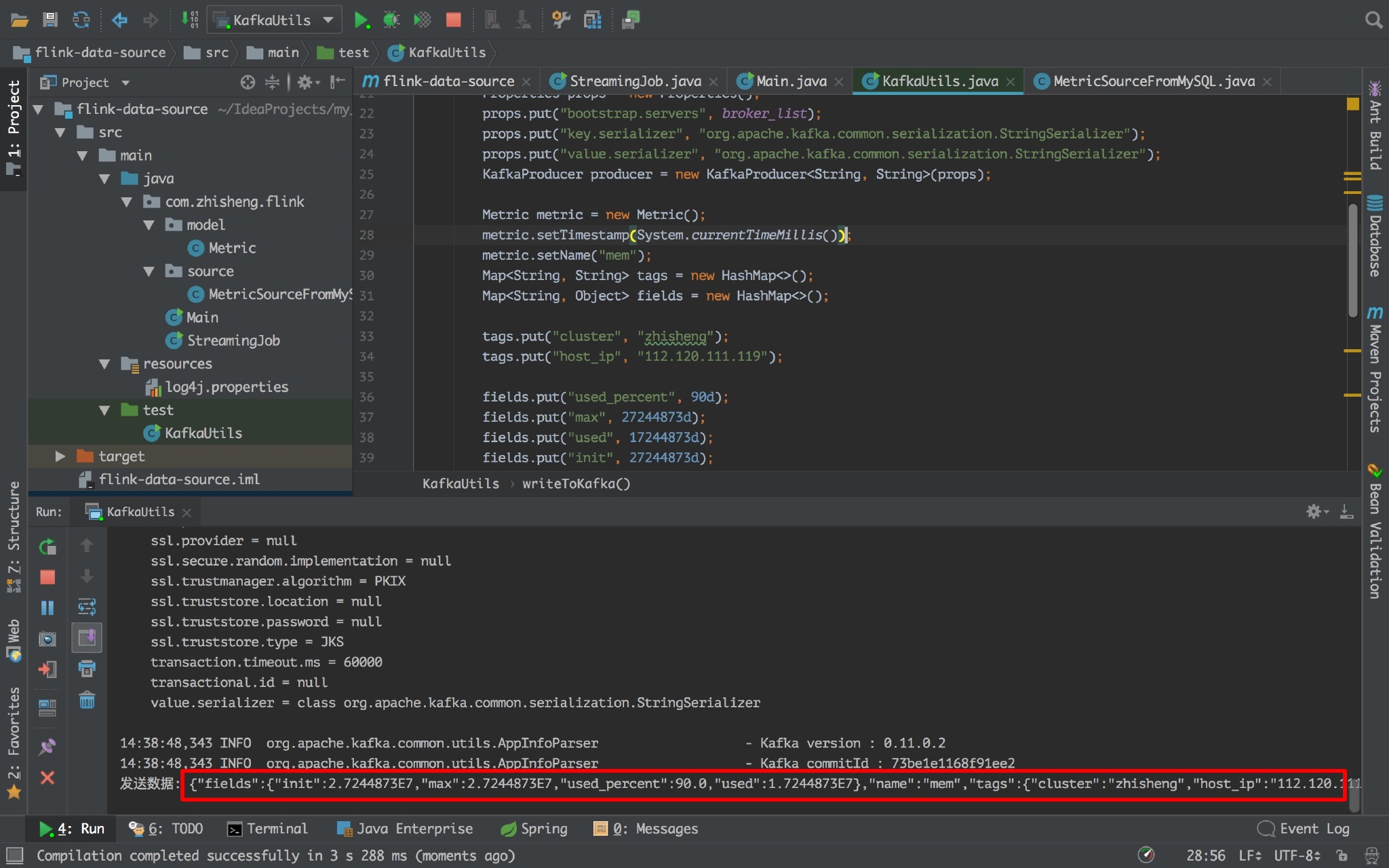

Utility class for writing data to Kafka: KafkaUtils.java

    import com.alibaba.fastjson.JSON;
    import com.zhisheng.flink.model.Metric;
    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    import java.util.HashMap;
    import java.util.Map;
    import java.util.Properties;

    /**
     * Write data to Kafka.
     * You can use this main function to test.
     * weixin: zhisheng_tian
     * blog: http://www.54tianzhisheng.cn/
     */
    public class KafkaUtils {
        public static final String broker_list = "localhost:9092";
        public static final String topic = "metric"; // must match the topic used by the Flink program

        public static void writeToKafka() throws InterruptedException {
            Properties props = new Properties();
            props.put("bootstrap.servers", broker_list);
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");   // key serialization
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer"); // value serialization
            KafkaProducer<String, String> producer = new KafkaProducer<>(props);

            Metric metric = new Metric();
            metric.setTimestamp(System.currentTimeMillis());
            metric.setName("mem");
            Map<String, String> tags = new HashMap<>();
            Map<String, Object> fields = new HashMap<>();

            tags.put("cluster", "zhisheng");
            tags.put("host_ip", "101.147.022.106");

            fields.put("used_percent", 90d);
            fields.put("max", 27244873d);
            fields.put("used", 17244873d);
            fields.put("init", 27244873d);

            metric.setTags(tags);
            metric.setFields(fields);

            // No partition and no key: the producer chooses the partition itself
            ProducerRecord<String, String> record =
                    new ProducerRecord<String, String>(topic, null, null, JSON.toJSONString(metric));
            producer.send(record);
            System.out.println("send data: " + JSON.toJSONString(metric));

            producer.flush();
            producer.close(); // a new producer is created on every call, so release it here
        }

        public static void main(String[] args) throws InterruptedException {
            // Send one message roughly every 300 ms until the process is stopped
            while (true) {
                Thread.sleep(300);
                writeToKafka();
            }
        }
    }

Run:

If the console keeps printing "send data: ..." lines, data is being sent to Kafka continuously.

Flink program

Main.java

    package com.zhisheng.flink;

    import org.apache.flink.api.common.serialization.SimpleStringSchema;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;

    import java.util.Properties;

    /**
     * Desc:
     * weixin: zhisheng_tian
     * blog: http://www.54tianzhisheng.cn/
     */
    public class Main {
        public static void main(String[] args) throws Exception {
            final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            Properties props = new Properties();
            props.put("bootstrap.servers", "localhost:9092");
            props.put("zookeeper.connect", "localhost:2181");
            props.put("group.id", "metric-group");
            props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");   // key deserialization
            props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // value deserialization
            props.put("auto.offset.reset", "latest");

            DataStreamSource<String> dataStreamSource = env.addSource(new FlinkKafkaConsumer011<>(
                    "metric",                 // Kafka topic
                    new SimpleStringSchema(), // deserialize each record as a String
                    props)).setParallelism(1);

            dataStreamSource.print(); // print the data read from Kafka to the console

            env.execute("Flink add data source");
        }
    }

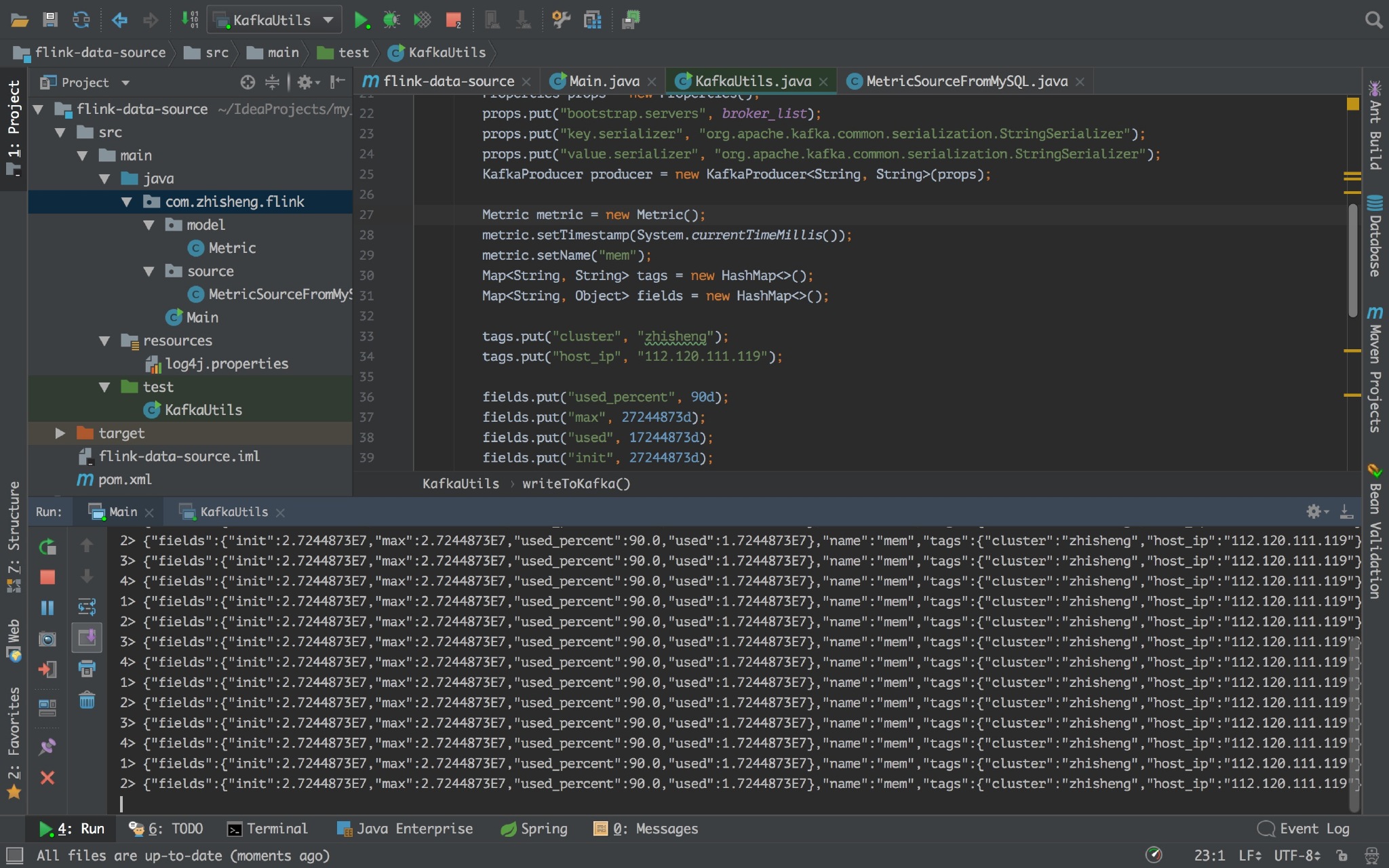

Run:

As you can see, the console of the Flink program continuously prints the data read from Kafka.

Custom Source

The above uses the Kafka source that ships with Flink. Next, let's write a custom Source that reads data from MySQL.

First, add the MySQL dependency to pom.xml:

    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.34</version>
    </dependency>

The database table is as follows:

    DROP TABLE IF EXISTS `student`;
    CREATE TABLE `student` (
      `id` int(11) unsigned NOT NULL AUTO_INCREMENT,
      `name` varchar(25) COLLATE utf8_bin DEFAULT NULL,
      `password` varchar(25) COLLATE utf8_bin DEFAULT NULL,
      `age` int(10) DEFAULT NULL,
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB AUTO_INCREMENT=5 DEFAULT CHARSET=utf8 COLLATE=utf8_bin;

Insert data:

    INSERT INTO `student` VALUES
      ('1', 'zhisheng01', '123456', '18'),
      ('2', 'zhisheng02', '123', '17'),
      ('3', 'zhisheng03', '1234', '18'),
      ('4', 'zhisheng04', '12345', '16');
    COMMIT;

New entity class: Student.java

    package com.zhisheng.flink.model;

    /**
     * Desc:
     * weixin: zhisheng_tian
     * blog: http://www.54tianzhisheng.cn/
     */
    public class Student {
        public int id;
        public String name;
        public String password;
        public int age;

        public Student() {
        }

        public Student(int id, String name, String password, int age) {
            this.id = id;
            this.name = name;
            this.password = password;
            this.age = age;
        }

        @Override
        public String toString() {
            return "Student{" +
                    "id=" + id +
                    ", name='" + name + '\'' +
                    ", password='" + password + '\'' +
                    ", age=" + age +
                    '}';
        }

        public int getId() { return id; }

        public void setId(int id) { this.id = id; }

        public String getName() { return name; }

        public void setName(String name) { this.name = name; }

        public String getPassword() { return password; }

        public void setPassword(String password) { this.password = password; }

        public int getAge() { return age; }

        public void setAge(int age) { this.age = age; }
    }

Create a new Source class, SourceFromMySQL.java, which extends RichSourceFunction and implements the open, close, run and cancel methods:

    package com.zhisheng.flink.source;

    import com.zhisheng.flink.model.Student;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.functions.source.RichSourceFunction;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;
    import java.sql.ResultSet;

    /**
     * Desc:
     * weixin: zhisheng_tian
     * blog: http://www.54tianzhisheng.cn/
     */
    public class SourceFromMySQL extends RichSourceFunction<Student> {

        PreparedStatement ps;
        private Connection connection;

        /**
         * The connection is established once in open(), so it does not have to be
         * created and released every time data is read.
         *
         * @param parameters
         * @throws Exception
         */
        @Override
        public void open(Configuration parameters) throws Exception {
            super.open(parameters);
            connection = getConnection();
            // Table name written in lowercase to match the DDL above
            String sql = "select * from student;";
            ps = this.connection.prepareStatement(sql);
        }

        /**
         * After the program has finished, close the statement and the connection
         * to release the resources.
         *
         * @throws Exception
         */
        @Override
        public void close() throws Exception {
            super.close();
            // Close the statement before the connection that created it
            if (ps != null) {
                ps.close();
            }
            if (connection != null) {
                connection.close();
            }
        }

        /**
         * Flink calls run() once; it reads the table and emits one Student per row.
         *
         * @param ctx
         * @throws Exception
         */
        @Override
        public void run(SourceContext<Student> ctx) throws Exception {
            ResultSet resultSet = ps.executeQuery();
            while (resultSet.next()) {
                Student student = new Student(
                        resultSet.getInt("id"),
                        resultSet.getString("name").trim(),
                        resultSet.getString("password").trim(),
                        resultSet.getInt("age"));
                ctx.collect(student);
            }
        }

        @Override
        public void cancel() {
            // Nothing to do: run() executes a single query and then returns
        }

        private static Connection getConnection() {
            Connection con = null;
            try {
                Class.forName("com.mysql.jdbc.Driver");
                con = DriverManager.getConnection("jdbc:mysql://localhost:3306/test?useUnicode=true&characterEncoding=UTF-8", "root", "root123456");
            } catch (Exception e) {
                System.out.println("-----------mysql get connection has exception , msg = " + e.getMessage());
            }
            return con;
        }
    }
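In SourceFromMySQL, cancel() has nothing to do because run() returns after a single query. A source that keeps polling would need a way to stop its loop. A common pattern is a volatile flag that run() checks and cancel() clears. The sketch below shows only that pattern in plain Java: PollingSource, its maxPolls limit and the Consumer used as the output are hypothetical stand-ins for illustration, not Flink classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical stand-in for a polling source; not a Flink class. */
class PollingSource {
    // volatile so that a cancel() from another thread becomes visible to run()
    private volatile boolean running = true;
    private final List<String> rows;
    private final int maxPolls;

    PollingSource(List<String> rows, int maxPolls) {
        this.rows = rows;
        this.maxPolls = maxPolls;
    }

    /** Emits every row once per poll until cancelled or maxPolls is reached. */
    void run(Consumer<String> out) {
        int polls = 0;
        while (running && polls < maxPolls) {
            for (String row : rows) {
                if (!running) {
                    return; // stop in the middle of a poll as well
                }
                out.accept(row);
            }
            polls++;
        }
    }

    /** Asks run() to stop; safe to call from any thread. */
    void cancel() {
        running = false;
    }
}

class PollingSourceDemo {
    public static void main(String[] args) {
        PollingSource source = new PollingSource(Arrays.asList("a", "b"), 3);
        List<String> received = new ArrayList<>();
        // Cancel as soon as three rows have arrived
        source.run(row -> {
            received.add(row);
            if (received.size() == 3) {
                source.cancel();
            }
        });
        System.out.println(received);
    }
}
```

In a real RichSourceFunction the same flag would be checked in the loop inside run(SourceContext) and cleared in cancel(); the maxPolls limit exists here only so that the sketch terminates on its own.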

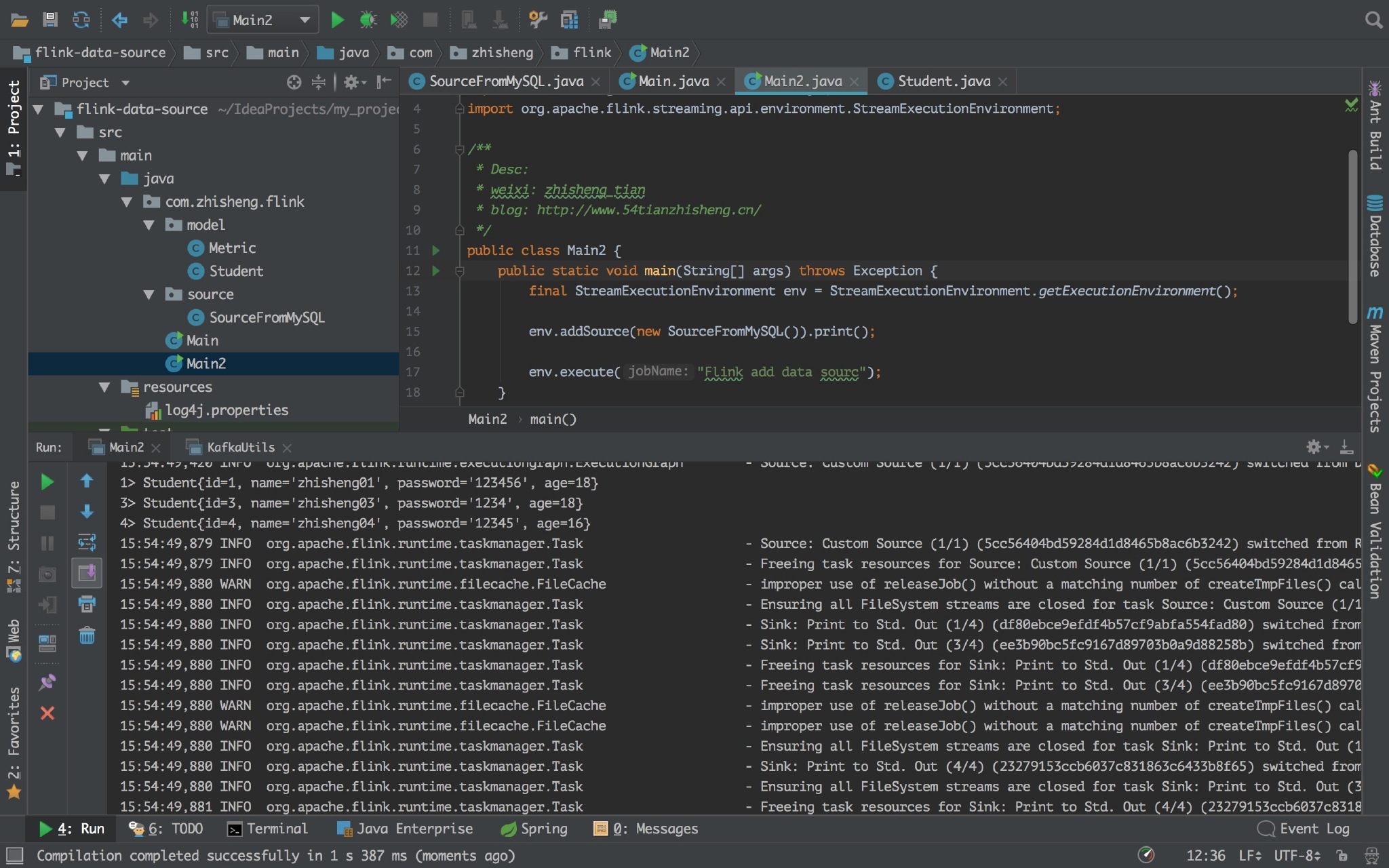

Flink program:

    package com.zhisheng.flink;

    import com.zhisheng.flink.source.SourceFromMySQL;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

    /**
     * Desc:
     * weixin: zhisheng_tian
     * blog: http://www.54tianzhisheng.cn/
     */
    public class Main2 {
        public static void main(String[] args) throws Exception {
            final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            env.addSource(new SourceFromMySQL()).print();

            env.execute("Flink add data source");
        }
    }

Run the Flink program, and the printed student information can be seen in the console log.

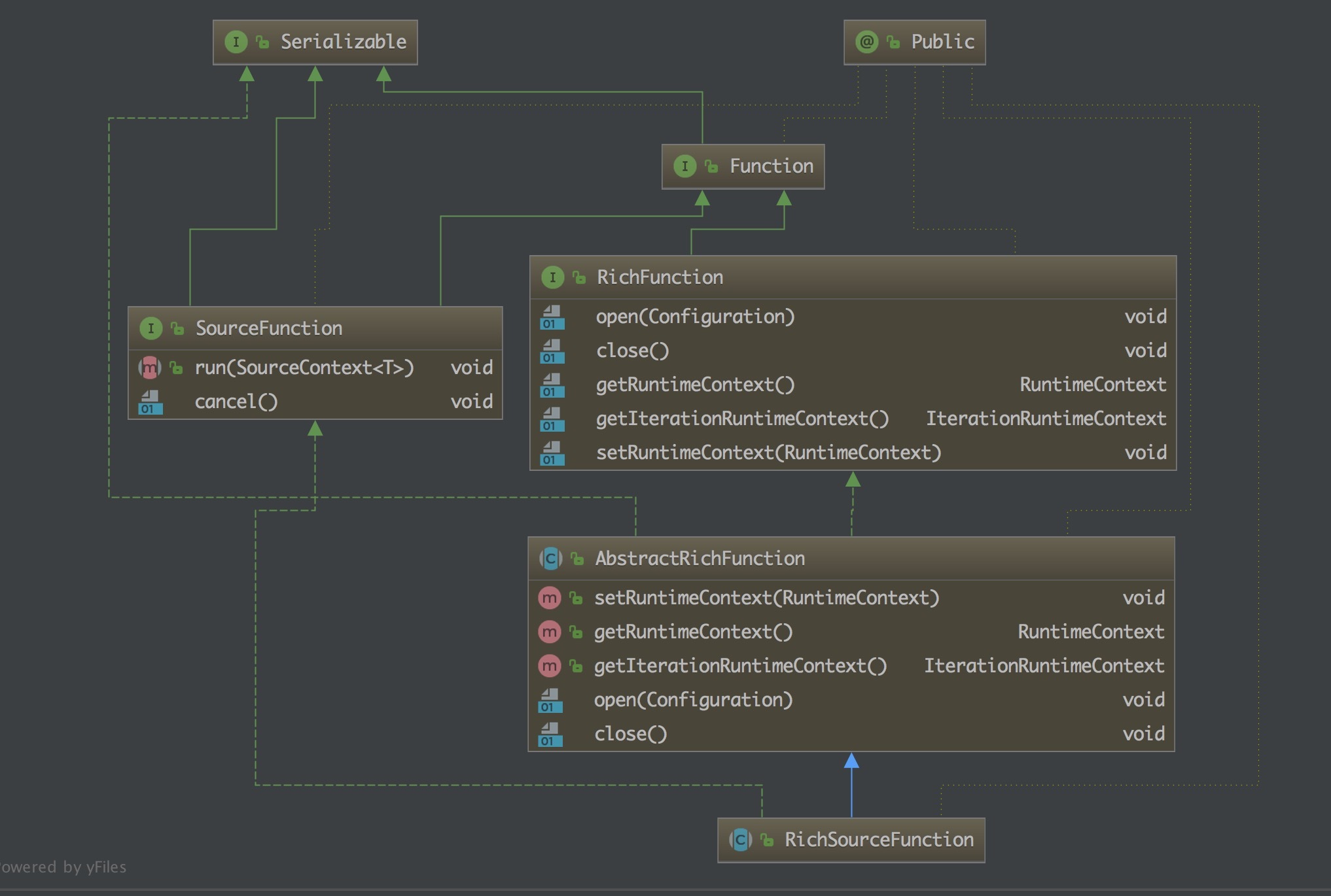

RichSourceFunction

The custom Source above extends the RichSourceFunction class, so let's take a closer look at it:

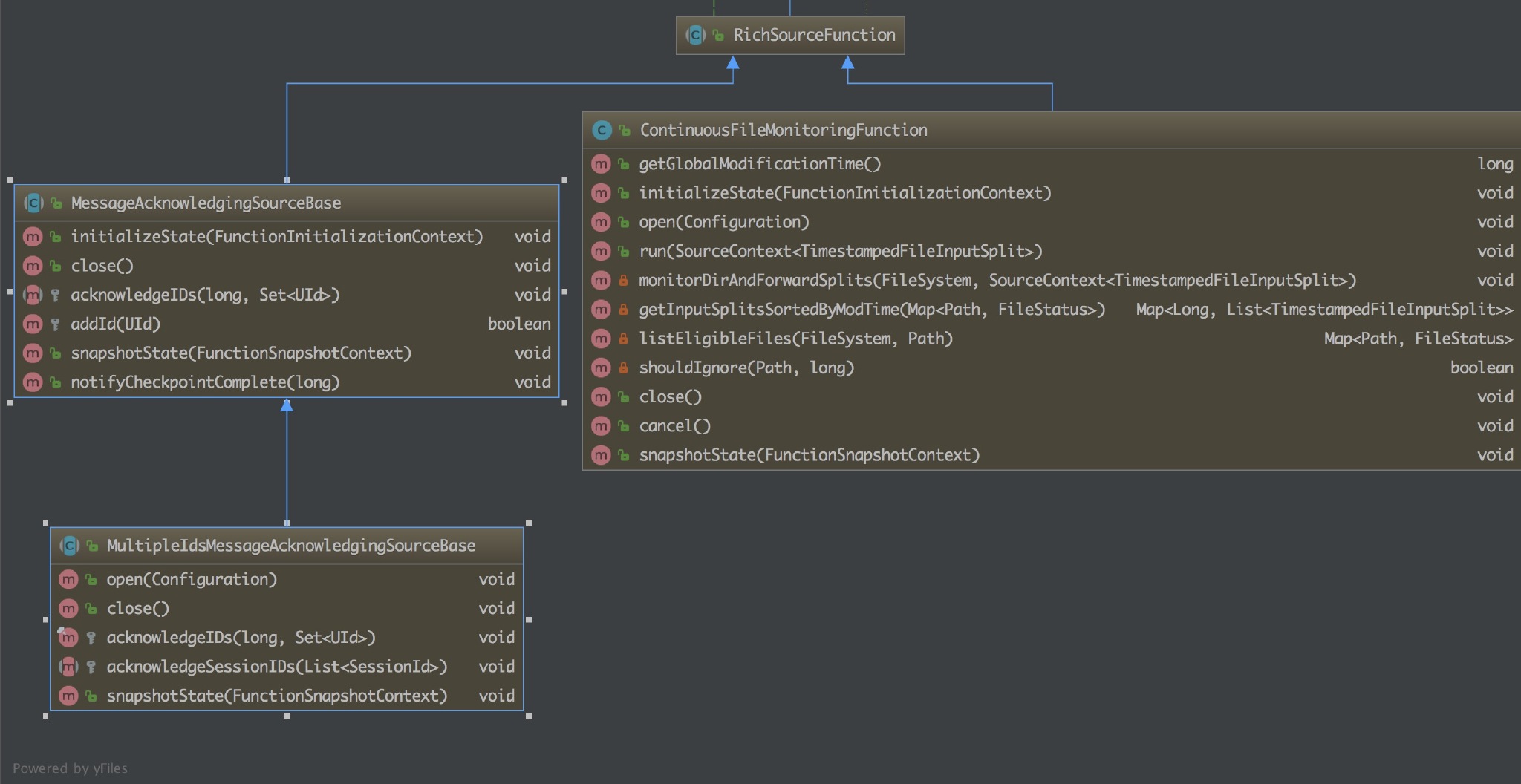

RichSourceFunction is an abstract class that extends AbstractRichFunction and provides the basic capabilities for implementing a rich SourceFunction. It has three subclasses: two are abstract classes on which more specific implementations are built, and the third is ContinuousFileMonitoringFunction.

- MessageAcknowledgingSourceBase: targets scenarios where the data source is a message queue and provides an ID-based acknowledgment mechanism.

- MultipleIdsMessageAcknowledgingSourceBase: builds on MessageAcknowledgingSourceBase and refines the ID acknowledgment mechanism further. It supports two ID acknowledgment models: session id and unique message id.

- ContinuousFileMonitoringFunction: a single (non-parallel) monitoring task. It accepts a FileInputFormat and monitors the user-provided path according to the FileProcessingMode and FilePathFilter. It decides which files should be read and processed further, creates the FileInputSplits corresponding to those files, and assigns them to downstream tasks for further processing.
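To make the ID-based acknowledgment idea more concrete, here is a minimal sketch in plain Java. It assumes the mechanism works roughly as follows: the IDs of emitted messages are remembered per checkpoint and are acknowledged to the message queue only after that checkpoint has completed. AckTracker and all of its method names are hypothetical and only illustrate the bookkeeping; this is not Flink's actual implementation.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical sketch of checkpoint-scoped acknowledgment; not a Flink class. */
class AckTracker {
    /** IDs of messages emitted since the last snapshot. */
    private List<String> currentIds = new ArrayList<>();
    /** Snapshots whose checkpoint has not completed yet, oldest first. */
    private final Deque<PendingCheckpoint> pending = new ArrayDeque<>();
    /** IDs that have been acknowledged back to the queue. */
    private final List<String> acknowledged = new ArrayList<>();

    private static final class PendingCheckpoint {
        final long checkpointId;
        final List<String> ids;

        PendingCheckpoint(long checkpointId, List<String> ids) {
            this.checkpointId = checkpointId;
            this.ids = ids;
        }
    }

    /** Called for every message handed downstream. */
    void emitted(String messageId) {
        currentIds.add(messageId);
    }

    /** Called when a checkpoint is taken: freeze the IDs seen so far. */
    void snapshot(long checkpointId) {
        pending.addLast(new PendingCheckpoint(checkpointId, currentIds));
        currentIds = new ArrayList<>();
    }

    /** Called when a checkpoint completes: acknowledge it and all older ones. */
    void checkpointComplete(long checkpointId) {
        while (!pending.isEmpty() && pending.peekFirst().checkpointId <= checkpointId) {
            acknowledged.addAll(pending.pollFirst().ids);
        }
    }

    List<String> acknowledged() {
        return acknowledged;
    }
}
```

With this bookkeeping, a message that was emitted but whose checkpoint never completed is never acknowledged, so the queue can redeliver it after a failure.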

Summary

This article mainly covered how Flink uses the Kafka source, and provided a demo showing how to write a custom source that reads data from MySQL. Of course, you can also read from other systems to implement your own data source. Real-world requirements are often more complicated than this demo, so be ready to adapt it.