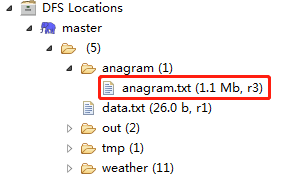

Importing the dataset into HDFS

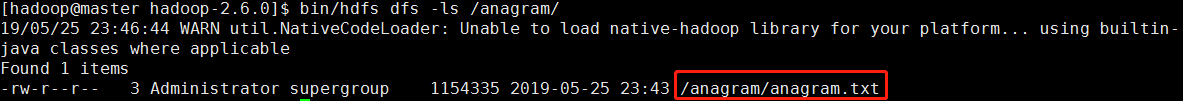

List the dataset just uploaded to HDFS from the command line:

    [hadoop@master hadoop-2.6.0]$ bin/hdfs dfs -ls /anagram/

Writing, compiling, and running the MapReduce program:

Step 1: in the Map stage, sort the letters of each word alphabetically to produce sortedWord, then emit the key/value pair (sortedWord, word).

    // Map phase
    public static class Anagramsmapper extends Mapper<LongWritable, Text, Text, Text> {
        @Override
        public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String text = value.toString();                // Convert the input word from Text to String
            char[] textCharArray = text.toCharArray();     // Convert the word to a character array
            Arrays.sort(textCharArray);                    // Sort the characters
            String sortedText = new String(textCharArray); // Convert the sorted characters back to a String
            context.write(new Text(sortedText), value);    // Emit key (sorted letters) and value (original word)
        }
    }
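To see why sorting the letters works as a grouping key, the same computation can be tried outside Hadoop. The sketch below is a hypothetical standalone helper (the class name `AnagramKeyDemo` and method `sortLetters` are not part of the original project) that mirrors the body of `map()` using only the Java standard library.

```java
import java.util.Arrays;

// Hypothetical standalone helper, not part of the original job.
// It repeats the key computation performed inside Anagramsmapper.map().
class AnagramKeyDemo {

    // Sort the characters of a word so that all anagrams share one key.
    static String sortLetters(String word) {
        char[] chars = word.toCharArray();
        Arrays.sort(chars);
        return new String(chars);
    }

    public static void main(String[] args) {
        // Print "key <TAB> word" for a few sample words.
        for (String word : new String[] {"listen", "silent", "enlist", "google"}) {
            System.out.println(sortLetters(word) + "\t" + word);
        }
    }
}
```

Here "listen", "silent", and "enlist" all produce the key "eilnst", while "google" produces "eggloo". Note that `Arrays.sort` orders characters by their code unit values, so the key is case-sensitive: "Listen" would not land in the same group as "silent" unless the input is lowercased first.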

Step 2: in the Reduce stage, collect all words that share the same sorted letters into one comma-separated list and count them. Only groups containing more than one word are written out.

    // Reduce phase
    public static class Anagramsreducer extends Reducer<Text, Text, Text, Text> {
        @Override
        public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            StringBuilder res = new StringBuilder(); // Accumulates the comma-separated words of this group
            int count = 0;                           // Number of words in this group
            for (Text text : values) {
                // Add a "," separator before every word except the first
                if (res.length() > 0) {
                    res.append(",");
                }
                res.append(text.toString());
                count++;
            }
            // Emit only groups with two or more words, i.e. words that actually have an anagram
            if (count > 1) {
                context.write(key, new Text(res.toString()));
            }
        }
    }
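The interplay of the two stages can be checked on a small word list without a cluster. The following is a minimal sketch, assuming a plain in-memory `TreeMap` as a stand-in for the shuffle phase; `LocalAnagramDemo` and `findAnagrams` are hypothetical names introduced for illustration only and do not use the Hadoop API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical local simulation of the map, shuffle, and reduce steps.
class LocalAnagramDemo {

    // Same key computation as the mapper: sort the letters of the word.
    static String sortLetters(String word) {
        char[] chars = word.toCharArray();
        Arrays.sort(chars);
        return new String(chars);
    }

    // Returns sortedKey -> comma-joined words, keeping only groups of two or more.
    static Map<String, String> findAnagrams(List<String> words) {
        // "Map" and "shuffle": group every word under its sorted-letter key.
        Map<String, List<String>> groups = new TreeMap<>();
        for (String word : words) {
            groups.computeIfAbsent(sortLetters(word), k -> new ArrayList<>()).add(word);
        }
        // "Reduce": join each group and drop words that have no anagram.
        Map<String, String> result = new TreeMap<>();
        for (Map.Entry<String, List<String>> entry : groups.entrySet()) {
            if (entry.getValue().size() > 1) {
                result.put(entry.getKey(), String.join(",", entry.getValue()));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("listen", "silent", "enlist", "google", "act", "cat");
        findAnagrams(words).forEach((key, group) -> System.out.println(key + "\t" + group));
    }
}
```

With this sample list, "google" is dropped because its group has a single member. In this local sketch the words inside a group appear in input order; in a real MapReduce job the order of values passed to `reduce()` is not guaranteed.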

Step 3: unit-test and debug the code with MRUnit.

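    // AnagramsMapperTest.java
    import java.io.IOException;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mrunit.mapreduce.MapDriver;
    import org.junit.Before;
    import org.junit.Test;

    public class AnagramsMapperTest {
        private Mapper<LongWritable, Text, Text, Text> mapper;
        private MapDriver<LongWritable, Text, Text, Text> driver;

        @Before
        public void init() {
            mapper = new Anagrams.Anagramsmapper();
            driver = new MapDriver<>(mapper);
        }

        @Test
        public void test() throws IOException {
            String line = "gfedcba"; // Sample input word; change it to check other inputs
            driver.withInput(new LongWritable(), new Text(line))
                  // Expect the key to be the sorted letters and the value to be the unchanged word
                  .withOutput(new Text("abcdefg"), new Text("gfedcba"))
                  .runTest();
        }
    }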

    // AnagramsReduceTest.java
    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mrunit.mapreduce.ReduceDriver;
    import org.junit.Before;
    import org.junit.Test;

    public class AnagramsReduceTest {
        private Reducer<Text, Text, Text, Text> reducer;
        private ReduceDriver<Text, Text, Text, Text> driver;

        @Before
        public void init() {
            reducer = new Anagrams.Anagramsreducer();
            driver = new ReduceDriver<>(reducer);
        }

        @Test
        public void test() throws IOException {
            Text key = new Text("abcdefg"); // Sorted-letter key shared by every value below
            // Four anagrams of the key, used to check the comma-separated output format
            List<Text> values = new ArrayList<>();
            values.add(new Text("gfedcba"));
            values.add(new Text("decgfba"));
            values.add(new Text("fedgcba"));
            values.add(new Text("gcbfeda"));
            driver.withInput(key, values)
                  // Expect the values joined with "," in input order
                  .withOutput(key, new Text("gfedcba,decgfba,fedgcba,gcbfeda"))
                  .runTest();
        }
    }

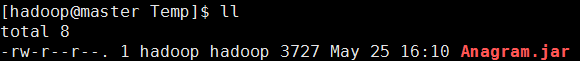

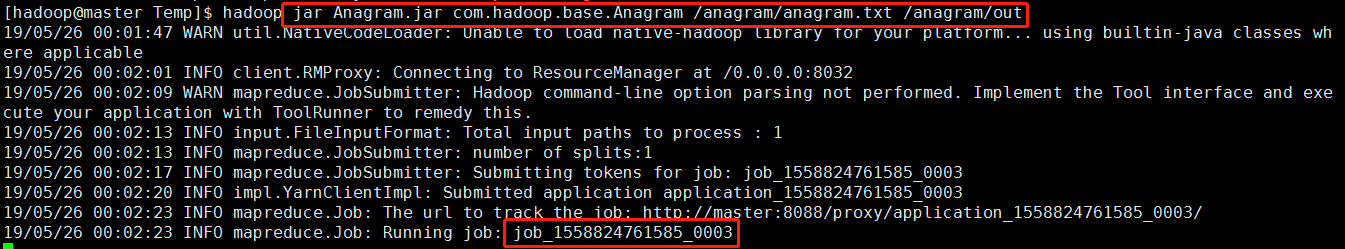

Step 4: compile and package the project as Anagram.jar, then use a client to upload Anagram.jar to the /home/hadoop/Temp directory on the Hadoop host.

Step 5: run cd /home/hadoop/Temp to switch to that directory, then start the job from the command line, passing the jar, the main class, the input path, and the output path:

    hadoop jar Anagram.jar com.hadoop.base.anagram /anagram/anagram.txt /anagram/out

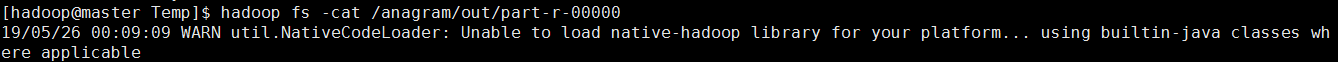

Step 6: the job writes its final result to HDFS. Use the hadoop fs -cat /anagram/out/part-r-00000 command to view it.
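Each line of the result file pairs a sorted-letter key with its comma-separated anagram group. As a minimal sketch, assuming the job uses Hadoop's default text output (key and value separated by a tab), a line can be split back into its parts as follows. The class `AnagramOutputLine` and the sample line are hypothetical and only illustrate the format.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical parser for one line of part-r-00000.
// Assumes the default "key<TAB>value" text layout; adjust if the job overrides it.
class AnagramOutputLine {

    // The sorted-letter key is everything before the first tab.
    static String keyOf(String line) {
        return line.split("\t", 2)[0];
    }

    // The anagram group is the comma-separated list after the first tab.
    static List<String> wordsOf(String line) {
        return Arrays.asList(line.split("\t", 2)[1].split(","));
    }

    public static void main(String[] args) {
        String sample = "eilnst\tlisten,silent,enlist"; // made-up sample line
        System.out.println(keyOf(sample) + " -> " + wordsOf(sample));
    }
}
```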