Feature extraction and matching of opencv (2)

demo: http://download.csdn.net/detail/keen_zuxwang/9852587

RANSAC is the abbreviation of "RANdom SAmple Consensus Random Sample Consistency".

It can estimate the parameters of the mathematical model iteratively from a set of observation data containing "outliers".

It is an uncertain algorithm - it has a certain probability to get a reasonable result. In order to improve the probability, the number of iterations must be increased.

The algorithm was first proposed by Fischler and Bolles in 1981.

The basic assumptions of RANSAC are:

1. Data are composed of "intra-point". For example, the distribution of data can be explained by some model parameters.

2. "Outside point" is the data that can not adapt to the model.

3. In addition, the data belong to noise. The reasons for the occurrence of outliers are: the extremum of noise; the wrong measurement method; the wrong assumption of data.

//! computes the best-fit perspective transformation mapping srcPoints to dstPoints.

CV_EXPORTS_W Mat findHomography( InputArray srcPoints, InputArray dstPoints,

int method=0, double ransacReprojThreshold=3,

OutputArray mask=noArray());Transform of Function Finding Key Points on Two Plane Matching

srcPoints:

The coordinates of the midpoint of the original plane, a CV_32FC2 or vector matrix;

dstPoints:

The coordinates of the midpoint in the target plane, a CV_32FC2 or vector matrix;

method:

The method for calculating homography matrix is an enumeration value (default is 0, representing the conventional method of using all points).

CV_RANSAC: Robust method based on RANSAC;

CV_LMEDS: the most uneven method;

ransacReprojThreshold:

Only when CV_RANSAC method is used, a pair of points are considered as the maximum projection error of the inner window.

mask:

Optional output mask settings for robust methods;

mask parameter, if there are N matching points to calculate homography matrix, then the value has N elements, each element has a value of 0 or 1.

When the value is 0, the mismatch (outlier) representing the matching point is valid only when the RANSAC and LMeds methods are used.

This value can be used to delete false matches - to indicate whether the matching point is an outlier to optimize the matching results?

//! performs perspective transformation of each element of multi-channel input matrix

CV_EXPORTS_W void perspectiveTransform(InputArray src, OutputArray dst, InputArray m );Function: Vector Projection Matrix Conversion

src:

Input a floating-point array of two or three channels, each element being a converted 2D/3D vector;

dst:

The output matrix of the same size and type as the input;

m:

3x3 or 4x4 floating-point conversion matrix;

// Draws matches of keypints from two images on output image.

CV_EXPORTS void drawMatches( const Mat& img1, const vector<KeyPoint>& keypoints1,

const Mat& img2, const vector<KeyPoint>& keypoints2,

const vector<DMatch>& matches1to2, Mat& outImg,

const Scalar& matchColor=Scalar::all(-1), const Scalar& singlePointColor=Scalar::all(-1),

const vector<char>& matchesMask=vector<char>(), int flags=DrawMatchesFlags::DEFAULT );Function: Key points of matching found from two pictures

img1: Source image

img2: source image;

Keypoints 1: key points in source images

Keypoints 2: key points in source images;

Matches 1 to 2: matching the second image from the first image, that is, finding the corresponding point with keypoints 2 [i] from keypoints 1 [i];

outImg: Out image; its value depends on what flags - draw in the image;

matchColor:

Match color (line and point color), match color == Scalar:: all (-1), color random generation;

singlePointColor:

The color of a single key point (that is, no key point matches it), matchColor==Scalar::all(-1), and the color is generated randomly.

matchesMask:

The mask decides which key points to match. If the mask is empty, all key points to match are drawn.

Flags: Set up the drawing function, and the value of possible flags is determined by "Draw Matches Flags";

JNI :

JNIEXPORT jlong JNICALL Java_com_example_orbhog_MainActivity_doFeatureMatch(JNIEnv *env, jclass clz, jlong imgObject, jlong imgScene)

{

Mat img_object = Mat(*(Mat*)imgObject);

cvtColor(img_object, img_object, CV_BGRA2BGR);

Mat img_scene = Mat(*(Mat*)imgScene);

cvtColor(img_scene, img_scene, CV_BGRA2BGR);

LOGD(" featureTest %d, %d !",img_object.cols, img_object.rows);

//initModule_nonfree();//only for SIFT or SURF

//# include "opencv2/features2d/features2d.hpp" declared before it can be created effectively

Ptr<FeatureDetector> detector = FeatureDetector::create("ORB"); // FAST

Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create("ORB"); // BRIEF

Ptr<DescriptorMatcher> descriptor_matcher = DescriptorMatcher::create("BruteForce");

if( detector.empty() || descriptor.empty()) {

LOGD(" fail to create detector! ");

}

vector<KeyPoint> keypoints_object, keypoints_scene;

detector->detect( img_object, keypoints_object );

detector->detect( img_scene, keypoints_scene );

Mat descriptors_object, descriptors_scene;

descriptor->compute( img_object, keypoints_object, descriptors_object );

descriptor->compute( img_scene, keypoints_scene, descriptors_scene );

//Mat img_keypoints1,img_keypoints2;

//drawKeypoints(img1,keypoints1,img_keypoints1,Scalar::all(-1),0);

//drawKeypoints(img2,keypoints2,img_keypoints2,Scalar::all(-1),0);

vector<DMatch> matches;

descriptor_matcher->match( descriptors_object, descriptors_scene, matches );

double max_dist = 0;

double min_dist = 50;

long length = matches.size();

LOGD(" FlannBasedMatcher %ld \n", length );

//Quick calculation of max and min distances between keypoints

for(int i=0; i<length; i++) { //descriptors_object.rows

double dist = matches[i].distance;

if( dist < min_dist )

min_dist = dist;

if( dist > max_dist )

max_dist = dist;

}

LOGD("-- Max dist : %f \n", max_dist );

LOGD("-- Min dist : %f \n", min_dist );

//-- Draw only "good" matches (i.e. whose distance is less than 3*min_dist )

vector< DMatch > good_matches;

int m_start = length/4;

int m_end = length - m_start;

for(int i=m_start; i<length; i++) { // descriptors_object.rows

good_matches.push_back(matches[i]);

}

/*

double max_d = max_dist*0.9;

for(int i=0; i<length; i++) { // descriptors_object.rows

if( matches[i].distance > min_dist+ && matches[i].distance<max_d){

good_matches.push_back( matches[i]);

}

}

*/

Mat img_matches;

drawMatches(img_object, keypoints_object, img_scene, keypoints_scene,

good_matches,

img_matches,

Scalar::all(-1),

Scalar::all(-1),

vector<char>(),

DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

//-- Localize the object

vector<Point2f> obj;

vector<Point2f> scene;

for( int i = 0; i < good_matches.size(); i++ ) {

//-- Get the keypoints from the good matches

obj.push_back(keypoints_object[good_matches[i].queryIdx].pt); // query descriptor index

scene.push_back(keypoints_scene[good_matches[i].trainIdx].pt); // train descriptor index

}

Mat H = findHomography( obj, scene, CV_RANSAC );

// The homography matrix H is calculated according to the matching points, and the matching results are refined (matrix H stores the homography matrix obtained by RANSAC for perspective matrix) to find the transformation of key points on two plane matching.

//Get the corners from the img_object ( the object to be "detected" )

vector<Point2f> obj_corners(4);

obj_corners[0] = cvPoint(0,0);

obj_corners[1] = cvPoint( img_object.cols, 0 );

obj_corners[2] = cvPoint( img_object.cols, img_object.rows );

obj_corners[3] = cvPoint( 0, img_object.rows );

vector<Point2f> scene_corners(4);

perspectiveTransform( obj_corners, scene_corners, H); // Projection Matrix Conversion of Vectors

//-- Draw lines between the corners (the mapped object in the scene - img_scene )

line( img_matches, scene_corners[0] + Point2f( img_object.cols, 0), scene_corners[1] + Point2f( img_object.cols, 0), Scalar(0, 255, 0), 4 );

line( img_matches, scene_corners[1] + Point2f( img_object.cols, 0), scene_corners[2] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[2] + Point2f( img_object.cols, 0), scene_corners[3] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[3] + Point2f( img_object.cols, 0), scene_corners[0] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

Mat *hist = new Mat(img_matches);

return (jlong) hist;

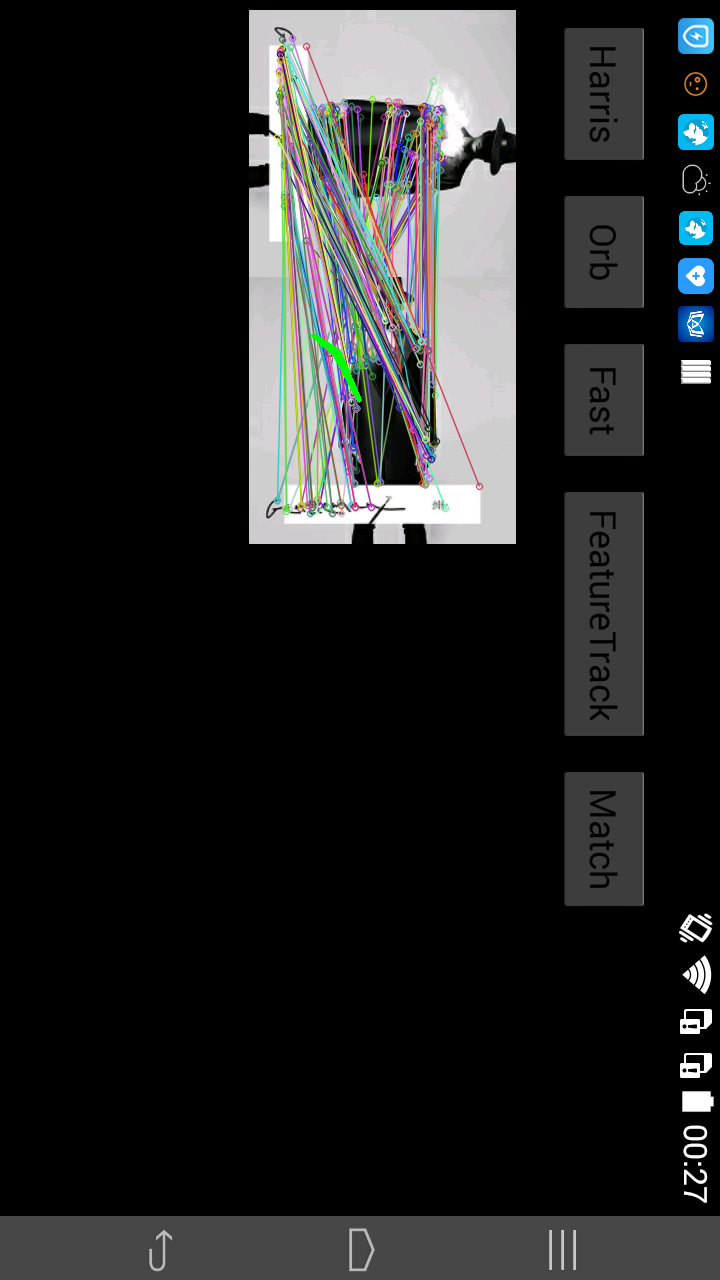

}ORB-ORB matching:

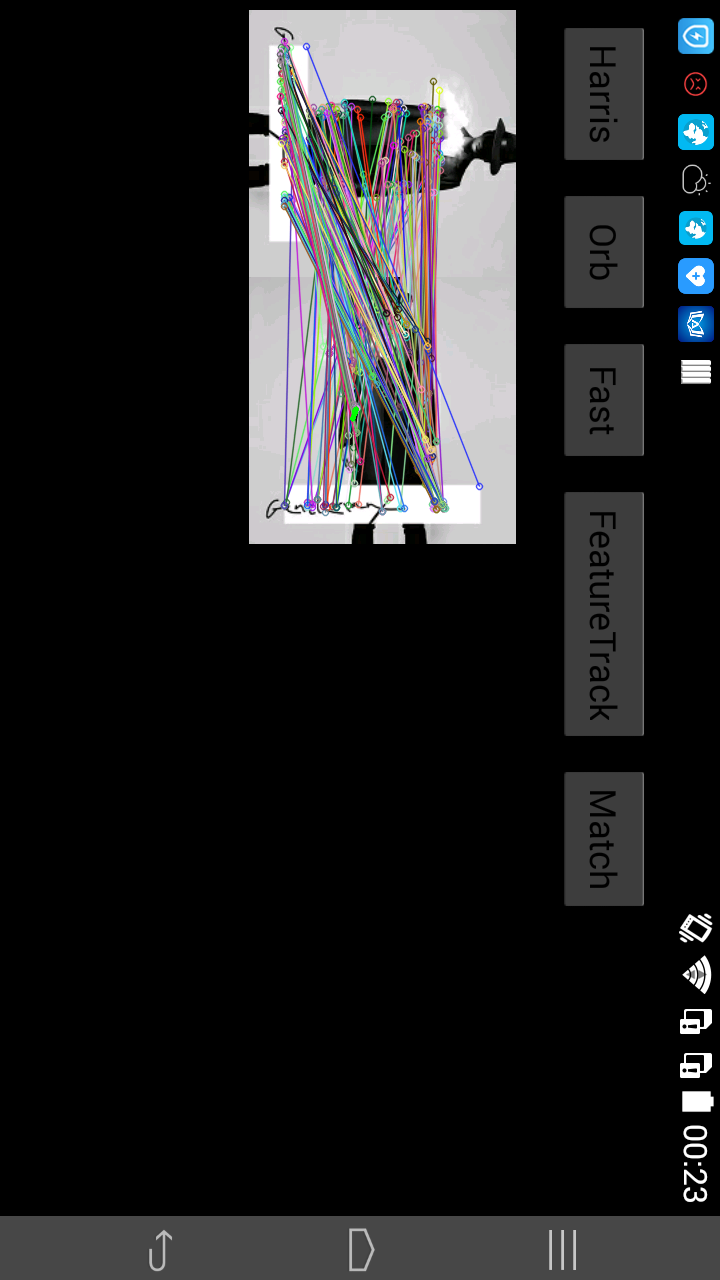

FAST-ORB Matching:

FAST-BRIEF Matching: