To speed up a program, we often turn to OpenMP.

Below is a summary of several problems we often run into.

1. The result does not match expectations, and it changes from run to run.

#pragma omp parallel for
for (long i = 0; i < 10000000000; i++) {
    sum += 1;   // all threads update the shared sum without synchronization
}

// cout << sum << endl;  // the result differs on every run: the threads race on the shared sum (a data race)

// Solution: a reduction clause (both efficient and correct)

/*****************Improvement*****************************************************************/

#pragma omp parallel for reduction(+:sum)
for (long i = 0; i < 10000000000; i++) {
    sum += 1;
}

2. Scope of #pragma omp parallel for

#pragma omp parallel for reduction(+:sum)
for (long i = 0; i < 10000000000; i++) {
    sum += 1;
}

for (long i = 0; i < 10000000000; i++) {
    var += 1;
}

// Are both for loops parallelized?

// Answer: only the first one (the loop directly following #pragma omp parallel for). The directive applies to exactly one for loop; the second loop runs serially.

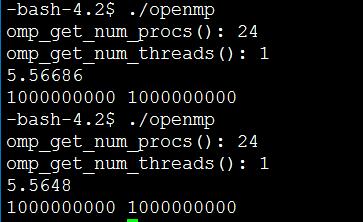

The left figure shows the accumulation results with OpenMP disabled. The right figure shows the result of the two for loops when #pragma omp parallel for is declared only once (to verify the scope of the directive).

/*****************Improvement*****************************************************************/

omp_set_num_threads(num_thread); // num_thread is an int declared earlier (the desired thread count)
#pragma omp parallel for reduction(+:sum)
for (long i = 0; i < 10000000000; i++) {
    sum += 1;
}

omp_set_num_threads(num_thread); // optional: the setting already applies to later parallel regions
#pragma omp parallel for reduction(+:sumw) // each loop needs its own directive
for (long i = 0; i < 10000000000; i++) {
    sumw += 1;
}

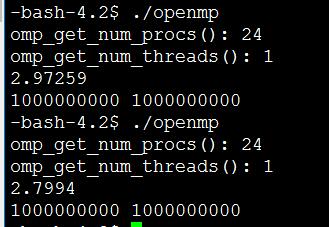

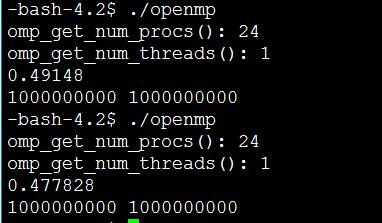

The figures show the result of the improvement: with OpenMP enabled, the loops run more than 10 times faster than without it.

/***************************************Q&A******************************************/

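1) Sometimes OpenMP does not seem to speed the program up at all, even with a reduction in place. Why?

There are basically two possible reasons:

First, the program may be too small (its serial run time is already very short), so the cost of creating and synchronizing threads outweighs the gain. Second, something may be wrong so that OpenMP is not actually in effect, for example the code was compiled without the OpenMP flag (such as -fopenmp for GCC). Note: the time unit in the screenshots is seconds.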

2) Timing function

double start = omp_get_wtime(); // requires #include <omp.h>
/****** do something ******/
double finish = omp_get_wtime();
cout << finish - start << endl; // elapsed wall-clock time in seconds

3. What if I want to configure OpenMP in CMakeLists.txt?

// Add the following code to your CMakeLists.txt.

find_package(OpenMP)
if (OPENMP_FOUND)
    set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} ${OpenMP_C_FLAGS}")
    set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${OpenMP_CXX_FLAGS}")
endif()

4. Which OpenMP techniques are most often used to optimize serial code?

Reduction and vectorization (simd).

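5. Can a while loop be parallelized with OpenMP?

Not with a loop directive: #pragma omp for (and parallel for) only applies to for loops whose iteration count is known before the loop starts. A while loop has to be rewritten as such a for loop, or parallelized another way, for example with OpenMP tasks.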

6. What are the most commonly used OpenMP functions and macros?

omp_get_num_procs() // returns the number of processors available to the program (compare cat /proc/cpuinfo)

Header files generally guard OpenMP code with the _OPENMP macro, which the compiler defines only when OpenMP is enabled:

#ifdef _OPENMP // OpenMP is enabled
#include "omp.h"
#else
#define omp_get_thread_num() 0
#endif

7. Is there a difference between parallel and parallel for? Yes, a big one!

#pragma omp parallel num_threads(3)
{ // the opening brace must go on its own line after the pragma
    for (int i = 0; i < 3; i++) {
        printf("hello world %d\n", i); // printf rather than cout: one printf call is less likely to interleave with other threads' output than a chain of <<
    }
} // Meaning: three threads are started and each one executes the whole block once, so "hello world" is printed nine times.

#pragma omp parallel for num_threads(3)
for (int i = 0; i < 3; i++) {
    printf("hello world %d\n", i); // printf rather than cout, to keep each line intact
}

// The three threads share the iterations of one for loop, so "hello world" is printed three times.

8. Does OpenMP support C++ STL containers?

I don't know yet. Answers are welcome.