etcd is a critical component of a Kubernetes cluster. It stores all of the cluster's state, including Namespaces, Pods, Services, routing information, and other status data. If the etcd cluster fails or its data is lost, the Kubernetes cluster data cannot be recovered without a backup. Backing up etcd is therefore essential to disaster recovery for a Kubernetes cluster.

1, etcd cluster backup

- The backup operation can be performed on one of the nodes of the etcd cluster.

- The etcd v3 API is used here. Since k8s 1.13, k8s no longer supports etcd v2, so all k8s cluster data is stored through the v3 API. The backup therefore covers only data written through the v3 API; data written through the v2 API is not backed up.

- This example uses a binary deployment of k8s v1.18.6 with Calico. (With etcdctl 3.4 and later, the v3 API is the default, so "ETCDCTL_API=3 etcdctl" in the commands below is equivalent to "etcdctl".)
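To see for yourself that the k8s data lives in the v3 keyspace, you can list the keys with `etcdctl get --prefix --keys-only` and summarize them. The following is a minimal sketch, assuming the default kube-apiserver key prefix `/registry`; the helper name `summarize_registry_keys` is hypothetical and not part of etcd or Kubernetes.

```shell
# Summarize Kubernetes keys stored in etcd v3 by their first path component
# (a resource type such as "pods", or an API group).
# Reads key names on stdin, one per line, for example from:
#   ETCDCTL_API=3 etcdctl <TLS flags> get /registry --prefix --keys-only
# Assumption: kube-apiserver uses the default /registry prefix.
summarize_registry_keys() {
    # With "/" as the separator, /registry/<type>/... puts "registry" in
    # field 2 and <type> in field 3. Blank lines are skipped.
    awk -F/ '$2 == "registry" && $3 != "" { count[$3]++ }
             END { for (r in count) print r, count[r] }' | sort
}
```

Piping the key listing into `summarize_registry_keys` prints one line per type with the number of keys found, which is also a quick way to compare a cluster before and after a restore.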

etcd data directory:

    [root@k8s-master01 ~]# cat /opt/k8s/bin/environment.sh |grep "ETCD_DATA_DIR="
    export ETCD_DATA_DIR="/data/k8s/etcd/data"

etcd WAL directory:

    [root@k8s-master01 ~]# cat /opt/k8s/bin/environment.sh |grep "ETCD_WAL_DIR="
    export ETCD_WAL_DIR="/data/k8s/etcd/wal"

    [root@k8s-master01 ~]# ls /data/k8s/etcd/data/
    member
    [root@k8s-master01 ~]# ls /data/k8s/etcd/data/member/
    snap
    [root@k8s-master01 ~]# ls /data/k8s/etcd/wal/
    0000000000000000-0000000000000000.wal  0.tmp

Create the backup directory on every etcd node (the snapshot is copied to all of them later):

    # mkdir -p /data/etcd_backup_dir

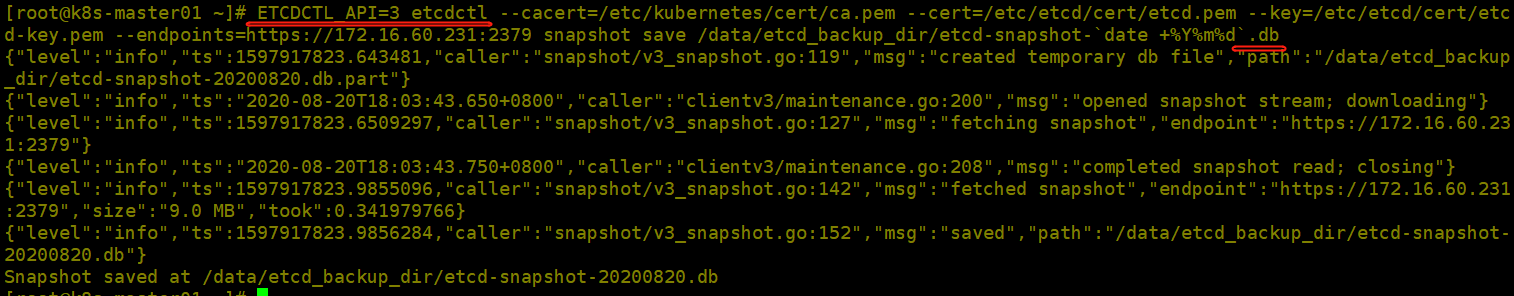

Take the backup on one of the etcd cluster nodes (here, k8s-master01):

    [root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/cert/ca.pem --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --endpoints=https://172.16.60.231:2379 snapshot save /data/etcd_backup_dir/etcd-snapshot-`date +%Y%m%d`.db
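Before copying a snapshot anywhere, it is worth checking that the file is usable. The sketch below wraps `etcdctl snapshot status`, which reads the file locally and reports its hash, revision, key count, and size. The function name `check_snapshot` and the `ETCDCTL` override variable are hypothetical conveniences, not part of etcd.

```shell
# Sanity-check a snapshot file before relying on it.
# Usage: check_snapshot /data/etcd_backup_dir/etcd-snapshot-20200820.db
# ETCDCTL may be set to a specific binary, e.g. /opt/k8s/bin/etcdctl.
check_snapshot() {
    snap="$1"
    # -s is true only for an existing file with a size greater than zero.
    if [ ! -s "$snap" ]; then
        echo "snapshot missing or empty: $snap" >&2
        return 1
    fi
    # "snapshot status" inspects the file itself; no cluster connection
    # or TLS flags are needed.
    ETCDCTL_API=3 "${ETCDCTL:-etcdctl}" snapshot status "$snap" --write-out=table
}
```

A non-zero return value means the file is missing, empty, or could not be read by etcdctl, in which case the backup should be retaken.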

Copy the backup file to the other etcd nodes:

    [root@k8s-master01 ~]# rsync -e "ssh -p22" -avpgolr /data/etcd_backup_dir/etcd-snapshot-20200820.db root@k8s-master02:/data/etcd_backup_dir/
    [root@k8s-master01 ~]# rsync -e "ssh -p22" -avpgolr /data/etcd_backup_dir/etcd-snapshot-20200820.db root@k8s-master03:/data/etcd_backup_dir/

You can put the backup command above into a script on the k8s-master01 node and schedule it with crontab:

    [root@k8s-master01 ~]# cat /data/etcd_backup_dir/etcd_backup.sh
    #!/usr/bin/bash

    date;

    CACERT="/etc/kubernetes/cert/ca.pem"
    CERT="/etc/etcd/cert/etcd.pem"
    KEY="/etc/etcd/cert/etcd-key.pem"
    ENDPOINTS="172.16.60.231:2379"

    ETCDCTL_API=3 /opt/k8s/bin/etcdctl \
    --cacert="${CACERT}" --cert="${CERT}" --key="${KEY}" \
    --endpoints="${ENDPOINTS}" \
    snapshot save /data/etcd_backup_dir/etcd-snapshot-`date +%Y%m%d`.db

    # Keep backups for 30 days
    find /data/etcd_backup_dir/ -name "*.db" -mtime +30 -exec rm -f {} \;

    # Synchronize to the other two etcd nodes
    /bin/rsync -e "ssh -p5522" -avpgolr --delete /data/etcd_backup_dir/ root@k8s-master02:/data/etcd_backup_dir/
    /bin/rsync -e "ssh -p5522" -avpgolr --delete /data/etcd_backup_dir/ root@k8s-master03:/data/etcd_backup_dir/

    [root@k8s-master01 ~]# chmod 755 /data/etcd_backup_dir/etcd_backup.sh
    [root@k8s-master01 ~]# crontab -l
    #etcd cluster data backup
    0 5 * * * /bin/bash -x /data/etcd_backup_dir/etcd_backup.sh > /dev/null 2>&1
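The retention step in the script deletes every `*.db` file older than 30 days anywhere under the backup directory. A slightly more defensive variant is sketched below: it validates its arguments, stays in the top-level directory, and matches only files named like the snapshots above. The function name `prune_snapshots` is hypothetical, and `-maxdepth` assumes GNU or BSD find.

```shell
# Delete snapshot files older than a given number of days.
# Usage: prune_snapshots /data/etcd_backup_dir 30
prune_snapshots() {
    dir="$1"
    days="$2"
    # Refuse to run on a missing directory.
    [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
    # Refuse an empty or non-numeric retention value.
    case "$days" in
        ''|*[!0-9]*) echo "retention must be a whole number of days" >&2; return 1 ;;
    esac
    # -maxdepth 1 stays in the top-level directory; -type f skips directories.
    find "$dir" -maxdepth 1 -type f -name 'etcd-snapshot-*.db' \
        -mtime +"$days" -exec rm -f {} \;
}
```

Since the cron entry sends all output to /dev/null, a failed backup goes unnoticed; redirecting to a log file instead is one way to keep a record of each run.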

2, etcd cluster recovery

The etcd cluster backup only needs to be taken on one of the etcd nodes; the backup file is then copied to the other nodes.

However, etcd cluster recovery must be completed on all etcd nodes!

1) Simulating etcd cluster data loss

Delete the data on all three etcd cluster nodes (or delete the data directory itself):

    # rm -rf /data/k8s/etcd/data/*

Check the k8s cluster status:

    [root@k8s-master01 ~]# kubectl get cs
    NAME                 STATUS      MESSAGE                                                                                           ERROR
    etcd-2               Unhealthy   Get https://172.16.60.233:2379/health: dial tcp 172.16.60.233:2379: connect: connection refused
    etcd-1               Unhealthy   Get https://172.16.60.232:2379/health: dial tcp 172.16.60.232:2379: connect: connection refused
    etcd-0               Unhealthy   Get https://172.16.60.231:2379/health: dial tcp 172.16.60.231:2379: connect: connection refused
    scheduler            Healthy     ok
    controller-manager   Healthy     ok

Checking again, the components report healthy, and the etcd endpoints and members can be queried:

    [root@k8s-master01 ~]# kubectl get cs
    NAME                 STATUS    MESSAGE             ERROR
    controller-manager   Healthy   ok
    scheduler            Healthy   ok
    etcd-0               Healthy   {"health":"true"}
    etcd-2               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}

    [root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem endpoint health
    https://172.16.60.231:2379 is healthy: successfully committed proposal: took = 9.918673ms
    https://172.16.60.233:2379 is healthy: successfully committed proposal: took = 10.985279ms
    https://172.16.60.232:2379 is healthy: successfully committed proposal: took = 13.422545ms

    [root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem member list --write-out=table
    +------------------+---------+------------+----------------------------+----------------------------+------------+
    |        ID        | STATUS  |    NAME    |         PEER ADDRS         |        CLIENT ADDRS        | IS LEARNER |
    +------------------+---------+------------+----------------------------+----------------------------+------------+
    | 1d1d7edbba38c293 | started | k8s-etcd03 | https://172.16.60.233:2380 | https://172.16.60.233:2379 |      false |
    | 4c0cfad24e92e45f | started | k8s-etcd02 | https://172.16.60.232:2380 | https://172.16.60.232:2379 |      false |
    | 79cf4f0a8c3da54b | started | k8s-etcd01 | https://172.16.60.231:2380 | https://172.16.60.231:2379 |      false |
    +------------------+---------+------------+----------------------------+----------------------------+------------+
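Reading the `endpoint health` lines by eye works for three nodes, but a script needs a yes/no answer. The sketch below counts endpoints that did not report "is healthy", based on the line format shown in the output above. The function name `count_unhealthy` is hypothetical, and the parsing assumes that format stays the same across etcdctl versions.

```shell
# Count endpoints that did not report "is healthy".
# Reads "etcdctl endpoint health" output on stdin and prints the number of
# unhealthy endpoints. The exit status is 0 only when at least one endpoint
# was seen and all of them are healthy.
# Usage (2>&1 because some etcdctl versions write these lines to stderr):
#   ETCDCTL_API=3 etcdctl <flags> endpoint health 2>&1 | count_unhealthy
count_unhealthy() {
    awk '/^https?:\/\// { total++; if ($0 !~ / is healthy:/) bad++ }
         END { print bad + 0; exit (total == 0 || bad > 0) }'
}
```

Note that healthy endpoints only show that etcd is serving requests; as this walkthrough demonstrates, they say nothing about whether the k8s data is still there.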

Restart the etcd service:

    # systemctl restart etcd

Check the endpoint status of the etcd cluster:

    [root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl -w table --cacert=/etc/kubernetes/cert/ca.pem --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" endpoint status
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    |          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | https://172.16.60.231:2379 | 79cf4f0a8c3da54b |   3.4.9 |  1.6 MB |      true |      false |         5 |      24658 |              24658 |        |
    | https://172.16.60.232:2379 | 4c0cfad24e92e45f |   3.4.9 |  1.6 MB |     false |      false |         5 |      24658 |              24658 |        |
    | https://172.16.60.233:2379 | 1d1d7edbba38c293 |   3.4.9 |  1.7 MB |     false |      false |         5 |      24658 |              24658 |        |
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

However, the k8s cluster data is gone. Only the default namespaces exist, recreated a few minutes earlier, and no pods are left:

    [root@k8s-master01 ~]# kubectl get ns
    NAME              STATUS   AGE
    default           Active   9m47s
    kube-node-lease   Active   9m39s
    kube-public       Active   9m39s
    kube-system       Active   9m47s

    [root@k8s-master01 ~]# kubectl get pods -n kube-system
    No resources found in kube-system namespace.

    [root@k8s-master01 ~]# kubectl get pods --all-namespaces
    No resources found

2) Restoring etcd cluster data

Before restoring, stop the kube-apiserver and etcd services:

    # systemctl stop kube-apiserver
    # systemctl stop etcd

Remove the old data and WAL directories on each etcd node:

    # rm -rf /data/k8s/etcd/data && rm -rf /data/k8s/etcd/wal
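Deleting the old directories cannot be undone. Since `etcdctl snapshot restore` refuses to write into a data directory that already exists, the directories have to be out of the way either way, but they can be renamed rather than removed. The sketch below is one cautious variant; the function name `set_aside` and the `.bak-<timestamp>` suffix are hypothetical choices.

```shell
# Move a directory aside with a timestamp suffix instead of deleting it.
# Usage: set_aside /data/k8s/etcd/data
#        set_aside /data/k8s/etcd/wal
set_aside() {
    dir="${1%/}"                  # drop one trailing slash, if present
    [ -d "$dir" ] || return 0     # nothing to do if it is already gone
    mv "$dir" "$dir.bak-$(date +%Y%m%d%H%M%S)"
}
```

Once the restored cluster has been verified, the renamed directories can be deleted to free the disk space.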

Run the restore on each etcd node, using that node's own name and addresses.

172.16.60.231 node:

    ETCDCTL_API=3 etcdctl \
    --name=k8s-etcd01 \
    --endpoints="https://172.16.60.231:2379" \
    --cert=/etc/etcd/cert/etcd.pem \
    --key=/etc/etcd/cert/etcd-key.pem \
    --cacert=/etc/kubernetes/cert/ca.pem \
    --initial-cluster-token=etcd-cluster-0 \
    --initial-advertise-peer-urls=https://172.16.60.231:2380 \
    --initial-cluster=k8s-etcd01=https://172.16.60.231:2380,k8s-etcd02=https://172.16.60.232:2380,k8s-etcd03=https://172.16.60.233:2380 \
    --data-dir=/data/k8s/etcd/data \
    --wal-dir=/data/k8s/etcd/wal \
    snapshot restore /data/etcd_backup_dir/etcd-snapshot-20200820.db

172.16.60.232 node:

    ETCDCTL_API=3 etcdctl \
    --name=k8s-etcd02 \
    --endpoints="https://172.16.60.232:2379" \
    --cert=/etc/etcd/cert/etcd.pem \
    --key=/etc/etcd/cert/etcd-key.pem \
    --cacert=/etc/kubernetes/cert/ca.pem \
    --initial-cluster-token=etcd-cluster-0 \
    --initial-advertise-peer-urls=https://172.16.60.232:2380 \
    --initial-cluster=k8s-etcd01=https://172.16.60.231:2380,k8s-etcd02=https://172.16.60.232:2380,k8s-etcd03=https://172.16.60.233:2380 \
    --data-dir=/data/k8s/etcd/data \
    --wal-dir=/data/k8s/etcd/wal \
    snapshot restore /data/etcd_backup_dir/etcd-snapshot-20200820.db

172.16.60.233 node:

    ETCDCTL_API=3 etcdctl \
    --name=k8s-etcd03 \
    --endpoints="https://172.16.60.233:2379" \
    --cert=/etc/etcd/cert/etcd.pem \
    --key=/etc/etcd/cert/etcd-key.pem \
    --cacert=/etc/kubernetes/cert/ca.pem \
    --initial-cluster-token=etcd-cluster-0 \
    --initial-advertise-peer-urls=https://172.16.60.233:2380 \
    --initial-cluster=k8s-etcd01=https://172.16.60.231:2380,k8s-etcd02=https://172.16.60.232:2380,k8s-etcd03=https://172.16.60.233:2380 \
    --data-dir=/data/k8s/etcd/data \
    --wal-dir=/data/k8s/etcd/wal \
    snapshot restore /data/etcd_backup_dir/etcd-snapshot-20200820.db
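The per-node restore commands differ only in the member name and that member's own address, while `--initial-cluster` must be identical on every node. Hand-editing three copies makes it easy to get one address wrong, so the sketch below generates the command text from a single member list. Both function names are hypothetical. The sketch only prints the command and does not run it, and it leaves out the TLS and endpoint flags on the assumption that `snapshot restore` works purely on local files.

```shell
# Build the --initial-cluster value from "name=ip" pairs so that every node
# uses exactly the same string.
# Usage: initial_cluster k8s-etcd01=172.16.60.231 k8s-etcd02=172.16.60.232
initial_cluster() {
    out=""
    for pair in "$@"; do
        name="${pair%%=*}"    # text before the first "="
        ip="${pair#*=}"       # text after the first "="
        out="${out:+$out,}${name}=https://${ip}:2380"
    done
    printf '%s\n' "$out"
}

# Print (not run) the restore command for one member.
# Usage: restore_command NAME IP SNAPSHOT MEMBER=IP...
restore_command() {
    member="$1"; member_ip="$2"; snapshot="$3"
    shift 3
    # The command substitution runs in a subshell, so the helper's
    # variables do not overwrite the ones set here.
    cluster=$(initial_cluster "$@")
    printf '%s ' "ETCDCTL_API=3 etcdctl snapshot restore $snapshot" \
        "--name=$member" \
        "--initial-cluster-token=etcd-cluster-0" \
        "--initial-advertise-peer-urls=https://$member_ip:2380" \
        "--initial-cluster=$cluster" \
        "--data-dir=/data/k8s/etcd/data"
    printf '%s\n' "--wal-dir=/data/k8s/etcd/wal"
}
```

For example, `restore_command k8s-etcd02 172.16.60.232 /data/etcd_backup_dir/etcd-snapshot-20200820.db k8s-etcd01=172.16.60.231 k8s-etcd02=172.16.60.232 k8s-etcd03=172.16.60.233` prints the command for the second node, which can be reviewed before it is executed there.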

Start the etcd service on each etcd node and check that it is running:

    # systemctl start etcd
    # systemctl status etcd

Check the health and status of the etcd cluster:

    [root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem endpoint health
    https://172.16.60.232:2379 is healthy: successfully committed proposal: took = 12.837393ms
    https://172.16.60.233:2379 is healthy: successfully committed proposal: took = 13.306671ms
    https://172.16.60.231:2379 is healthy: successfully committed proposal: took = 13.602805ms

    [root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl -w table --cacert=/etc/kubernetes/cert/ca.pem --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" endpoint status
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    |          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | https://172.16.60.231:2379 | 79cf4f0a8c3da54b |   3.4.9 |  9.0 MB |     false |      false |         2 |         13 |                 13 |        |
    | https://172.16.60.232:2379 | 4c0cfad24e92e45f |   3.4.9 |  9.0 MB |      true |      false |         2 |         13 |                 13 |        |
    | https://172.16.60.233:2379 | 5f70664d346a6ebd |   3.4.9 |  9.0 MB |     false |      false |         2 |         13 |                 13 |        |
    +----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
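After a restore, the `endpoint status` table should show exactly one member with IS LEADER set to true. The sketch below counts leaders by parsing the table layout shown above, where the IS LEADER flag is the fifth column. The function name `count_leaders` is hypothetical, and the column position is an assumption tied to the etcd 3.4 table layout.

```shell
# Count how many endpoints report IS LEADER = true in the table printed by
# "etcdctl -w table endpoint status".
# Usage: ETCDCTL_API=3 etcdctl -w table <flags> endpoint status | count_leaders
count_leaders() {
    # Splitting on "|" leaves field 1 empty, the endpoint URL in field 2,
    # and the IS LEADER flag in field 6. Header and border rows are skipped
    # because field 2 does not contain a URL.
    awk -F'|' '$2 ~ /https?:\/\// { gsub(/ /, "", $6); if ($6 == "true") n++ }
               END { print n + 0 }'
}
```

A result of 0 suggests that the leader election has not finished yet, so the check is worth repeating after a short wait before kube-apiserver is started.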

Start the kube-apiserver service and check that it is running:

    # systemctl start kube-apiserver
    # systemctl status kube-apiserver

Check the k8s cluster status:

    [root@k8s-master01 ~]# kubectl get cs
    NAME                 STATUS      MESSAGE                                  ERROR
    controller-manager   Healthy     ok
    scheduler            Healthy     ok
    etcd-2               Unhealthy   HTTP probe failed with statuscode: 503
    etcd-1               Unhealthy   HTTP probe failed with statuscode: 503
    etcd-0               Unhealthy   HTTP probe failed with statuscode: 503

Because the etcd service has just been restarted, the command may need to be run several times before the status returns to normal:

    [root@k8s-master01 ~]# kubectl get cs
    NAME                 STATUS    MESSAGE             ERROR
    controller-manager   Healthy   ok
    scheduler            Healthy   ok
    etcd-2               Healthy   {"health":"true"}
    etcd-0               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}

Check the namespaces and pods; the resources from the backup are back:

    [root@k8s-master01 ~]# kubectl get ns
    NAME              STATUS   AGE
    default           Active   7d4h
    kevin             Active   5d18h
    kube-node-lease   Active   7d4h
    kube-public       Active   7d4h
    kube-system       Active   7d4h

    [root@k8s-master01 ~]# kubectl get pods --all-namespaces
    NAMESPACE     NAME                                       READY   STATUS              RESTARTS   AGE
    default       dnsutils-ds-22q87                          0/1     ContainerCreating   171        7d3h
    default       dnsutils-ds-bp8tm                          0/1     ContainerCreating   138        5d18h
    default       dnsutils-ds-bzzqg                          0/1     ContainerCreating   138        5d18h
    default       dnsutils-ds-jcvng                          1/1     Running             171        7d3h
    default       dnsutils-ds-xrl2x                          0/1     ContainerCreating   138        5d18h
    default       dnsutils-ds-zjg5l                          1/1     Running             0          7d3h
    default       kevin-t-84cdd49d65-ck47f                   0/1     ContainerCreating   0          2d2h
    default       nginx-ds-98rm2                             1/1     Running             2          7d3h
    default       nginx-ds-bbx68                             1/1     Running             0          7d3h
    default       nginx-ds-kfctv                             0/1     ContainerCreating   1          5d18h
    default       nginx-ds-mdcd9                             0/1     ContainerCreating   1          5d18h
    default       nginx-ds-ngqcm                             1/1     Running             0          7d3h
    default       nginx-ds-tpcxs                             0/1     ContainerCreating   1          5d18h
    kevin         nginx-ingress-controller-797ffb479-vrq6w   0/1     ContainerCreating   0          5d18h
    kevin         test-nginx-7d4f96b486-qd4fl                0/1     ContainerCreating   0          2d1h
    kevin         test-nginx-7d4f96b486-qfddd                0/1     Running             0          2d1h
    kube-system   calico-kube-controllers-578894d4cd-9rp4c   1/1     Running             1          7d3h
    kube-system   calico-node-d7wq8                          0/1     PodInitializing     1          7d3h
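Right after a restore, many pods are still in ContainerCreating or PodInitializing while the nodes bring them back up. To follow the recovery without scanning the whole table, the sketch below filters the `kubectl get pods --all-namespaces` output down to pods that are not yet fully ready. The function name `pods_not_ready` is hypothetical, and the parsing assumes the default six-column layout shown above.

```shell
# List pods that are not fully up, reading the output of
# "kubectl get pods --all-namespaces" on stdin.
# Columns: NAMESPACE NAME READY STATUS RESTARTS AGE
# Usage: kubectl get pods --all-namespaces | pods_not_ready
pods_not_ready() {
    awk 'NR > 1 {
             # READY looks like "ready/total", e.g. 0/1.
             split($3, r, "/")
             if ($4 != "Running" || r[1] != r[2])
                 print $1 "/" $2, $3, $4
         }'
}
```

An empty result means every listed pod is Running with all of its containers ready. Pods of finished Jobs (status Completed) would also be listed, so this filter suits a cluster like the one here, which runs only long-lived workloads.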

3, Final summary

- When backing up an etcd cluster, a snapshot only needs to be taken on one etcd node and then synchronized to the other nodes.

- When restoring etcd data, the backup taken on any one of the nodes can be used, but the restore must be run on every etcd node.

Reposted from:

K8S cluster disaster recovery environment deployment - scattered flashiness - blog Park

https://www.cnblogs.com/kevingrace/p/14616824.html