1. Reducing overfitting with data augmentation: Cats v Dogs Augmentation

https://colab.research.google.com/github/lmoroney/dlaicourse/blob/master/Course%202%20-%20Part%204%20-%20Lesson%202%20-%20Notebook%20(Cats%20v%20Dogs%20Augmentation).ipynb

Let's start with a model that is very effective at learning Cats v Dogs.

It's similar to the model you used before, but with updated layer definitions. Note that there are now four convolutional layers with 32, 64, 128, and 128 filters respectively.

It also trains for 100 epochs, because I want to plot the loss and accuracy.
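To get a feel for what the four convolution and pooling stages do to a 150x150x3 input, the arithmetic can be sketched in plain Python. This is only a rough trace, assuming 3x3 convolutions with Keras's default 'valid' padding and 2x2 max pooling, as in the model definition below. The helper names (`conv_out`, `pool_out`, `trace_model`) are made up for this illustration.

```python
# Trace feature-map sizes through the conv/pool stack and count parameters.
# Assumes 3x3 'valid' convolutions (stride 1) and 2x2 max pooling.

def conv_out(size, kernel=3):
    """Output width/height of a 'valid' convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, pool=2):
    """Output width/height of max pooling with stride equal to the pool size."""
    return size // pool

def trace_model(input_size=150, in_channels=3,
                filters=(32, 64, 128, 128), dense_units=512):
    size, channels, total_params = input_size, in_channels, 0
    shapes = []
    for f in filters:
        # Each filter has 3*3*channels weights plus one bias.
        total_params += (3 * 3 * channels + 1) * f
        size = pool_out(conv_out(size))
        channels = f
        shapes.append((size, size, channels))
    flat = size * size * channels              # size of the Flatten output
    total_params += (flat + 1) * dense_units   # Dense(512)
    total_params += dense_units + 1            # Dense(1)
    return shapes, flat, total_params

shapes, flat, total_params = trace_model()
print(shapes)        # shape after each conv + pool stage
print(flat)          # number of values fed into Dense(512)
print(total_params)  # total trainable parameters
```

Under these assumptions most of the parameters sit in the Dense(512) layer rather than in the convolutions. You can compare the numbers with the output of `model.summary()`.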

!wget --no-check-certificate \

https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip \

-O /tmp/cats_and_dogs_filtered.zip

import os

import zipfile

import tensorflow as tf

from tensorflow.keras.optimizers import RMSprop

from tensorflow.keras.preprocessing.image import ImageDataGenerator

local_zip = '/tmp/cats_and_dogs_filtered.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

zip_ref.extractall('/tmp')

zip_ref.close()

base_dir = '/tmp/cats_and_dogs_filtered'

train_dir = os.path.join(base_dir, 'train')

validation_dir = os.path.join(base_dir, 'validation')

# Directory with our training cat pictures

train_cats_dir = os.path.join(train_dir, 'cats')

# Directory with our training dog pictures

train_dogs_dir = os.path.join(train_dir, 'dogs')

# Directory with our validation cat pictures

validation_cats_dir = os.path.join(validation_dir, 'cats')

# Directory with our validation dog pictures

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

model = tf.keras.models.Sequential([

tf.keras.layers.Conv2D(32, (3,3), activation='relu', input_shape=(150, 150, 3)),

tf.keras.layers.MaxPooling2D(2, 2),

tf.keras.layers.Conv2D(64, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Conv2D(128, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Conv2D(128, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(512, activation='relu'),

tf.keras.layers.Dense(1, activation='sigmoid')

])

model.compile(loss='binary_crossentropy',

optimizer=RMSprop(learning_rate=1e-4),  # 'lr' is a deprecated alias

metrics=['accuracy'])

# All images will be rescaled by 1./255

train_datagen = ImageDataGenerator(rescale=1./255)

test_datagen = ImageDataGenerator(rescale=1./255)

# Flow training images in batches of 20 using train_datagen generator

train_generator = train_datagen.flow_from_directory(

train_dir, # This is the source directory for training images

target_size=(150, 150), # All images will be resized to 150x150

batch_size=20,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

# Flow validation images in batches of 20 using test_datagen generator

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

history = model.fit(

train_generator,

steps_per_epoch=100, # 2000 images = batch_size * steps

epochs=100,

validation_data=validation_generator,

validation_steps=50, # 1000 images = batch_size * steps

verbose=2)
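The comments on `steps_per_epoch` and `validation_steps` are simple arithmetic: the number of steps is the number of images divided by the batch size. A small sketch, using the 2000 training images and 1000 validation images mentioned in the comments above (the helper name `steps_for` is made up for this illustration):

```python
import math

def steps_for(num_images, batch_size):
    """Number of batches needed to see every image once."""
    return math.ceil(num_images / batch_size)

train_steps = steps_for(2000, 20)  # training images, batch_size=20
val_steps = steps_for(1000, 20)    # validation images, batch_size=20
print(train_steps, val_steps)
```

If you change `batch_size`, these are the two values that need to change with it.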

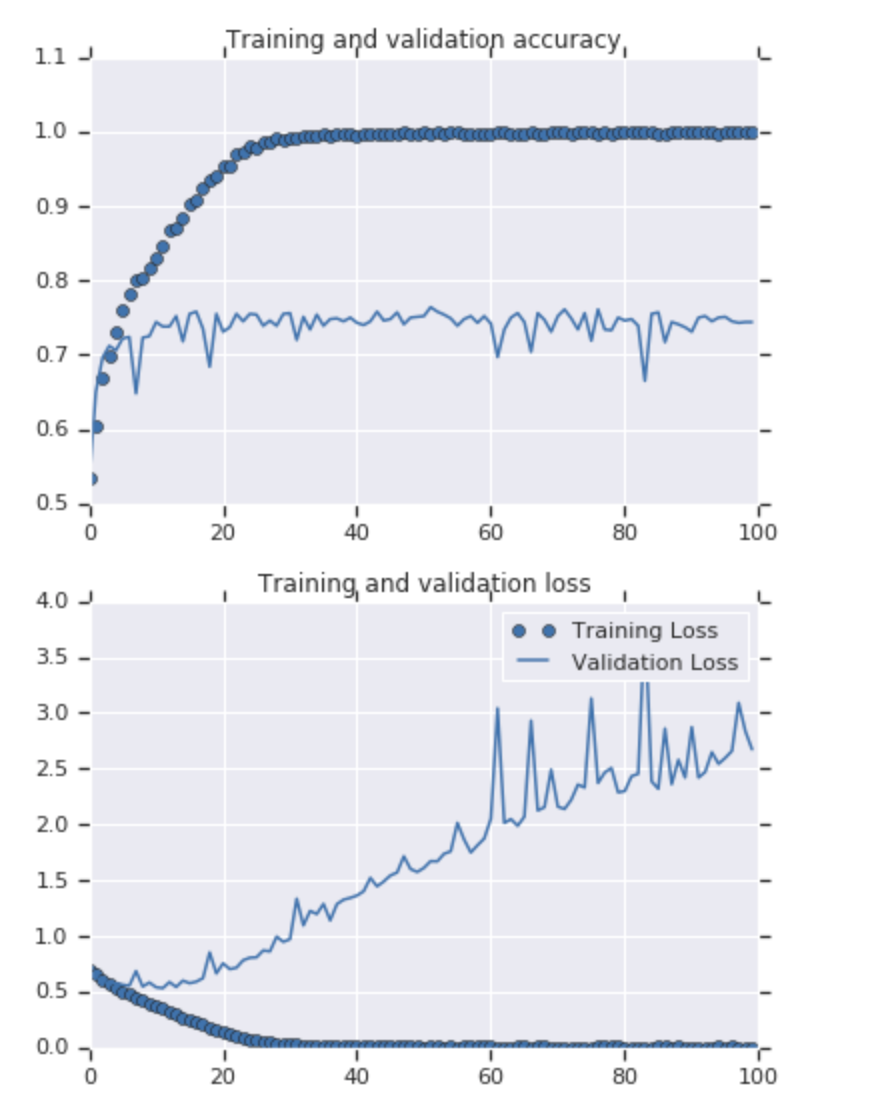

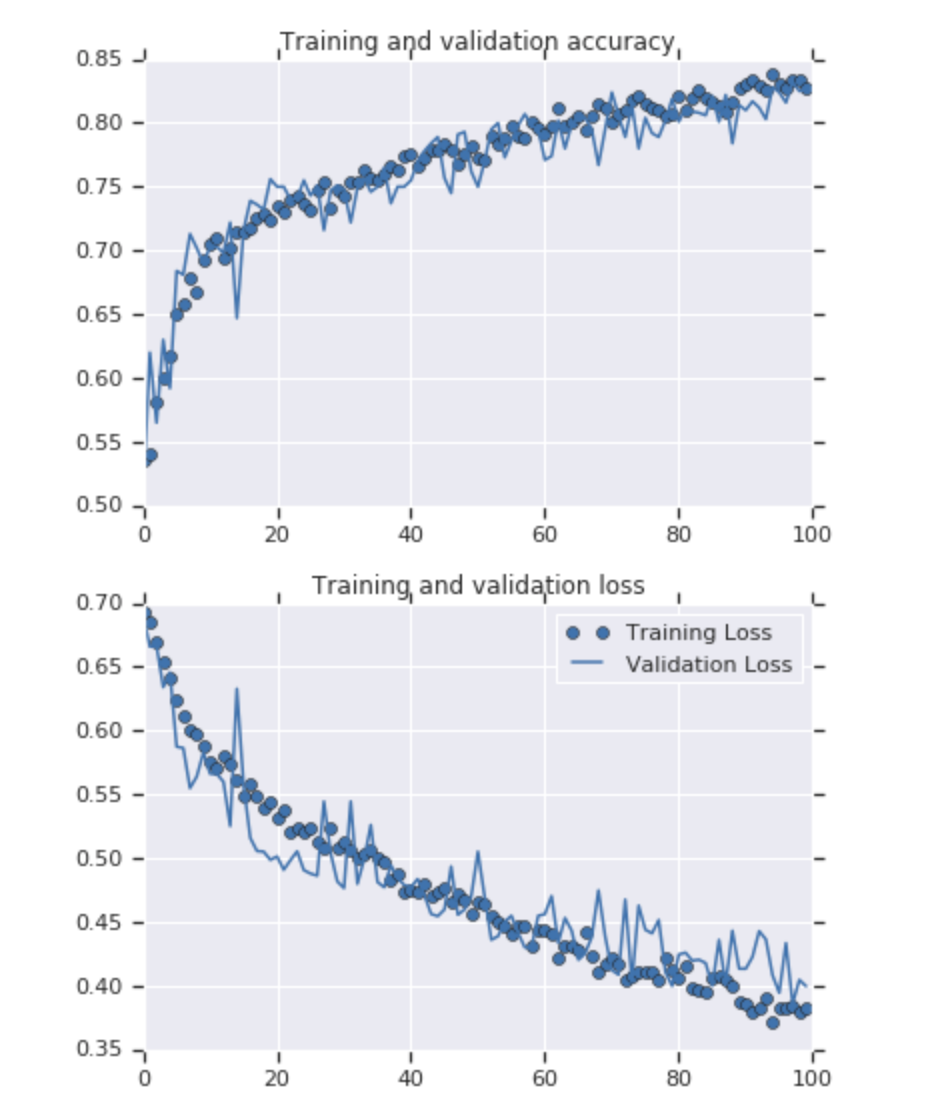

import matplotlib.pyplot as plt

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training accuracy')

plt.plot(epochs, val_acc, 'b', label='Validation accuracy')

plt.title('Training and validation accuracy')

plt.legend()  # show the labels on the accuracy plot too

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training Loss')

plt.plot(epochs, val_loss, 'b', label='Validation Loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

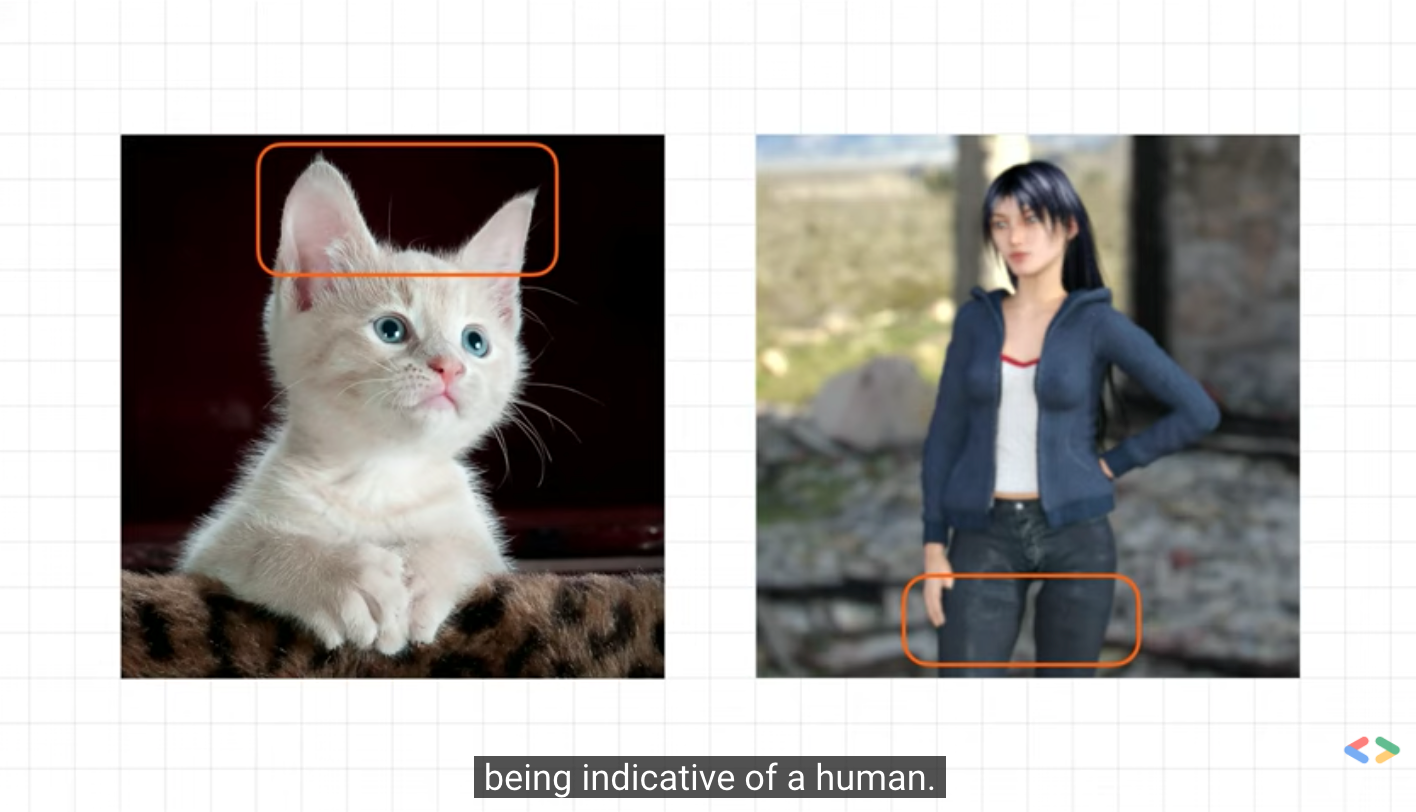

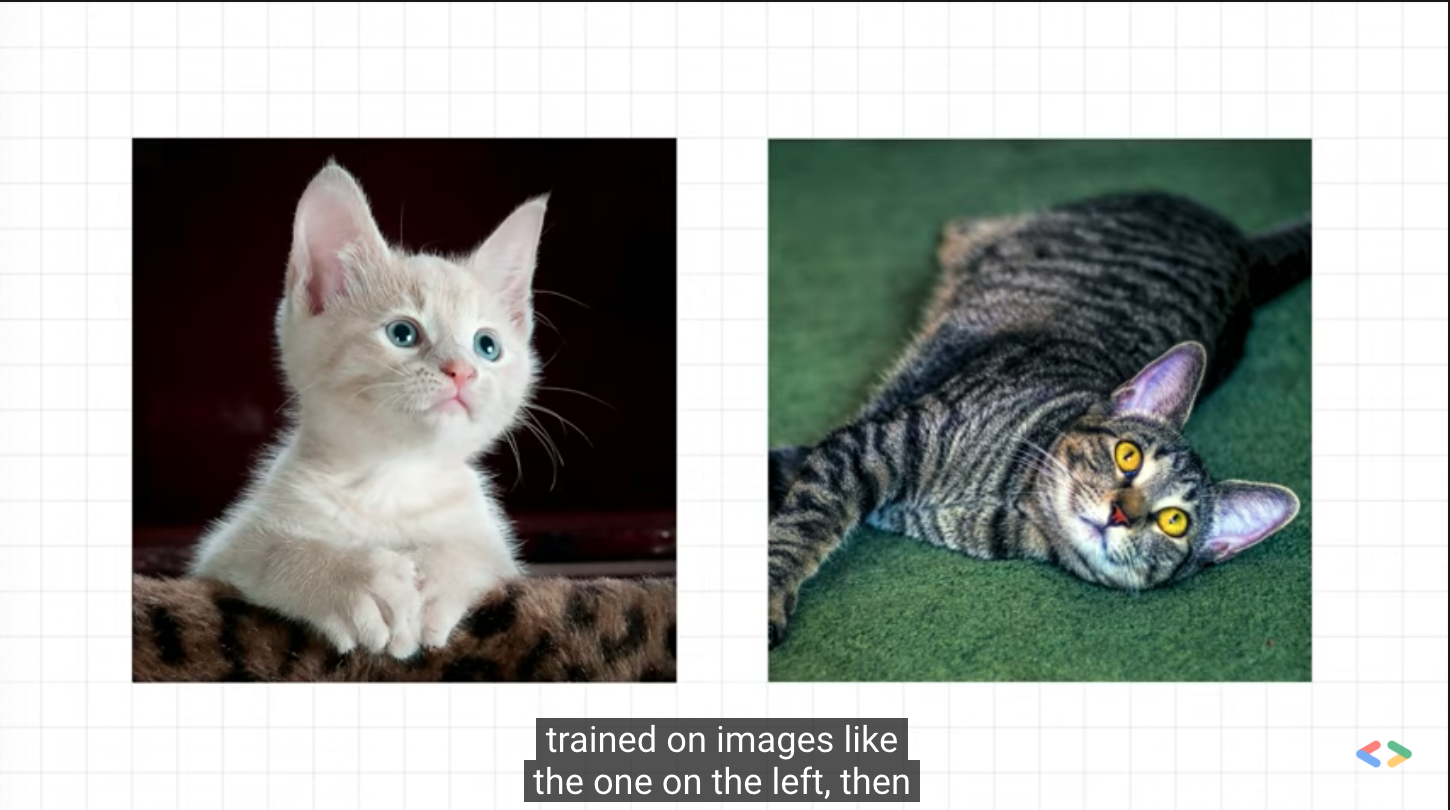

The training accuracy is close to 100%, while the validation accuracy stays in the 70% to 80% range. This is a good example of overfitting: in short, the model does very well on images it has seen before, but not as well on images it hasn't. Let's see if we can do better at avoiding overfitting. One simple way is to augment the images a bit. If you think about it, most pictures of cats are very similar: ears at the top, then eyes, then mouth, and so on. Things like the distance between the eyes and the ears are always quite similar too.
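One way to put a number on this is to look at the gap between training and validation accuracy, and at the epoch where validation loss was lowest. The sketch below is a minimal illustration. The helper names are hypothetical, and the lists are made-up values, not results from the run above.

```python
def accuracy_gap(train_acc, val_acc):
    """Difference between final training and validation accuracy."""
    return train_acc[-1] - val_acc[-1]

def best_epoch(val_loss):
    """Index of the epoch with the lowest validation loss."""
    return min(range(len(val_loss)), key=lambda i: val_loss[i])

# Made-up illustrative values, NOT results from the training run above.
example_acc = [0.60, 0.80, 0.95, 0.99]
example_val_acc = [0.58, 0.70, 0.74, 0.75]
example_val_loss = [0.68, 0.58, 0.62, 0.90]

gap = accuracy_gap(example_acc, example_val_acc)
best = best_epoch(example_val_loss)
print(round(gap, 2), best)
```

With a real run you would pass `history.history['accuracy']`, `history.history['val_accuracy']`, and `history.history['val_loss']` instead. A large gap, together with validation loss that starts rising after an early epoch, is the usual sign of overfitting.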

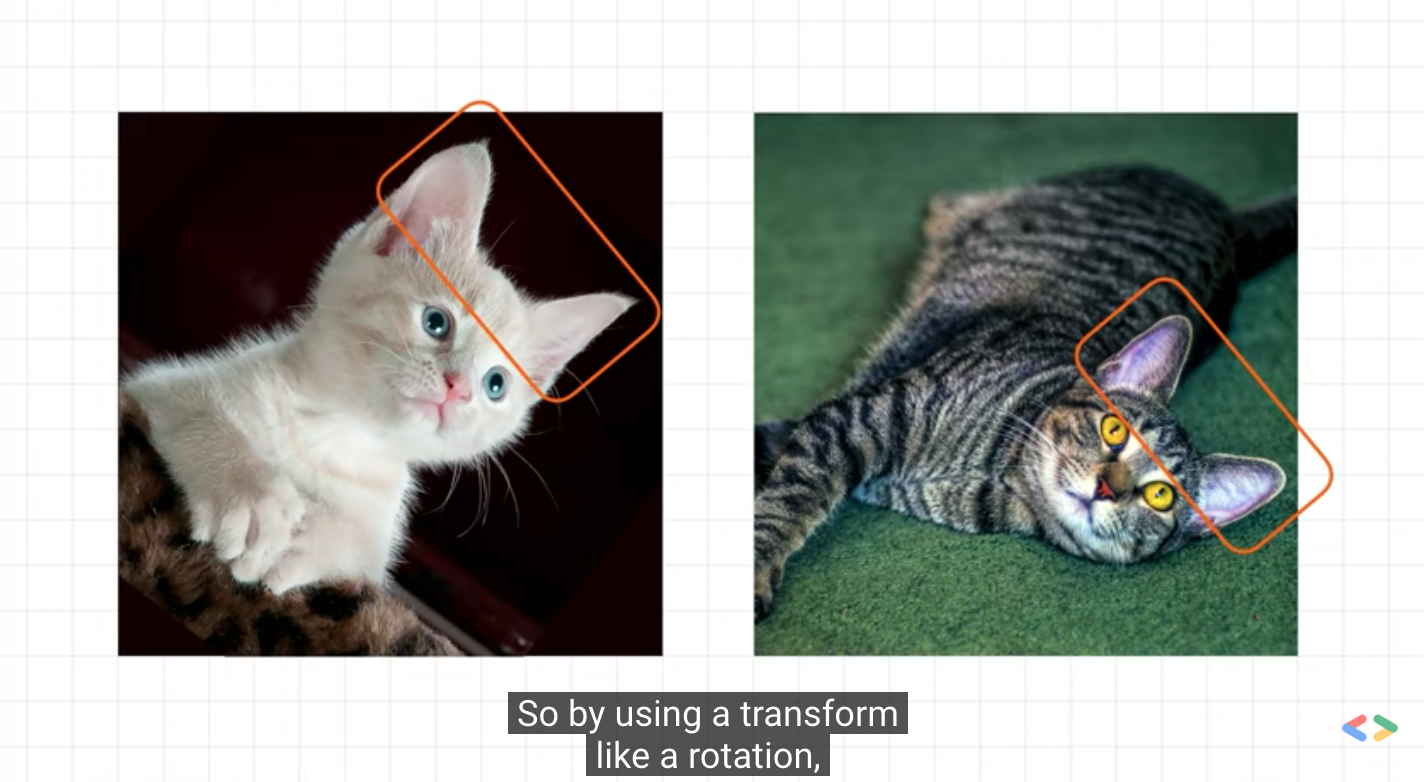

What if we tweak the images a bit: rotate them, squash them, and so on? That's what image augmentation is all about. There's also an API that makes it easy.

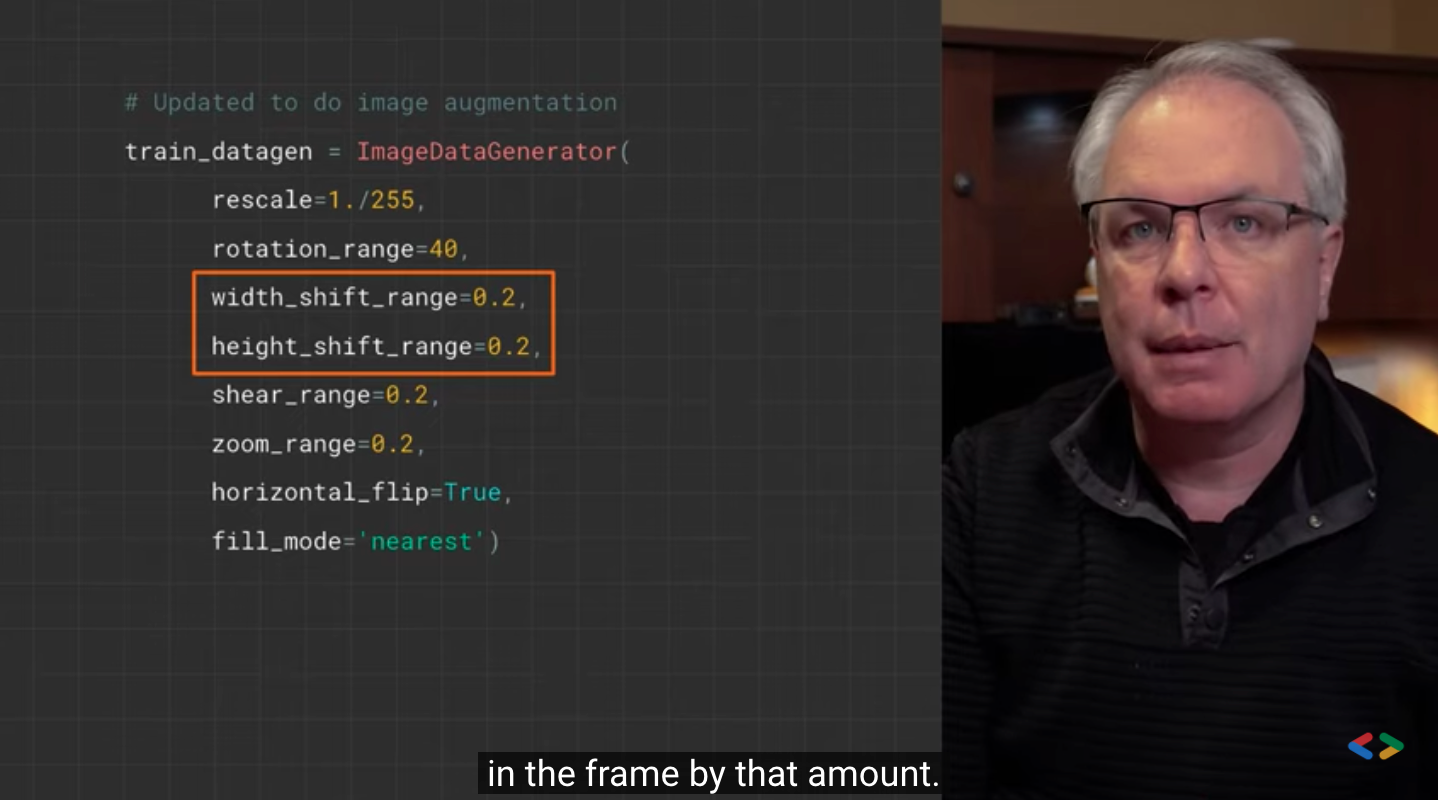

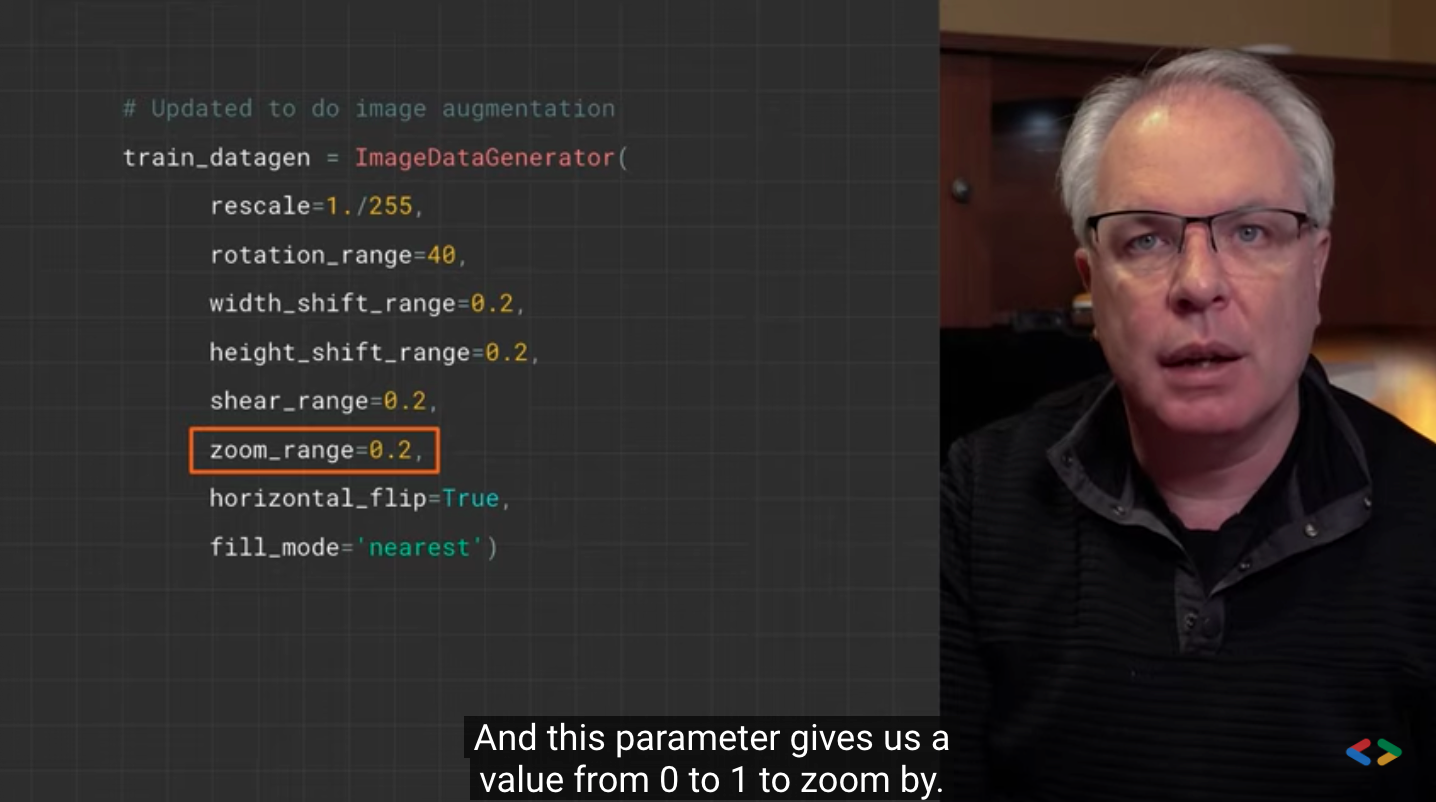

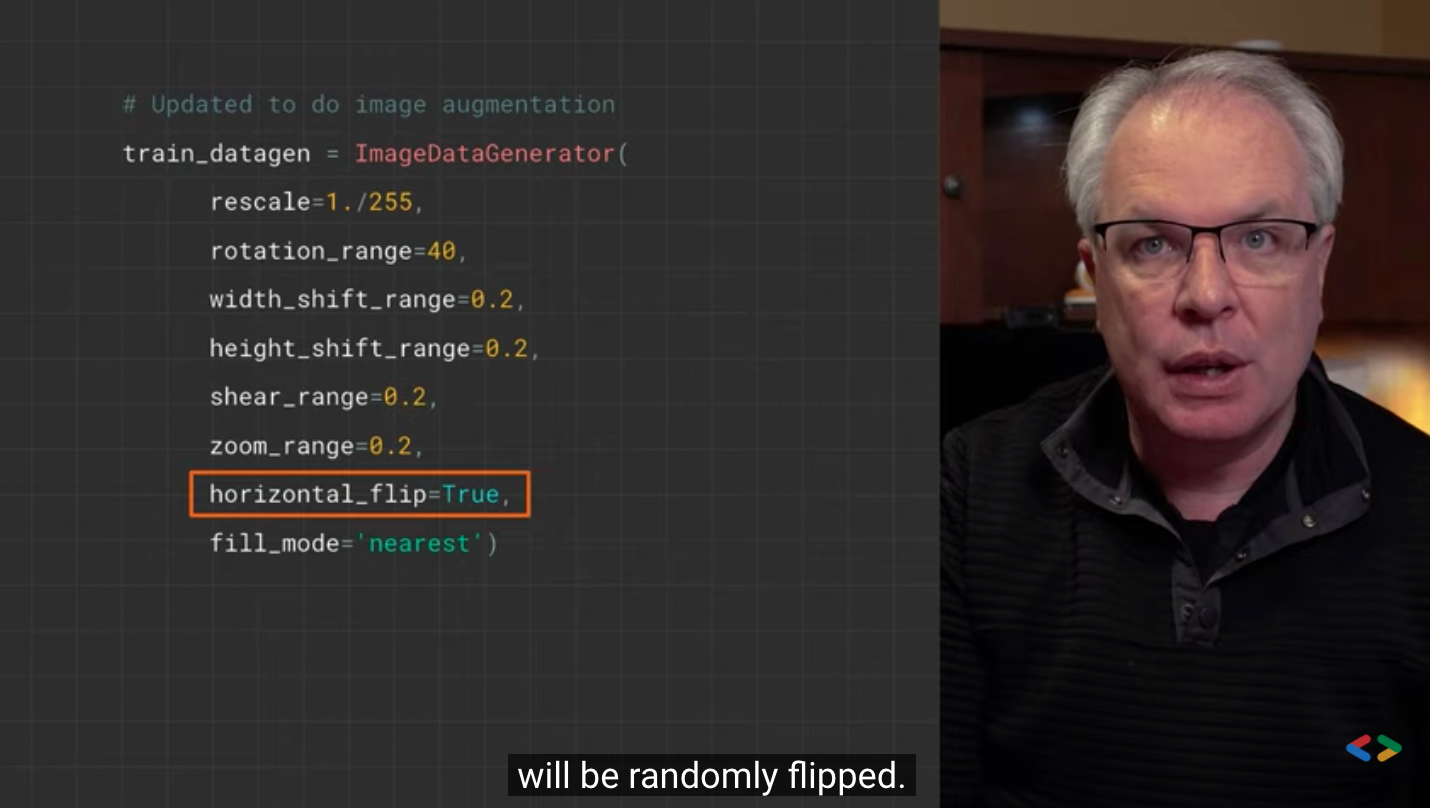

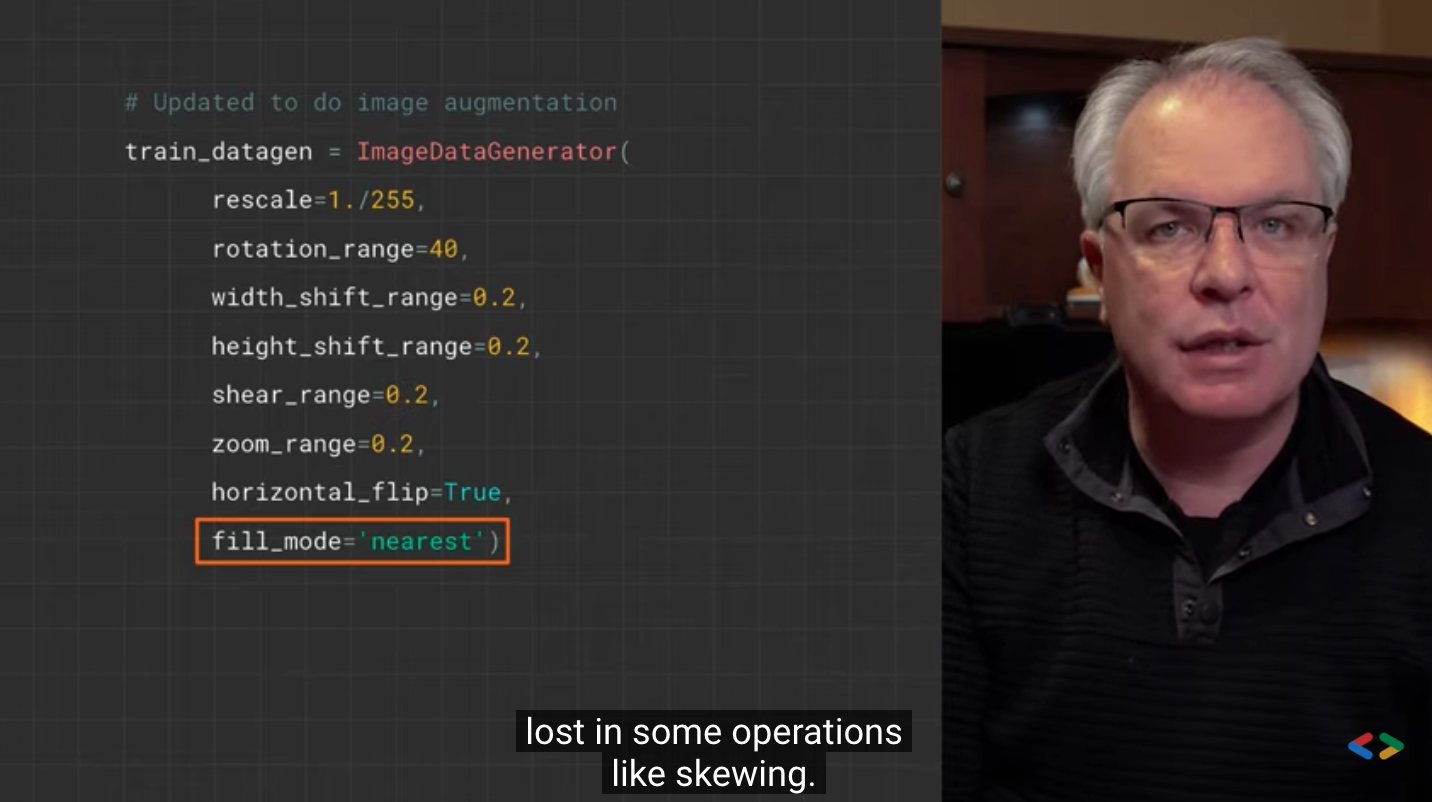

Now take a look at ImageDataGenerator. It has properties you can use to augment the images.

# Updated to do image augmentation

train_datagen = ImageDataGenerator(

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

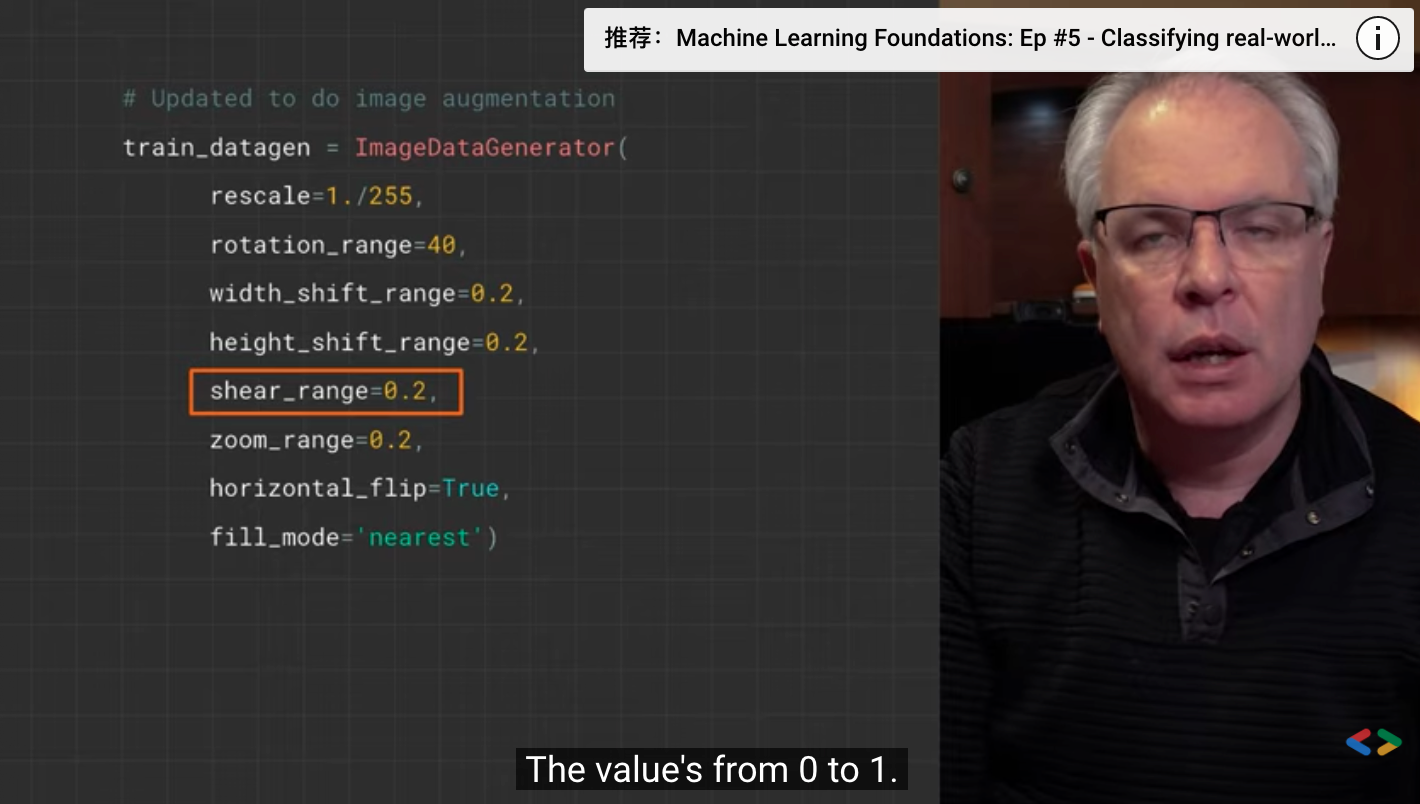
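To make the ranges in the snippet above more concrete, here is a rough sketch of how one random set of transform parameters might be drawn for a single image. It is a simplified model of how the ranges are interpreted, not the Keras implementation. The function name, the dictionary keys, and the use of a single zoom factor are assumptions made for illustration.

```python
import random

def sample_augmentation(rng, rotation_range=40, width_shift_range=0.2,
                        height_shift_range=0.2, shear_range=0.2,
                        zoom_range=0.2, horizontal_flip=True,
                        width=150, height=150):
    """Draw one random set of transform parameters (simplified sketch)."""
    return {
        # Rotation angle in degrees, anywhere in [-40, 40].
        "rotation_deg": rng.uniform(-rotation_range, rotation_range),
        # Shifts are fractions of the image size, converted to pixels here.
        "shift_x_px": rng.uniform(-width_shift_range, width_shift_range) * width,
        "shift_y_px": rng.uniform(-height_shift_range, height_shift_range) * height,
        # Shear intensity.
        "shear": rng.uniform(-shear_range, shear_range),
        # Zoom factor in [0.8, 1.2]; one factor for both axes (an assumption).
        "zoom": rng.uniform(1 - zoom_range, 1 + zoom_range),
        # Flip about half of the images.
        "flip": horizontal_flip and rng.random() < 0.5,
    }

rng = random.Random(0)  # fixed seed so the sketch is repeatable
params = sample_augmentation(rng)
print(params)
```

Because new parameters are drawn every time an image is loaded, the model rarely sees exactly the same picture twice, even though the files on disk never change.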

These are just a few of the options available (see the Keras documentation for more). Let's quickly go over what we just wrote:

- rotation_range is a value in degrees (0 to 180), the range within which pictures are randomly rotated.

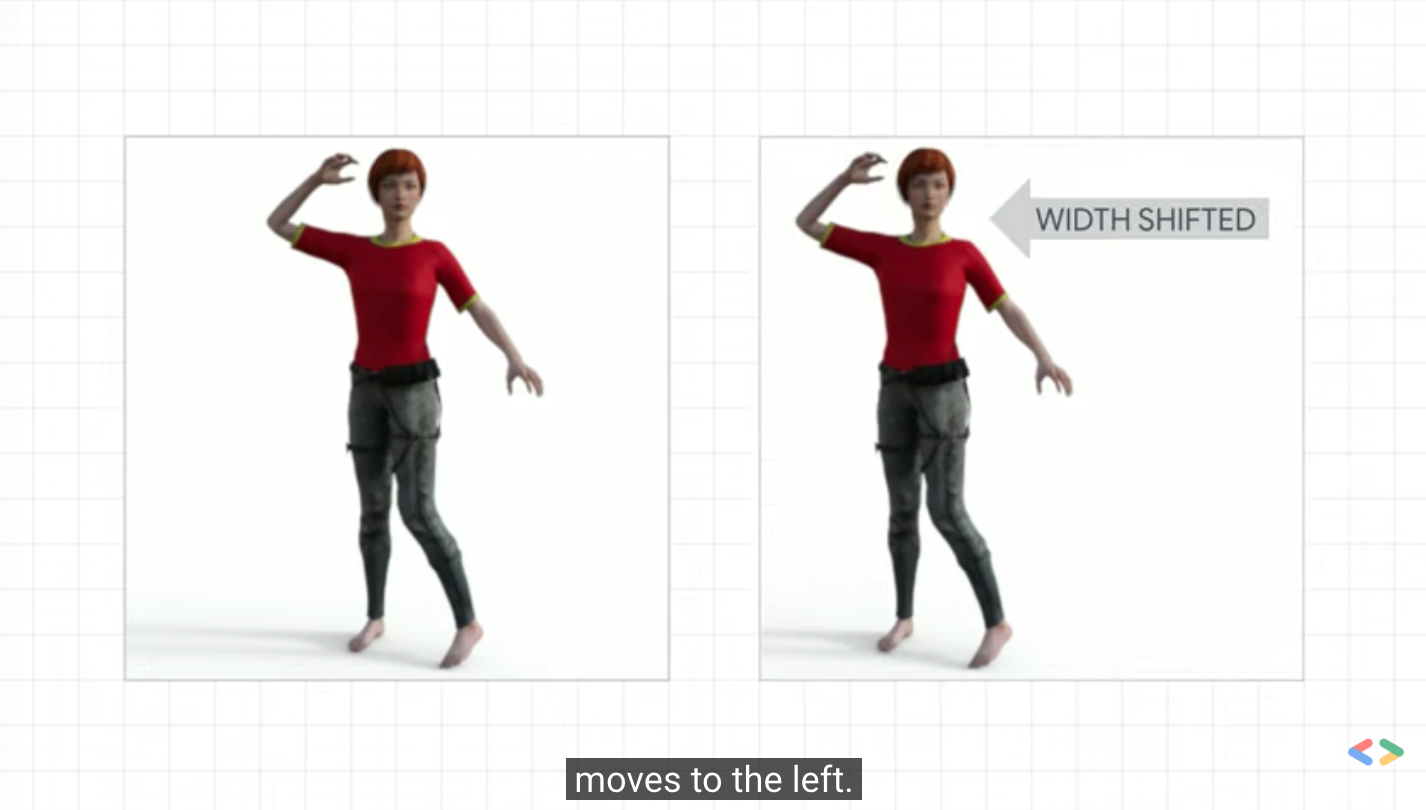

- width_shift_range and height_shift_range are ranges (as a fraction of total width or height) within which pictures are randomly translated horizontally or vertically.

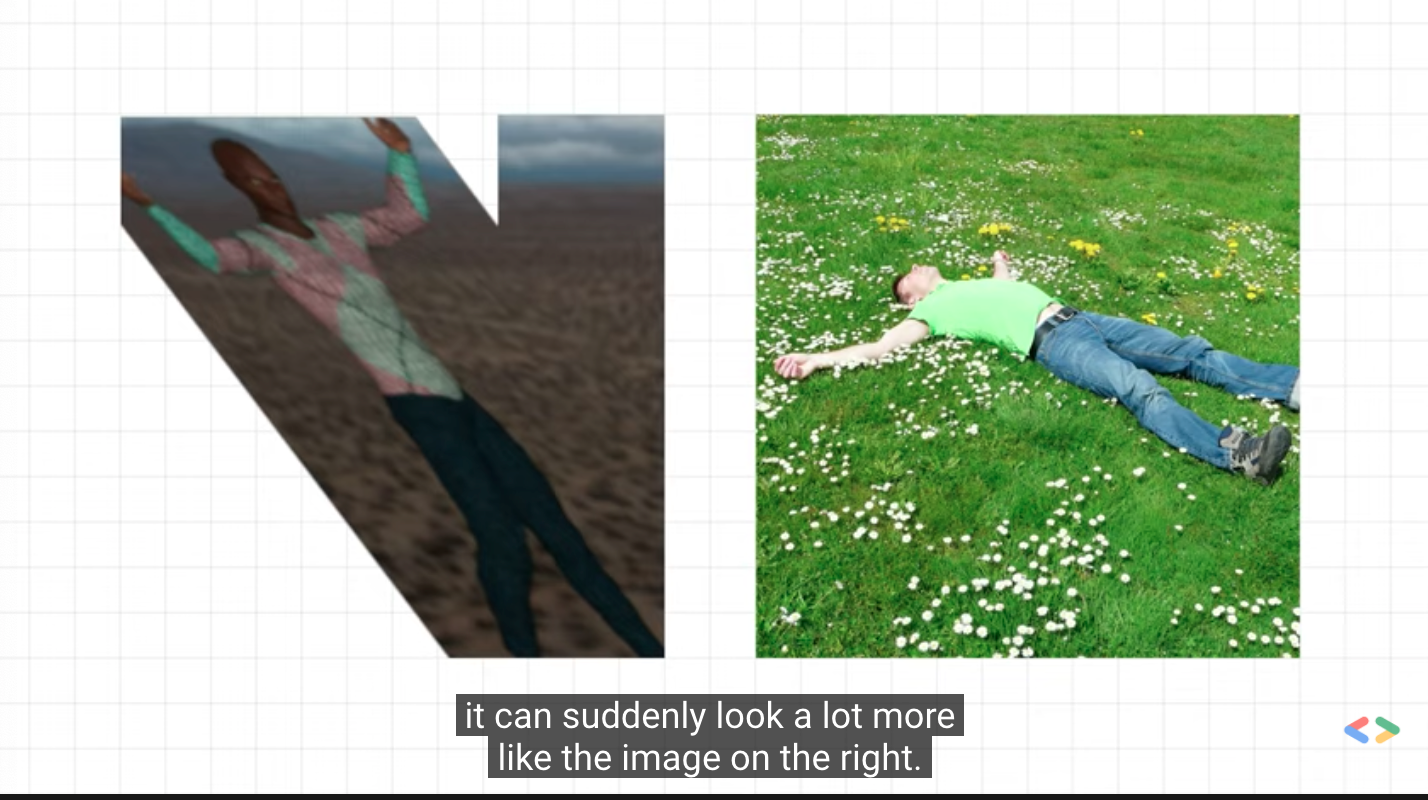

- shear_range is for randomly applying shearing transformations.

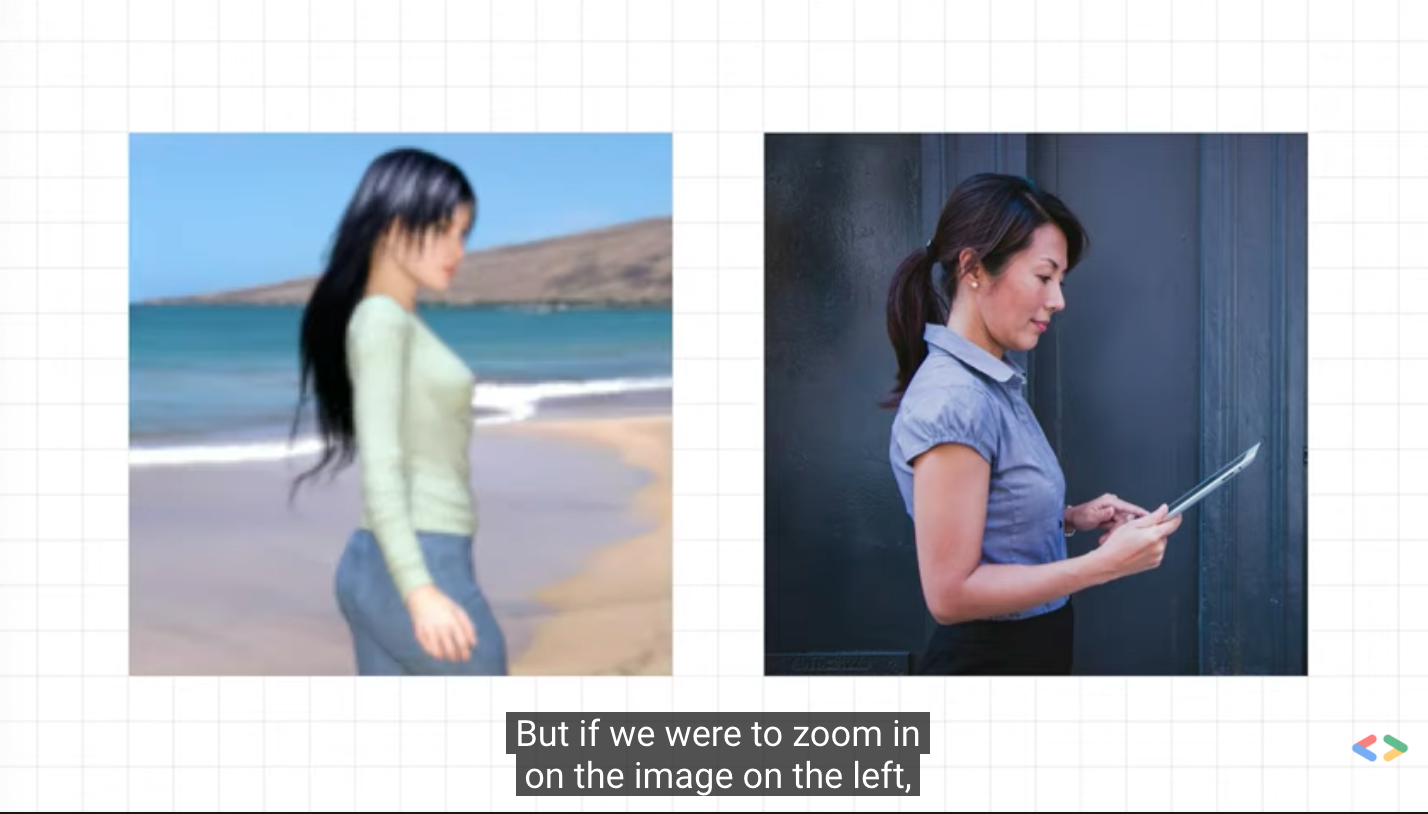

- zoom_range is for randomly zooming inside pictures.

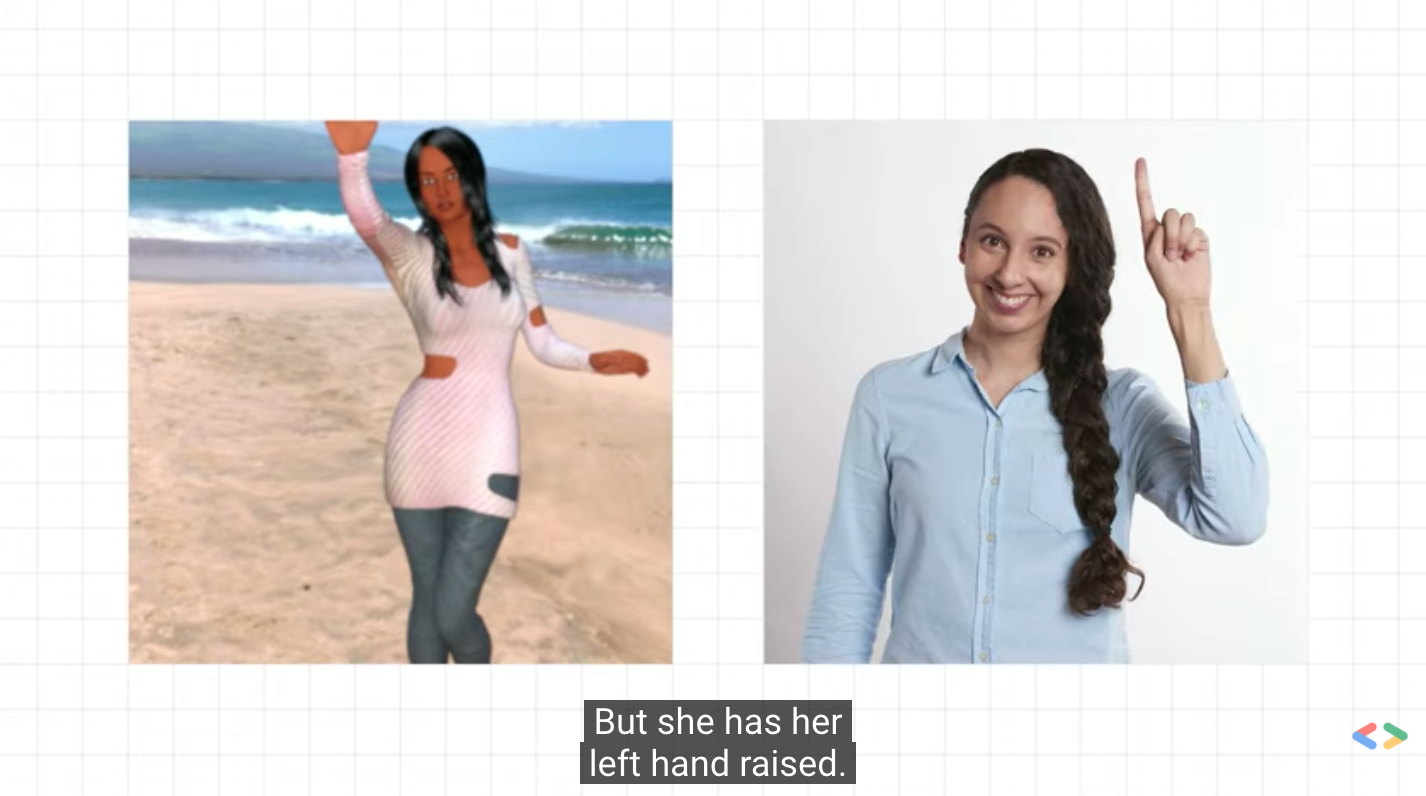

- horizontal_flip is for randomly flipping half of the images horizontally. This is relevant when there are no assumptions of horizontal asymmetry (e.g. real-world pictures).

- fill_mode is the strategy used for filling in newly created pixels, which can appear after a rotation or a width/height shift.

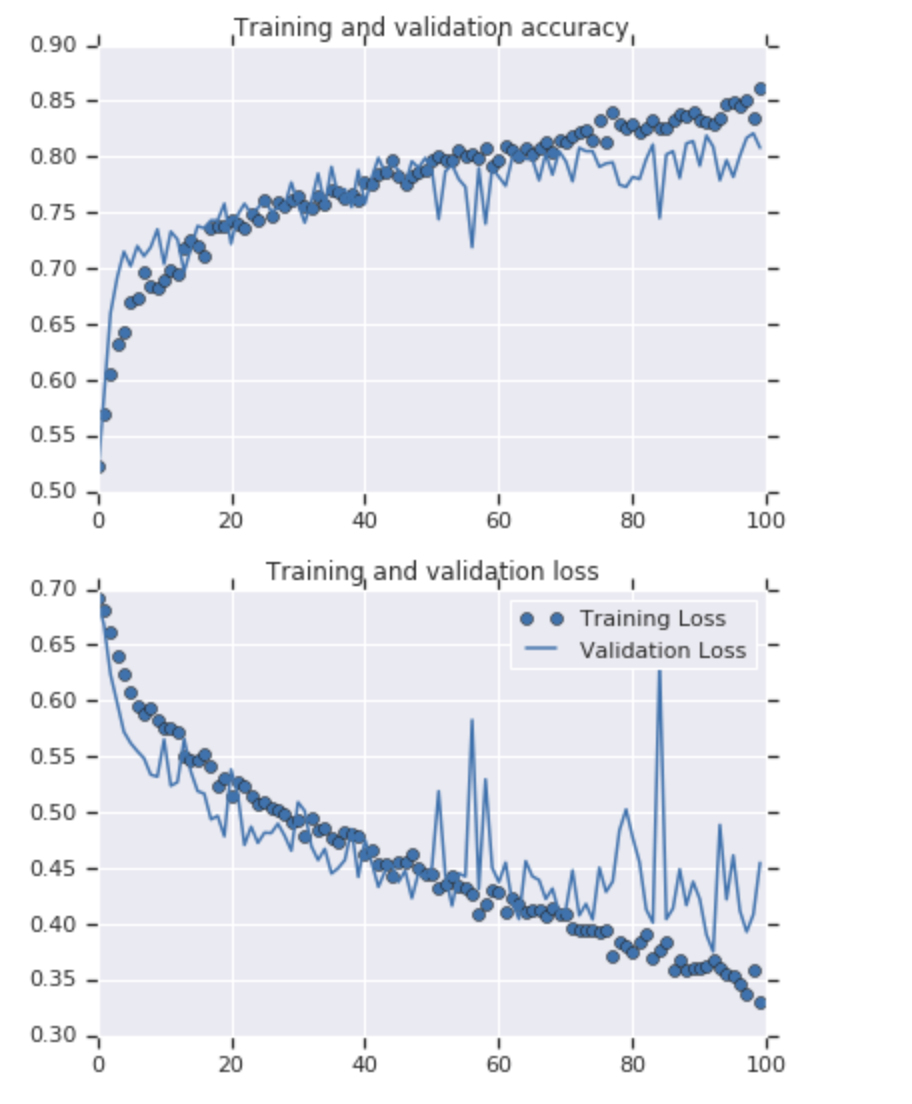
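Two of the options in the list are easy to show on a tiny image written as nested lists: a horizontal flip, and a shift whose newly exposed pixels are filled by repeating the nearest edge pixel, which is the idea behind fill_mode='nearest'. This is a toy sketch in plain Python, not how ImageDataGenerator is implemented, and both function names are made up for the example.

```python
def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def shift_right_nearest(image, dx):
    """Shift each row dx pixels to the right (dx >= 0).

    The gap that opens on the left is filled by repeating the
    nearest edge pixel, in the spirit of fill_mode='nearest'.
    """
    width = len(image[0])
    return [[row[max(x - dx, 0)] for x in range(width)] for row in image]

image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]

flipped = horizontal_flip(image)
shifted = shift_right_nearest(image, 2)
print(flipped)  # [[4, 3, 2, 1], [8, 7, 6, 5]]
print(shifted)  # [[1, 1, 1, 2], [5, 5, 5, 6]]
```

In the shifted result the first column is repeated to cover the gap, so no blank pixels are introduced.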

Here is the code with image augmentation added. Run it to see the impact.

!wget --no-check-certificate \

https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip \

-O /tmp/cats_and_dogs_filtered.zip

import os

import zipfile

import tensorflow as tf

from tensorflow.keras.optimizers import RMSprop

from tensorflow.keras.preprocessing.image import ImageDataGenerator

local_zip = '/tmp/cats_and_dogs_filtered.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

zip_ref.extractall('/tmp')

zip_ref.close()

base_dir = '/tmp/cats_and_dogs_filtered'

train_dir = os.path.join(base_dir, 'train')

validation_dir = os.path.join(base_dir, 'validation')

# Directory with our training cat pictures

train_cats_dir = os.path.join(train_dir, 'cats')

# Directory with our training dog pictures

train_dogs_dir = os.path.join(train_dir, 'dogs')

# Directory with our validation cat pictures

validation_cats_dir = os.path.join(validation_dir, 'cats')

# Directory with our validation dog pictures

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

model = tf.keras.models.Sequential([

tf.keras.layers.Conv2D(32, (3,3), activation='relu', input_shape=(150, 150, 3)),

tf.keras.layers.MaxPooling2D(2, 2),

tf.keras.layers.Conv2D(64, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Conv2D(128, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Conv2D(128, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(512, activation='relu'),

tf.keras.layers.Dense(1, activation='sigmoid')

])

model.compile(loss='binary_crossentropy',

optimizer=RMSprop(learning_rate=1e-4),  # 'lr' is a deprecated alias

metrics=['accuracy'])

# This code has changed. Now instead of the ImageDataGenerator just rescaling

# the image, we also rotate and do other operations

# Updated to do image augmentation

train_datagen = ImageDataGenerator(

rescale=1./255,

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

test_datagen = ImageDataGenerator(rescale=1./255)

# Flow training images in batches of 20 using train_datagen generator

train_generator = train_datagen.flow_from_directory(

train_dir, # This is the source directory for training images

target_size=(150, 150), # All images will be resized to 150x150

batch_size=20,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

# Flow validation images in batches of 20 using test_datagen generator

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

history = model.fit(

train_generator,

steps_per_epoch=100, # 2000 images = batch_size * steps

epochs=100,

validation_data=validation_generator,

validation_steps=50, # 1000 images = batch_size * steps

verbose=2)

import matplotlib.pyplot as plt

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training accuracy')

plt.plot(epochs, val_acc, 'b', label='Validation accuracy')

plt.title('Training and validation accuracy')

plt.legend()  # show the labels on the accuracy plot too

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training Loss')

plt.plot(epochs, val_loss, 'b', label='Validation Loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

The next version keeps the image augmentation and makes one more change to the model: a Dropout(0.5) layer is added after the last pooling layer.

!wget --no-check-certificate \

https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip \

-O /tmp/cats_and_dogs_filtered.zip

import os

import zipfile

import tensorflow as tf

from tensorflow.keras.optimizers import RMSprop

from tensorflow.keras.preprocessing.image import ImageDataGenerator

local_zip = '/tmp/cats_and_dogs_filtered.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

zip_ref.extractall('/tmp')

zip_ref.close()

base_dir = '/tmp/cats_and_dogs_filtered'

train_dir = os.path.join(base_dir, 'train')

validation_dir = os.path.join(base_dir, 'validation')

# Directory with our training cat pictures

train_cats_dir = os.path.join(train_dir, 'cats')

# Directory with our training dog pictures

train_dogs_dir = os.path.join(train_dir, 'dogs')

# Directory with our validation cat pictures

validation_cats_dir = os.path.join(validation_dir, 'cats')

# Directory with our validation dog pictures

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

model = tf.keras.models.Sequential([

tf.keras.layers.Conv2D(32, (3,3), activation='relu', input_shape=(150, 150, 3)),

tf.keras.layers.MaxPooling2D(2, 2),

tf.keras.layers.Conv2D(64, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Conv2D(128, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Conv2D(128, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Dropout(0.5),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(512, activation='relu'),

tf.keras.layers.Dense(1, activation='sigmoid')

])

model.compile(loss='binary_crossentropy',

optimizer=RMSprop(learning_rate=1e-4),  # 'lr' is a deprecated alias

metrics=['accuracy'])

# This code has changed. Now instead of the ImageDataGenerator just rescaling

# the image, we also rotate and do other operations

# Updated to do image augmentation

train_datagen = ImageDataGenerator(

rescale=1./255,

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

test_datagen = ImageDataGenerator(rescale=1./255)

# Flow training images in batches of 20 using train_datagen generator

train_generator = train_datagen.flow_from_directory(

train_dir, # This is the source directory for training images

target_size=(150, 150), # All images will be resized to 150x150

batch_size=20,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

# Flow validation images in batches of 20 using test_datagen generator

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

history = model.fit(

train_generator,

steps_per_epoch=100, # 2000 images = batch_size * steps

epochs=100,

validation_data=validation_generator,

validation_steps=50, # 1000 images = batch_size * steps

verbose=2)

import matplotlib.pyplot as plt

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training accuracy')

plt.plot(epochs, val_acc, 'b', label='Validation accuracy')

plt.title('Training and validation accuracy')

plt.legend()  # show the labels on the accuracy plot too

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training Loss')

plt.plot(epochs, val_loss, 'b', label='Validation Loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

References

https://www.youtube.com/watch?v=QWdYWwW6OAE