Contents

1, Disadvantages of traditional log collection

2, ELK collection system process

3, Build ELK system

4, Code

5, Verification effect
1, Disadvantages of traditional log collection

Most of us rely on logs to find out where a program fails, so that we can apply the right fix. In a cluster environment, however, an error may sit on any one of hundreds of servers, and checking each machine's log files one by one is impractical.

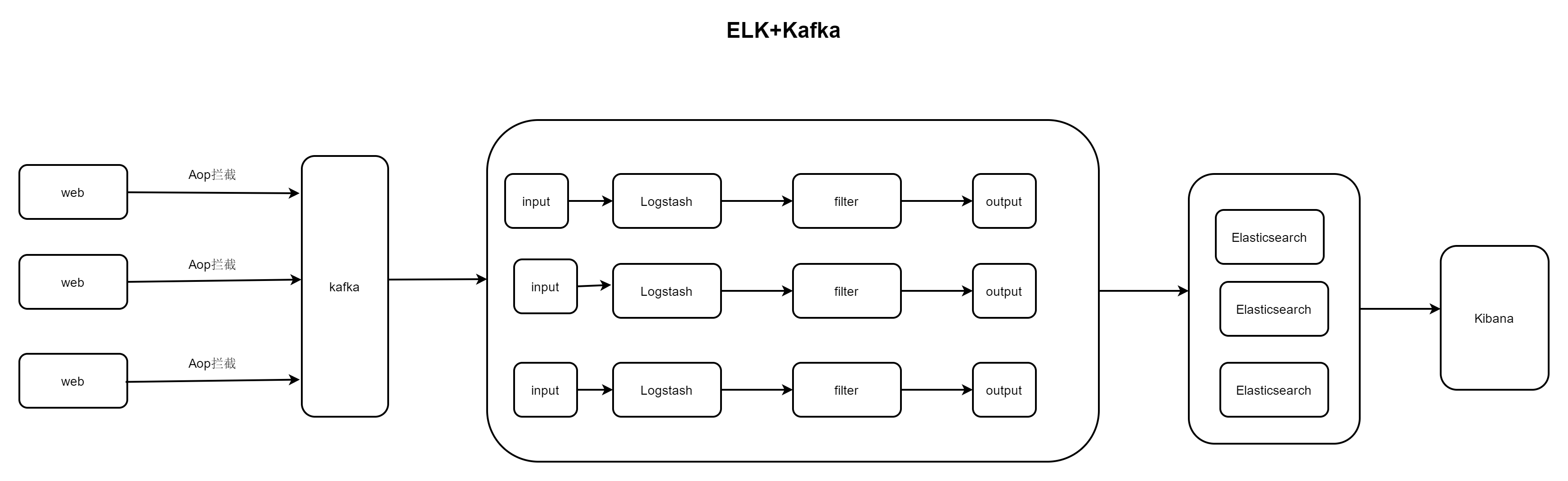

2, ELK collection system process

A distributed log collection system can be built on Elasticsearch, Logstash and Kibana (ELK). Kibana additionally provides a visual interface for analyzing the collected data.

An AOP aspect intercepts each request and hands the log record to an asynchronous thread, which sends it to Kafka (single machine or cluster). Logstash consumes the log messages from Kafka, filters them, and forwards them to Elasticsearch, where they can be searched and analyzed through the Kibana visual interface.
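The asynchronous hand-off described above can be sketched in plain Java without Spring or Kafka. This is only an illustration under stated assumptions: the class name AsyncLogBuffer is hypothetical, and the Consumer passed to the constructor stands in for the call that sends a record to Kafka.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

/** Hypothetical stand-in for the aspect -> queue -> Kafka hand-off. */
final class AsyncLogBuffer {
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();
    private final Thread worker;

    /** @param sink receives each record on the worker thread (assumed to wrap the Kafka send) */
    AsyncLogBuffer(Consumer<String> sink) {
        worker = new Thread(() -> {
            try {
                while (true) {
                    // take() blocks until a record is available, so the loop does not spin
                    sink.accept(queue.take());
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // stop() was called: leave the loop
            }
        }, "log-sender");
        worker.setDaemon(true);
        worker.start();
    }

    /** Called on the request thread; returns immediately. */
    void put(String record) {
        queue.offer(record);
    }

    void stop() {
        worker.interrupt();
    }

    public static void main(String[] args) throws InterruptedException {
        List<String> sent = new CopyOnWriteArrayList<>();
        AsyncLogBuffer buffer = new AsyncLogBuffer(sent::add);
        buffer.put("{\"url\":\"/demo\"}");
        Thread.sleep(200); // give the worker time to drain the queue
        System.out.println(sent);
        buffer.stop();
    }
}
```

The request thread only pays for an in-memory enqueue, so a slow or unavailable broker does not delay the response. The trade-off is that records still in the queue are lost if the process exits.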

3, Build ELK system

The following posts on the Familiar snail blog (CSDN) cover each component in detail.

Zookeeper setup: Build zookeeper 3.7.0 cluster (traditional & docker)

Kafka setup: Kafka cluster installation (traditional mode & docker mode) & springboot integration Kafka

ElasticSearch setup:

Kibana setup: Kibana installation & integration ElasticSearch

Logstash setup: Installation and use of LogStash (traditional & docker)

This article demonstrates a docker compose setup in which every component runs as a single node. For clusters, please refer to the setup posts listed above.

1. Install docker compose

# Download the docker-compose binary
sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
# Make it executable
sudo chmod +x /usr/local/bin/docker-compose

2. Create directory

mkdir -p /usr/local/docker-compose/elk

3. Create the docker-compose.yml file in the above directory

version: '2'
services:
  zookeeper:
    image: zookeeper:latest
    container_name: zookeeper
    ports:
      - "2181:2181"
  kafka:
    image: wurstmeister/kafka:latest
    container_name: kafka
    volumes:
      - /etc/localtime:/etc/localtime
    ports:
      - "9092:9092"
    environment:
      KAFKA_ADVERTISED_HOST_NAME: 192.168.139.160
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_ADVERTISED_PORT: 9092
      KAFKA_LOG_RETENTION_HOURS: 120
      KAFKA_MESSAGE_MAX_BYTES: 10000000
      KAFKA_REPLICA_FETCH_MAX_BYTES: 10000000
      KAFKA_GROUP_MAX_SESSION_TIMEOUT_MS: 60000
      KAFKA_NUM_PARTITIONS: 3
      KAFKA_DELETE_RETENTION_MS: 1000
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.15.2
    restart: always
    container_name: elasticsearch
    environment:
      - discovery.type=single-node # Single-node startup; not suitable for production
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ports:
      - 9200:9200
  kibana:
    image: docker.elastic.co/kibana/kibana:7.15.2
    restart: always
    container_name: kibana
    ports:
      - 5601:5601
    environment:
      # The Kibana 7.x image reads ELASTICSEARCH_HOSTS (ELASTICSEARCH_URL was the 6.x name)
      - ELASTICSEARCH_HOSTS=http://192.168.139.160:9200
    depends_on:
      - elasticsearch
  logstash:
    image: docker.elastic.co/logstash/logstash:7.15.2
    volumes:
      - /data/logstash/pipeline/:/usr/share/logstash/pipeline/
      - /data/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml
      - /data/logstash/config/pipelines.yml:/usr/share/logstash/config/pipelines.yml
    restart: always
    container_name: logstash
    ports:
      - 9600:9600
    depends_on:
      - elasticsearch

4. Start

# Enter the directory that contains docker-compose.yml and start the stack
[root@localhost elk]# docker-compose up
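Once the stack is up, one way to confirm that each container is reachable is to try a TCP connection to the ports published in docker-compose.yml. The sketch below is only an illustration: the class name ElkPortCheck and the default host localhost are assumptions, and an open port shows that something is listening, not that the service is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: checks whether the ports published by the compose file accept connections. */
final class ElkPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or host unreachable
        }
    }

    public static void main(String[] args) {
        // Pass the docker host as the first argument; defaults to localhost (an assumption)
        String host = args.length > 0 ? args[0] : "localhost";

        // Ports taken from the "ports" entries of the compose file above
        Map<String, Integer> services = new LinkedHashMap<>();
        services.put("zookeeper", 2181);
        services.put("kafka", 9092);
        services.put("elasticsearch", 9200);
        services.put("kibana", 5601);
        services.put("logstash", 9600);

        services.forEach((name, port) ->
                System.out.printf("%-14s %5d  %s%n", name, port,
                        isListening(host, port, 1000) ? "listening" : "not reachable"));
    }
}
```

Elasticsearch and Kibana can take a minute or more to start, so a port that is not reachable right after docker-compose up may simply need more time.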

4, Code
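The classes in this section pass around a RequestPojo from com.xiaojie.elk.pojo, which is not listed in this article. A minimal sketch of what it might look like is given here; the fields are only inferred from the setters that the aspect calls, so the real class in the repository may differ.

```java
/**
 * Hypothetical reconstruction of the log record filled in by the aspect.
 * Field names are inferred from the setter calls in AopLogAspect; in a real
 * project this would be a public class in its own file under com.xiaojie.elk.pojo.
 */
class RequestPojo {
    private String requestTime; // formatted as yyyy-MM-dd HH:mm:ss
    private String url;         // full request URL
    private String method;      // HTTP method, e.g. GET
    private String signature;   // signature of the intercepted service method
    private String args;        // Arrays.toString of the method arguments
    private String address;     // client IP plus ":" plus server port
    private String error;       // exception text; only set when the method throws

    public String getRequestTime() { return requestTime; }
    public void setRequestTime(String requestTime) { this.requestTime = requestTime; }

    public String getUrl() { return url; }
    public void setUrl(String url) { this.url = url; }

    public String getMethod() { return method; }
    public void setMethod(String method) { this.method = method; }

    public String getSignature() { return signature; }
    public void setSignature(String signature) { this.signature = signature; }

    public String getArgs() { return args; }
    public void setArgs(String args) { this.args = args; }

    public String getAddress() { return address; }
    public void setAddress(String address) { this.address = address; }

    public String getError() { return error; }
    public void setError(String error) { this.error = error; }
}
```

Keeping every field a String matches how the aspect uses the object: each value is already formatted before it is set, and the whole object is serialized to JSON in one call.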

Aspect class

package com.xiaojie.elk.aop;

import com.alibaba.fastjson.JSONObject;
import com.xiaojie.elk.pojo.RequestPojo;
import org.aspectj.lang.JoinPoint;
import org.aspectj.lang.annotation.*;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Component;
import org.springframework.web.context.request.RequestContextHolder;
import org.springframework.web.context.request.ServletRequestAttributes;

import javax.servlet.http.HttpServletRequest;
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.text.SimpleDateFormat;
import java.util.Arrays;
import java.util.Date;

/**
 * @author xiaojie
 * @version 1.0
 * @description: Log aspect class
 * @date 2021/12/5 16:51
 */
@Aspect
@Component
public class AopLogAspect {

    @Value("${server.port}")
    private String serverPort;

    @Autowired
    private KafkaTemplate<String, Object> kafkaTemplate;

    // Pointcut: every method of every class in the service package
    @Pointcut("execution(* com.xiaojie.elk.service.*.*(..))")
    private void serviceAspect() {
    }

    @Autowired
    private LogContainer logContainer;

    // Record the request details before the target method runs
    @Before(value = "serviceAspect()")
    public void methodBefore(JoinPoint joinPoint) {
        ServletRequestAttributes requestAttributes = (ServletRequestAttributes) RequestContextHolder
                .getRequestAttributes();
        HttpServletRequest request = requestAttributes.getRequest();
        RequestPojo requestPojo = new RequestPojo();
        SimpleDateFormat df = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // Date format
        requestPojo.setRequestTime(df.format(new Date()));
        requestPojo.setUrl(request.getRequestURL().toString());
        requestPojo.setMethod(request.getMethod());
        requestPojo.setSignature(joinPoint.getSignature().toString());
        requestPojo.setArgs(Arrays.toString(joinPoint.getArgs()));
        // Client IP address plus the port of this server
        requestPojo.setAddress(getIpAddr(request) + ":" + serverPort);
        // Queue the log record; LogContainer's background thread sends it to Kafka
        String log = JSONObject.toJSONString(requestPojo);
        logContainer.put(log);
    }

    // Print the returned content after the method execution is completed
    /* @AfterReturning(returning = "o", pointcut = "serviceAspect()")
    public void methodAfterReturing(Object o) {
        ServletRequestAttributes requestAttributes = (ServletRequestAttributes) RequestContextHolder
                .getRequestAttributes();
        HttpServletRequest request = requestAttributes.getRequest();
        JSONObject respJSONObject = new JSONObject();
        JSONObject jsonObject = new JSONObject();
        SimpleDateFormat df = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");// Format date
        jsonObject.put("response_time", df.format(new Date()));
        jsonObject.put("response_content", JSONObject.toJSONString(o));
        // IP Address information
        jsonObject.put("ip_addres", getIpAddr(request) + ":" + serverPort);
        respJSONObject.put("response", jsonObject);
        logContainer.put(respJSONObject.toJSONString());
    }*/

    /**
     * Exception notification: records the request together with the thrown exception.
     *
     * @param joinPoint the intercepted service method
     * @param e         the exception thrown by that method
     */
    @AfterThrowing(pointcut = "serviceAspect()", throwing = "e")
    public void methodAfterThrowing(JoinPoint joinPoint, Exception e) {
        ServletRequestAttributes requestAttributes = (ServletRequestAttributes) RequestContextHolder
                .getRequestAttributes();
        HttpServletRequest request = requestAttributes.getRequest();
        SimpleDateFormat df = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // Date format
        RequestPojo requestPojo = new RequestPojo();
        requestPojo.setRequestTime(df.format(new Date()));
        requestPojo.setUrl(request.getRequestURL().toString());
        requestPojo.setMethod(request.getMethod());
        requestPojo.setSignature(joinPoint.getSignature().toString());
        requestPojo.setArgs(Arrays.toString(joinPoint.getArgs()));
        // Client IP address plus the port of this server
        requestPojo.setAddress(getIpAddr(request) + ":" + serverPort);
        requestPojo.setError(e.toString());
        // Queue the log record; LogContainer's background thread sends it to Kafka
        String log = JSONObject.toJSONString(requestPojo);
        logContainer.put(log);
    }

    public static String getIpAddr(HttpServletRequest request) {
        // X-Forwarded-For (XFF) is an HTTP request header that identifies the original IP address
        // of a client connecting to the web server through an HTTP proxy or load balancer.
        String ipAddress = request.getHeader("x-forwarded-for");
        if (ipAddress == null || ipAddress.length() == 0 || "unknown".equalsIgnoreCase(ipAddress)) {
            ipAddress = request.getHeader("Proxy-Client-IP");
        }
        if (ipAddress == null || ipAddress.length() == 0 || "unknown".equalsIgnoreCase(ipAddress)) {
            ipAddress = request.getHeader("WL-Proxy-Client-IP");
        }
        if (ipAddress == null || ipAddress.length() == 0 || "unknown".equalsIgnoreCase(ipAddress)) {
            ipAddress = request.getRemoteAddr();
            if (ipAddress.equals("127.0.0.1") || ipAddress.equals("0:0:0:0:0:0:0:1")) {
                // Loopback address: use the IP configured for this machine instead.
                // If the lookup fails, keep the loopback address rather than hitting a null reference.
                try {
                    ipAddress = InetAddress.getLocalHost().getHostAddress();
                } catch (UnknownHostException e) {
                    e.printStackTrace();
                }
            }
        }
        // With multiple proxies the header holds a comma-separated list; the first entry is the client's real IP
        if (ipAddress != null && ipAddress.length() > 15) { // "***.***.***.***".length() = 15
            if (ipAddress.indexOf(",") > 0) {
                ipAddress = ipAddress.substring(0, ipAddress.indexOf(","));
            }
        }
        return ipAddress;
    }
}

Asynchronous thread

package com.xiaojie.elk.aop;

import org.apache.commons.lang3.StringUtils;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.stereotype.Component;

import java.util.concurrent.BlockingDeque;

import java.util.concurrent.LinkedBlockingDeque;

/**

* @author xiaojie

* @version 1.0

* @description: Enable asynchronous thread to send log

* @date 2021/12/5 16:50

*/

@Component

public class LogContainer {

private static BlockingDeque<String> logDeque = new LinkedBlockingDeque<>();

@Autowired

private KafkaTemplate<String, Object> kafkaTemplate;

public LogContainer() {

// initialization

new LogThreadKafka().start();

}

/**

* Log in

*

* @param log

*/

public void put(String log) {

logDeque.offer(log);

}

class LogThreadKafka extends Thread {

@Override

public void run() {

while (true) {

String log = logDeque.poll();

if (!StringUtils.isEmpty(log)) {

// Post message to kafka

kafkaTemplate.send("kafka-log", log);

}

}

}

}

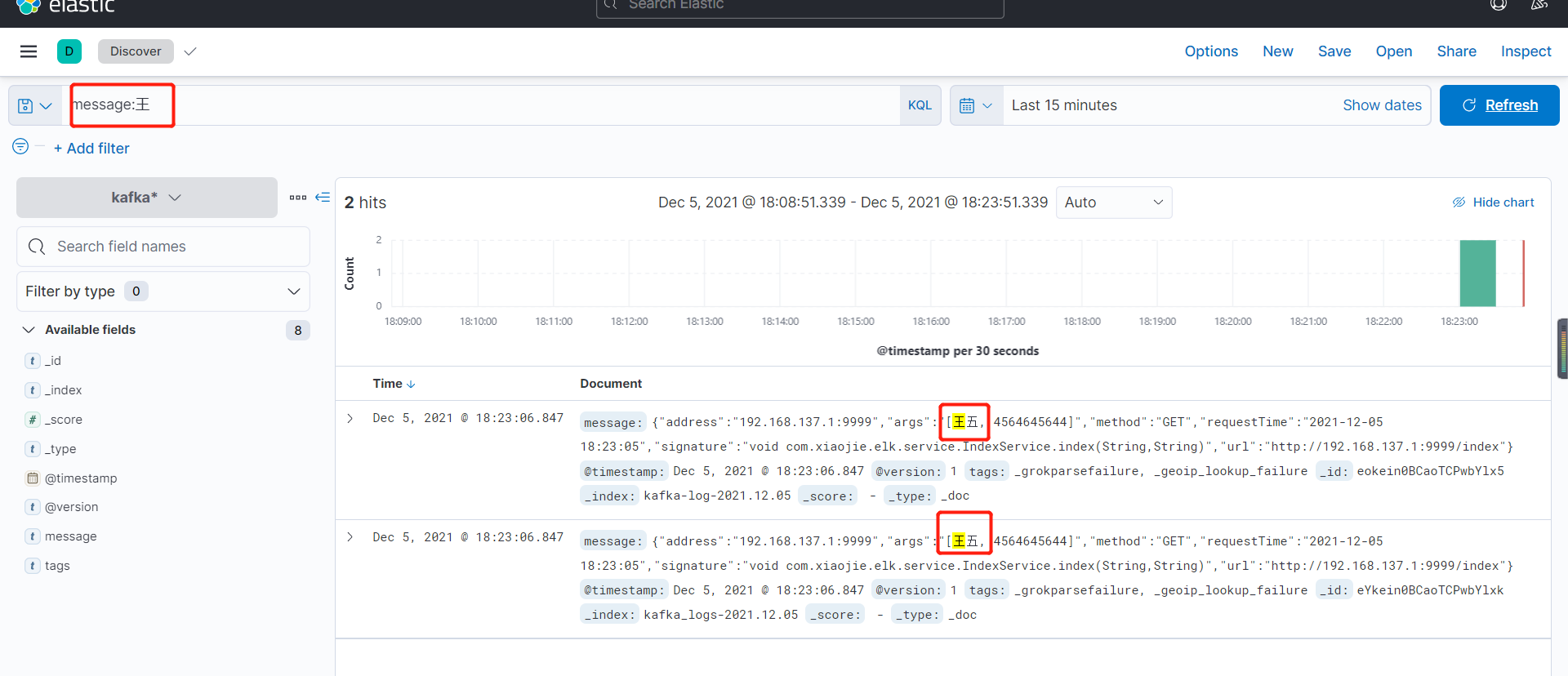

}5, Verification effect

Full code: see the elk module of the Spring boot repository (spring boot integration with redis, message-oriented middleware and other related code).