1 getting started with elasticsearch

Elasticsearch is an open source search engine based on Apache Lucene. Whether in open source or proprietary domain, Lucene can be regarded as the most advanced, best performing and most powerful search engine library so far. However, Lucene is just a library. If you want to use it, you must use Java as the development language and integrate it directly into your application. What's worse, Lucene is very complex. You need to deeply understand the relevant knowledge of retrieval to understand how it works.

Elasticsearch also uses Java to develop and use Lucene as its core to realize all indexing and search functions, but its purpose is to hide the complexity of Lucene through a simple RESTful API, so as to make full-text search simple.

1.1 Elasticsearch installation

1.1 downloading software

Elasticsearch's official address: Elastic

Download address: https://www.elastic.co/cn/downloads/past-releases#elasticsearch

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-r95dln0c-1637994003567)( https://i.loli.net/2021/11/23/SkNfEl75oTJXFAv.png )]

Select the version of Elasticsearch as Windows.

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-wexu9p1f-1637994003571)( https://i.loli.net/2021/11/27/zidIg8xhqcWLyYw.png )]

1.2 installing software

The installation is completed after decompression. The directory structure of Elasticsearch after decompression is as follows:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-ug83bqla-1637994003573)( https://i.loli.net/2021/11/23/kDyaBsmVzvblx9N.png )]

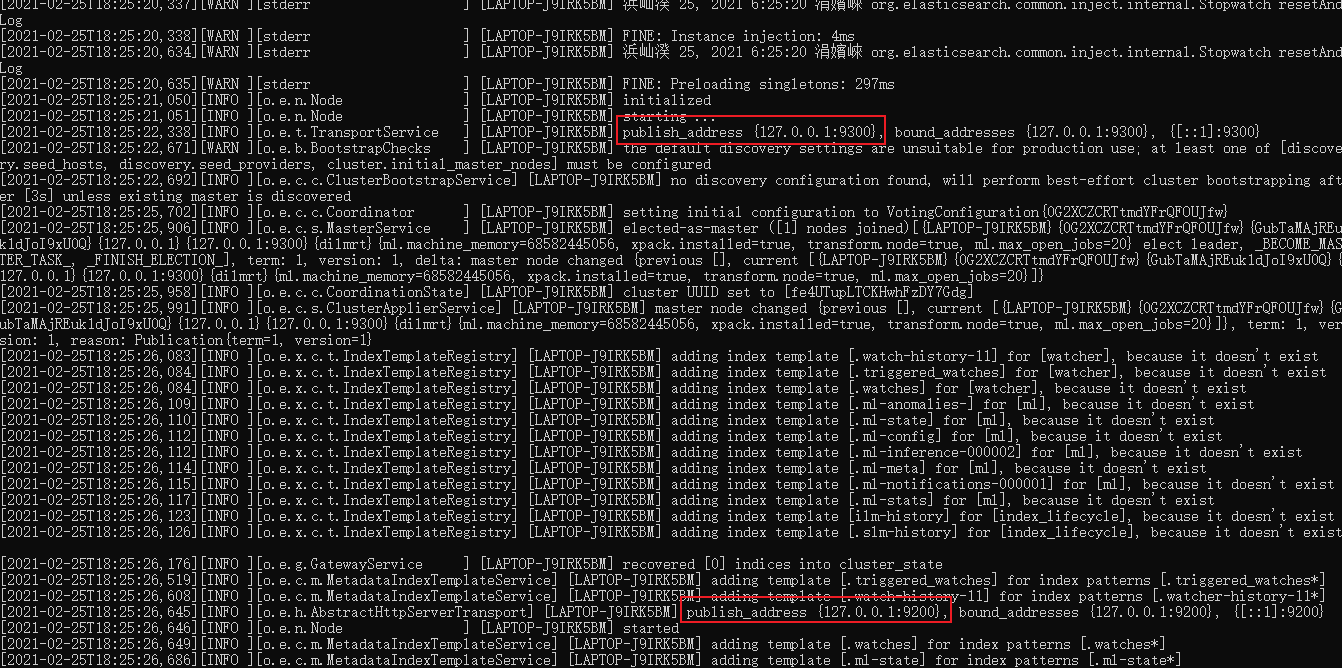

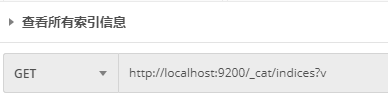

After decompression, enter the bin file directory and click elasticsearch.bat to start the ES service:

Note: Port 9300 is the communication port of Elasticsearch cluster components, and port 9200 is the http protocol RESTful port accessed by the browser.

Open with browser: http://localhost:9200/ , the following content appears, and the ES installation is started.

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-7muz0lhl-1637994003578)( https://i.loli.net/2021/11/23/9FaEzUToSPQ5jkO.png )]

1.2 basic operation

Elastic search is a document oriented database, where a piece of data is a document. For understanding, the concept of document data stored in Elasticsearch and data stored in relational database MySQL can be compared:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-sq1chqlt-1637994003579)( https://i.loli.net/2021/11/23/lcwHNpTLhKJXOdW.png )]

The index in ES can be regarded as a library, while Types is equivalent to a table and Documents is equivalent to rows of a table. The concept of Types has been gradually weakened with the version update. In Elasticsearch 6.X, an index can only contain one Type. In Elasticsearch 7.X, the concept of Type has been deleted.

1.2.1 index operation

1.2.1.1 create index

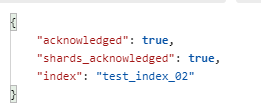

Create an index and send a request to the server using PUT: http://localhost:9200/test_index_02,test_ index_ 02 is the corresponding index name:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-csjj4kjc-1637994003580)( https://i.loli.net/2021/11/23/xpe1c9kMulvO75F.png )]

After the request, the server returns a response:

Return result Field Description:

{

"acknowledged"[Response results]: true, // true operation succeeded

"shards_acknowledged"[[segmentation results]: true, // Slicing operation succeeded

"index"[[index name]: "test_index_02"

}

// Note: the default number of slices for creating an index library is 1. In elastic search before 7.0.0, the default number is 5

If the index is added repeatedly, an error message will be returned:

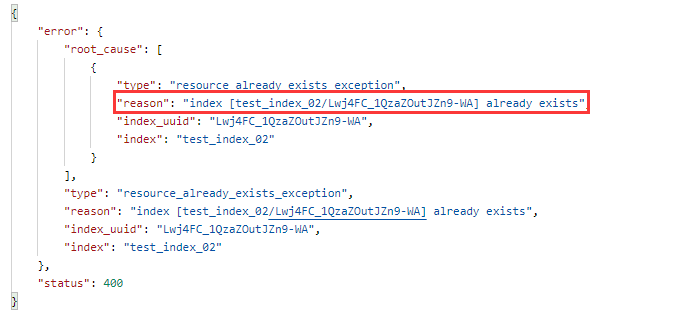

1.2.1.2 view all indexes created

Send GET request to ES server: http://localhost:9200/_cat/indices?v

In the above request path_ cat indicates view, indexes indicates index, and the server response results are as follows:

[external link picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-8lug1dks-1637994003584)( https://i.loli.net/2021/11/23/h6GEbUtfvNQ8Bk3.png )]

Return result Field Description:

| Header | meaning |

|---|---|

| health | Current server health status: green, yellow, red |

| status | Index open and close status |

| index | Index name |

| uuid | Indexes are numbered uniformly and generated automatically by the server |

| pri | Number of main segments |

| rep | Number of copies |

| docs.count | Number of documents available |

| docs.deleted | Number of documents deleted (logical deletion) |

| store.size | Overall space occupied by main partition and sub partition |

| pri.store.size | Space occupied by main partition |

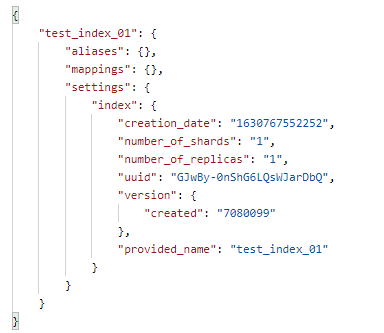

1.2.1.3 view the created specified index

Send GET request to ES server: http://localhost:9200/test_index_01,test_ index_ 01 is the index name:

[the external chain picture transfer fails. The source station may have an anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-EE2aXEaO-1637994003584)(Elasticsearch/image-20210904231348533.png)]

After the request, the server returns a response:

Return result Field Description:

{

"test_index_01"[[index name]: {

"aliases"[Alias]: {},

"mappings"[Mapping]: {},

"settings"[[settings]: {

"index": [set up-[index]{

"creation_date"[set up-Indexes-[creation time]: "1630767552252",

"number_of_shards"[set up-Indexes-[number of main segments]: "1",

"number_of_replicas"[set up-Indexes-Number of sub segments]: "1",

"uuid"[set up-Indexes-[unique identification]: "GJwBy-0nShG6LQsWJarDbQ",

"version"[set up-Indexes-[version number]: {

"created": "7080099"

},

"provided_name"[set up-Indexes-[name]: "test_index_01"

}

}

}

}

1.2.1.4 viewing the total number of indexed documents

Send GET request to ES server: http://localhost:9200/movies/_count, movies is the index name_ count counts the number of documents.

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-gmcsjdwr-1637994003586)( https://i.loli.net/2021/11/23/6r8nm4EILNKqvRh.png )]

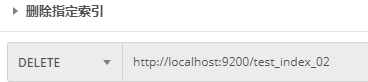

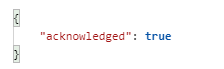

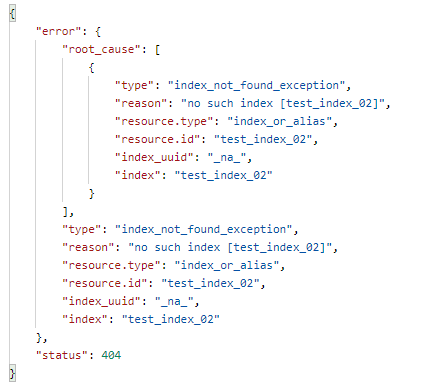

1.2.1.5 delete specified index

Send DELETE request to ES server: http://localhost:9200/test_index_02,test_ index_ 02 is the index name:

Server response result:

After deleting the index and accessing the index again, the server will return a response: the index does not exist.

1.2.2 document operation

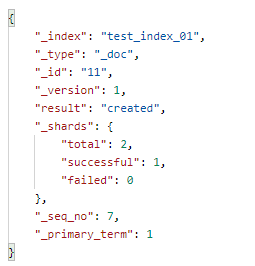

1.2.2.1 create document

After the index is created, create a document on the index and add data.

Send POST request to ES server: http://localhost:9200/test_index_01/_doc. The content of the request body is:

{

"brand": "millet",

"model": "MIX4",

"images": "https://cdn.cnbj1.fds.api.mi-img.com/product-images/mix4/specs_m.png",

"price": 3999.00,

"stock": 1000

}

[the external chain picture transfer fails. The source station may have an anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-8urcczau-1637994003590)( https://i.loli.net/2021/11/23/n87MuQAijoO9JH1.png )]

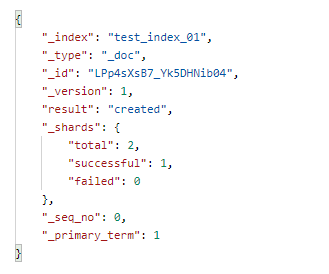

The server returned the response result:

Return result Field Description:

{

"_index"[[index name]: "test_index_01",

"_type"[type-[document]: "_doc",

"_id"[[unique identification]: "LPp4sXsB7_Yk5DHNib04", // Similar to primary key, randomly generated

"_version"[[version number]: 1,

"result"[Results]: "created", // created: indicates that the creation was successful, and updated: indicates that the update was successful

"_shards"[[slice]: {

"total"[Slice-Total]: 2,

"successful"[Slice-[success]: 1,

"failed"[Slice-Failed]: 0

},

"_seq_no"[[incremental serial number]: 0,

"_primary_term": 1

}

After the above document is created successfully, by default, the ES server will randomly generate a unique ID.

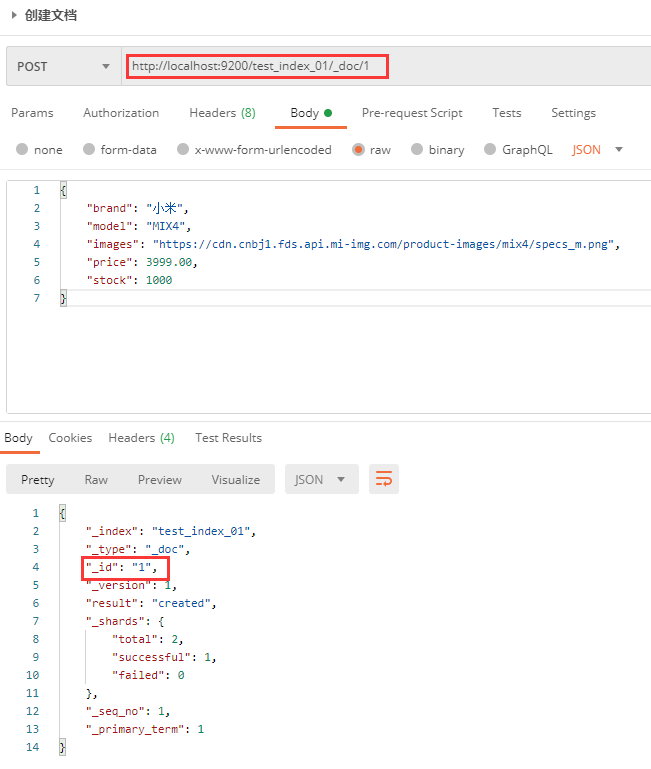

You can also specify a unique ID when creating a document: http://localhost:9200/test_index_01/_doc/1, 1 is the specified unique ID.

If the document is added with a clear and unique identification, the request method can also be PUT.

1.2.2.2 viewing documents

When viewing a document, you need to specify the unique ID of the document, which is similar to the primary key query of data in MySQL. Send GET request to ES server: http://localhost:9200/test_index_01/_doc/1:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-sfx5gb5l-1637994003592)( https://i.loli.net/2021/11/23/1gx87hI5sW4Lykm.png )]

The query is successful, and the server returns the following results:

Return result Field Description:

{

"_index"[[index name]: "test_index_01",

"_type"[type-[document]: "_doc",

"_id"[[unique document ID]: "1",

"_version"[[version number]: 5,

"_seq_no"[[incremental serial number]: 6,

"_primary_term": 1,

"found"[[query results]: true, // true: indicates found, false: indicates not found

"_source"[[document source information]: {

"brand": "millet",

"model": "MIX4",

"images": "https://cdn.cnbj1.fds.api.mi-img.com/product-images/mix4/specs_m.png",

"price": 3999.00,

"stock": 1001

}

}

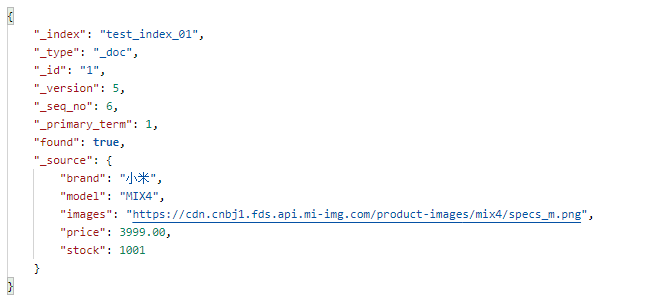

1.2.2.3 modifying documents

Modifying a document is the same as creating a document, and the request path is the same. If the request body changes, the original data content will be overwritten and updated. The content of the request body is:

{

"brand": "Huawei",

"model": "P50",

"images": "https://res.vmallres.com/pimages//product/6941487233519/78_78_C409A15DAE69B8B4E4A504FBDF5AB6FEB2C8F5868A7C84C4mp.png",

"price": 7488.00,

"stock": 100

}

The modification is successful, and the server returns the following results:

Return result Field Description:

{

"_index"[[index]: "test_index_01",

"_type"[type-[document]: "_doc",

"_id"[[unique identification]: "1",

"_version"[[version]: 6,

"result"[Results]: "updated",

"_shards"[[slice]: {

"total"[Total number of slices]: 2,

"successful"[[slicing succeeded]: 1,

"failed"[[fragmentation failed]: 0

},

"_seq_no": 8,

"_primary_term": 1

}

1.2.2.4 update of some documents

The update API also supports the update of some documents.

Send POST request to ES server: http://localhost:9200/test_index_01/_update/1,_ Update means update. The request body is:

{

"doc":{

"stock": 123

}

}

After the modification is successful, the response result is returned:

[the external chain picture transfer fails. The source station may have an anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-xxwex7rg-1637994003596)( https://i.loli.net/2021/11/23/OexFtoATLyQJmp9.png )]

Note: if the field to be modified does not exist in the source data, it will be added to the source data.

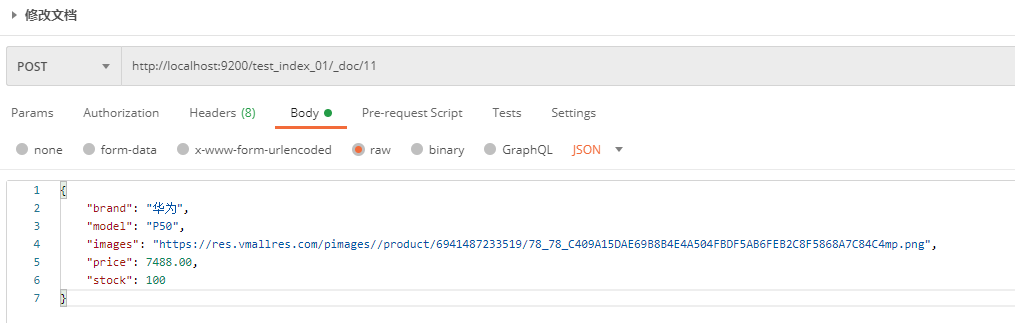

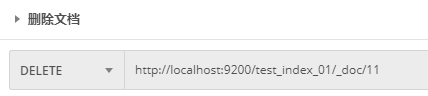

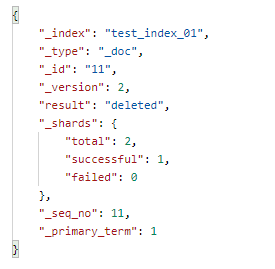

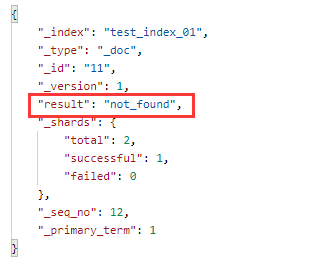

1.2.2.5 deleting documents

When a document is deleted, it will not be removed from the disk immediately, but will be marked as deleted (logical deletion).

Send DELETE request to ES server: http://localhost:9200/test_index_01/_doc/11

After the deletion is successful, the server returns the response result:

Return result Field Description:

{

"_index"[[index]: "test_index_01",

"_type"[type-[document]: "_doc",

"_id"[[unique identification]: "11",

"_version"[[version number]: 2,

"result"[Results]: "deleted", // Deleted: deleted successfully

"_shards"[[slice]: {

"total"[Total number of slices]: 2,

"successful"[[slicing succeeded]: 1,

"failed"[[fragmentation failed]: 0

},

"_seq_no": 11,

"_primary_term": 1

}

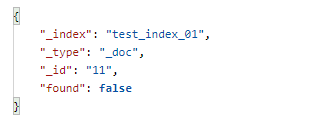

To view a document after deleting it:

Delete a deleted document or a document that does not exist:

1.2.2.6 delete documents according to conditions

In addition to deleting according to the unique identification of the document, you can also delete the document according to conditions.

Send POST request to ES server: http://localhost:9200/test_index_01/_delete_by_query, the request body is:

{

"query": {

"match": {

"price": 1999.00

}

}

}

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-ij26eg5h-1637994003600)( https://i.loli.net/2021/11/27/w2IXpFHQtTCZ3lh.png )]

After the deletion is successful, the server returns the response result:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-xydvdfkt-1637994003601)( https://i.loli.net/2021/11/23/TNimV93j7PRrDQE.png )]

Return result Field Description:

{

"took"[[time consuming]: 626,

"timed_out"[[request timeout]: false,

"total"[Total number of documents]: 1,

"deleted"[Number of deleted documents]: 1,

"batches": 1,

"version_conflicts": 0,

"noops": 0,

"retries": {

"bulk": 0,

"search": 0

},

"throttled_millis": 0,

"requests_per_second": -1.0,

"throttled_until_millis": 0,

"failures": []

}

1.2.3 mapping operation

1.2.3.1 create mapping

Send PUT request to ES server: http://localhost:9200/test_index_01/_mapping. Request body:

{

"properties": {

"brand": {

"type": "text",

"index": true

},

"price": {

"type": "float",

"index": true

},

"stock": {

"type": "long",

"index": true

}

}

}

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-kkfismnm-1637994003602)( https://i.loli.net/2021/11/23/TfLKP38ZydM2Ont.png )]

The map is created successfully, and the response is returned:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-u7ivzs5w-1637994003602)( https://i.loli.net/2021/11/23/htVTumbRj6Q98zy.png )]

Create mapping. Parameter Description:

| Field name | describe |

|---|---|

| properties | Create mapping Attribute Collection |

| brand | Attribute name, which can be filled in arbitrarily. Multiple attributes can be specified |

| type | Types. ES supports rich data types. Common are: 1. String can be divided into two types: text, which indicates separable words, and keyword, which indicates non separable words. The data will be matched as a complete field. 2. Numerical value types are divided into two categories Basic data types: long, integer, short, byte, double, float, half_float High precision floating point number: scaled_float 3. Date date type 4. Array array type 5. Object object |

| index | Whether to index. The default value is true. The field will be indexed and can be searched. |

| store | Whether to store data independently. The default value is false. The original text is stored in the_ In the store, by default, the fields are not stored independently, but from_ Extracted from store |

| analyzer | Word splitter, e.g. ik_max_word |

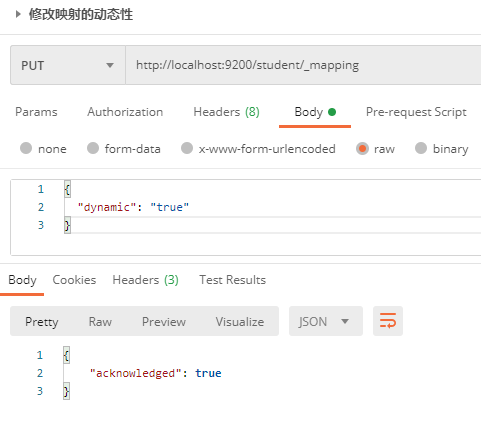

1.2.3.2 modify mapping

Once the mapping is created, the existing field types cannot be modified, but new fields can be added, and we can control the dynamic s of the mapping.

Send PUT request to ES server: http://localhost:9200/student/_mapping, the request body is as follows:

{

"dynamic": "true" // true: enable dynamic mode, false: enable static mode, strict: enable strict mode

}

1.2.3.3 view mapping

Send GET request to ES server: http://localhost:9200/test_index_01/_mapping

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (IMG dajviybj-1637994003604)( https://i.loli.net/2021/11/23/cytRxLEvY5s6CMJ.png )]

The server returned the response result:

{

"test_index_01": {

"mappings": {

"properties": {

"123": {

"type": "long"

},

"brand": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"images": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"model": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"price": {

"type": "float"

},

"qwewq": {

"type": "text"

},

"stock": {

"type": "long"

}

}

}

}

}

1.2.4 advanced query

Elasticsearch provides a complete query DSL based on JSON to define queries.

First create the index student, and then create the document:

// Create index

// POST http://localhost:9200/student

// create documents

// POST http://localhost:9200/student/_doc/10001

{

"name": "Huai Yong",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833125"

}

// POST http://localhost:9200/student/_doc/10002

{

"name": "Zhu Hao",

"sex": "male",

"age": 28,

"level": 6,

"phone": "15072833125"

}

// POST http://localhost:9200/student/_doc/10003

{

"name": "Vegetable head",

"sex": "male",

"age": 28,

"level": 5,

"phone": "178072833125"

}

// POST http://localhost:9200/student/_doc/10004

{

"name": "base",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833124"

}

// PSOT http://localhost:9200/student/_doc/10005

{

"name": "Zhang Ya",

"sex": "female",

"age": 26,

"level": 3,

"phone": "151833124"

}

1.2.4.1 query all documents

Send GET request to ES server: http://localhost:9200/student/_search. The request body is:

{

"query": {

"match_all":{}

}

}

// Query represents a query object, match_all means query all

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (IMG nwhtambv-1637994003605)( https://i.loli.net/2021/11/23/XfaYyOueg2EM64K.png )]

The response results returned by the server are as follows:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 5,

"relation": "eq"

},

"max_score": 1.0,

"hits": [

......

]

}

}

Description of the result field returned by the server

hits

The most important part of the response is hits, which contains the total field to represent the total number of matched documents. The hits array also contains the first 10 matched data.

Each result in the hits array contains_ index , _ type and document_ id field, and the document source data is added to_ In the source field, this means that all documents will be available directly in the search results.

Each node has one_ Score field, which is the correlation score, which measures the matching degree between the document and the query. By default, the most relevant documents in the returned results are ranked first; This means that it is in accordance with_ Scores are in descending order.

max_score refers to all documents in the matching query_ The maximum value of score.

Total represents the total number of documents matching the search criteria, where value represents the value of the total hit count and relation represents the value rule (eq count is accurate and gte count is inaccurate).

took

The number of milliseconds the entire search request took.

_shards

_ The shards node indicates the number of fragments participating in the query (total field), in which how many are successful (successful field) and how many are failed (failed field). If both the primary partition and the replica partition fail due to some major faults, the data of this partition will not be able to respond to the search request. In this case, Elasticsearch will report the fragment failed, but will continue to return the results on the remaining fragments.

timed_out

time_ The out value indicates whether the query times out or not. Generally, search requests do not time out. If the response speed is more important than the complete result, you can define the timeout parameter as 10 or 10ms (10ms), or 1s (1s), then Elasticsearch will return the results collected before the request times out. Send request to ES server: http://localhost:9200/student/_search?timeout=1ms,? Timeout = 1ms means that the node data that successfully returns the result within 1ms is returned.

Note: setting timeout will not stop the query execution. It only returns the node time when the query is successfully executed, and then closes the connection. In the background, other shards may still execute queries, even though the results have been sent.

{

"took"[Query time in milliseconds]: 1,

"timed_out"[Timeout]: false,

"_shards"[[slice information]: {

"total"[Total number of slices]: 1,

"successful[Number of successful slices]": 1,

"skipped"[[number of segments ignored]: 0,

"failed"[Failed fragments]: 0

},

"hits"[[search criteria hit result information]: {

"total"[Total number of documents matching search criteria]: {

"value"[[value of total hit count]: 5,

"relation"[Counting rules]: "eq" // eq: accurate count gte: inaccurate count

},

"max_score"[Matching score]: 1.0,

"hits"[Search criteria hit result set]: [

{

"_index[[index name]": "student",

"_type"[[type]: "_doc",

"_id"[file id]: "10001",

"_score"[Correlation score]: 1.3862942,

"_source": {

"name": "Huai Yong",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833125"

}

}

]

}

}

1.2.4.2 matching query

For a match type query, the query criteria will be segmented and then queried. The relationship between multiple terms is or.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"match": {

"name": "Huai Yong",

"operator": "and"

}

}

}

Server returned results:

{

"took": 2,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"max_score": 1.3862942,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10001",

"_score": 1.3862942,

"_source": {

"name": "Huai Yong",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833125"

}

}

]

}

}

1.2.4.3 multi field matching query

Match can only match one field. To match multiple fields, you have to use multi_match.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"multi_match": {

"query": 24,

"fields":["age", "phone"]

}

}

}

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (IMG okbcwunq-1637994003066)( https://i.loli.net/2021/11/23/d8GR3pkfac5rxno.png )]

The server returned the response result:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 2,

"relation": "eq"

},

"max_score": 1.0,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10001",

"_score": 1.0,

"_source": {

"name": "Huai Yong",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833125"

}

},

{

"_index": "student",

"_type": "_doc",

"_id": "10004",

"_score": 1.0,

"_source": {

"name": "base",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833124"

}

}

]

}

}

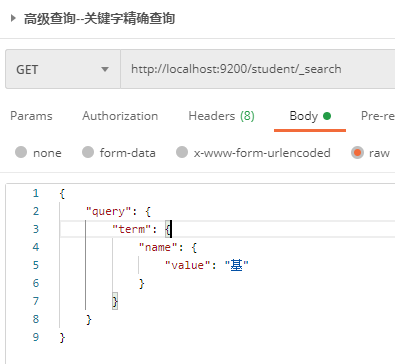

1.2.4.4 keyword accurate query

Use term query to accurately match keywords without word segmentation of query conditions.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"term": {

"name.keyword": { // term query, the query criteria will not be word segmentation, and the data can be correctly matched only by adding. keyword

"value": "base"

}

}

}

}

The server returned the response result:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"max_score": 1.3862942,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10004",

"_score": 1.3862942,

"_source": {

"name": "base",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833124"

}

}

]

}

}

1.2.4.5 multi keyword accurate query

terms has the same effect as term, but multiple keywords can be specified. The effect is similar to in query.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"terms": {

"name.keyword": ["base", "Huai Yong"]

}

}

}

The server returned the response result:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 2,

"relation": "eq"

},

"max_score": 1.0,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10001",

"_score": 1.0,

"_source": {

"name": "Huai Yong",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833125"

}

},

{

"_index": "student",

"_type": "_doc",

"_id": "10004",

"_score": 1.0,

"_source": {

"name": "base",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833124"

}

}

]

}

}

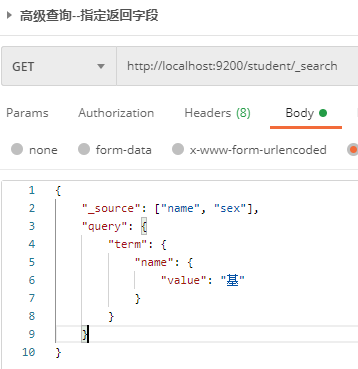

1.2.4.6 specify query fields

By default, ES saves documents in the search results_ All fields of source are returned. Can pass_ Source specifies the field to return.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"_source": ["name", "sex"],

"query": {

"term": {

"name": {

"value": "base"

}

}

}

}

The server returned the response result:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"max_score": 1.3862942,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10004",

"_score": 1.3862942,

"_source": {

"sex": "male",

"name": "base"

}

}

]

}

}

1.2.4.7 filter fields

You can also pass_ includes specifies the fields to display_ excludes specifies the fields you do not want to display.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"_source": {

"includes": ["name", "sex"]

},

"query": {

"term": {

"name": {

"value": "base"

}

}

}

}

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-vvnafwck-1637994003610)( https://i.loli.net/2021/11/23/7SxVWJHqlftijzg.png )]

The response result returned by the server is:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"max_score": 1.3862942,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10004",

"_score": 1.3862942,

"_source": {

"sex": "male",

"name": "base"

}

}

]

}

}

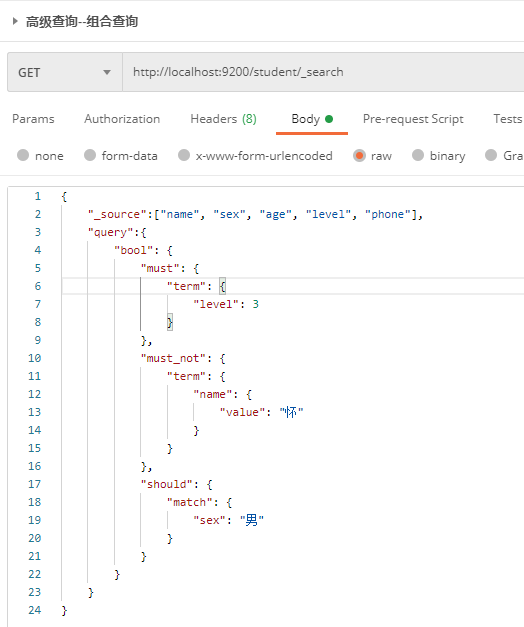

1.2.4.8 combined query

bool is a Boolean logic that can be used to merge query results of multiple filter conditions. It contains the following operators:

1. must: multiple query criteria are completely matched, which is equivalent to and, accounting relevance score;

2,must_not: the opposite matching of multiple query criteria, which is equivalent to not, and the correlation score will not be calculated;

3. should: at least one query condition matches, equivalent to or, accounting relevance score;

4. filter: equivalent to must, but the correlation is not calculated.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"_source":["name", "sex", "age", "level", "phone"],

"query":{

"bool": {

"must": {

"term": {

"level": 3

}

},

"must_not": {

"term": {

"name": {

"value": "Conceive"

}

}

},

"should": {

"match": {

"sex": "male"

}

}

}

}

}

The result returned by the server is:

{

"took": 2,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 2,

"relation": "eq"

},

"max_score": 1.287682,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10004",

"_score": 1.287682,

"_source": {

"level": 3,

"phone": "15071833124",

"sex": "male",

"name": "base",

"age": 24

}

},

{

"_index": "student",

"_type": "_doc",

"_id": "10005",

"_score": 1.0,

"_source": {

"level": 3,

"phone": "151833124",

"sex": "female",

"name": "Zhang Ya",

"age": 26

}

}

]

}

}

1.2.4.9 range query

Through range, you can find the number or time within the specified interval. Range supports the following characters:

| Operator | explain |

|---|---|

| gt | Greater than > |

| gte | Greater than or equal to |

| lt | Less than< |

| lte | Less than or equal to |

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"_source": ["name", "age", "level", "sex"],

"query": {

"bool": {

"must": [

{

"range": {

"age": {

"gt": 25,

"lt": 30

}

}

},

{

"match": {

"sex": "female"

}

}

]

}

}

}

The result returned by the server is:

{

"took": 2,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"max_score": 2.3862944,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10005",

"_score": 2.3862944,

"_source": {

"level": 3,

"sex": "female",

"name": "Zhang Ya",

"age": 26

}

}

]

}

}

1.2.4.10 fuzzy query

Returns a document containing words similar to the search term.

Edit distance is the number of character changes required to convert one word to another. These changes include:

1. Change characters (Box - > Fox)

2. Delete characters (black - > lack)

3. Insert character (SiC - > sick)

4. Transpose two adjacent characters (act - > cat)

In order to find similar terms, a fuzzy query creates all possible variants or extensions of a set of search terms within a specified editing distance. The query then returns an exact match for each extension. Modify and edit the distance through fuzziness. Generally, the default value AUTO is used to generate the editing distance according to the length of the term.

Send GET request to ES server: http://127.0.0.1:9200/student/_search, the request body is:

{

"query": {

"fuzzy": {

"name": {

"value": "base",

"fuzziness": 0

}

}

}

}

The server returned the response result:

{

"took": 2,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 4,

"relation": "eq"

},

"max_score": 0.72615415,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10004",

"_score": 0.72615415,

"_source": {

"name": "base",

"sex": "male",

"age": 24,

"level": 3,

"phone": "15071833124"

}

},

{

"_index": "student",

"_type": "_doc",

"_id": "10008",

"_score": 0.72615415,

"_source": {

"name": "foundation",

"sex": "male",

"age": 56,

"level": 7,

"phone": "15071833124"

}

},

{

"_index": "student",

"_type": "_doc",

"_id": "10006",

"_score": 0.60996956,

"_source": {

"name": "Base 1",

"sex": "male",

"age": 21,

"level": 4,

"phone": "15071833124"

}

},

{

"_index": "student",

"_type": "_doc",

"_id": "10007",

"_score": 0.60996956,

"_source": {

"name": "1 base",

"sex": "1 male",

"age": 25,

"level": 4,

"phone": "15071833124"

}

}

]

}

}

1.2.4.11 single field sorting

sort can be sorted according to different fields, and the sorting method is specified through order: desc descending and asc ascending.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"bool": {

"must": {

"match": {

"name": "base"

}

},

"must_not": {

"range": {

"level": {

"gte": 1,

"lte": 3

}

}

}

}

},

"sort": [

{

"age": {

"order": "desc"

}

}

]

}

The server returned the response result:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 3,

"relation": "eq"

},

"max_score": null,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10008",

"_score": null,

"_source": {

"name": "foundation",

"sex": "male",

"age": 56,

"level": 7,

"phone": "15071833124"

},

"sort": [

56

]

},

{

"_index": "student",

"_type": "_doc",

"_id": "10007",

"_score": null,

"_source": {

"name": "1 base",

"sex": "1 male",

"age": 25,

"level": 4,

"phone": "15071833124"

},

"sort": [

25

]

},

{

"_index": "student",

"_type": "_doc",

"_id": "10006",

"_score": null,

"_source": {

"name": "Base 1",

"sex": "male",

"age": 21,

"level": 4,

"phone": "15071833124"

},

"sort": [

21

]

}

]

}

}

Observe the returned results and find:

1,_ Score and Max_ The correlation calculation is not performed for the score field because of the calculation_ Score is used to compare performance consumption and is usually mainly used for sorting. When sorting is not performed by correlation, it is not necessary to count its correlation. If you want to force the correlation calculation, you can set track_scores is true. For example, send a GET request to the ES server: http://localhost:9200/student/_search?track_scores=true;

2. Each returned result in hits array has a sort field, which contains values for sorting.

Note: sorting is based on the original content of the field, and the inverted index does not work. You can use fielddata and DOC in ES_ Values.

1.2.4.12 multi field sorting

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"bool": {

"must_not": {

"match": {

"name": "Conceive"

}

},

"must": {

"range": {

"age": {

"gte": 56,

"lte": 56

}

}

}

}

},

"sort": [

{

"age": {

"order": "desc"

}

},

{

"level": {

"order": "asc"

}

}

]

}

The result set is sorted by the first sorting field. When the values used for sorting the first field are the same, then the second field is used to sort the documents with the same first sorting value, and so on.

1.2.4.13 highlight query

ES supports setting the label and style of the keyword part of the query content through highlight.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"match": {

"name": "Zhu"

}

},

"highlight": {

"pre_tags"[[front label]: "<font color = 'red'>",

"post_tags"[[post label]: "</font>",

"fields"[[fields to highlight]: {

"name"[[field name]: {}

}

}

}

Server returned results:

{

"took": 2,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"max_score": 2.1382177,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10002",

"_score": 2.1382177,

"_source": {

"name": "Zhu Hao",

"sex": "male",

"age": 28,

"level": 6,

"phone": "15072833125"

},

"highlight": {

"name": [

"<font color = 'red'>Zhu</font>Vast"

]

}

}

]

}

}

1.2.4.15 paging query

ES supports paging queries. Set the size of the current page through size, and set the starting index of the current page from 0 by default. Calculation rules:

f

r

o

m

=

(

p

a

g

e

N

u

m

−

1

)

∗

p

a

g

e

S

i

z

e

from = (pageNum - 1) * pageSize

from=(pageNum−1)∗pageSize

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"match": {

"name": "base"

}

},

"sort": [

{

"age": {

"order": "asc"

}

}

],

"from": 0,

"size": 1

}

Server returned results:

{

"took": 2,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 5,

"relation": "eq"

},

"max_score": null,

"hits": [

{

"_index": "student",

"_type": "_doc",

"_id": "10006",

"_score": null,

"_source": {

"name": "Base 1",

"sex": "male",

"age": 21,

"level": 4,

"phone": "15071833124"

},

"sort": [

21

]

}

]

}

}

1.2.4.16 aggregate query

ES can perform statistical analysis on documents through aggregation, similar to group by, max, avg, etc. in relational databases.

1. max for a field

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"query": {

"match": {

"name": "base"

}

},

"sort": [

{

"age": {

"order": "asc"

}

}

],

"size": 0, // Restrict not returning source data

"aggs": {

"max_age": {

"max": {

"field": "age"

}

}

}

}

Server returned results:

{

"took": 5,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 5,

"relation": "eq"

},

"max_score": null,

"hits": []

},

"aggregations": {

"max_age": {

"value": 56.0

}

}

}

**2. Take the minimum value min for a field**

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"aggs": {

"min_level": {

"min": {

"field": "level"

}

}

},

"size": 0

}

The result returned by the server is:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 9,

"relation": "eq"

},

"max_score": null,

"hits": []

},

"aggregations": {

"min_levels": {

"value": null

}

}

}

3. Sum a field

Send GET request to ES server: http://localhost:9200/student/_search, the request body is:

{

"aggs": {

"sum_age": {

"sum": {

"field": "age"

}

}

},

"size": 0

}

The result returned by the server is:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 9,

"relation": "eq"

},

"max_score": null,

"hits": []

},

"aggregations": {

"sum_age": {

"value": 288.0

}

}

}

4. Average a field

avg is used for the average value, and the others are consistent with max.

5. The total number is obtained after de duplication of the value of a field

The request body is:

{

"aggs": {

"distinct_age": {

"cardinality": {

"field": "age"

}

}

},

"size": 0

}

6. State aggregation

stats aggregation returns count, max, min, avg and sum for a field at one time.

The request body is:

{

"aggs": {

"stats_age": {

"stats": {

"field": "age"

}

}

},

"size": 0

}

The result returned by the server is:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 9,

"relation": "eq"

},

"max_score": null,

"hits": []

},

"aggregations": {

"stats_age": {

"count": 9,

"min": 21.0,

"max": 56.0,

"avg": 32.0,

"sum": 288.0

}

}

}

7. Barrel polymerization

Bucket aggregation is equivalent to the group by clause in sql.

1. terms aggregation and grouping statistics

The request body is:

{

"aggs": {

"age_groupby": {

"terms": {

"field": "level"

}

}

},

"size": 0

}

The response result returned by the server is:

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 9,

"relation": "eq"

},

"max_score": null,

"hits": []

},

"aggregations": {

"age_groupby": {

"doc_count_error_upper_bound": 0,

"sum_other_doc_count": 0,

"buckets": [

{

"key": 3,

"doc_count": 3

},

{

"key": 4,

"doc_count": 2

},

{

"key": 5,

"doc_count": 1

},

{

"key": 6,

"doc_count": 1

},

{

"key": 7,

"doc_count": 1

},

{

"key": 8,

"doc_count": 1

}

]

}

}

}

2. Aggregate under terms group

The request body is:

{

"aggs": {

"age_groupby": {

"terms": {

"field": "age"

},

"aggs": {

"sum_age": {

"sum":{

"field": "age"

}

}

}

}

},

"size": 0

}

The result returned by the server is:

{

"took": 5,

"timed_out": false,

"_shards": {

"total": 1,

"successful": 1,

"skipped": 0,

"failed": 0

},

"hits": {

"total": {

"value": 9,

"relation": "eq"

},

"max_score": null,

"hits": []

},

"aggregations": {

"age_groupby": {

"doc_count_error_upper_bound": 0,

"sum_other_doc_count": 0,

"buckets": [

{

"key": 24,

"doc_count": 2,

"sum_age": {

"value": 48.0

}

},

{

"key": 28,

"doc_count": 2,

"sum_age": {

"value": 56.0

}

},

{

"key": 56,

"doc_count": 2,

"sum_age": {

"value": 112.0

}

},

{

"key": 21,

"doc_count": 1,

"sum_age": {

"value": 21.0

}

},

{

"key": 25,

"doc_count": 1,

"sum_age": {

"value": 25.0

}

},

{

"key": 26,

"doc_count": 1,

"sum_age": {

"value": 26.0

}

}

]

}

}

}

1.2.4.17 filter query

Using filter, the correlation score will not be calculated, and the related queries will be cached, which can improve the server response performance.

Send GET request to ES server: http://localhost:9200/student/_search, the request body is as follows:

{

"query": {

"constant_score": {

"filter": { // filter

"term": {

"name.keyword": "base"

}

}

}

}

}

1.2.4.18 validation query

The validate API can verify whether a query statement is legal. Send GET request to ES server: http://localhost:9200/student/_validate/query?explain. The request body is as follows:

{

"query": {

"multi_match": {

"query": 24,

"fields":["age","phone"]

}

}

}

[the external chain picture transfer fails. The source station may have an anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-dsisuswb-1637994003612)( https://i.loli.net/2021/11/23/YiEt3UMDCXv674F.png )]

The server returned a response:

{

"_shards"[[slice information]: {

"total": 1,

"successful": 1,

"failed": 0

},

"valid"[[verification results]: true,

"explanations"[[index description]: [

{

"index": "student",

"valid": true,

"explanation": "(phone:24 | age:[24 TO 24])"

}

]

}

1.3 ES native API operation

1.3.1 create project

Create a project in IDEA, modify pom file, and add ES related dependencies:

<dependencies>

<dependency>

<groupId>org.elasticsearch</groupId>

<artifactId>elasticsearch</artifactId>

<version>7.8.0</version>

</dependency>

<!-- elasticsearch Client for -->

<dependency>

<groupId>org.elasticsearch.client</groupId>

<artifactId>elasticsearch-rest-high-level-client</artifactId>

<version>7.8.0</version>

</dependency>

<!-- elasticsearch Dependency 2.x of log4j -->

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>2.8.2</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.8.2</version>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

<version>2.9.9</version>

</dependency>

<!-- junit unit testing -->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.12</version>

</dependency>

</dependencies>

1.3.2 connecting to ES server

public class ConnectionTest {

/**

* ES client

*/

private static RestHighLevelClient client;

/**

* The client establishes a connection with the server

*/

@Before

public void connect(){

client = new RestHighLevelClient(RestClient.builder(new HttpHost("localhost", 9200, "http")));

}

/**

* Close the connection between the client and the server

*/

@After

public void close(){

if(Objects.nonNull(client)){

try {

client.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

Note: 9200 is the Web communication interface of ES.

1.3.3 index operation

1.3.3.1 create index

/**

* Create index

*/

@Test

public void createIndex(){

// Create index -- request object

CreateIndexRequest request = new CreateIndexRequest("user1");

try {

// Send request

CreateIndexResponse response = client.indices().create(request, RequestOptions.DEFAULT);

// The server returned a response

boolean acknowledged = response.isAcknowledged();

System.out.println("Create index, server response:" + acknowledged);

} catch (IOException e) {

e.printStackTrace();

}

}

1.3.3.2 view index

@Test

public void getIndex() throws IOException {

// Query index -- request object

GetIndexRequest request = new GetIndexRequest("user");

// Server response

GetIndexResponse response = client.indices().get(request, RequestOptions.DEFAULT);

System.out.println(response.getSettings());

}

Server returned results:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-xcx9uvtr-1637994003613)( https://i.loli.net/2021/11/23/zeFfJqoaI7GbC6T.png )]

1.3.3.3 delete index

/**

* Delete index

*/

@Test

public void deleteIndex() throws IOException {

// Delete index -- request object

DeleteIndexRequest request = new DeleteIndexRequest("user1");

// The server returned a response

AcknowledgedResponse response = client.indices().delete(request, RequestOptions.DEFAULT);

System.out.println(response.isAcknowledged());

}

1.3.4 document operation

Create the data model first:

package com.jidi.elastic.search.test;

import lombok.Data;

/**

* @Description User entity

* @Author jidi

* @Email jidi_jidi@163.com

* @Date 2021/9/6

*/

@Data

public class UserDto {

/**

* Primary key id

*/

private Integer id;

/**

* name

*/

private String name;

/**

* nickname

*/

private String nickName;

/**

* Age

*/

private Integer age;

/**

* Gender 1: male 2: Female

*/

private byte sex;

/**

* level

*/

private Integer level;

/**

* phone number

*/

private String phone;

@Override

public String toString() {

return "UserDto{" +

"id=" + id +

", name='" + name + '\'' +

", nickName='" + nickName + '\'' +

", age=" + age +

", sex=" + sex +

", level=" + level +

", phone='" + phone + '\'' +

'}';

}

}

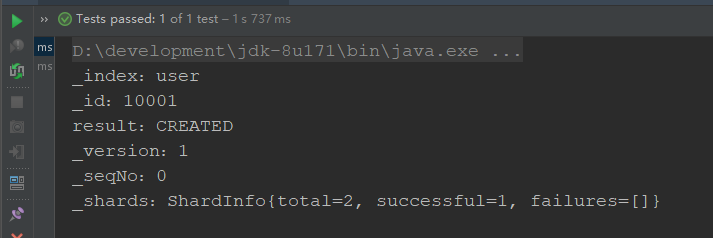

1.3.4.1 create document

/**

* Create a document (if the document exists, the whole document will be modified)

*/

@Test

public void createDocument() throws IOException {

// Create document -- request object

IndexRequest request = new IndexRequest();

// Set index and unique identification

request.index("user").id("10001");

// Create data object

UserDto user = new UserDto();

user.setId(10001);

user.setName("base");

user.setAge(24);

user.setLevel(3);

user.setSex((byte)1);

user.setNickName("Chicken brother");

user.setPhone("15071833124");

// Add document data

String userJson = new ObjectMapper().writeValueAsString(user);

request.source(userJson, XContentType.JSON);

// The server returned a response

IndexResponse response = client.index(request, RequestOptions.DEFAULT);

// Print result information

System.out.println("_index: " + response.getIndex());

System.out.println("_id: " + response.getId());

System.out.println("result: " + response.getResult());

System.out.println("_version: " + response.getVersion());

System.out.println("_seqNo: " + response.getSeqNo());

System.out.println("_shards: " + response.getShardInfo());

}

Execution results:

####1.3.4.3 document modification

/**

* Modify document

*/

@Test

public void updateDocument() throws IOException {

// Modify document -- request object

UpdateRequest request = new UpdateRequest();

// Configuration modification parameters

request.index("user").id("10001");

// Set request body

request.doc(XContentType.JSON, "sex", 1, "age", 24, "phone", "15071833124");

// Send request and get response

UpdateResponse response = client.update(request, RequestOptions.DEFAULT);

System.out.println("_index: " + response.getIndex());

System.out.println("_id: " + response.getId());

System.out.println("result: " + response.getResult());

}

Execution results:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-87susfug-1637994003614)( https://i.loli.net/2021/11/27/hpd3t8myvCHuEbN.png )]

1.3.4.4 query documents

/**

* consult your documentation

*/

@Test

public void searchDocument() throws IOException {

// Create request object

GetRequest request = new GetRequest().id("10001").index("user");

// Return response body

GetResponse response = client.get(request, RequestOptions.DEFAULT);

System.out.println(response.getIndex());

System.out.println(response.getType());

System.out.println(response.getId());

System.out.println(response.getSourceAsString());

}

Execution results:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-gyg5n1hq-1637994003615)( https://i.loli.net/2021/11/23/OFDLZf3uJWkc1lA.png )]

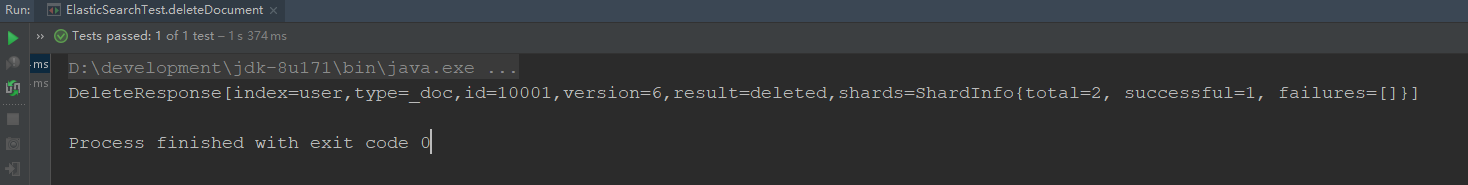

1.3.4.5 delete document

/**

* remove document

*/

@Test

public void deleteDocument() throws IOException {

// Create request object

DeleteRequest request = new DeleteRequest();

// Build request body

request.index("user");

request.id("10001");

// Send request and return response

DeleteResponse response = client.delete(request, RequestOptions.DEFAULT);

System.out.println(response.toString());

}

Execution results:

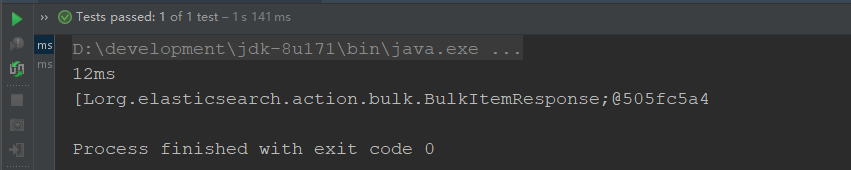

1.3.4.6 batch creation of documents

/**

* Batch create documents

*/

@Test

public void batchCreateDocument() throws IOException {

// Create request object

BulkRequest request = new BulkRequest();

request.add(

new IndexRequest()

.index("user")

.id("10001")

.source(

XContentType.JSON,

"id", 10001, "name", "base", "nickName", "Chicken brother", "age", 24, "sex", 1, "level", 3, "phone", "15071833124"));

request.add(

new IndexRequest()

.index("user")

.id("10002")

.source(

XContentType.JSON,

"id", 10002, "name", "Huai Jing", "nickName", "Brother Yongzi", "age", 23, "sex", 1, "level", 3, "phone", "15071831234"));

// Send request and return response

BulkResponse responses = client.bulk(request, RequestOptions.DEFAULT);

System.out.println(responses.getTook());

System.out.println(responses.getItems());

}

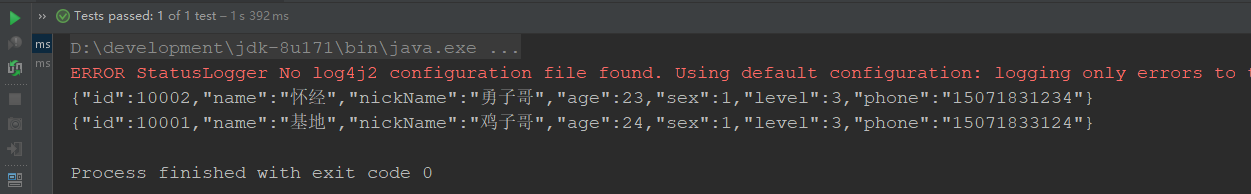

The execution results are:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-v7kjtrm7-1637994003617)( https://i.loli.net/2021/11/23/WG6yu8LcjkNnigx.png )]

1.3.4.7 deleting documents in batch

/**

* Batch delete documents

*/

@Test

public void batchDeleteDocument() throws IOException {

// Create request object

BulkRequest request = new BulkRequest();

request.add(new DeleteRequest("user").id("10001"));

request.add(new DeleteRequest("user").id("10002"));

// Send request and return response

BulkResponse responses = client.bulk(request, RequestOptions.DEFAULT);

System.out.println(responses.getTook());

System.out.println(responses.getItems());

}

The execution results are:

1.3.5 advanced query

1.3.5.1 query all document data

/**

* Query all document data

*/

@Test

public void getAllDocument() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build query request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// Query all data

sourceBuilder.query(QueryBuilders.matchAllQuery());

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit: hits) {

System.out.println(hit.getSourceAsString());

}

}

Execution results:

1.3.5.2 matching query

/**

* Single field matching query

*/

@Test

public void getDocumentByMatch() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build query request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// Single field matching data

sourceBuilder.query(new MatchQueryBuilder("name", "base"));

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit: hits) {

System.out.println(hit.getSourceAsString());

}

}

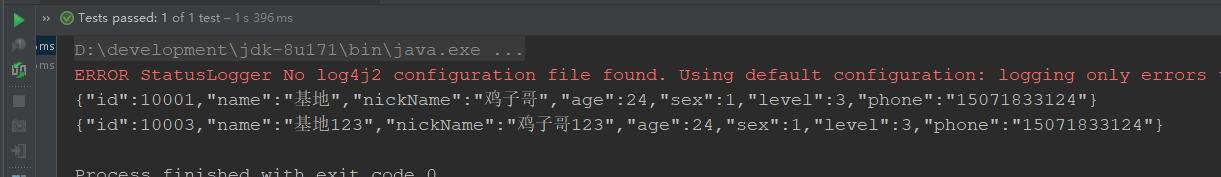

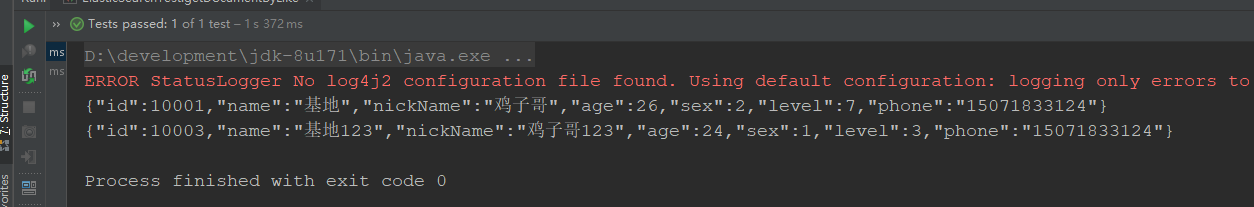

Execution results:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-xh0b4cqf-1637994003619)( https://i.loli.net/2021/11/23/xWRfHMNeCdm84U1.png )]

1.3.5.3 multi field matching query

/**

* Single field matching query

*/

@Test

public void getDocumentByMatchMultiField() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build query request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// Single field matching data

sourceBuilder.query(new MultiMatchQueryBuilder("base", "name", "nickName"));

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit: hits) {

System.out.println(hit.getSourceAsString());

}

}

Execution results:

1.3.5.4 keyword accurate query

/**

* Keyword exact query

*/

@Test

public void getDocumentByKeWorld() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(new TermQueryBuilder("name", "base"));

sourceBuilder.query(new TermQueryBuilder("age", "26"));

sourceBuilder.query(new TermQueryBuilder("level", "7"));

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

}

}

Execution results:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-svnmidkt-1637994003621)( https://i.loli.net/2021/11/27/LufN5edpD4SF186.png )]

1.3.5.5 multi keyword accurate query

/**

* Multi keyword exact query

*/

@Test

public void getDocumentByMultiKeyWorld() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Create request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(new TermsQueryBuilder("name", "2", "123"));

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

}

}

Execution results:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-c5hp84aa-1637994003622)( https://i.loli.net/2021/11/27/TvruZzpiXmh2ctP.png )]

1.3.5.6 filter fields

/**

* Filter field

*/

@Test

public void getDocumentByFetchField() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(new MatchAllQueryBuilder());

// Specify query fields

sourceBuilder.fetchSource(new String[]{"id", "name"}, null);

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

}

}

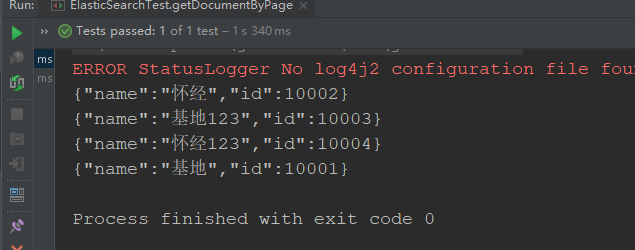

Execution results:

1.3.5.7 combined query

/**

* Combined query

*/

@Test

public void getDocumentByBool() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// Combined query

BoolQueryBuilder boolQueryBuilder = new BoolQueryBuilder();

// Must contain

boolQueryBuilder.must(new MatchQueryBuilder("name", "base"));

boolQueryBuilder.must(new TermQueryBuilder("nickName", "brother"));

// Must not contain

boolQueryBuilder.mustNot(new TermQueryBuilder("level", 7));

// May contain

boolQueryBuilder.should(new MatchQueryBuilder("sex", 1));

sourceBuilder.query(boolQueryBuilder);

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

}

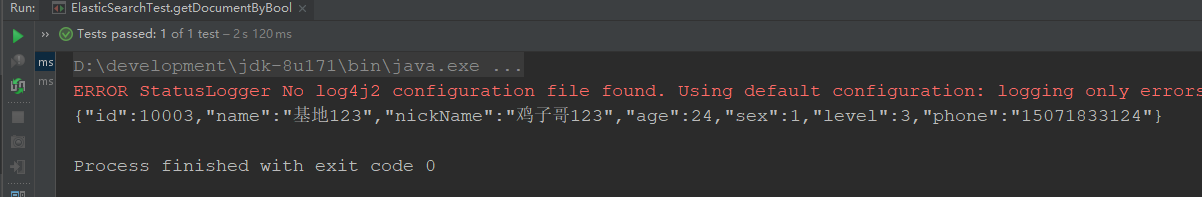

}

Execution results:

1.3.5.8 range query

/**

* Range query

*/

@Test

public void getDocumentByRange() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// Range query

RangeQueryBuilder rangeQueryBuilder = new RangeQueryBuilder("age");

// Greater than or equal to

rangeQueryBuilder.gte(24);

// Less than or equal to

rangeQueryBuilder.lte(35);

sourceBuilder.query(rangeQueryBuilder);

sourceBuilder.from(0);

sourceBuilder.size(10);

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

}

}

Execution results:

[the external chain picture transfer fails. The source station may have an anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-m03uitwn-1637994003624)( https://i.loli.net/2021/11/23/ZYgA9HMo1LxDtPU.png )]

1.3.5.9 fuzzy query

/**

* Fuzzy query

*/

@Test

public void getDocumentByLike() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// Fuzzy query

sourceBuilder.query(new FuzzyQueryBuilder("name", "base").fuzziness(Fuzziness.AUTO));

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

}

}

Execution results:

1.3.5.10 Sorting Query

/**

* Sort query

*/

@Test

public void getDocumentByOrder() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(new MatchAllQueryBuilder());

// Ascending order

sourceBuilder.sort("age", SortOrder.ASC);

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

}

}

Execution results:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-eql4iilx-1637994003626)( https://i.loli.net/2021/11/23/PFRjJzZray65Ivc.png )]

1.3.5.11 highlight query

/**

* Highlight query

*/

@Test

public void getDocumentByHighLight() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(new TermQueryBuilder("name", "base"));

// Highlight query

HighlightBuilder highlightBuilder = new HighlightBuilder();

highlightBuilder.field("name");

highlightBuilder.preTags("<font color='red'>");

highlightBuilder.postTags("</font>");

sourceBuilder.highlighter(highlightBuilder);

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

// Get highlighted results

Map<String, HighlightField> highlightFields = hit.getHighlightFields();

System.out.println(highlightFields);

}

}

Execution results:

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-zyr7ooxw-1637994003627)( https://i.loli.net/2021/11/23/hfYNH5g7iIktQsc.png )]

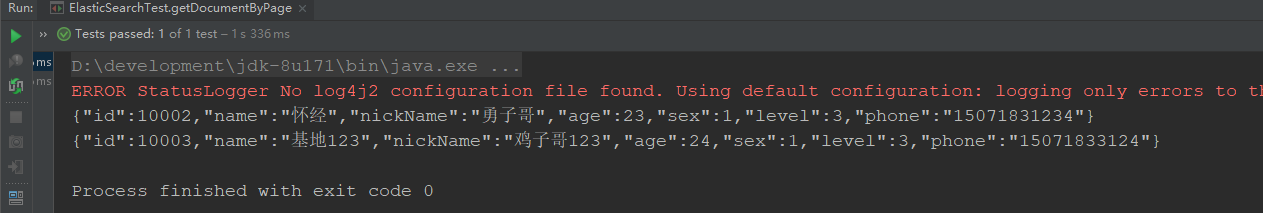

1.3.5.12 paging query

/**

* Paging query

*/

@Test

public void getDocumentByPage() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(new MatchAllQueryBuilder());

// paging

sourceBuilder.from(0);

sourceBuilder.size(2);

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

SearchHits hits = response.getHits();

for (SearchHit hit : hits) {

System.out.println(hit.getSourceAsString());

}

}

Execution results:

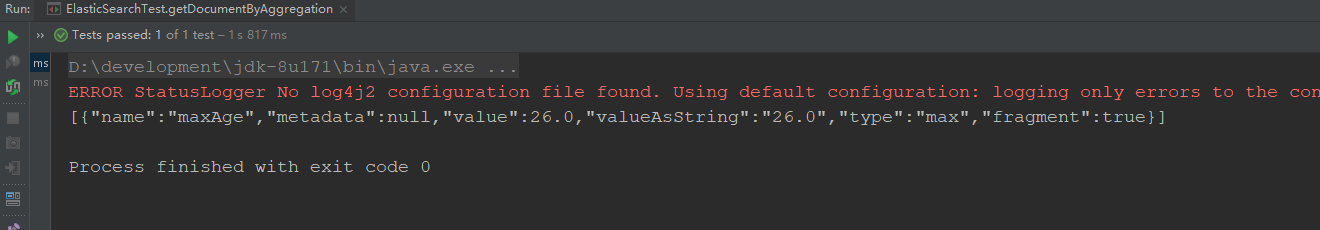

1.3.5.13 aggregate query

/**

* Aggregate query

*/

@Test

public void getDocumentByAggregation() throws IOException {

// Create request object

SearchRequest request = new SearchRequest();

request.indices("user");

// Build request body

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// Oldest

sourceBuilder.aggregation(AggregationBuilders.max("maxAge").field("age"));

sourceBuilder.size(0);

request.source(sourceBuilder);

// Send request and return response

SearchResponse response = client.search(request, RequestOptions.DEFAULT);

System.out.println(new ObjectMapper().writeValueAsString(response.getAggregations().getAsMap().values()));

}

Execution results:

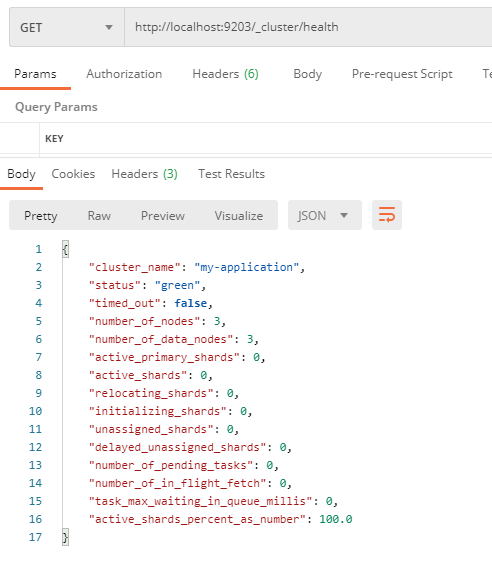

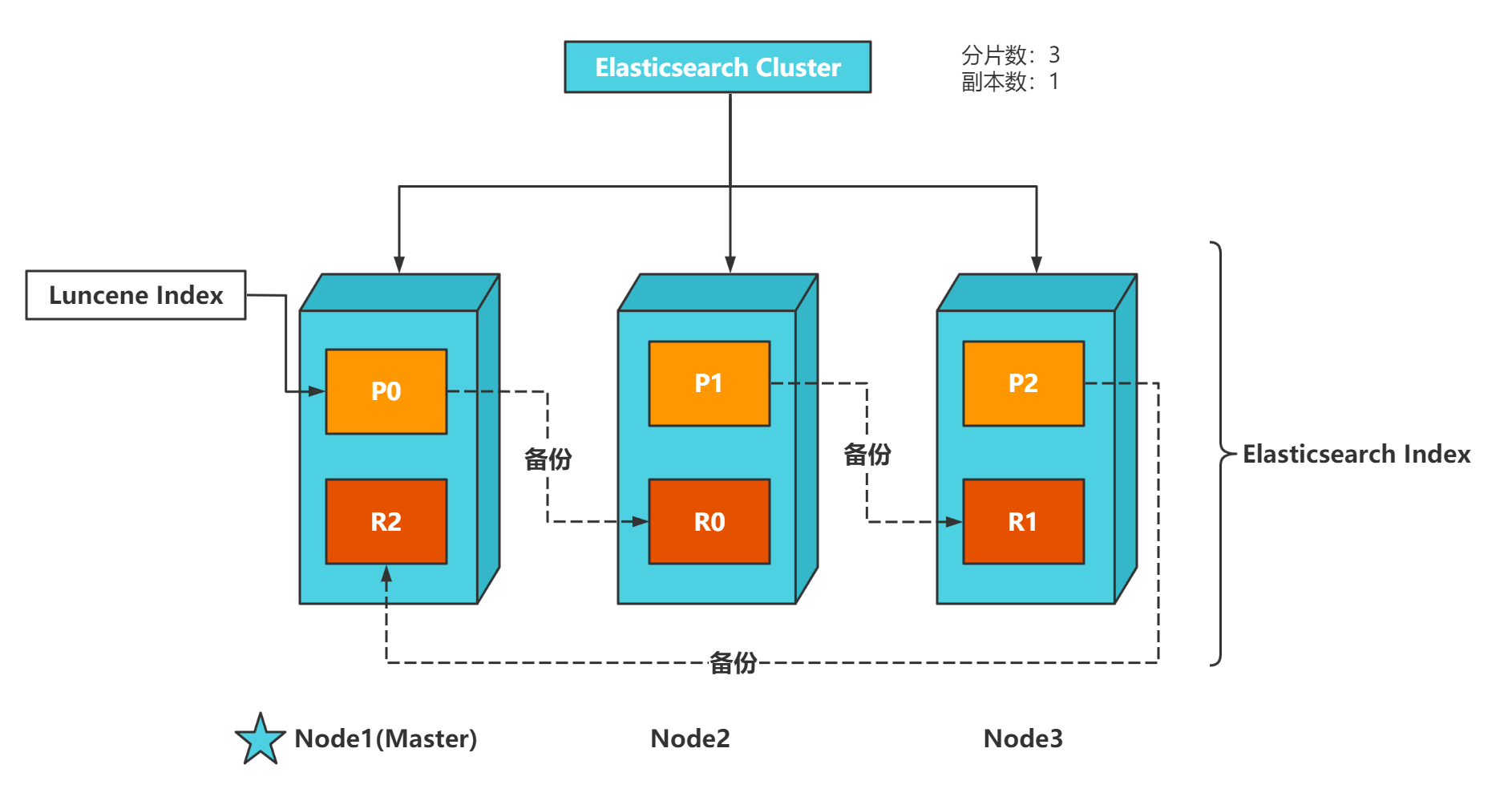

1.4 Elasticsearch environment

1.4.1 interpretation of terms

1.4.1.1 single machine & cluster

When a single Elasticsearch server provides services, it often has the maximum load capacity. If it exceeds this threshold, the server performance will be greatly reduced or even unavailable. Therefore, in the production environment, it generally runs in the specified server cluster. In addition to load capacity, single point servers have other problems:

1. The storage capacity of a single machine is limited;

2. Single server is prone to single point of failure and cannot achieve high availability;

3. The concurrent processing capacity of a single server is limited.

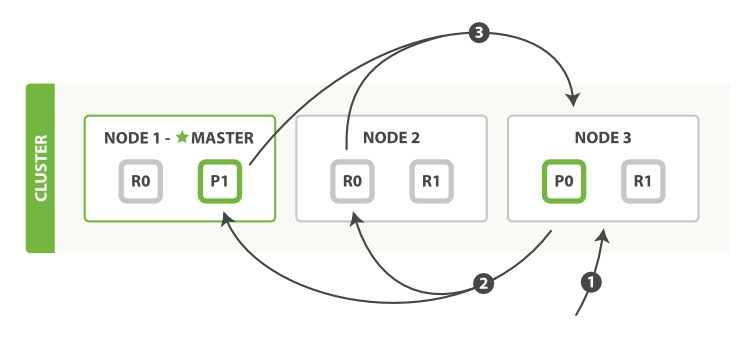

When configuring a server cluster, there is no limit on the number of nodes in the cluster. If there are more than or equal to 2 nodes, it can be regarded as a cluster. Generally, considering high performance and high availability, the number of nodes in the cluster is more than 3.

1.4.1.2 Cluster

A cluster is organized by multiple server nodes to jointly hold the whole data and provide indexing and search functions together. An elasticsearch cluster has a unique name ID, which is elasticsearch by default. Nodes can only join a cluster by specifying its name.

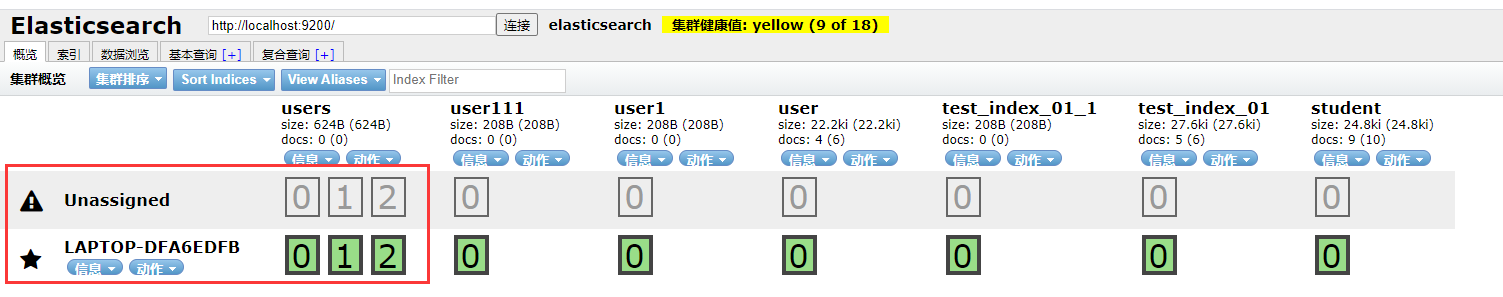

In Elasticsearch cluster, you can monitor and count a lot of information, but only one is the most important: cluster health. Cluster health has three states:

1. green: all master slices and replica slices are available;

2. yellow: all primary partitions are available, but not all replica partitions are available;

3. red: not all primary partitions are available.

1.4.1.3 Node

A node is an Elasticsearch instance, and a cluster is composed of one or more nodes with the same cluster.name. They work together to share data and load. When a new node is added or a node is deleted, the cluster will sense and balance the data. As a part of the cluster, it stores data and participates in the indexing and search functions of the cluster.

A node is also identified by a name. By default, this name is the name of a random Marvel comic character. This name will be given to the node at startup. A node can join a specified cluster by configuring the cluster name. By default, each node is scheduled to join a cluster called elastic search.

Each node saves the status of the cluster, but only the Master node can modify the status information of the cluster (all node information, all indexes and related Mapping and Setting information, fragment routing information). The Master node does not participate in document level change or search, which means that the Master node will not become the bottleneck of the cluster when the traffic increases. Any node can be the Master node.

After each node is started, it is a Master eligible node by default. A Master eligible node can participate in the selected main process and become a Master node, which can be prohibited by setting node.master:false.

Node classification

Nodes can be divided into:

1. Master Node: the Master Node, which is responsible for modifying the cluster status information;

2. Data Node: a Data Node, which is responsible for saving fragment data;

3. Ingrest node: preprocessing node, which is responsible for preprocessing files before actually indexing documents;

4. Coordinating Node: the Coordinating Node is responsible for receiving the client's request, distributing the request to the appropriate node, and finally collecting the results and returning them to the client. Each node is responsible for coordinating nodes by default.

Other node types

1,Hot & Warm Node: Hot and cold node , data nodes with different hardware configurations are used to implement the hot & warm architecture and reduce the cost of cluster deployment;

2. Machine learning node: the node responsible for running machine learning tasks;

3. Triple node: the triple node (Deprecated) can be connected to different elasticsearch clusters and can be treated as a separate cluster.

1.4.1.4 Node configuration

Generally, a node in the development environment can assume multiple roles; In a production environment, in order to improve performance, nodes should be set to a single role.

| Node type | configuration parameter | Default value |

|---|---|---|

| master eligible | node.master | true |

| data | node.data | true |

| ingest | node.ingest | true |

| coordinating only | nothing | Each node is a coordination node by default. |

| machine learning | node.ml | true (you need to enable x-pack, which includes security, alarm, monitoring, reporting and graphics functions in an easy to install package) |

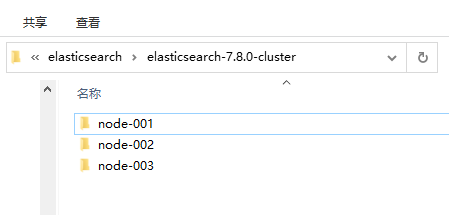

1.4.2 Windows cluster deployment

1. Create the elasticsearch-7.8.0-cluster folder and internally copy three elasticsearch services

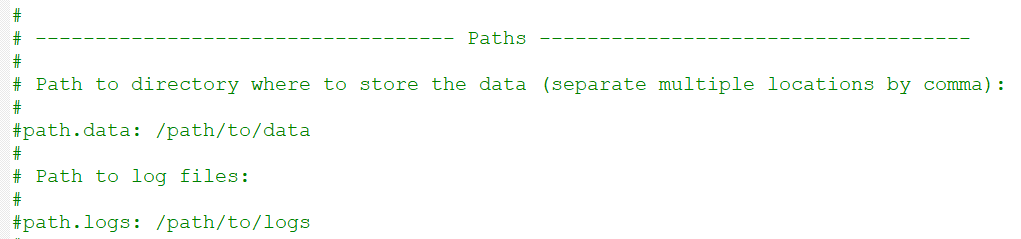

2. Modify the configuration information of each node (config/elasticsearch.yml)

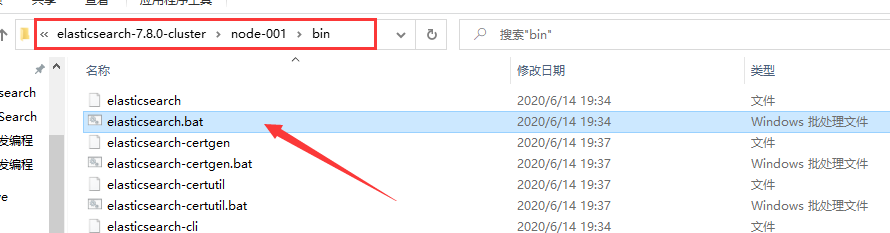

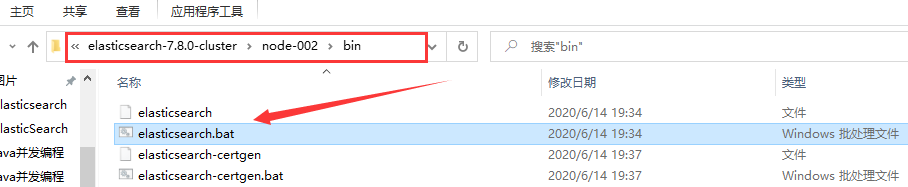

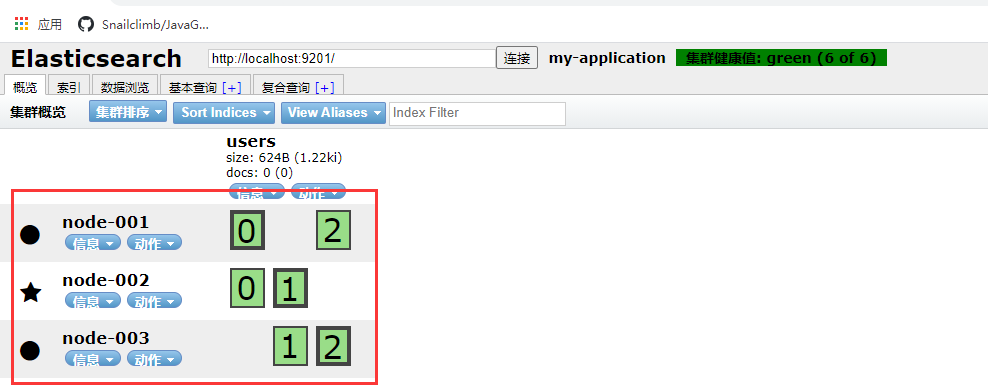

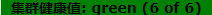

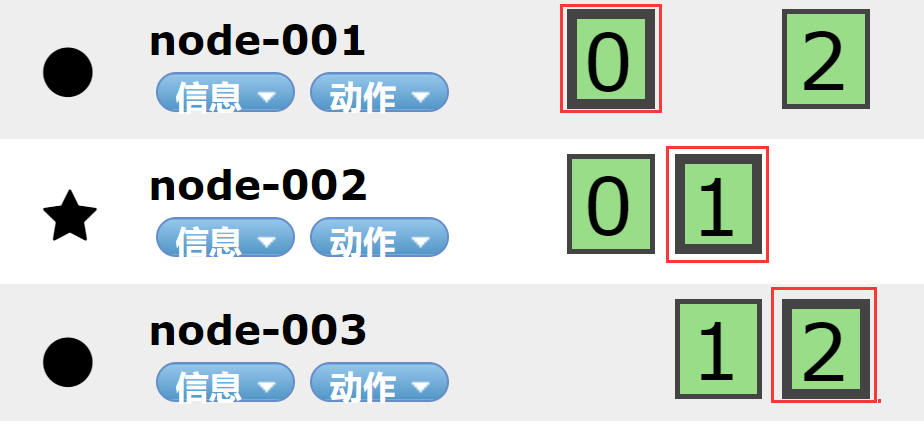

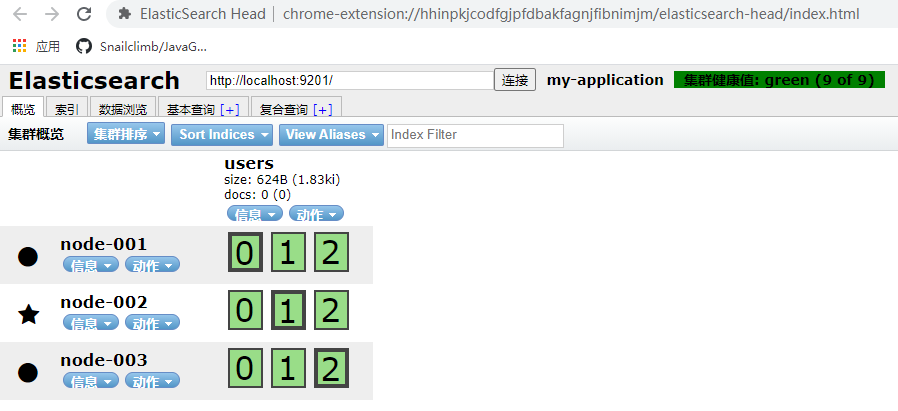

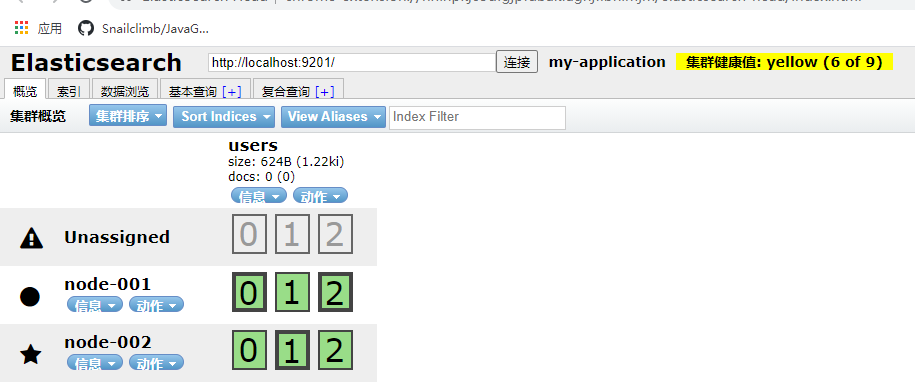

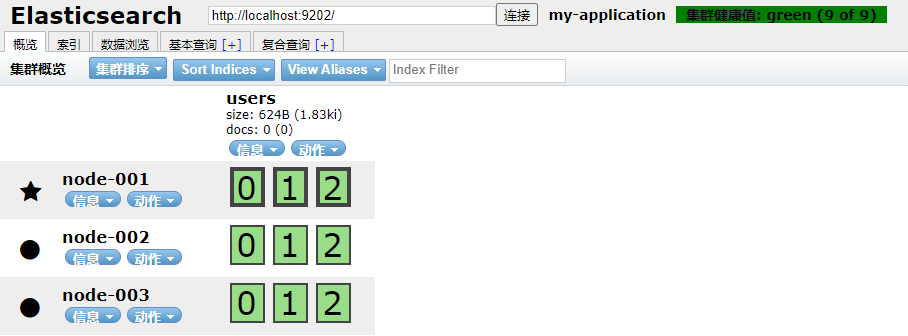

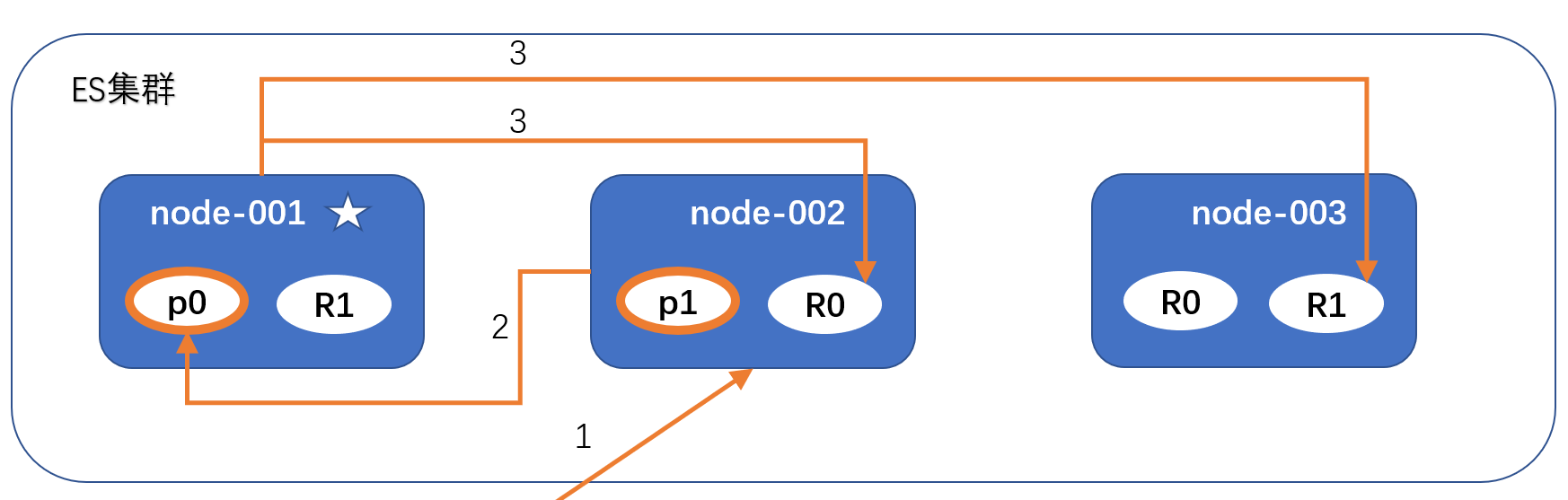

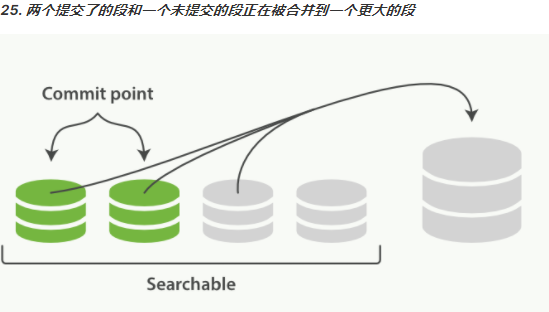

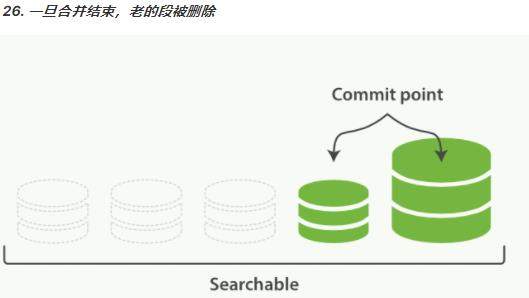

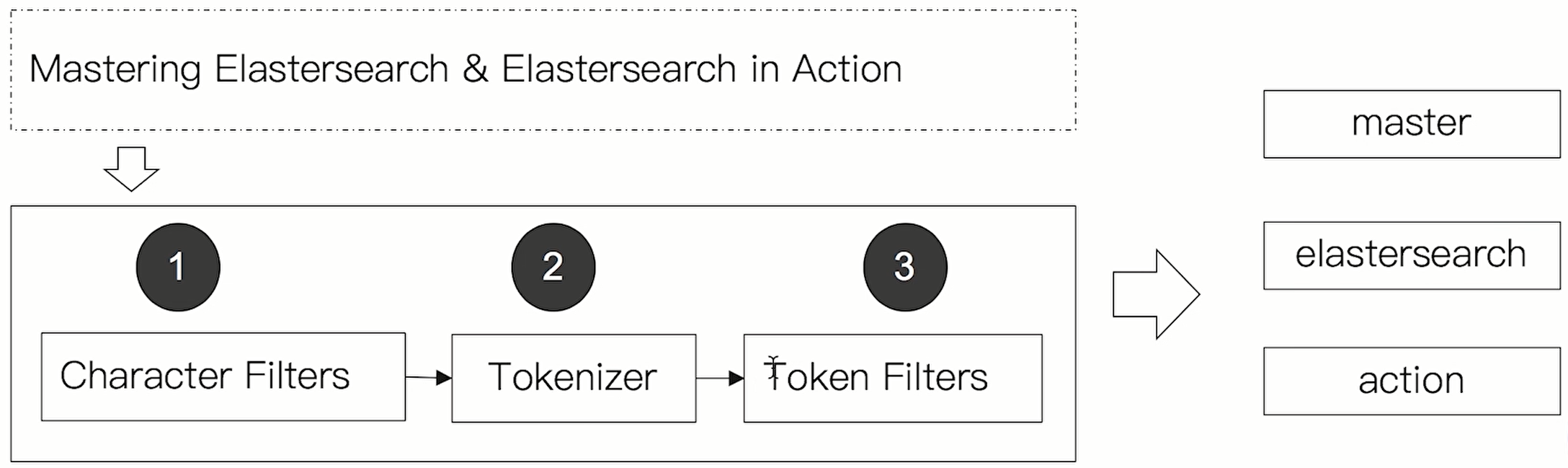

node-001 node configuration: