ELK is an acronym for three software products: Elasticsearch, Logstash, and Kibana.

| Component | Role |
|---|---|
| Elasticsearch | Responsible for log storage and retrieval |
| Logstash | Responsible for collecting, parsing, and processing logs |
| Kibana | Responsible for visualizing logs |

Uses: centralized querying and management of distributed log data; system monitoring, covering both hardware and application components; troubleshooting; security information and event management; reporting.

Workflow:

web server log files (Filebeat installed on the web server) -> Logstash (internally: input -> filter -> output) -> Elasticsearch <- Kibana (fetches and aggregates the data) -> client reads the results
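The input, filter, and output stages inside Logstash can be pictured with a toy pipeline. This is only a sketch of the idea, not Logstash's configuration or API: the log line format, the regular expression, and the function names `read_input`, `apply_filter`, and `write_output` are all assumptions made for illustration.

```python
import re

# Hypothetical access-log lines standing in for a web server's log file.
RAW_LINES = [
    "192.168.1.20 GET /index.html 200",
    "192.168.1.21 GET /missing.html 404",
    "malformed line",
]

# Assumed line layout: client, method, path, three-digit status code.
LINE_PATTERN = re.compile(r"^(\S+) (\S+) (\S+) (\d{3})$")


def read_input(lines):
    """Input stage: yield raw log lines one at a time."""
    for line in lines:
        yield line.strip()


def apply_filter(lines):
    """Filter stage: parse each line into fields and drop lines that do not match."""
    for line in lines:
        match = LINE_PATTERN.match(line)
        if match:
            client, method, path, status = match.groups()
            yield {"client": client, "method": method, "path": path, "status": int(status)}


def write_output(events):
    """Output stage: collect the events; a real pipeline would send them to Elasticsearch."""
    return list(events)


events = write_output(apply_filter(read_input(RAW_LINES)))
for event in events:
    print(event)
```

Each stage only consumes what the previous one yields, which is why the malformed line never reaches the output stage.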

Elasticsearch

Elasticsearch is a Lucene-based search server written in Java. It provides a distributed, multi-user full-text search engine with a web interface based on a RESTful API.

Features: real-time analysis; distributed real-time document storage with every field indexed; document-oriented; high availability and scalability, with support for clustering, sharding, and replication; JSON support.

However, it lacks typical transaction support and has no built-in authentication or authorization features.

Related concepts

Node: a single server running an ES instance

Cluster: a group of one or more nodes

Document: the basic unit of information that can be searched

Index: a collection of documents with similar characteristics

Type: a category within an index; an index can define one or more types

Field: the smallest unit in ES, equivalent to a column of data

Shards: the slices an index is split into; each slice is a shard

Replicas: copies of an index's shards

| Relational database | Elasticsearch |
|---|---|
| Database | Index |
| Table | Type |
| Row | Document |
| Column | Field |
| Schema | Mapping |
| Index | Everything is indexed |
| SQL | Query DSL |
| SELECT * FROM table | GET http:// |
| UPDATE table SET | PUT http:// |
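The analogy in the table carries over to URLs: in the type-based ES version used in this article, a document is addressed as `/index/type/id`, much like database, table, and row key. A minimal sketch, where `document_url` is a hypothetical helper and the host, index, and type are the ones used in the examples later in this article:

```python
from urllib.parse import quote


def document_url(host, index, doc_type, doc_id, port=9200):
    """Build http://host:port/index/type/id, percent-encoding each path segment."""
    segments = [quote(str(part), safe="") for part in (index, doc_type, doc_id)]
    return f"http://{host}:{port}/" + "/".join(segments)


url = document_url("192.168.1.12", "tedu", "hlm", 1)
print(url)  # http://192.168.1.12:9200/tedu/hlm/1
```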

HTTP protocol

Common HTTP request methods: GET, POST, HEAD. Other methods: OPTIONS, PUT, DELETE, TRACE, CONNECT.

In ES these map to the usual data operations: PUT creates, DELETE deletes, POST modifies, GET queries.
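As a rough illustration, the sketch below builds (but does not send) requests using the method mapping that the examples in this article rely on: PUT to create, DELETE to delete, POST to modify, GET to query. The names `CRUD_TO_METHOD` and `build_request` are hypothetical; `urllib.request.Request` is from the Python standard library.

```python
import json
from urllib.request import Request

# Mapping assumed from the examples in this article.
CRUD_TO_METHOD = {"create": "PUT", "delete": "DELETE", "update": "POST", "read": "GET"}


def build_request(operation, url, body=None):
    """Return an unsent Request that uses the HTTP method for the given operation."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    return Request(url, data=data, method=CRUD_TO_METHOD[operation])


req = build_request("read", "http://192.168.1.12:9200/tedu/hlm/1")
print(req.get_method(), req.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would actually send it; that step is left out here so the sketch runs without a cluster.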

System command curl

curl is a Linux command-line file transfer tool that works with URL syntax. It is a powerful HTTP client that supports many request methods, custom request headers, and other features.

Common options: -A sets the request's User-Agent; -X sets the request method; -i shows the response headers.

[root@ecs-guo ~]# curl -X GET www.baidu.com

<!DOCTYPE html> <!--STATUS OK--><html> <head><meta http-equiv=content-type content=text/html;charset=utf-8><meta http-equiv=X-UA-Compatible content=IE=Edge><meta content=always name=referrer><link rel=stylesheet type=text/css href=http://S1.bdstatic.com/r/www/cache/bdorz/baidu.min.css> <title>Baidu, you know </title>...

es plug-in

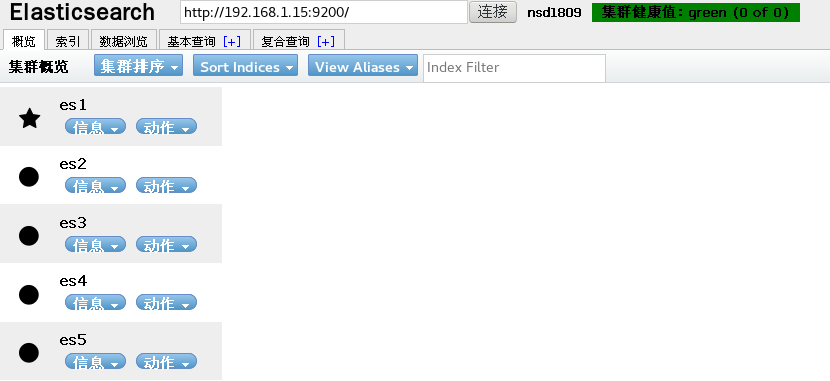

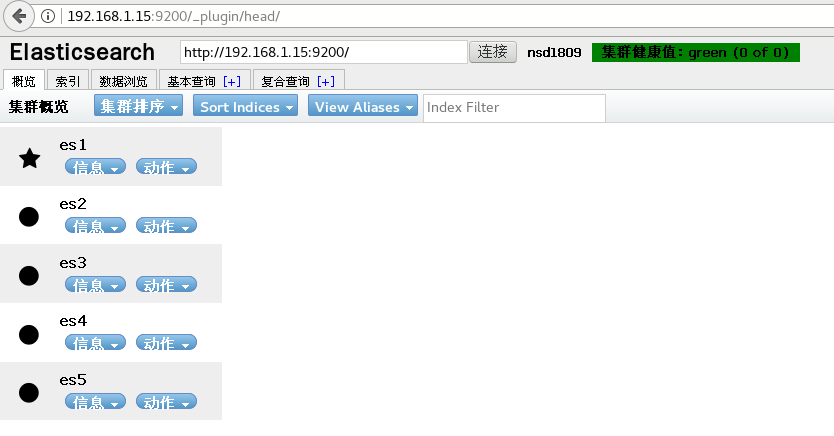

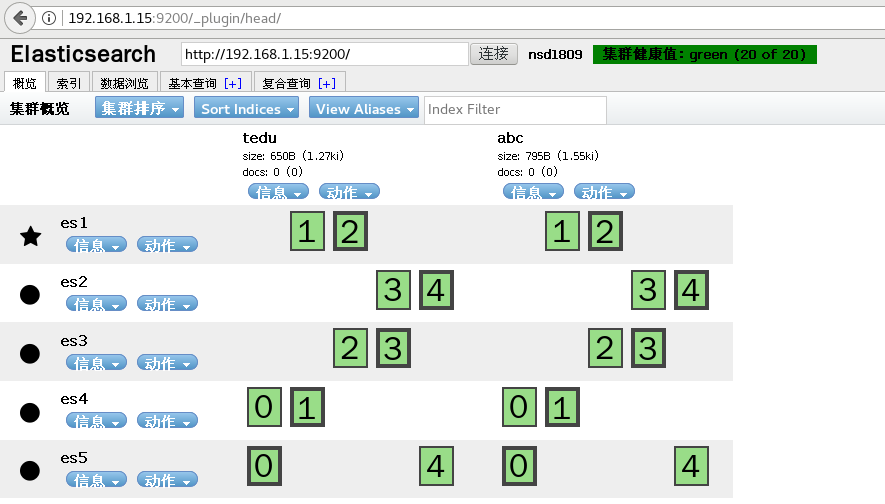

head plug-in: shows the topology of the ES cluster and supports index-level and node-level operations. It provides a query API for the cluster, returns results as JSON or tables, and offers shortcut menus that display various cluster states.

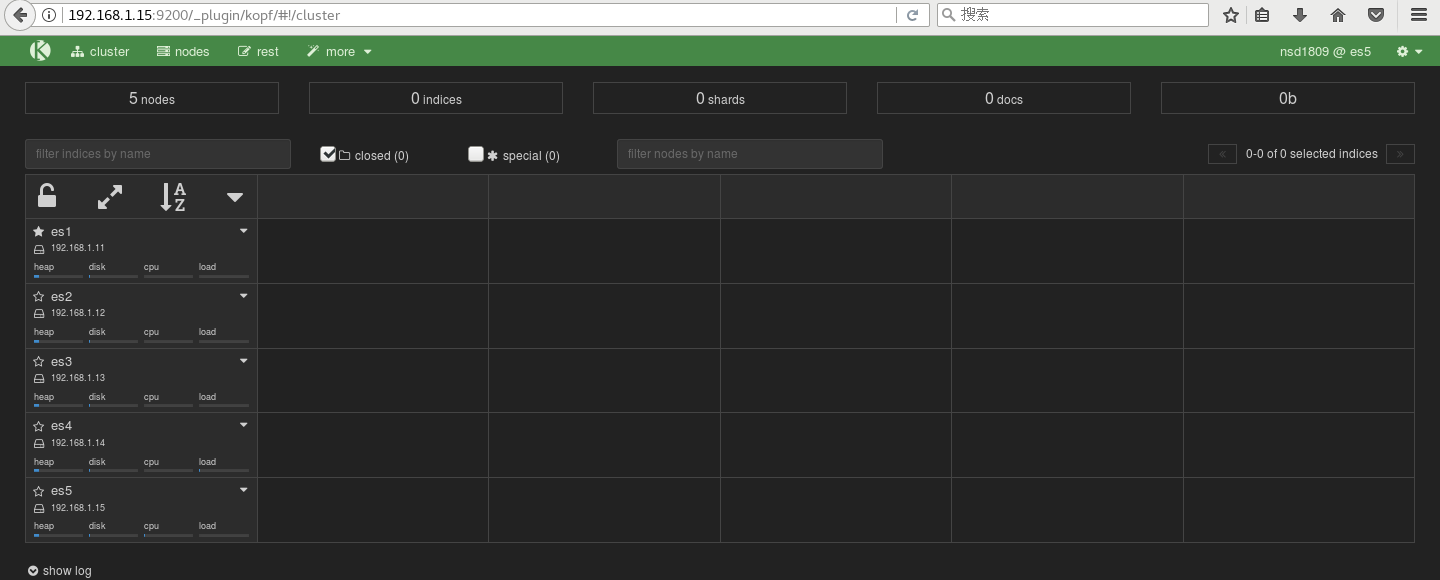

kopf plug-in: a management tool that provides an API for ES cluster operations

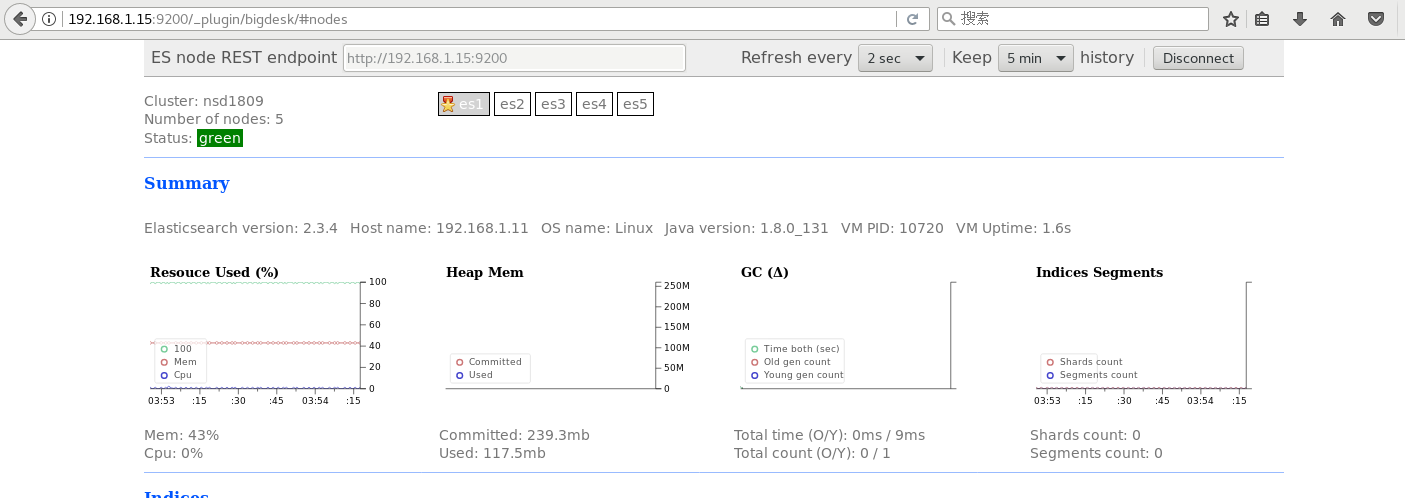

bigdesk plug-in: a cluster monitoring tool that can monitor various cluster states

Install the plug-ins (on 192.168.1.15)

Plug-in packages: elasticsearch-head-master.zip, elasticsearch-kopf-master.zip, bigdesk-master.zip

[root@es5 ~]# cd /usr/share/elasticsearch/bin/

[root@es5 bin]# ls

elasticsearch elasticsearch.in.sh elasticsearch-systemd-pre-exec plugin

[root@es5 bin]# ./plugin install file:///root/elasticsearch-head-master.zip    # note: the package path must be given as a file:// URL, as in file:///root/elasticsearch-head-master.zip

[root@es5 bin]# ./plugin install file:///root/bigdesk-master.zip

[root@es5 bin]# ./plugin install file:///root/elasticsearch-kopf-master.zip

[root@es5 bin]# ./plugin list

Installed plugins in /usr/share/elasticsearch/plugins:

- head

- kopf

- bigdesk

Open the plug-ins in a browser:

http://192.168.1.15:9200/_plugin/head

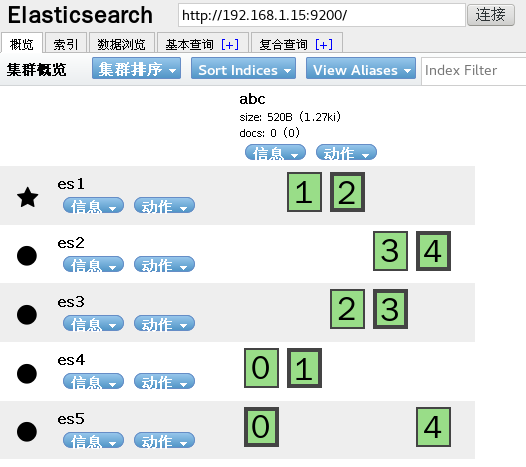

Click the index tab to create an index. By default, the source data is distributed across 5 shards, each with 1 replica.

http://192.168.1.15:9200/_plugin/kopf

http://192.168.1.15:9200/_plugin/bigdesk

RESTful API call

1. ES provides a series of APIs to: check the health, status, and statistics of clusters, nodes, and indices; manage data and metadata for clusters, nodes, and indices; perform CRUD and query operations on indices; perform other advanced operations such as paging, sorting, and filtering.

2. POST and PUT data is sent in JSON format.

3. JSON (JavaScript Object Notation) is a lightweight, text-based, language-independent data interchange format. Data is transmitted as a string; Python strings, lists, and dictionaries can be converted to the corresponding JSON types.

4. The _cat API queries cluster status and node information:

v parameter: verbose output, including the header row

help: displays help information

nodes: queries node status information

[root@es5 bin]# curl "http://192.168.1.15:9200/_cat/health?v"    # view cluster health (?v for details, ?help for help)

epoch timestamp cluster status node.total node.data shards pri relo init unassign pending_tasks max_task_wait_time active_shards_percent

1547628559 16:49:19 nsd1809 green 5 5 10 5 0 0 0 0 - 100.0%

[root@es5 bin]# curl "http://192.168.1.15:9200/_cat/nodes?v"    # query node information

host ip heap.percent ram.percent load node.role master name

192.168.1.12 192.168.1.12 10 40 0.00 d m es2

192.168.1.14 192.168.1.14 11 41 0.18 d m es4

192.168.1.11 192.168.1.11 11 44 0.00 d * es1

192.168.1.15 192.168.1.15 11 42 0.00 d m es5

192.168.1.13 192.168.1.13 11 40 0.00 d m es3

[root@es5 bin]# curl "http://192.168.1.15:9200/_cat/master?v"    # query the master node

id host ip node

141WVGOsRiyI99AKLFJNVg 192.168.1.11 192.168.1.11 es1

[root@es5 bin]# curl "http://192.168.1.15:9200/_cat/shards?v"    # query shard information

index shard prirep state docs store ip node

abc 4 r STARTED 0 159b 192.168.1.15 es5

abc 4 p STARTED 0 159b 192.168.1.12 es2

abc 3 p STARTED 0 159b 192.168.1.13 es3

abc 3 r STARTED 0 159b 192.168.1.12 es2

abc 1 p STARTED 0 159b 192.168.1.14 es4

abc 1 r STARTED 0 159b 192.168.1.11 es1

abc 2 p STARTED 0 159b 192.168.1.11 es1

abc 2 r STARTED 0 159b 192.168.1.13 es3

abc 0 p STARTED 0 159b 192.168.1.15 es5

abc 0 r STARTED 0 159b 192.168.1.14 es4

[root@es5 bin]# curl "http://192.168.1.15:9200/_cat/indices?v"    # view index information

health status index pri rep docs.count docs.deleted store.size pri.store.size

green open abc 5 1 0 0 1.5kb 795b
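Because `_cat` output with `?v` is plain text with a header row, it is easy to turn into Python dictionaries, which in turn serialize to JSON as described in item 3. The sketch below is an illustration only: `parse_cat` is a hypothetical helper, the sample rows are copied from the `_cat/nodes?v` output in this section, and the simple whitespace split assumes no column is empty or contains spaces.

```python
import json

# Sample text copied from the _cat/nodes?v output shown in this section.
CAT_NODES = """\
host ip heap.percent ram.percent load node.role master name
192.168.1.12 192.168.1.12 10 40 0.00 d m es2
192.168.1.11 192.168.1.11 11 44 0.00 d * es1
"""


def parse_cat(text):
    """Turn whitespace-separated _cat output (with a header row) into a list of dicts."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


nodes = parse_cat(CAT_NODES)

# In the master column, "*" marks the elected master node.
master = [node["name"] for node in nodes if node["master"] == "*"]
print(master)  # ['es1']

# A Python dict serializes to a JSON object.
print(json.dumps(nodes[0], indent=2))
```

All values stay strings after parsing; convert fields such as `heap.percent` with `int()` where numbers are needed.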

RESTful API: adding data

Create an index (the request can be sent to any host in the cluster) and set its number of shards and replicas. The request below creates an index named tedu; number_of_shards is the number of shards and number_of_replicas is the number of replicas.

[root@es5 bin]# curl -XPUT 'http://192.168.1.12:9200/tedu/' -d '{

> "settings":{

> "index":{

> "number_of_shards":5,

> "number_of_replicas":1

> }

> }

> }'

{"acknowledged":true}[root@es5 bin]#
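The request body can also be generated rather than typed by hand, which avoids quoting and comma mistakes. The sketch below builds the same settings document and works out how many shard copies the cluster will hold; `index_settings` and `total_shard_copies` are hypothetical helper names, not part of any ES client.

```python
import json


def index_settings(shards, replicas):
    """Build the settings body used when creating an index."""
    return {"settings": {"index": {"number_of_shards": shards, "number_of_replicas": replicas}}}


def total_shard_copies(shards, replicas):
    """Each primary shard has `replicas` copies, so the total is shards * (1 + replicas)."""
    return shards * (1 + replicas)


body = index_settings(5, 1)
print(json.dumps(body))
print(total_shard_copies(5, 1))  # 10
```

With 5 shards and 1 replica the total is 10, which agrees with the `_cat/health` output earlier in this article (shards 10, pri 5) for an index created with the same defaults.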

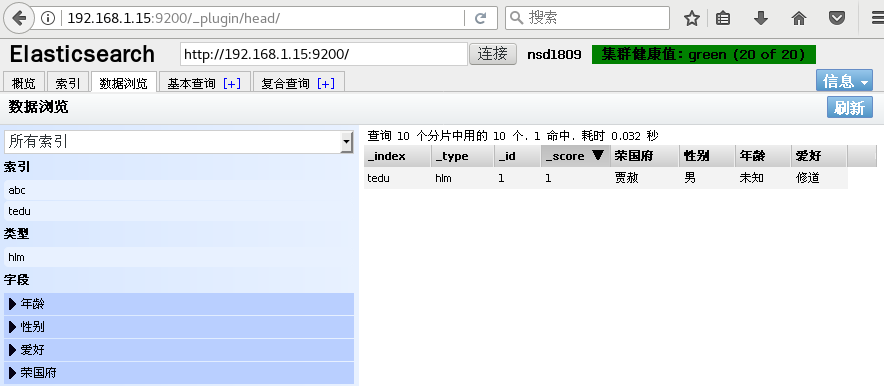

Insert data. To add more documents, use a different id at the end of the URL each time; reusing an id overwrites the existing document.

[root@es5 bin]# curl -XPUT '192.168.1.12:9200/tedu/hlm/1' -d '{

> "Rong Guo Fu":"Jia Shu",

> "Gender":"male",

> "Age":"Unknown",

> "hobby":"Monastic way"

> }'

{"_index":"tedu","_type":"hlm","_id":"1","_version":1,"_shards":{"total":2,"successful":2,"failed":0},"created":true}[root@es5 bin]#

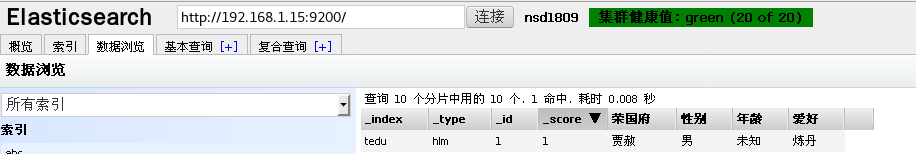

Modify data: send a POST request to the document's _update endpoint, with the fields to change placed under the "doc" key.

[root@es5 bin]# curl -XPOST '192.168.1.12:9200/tedu/hlm/1/_update' -d '{

> "doc":{"hobby":"Alchemy"}

> }'

{"_index":"tedu","_type":"hlm","_id":"1","_version":2,"_shards":{"total":2,"successful":2,"failed":0}}[root@es5 bin]#
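The effect of the `"doc"` key is that only the listed fields change while the rest of the document is kept. The following local simulation shows that behaviour for flat documents like the one above; `apply_partial_update` is a hypothetical function that runs entirely in Python and does not contact ES.

```python
import json


def apply_partial_update(source, update_body):
    """Return a copy of `source` with the top-level fields under "doc" merged in."""
    updated = dict(source)
    updated.update(update_body["doc"])
    return updated


stored = {"Rong Guo Fu": "Jia Shu", "Gender": "male", "Age": "Unknown", "hobby": "Monastic way"}
update_body = {"doc": {"hobby": "Alchemy"}}

result = apply_partial_update(stored, update_body)
print(json.dumps(result, ensure_ascii=False))
```

This sketch merges top-level fields only, which is enough for the flat document in this example; nested objects would need a recursive merge.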

Query data

[root@es5 bin]# curl -XGET '192.168.1.12:9200/tedu/hlm/1?pretty'    # ?pretty prints the JSON response across multiple lines

{

"_index" : "tedu",

"_type" : "hlm",

"_id" : "1",

"_version" : 2,

"found" : true,

"_source" : {

"Rong Guo Fu" : "Jia Shu",

"Gender" : "male",

"Age" : "Unknown",

"hobby" : "Alchemy"

}

}
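A client usually only needs the `_source` part of this response. A minimal sketch of pulling it out with the standard `json` module, using the response text shown above as sample input; `extract_source` is a hypothetical helper name.

```python
import json

# Response text copied from the query result shown above.
RESPONSE_TEXT = """
{
  "_index" : "tedu",
  "_type" : "hlm",
  "_id" : "1",
  "_version" : 2,
  "found" : true,
  "_source" : {
    "Rong Guo Fu" : "Jia Shu",
    "Gender" : "male",
    "Age" : "Unknown",
    "hobby" : "Alchemy"
  }
}
"""


def extract_source(response_text):
    """Return the stored document, or None when the response does not report found: true."""
    response = json.loads(response_text)
    if not response.get("found", False):
        return None
    return response["_source"]


source = extract_source(RESPONSE_TEXT)
print(source["hobby"])  # Alchemy
```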

Delete data

[root@es5 bin]# curl -XDELETE '192.168.1.12:9200/*'    # delete all indices and their data

{"acknowledged":true}[root@es5 bin]#